A Novel Point Cloud Registration Method Based on ROPNet

Abstract

:1. Introduction

- •

- A new loss function based on cross-entropy is designed, which helps improve the point clouds registration accuracy, especially in overlapping regions.

- •

- The performance of the ROPNet is also improved by adding a channel attention mechanism.

- •

- A large number of experiments on commonly used datasets were conducted and verified the effectiveness of our proposed method.

2. Related Work

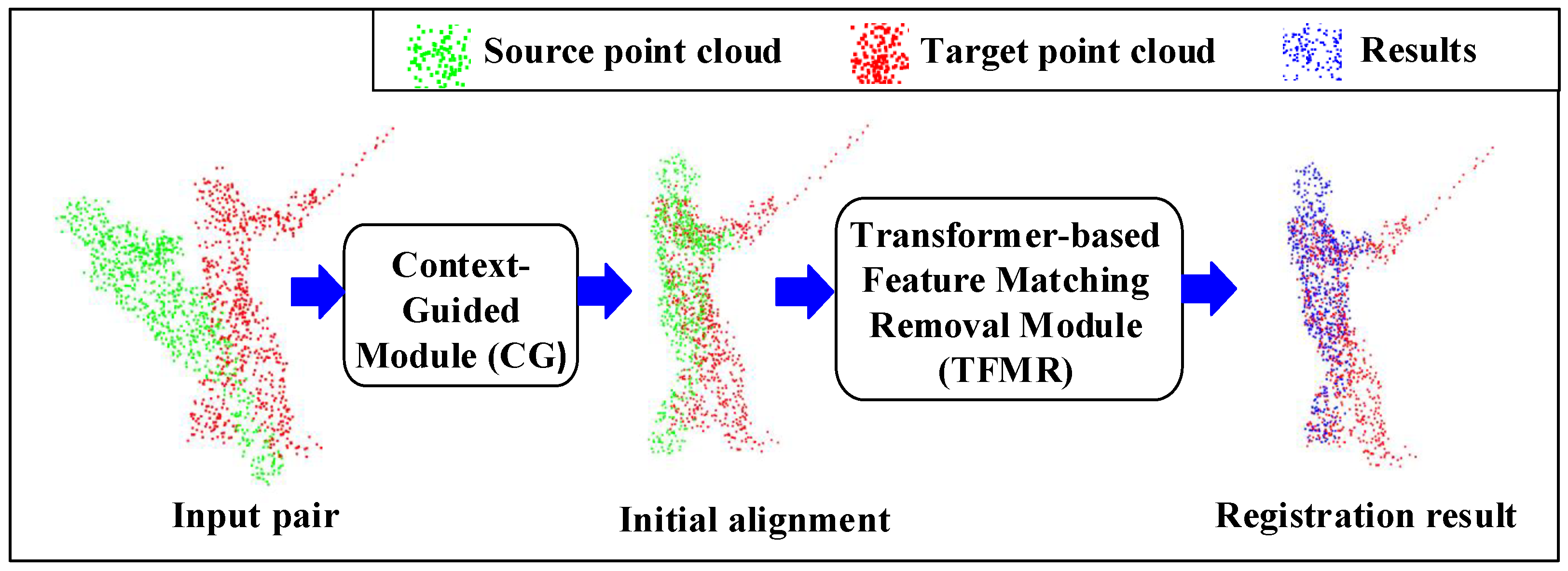

2.1. ROPNet

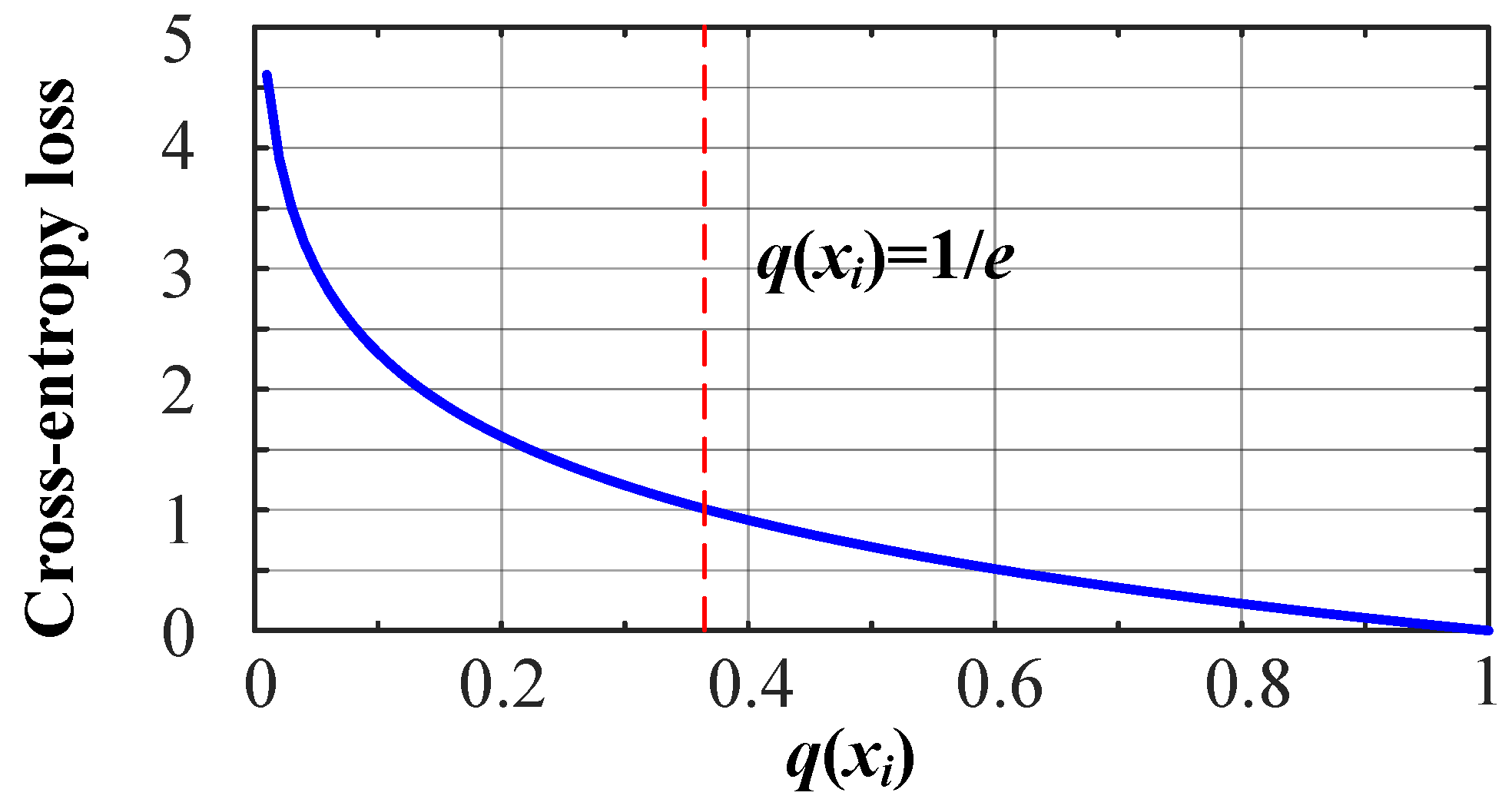

2.2. Cross-Entropy Loss

2.3. Attention Mechanism

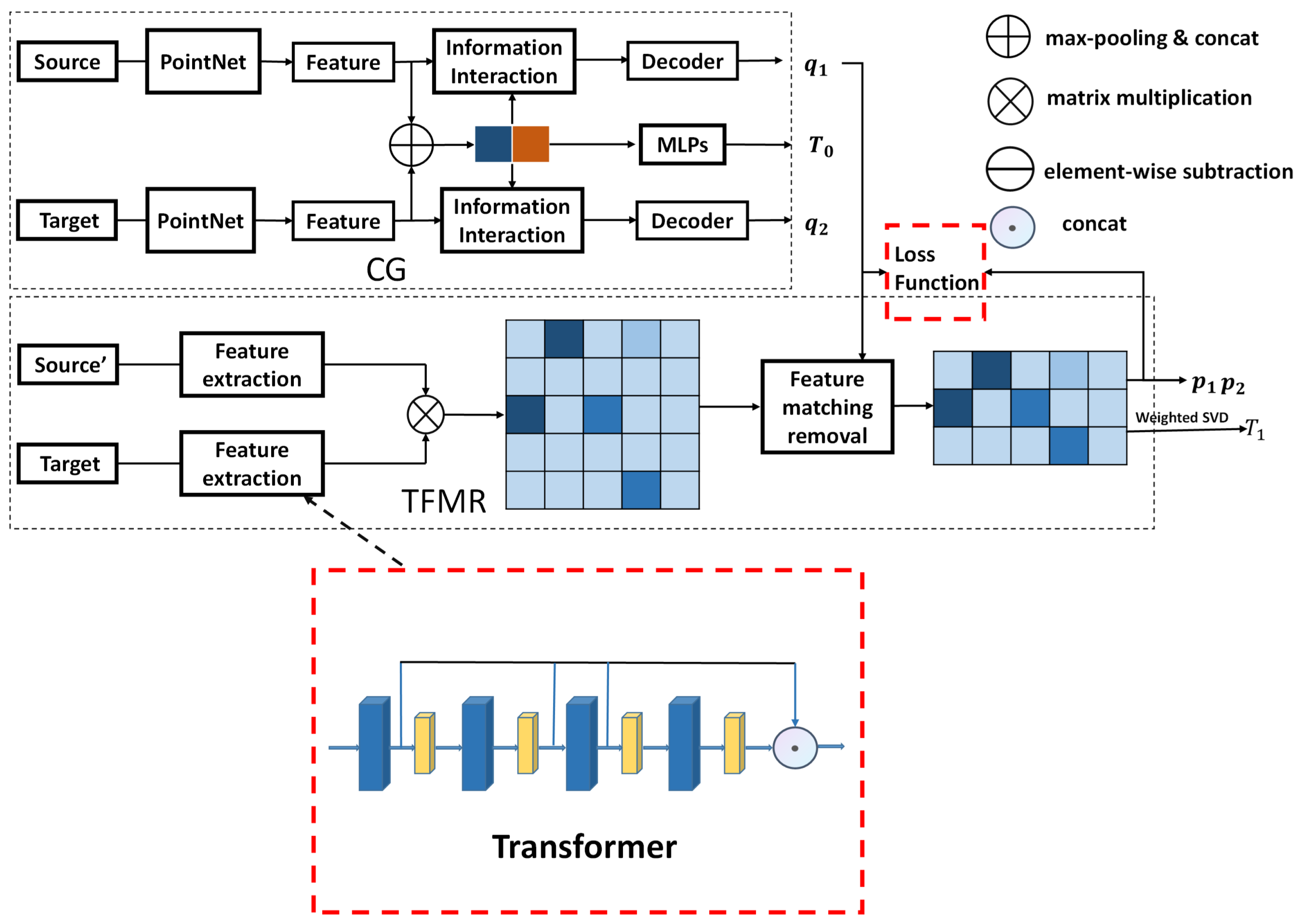

3. Proposed Method

3.1. Modification of Loss Function

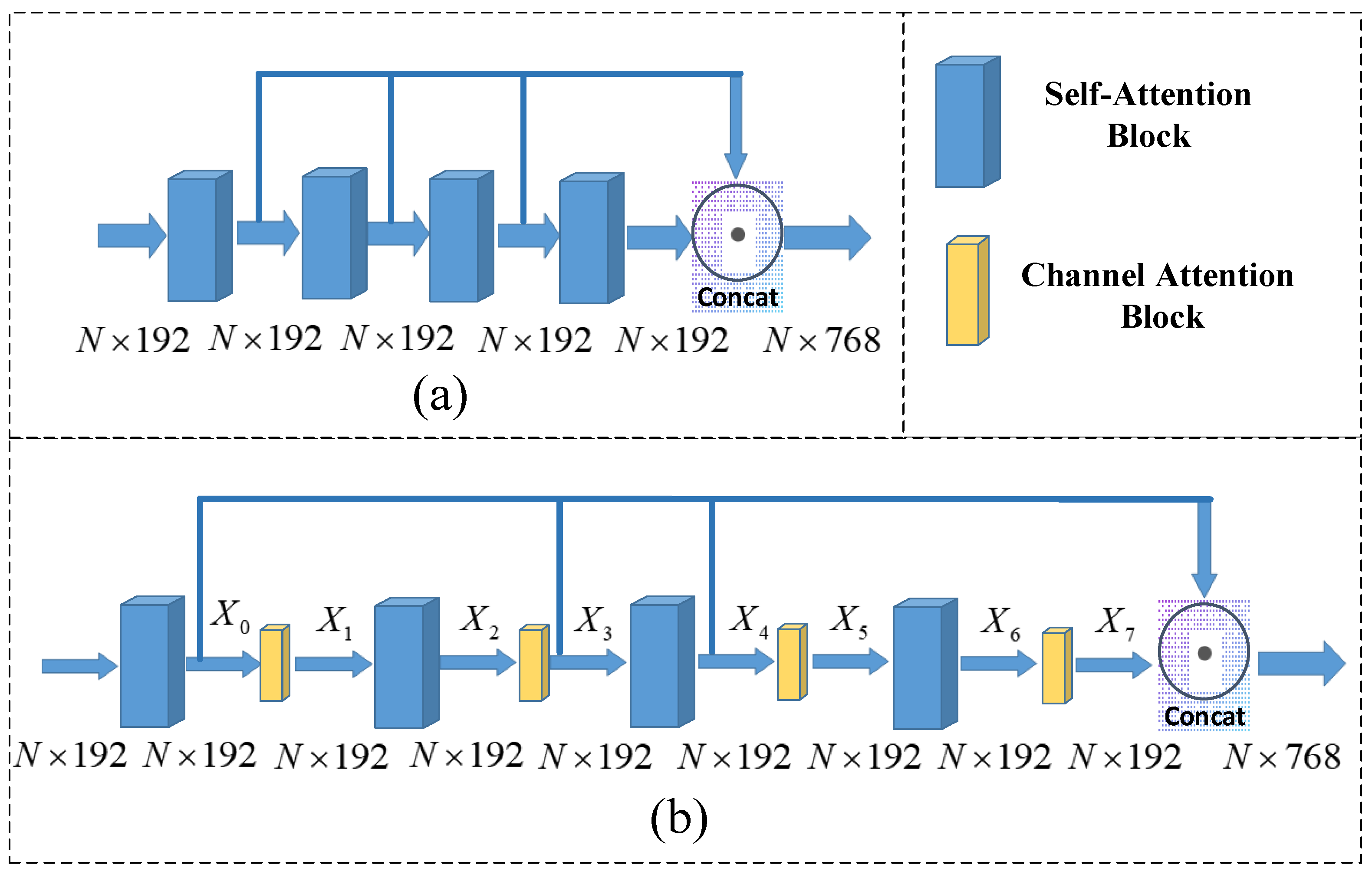

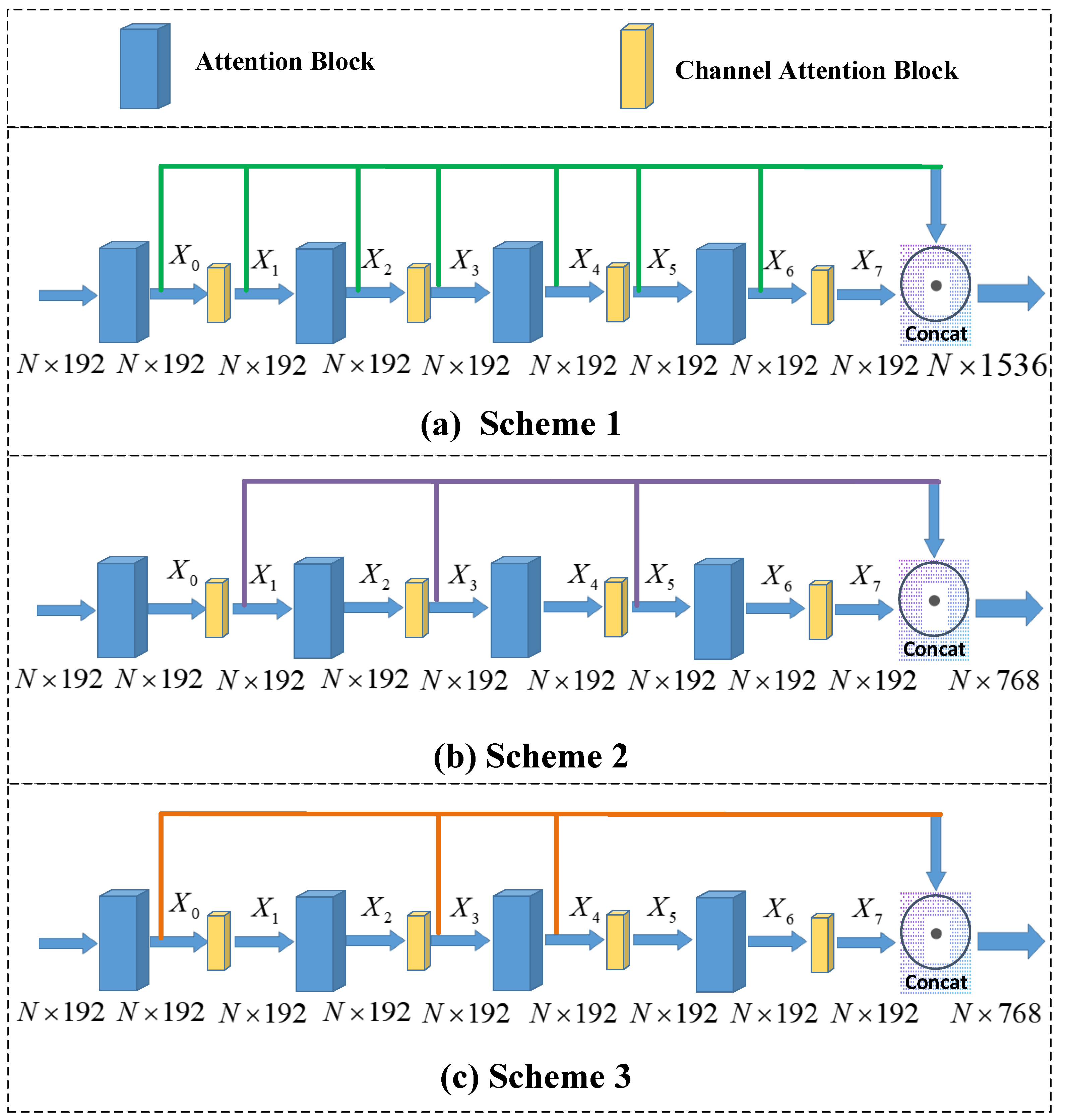

3.2. Channel Attention-Based ROPNet

3.3. Overall Improved Architecture

4. Experiments

4.1. Dataset and Evaluation Metrics

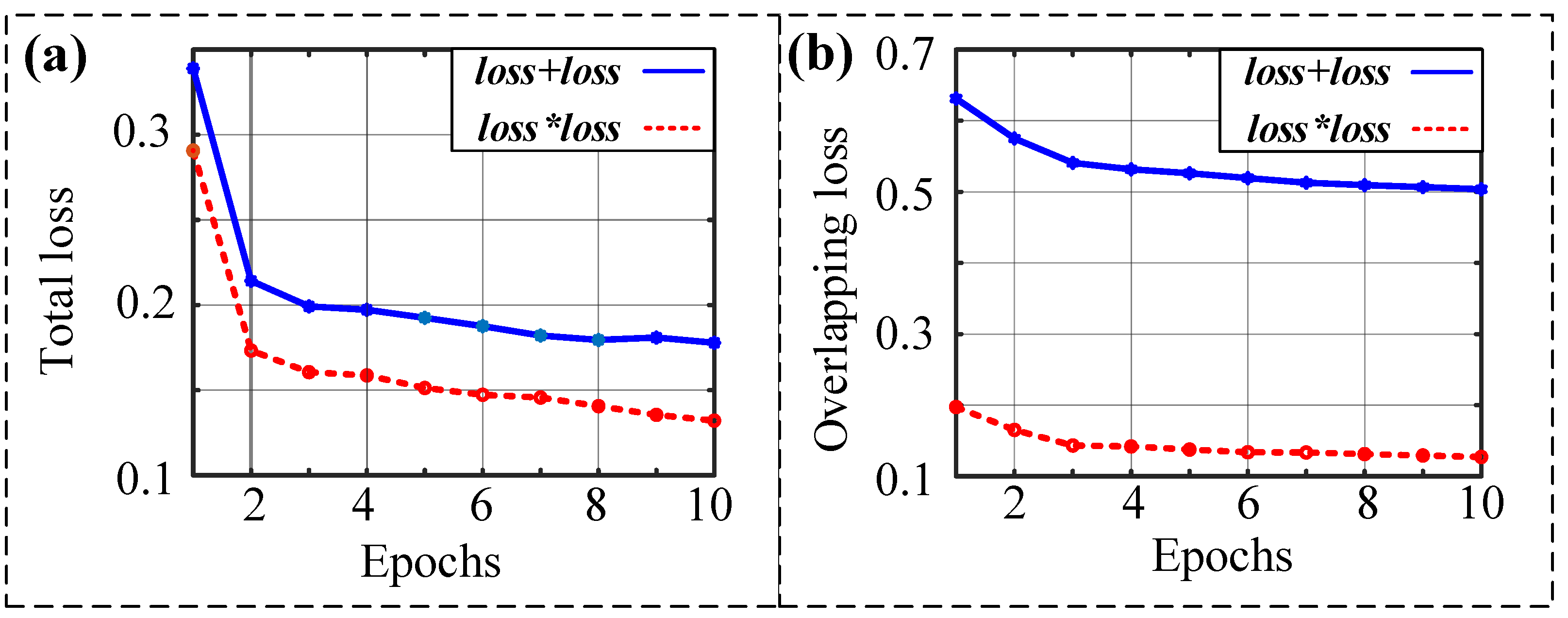

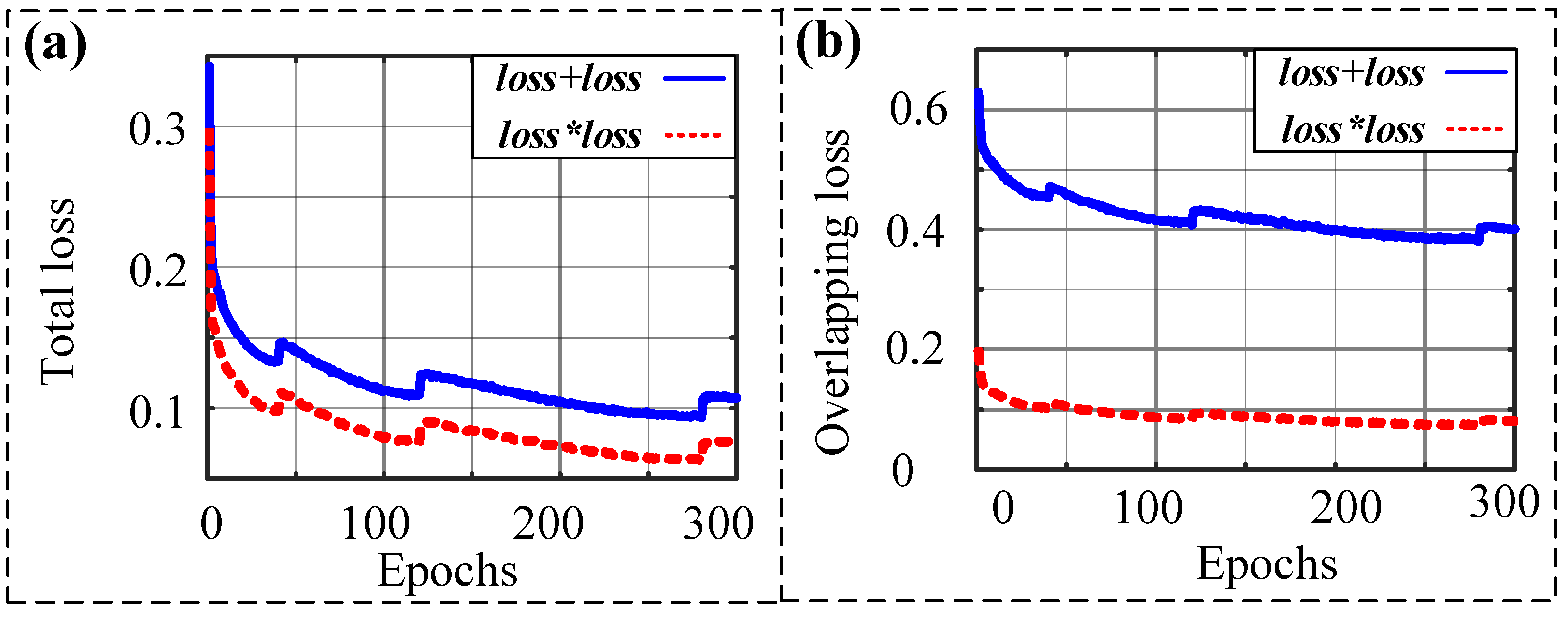

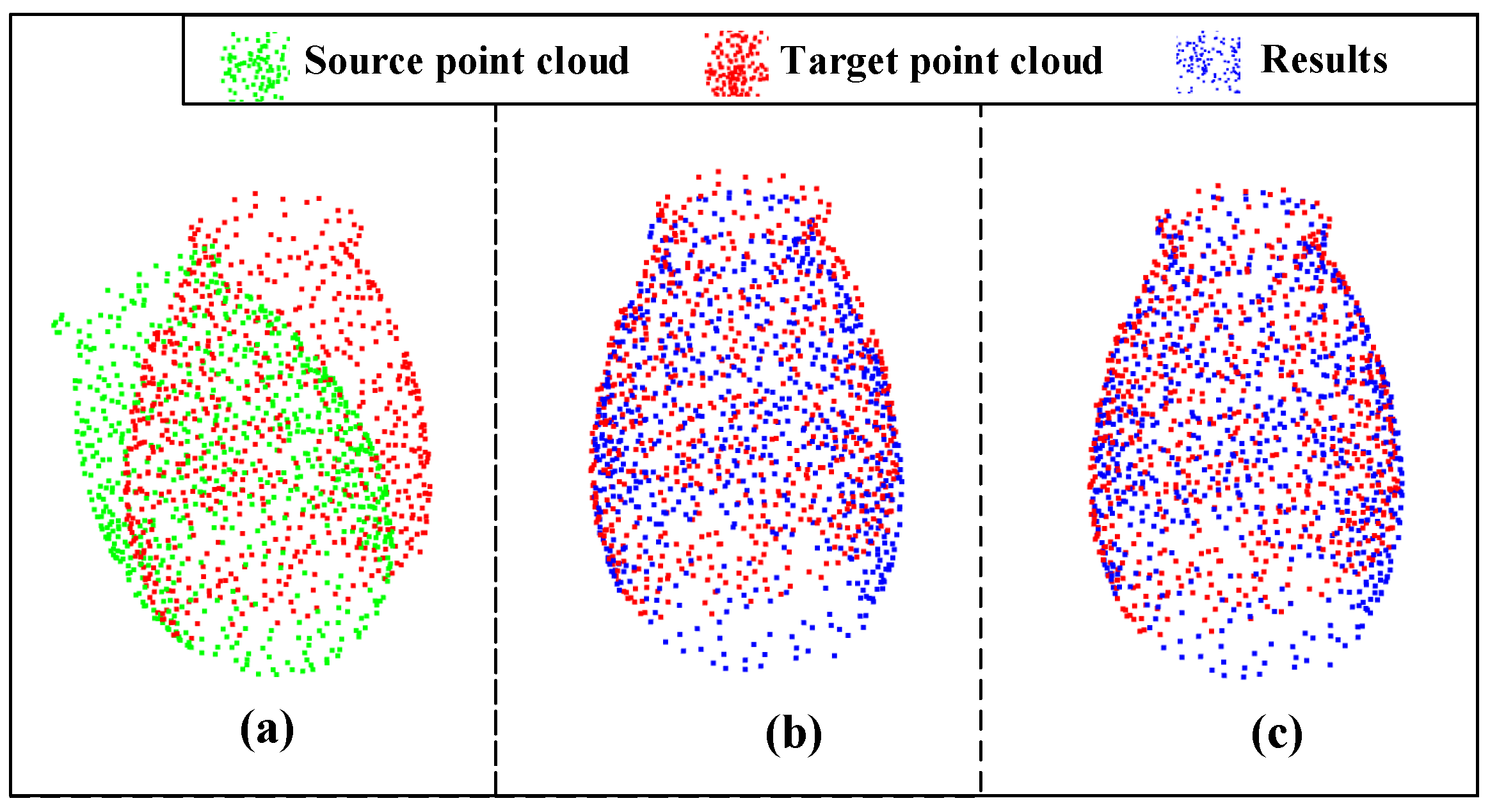

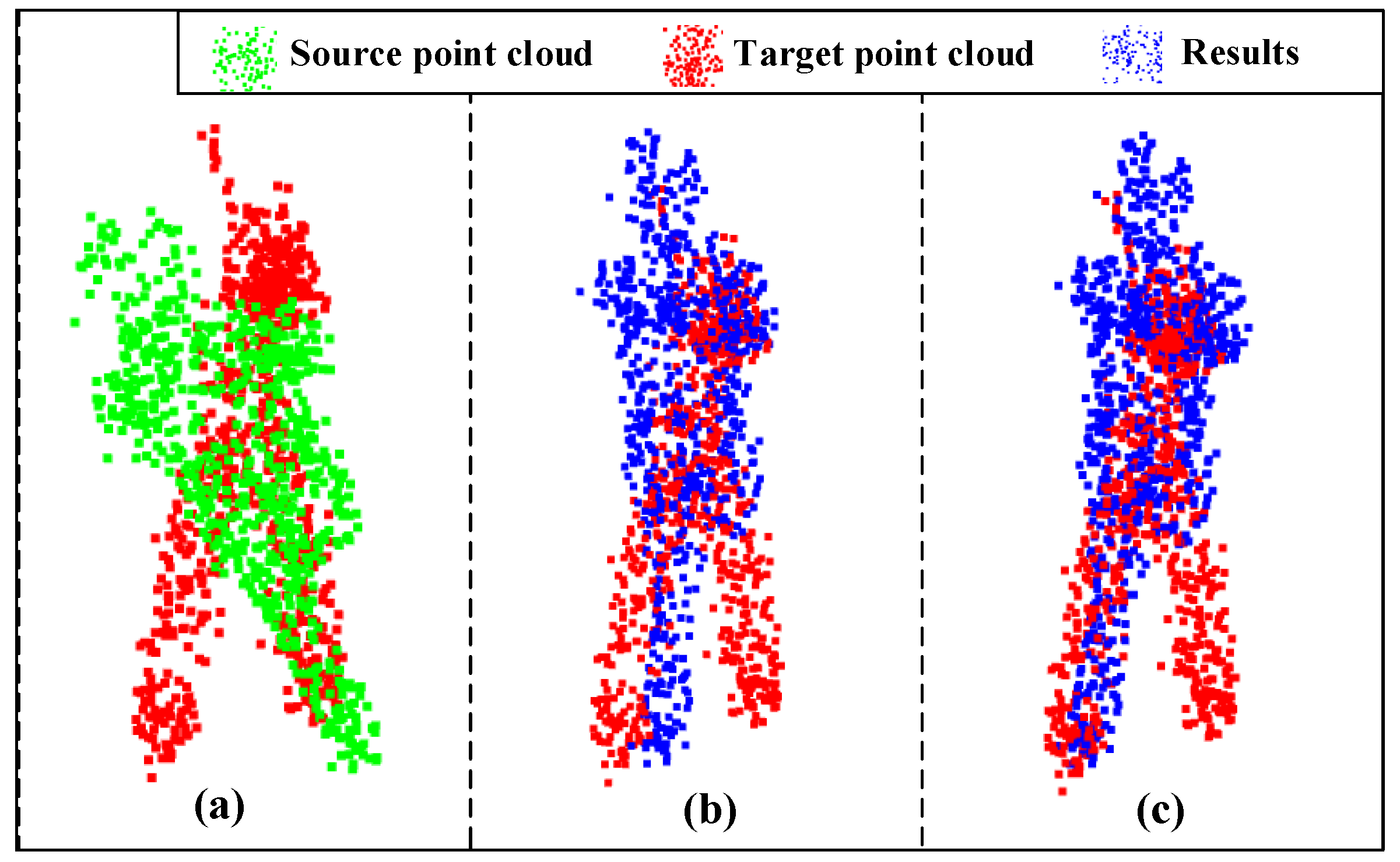

4.2. Improvement by New Loss Function

4.3. Ablation Experiment

4.4. Combination of Two Improvements

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 559–568. [Google Scholar]

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. [Google Scholar]

- Elhousni, M.; Huang, X. Review on 3d lidar localization for autonomous driving cars. arXiv 2020, arXiv:2006.00648. [Google Scholar]

- Nagy, B.; Benedek, C. Real-time point cloud alignment for vehicle localization in a high resolution 3D map. In Proceedings of the 15th European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Zhang, A.; Min, Z.; Zhang, Z.; Meng, M.Q.-H. Generalized point set registration with fuzzy correspondences based on variational Bayesian Inference. IEEE Trans. Fuzzy Syst. 2022, 30, 1529–1540. [Google Scholar] [CrossRef]

- Fu, Y.; Brown, N.M.; Saeed, S.U.; Casamitjana, A.; Baum, Z.M.C.; Delaunay, R.; Yang, Q.; Grimwood, A.; Min, Z.; Blumberg, S.B.; et al. DeepReg: A deep learning toolkit for medical image registration. arXiv 2020, arXiv:2011.02580. [Google Scholar] [CrossRef]

- Li, Y.; Harada, T. Lepard: Learning partial point cloud matching in rigid and deformable scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 20–25 June 2022; pp. 5554–5564. [Google Scholar]

- Min, Z.; Wang, J.; Pan, J.; Meng, M.-Q.H. Generalized 3-D point set registration with hybrid mixture models for computer-assisted orthopedic surgery: From isotropic to anisotropic positional error. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1679–1691. [Google Scholar] [CrossRef]

- Min, Z.; Liu, J.; Liu, L.; Meng, M.-Q.H. Generalized coherent point drift with multi-variate gaussian distribution and watson distribution. IEEE Robot. Autom. Lett. 2021, 6, 6749–6756. [Google Scholar] [CrossRef]

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. Sensor fusion IV: Control paradigms and data structures. Spie 1992, 1611, 586–606. [Google Scholar]

- Sarode, V.; Li, X.; Goforth, H.; Aoki, Y.; Srivatsan, R.A.; Lucey, S.; Choset, H. Pcrnet: Point cloud registration network using pointnet encoding. arXiv 2019, arXiv:1908.07906. [Google Scholar]

- Bai, X.; Luo, Z.; Zhou, L.; Fu, H.; Quan, L.; Tai, C.-L. D3feat: Joint learning of dense detection and description of 3d local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6359–6367. [Google Scholar]

- Li, J.; Zhang, C.; Xu, Z.; Zhou, H.; Zhang, C. Iterative distance-aware similarity matrix convolution with mutual-supervised point elimination for efficient point cloud registration. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 378–394. [Google Scholar]

- Huang, S.; Gojcic, Z.; Usvyatsov, M.; Wieser, A.; Schindler, K. Predator: Registration of 3d point clouds with low overlap. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4267–4276. [Google Scholar]

- Yew, Z.J.; Lee, G.H. Rpm-net: Robust point matching using learned features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11824–11833. [Google Scholar]

- Zhu, L.; Liu, D.; Lin, C.; Yan, R.; Gómez-Fernández, F.; Yang, N.; Feng, Z. Point cloud registration using representative overlapping points. arXiv 2021, arXiv:2107.02583. [Google Scholar]

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 499–515. [Google Scholar]

- Sun, Y.; Cheng, C.; Zhang, Y.; Zhang, C.; Zheng, L.; Wang, Z.; Wei, Y. Circle loss: A unified perspective of pair similarity optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6398–6407. [Google Scholar]

- Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice loss for data-imbalanced NLP tasks. arXiv 2019, arXiv:1911.02855. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Pihur, V.; Datta, S.; Datta, S. Weighted rank aggregation of cluster validation measures: A monte carlo cross-entropy approach. Bioinformatics 2007, 23, 1607–1615. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jadon, S. A survey of loss functions for semantic segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Viña del Mar, Chile, 27–29 October 2020; pp. 1–7. [Google Scholar]

- Guo, M.H.; Cai, J.X.; Liu, Z.N.; Mu, T.J.; Martin, R.R.; Hu, S.M. Pct: Point cloud transformer. IEEE Comput. Vis. Media 2021, 7, 187–199. [Google Scholar] [CrossRef]

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268. [Google Scholar]

- Xu, H.; Liu, S.; Wang, G.; Liu, G.; Zeng, B. Omnet: Learning overlapping mask for partial-to-partial point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3132–3141. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, March 2018; pp. 3–19. [Google Scholar]

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154. [Google Scholar]

- Qiu, S.; Wu, Y.; Anwar, S.; Li, C. Investigating attention mechanism in 3d point cloud object detection. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 403–412. [Google Scholar]

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920. [Google Scholar]

| AO | TO | |||||||

|---|---|---|---|---|---|---|---|---|

| Error(R) | Error(t) | MAE(R) | MAE(t) | Error(R) | Error(t) | MAE(R) | MAE(t) | |

| noiseless | ||||||||

| loss*loss | 1.2994 | 0.0138 | 0.6186 | 0.0066 | 1.6687 | 0.0146 | 0.7634 | 0.0069 |

| loss+loss | 1.3926 | 0.0141 | 0.6287 | 0.0067 | 1.7064 | 0.0151 | 0.7951 | 0.0072 |

| noise | ||||||||

| loss*loss | 1.47 | 0.016 | 0.7378 | 0.0076 | 1.8856 | 0.0178 | 0.9303 | 0.0085 |

| loss+loss | 1.5239 | 0.0163 | 0.7516 | 0.0078 | 1.9385 | 0.0179 | 0.9606 | 0.0086 |

| AO | TO | |||||||

|---|---|---|---|---|---|---|---|---|

| Error(R) | Error(t) | MAE(R) | MAE(t) | Error(R) | Error(t) | MAE(R) | MAE(t) | |

| noiseless | ||||||||

| loss+loss | 1.191 | 0.0113 | 0.5089 | 0.0053 | 1.4868 | 0.0127 | 0.6514 | 0.006 |

| 0*loss | 2.8917 | 0.0311 | 1.5149 | 0.0148 | 3.2228 | 0.0327 | 1.6547 | 0.0156 |

| loss*loss | 1.1819 | 0.011 | 0.5001 | 0.0052 | 1.4255 | 0.0122 | 0.6241 | 0.0058 |

| noise | ||||||||

| loss+loss | 1.3935 | 0.0135 | 0.6502 | 0.0065 | 1.7197 | 0.0154 | 0.8458 | 0.0074 |

| 0*loss | 3.0078 | 0.0327 | 1.6225 | 0.0156 | 3.5492 | 0.0362 | 1.8584 | 0.0173 |

| loss*loss | 1.3308 | 0.0136 | 0.6305 | 0.0066 | 1.6164 | 0.0145 | 0.7754 | 0.0070 |

| AO | TO | |||||||

|---|---|---|---|---|---|---|---|---|

| Error(R) | Error(t) | MAE(R) | MAE(t) | Error(R) | Error(t) | MAE(R) | MAE(t) | |

| noiseless | ||||||||

| Original result | 1.191 | 0.0113 | 0.5089 | 0.0053 | 1.4868 | 0.0127 | 0.6514 | 0.006 |

| Scheme 1 | 1.1754 | 0.0101 | 0.4759 | 0.0047 | 1.4637 | 0.0121 | 0.6508 | 0.0057 |

| Scheme 2 | 1.233 | 0.0113 | 0.5304 | 0.0054 | 1.4461 | 0.0123 | 0.6536 | 0.0058 |

| Scheme 3 | 1.2215 | 0.0114 | 0.5057 | 0.0053 | 1.3976 | 0.0117 | 0.6215 | 0.0056 |

| noise | ||||||||

| Original result | 1.3935 | 0.0135 | 0.6502 | 0.0065 | 1.7197 | 0.0154 | 0.8458 | 0.0074 |

| Scheme 1 | 1.2797 | 0.0128 | 0.6055 | 0.0062 | 1.7157 | 0.0149 | 0.8235 | 0.0072 |

| Scheme 2 | 1.3741 | 0.0133 | 0.6389 | 0.0065 | 1.6765 | 0.0152 | 0.8302 | 0.0073 |

| Scheme 3 | 1.3357 | 0.0136 | 0.6162 | 0.0066 | 1.5287 | 0.0141 | 0.7399 | 0.0068 |

| AO | TO | |||||||

|---|---|---|---|---|---|---|---|---|

| Error(R) | Error(t) | MAE(R) | MAE(t) | Error(R) | Error(t) | MAE(R) | MAE(t) | |

| noiseless | ||||||||

| Original result | 1.191 | 0.0113 | 0.5089 | 0.0053 | 1.4868 | 0.0127 | 0.6514 | 0.006 |

| loss*loss | 1.1819 | 0.011 | 0.5001 | 0.0052 | 1.4255 | 0.0122 | 0.6241 | 0.0058 |

| Scheme 3 | 1.2215 | 0.0114 | 0.5057 | 0.0053 | 1.3976 | 0.0117 | 0.6215 | 0.0056 |

| Final result | 1.1335 | 0.0097 | 0.4717 | 0.0046 | 1.3855 | 0.011 | 0.5749 | 0.0052 |

| noise | ||||||||

| Original result | 1.3935 | 0.0135 | 0.6502 | 0.0065 | 1.7197 | 0.0154 | 0.8458 | 0.0074 |

| loss*loss | 1.3308 | 0.0136 | 0.6305 | 0.0066 | 1.6164 | 0.0145 | 0.7754 | 0.0070 |

| Scheme 3 | 1.3357 | 0.0136 | 0.6162 | 0.0066 | 1.5287 | 0.0141 | 0.7399 | 0.0068 |

| Final result | 1.2597 | 0.0123 | 0.5953 | 0.0059 | 1.5916 | 0.0137 | 0.7467 | 0.0066 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Yang, F.; Zheng, W. A Novel Point Cloud Registration Method Based on ROPNet. Sensors 2023, 23, 993. https://doi.org/10.3390/s23020993

Li Y, Yang F, Zheng W. A Novel Point Cloud Registration Method Based on ROPNet. Sensors. 2023; 23(2):993. https://doi.org/10.3390/s23020993

Chicago/Turabian StyleLi, Yuan, Fang Yang, and Wanning Zheng. 2023. "A Novel Point Cloud Registration Method Based on ROPNet" Sensors 23, no. 2: 993. https://doi.org/10.3390/s23020993

APA StyleLi, Y., Yang, F., & Zheng, W. (2023). A Novel Point Cloud Registration Method Based on ROPNet. Sensors, 23(2), 993. https://doi.org/10.3390/s23020993