Communication and Computing Task Allocation for Energy-Efficient Fog Networks

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work

1.3. Contribution and Work Outline

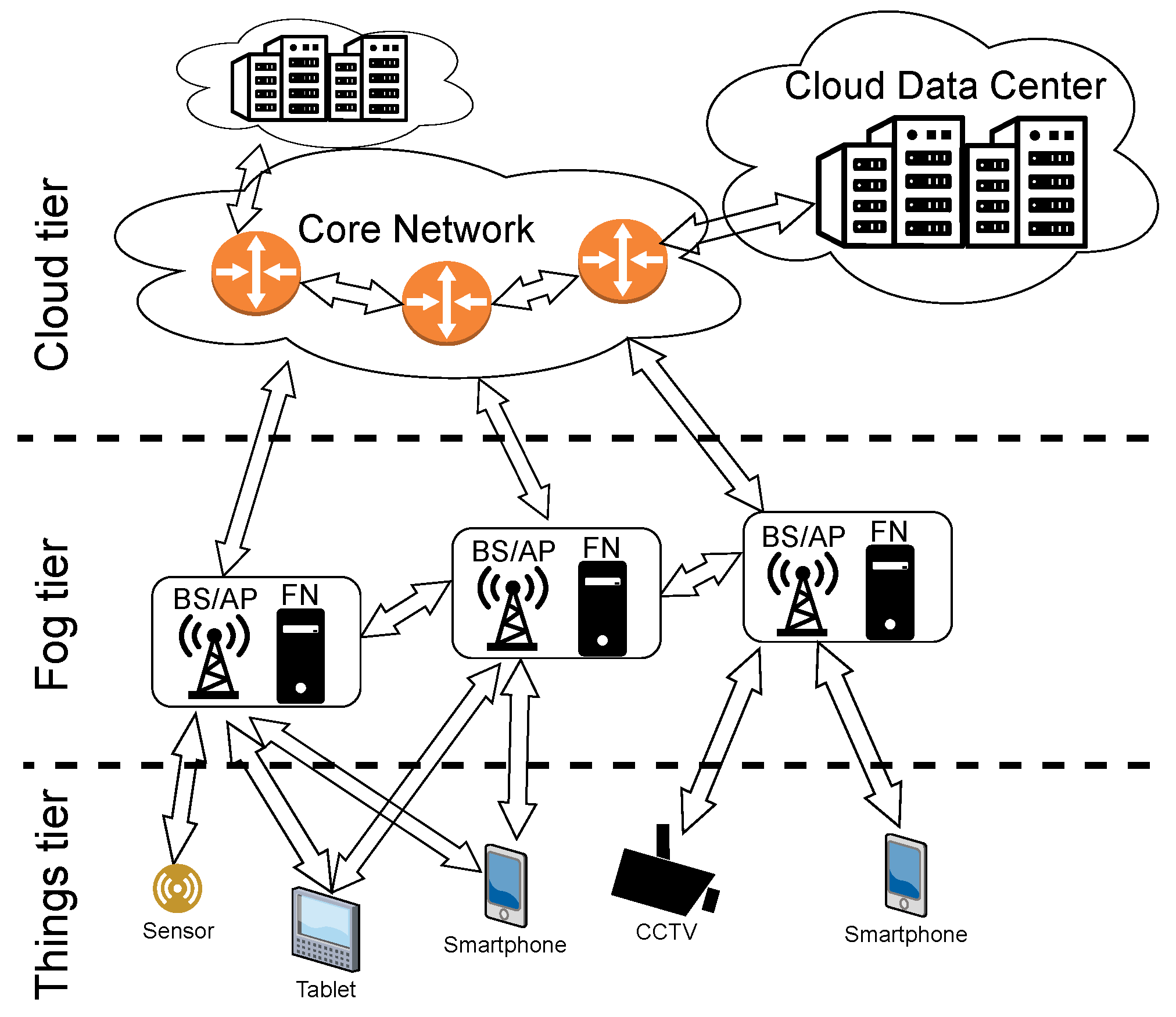

2. Network Model

2.1. Computational Requests

- MD $m^r$, which offloads the task (letters in superscript are used throughout this work as upper indices, not exponents; e.g., $m^r$ does not denote m to the power of r);

- Size in bits;

- Arithmetic intensity in FLOP/bit;

- Ratio of the size of the result of the processed task r to the size of the offloaded task r;

- Maximum tolerated delay .
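The five attributes listed above fully describe a request, so a compact record type can capture them. The sketch below makes several assumptions that are not from the paper: the class and field names are hypothetical, and the two derived quantities follow directly from the units given. Total work is size (bit) × arithmetic intensity (FLOP/bit) = FLOP, and the result size is the offloaded size scaled by the output-to-input ratio.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """One computational request r. Field names are hypothetical stand-ins for the paper's symbols."""

    md: int                        # index of the Mobile Device (MD) offloading the task
    size_bits: float               # size of the offloaded task, in bits
    intensity_flop_per_bit: float  # arithmetic intensity, in FLOP/bit
    output_ratio: float            # size of the result divided by size of the offloaded task
    max_delay_s: float             # maximum tolerated delay, in seconds

    def __post_init__(self) -> None:
        if self.size_bits <= 0 or self.intensity_flop_per_bit <= 0:
            raise ValueError("size and arithmetic intensity must be positive")
        if self.output_ratio < 0 or self.max_delay_s <= 0:
            raise ValueError("output ratio must be non-negative and the delay bound positive")

    @property
    def total_flop(self) -> float:
        """Work to perform: bits x (FLOP/bit) = FLOP."""
        return self.size_bits * self.intensity_flop_per_bit

    @property
    def result_bits(self) -> float:
        """Size of the processed result returned to the MD."""
        return self.size_bits * self.output_ratio


# 1 MB is taken as 8e6 bits here (an assumption; the source does not state the convention).
r = Request(md=0, size_bits=8e6, intensity_flop_per_bit=100, output_ratio=0.125, max_delay_s=1.0)
print(r.total_flop, r.result_bits)  # 800000000.0 1000000.0
```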

| Symbol | Description |
|---|---|
| | set of all considered time instances at which one or more computational requests arrive |
| | set of all Mobile/End Devices |
| | set of all Fog Nodes |
| | set of all Cloud Nodes |
| | set of all computational requests arriving at |
| | size of request |
| | computational complexity of request |
| | MD which offloads the request |
| | output-to-input data size ratio of request |
| | maximum tolerated delay requirement for request |
| | time at which request arrives in the network, |
| | energy-per-bit cost of transmitting data between nodes x and y |
| | number of FLOPs performed per single clock cycle at node |
| | link bitrate between nodes x and y |
| | fiberline distance to CN |
| | a parameter characterizing delay depending on distance |
| | minimum clock frequency of node |
| | maximum clock frequency of node |
| , , , | parameters of the power model of CPU installed in node |
| | time at which node finishes computing its last task |
| | variable showing whether request is computed at node , |
| | variable showing whether request is transmitted wirelessly to node , |
| | clock frequency of node , |
| | energy efficiency (FLOPS per Watt) characterizing node |
| | power consumption related to computations at node |
| | energy spent on transmission and processing of request |
| | energy spent in the network on processing request |
| | energy spent on transmission of request |
| , | energy spent on wireless/wired transmission of request |
| | energy cost for transmission of request between nodes x and y |
| | energy cost of processing request at node |
| | total delay of request |
| | delay caused by transmitting request |
| , | wireless/wired delay of request |
| | delay of transmission of request between nodes x and y |
| | uplink delay of transmitting request to node , provided that |
| | queuing delay of request |
| | queuing delay of request at node , provided that |
| | computational delay caused by processing request |
| | computational delay caused by processing request at node |
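Several rows of the table relate to each other through units alone. FLOP per cycle × clock frequency gives FLOPS, and an efficiency in FLOPS per watt is the same as FLOP per joule. The table also splits total delay into transmission, queuing and computational parts, and splits energy into transmission and processing parts. The helpers below are a minimal sketch of those relations. Treating both splits as plain sums is an assumption that mirrors the table rows and is not the paper's exact equations. The function names and the 10 GFLOPS/W efficiency are hypothetical.

```python
def computational_delay_s(total_flop: float, flop_per_cycle: float, clock_hz: float) -> float:
    """Processing time = work / throughput, where throughput = FLOP per cycle x cycles per second."""
    return total_flop / (flop_per_cycle * clock_hz)


def processing_energy_j(total_flop: float, flops_per_watt: float) -> float:
    """FLOPS per watt equals FLOP per joule, so energy in joules = FLOP / (FLOP/J)."""
    return total_flop / flops_per_watt


def transmission_energy_j(bits: float, joule_per_bit: float) -> float:
    """Energy of moving `bits` over a link with a given energy-per-bit cost."""
    return bits * joule_per_bit


def total_delay_s(transmission_s: float, queuing_s: float, computational_s: float) -> float:
    # Assumed additive split, mirroring the total/transmitting/queuing/computational delay rows.
    return transmission_s + queuing_s + computational_s


def total_energy_j(transmission_j: float, processing_j: float) -> float:
    # Assumed additive split into transmission and processing energy.
    return transmission_j + processing_j


flop = 8e8                                       # e.g. 1 MB x 100 FLOP/bit
d_comp = computational_delay_s(flop, 16, 2.0e9)  # 16 FLOP/cycle at 2 GHz: about 0.025 s
e_proc = processing_energy_j(flop, 1e10)         # hypothetical 10 GFLOPS/W: about 0.08 J
e_tx = transmission_energy_j(8e6, 12e-9)         # 12 nJ/bit over 8e6 bits: about 0.096 J
print(total_delay_s(0.05, 0.0, d_comp), total_energy_j(e_tx, e_proc))
```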

2.2. Energy Consumption

2.3. Delay

2.4. Updating Scheduling Variables in the Fog

3. Optimization Problem

4. Problem Solution

4.1. Auxiliary Variables

| Symbol | Description |
|---|---|
| | variable showing whether request is transmitted wirelessly to node , provided that |
| | clock frequency of node , provided that and , |
| | total delay of , provided that and |
| | computational delay of , provided that and |
| | energy spent on processing of request , provided that and |
| | set of requests rejected due to delay requirements |
| | set of not rejected requests, |
| | variable showing whether request is transmitted wirelessly to node , provided that |
| | clock frequency of node , provided that and , |
| | total delay of , provided that and |
| | computational delay of , provided that and |
| | energy spent on processing of request , provided that and |
| | set of requests rejected due to delay requirements |
| | set of not rejected requests, |
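The auxiliary variables include a set of requests rejected due to delay requirements and its complement. The paper's exact rejection rule is not recoverable from this text. One plausible criterion, shown here as an assumption, is an optimistic lower bound on delay: no queuing, and the node clocked at its maximum frequency. If a request misses its deadline even in that best case, no allocation can meet it. The `Candidate` structure and the per-node transmission delays are hypothetical. Only the FLOP/cycle and maximum-frequency values come from the parameter table.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple


@dataclass(frozen=True)
class Candidate:
    """A node that could compute a request (hypothetical structure, not the paper's notation)."""

    name: str
    transmission_s: float  # delay of moving the request and its result to/from this node
    flop_per_cycle: float
    f_max_hz: float        # maximum clock frequency of the node


def best_case_delay_s(total_flop: float, candidates: Iterable[Candidate]) -> float:
    """Optimistic delay: no queuing, and the node runs at its maximum frequency."""
    return min(c.transmission_s + total_flop / (c.flop_per_cycle * c.f_max_hz) for c in candidates)


def split_requests(requests: Dict[str, Tuple[float, float]],
                   candidates: Iterable[Candidate]) -> Tuple[Set[str], Set[str]]:
    """requests maps id -> (total FLOP, max tolerated delay in s); returns (rejected, kept)."""
    candidates = list(candidates)  # allow re-iteration for every request
    rejected: Set[str] = set()
    kept: Set[str] = set()
    for rid, (flop, max_delay) in requests.items():
        target = rejected if best_case_delay_s(flop, candidates) > max_delay else kept
        target.add(rid)
    return rejected, kept


nodes = [Candidate("fog", 0.05, 16, 4.2e9), Candidate("cloud", 0.10, 32, 1.5e9)]
rejected, kept = split_requests({"A": (8e8, 0.5), "B": (2e10, 0.2)}, nodes)
print(sorted(rejected), sorted(kept))  # ['B'] ['A']
```

Request B needs about 0.35 s even on the fog node at 4.2 GHz, so it cannot meet its 0.2 s bound anywhere and is rejected.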

4.2. Finding Optimal Frequencies

4.3. Transmission Allocation

4.4. Computation Allocation

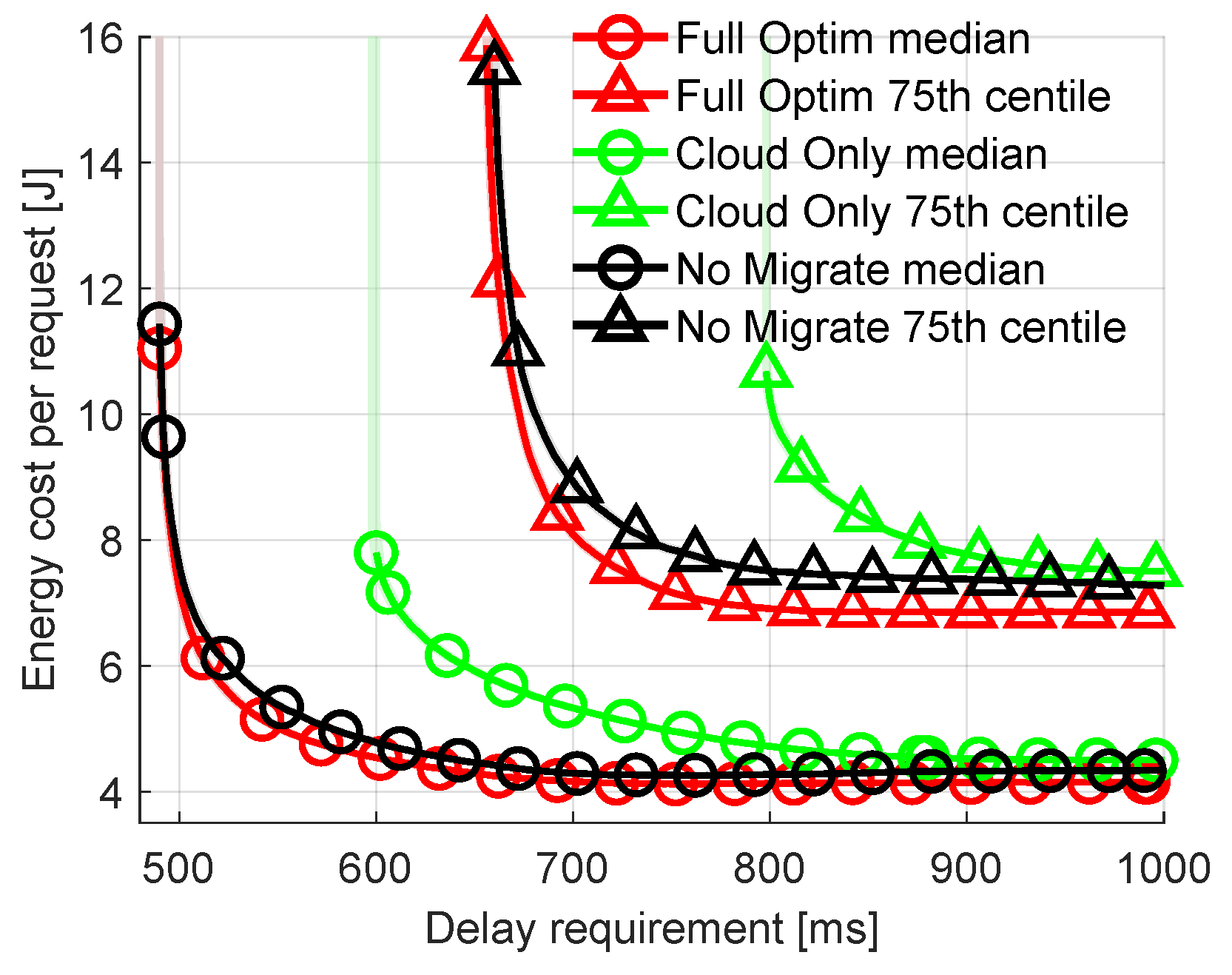

5. Results

5.1. Scenario Overview

5.2. Baseline/Suboptimal Solutions

5.3. Comparison with Exhaustive Search and All Possible Allocations

5.4. Impact of Network Parameters

5.5. Impact of Traffic Parameters

6. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AWS | Amazon Web Services |
| AP | Access Point |
| API | Application Programming Interface |
| BAN | Body Area Network |
| BS | Base Station |
| BSN | Body Sensor Network |
| CDN | Content Delivery Network |
| CDF | Cumulative Distribution Function |
| CPE | Customer Premises Equipment |
| CN | Cloud Node |
| CPU | Central Processing Unit |
| C-RAN | Cloud Radio Access Network |
| DC | Data Center |
| DVFS | Dynamic Voltage and Frequency Scaling |
| EA | Energy-Aware |
| ECG | Electrocardiogram |
| EEFFRA | Energy-EFFicient Resource Allocation |
| EH | Energy Harvesting |
| ETSI | European Telecommunications Standards Institute |
| EPON | Ethernet Passive Optical Network |
| FI | Fog Instance |
| FLOP | Floating Point Operation |
| FLOPS | Floating Point Operations per Second |
| FN | Fog Node |
| F-RAN | Fog Radio Access Network |
| GPS | Global Positioning System |
| GSM | Global System for Mobile communications |
| HD | High Definition |
| HT | High Throughput |
| IBStC | If Busy Send to Cloud |
| IBKiF | If Busy Keep in FN |
| IBStOF | If Busy Send to Other FN |
| IEEE | Institute of Electrical and Electronics Engineers |
| ICT | Information and Communication Technology |
| IoE | Internet of Everything |
| IoT | Internet of Things |
| IP | Internet Protocol |
| KKT | Karush–Kuhn–Tucker |
| LAN | Local Area Network |
| LC | Low-Complexity |
| LTE | Long Term Evolution |
| MCC | Mobile Cloud Computing |
| MINLP | Mixed Integer Nonlinear Programming |
| MD | Mobile Device |
| MEC | Mobile/Multi-Access Edge Computing |
| MIB | Management Information Base |
| nDC | nano Data Center |
| NFV | Network Function Virtualization |
| NR | New Radio |
| OSI | Open Systems Interconnection |
| PA | Power-Aware |
| PC | Personal Computer |
| PGN | Portable Game Notation |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| RAM | Random Access Memory |
| RAN | Radio Access Network |
| RFC | Request for Comments |
| RRH | Remote Radio Head |
| RTT | Round-Trip Time |
| SCA | Successive Convex Approximation |
| SCN | Small Cell Network |
| SDN | Software Defined Network |
| SDR | SemiDefinite Relaxation |
| SINR | Signal to Interference-plus-Noise Ratio |
| SM | Sleep Mode |
| SOA | Service Oriented Architecture |
| TDM | Time Division Multiplexing |
| TE | Traffic Engineering |
| TM | Traffic Matrix/Matrices |
| V2V | Vehicle-to-Vehicle |
| V2X | Vehicle-to-Everything |
| VC | Virtual Cluster |
| VM | Virtual Machine |
| WAN | Wide Area Network |
| WDM | Wavelength Division Multiplexing |
| WE | Weekend day |
| WLAN | Wireless Local Area Network |

References

1. Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A Comprehensive Survey on Fog Computing: State-of-the-Art and Research Challenges. IEEE Commun. Surv. Tutor. 2018, 20, 416–464.

2. Shih, Y.; Chung, W.; Pang, A.; Chiu, T.; Wei, H. Enabling Low-Latency Applications in Fog-Radio Access Networks. IEEE Netw. 2017, 31, 52–58.

3. Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and Its Role in the Internet of Things. In Proceedings of the Mobile Cloud Computing (MCC) Workshop, Helsinki, Finland, 17 August 2012.

4. Google. Google Environmental Report 2019. Technical Report, Google, 2019. Available online: https://www.gstatic.com/gumdrop/sustainability/google-2019-environmental-report.pdf (accessed on 11 April 2022).

5. Ojagh, S.; Cauteruccio, F.; Terracina, G.; Liang, S.H. Enhanced air quality prediction by edge-based spatiotemporal data preprocessing. Comput. Electr. Eng. 2021, 96, 107572.

6. Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q.S. Offloading in Mobile Edge Computing: Task Allocation and Computational Frequency Scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.

7. You, C.; Huang, K.; Chae, H.; Kim, B.H. Energy-Efficient Resource Allocation for Mobile-Edge Computation Offloading. IEEE Trans. Wirel. Commun. 2017, 16, 1397–1411.

8. Liu, L.; Chang, Z.; Guo, X. Socially Aware Dynamic Computation Offloading Scheme for Fog Computing System With Energy Harvesting Devices. IEEE Internet Things J. 2018, 5, 1869–1879.

9. Bai, W.; Qian, C. Deep Reinforcement Learning for Joint Offloading and Resource Allocation in Fog Computing. In Proceedings of the 2021 IEEE 12th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 20–21 August 2021; pp. 131–134.

10. Vu, T.T.; Nguyen, D.N.; Hoang, D.T.; Dutkiewicz, E.; Nguyen, T.V. Optimal Energy Efficiency with Delay Constraints for Multi-Layer Cooperative Fog Computing Networks. IEEE Trans. Commun. 2021, 69, 3911–3929.

11. Deng, R.; Lu, R.; Lai, C.; Luan, T.H.; Liang, H. Optimal Workload Allocation in Fog-Cloud Computing Toward Balanced Delay and Power Consumption. IEEE Internet Things J. 2016, 3, 1171–1181.

12. Kopras, B.; Idzikowski, F.; Kryszkiewicz, P. Power Consumption and Delay in Wired Parts of Fog Computing Networks. In Proceedings of the 2019 IEEE Sustainability through ICT Summit (StICT), Montreal, QC, Canada, 18–19 June 2019.

13. Vakilian, S.; Fanian, A. Enhancing Users’ Quality of Experienced with Minimum Energy Consumption by Fog Nodes Cooperation in Internet of Things. In Proceedings of the 2020 28th Iranian Conference on Electrical Engineering (ICEE), Tabriz, Iran, 4–6 August 2020.

14. Khumalo, N.; Oyerinde, O.; Mfupe, L. Reinforcement Learning-based Computation Resource Allocation Scheme for 5G Fog-Radio Access Network. In Proceedings of the 2020 Fifth International Conference on Fog and Mobile Edge Computing (FMEC), Paris, France, 20–23 April 2020; pp. 353–355.

15. Ghanavati, S.; Abawajy, J.; Izadi, D. An Energy Aware Task Scheduling Model Using Ant-Mating Optimization in Fog Computing Environment. IEEE Trans. Serv. Comput. 2022, 15, 2007–2017.

16. Sarkar, S.; Chatterjee, S.; Misra, S. Assessment of the Suitability of Fog Computing in the Context of Internet of Things. IEEE Trans. Cloud Comput. 2018, 6, 46–59.

17. Kopras, B.; Bossy, B.; Idzikowski, F.; Kryszkiewicz, P.; Bogucka, H. Task Allocation for Energy Optimization in Fog Computing Networks With Latency Constraints. IEEE Trans. Commun. 2022, 70, 8229–8243.

18. Strohmaier, E.; Dongarra, J.; Simon, H.; Martin, M. Green500 List for June 2020. Available online: https://www.top500.org/lists/green500/2020/06/ (accessed on 7 April 2022).

19. Dolbeau, R. Theoretical peak FLOPS per instruction set: A tutorial. J. Supercomput. 2018, 74, 1341–1377.

20. Park, S.; Park, J.; Shin, D.; Wang, Y.; Xie, Q.; Pedram, M.; Chang, N. Accurate Modeling of the Delay and Energy Overhead of Dynamic Voltage and Frequency Scaling in Modern Microprocessors. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2013, 32, 695–708.

21. Jalali, F.; Hinton, K.; Ayre, R.; Alpcan, T.; Tucker, R.S. Fog Computing May Help to Save Energy in Cloud Computing. IEEE J. Sel. Areas Commun. 2016, 34, 1728–1739.

22. Van Heddeghem, W.; Idzikowski, F.; Le Rouzic, E.; Mazeas, J.Y.; Poignant, H.; Salaun, S.; Lannoo, B.; Colle, D. Evaluation of power rating of core network equipment in practical deployments. In Proceedings of the OnlineGreenComm, Online, 25–28 September 2012.

23. Van Heddeghem, W.; Idzikowski, F.; Vereecken, W.; Colle, D.; Pickavet, M.; Demeester, P. Power consumption modeling in optical multilayer networks. Photonic Netw. Commun. 2012, 24, 86–102.

24. Olbrich, M.; Nadolni, F.; Idzikowski, F.; Woesner, H. Measurements of Path Characteristics in PlanetLab; Technical Report TKN-09-005; TU Berlin: Berlin, Germany, 2009.

25. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

26. Edmonds, J.; Karp, R.M. Theoretical Improvements in Algorithmic Efficiency for Network Flow Problems. J. ACM 1972, 19, 248–264.

27. Wong, H. A Comparison of Intel’s 32 nm and 22 nm Core i5 CPUs: Power, Voltage, Temperature and Frequency. 2012. Available online: http://blog.stuffedcow.net/2012/10/intel32nm-22nm-core-i5-comparison/ (accessed on 7 April 2022).

28. Gunaratne, C.; Christensen, K.; Nordman, B. Managing energy consumption costs in desktop PCs and LAN switches with proxying, split TCP connections and scaling of link speed. Int. J. Netw. Manag. 2005, 15, 297–310.

29. Kryszkiewicz, P.; Kliks, A.; Kulacz, L.; Bossy, B. Stochastic Power Consumption Model of Wireless Transceivers. Sensors 2020, 20, 4704.

30. IEEE Standard 802.11-2020; IEEE Standard for Information Technology–Telecommunications and Information Exchange between Systems—Local and Metropolitan Area Networks–Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications. IEEE: Piscataway, NJ, USA, 2021.

31. ITU. Propagation Data and Prediction Methods for the Planning of Indoor Radiocommunication Systems and Radio Local Area Networks in the Frequency Range 300 MHz to 450 GHz; ITU: Geneva, Switzerland, 2019.

32. Intel. Intel Delivers New Architecture for Discovery with Intel Xeon Phi Coprocessor. 2012. Available online: https://newsroom.intel.com/news-releases/intel-delivers-new-architecture-for-discovery-with-intel-xeon-phi-coprocessors/ (accessed on 7 April 2022).

33. Gunaratne, C.; Christensen, K.; Nordman, B.; Suen, S. Reducing the Energy Consumption of Ethernet with Adaptive Link Rate (ALR). IEEE Trans. Comput. 2008, 57, 448–461.

34. European Commission, Joint Research Centre; Bertoldi, P. EU Code of Conduct on Energy Consumption of Broadband Equipment: Version 6; Publications Office of the European Union: Luxembourg, 2017.

35. Strohmaier, E.; Dongarra, J.; Simon, H.; Martin, M. Green500 List for November 2021. Available online: https://www.top500.org/lists/green500/list/2021/11/ (accessed on 7 April 2022).

Energy (E) consumption and delay (D) in the MD, MD-FN, FN, FN-FN, FN-CN and CN columns: Optim. = optimized, Cons. = considered, Ign. = ignored, N/A = not applicable.

| Work | MD | MD-FN | FN | FN-FN | FN-CN | CN | Requests |
|---|---|---|---|---|---|---|---|
| Dinh et al. [6] | Optim. incl. frequency | Optim. | Ign. E, Cons. D | N/A | Ign. E, Cons. D | Ign. E, Cons. D | Sets |
| You et al. [7] | Optim. | Optim. | Ign. E, Cons. D, One FN | N/A | Ign. E, Cons. D | Ign. E, Cons. D | Load |
| Liu et al. [8] | Optim. | Optim. | Ign. E, Cons. D | N/A | Ign. E, Cons. D | Ign. E, Cons. D | Load |
| Bai et al. [9] | Optim. | Optim. | Ign. E, Cons. D | N/A | Ign. E, Cons. D | Ign. | Sets |
| Vu et al. [10] | Optim. | Optim. | Ign. E, Cons. D | N/A | Ign. E, Cons. D | Ign. E, Cons. D | Sets |
| Deng et al. [11] | N/A | Ign. | Optim. incl. frequency | Ign. | Ign. E, Optim. D | Optim. incl. frequency | Load |
| Kopras et al. [12] | N/A | Cons. | Cons. | Ign. | Cons. | Cons. | Load |
| Vakilian et al. [13] | N/A | Ign. E, Cons. D | Optim. | Ign. E, Optim. D | Ign. E, Optim. D | Ign. E, Cons. D | Load |
| Khumalo et al. [14] | N/A | Optim. | Optim. | N/A | Ign. E, Cons. D | Ign. E, Cons. D | Load |
| Ghanavati et al. [15] | N/A | Optim. | Optim. | N/A | N/A | N/A | Sets |
| Sarkar et al. [16] | N/A | Cons. | Cons. | Ign. | Cons. | Cons. | Load |
| Kopras et al. [17] | N/A | Ign. E, Cons. D | Optim. incl. frequency | Optim. | Cons. | Cons. | Sets |
| This work | N/A | Optim. | Optim. incl. frequency | Optim. | Cons. | Cons. | Sets |

| Symbol | Value/Range | Symbol | Value/Range |
|---|---|---|---|
| Requests, | | | |
| | [1, 5] MB | | [1, 500] FLOP/bit |
| | [0.01, 0.2] | | [500, 3000] ms |
| | [5, 10] | | 200 ms |
| Computations in fog [19,20,27], | | | |
| , | 5.222, 34.256 | , | 88.594, −47.152 |
| | 1.6 GHz | | 4.2 GHz |
| | 16 FLOP/cycle | | |
| Computations in cloud [18,19], | | | |
| | 1.5 GHz | | 32 FLOP/cycle |
| Wired Transmission [23,24,28] | | | |
| , | 2000 km | | 7500 ns/km |
| | 10 Gbps | | 1 Gbps |
| , | {2, 3} × 2 nJ/bit | , | 12 nJ/bit |
| Wireless Transmission [29,30,31] | | | |
| , , | depends on rate and path loss | , , | {0, 6.5, 13, 18.5, 26, 39, 52, 58.5, 65} Mbps |
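To give a sense of scale, the sketch below plugs the table's values into the unit relations for the largest, most compute-heavy request (5 MB at 500 FLOP/bit). Several assumptions are made here:

- 1 MB is taken as 10^6 bytes.
- The 1 Gbps rate is used for the 2000 km path to the cloud. The table lists both 10 Gbps and 1 Gbps, but which link each applies to is not recoverable here.
- Wired delay is modeled as serialization time plus 7500 ns/km of distance.

The helper `min_feasible_freq_hz` (hypothetical name) returns the lowest clock within [1.6, 4.2] GHz that meets a given compute-time budget. Whether that clock is also energy-optimal depends on the CPU power model with the four fitted parameters above, whose functional form is not reproduced here.

```python
import math
from typing import Optional

MB_BITS = 8e6  # assumption: 1 MB = 10**6 bytes

# Values taken from the simulation-parameter table.
FOG_FLOP_PER_CYCLE, FOG_F_MIN_HZ, FOG_F_MAX_HZ = 16, 1.6e9, 4.2e9
CN_FLOP_PER_CYCLE, CN_F_HZ = 32, 1.5e9
CN_DISTANCE_KM, DELAY_PER_KM_S = 2000, 7500e-9
WIFI_RATES_MBPS = [0, 6.5, 13, 18.5, 26, 39, 52, 58.5, 65]


def wired_delay_s(bits: float, rate_bps: float, distance_km: float = 0.0) -> float:
    """Serialization time plus a distance-proportional term (7500 ns/km)."""
    return bits / rate_bps + distance_km * DELAY_PER_KM_S


def wireless_delay_s(bits: float, rate_mbps: float) -> float:
    """Airtime at a given rate; a rate of 0 Mbps means no usable link."""
    return math.inf if rate_mbps == 0 else bits / (rate_mbps * 1e6)


def min_feasible_freq_hz(total_flop: float, flop_per_cycle: float, budget_s: float,
                         f_min_hz: float, f_max_hz: float) -> Optional[float]:
    """Lowest clock in [f_min, f_max] that finishes total_flop within budget_s, else None."""
    needed = total_flop / (flop_per_cycle * budget_s)
    if needed > f_max_hz:
        return None
    return max(f_min_hz, needed)


size_bits = 5 * MB_BITS  # largest request size: 4e7 bits
flop = size_bits * 500   # highest arithmetic intensity: 2e10 FLOP
print(flop / (FOG_FLOP_PER_CYCLE * FOG_F_MAX_HZ))      # fog node at 4.2 GHz: about 0.298 s
print(flop / (CN_FLOP_PER_CYCLE * CN_F_HZ))            # cloud node at 1.5 GHz: about 0.417 s
print(wired_delay_s(size_bits, 1e9, CN_DISTANCE_KM))   # 1 Gbps over 2000 km: about 0.055 s
print(wireless_delay_s(size_bits, WIFI_RATES_MBPS[-1]))  # 65 Mbps: about 0.615 s
print(min_feasible_freq_hz(flop, FOG_FLOP_PER_CYCLE, 0.5, FOG_F_MIN_HZ, FOG_F_MAX_HZ))  # 2500000000.0
print(min_feasible_freq_hz(flop, FOG_FLOP_PER_CYCLE, 0.2, FOG_F_MIN_HZ, FOG_F_MAX_HZ))  # None
```

Even at the highest listed Wi-Fi rate, uploading such a request takes longer than computing it on a fog node at full clock. This helps explain why the transmission allocation matters alongside the computing allocation.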

| Name | Limitation | Optimization Variables |
|---|---|---|
| Full Optimization | None | Computing allocation—, transmission allocation—, computing frequency— |
| Exhaustive Search | None | , , |
| Cloud Only | , (if there are multiple Cloud Nodes) | |
| No Migrate | interdependently on , | |
| Closest Wireless | , | |
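Exhaustive Search optimizes the same variables as Full Optimization without any limitation, which makes it a useful reference for small instances. The sketch below enumerates only the computing placement. Transmission allocation and frequencies are assumed fixed, which is a simplification of the paper's search. It keeps the lowest-energy assignment in which every request meets its delay bound. The cost model and all numbers are hypothetical. The search examines len(nodes) ** len(requests) placements, so brute force quickly becomes impractical as the instance grows.

```python
import itertools
from typing import Callable, Dict, Optional, Sequence, Tuple

Assignment = Dict[str, str]  # request id -> node name


def exhaustive_search(max_delay_s: Dict[str, float], nodes: Sequence[str],
                      delay_fn: Callable[[Assignment, str], float],
                      energy_fn: Callable[[Assignment], float]) -> Tuple[Optional[Assignment], float]:
    """Enumerate all len(nodes) ** len(requests) placements; return the cheapest feasible one."""
    ids = list(max_delay_s)
    best: Optional[Assignment] = None
    best_energy = float("inf")
    for placement in itertools.product(nodes, repeat=len(ids)):
        assignment = dict(zip(ids, placement))
        if all(delay_fn(assignment, rid) <= max_delay_s[rid] for rid in ids):
            energy = energy_fn(assignment)
            if energy < best_energy:
                best, best_energy = assignment, energy
    return best, best_energy


# Toy cost model (hypothetical numbers): the fog node is cheap but shared,
# while the cloud is slower and costlier per request.
FOG_TIME_S, CLOUD_DELAY_S = 0.3, 0.45
ENERGY_J = {"fog": 1.0, "cloud": 3.0}


def toy_delay(assignment: Assignment, rid: str) -> float:
    if assignment[rid] == "fog":
        # Pessimistic contention: each fog request waits for every request on the fog node.
        return FOG_TIME_S * sum(1 for node in assignment.values() if node == "fog")
    return CLOUD_DELAY_S


def toy_energy(assignment: Assignment) -> float:
    return sum(ENERGY_J[node] for node in assignment.values())


best, energy = exhaustive_search({"a": 0.5, "b": 0.5}, ["fog", "cloud"], toy_delay, toy_energy)
print(best, energy)  # {'a': 'fog', 'b': 'cloud'} 4.0
```

Placing both requests on the fog node would be cheapest (2 J), but it violates the 0.5 s bound. The search therefore settles on a split that costs 4 J.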

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kopras, B.; Idzikowski, F.; Bossy, B.; Kryszkiewicz, P.; Bogucka, H. Communication and Computing Task Allocation for Energy-Efficient Fog Networks. Sensors 2023, 23, 997. https://doi.org/10.3390/s23020997
