CARRT—Motion Capture Data for Robotic Human Upper Body Model

Abstract

1. Introduction

- Motion Capture Dataset: The dataset contains VICON motion capture recordings of nine activities of daily living (ADLs), eight unimanual and one bimanual, together with eight range-of-motion (ROM) activities. All activities were performed by ten participants.

- OpenSim Motion Files: OpenSim-compatible motion files are provided for every task in the dataset, so the captured motions can be studied and analyzed in OpenSim.

- An Upper Body Model for MATLAB: An approximate upper body model, built with Peter Corke's Robotics Toolbox for MATLAB, is included to support the investigation of various performance metrics in MATLAB.

- Post-processed Dataset: The data have been further processed so that a wide range of performance metrics can be explored within the MATLAB environment, supporting in-depth analyses by other researchers.

2. Related Work

Human Motion Datasets

3. The Dataset

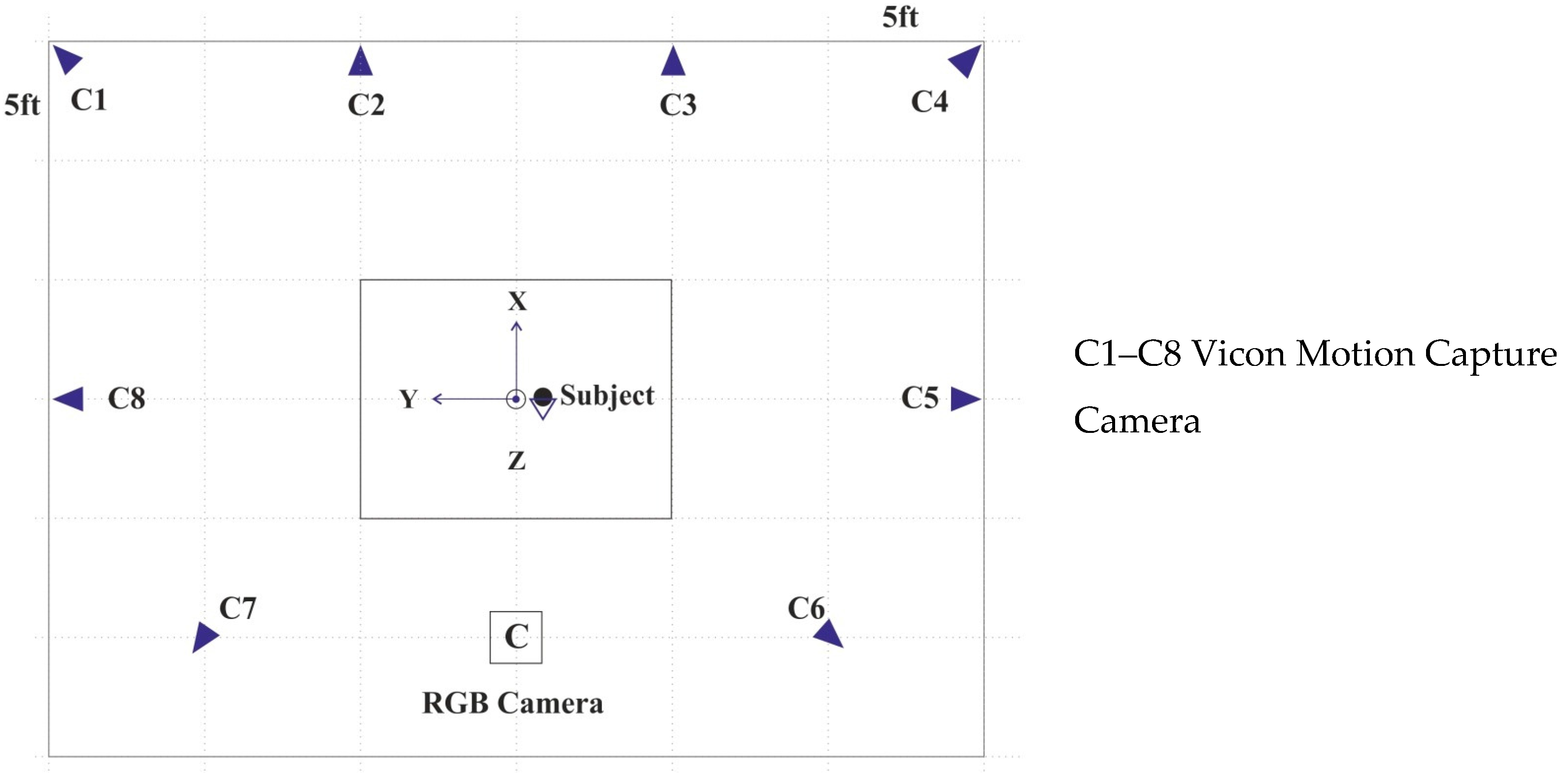

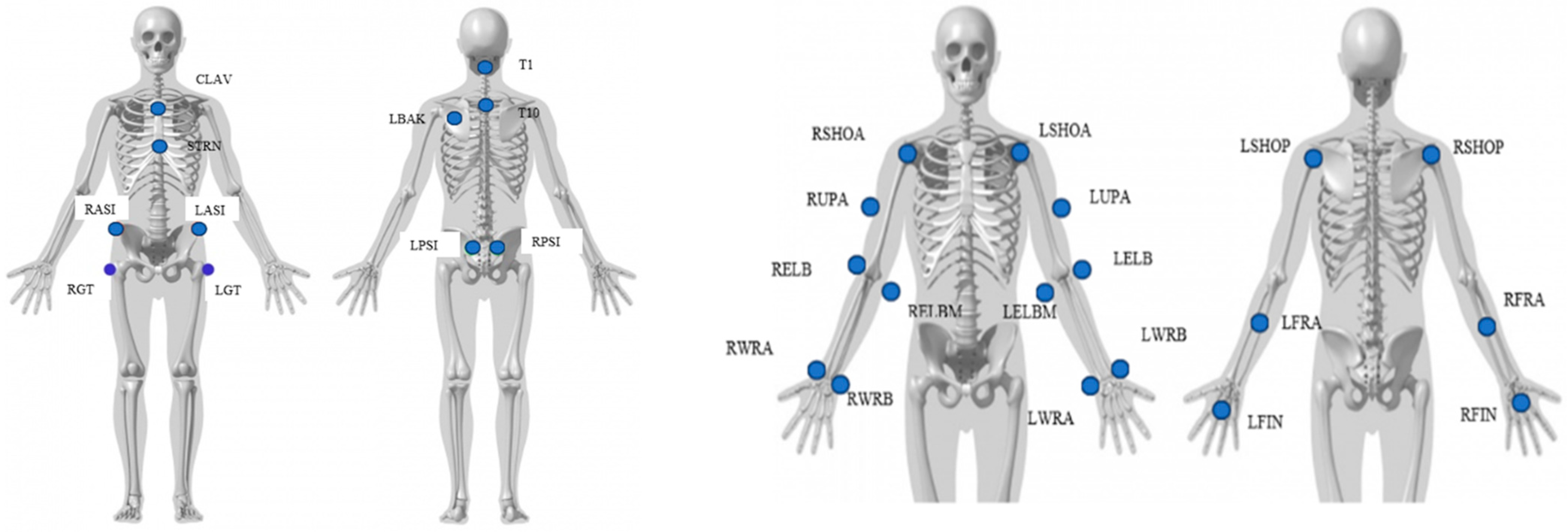

3.1. Marker Placement and Camera Setup

3.2. Participants

3.3. Experimental Setup

3.3.1. Activities of Daily Living Tasks and Procedure

3.3.2. Objects

4. Data Post Processing

4.1. File Formats and Organization

4.2. OpenSim Musculoskeletal Model

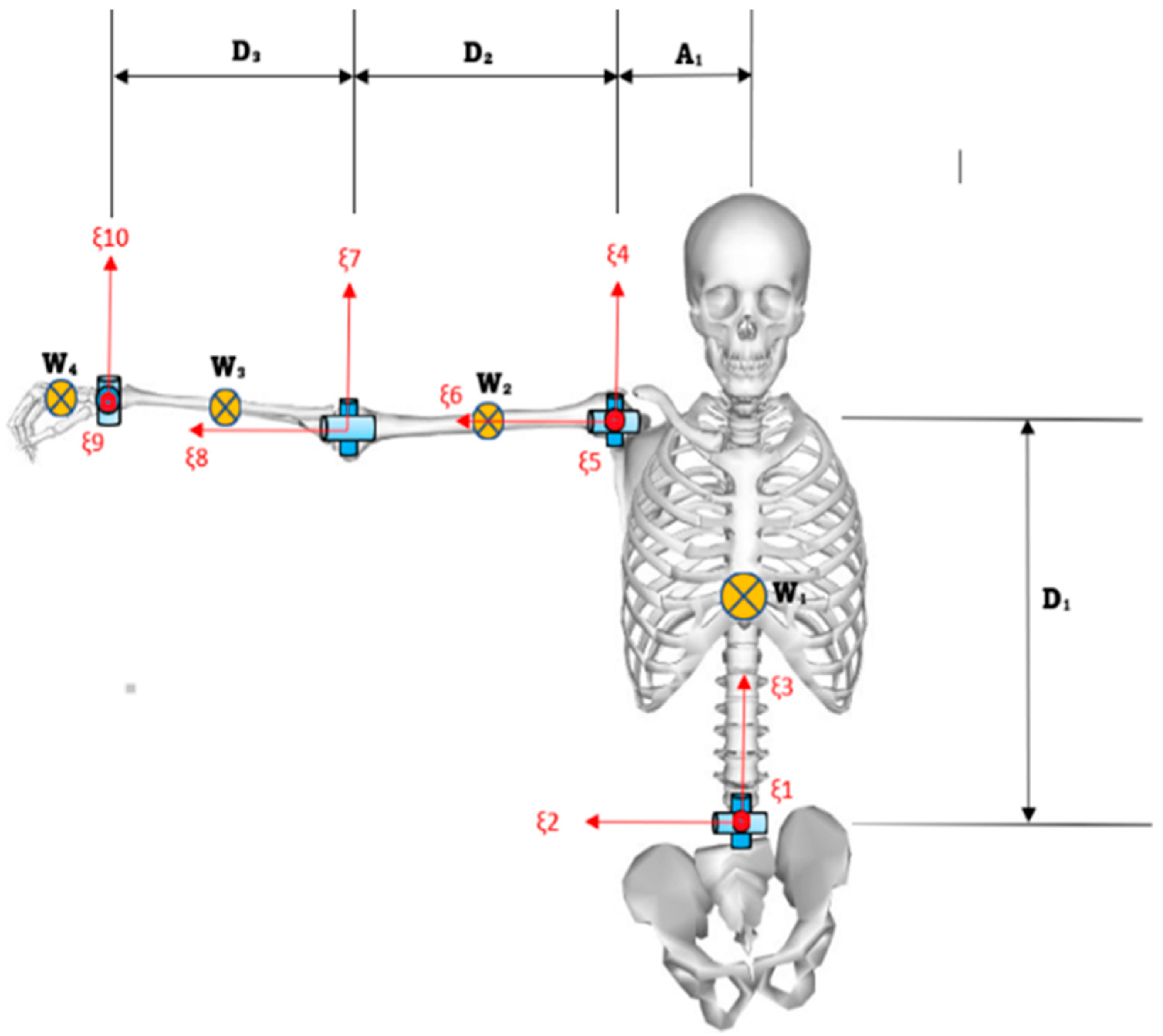

4.3. MATLAB Robotic Toolbox Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Name | Marker Placement |

|---|---|

| T1 | Spinous Process; 1st Thoracic Vertebrae |

| T10 | Spinous Process; 10th Thoracic Vertebrae |

| CLAV | Jugular Notch |

| STRN | Xiphoid Process |

| LBAK | Middle Of Left Scapula (Asymmetrical) |

| R/LASI | Right/Left Anterior Superior Iliac Spine |

| R/LPSI | Right/Left Posterior Superior Iliac Spine |

| R/LGT | Right/Left Greater Trochanters |

| R/LSHOA | Anterior Portion of Right/Left Acromion |

| R/LSHOP | Posterior Portion of Right/Left Acromion |

| R/LUPA | Right/Left Lateral Upper Arm |

| R/LELB | Right/Left Lateral Epicondyle |

| R/LELBM | Right/Left Medial Epicondyle |

| R/LFRA | Right/Left Lateral Forearm |

| R/LWRA | Right/Left Wrist Radial Styloid |

| R/LWRB | Right/Left Wrist Ulnar Styloid |

| R/LFIN | Dorsum Of Right Hand Just Proximal To 3rd Metacarpal Head |

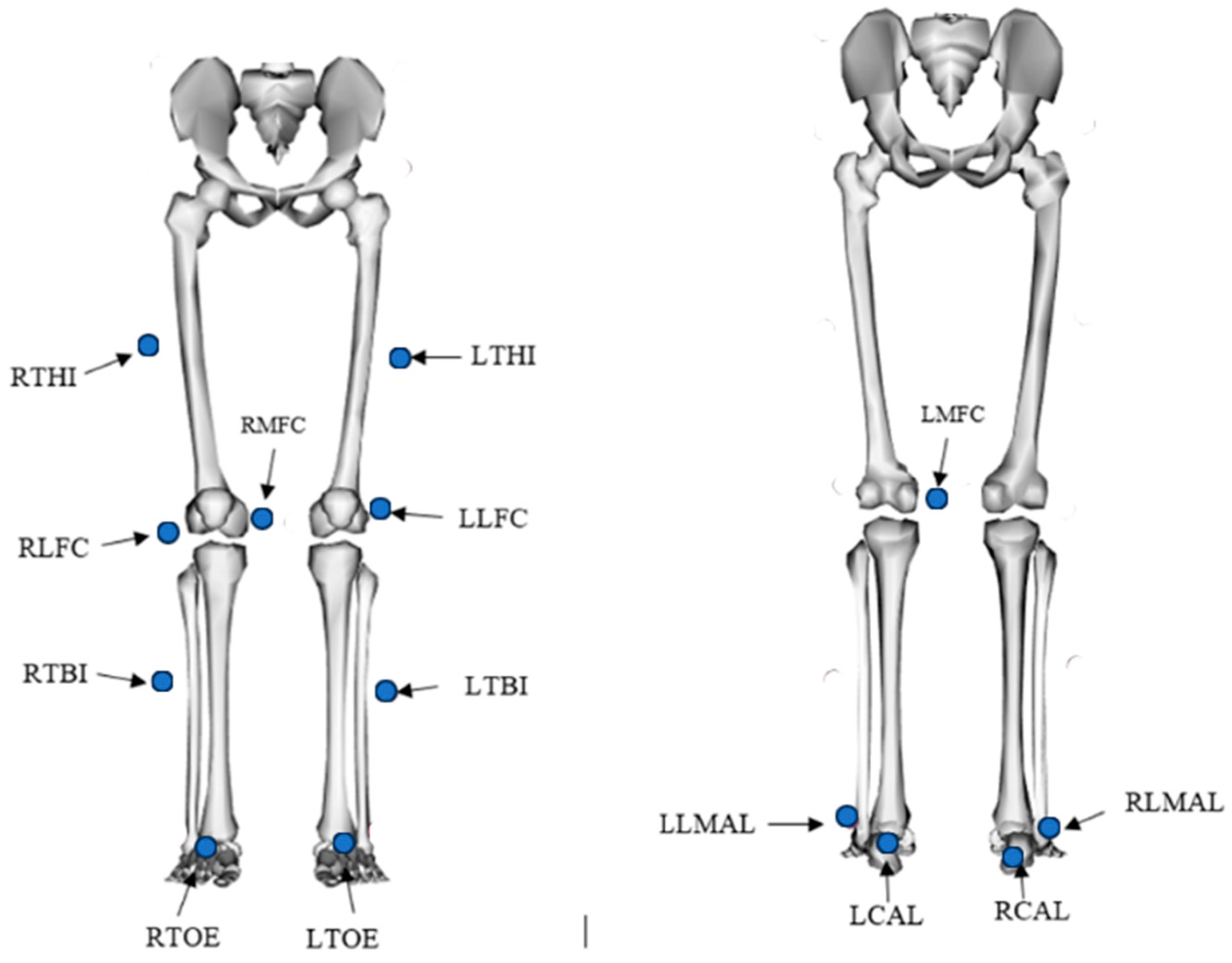

| R/LTHI | Right/Left Thigh |

| R/LLFC | Right/Left Lateral Epicondyle of Femur |

| R/LMFC | Right/Left Medial Epicondyle of Femur |

| R/LTBI | Right/Left Tibia Interior |

| R/LLMAL | Right/Left Lateral Malleolus |

| R/LCAL | Right/Left Calcaneus |

| R/LTOE | Right/Left Toe |

| Subject | Gender | Dominant Hand | Height (cm) | Body Weight (kg) |
|---|---|---|---|---|
| Subject 1 | Male | R | 162.5 | 60 |
| Subject 2 | Male | R | 165.09 | 65 |
| Subject 3 | Female | R | 160.02 | 58 |
| Subject 4 | Male | R | 172.72 | 86 |
| Subject 5 | Female | R | 152.4 | 58 |
| Subject 6 | Female | R | 162.5 | 68 |
| Subject 7 | Female | R | 165.09 | 51 |
| Subject 8 | Female | R | 162.5 | 79 |
| Subject 9 | Male | L | 172.72 | 60 |
| Subject 10 | Female | R | 167.64 | 76 |

| ADL Task Name | Abbreviation | Object Used | Object Weight (kg) | Object Size (m) |
|---|---|---|---|---|
| Brushing Hair | BH | Hairbrush | 0.02 | 0.243 × 0.081 × 0.0381 |
| Drinking From a Cup | FPC | Plastic Cup | 0.02 | 0.053 × 0.053 × 0.109 |
| Opening a Lower-Level Cabinet | OCL | * NA | NA | NA |
| Opening a Higher-Level Cabinet | OCH | NA | NA | NA |
| Picking Up the Box | PB | Cardboard Box | 0.52 | 0.457 × 0.356 × 0.305 |
| Picking Up the Duster and Cleaning | PDC | Cleaning Duster | 0.18 | 0.356 × 0.051 × 0.076 |
| Picking Up an Empty Water Jug | PEWJ | 1 Gallon Water Jug | 0.90 | 0.15 × 0.15 × 0.269 |
| Picking Up a Full Water Jug | PFWJ | 1 Gallon Water Jug | 3.79 | 0.15 × 0.15 × 0.269 |
| Picking Up a Water Jug and Pouring | PWJ | 1/2 Gallon Water Jug and Plastic Cup | 2.55 | 0.191 × 0.105 × 0.289 |

| Dataset | Format | Number of Files |
|---|---|---|
| Participant Data | .trc | 426 |
| Vicon Data | .c3d | 426 |
| MATLAB Raw Data | .xls | 215 |
| MATLAB Code | .m | 7 |
| Demographic Data and Task Names | .xls | 2 |
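The participant data are distributed as .trc marker-trajectory files. As a starting point for loading them outside OpenSim or MATLAB, the sketch below parses the common tab-separated TRC layout: a file-info row, a row of header keys, a row of header values, a row with `Frame#`, `Time` and the marker names, a row of X/Y/Z sub-labels, and then one numeric row per frame. It is an assumption that the CARRT files follow exactly this layout, and `read_trc` is a hypothetical helper, not part of the dataset's MATLAB code.

```python
def read_trc(lines):
    """Parse a tab-separated .trc marker file (sketch; the layout is assumed).

    Expected rows: 1 file info, 2 header keys, 3 header values,
    4 'Frame#', 'Time' and marker names, 5 X/Y/Z sub-labels, then data.
    Returns (header dict, marker names, list of per-frame dicts).
    """
    rows = [line.rstrip("\r\n") for line in lines]
    header = dict(zip(rows[1].split("\t"), rows[2].split("\t")))
    # Marker names sit in every third column after Frame# and Time; the gaps are empty.
    names = [n.strip() for n in rows[3].split("\t")[2:] if n.strip()]
    n_coords = 3 * len(names)
    frames = []
    for row in rows[5:]:
        fields = row.split("\t")
        if len(fields) < 2 or not fields[0].strip():
            continue  # skip blank separator rows
        # Empty cells (occluded markers) become NaN.
        coords = [float(x) if x.strip() else float("nan") for x in fields[2:2 + n_coords]]
        coords += [float("nan")] * (n_coords - len(coords))  # pad cells missing at row end
        frames.append({
            "frame": int(fields[0]),
            "time": float(fields[1]),
            "markers": {name: tuple(coords[3 * i:3 * i + 3]) for i, name in enumerate(names)},
        })
    return header, names, frames
```

Any iterable of lines works, so a real file can be passed directly, e.g. `with open(path) as f: header, names, frames = read_trc(f)`; marker names such as `CLAV` or `RWRA` then index each frame's `markers` dictionary.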

| $i$ | $\alpha_{i-1}$ (deg) | $a_{i-1}$ (m) | $d_i$ (m) | $\theta_i$ (deg) | Joint |
|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | $90 + \theta_1$ | Torso Lateral Flexion |
| 2 | 90 | 0 | 0 | $90 + \theta_2$ | Torso Flexion/Extension |
| 3 | −90 | 0 | 0 | $-90 + \theta_3$ | Torso Rotation |
| 4 | 0 | $A_1$ | $D_1$ | $\theta_4$ | Shoulder Flexion/Extension |
| 5 | −90 | 0 | 0 | $-90 + \theta_5$ | Shoulder Abduction/Adduction |
| 6 | −90 | 0 | $D_2$ | $\theta_6$ | Shoulder Rotation |
| 7 | −90 | 0 | 0 | $180 + \theta_7$ | Elbow Flexion |
| 8 | −90 | 0 | $D_3$ | $90 + \theta_8$ | Elbow Pronation/Supination |
| 9 | −90 | 0 | 0 | $90 + \theta_9$ | Wrist Flexion/Extension |
| 10 | −90 | 0 | 0 | $180 + \theta_{10}$ | Wrist Abduction/Adduction |
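The column set α(i−1), a(i−1), d(i), θ(i) in the DH table matches the modified (Craig) Denavit-Hartenberg convention, in which each link transform is Rot_x(α(i−1)) · Trans_x(a(i−1)) · Rot_z(θ(i)) · Trans_z(d(i)). Assuming that convention, and degrees as the headings state, the ten rows can be chained into forward kinematics as sketched below in plain Python. This is not the authors' MATLAB model; the function names are hypothetical, and the example uses Subject 1's A1, D1, D2 and D3 from the per-subject table.

```python
import math


def mdh_transform(alpha_deg, a, d, theta_deg):
    """4x4 transform from frame i-1 to frame i, modified (Craig) DH convention:
    Rot_x(alpha_{i-1}) * Trans_x(a_{i-1}) * Rot_z(theta_i) * Trans_z(d_i)."""
    ca, sa = math.cos(math.radians(alpha_deg)), math.sin(math.radians(alpha_deg))
    ct, st = math.cos(math.radians(theta_deg)), math.sin(math.radians(theta_deg))
    return [
        [ct, -st, 0.0, a],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[r][k] * B[k][c] for k in range(4)) for c in range(4)] for r in range(4)]


def carrt_dh_rows(A1, D1, D2, D3):
    """(alpha_{i-1} deg, a_{i-1} m, d_i m, theta offset deg), transcribed from the DH table."""
    return [
        (0, 0.0, 0.0, 90),     # 1 torso lateral flexion
        (90, 0.0, 0.0, 90),    # 2 torso flexion/extension
        (-90, 0.0, 0.0, -90),  # 3 torso rotation
        (0, A1, D1, 0),        # 4 shoulder flexion/extension
        (-90, 0.0, 0.0, -90),  # 5 shoulder abduction/adduction
        (-90, 0.0, D2, 0),     # 6 shoulder rotation
        (-90, 0.0, 0.0, 180),  # 7 elbow flexion
        (-90, 0.0, D3, 90),    # 8 pronation/supination
        (-90, 0.0, 0.0, 90),   # 9 wrist flexion/extension
        (-90, 0.0, 0.0, 180),  # 10 wrist abduction/adduction
    ]


def frame_origins(joint_deg, A1, D1, D2, D3):
    """Origins of frames 0..10 in the base frame for the given joint angles (deg)."""
    rows = carrt_dh_rows(A1, D1, D2, D3)
    if len(joint_deg) != len(rows):
        raise ValueError("expected one joint angle per DH row")
    T = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
    origins = [(0.0, 0.0, 0.0)]
    for (alpha, a, d, offset), q in zip(rows, joint_deg):
        T = matmul4(T, mdh_transform(alpha, a, d, offset + q))
        origins.append((T[0][3], T[1][3], T[2][3]))
    return origins


# Example: Subject 1's link parameters, all joint angles at 0 deg.
origins = frame_origins([0.0] * 10, A1=0.200, D1=0.435, D2=0.270, D3=0.265)
print("shoulder-to-wrist distance (m):", math.dist(origins[4], origins[10]))
```

Under this reading, with every θ at zero the shoulder-to-wrist distance equals D2 + D3 (an extended elbow), and setting θ7 = 90° reduces it to √(D2² + D3²); the frame 4 origin lies √(A1² + D1²) from the base regardless of the torso angles.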

| Weight | Value (kg) | Segment |
|---|---|---|
| W1 | 0.551 × Body Weight | Torso |
| W2 | 0.0325 × Body Weight | Upper Arm |
| W3 | 0.0187 × Body Weight | Lower Arm |
| W4 | 0.0065 × Body Weight | Hand |

| Subject | W1 (kg) | D1 (m) | W2 (kg) | A1 (m) | W3 (kg) | D2 (m) | W4 (kg) | D3 (m) |
|---|---|---|---|---|---|---|---|---|
| Subject 1 | 29.820 | 0.435 | 1.680 | 0.200 | 0.960 | 0.270 | 0.360 | 0.265 |
| Subject 2 | 32.305 | 0.432 | 1.820 | 0.280 | 1.040 | 0.280 | 0.390 | 0.254 |
| Subject 3 | 28.826 | 0.368 | 1.624 | 0.140 | 0.928 | 0.300 | 0.348 | 0.250 |
| Subject 4 | 42.742 | 0.457 | 2.408 | 0.200 | 1.376 | 0.318 | 0.516 | 0.265 |
| Subject 5 | 28.826 | 0.356 | 1.624 | 0.190 | 0.928 | 0.254 | 0.348 | 0.228 |
| Subject 6 | 33.796 | 0.356 | 1.904 | 0.203 | 1.088 | 0.267 | 0.408 | 0.254 |
| Subject 7 | 25.347 | 0.432 | 1.428 | 0.200 | 0.816 | 0.254 | 0.306 | 0.229 |
| Subject 8 | 39.263 | 0.457 | 2.212 | 0.200 | 1.264 | 0.254 | 0.474 | 0.228 |
| Subject 9 | 29.820 | 0.435 | 1.680 | 0.200 | 0.960 | 0.270 | 0.360 | 0.265 |
| Subject 10 | 37.772 | 0.432 | 2.128 | 0.200 | 1.216 | 0.254 | 0.456 | 0.228 |
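Each segment weight in the segment-weight table is a fixed fraction of total body weight, so the per-subject masses follow from a single multiplication. A minimal sketch is shown below; the function name and dictionary keys are hypothetical. The ratios are kept as a parameter because the per-subject values listed above correspond to fractions of 0.497, 0.028, 0.016 and 0.006 (for example, 29.820 kg / 60 kg = 0.497 for Subject 1), whereas the segment-weight table gives 0.551, 0.0325, 0.0187 and 0.0065.

```python
# Fractions of total body weight per segment, as listed in the segment-weight table.
# Keys are hypothetical names chosen for this sketch.
SEGMENT_TABLE_RATIOS = {
    "torso": 0.551,       # W1
    "upper_arm": 0.0325,  # W2
    "lower_arm": 0.0187,  # W3
    "hand": 0.0065,       # W4
}

# Fractions implied by the per-subject table (e.g., 29.820 / 60 = 0.497).
PER_SUBJECT_TABLE_RATIOS = {"torso": 0.497, "upper_arm": 0.028, "lower_arm": 0.016, "hand": 0.006}


def segment_masses(body_weight_kg, ratios=SEGMENT_TABLE_RATIOS, ndigits=3):
    """Return each segment mass (kg) as ratio * body weight, rounded to ndigits."""
    if body_weight_kg <= 0:
        raise ValueError("body weight must be positive")
    return {segment: round(ratio * body_weight_kg, ndigits) for segment, ratio in ratios.items()}


print(segment_masses(58, PER_SUBJECT_TABLE_RATIOS))
```

With the per-subject fractions, a 58 kg body weight reproduces Subject 3's row (28.826, 1.624, 0.928 and 0.348 kg); with the default fractions, a 60 kg body weight gives a torso mass of 33.06 kg.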

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Trivedi, U.; Alqasemi, R.; Dubey, R. CARRT—Motion Capture Data for Robotic Human Upper Body Model. Sensors 2023, 23, 8354. https://doi.org/10.3390/s23208354
