Adversarially Learning Occlusions by Backpropagation for Face Recognition

Abstract

:1. Introduction

- We propose the ALOB model, a double-network OFR approach, which learns the corrupted and significant features via Trimmer and Identifier without annotating the occlusion location.

- We present a new way to learn occlusion to form the feature vector from Trimmer added with GRL that optimizes the models by maximizing the loss computed for class labels only. The formed vector contains contaminated information, which should be cleaned from another feature generated from Identifier to gain the final feature vector for recognition.

2. Related Work

2.1. Traditional Machine Learning-Based Methods

2.2. Deep-Learning-Based Methods

3. Method

3.1. ResNet Extractor

3.2. Trimmer: Gradient Reversal Layer

3.3. Identifier: Feature Projection Purification

3.4. Loss Function

3.5. Overall Training Objective

4. Experiments

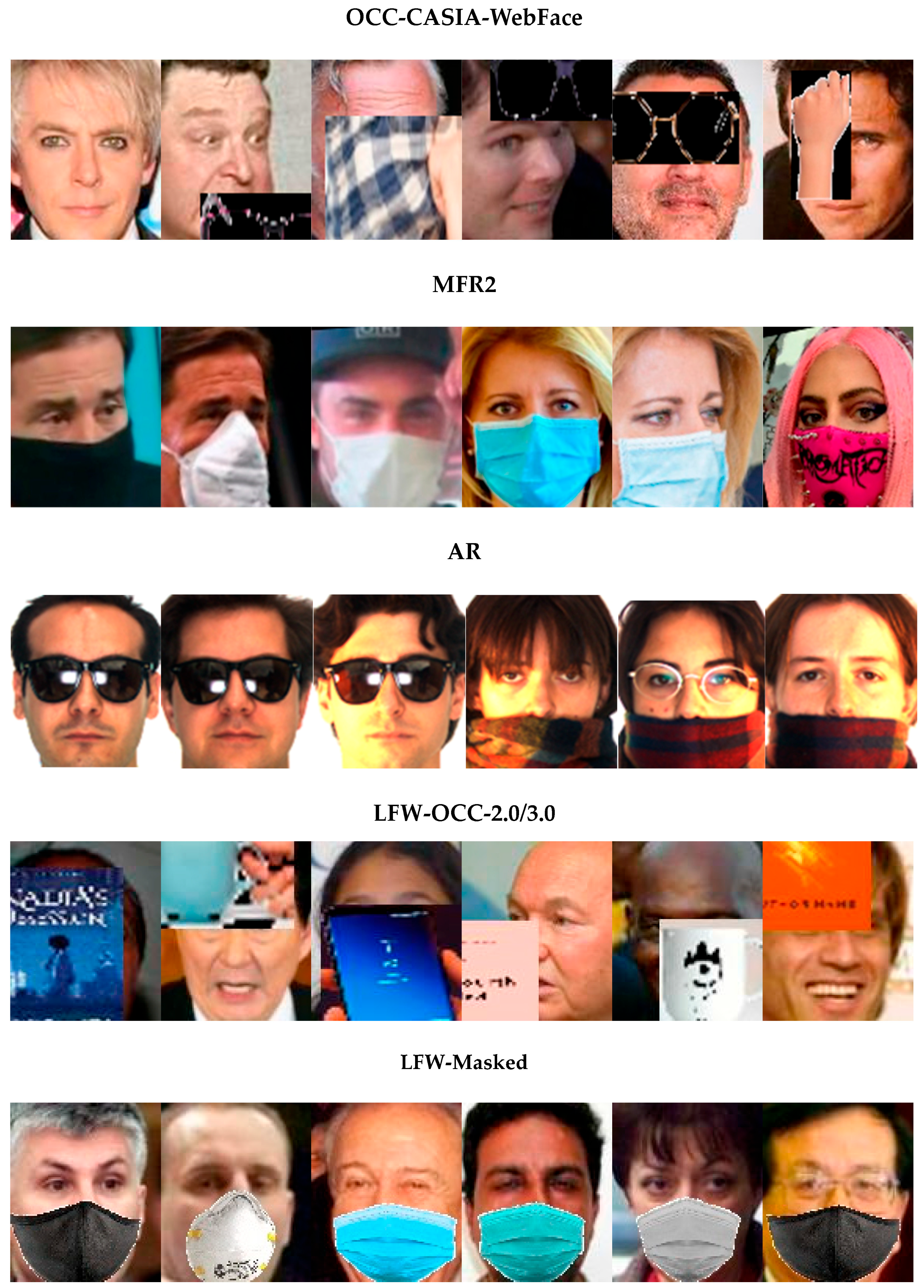

4.1. Datasets and Evaluation Protocols

| Names | IDs | Images | Test Pairs | Matching | Types |

|---|---|---|---|---|---|

| Training Dataset | |||||

| CASIA-WebFace | 10 K | 0.5 M | - | 1:1 | Synthetic |

| Testing Datasets | |||||

| LFW | 5749 | 13,233 | 6000 pairs | 1:1 | Synthetic |

| AR | 126 | 4000 | - | 1: N | Realistic |

| MFR2 | 53 | 269 | 800 pairs * | 1:1 | Realistic |

4.2. Implementation Details

4.3. Test Performances

4.4. Discussion of

4.5. Ablation Study

4.6. Visualization of the Proposed Model

4.6.1. Feature Visualization

4.6.2. Feature Space Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Deng, J.K.; Guo, J.; Xue, N.N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the 2019 IEEE/Cvf Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019; pp. 4685–4694. [Google Scholar]

- Wang, H.; Wang, Y.T.; Zhou, Z.; Ji, X.; Gong, D.H.; Zhou, J.C.; Li, Z.; Liu, W. CosFace: Large Margin Cosine Loss for Deep Face Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5265–5274. [Google Scholar]

- Liu, W.Y.; Wen, Y.D.; Yu, Z.D.; Li, M.; Raj, B.; Song, L. SphereFace: Deep Hypersphere Embedding for Face Recognition. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2016; pp. 6738–6746. [Google Scholar]

- Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition. In Proceedings of the European Conference on Computer Vision, Amstedrdam, The Netherlands, 11–14 October 2016; Volume 9907, pp. 87–102. [Google Scholar]

- Huang, G.B.; Mattar, M.; Berg, T.; Learned-Miller, E. (Eds.) Labeled faces in the wild: A database forstudying face recognition in unconstrained environments. In Proceedings of the Workshop on faces in ‘Real-Life’ Images: Detection, Alignment, and Recognition, Marseille, France, 25 August 2008. [Google Scholar]

- Kim, J.H.; Poulose, A.; Han, D.S. The Extensive Usage of the Facial Image Threshing Machine for Facial Emotion Recognition Performance. Sensors 2021, 21, 2026. [Google Scholar] [CrossRef] [PubMed]

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. Proc. Mach. Learn. Res. 2015, 37, 1180–1189. [Google Scholar]

- Qiu, H.; Gong, D.; Li, Z.; Liu, W.; Tao, D. End2End Occluded Face Recognition by Masking Corrupted Features. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6939–6952. [Google Scholar] [CrossRef]

- Song, L.; Gong, D.; Li, Z.; Liu, C.; Liu, W. Occlusion Robust Face Recognition Based on Mask Learning With Pairwise Differential Siamese Network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 773–782. [Google Scholar]

- Yin, B.; Tran, L.; Li, H.; Shen, X.; Liu, X. Towards Interpretable Face Recognition. In Proceedings of the 2019 IEEE/Cvf Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019; pp. 9347–9356. [Google Scholar]

- Yuan, G.; Zheng, H.; Dong, J. MSML: Enhancing Occlusion-Robustness by Multi-Scale Segmentation-Based Mask Learning for Face Recognition. Proc. Conf. AAAI Artif. Intell. 2022, 36, 3197–3205. [Google Scholar] [CrossRef]

- Anwar, A.; Raychowdhury, A. Masked face recognition for secure authentication. arXiv 2020, arXiv:200811104. [Google Scholar]

- Ding, F.F.; Peng, P.X.; Huang, Y.R.; Geng, M.Y.; Tian, Y.H. Masked Face Recognition with Latent Part Detection. In Proceedings of the Mm’20: 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2281–2289. [Google Scholar]

- Zhang, Y.; Wang, X.; Shakeel, M.S.; Wan, H.; Kang, W. Learning upper patch attention using dual-branch training strategy for masked face recognition. Pattern Recognit. 2022, 126, 108522. [Google Scholar] [CrossRef]

- Li, Y.; Guo, K.; Lu, Y.; Liu, L. Cropping and attention based approach for masked face recognition. Appl. Intell. 2021, 51, 3012–3025. [Google Scholar] [CrossRef]

- Wan, W.; Chen, J. Occlusion robust face recognition based on mask learning. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3795–3799. [Google Scholar]

- Karnati, M.; Seal, A.; Bhattacharjee, D.; Yazidi, A.; Krejcar, O. Understanding Deep Learning Techniques for Recognition of Human Emotions Using Facial Expressions: A Comprehensive Survey. IEEE Trans. Instrum. Meas. 2023, 72, 1–31. [Google Scholar] [CrossRef]

- Qin, Q.; Hu, W.; Liu, B. (Eds.) Feature projection for improved text classification. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020. [Google Scholar]

- Martinez, A.; Benavente, R. The AR Face Database: CVC Technical Report; Purdue University: West Lafayette, IN, USA, 1998; p. 24. [Google Scholar]

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust Face Recognition via Sparse Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 210–227. [Google Scholar] [CrossRef]

- Yang, M.; Zhang, L.; Yang, J.; Zhang, D. Robust Sparse Coding for Face Recognition. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 625–632. [Google Scholar]

- Li, X.-X.; Dai, D.-Q.; Zhang, X.-F.; Ren, C.-X. Structured Sparse Error Coding for Face Recognition with Occlusion. IEEE Trans. Image Process. 2013, 22, 1889–1900. [Google Scholar] [CrossRef] [PubMed]

- McLaughlin, N.; Ming, J.; Crookes, D. Largest Matching Areas for Illumination and Occlusion Robust Face Recognition. IEEE Trans. Cybern. 2016, 47, 796–808. [Google Scholar] [CrossRef]

- Weng, R.; Lu, J.; Tan, Y.-P. Robust Point Set Matching for Partial Face Recognition. IEEE Trans. Image Process. 2016, 25, 1163–1176. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.; Gao, Y. Recognizing Partially Occluded Faces from a Single Sample Per Class Using String-Based Matching. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Volume 6313, pp. 496–509. [Google Scholar]

- Alsalibi, B.; Venkat, I.; Al-Betar, M.A. A membrane-inspired bat algorithm to recognize faces in unconstrained scenarios. Eng. Appl. Artif. Intell. 2017, 64, 242–260. [Google Scholar] [CrossRef]

- Zheng, H.; Lin, D.; Lian, L.; Dong, J.; Zhang, P. Laplacian-Uniform Mixture-Driven Iterative Robust Coding with Applications to Face Recognition Against Dense Errors. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3620–3633. [Google Scholar] [CrossRef]

- Zhao, F.; Feng, J.; Zhao, J.; Yang, W.; Yan, S. Robust LSTM-Autoencoders for Face De-Occlusion in the Wild. IEEE Trans. Image Process. 2017, 27, 778–790. [Google Scholar] [CrossRef] [PubMed]

- Vo, D.M.; Nguyen, D.M.; Lee, S.-W. Deep softmax collaborative representation for robust degraded face recognition. Eng. Appl. Artif. Intell. 2020, 97, 104052. [Google Scholar] [CrossRef]

- Chen, M.; Zang, S.; Ai, Z.; Chi, J.; Yang, G.; Chen, C.; Yu, T. RFA-Net: Residual feature attention network for fine-grained image inpainting. Eng. Appl. Artif. Intell. 2023, 119, 105814. [Google Scholar] [CrossRef]

- Ge, S.; Li, C.; Zhao, S.; Zeng, D. Occluded Face Recognition in the Wild by Identity-Diversity Inpainting. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 3387–3397. [Google Scholar] [CrossRef]

- Vu, H.N.; Nguyen, M.H.; Pham, C. Masked face recognition with convolutional neural networks and local binary patterns. Appl. Intell. 2021, 52, 5497–5512. [Google Scholar] [CrossRef]

- Albalas, F.; Alzu’Bi, A.; Alguzo, A.; Al-Hadhrami, T.; Othman, A. Learning Discriminant Spatial Features with Deep Graph-Based Convolutions for Occluded Face Detection. IEEE Access 2022, 10, 35162–35171. [Google Scholar] [CrossRef]

- Hoffer, E.; Ailon, N. Deep Metric Learning Using Triplet Network. In Proceedings of the Similarity-Based Pattern Recognition: Third International Workshop, SIMBAD 2015, Copenhagen, Denmark, 12–14 October 2015; Volume 9370, pp. 84–92. [Google Scholar]

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 539–546. [Google Scholar]

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Learning face representation from scratch. arXiv 2014, arXiv:14117923. [Google Scholar]

- Ghazi, M.M.; Ekenel, H.K. A Comprehensive Analysis of Deep Learning Based Representation for Face Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 102–109. [Google Scholar]

- Li, C.; Ge, S.; Zhang, D.; Li, J. Look Through Masks: Towards Masked Face Recognition with De-Occlusion Distillation. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 3016–3024. [Google Scholar]

- Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; Junior, A.R.d.S.; Pozzebon, E.; Sobieranski, A.C. A survey on facial emotion recognition techniques: A state-of-the-art literature review. Inf. Sci. 2021, 582, 593–617. [Google Scholar] [CrossRef]

- Wu, X.; He, R.; Sun, Z.; Tan, T. A Light CNN for Deep Face Representation With Noisy Labels. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2884–2896. [Google Scholar] [CrossRef]

- Dosi, M.; Chiranjeev, M.; Agarwal, S.; Chaudhary, J.; Manchanda, S.; Balutia, K.; Bhagwatkar, K.; Vatsa, M.; Singh, R. Seg-DGDNet: Segmentation based Disguise Guided Dropout Network for Low Resolution Face Recognition. IEEE J. Sel. Top. Signal Process. 2023, 1–13. [Google Scholar] [CrossRef]

- Yang, J.; Luo, L.; Qian, J.; Tai, Y.; Zhang, F.; Xu, Y. Nuclear Norm Based Matrix Regression with Applications to Face Recognition with Occlusion and Illumination Changes. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 156–171. [Google Scholar] [CrossRef]

- Weng, R.L.; Lu, J.W.; Hu, J.L.; Yang, G.; Tan, Y.P. Robust Feature Set Matching for Partial Face Recognition. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 601–608. [Google Scholar]

- Yang, M.; Zhang, L.; Shiu, S.C.-K.; Zhang, D. Robust Kernel Representation With Statistical Local Features for Face Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 900–912. [Google Scholar] [CrossRef]

- He, L.X.; Li, H.Q.; Zhang, Q.; Sun, Z.N. Dynamic Feature Learning for Partial Face Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7054–7063. [Google Scholar]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

- Van der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605. [Google Scholar]

| Models | Training Datasets | Occlusions in Training |

|---|---|---|

| MSML(L29) | OCC-CASIA-WebFace | geometric shapes, realistic objects (sunglasses, scarf, face mask, hand, etc.), masks |

| FROM(ResNet50) | OCC-CASIA-WebFace | sunglasses, scarf, face mask, hand, eye mask, eyeglasses, book, phone, cup |

| UPA(ResNet28) | CASIA-Webface-Masked | face masks (cloth, surgical-blue, surgical-green, KN95, surgical-white) |

| Models Accuracy | AR (sg 1/2) | AR (scarf 1/2) |

|---|---|---|

| Baseline-L9 | 97.5/94.72 | 97.64/89.03 |

| Baseline-ResNet18 | 100/99.58 | 99.96/99.30 |

| ALOB-L9 (ours) | 100/99.03 | 99.86/98.61 |

| ALOB (ours) | 100/99.72 | 100/99.58 |

| * L29 | 98.02/96.44 | 98.78/96.76 |

| Seg-DGDNet (2023) [43] | 99.71/98.73 | 100/99.03 |

| MSLM (2022) [13] | 99.84/98.80 | 100/99.37 |

| PDSN (2019) [11] | 99.72/98.19 | 100/98.33 |

| RPSM (2016) [26] | 96/84.84 | 97.66/90.16 |

| DDF (2020) [40] | -/98 | -/94.10 |

| * LUMIRC (2020) [29] | 97.35/- | 96.7/- |

| MaskNet (2017) [18] | 90.90/- | 96.70/- |

| NMR (2017) [44] | 96.9/- | 73.5/- |

| LMA (2016) [25] | -/96.30 | -/93.70 |

| MLERPM (2013) [45] | 98.0/- | 97.0/- |

| SCF-PKR (2013) [46] | 95.65/- | 98.0/- |

| StringFace (2010) [27] | -/82.00 | -/92.00 |

| SRC (2008) [22] | 87.0/- | 59.50/- |

| Models | LFW-OCC-2.0 (Face–Occlusion) | LFW-OCC-3.0 (Face–Occlusion) |

|---|---|---|

| Baseline-ResNet18 | 93.40/64.00 | 90.08/61.73 |

| ALOB (ours) | 94.87/78.93 | 92.05/71.57 |

| FROM (2022) [10] | 94.70/76.53 | 91.60 */70.27 * |

| Models Accuracy | LFW (Face–Face) | LFW-Masked (Face–Mask) | MFR2 (Face–Mask) |

|---|---|---|---|

| Baseline-ResNet18 | 98.27 | 96.80 | 91.76 |

| ALOB (ours) | 98.77 | 97.62 | 93.76 |

| UPA (2022) [16] | 98.02 | 97.60 | 92.38 |

| LPD (2020) [15] | 94.82 | 94.28 | 88.76 |

| INFR (2019) [12] | 97.66 | 93.03 | 90.63 |

| PDSN (2019) [11] | - | 86.72 | - |

| DFM (2018) [47] | - | 92.88 | - |

| Models | LFW (Face–Face) | LFW-Masked (Face–Mask) | MFR2 (Face–Mask) |

|---|---|---|---|

| Baseline-ResNet18 | 88.40 | 83.07 | 56.11 |

| ALOB | 95.90 | 89.63 | 66.83 |

| LFW-OCC-2.0 (Face–Occlusion) | LFW-OCC-3.0 (Face–Occlusion) | MFR2 (Face–Mask) | |

|---|---|---|---|

| 0 | 92.45/66.10 | 91.82/67.43 | 91.89/57.11 |

| 0.001 | 94.22/72.13 | 91.28/70.27 | 91.76/52.34 |

| 0.01 | 94.38/77.13 | 91.88/69.77 | 92.01/57.33 |

| 0.1 | 94.13/74.17 | 90.33/58.47 | 92.51/57.86 |

| 0.5 | 92.32/66.67 | 89.85/59.33 | 90.14/58.83 |

| 1.0 | 94.87/78.93 | 92.05/71.57 | 93.76/66.83 |

| 2.0 | 93.78/73.70 | 91.65/66.17 | 92.01/60.33 |

| 3.0 | 93.67/67.4 | 91.37/65.77 | 92.13/60.85 |

| Models | LFW-OCC-2.0 (Face–Occlusion) | LFW-OCC-3.0 (Face–Occlusion) | MFR2 (Face–Mask) |

|---|---|---|---|

| ALOB-G | 92.45/66.10 | 91.82/67.43 | 91.89/57.11 |

| ALOB-F | 94.62/74.27 | 90.35/64.10 | 91.51/60.59 |

| ALOB-G-F (sum) | 94.38/70.47 | 92.02/70.10 | 91.51/62.01 |

| ALOB-G-F (concat) | 94.45/74.77 | 91.77/70.13 | 92.01/63.84 |

| ALOB | 94.87/78.93 | 92.05/71.57 | 93.76/66.83 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, C.; Qin, Y.; Zhang, B. Adversarially Learning Occlusions by Backpropagation for Face Recognition. Sensors 2023, 23, 8559. https://doi.org/10.3390/s23208559

Zhao C, Qin Y, Zhang B. Adversarially Learning Occlusions by Backpropagation for Face Recognition. Sensors. 2023; 23(20):8559. https://doi.org/10.3390/s23208559

Chicago/Turabian StyleZhao, Caijie, Ying Qin, and Bob Zhang. 2023. "Adversarially Learning Occlusions by Backpropagation for Face Recognition" Sensors 23, no. 20: 8559. https://doi.org/10.3390/s23208559

APA StyleZhao, C., Qin, Y., & Zhang, B. (2023). Adversarially Learning Occlusions by Backpropagation for Face Recognition. Sensors, 23(20), 8559. https://doi.org/10.3390/s23208559