1. Introduction

With the great advancements in science and technology, wireless devices have been applied in various fields, such as radar systems and the Internet of Things (IoT). As a result, the security of wireless communication has attracted considerable attention [1]. Address resolution of the transmitted protocol is the traditional method of identifying wireless devices; however, it has practical limitations, as addresses can be easily intercepted and tampered with. To resolve these problems, reference [2] first proposed the concept of a radio frequency fingerprint (RFF) for transient signals, which is unique to each wireless transmitter. Manufacturing variations in the hardware components of wireless devices give each device a unique RFF, much like a human fingerprint. Moreover, reference [3] explored a method to extract RFFs from steady-state signals, which are easier to collect. This further advanced the development of RFF technology.

The enhanced RFF technique greatly improves the security of identification within the wireless domain. Furthermore, the introduction of the RF machine learning system by the Defense Advanced Research Projects Agency (DARPA) has propelled RFF research to pivot toward deep learning (DL) [4]. With the improvement of RFF and DL [5,6], specific emitter identification (SEI) has also made great progress in distinguishing specific emitters based on physical layer characteristics. Typically, popular SEI systems consist of an RFF feature extractor and a DL classifier. References [7,8,9,10] extracted robust RFF features to improve the performance of SEI in complex environments. Moreover, references [11,12] proposed complex-valued convolutional neural networks (CVCNNs) to enhance the adaptability of the DL model when dealing with complex-valued signals in SEI, and compressed the CVCNNs to save computational resources.

Although there have been many breakthroughs in the field of SEI, previous works have mainly focused on optimizing a single typical signal for higher robustness. In practical applications, working devices tend to use more than one modal signal to address the vulnerability of a single modal signal to interference in challenging environments. Multi-modal signals usually operate in different frequency bands or use different modulation methods, which can effectively solve this problem. For example, in fast flight mode, high-frequency signals are extremely sensitive to Doppler shifts, while low-frequency signals are less affected by speed changes. Moreover, aircraft broadcast multi-modal signals, such as automatic dependent surveillance–broadcast (ADS-B) signals, air traffic control (ATC) signals [13], and radar signals, enabling accurate detection and guidance by air traffic controllers on the ground. Reference [14] explored the RFFs of aircraft based on radar signals. Moreover, references [15,16,17] explored the RFFs of airplanes, targeting the ADS-B S modal signal. The traditional ADS-B signal represents the ADS-B S mode, which is also used in this paper. We use rm1/2 to represent the ADS-B A/C mode in this paper, as seen in Table 1. Moreover, the civil air traffic control radar beacon system (ATCRBS) contains the ADS-B A/C/S modes, which might be emitted by different parts of the same aircraft. Therefore, it is crucial for a robust SEI system to recognize wireless devices across different modal signals.

Typically, existing SEI systems [5,6,10,19,20] consist of RFF feature extractors and emitter classifiers, both of which are based on a single modal signal. However, when dealing with multi-modal signals, the signal mode must first be identified as each signal arrives. Moreover, different modal signals require different RFF extractors and SEI classifiers, resulting in increased complexity and reduced robustness when dealing with unknown modal signals. To tackle the challenge of identifying wireless devices across different modal signals in a more cost-effective manner, one possible solution is to develop a universal RFF extractor that unifies the structures and semantics of multiple modalities. Additionally, it is crucial to introduce DL methods to improve the adaptability of classifiers to multi-modal signals [21,22]. A previous study [21] proposed pre-training an SEI DL classifier using ADS-B signals and subsequently fine-tuning it to recognize emitters with Wi-Fi signals. However, this approach requires renewed fine-tuning whenever new modal signals are introduced, which also leads to building multiple models for different modal signals.

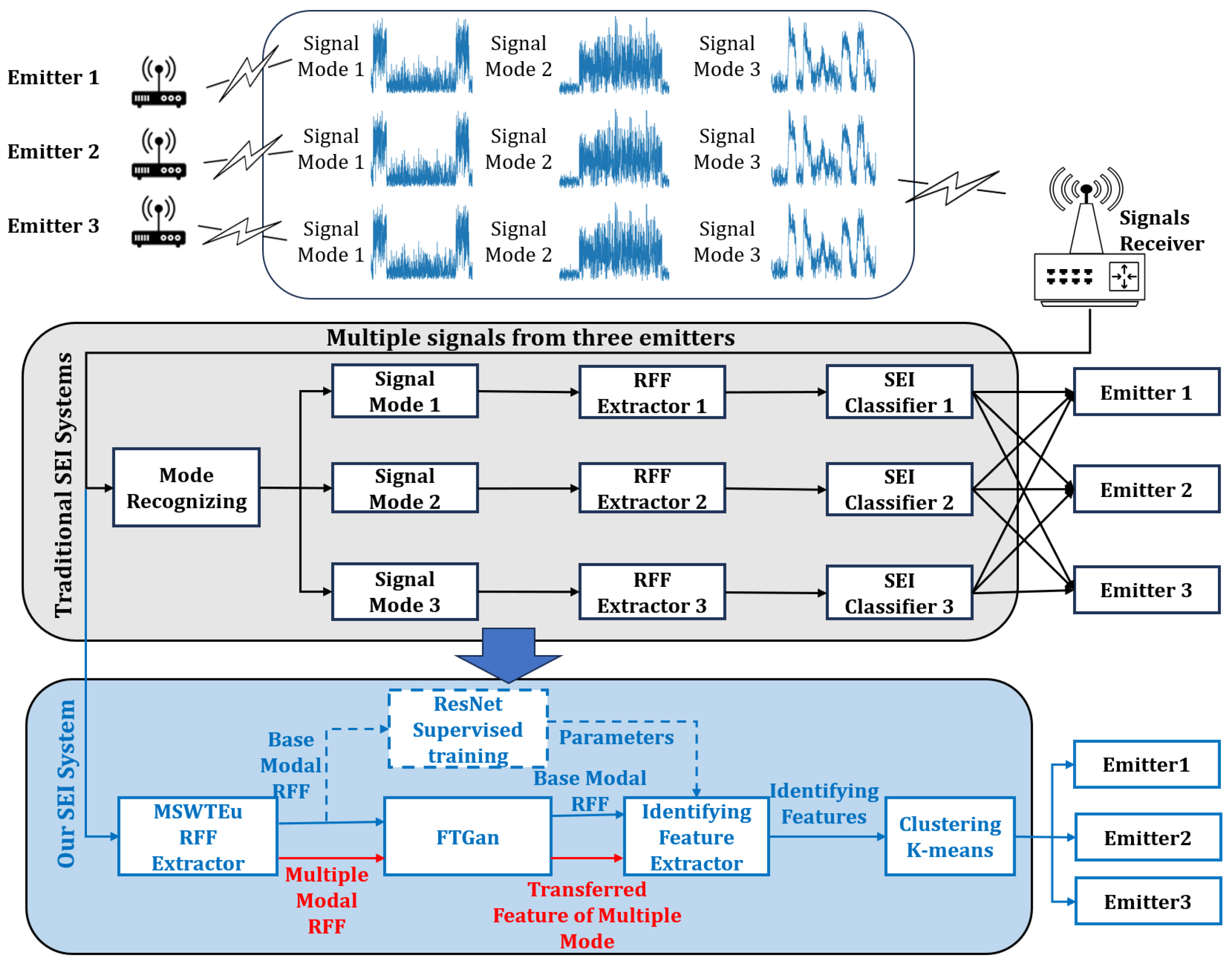

To resolve these issues, the multiple synchrosqueezed wavelet transformation of energy unified (MSWTEu) is proposed to extract general and robust RFF features, unifying the structures and semantics of different modal signals. However, relying solely on the general RFF extraction method may not be sufficient, as some modal signals exhibit significant differences that MSWTEu cannot fully unify. To address this limitation, we propose a novel FTGAN to enhance the adaptation of the entire SEI system to multi-modal signals. Since it is impractical to build signal models for all existing modal signals, we focus on analyzing and obtaining information from one representative modal signal, which we refer to as the “known modal signal”. The remaining modal signals are deemed “unknown modal signals”. The FTGAN transfers the MSWTEu distributions of unknown modal signals to match the distribution of the known modal signal. With the above methods, only one SEI classifier needs to be built and trained, on the known modal signal. When confronted with other modal signals, the same SEI classifier can still recognize their emitters and achieve improved overall recognition accuracy. Furthermore, with slight modifications to the trained SEI classifier, open-set signal mode recognition can be achieved. An overview of traditional SEI systems, as well as our system addressing multi-modal signals, is depicted in Figure 1.

The main contributions of this paper are as follows:

1. We explore an efficient and general method for extracting prominent RFF features from multi-modal signals;

2. We explore the MSWTEu algorithm for unifying the structure and semantics of different modal signals;

3. We explore an FTGAN to further unify RFF features, thus enhancing the efficiency and performance of the SEI system while accommodating multi-modal signals. With this approach, the SEI classifier is trained only with known modal signal features, but it can also recognize emitters using unknown modal signals without additional training;

4. By leveraging MSWTEu and FTGAN, the trained SEI classifier can achieve open-set signal mode recognition with minimal fine-tuning;

5. We construct a hybrid dataset for SEI experiments across signal modes, consisting of a real dataset, a simulated dataset, and a public dataset. The public dataset has been widely used in recent works and consists of Wi-Fi signals. The simulated dataset comprises two modal communication reply signals, whose waveforms are carefully designed and generated by different waveform generators to simulate emitters. The real dataset comprises ADS-B signals collected over a span of 12 months and has undergone comprehensive preprocessing. Each modal signal is sent by three different emitters. The details of the dataset are depicted in Table 1. The simulated dataset and real dataset consist of ADS-B A/C/S modal signals, which together form a complete dataset of civil ATCRBS (CATCRBS). With these datasets, our optimized SEI system can identify airplanes regardless of variations in signal modes, thus replacing the identification-decoding module within CATCRBS.

Therefore, our proposed system can solve the following SEI problems posed by multi-modal signals at the same time: (1) MSWTEu allows different modal signals to share a single RFF extractor, decreasing the number of RFF extractors; (2) FTGAN ensures that different modal signals require only one unsupervised classifier, removing the burden of labeling and reducing the number of classifiers; (3) the proposed training strategy for MSWTEu and FTGAN makes additional processes for recognizing signal modes unnecessary, increasing the efficiency of the whole system; (4) a little fine-tuning of the proposed SEI system enables open-set signal mode recognition. The rest of the paper is organized as follows. Section 2 describes the process of the feature extractor MSWTEu. In Section 3, we present the details of FTGAN and the training strategy. The dataset details and experimental results are shown in Section 4. Lastly, Section 5 summarizes this paper.

2. RFF Feature Extracting and Unifying MSWTEu

This work is mainly inspired by our previous work [13], which proposed a robust RFF extractor, denoted as improving SWT by energy regularization (ISWTE), where SWT is the synchrosqueezed wavelet transformation, enhancing the performance of SEI at low SNRs. In that work [13], we fully discussed the advantages of wavelet transformation in improving RFF features and conducted experiments to compare ISWTE, SWT, spectrograms, and traditional signal processing methods. We concluded that SWT outperforms other RFF extractors in terms of accuracy and efficiency. Moreover, by optimizing SWT with energy regularization, ISWTE can enhance the performance of SEI under low SNRs. However, this kind of enhancement is limited to one particular modal signal. Thus, our aim is to expand our previous work and further extract general and robust RFF features from multi-modal signals.

This work is roughly divided into three stages, as shown in Figure 2.

In the first stage, we propose a reasonable downsampling strategy to unify the lengths of multi-modal signals. This strategy helps to address the signal variation issue and ensures the effectiveness of subsequent processing.
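The length-unifying step can be illustrated with a minimal sketch. The downsampling strategy itself is not specified at this point in the text, so the sketch assumes plain linear-interpolation resampling to a common length; the function name `unify_length`, the target length 256, and the toy signals are all hypothetical. A practical implementation would normally apply an anti-aliasing low-pass filter before reducing the sample rate.

```python
def unify_length(signal, target_len):
    """Resample a real-valued sequence to target_len points by linear interpolation.

    Assumption: linear interpolation stands in for the paper's downsampling strategy.
    """
    n = len(signal)
    if n == 0 or target_len <= 0:
        raise ValueError("signal must be non-empty and target_len positive")
    if n == 1 or target_len == 1:
        return [float(signal[0])] * target_len
    step = (n - 1) / (target_len - 1)  # spacing of output samples on the input grid
    out = []
    for k in range(target_len):
        pos = k * step
        i = min(int(pos), n - 2)       # left neighbour, clamped so i + 1 stays valid
        frac = pos - i
        out.append((1.0 - frac) * signal[i] + frac * signal[i + 1])
    return out


# Hypothetical signals of different lengths standing in for two signal modes.
mode_a = [float(i % 8) for i in range(1000)]
mode_b = [float(i % 5) for i in range(640)]
unified = [unify_length(s, 256) for s in (mode_a, mode_b)]
```

After this step both toy signals have the same length, so the later transformation stages can treat them with one shared structure.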

The second stage focuses on improving the versatility and efficiency of the MSWTEu method based on the ISWTE method. ISWTE decomposes signals into a fixed, limited number of layers and adopts fixed parameters, which are manually adjusted to achieve the best performance. In contrast, MSWTEu allows for an optional number of layers and an optional parameter for each layer, and we design an adaptive selection algorithm to select the best SWT decomposition layers and the best parameter per layer. Here, the known modal dataset and a few unknown modal signals take part in the selection algorithm. The accuracy of a clustering algorithm is adopted to assess the performance, and the layers and parameters that give the best performance are selected adaptively. By doing so, the MSWTEu method combines the advantages of multiple SWT layers with the automatic selection of the best parameters, eliminating the need for manual adjustment. This approach ensures that different modal signals have a unified structure and shape, and also achieves robust RFF feature extraction.

In the third stage, we address the energy explosion issue commonly encountered in SWT transformation and optimize MSWTEu for greater efficiency. The energy explosion is mainly concentrated in the later SWT decomposition layers, resulting in a substantial increase in the weight of these layers in the RFF feature, which can cause the information of earlier layers to be lost. Therefore, ISWTE is optimized by energy regularization, specifically, by computing the energy of each layer and inversely weakening each layer by an energy scale. In this way, ISWTE has achieved good performance in noisy domains based on ADS-B signals. However, the time complexity of energy regularization in ISWTE is not ideal, since the inverse weakening procedure is complex. The MSWTEu aims to simplify the energy strategy and be more adaptive to multi-modal signals.

In conclusion, the MSWTEu overcomes the constraints of relying on one modal signal and manual parameter tuning during RFF feature extraction. It improves the efficiency of energy computation and resolves the problem of energy explosion.

The MSWTEu calculation process is shown in Figure 2, and the details are as follows:

(1) Wavelet transformation (WT): In practice, signals are usually downsampled upon reception and transformed to the discrete domain. Therefore, the signal is represented as $x(n)$, not $x(t)$. The normal wavelet transformation (WT) is calculated as below:

$$WT(a,b) = \frac{1}{\sqrt{a}} \sum_{n=0}^{N-1} x(n)\, \psi^{*}\!\left(\frac{n-b}{a}\right)$$

where $N$ is the length of $x(n)$, $\psi$ is the mother wavelet function (with $\psi^{*}$ its complex conjugate), $a$ scales the wavelet function, and $b$ shifts the time domain. The WT can transform signals from the time domain to the time–frequency domain, by slicing the frequency domain based on the continuous short-time Fourier transform (STFT). Reference [3] proposed that the signal in the frequency domain performs better in SEI than in the time domain. Our previous experiments show that signals in the time domain are vulnerable to noise but robust to Doppler shifts, while signals in the frequency domain behave in the opposite way. The WT combines the advantages of the time domain and the frequency domain, which is the main reason we adopted it as the base transformation of the RFF extractor. Normally, the WT can decompose signals into multiple layers, each containing a high-frequency part (the detail coefficient) and a low-frequency part (the approximation coefficient).
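The split into approximation and detail coefficients can be made concrete with a minimal sketch. It assumes the Haar wavelet, chosen here only because its filters are two taps long; the paper does not state which mother wavelet is used, and `haar_dwt_level` is a hypothetical helper name.

```python
import math


def haar_dwt_level(x):
    """One level of the orthonormal Haar DWT.

    Returns (approximation, detail); each output has half the input length.
    """
    if len(x) % 2:
        raise ValueError("length must be even")
    s = 1.0 / math.sqrt(2.0)
    approx = [s * (x[i] + x[i + 1]) for i in range(0, len(x), 2)]  # low-frequency part
    detail = [s * (x[i] - x[i + 1]) for i in range(0, len(x), 2)]  # high-frequency part
    return approx, detail


x = [4.0, 4.0, 2.0, 0.0, 1.0, 3.0, 5.0, 5.0]
a1, d1 = haar_dwt_level(x)   # layer 1: 4 + 4 coefficients
a2, d2 = haar_dwt_level(a1)  # layer 2: 2 + 2 coefficients
```

Each further layer is obtained by decomposing the previous approximation again, and with this decimated form the coefficient length halves at every layer.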

(2) Synchrosqueezed wavelet transformation (SWT): Reference [23] proposed SWT, which is inspired by empirical mode decomposition (EMD), and confirmed its effectiveness through mathematical methods. Unlike the traditional discrete wavelet transform (DWT), EMD does not require much prior knowledge and is more stable and adaptive in signal decomposition and feature extraction. Moreover, DWT halves the length with each decomposition to the subsequent layer, making it challenging to evaluate the length and unify the shapes of multi-modal signal features, whereas SWT maintains the same length at each layer. Therefore, we adopt SWT as the main transformation for signals. SWT can be calculated as below:

where

is given and proved by reference [23], L(n) is a low-pass filter, and H(n) is a high-pass filter. With L(n) and H(n), SWT can obtain the detail and approximation information for each layer. In

Figure 2,

.
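The length-preserving property mentioned above can be illustrated with a small sketch. It is not the transform of reference [23]; it assumes an undecimated (a-trous) Haar filter bank with periodic extension as a stand-in, where a two-tap averaging filter plays the role of L(n) and a two-tap differencing filter plays the role of H(n). The function name `undecimated_haar` is hypothetical.

```python
def undecimated_haar(x, levels):
    """Undecimated Haar filter bank with periodic extension.

    Returns a list of (approximation, detail) pairs, one per layer.
    Every output keeps the length of the input.
    """
    n = len(x)
    approx = list(x)
    layers = []
    for level in range(levels):
        shift = 2 ** level  # the filter is dilated instead of decimating the signal
        nxt = [0.5 * (approx[i] + approx[(i + shift) % n]) for i in range(n)]  # low-pass
        det = [0.5 * (approx[i] - approx[(i + shift) % n]) for i in range(n)]  # high-pass
        layers.append((nxt, det))
        approx = nxt
    return layers


x = [4.0, 4.0, 2.0, 0.0, 1.0, 3.0, 5.0, 5.0]
layers = undecimated_haar(x, levels=3)
lengths = [(len(a), len(d)) for a, d in layers]
```

Because no decimation takes place, features from different layers can be stacked or summed directly, which is the property that makes a shared feature shape across signal modes straightforward.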

(3) Multiple synchrosqueezed wavelet transformations (MSWT): Utilizing SWT based on STFT, signals are sliced from long-term time domain features into multiple short frequency domain features, transforming from the time domain to the time–frequency domain. Therefore, SWT can obtain more obvious RFF features and extract multiple-layer features from one signal. Each layer feature includes an approximation feature and a detail feature. However, during experiments, the RFF features of different modal signals exhibit varying performance in each layer, which poses a challenge in selecting an appropriate and general decomposition layer for multi-modal signals. One work [24] proposed downsampling one dataset into several subsets to replace the single dataset, augmenting the datasets [25] and enhancing the pre-existing knowledge. However, this approach has limited impact on the improvement of the SEI system and requires significant computational resources for training the classifier. Based on the idea of multiple data enhancement, MSWT is proposed to integrate the detail and approximation information from multiple SWT layers, effectively enhancing RFF feature extraction regardless of the disparate performances of different modal signals across SWT layers. With it, feature extraction remains simple while yielding strong and general features.

where

N is the max decomposition layer of SWT for limitation, which is the same as the signal length, and

represents the weight of each decomposition layer using

g and

h.

(4) RFF structure unifying: The MSWT is still a complex-valued feature with a real part and an imaginary part, making it challenging to unify the structures of different modal features. In this paper, we focus more on unifying the structures of different modal signals for SEI while maintaining the effectiveness of each modal RFF feature. To accomplish this, we incorporate FFT to further extract RFF features for enhanced frequency information [3] and apply a modulus operation to unify the structures. The MSWT can be redefined as follows:

where

is the fast Fourier transform,

and

are the real and imaginary parts of the complex-valued feature.
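The FFT-plus-modulus step can be sketched as follows. The exact redefinition of MSWT is not reproduced here; the sketch only assumes that a complex-valued feature is passed through a Fourier transform and that the modulus (square root of the squared real and imaginary parts) is kept. A naive DFT is used so the example needs no third-party library, and `unify_structure` is a hypothetical name.

```python
import cmath
import math


def dft(z):
    """Naive O(n^2) discrete Fourier transform of a complex sequence."""
    n = len(z)
    return [
        sum(z[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def unify_structure(feature):
    """Map a complex-valued feature to a real-valued one via |DFT(feature)|."""
    return [abs(v) for v in dft(feature)]  # abs() of a complex is sqrt(re^2 + im^2)


# Toy complex feature: a single complex exponential located at frequency bin 2.
complex_feature = [cmath.exp(2j * math.pi * 2 * t / 8) for t in range(8)]
real_feature = unify_structure(complex_feature)
```

The output is a real, non-negative vector of the same length as the input, so features from every signal mode share one real-valued structure.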

(5) Special particle swarm optimization (SPSO): The redefined MSWT has successfully addressed the challenges of unifying and enhancing the effectiveness of RFF features from multiple modalities. However, the problem of determining the appropriate number and weights of layers remains unresolved, since manual adjustment is inefficient. To minimize parameter adjustment efforts and optimize the effectiveness of RFF features, an adaptive algorithm is needed to determine and unify the best combination of layers and their respective weights for multi-modal signal features. This algorithm should adaptively achieve high RFF performance while using fewer layers and be applicable to all modal signals. To address this issue, we propose SPSO, an adaptive selection algorithm for the redefined MSWT based on PSO [26]. As in Figure 2, the best feature requires appropriate layer decomposition and proper weights for each layer, including g and h. The SPSO selects these parameters; the details are as follows. (This paper does not seek to optimize the traditional selection algorithm, PSO, but only applies it to suit our RFF extractor.)

- 1: Our dataset has four modal signals, and each mode has three devices. Each device contributes 20 labeled signals to the SPSO selection procedure. The details of the dataset are presented in Table 1;

- 2:

The initial l is set to 1 and SPSO reaches the best clustering results by selecting , . The features are clustered for different emitters, and the performance is evaluated by the mean distance between each point and its clustering center. The minimum mean distance is denoted as , when ;

- 3:

, and SPSO selects , as above. The minimum mean distance is denoted as ;

- 4:

Repeat step 3 until

, selecting

(some modal signals cannot be decomposed to more than 3 layers); select

l when obtaining the minimum

. The mean distance is calculated as follows:

where

r is the total signal number in clustering,

f is the unifying feature length, and

is the clustering center for

.
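The selection criterion in the steps above can be sketched in a few lines. The sketch assumes the Euclidean distance between each feature vector and the center of its cluster, with clusters formed from the known emitter labels of the selection samples; the precise distance formula, the PSO search over the weights, and the toy feature values are not taken from the paper. `mean_center_distance` and `select_layer_count` are hypothetical names.

```python
import math


def mean_center_distance(features, labels):
    """Mean Euclidean distance between each feature vector and its cluster center."""
    clusters = {}
    for vec, lab in zip(features, labels):
        clusters.setdefault(lab, []).append(vec)
    centers = {
        lab: [sum(col) / len(vecs) for col in zip(*vecs)]
        for lab, vecs in clusters.items()
    }
    total = sum(math.dist(vec, centers[lab]) for vec, lab in zip(features, labels))
    return total / len(features)


def select_layer_count(candidates):
    """candidates maps a layer count to (features, labels).

    Returns the layer count with the smallest mean distance, plus all scores.
    """
    scores = {n_layers: mean_center_distance(f, y) for n_layers, (f, y) in candidates.items()}
    return min(scores, key=scores.get), scores


# Toy features for two emitters, as they might look after 1 or 2 decomposition layers.
labels = ["e1", "e1", "e2", "e2"]
candidates = {
    1: ([[0.0, 0.0], [2.0, 0.0], [5.0, 5.0], [5.0, 7.0]], labels),
    2: ([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0]], labels),
}
best_l, scores = select_layer_count(candidates)
```

In this toy case the two-layer features are more compact around their centers, so two layers would be selected; in the full procedure the weights of each layer would be searched by PSO before the distances are compared.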

(6) Energy balancing and multiple synchrosqueezed wavelet transformation of energy unified (MSWTEu): Combining the above steps, the RFF features of different modal signals can be brought into a unified and efficient structure. However, the unification of different modal RFF features is still inadequate, as the inherent properties and distributions are not fully unified. Moreover, the SWT energy explosion issue remains unresolved. Reference [13] proposed that energy regularization for combining SWT layers can enhance the RFF features. Furthermore, an energy-balancing strategy can unify the semantics of different modal signals and improve the RFF, as energy accumulation and computation have always been important for signal processing and analysis in emitter identification. We expand upon our previous work [13] to accommodate multi-modal signals and simplify the energy-balancing algorithm, resulting in increased recognition speed. Subsequent experiments compare the complexity and time cost of ISWTE [13] and MSWTEu. With energy balancing, MSWTEu also exhibits significant improvements in identification performance, as shown in the following experiments. The implementation details of energy balancing by MSWTEu are as follows.

The total energy and average energy can be calculated:

where

and

represent the detailed and approximation information of each decomposition layer, respectively, and are unified in the structure according to (6). On the other hand,

and

are the relative weight values, which represent the

weight value among the total

g and

h values across the

l layers. By integrating these relative values into MSWTE and substituting MSWT with them, we can effectively attain the targeted result:

Based on MSWTE, MSWTEu is designed to unify and balance the energy of each layer. MSWTE converts the energy of each layer to a relative value, which effectively resolves the energy explosion problem. MSWTEu additionally applies energy normalization, which not only reduces the gap between the maximum and minimum layer energies but also ensures a consistent energy across layers.
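The idea of balancing layer energies can be illustrated with a minimal sketch. It assumes that the energy of a layer is the sum of squared magnitudes of its coefficients and that every layer is rescaled to the average energy; this is one simple way to obtain consistent energy across layers and is not claimed to be the exact MSWTEu formula. `layer_energy` and `balance_energy` are hypothetical names.

```python
import math


def layer_energy(layer):
    """Energy of one layer: sum of squared coefficient magnitudes."""
    return sum(abs(v) ** 2 for v in layer)


def balance_energy(layers):
    """Rescale every layer so that all layers carry the same (average) energy."""
    energies = [layer_energy(lay) for lay in layers]
    avg = sum(energies) / len(energies)
    balanced = []
    for lay, e in zip(layers, energies):
        scale = math.sqrt(avg / e) if e > 0 else 0.0  # amplitude scale, hence the sqrt
        balanced.append([scale * v for v in lay])
    return balanced


# Toy layers whose energy grows sharply with depth (4, 400, 20000).
layers = [
    [1.0, -1.0, 1.0, -1.0],
    [10.0, 10.0, -10.0, 10.0],
    [100.0, 0.0, 0.0, 100.0],
]
balanced = balance_energy(layers)
```

After rescaling, the deepest layer no longer dominates the combined feature, while the shape of each layer's coefficients is unchanged.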

Based on the above, when confronted with multi-modal signals, the MSWTEu demonstrates higher efficiency, better adaptivity, and fewer limitations compared to other RFF feature extractors [7,8,13,27], which has also been verified by subsequent experiments. Unlike these extractors, which are based on only one typical signal mode, require extensive manual tuning, or have high complexity, the MSWTEu offers a unified approach to extracting universal RFF features from different signal modes. This ultimately enables the SEI system to identify emitters based on a wider range of modal signals without the need for complex RFF extractors and additional classifiers.

3. RFF Feature Transferring—FTGAN

As depicted in Figure 3, there are four signal modes, and each mode can be transmitted by different emitters. Our proposed method can accurately identify the signal types and emitter IDs of all modal signals using the SEI classifier of only one modal signal. Specifically, we train an SEI classifier on the known modal RFF features, denoted as Mode 1, for which label and signal information is available. We then use the classifier network, excluding the softmax layer, as an identifying feature extraction network for all modal RFF features. Although the unknown modal (Mode 2/3/4) RFF features lack prior knowledge of labels and signal information, the features extracted by the classifier network can be directly clustered into different emitters. With some modifications, the features can also be clustered into different signal modes. Some references [27,28] have also used clustering for evaluating and testing SEI performance. However, relying only on the network of a single modal SEI classifier is insufficient for extracting identifying features from all modal signals. Therefore, we propose FTGAN to transfer the distribution of RFF features from unknown modal signals to match the distribution of the known modal signal.

There are three steps to complete the final clustering based on the above ideas: training the Mode 1 SEI classifier to obtain the identifying feature extractor; training FTGAN to transfer unknown modal RFF features to match the known modal RFF features; and combining the identifying feature extraction network and FTGAN to obtain identifying features from all modal signals, which are then clustered by emitter and by signal mode.

Training the Mode 1 SEI classifier and obtaining the identifying feature extractor: To develop an SEI classifier for identifying emitters based on known modal signals, we utilize a ResNet model comprising three ResNet blocks and two dense layers. The ResNet model exhibits superior performance in managing gradient explosion compared to traditional convolutional networks. Commonly used networks for feature extraction, such as AlexNet, VGG, or GoogLeNet, have numerous layers, which might be excessive given the low dimensionality of signal features. Therefore, we adopt a more efficient network with fewer layers to build the SEI classifier, which also demonstrates excellent performance in classifying. Moreover, based on the classifier, we utilize its network up to the point before the softmax layer as the identifying feature extractor.

Training FTGAN and matching all modal RFF features: GANs have become popular in image generation, but their architectures are not well suited to direct waveform generation. The FTGAN is inspired by, and builds on, GANs [13,22,29]. Figure 4 depicts the architectures of a normal GAN and FTGAN. Unlike traditional GAN designs, the FTGAN generator focuses on learning only the differences between different modes of signals. This approach allows for simpler networks and is more suitable for waveform calculation. Moreover, since the differences between signal modes have lower information entropy than complete signals, the FTGAN generates the difference between the features of known modal signals and those of unknown modal signals. Removing this difference from the unknown modal features yields features that match the known mode, which improves the accuracy and efficiency of waveform feature generation. The training procedure of FTGAN is as depicted in

Figure 4: (1) There are two kinds of inputs, the known mode Mode 1 features denoted as

x and the unknown mode Mode 2/3/4 features denoted as

. It is important to note that no labels are required during the training process of FTGAN; (2)

is fed into

, which outputs the generated differences

; (3) The unknown modal features by removing the differences obtain

to match with the known modal features

x; (4) The discriminator is improved by enhancing the inputs. Normally, traditional

is to calculate the similarity between

x and

to determine whether inputting samples are real or generated ‘real’.

is the generated ‘real’ samples by GAN’s generator. Then, the similarity is used to update and enhance the parameters of

. The FTGAN is proposed to input

and

to push the discriminator and also utilize the enhanced discriminator to encourage the generator. These inputs can calculate the similarity between real samples and generated ‘real’ samples, as well as the similarity between real differences and generated ‘real’ differences; (5) Three loss functions are added to improve the performance of generators and enhance the realism of the generated features.
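The difference-removal idea in steps (2) and (3) can be sketched with toy numbers. The generator below is a hand-written stand-in that always predicts a constant offset, not a trained network, and the feature values are invented for illustration; `transfer`, `mae`, and `toy_generator` are hypothetical names.

```python
def transfer(unknown_feature, generator):
    """Remove the generated difference from an unknown-mode feature."""
    diff = generator(unknown_feature)
    return [u - d for u, d in zip(unknown_feature, diff)]


def mae(a, b):
    """Mean absolute error between two equally long feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def toy_generator(feature):
    """Stand-in for a trained generator: predicts a constant inter-mode offset."""
    return [0.5 for _ in feature]


known = [0.25, 0.5, 0.75, 1.0]        # hypothetical known-mode (Mode 1) feature
unknown = [v + 0.5 for v in known]    # hypothetical unknown-mode feature
transferred = transfer(unknown, toy_generator)

gap_before = mae(unknown, known)      # distance to the known mode before transfer
gap_after = mae(transferred, known)   # distance after removing the generated difference
```

The generator only has to model the offset between modes rather than a full feature, which is the reason given above for why a simpler network can suffice.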

The entire process for clustering emitters and signal modes: With the above steps, robust features are obtained for all modal signals, so different emitters and signal modes can be recognized with high accuracy. The entire process can be summarized as follows: (1) the MSWTEu extracts RFF features and unifies the structures of the features for all modal signals; (2) all modal RFF features transformed by FTGAN follow a distribution matching that of Mode 1, enabling the Mode 1 SEI classifier network to extract clear identifying features for all modal signals; (3) with the identifying features extracted by the trained Mode 1 classifier network, all modal signals can be clustered by emitter identity or by signal mode. The entire process is also given in Algorithm 1, and the details of FTGAN follow.

Algorithm 1. The entire process for identifying emitters.

Step 1: Train the Mode 1 SEI classifier and obtain the identifying feature extractor

- Input: Known Mode 1 sample MSWTEu features x and labels
- Output: Identifying feature extractor
- 1: Build the Mode 1 classifier, as depicted in Figure 3
- 2: Train the Mode 1 classifier with x and the labels, as depicted in Figure 3
- 3: Obtain the identifying feature extractor from the trained Mode 1 classifier, as depicted in Figure 3

Step 2: Train FTGAN

- Input: Known Mode 1 sample MSWTEu features, denoted as x, and unknown Mode 2/3/4 sample MSWTEu features
- Output: The “Mode 1” MSWTEu features transformed from the Mode 2/3/4 MSWTEu features
- For the number of training iterations, do:
- Sample a minibatch of m Mode 1 samples
- Sample a minibatch of m Mode 2/3/4 samples
- Calculate the loss terms according to (20b) and update the corresponding parameters
- Calculate the loss terms according to (20a) and update the corresponding parameters
- The transformed features are obtained by subtracting the generated differences from the Mode 2/3/4 features

Step 3: Identify emitters

- Input: Mode 1/2/3/4 sample MSWTEu features, emitter number
- Output: SEI classifier and emitter
- 1: Load the trained networks
- 2: Transfer the multi-modal features to match the Mode 1 features
- 3: Extract the identifying features
- 4: Build the clustering model, as in Figure 3
- 5: Train the clustering model without supervision, using the identifying features and the emitter number
- 6: Randomly select 10 samples from each cluster for labeling
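Step 3 of Algorithm 1 (cluster the identifying features, then label a few samples per cluster) can be sketched as follows. The clustering algorithm is not named in this section, so plain k-means with a deterministic initialization is used here as an assumption; the two-dimensional points, the emitter names, and the helper names `kmeans` and `name_clusters` are hypothetical.

```python
import math


def kmeans(points, k, iters=20):
    """Lloyd's k-means using the first k points as initial centers."""
    centers = [list(p) for p in points[:k]]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        assign = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center becomes the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign, centers


def name_clusters(assign, labeled):
    """Name each cluster by majority vote over a few hand-labeled samples.

    labeled maps a sample index to its emitter ID.
    """
    votes = {}
    for idx, emitter in labeled.items():
        per_cluster = votes.setdefault(assign[idx], {})
        per_cluster[emitter] = per_cluster.get(emitter, 0) + 1
    return {c: max(v, key=v.get) for c, v in votes.items()}


# Toy identifying features for two emitters (interleaved).
points = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [10.0, 11.0], [1.0, 0.0], [11.0, 10.0]]
assign, centers = kmeans(points, k=2)
cluster_names = name_clusters(assign, {0: "emitter_A", 1: "emitter_B"})
```

Only the few samples passed to `name_clusters` need manual labels; every other sample inherits the emitter name of its cluster.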

The unlabeled samples from Mode 1 belong to the base domain, with their MSWTEu feature distribution represented as

. The unlabeled samples from Modes 2/3/4 exist within the transferred domain, characterized by their MSWTEu feature distribution

. The FTGAN aims to train

, thereby minimizing and maximizing the adversarial objective function

:

where

,

D is the discriminator function, and

G is the generator function of FTGAN.
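For orientation, adversarial objectives of this kind build on the original GAN minimax game, which in its widely used form reads as follows. The symbols $z$ and $p_{z}$ (the generator input and its distribution) and $p_{\text{data}}$ follow common GAN notation and are assumptions here, not symbols recovered from this paper's equations; in FTGAN, the generator input is an unknown modal feature rather than noise.

$$\min_{G}\max_{D} V(D,G) = \mathbb{E}_{x\sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z\sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

The discriminator $D$ is trained to increase $V$, while the generator $G$ is trained to decrease it.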

Normally, the loss functions of D and G in a GAN [30] are calculated as follows:

where

denotes ascending and

denotes descending while training GANs;

’s size is

, filling with 0, and

’s size is

, filling with 1;

is the popular binary function for loss calculation. The improving details and loss functions of FTGAN are optimized as follows:

(1)

Upgrading and : As depicted in the previous part, FTGAN is enhanced to be more robust by improving the inputs. Therefore,

and

are both upgraded:

where

x is the known modal RFF feature,

is the unknown modal RFF feature,

is the real RFF feature difference between the known mode and the unknown mode,

is the generated feature difference,

is the generated known modal RFF feature,

is the real difference;

simultaneously calculates the similarity between

and the real, and the similarity between

and the fake; conversely,

attempts to generate ‘real’ samples that cannot be discriminated by

. By calculating losses on the combination of differences and samples, the

G and

D of FTGAN can be trained more robustly. The enhanced inputs could significantly improve the generation ability of ‘real’ differences by FTGAN, enabling the transfer of unknown modal features to known modal features.

(2)

Transfer loss : To ensure the accuracy of the generated differences between

, the

is added and the FTGAN is trained by descending

:

where

is the mean absolute error (MAE) loss function, which performs better than the mean squared error (MSE) on signal waveforms [13]. By decreasing the loss between the generated differences and real differences, and the loss between generated known modal features and real known modal features,

allows

G to generate more reliable ‘real’ differences.

(3)

Amplitude loss : To generate a more appropriate difference, the amplitude of generating value should be limited:

where

is the binary regularization function,

’s size is as

, filling with 0, and

is the batch size. Moreover, (18a) and (18b) allow the generated differences

to fit the amplitude distribution of real differences

.

(4)

Smooth transition loss : To smooth the MSWTEu features of the transferred signals, the FTGAN optimized it and descended it while training:

where

is a sample set of one epoch of

, and

. The loss function is added to improve the distribution balance of different modal features.

(5)

The FTGAN’s total loss function: Above all, combining the added three loss functions and improved

G and

D functions, the final loss functions of FTGAN are as follows:

where

, and

.

5. Conclusions

In this paper, we addressed the challenge of how an SEI system can identify wireless devices across multi-modal signals using only a single RFF extractor and a single SEI classifier. For typical scenarios involving multi-modal signals, no prior work has proposed an efficient SEI system that identifies emitters without separate extractors and classifiers for each modal signal. The enhanced SEI system with MSWTEu and FTGAN that we propose addresses this gap. Confronted with multi-modal signals, the MSWTEu first unifies the features and further optimizes the feature energy calculation to improve the overall RFF performance. Moreover, with FTGAN and the proposed training process, the MSWTEu features of different modal signals can be transferred to a matching distribution, unifying the final identifying features of multi-modal signals for SEI and requiring only one unsupervised clustering step to identify emitters. Experimental results, evaluated on a mixture of simulated, public, and real received datasets, show that MSWTEu, FTGAN, and the proposed training strategy can significantly improve SEI performance and save resources when dealing with multiple types of signals. Using feature processing alone, MSWTEu reaches 87% accuracy in unsupervised clustering for identifying emitters under the same modal signals, 75% accuracy in unsupervised clustering for identifying emitters under different modal signals, and 98.1% accuracy in unsupervised clustering for identifying signal modes. These results are far better than those of existing SEI studies that focus on feature processing. With the inclusion of FTGAN and our proposed training approach, the feature performance in cross-modal emitter identification is further enhanced, rising from 75% to 86% in clustering. In conclusion, we believe that further improvements in feature optimization and matching for multi-modal signals, together with the use of these improved features in unsupervised clustering to identify emitters, have great potential for improving the overall performance of cross-modal SEI and for reducing manual work such as labeling.

However, there are some limitations in our work: (1) the simple clustering algorithm used for identifying emitters has limited adaptability when confronted with a constantly changing number of emitters; (2) the proposed methods cannot fully resolve the identification problem for new modal signals that lack labels, which still requires some manual labeling for correction; (3) the same emitters with different signal modes cannot be clustered into the same class. This can be seen in the boxplot of Figure 7b for rm1 and rm2, as the rm1 and rm2 modes share the same emitters. Future work on SEI across multiple modal signals should (1) investigate a suitable dynamic clustering algorithm to replace the simple clustering in our SEI system and resolve limitation (1); and (2) investigate a more adaptable and robust feature extraction and matching method to reduce limitation (2) and resolve limitation (3).