Shadow-Imaging-Based Triangulation Approach for Tool Deflection Measurement

Abstract

1. Introduction

1.1. Motivation

1.2. State of the Art

1.3. Aim and Outline

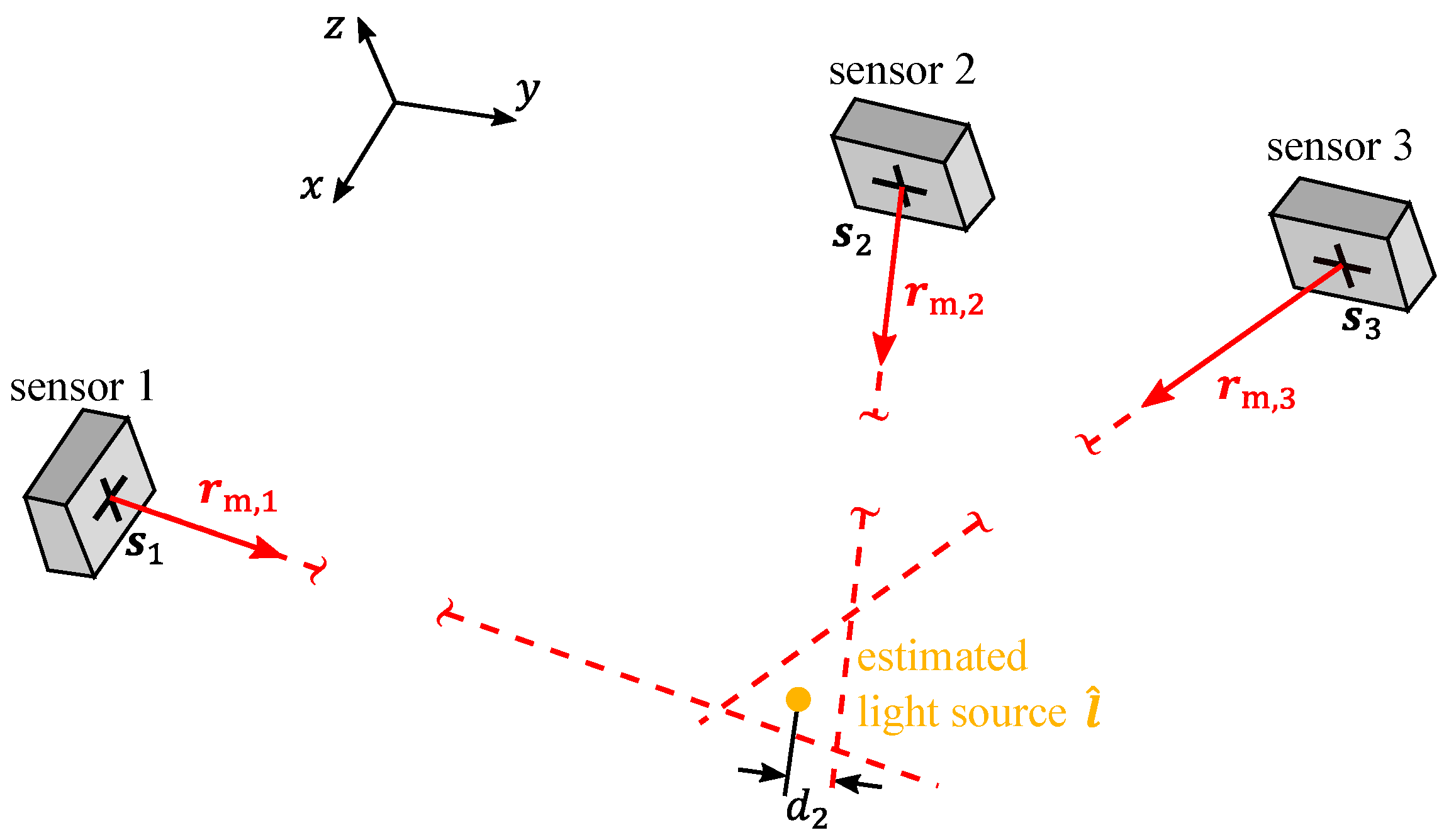

2. Principle of Measurement

3. Methods

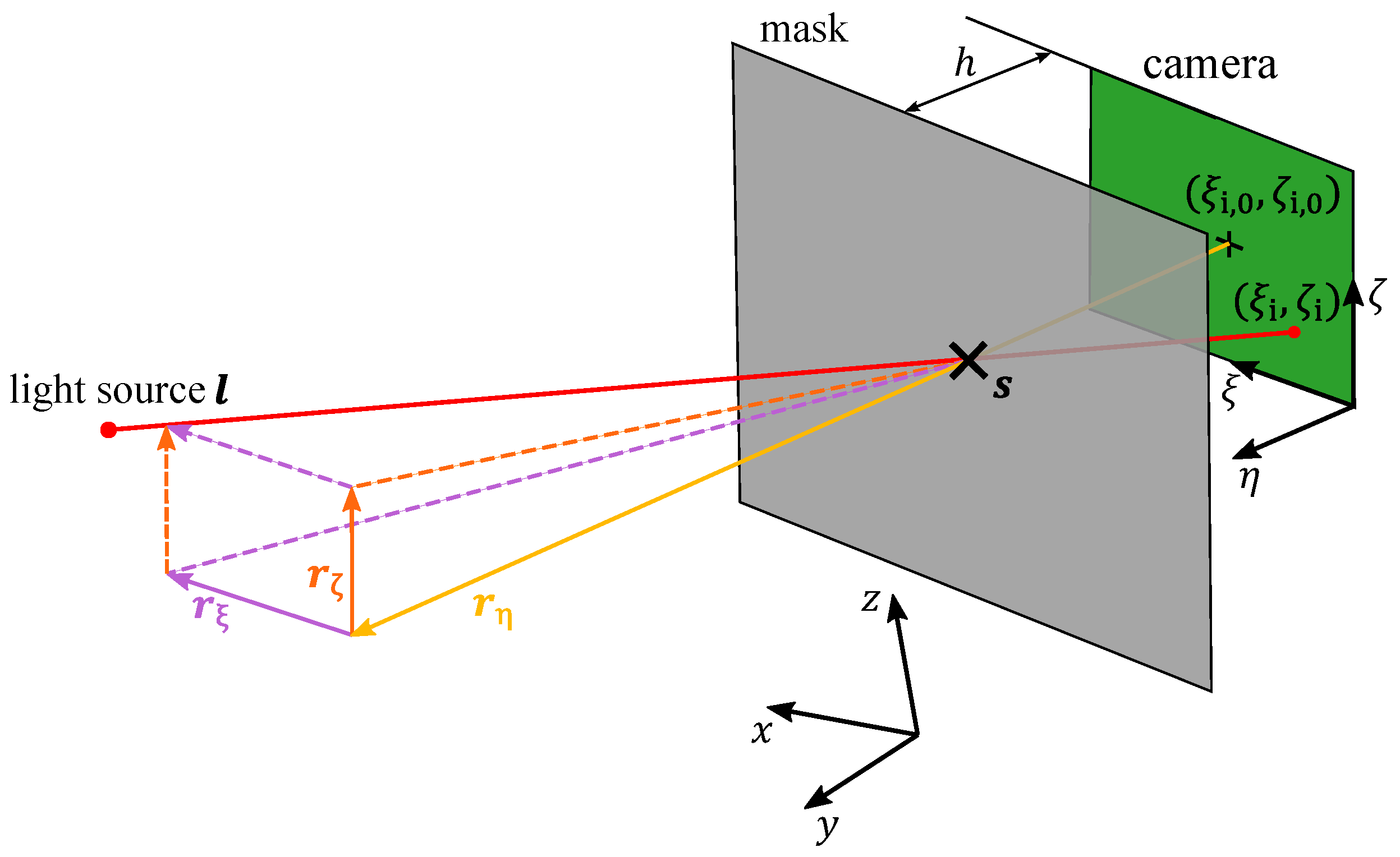

3.1. Shadow Imaging Sensor

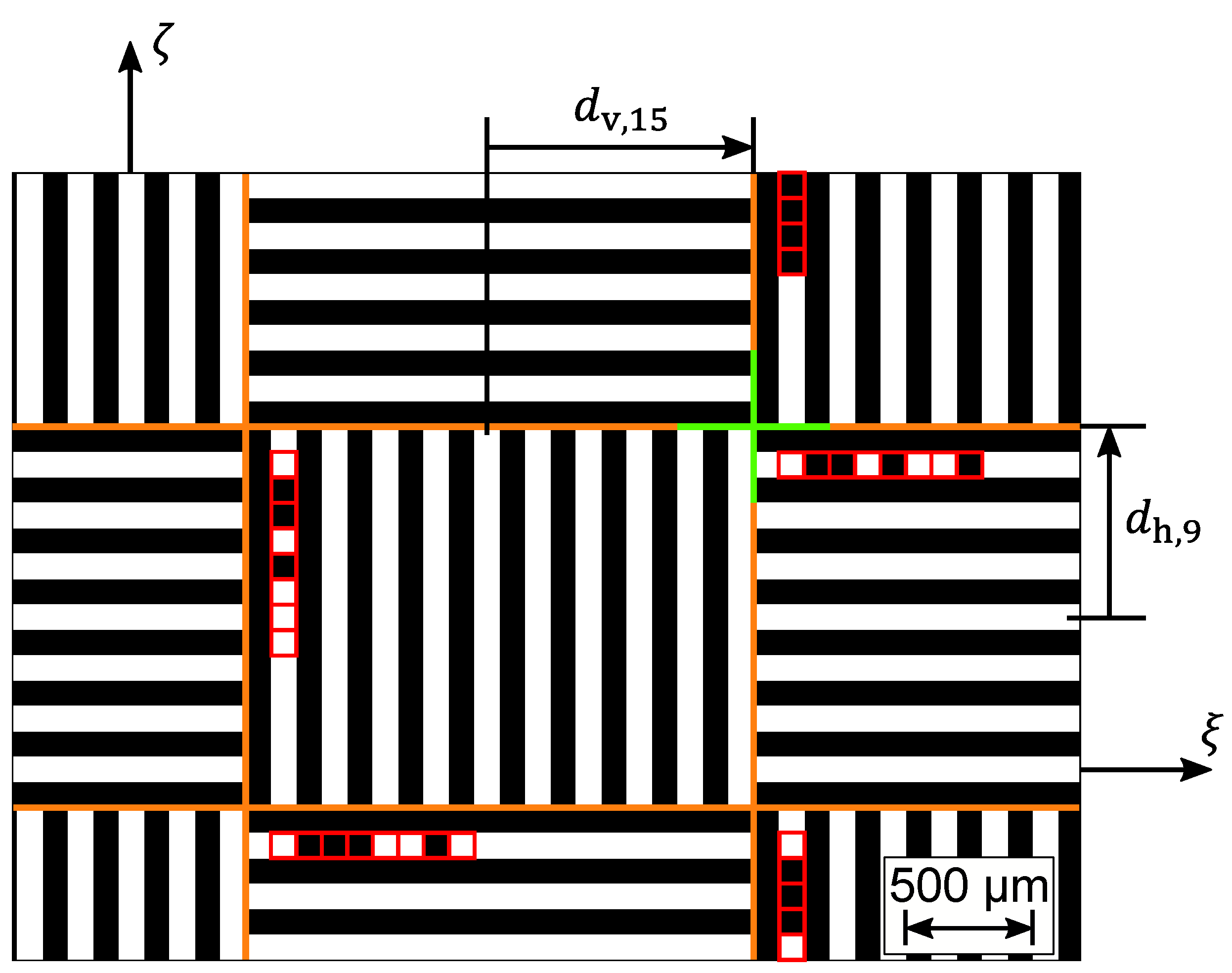

3.1.1. Mask

3.1.2. Image Processing

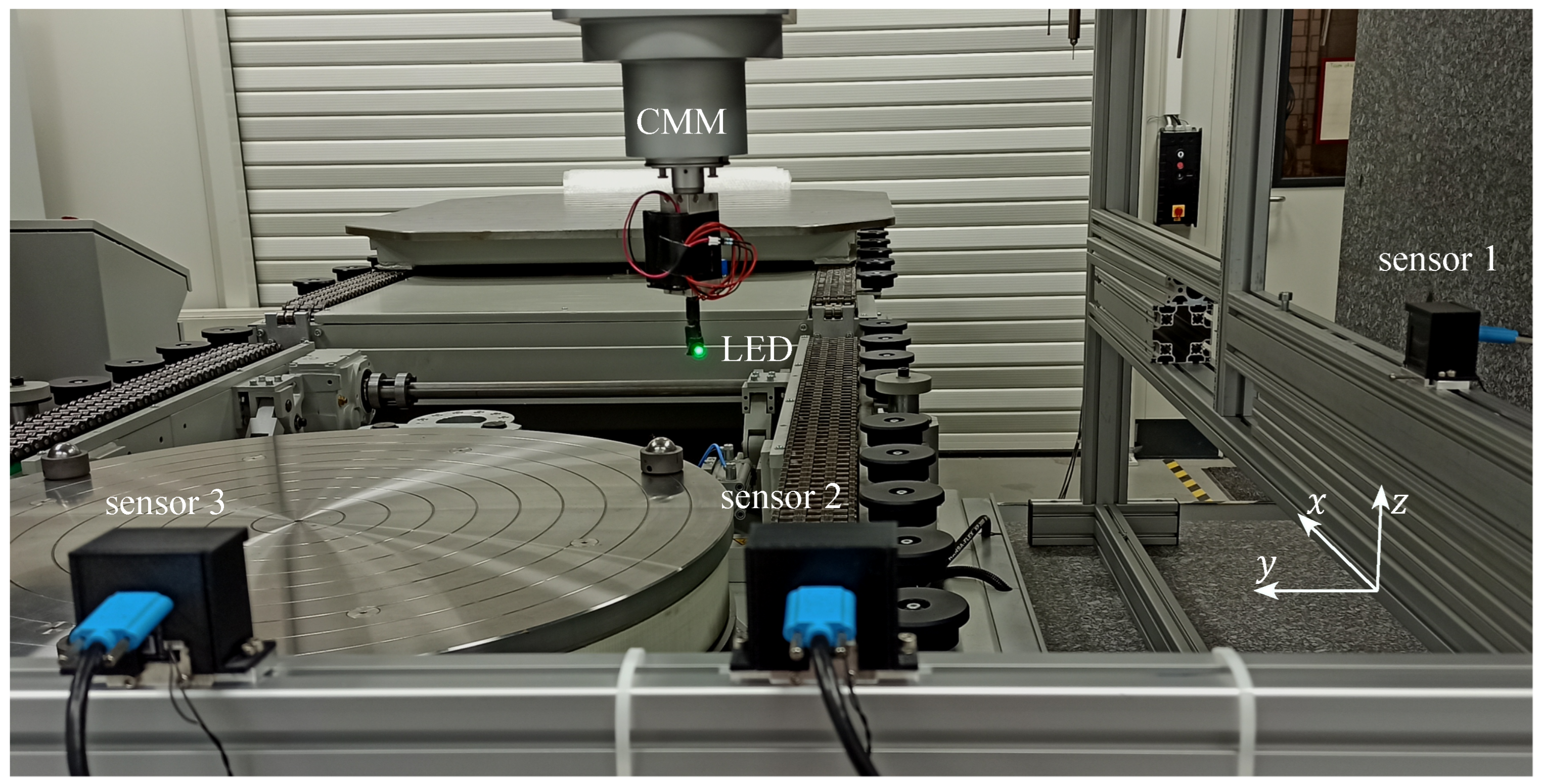

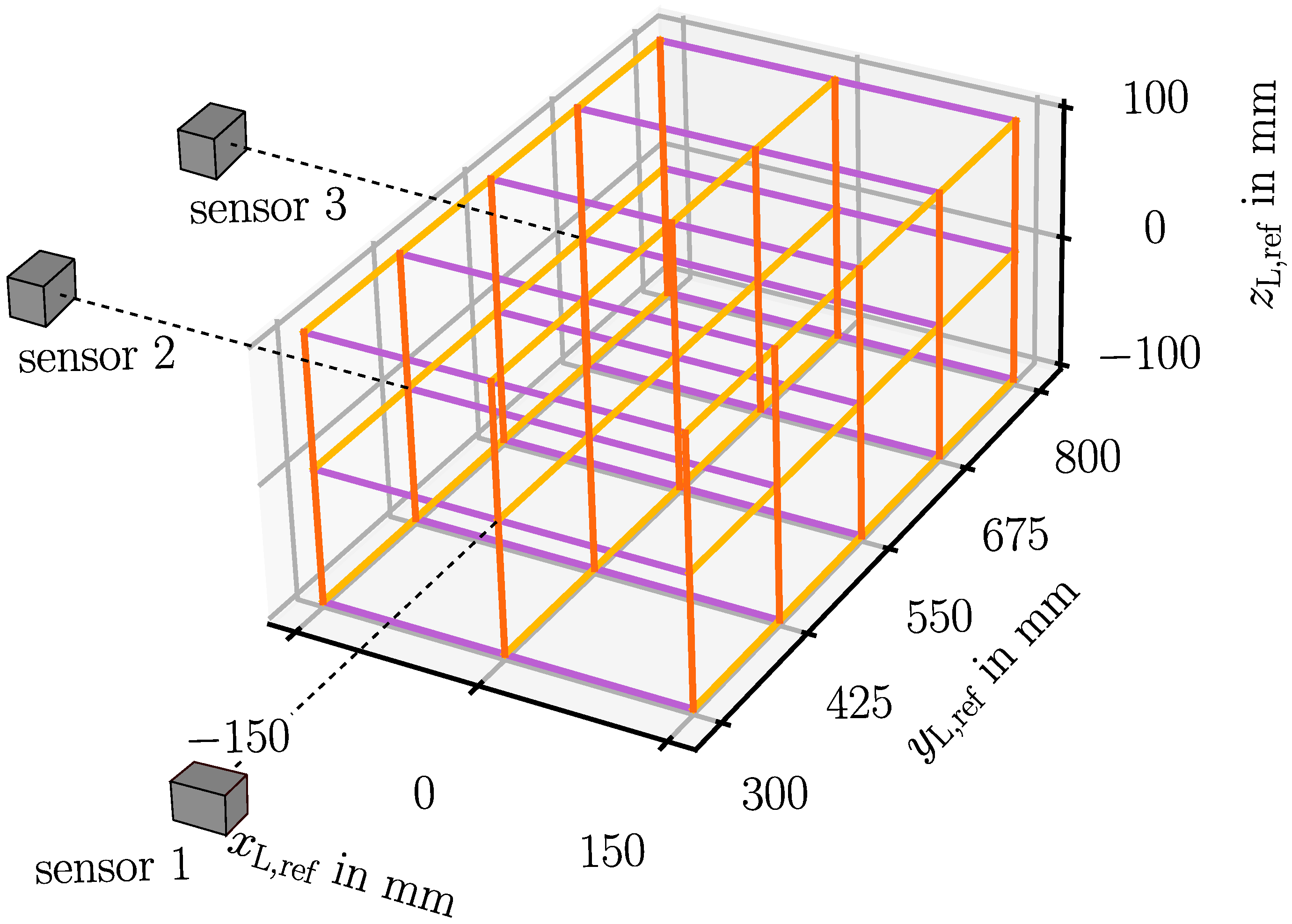

3.2. Experimental Setup with Three Sensors

3.3. Calibration

4. Results and Discussion

4.1. Measurement Range

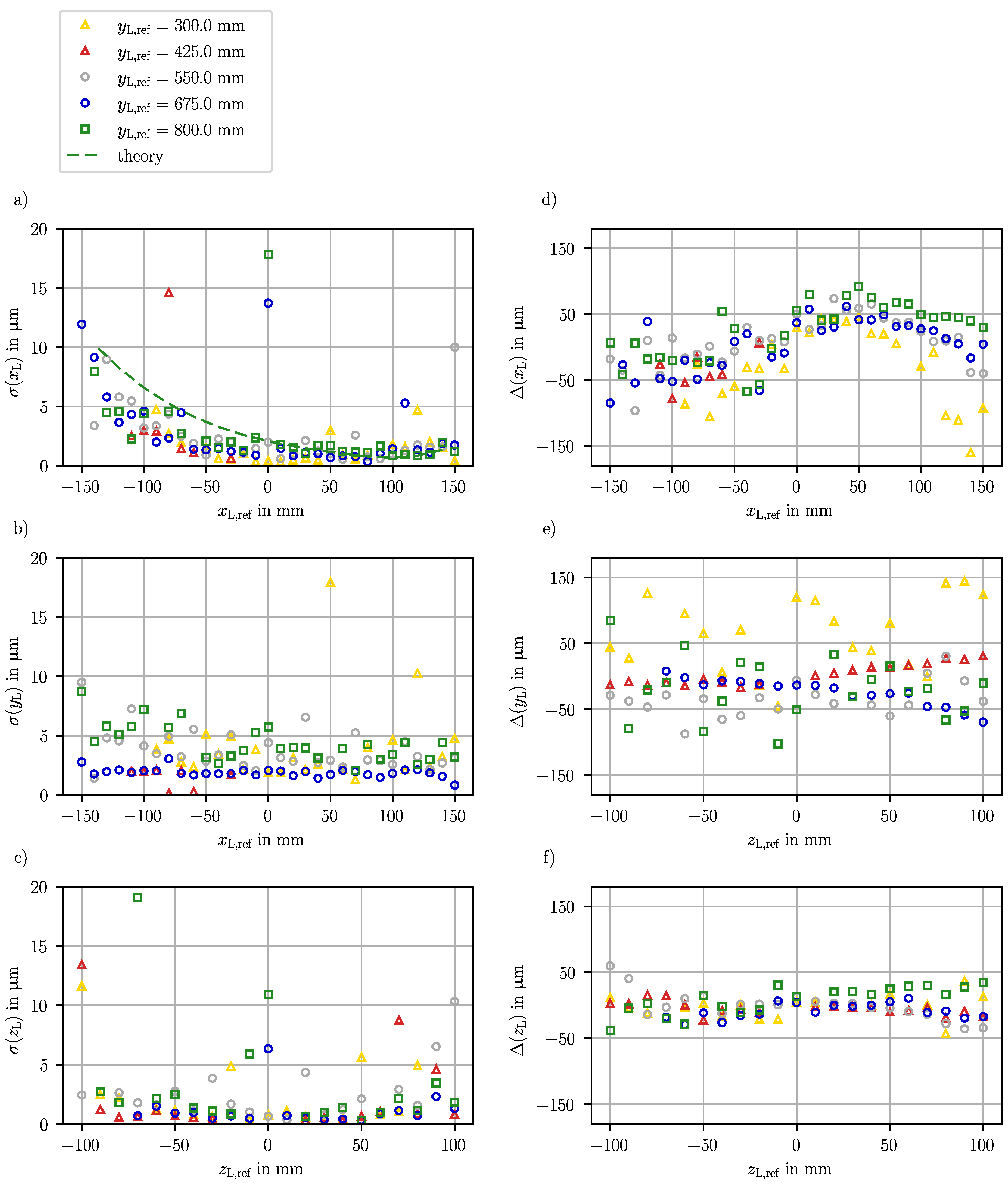

4.2. Random Error

4.3. Systematic Error

5. Conclusions and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CMM | coordinate measuring machine |
| CNR | contrast-to-noise ratio |
| DIC | digital image correlation |
| ISF | incremental sheet forming |

Appendix A. Uncertainty Propagation for Lateral Position Components


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Terlau, M.; von Freyberg, A.; Stöbener, D.; Fischer, A. Shadow-Imaging-Based Triangulation Approach for Tool Deflection Measurement. Sensors 2023, 23, 8593. https://doi.org/10.3390/s23208593