1. Introduction

GPS tracking plays a crucial role in many machine learning and autonomous-system applications, such as motion robotics [1,2], computer vision [3,4], motion training [5,6], and autonomous driving [7,8]. GPS tracking uses state estimation methods to estimate the actual trajectory from measurements. The Kalman filter (KF) [9], a popular state estimation method, is a recursive filter designed to estimate the state of a system from noisy, limited, and incomplete measurements. It can estimate the system's state accurately despite incomplete measurements and uncertainty. In addition, the Kalman filter is computationally efficient, easy to implement, and widely used in navigation, communication, control, signal processing, and machine learning.

Although the Kalman filter is effective in many situations, it has some limitations: it assumes that the noise is Gaussian, and it relies on accurate model parameters. In practical autonomous driving, especially at levels four and five, many challenging environments degrade GPS accuracy, including areas with high buildings, dense trees, long tunnels, multilayer junctions, underpasses, and bridges. Adverse weather and road conditions, such as snow, fog, wet or old roads, grassy areas, and shoveled roads, can further reduce GPS accuracy. In these situations, GPS data are often corrupted by different types of noise, including colored noise and outliers. The statistical characteristics of such noise are difficult to obtain accurately, which in turn degrades the accuracy of the filters used to process the GPS data.

For nonlinear estimation problems, researchers have proposed a series of nonlinear Kalman filters, such as the extended Kalman filter (EKF) [10] and the unscented Kalman filter (UKF) [11]. The EKF linearizes the nonlinear system model with a first-order Taylor expansion, which requires computing a Jacobian matrix; it is therefore only appropriate for systems with weak nonlinearity. The UKF instead propagates a set of deterministically chosen sampling points through the nonlinear equations (the unscented transformation) to approximate the probability density function of the nonlinear state. Its estimation accuracy reaches second order, and it generally performs better than the EKF. However, like the EKF, the UKF must propagate the state covariance matrix, and numerical errors in these operations can cause the covariance matrix to lose its positive definiteness and symmetry, leading to filter failure. To address the limitations of the EKF and UKF, the particle filter [12], also known as the Monte Carlo filter, was introduced. The particle filter is a non-parametric approach that uses a set of particles to approximate the posterior distribution of the state variables.
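The accuracy gap between first-order linearization and the unscented transformation can be seen on a one-dimensional example. The sketch below is an illustration, not part of the proposed method: it pushes $x \sim \mathcal{N}(1, 0.5^2)$ through $f(x) = x^2$, and the test function, the input distribution, and the scaling parameter `kappa` are all illustrative assumptions. The exact mean is $\mu^2 + \sigma^2 = 1.25$; EKF-style linearization returns only $f(\mu) = 1$, while the unscented transform with three sigma points recovers the exact value because $f$ is quadratic.

```python
import numpy as np

def linearized_mean_var(f, df, mu, var):
    """EKF-style propagation: first-order Taylor expansion of f around mu."""
    J = df(mu)                                  # Jacobian (a scalar derivative here)
    return f(mu), J * var * J

def unscented_mean_var(f, mu, var, kappa=2.0):
    """Unscented transform for a scalar state (n = 1) with 2n + 1 sigma points."""
    n = 1
    spread = np.sqrt((n + kappa) * var)
    points = np.array([mu, mu + spread, mu - spread])            # sigma points
    weights = np.array([kappa / (n + kappa), 0.5 / (n + kappa), 0.5 / (n + kappa)])
    fx = f(points)                                               # push each point through f
    mean = weights @ fx
    return mean, weights @ (fx - mean) ** 2

f = lambda x: x ** 2
df = lambda x: 2 * x
mu, var = 1.0, 0.25                             # x ~ N(1, 0.5^2), so E[f(x)] = 1.25

lin_mean, lin_var = linearized_mean_var(f, df, mu, var)
ut_mean, ut_var = unscented_mean_var(f, mu, var)
# linearization gives 1.0; the unscented transform gives 1.25 up to rounding
print(lin_mean, ut_mean)
```

With `kappa = 2` (so that n + kappa = 3, a common choice for Gaussian inputs) the unscented variance also matches the exact value 1.125 in this example, whereas linearization gives 1.0.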

To overcome these issues, researchers have proposed various improved filters, such as model-free filters, particle filters, wavelet transform filters, Gaussian mixture model filters, and adaptive filters. Each has pros and cons that must be weighed against the requirements of the specific application. Model-free filters and particle filters [13] are two popular choices. Model-free filters do not require an a priori model; they estimate the statistical characteristics of the noise adaptively and are therefore more robust. For example, building on the expectation maximization (EM) algorithm [14], Shumway and Stoffer proposed estimating Kalman filter parameters with EM [15]. Another approach combines the Kalman filter for state estimation of linear dynamic systems with the EM algorithm for parameter estimation [16]. Particle filters approximate the posterior probability distribution by Monte Carlo sampling, so they can handle nonlinear and non-Gaussian problems and are more flexible and robust. Although model-free and particle filters improve robustness and resilience, they can suffer from limited accuracy and high computational cost.
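A minimal bootstrap particle filter illustrates the sampling-based approximation of the posterior described above. This is a generic textbook sketch, not one of the cited methods; the scalar random-walk model, the noise variances `q` and `r`, and the particle count are illustrative assumptions. Each step propagates the particles through the transition model, weights them by the measurement likelihood, and resamples them.

```python
import numpy as np

def bootstrap_pf(ys, n_particles=1000, q=0.1, r=1.0, seed=0):
    """Bootstrap particle filter for x_k = x_{k-1} + w_k, y_k = x_k + v_k.

    w_k ~ N(0, q) and v_k ~ N(0, r); the model is an illustrative assumption.
    Returns the weighted posterior-mean estimate at each step.
    """
    rng = np.random.default_rng(seed)
    particles = rng.normal(0.0, 1.0, n_particles)        # samples from the prior on x_0
    estimates = []
    for y in ys:
        # 1. Propagate: sample each particle from the transition density.
        particles = particles + rng.normal(0.0, np.sqrt(q), n_particles)
        # 2. Weight: Gaussian measurement likelihood, computed in the log domain for stability.
        logw = -0.5 * (y - particles) ** 2 / r
        w = np.exp(logw - logw.max())
        w /= w.sum()
        estimates.append(w @ particles)                   # posterior mean estimate
        # 3. Resample (multinomial) to counter weight degeneracy.
        idx = rng.choice(n_particles, size=n_particles, p=w)
        particles = particles[idx]
    return np.array(estimates)

# Usage: track a simulated random walk from noisy measurements.
rng = np.random.default_rng(42)
x_true = np.cumsum(rng.normal(0.0, np.sqrt(0.1), 200))
ys = x_true + rng.normal(0.0, 1.0, 200)
x_hat = bootstrap_pf(ys)
rmse_pf = np.sqrt(np.mean((x_hat - x_true) ** 2))
rmse_meas = np.sqrt(np.mean((ys - x_true) ** 2))
```

Resampling at every step keeps the sketch short; practical implementations often resample only when the effective sample size drops below a threshold.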

In addition, wavelet transform filters, Gaussian mixture model filters, and adaptive filters are widely used. The wavelet transform filter [17] is primarily suitable for static signal processing and has limitations for signals with non-stationary behavior. The Gaussian mixture model filter [18] requires specifying the priors and the number of mixture components, and its computational complexity is high. The adaptive filter [19] does not require an accurate model, but it still needs some prior knowledge or empirical parameters. Therefore, the filtering method must be selected according to the specific application, and its performance must be improved through comprehensive analysis and optimization. In summary, despite their unique benefits, these filters have shortcomings in adaptability, computational complexity, and modeling assumptions.

All of the above filters depend on model parameters: they rely on prior knowledge to guarantee filtering performance and do not fully exploit the measurement data. As mentioned previously, severe environments and weather in autonomous driving complicate the statistical characteristics of GPS data, and information about the true system model is implicitly contained in the measurement data. Using large volumes of measurement data in filtering algorithms is therefore increasingly important and practical: such data can reveal complex patterns and relationships among hidden variables and provide high-dimensional information for data modeling. They can also be used to train machine learning models, such as artificial neural networks, that learn highly nonlinear and complex relationships. By combining big data with modern machine learning techniques, more efficient filtering algorithms can be developed to handle the complexity and diversity of real-world data streams. Hence, it is important to study how big data can be used for feature extraction and to develop improved filtering methods that increase accuracy and efficiency.

Leveraging the vast amount of sensor-collected big data can greatly enhance our understanding of synthesized signals and facilitate the identification of environmental noise [20], which underscores the value of such datasets for feature extraction. These datasets can be used to develop new filters or to improve the performance of existing ones. Because they are typically large-scale and complex, advanced machine learning techniques such as neural networks become crucial for improving filtering performance. Such techniques can analyze and process sensor-collected big data to extract more accurate features, such as periodicity, high-frequency components, and mixed noise. The combination of sensor-collected big data and modern machine learning can thus drive the advancement of filtering algorithms, enabling them to handle the diversity and complexity of real-world scenarios.

Motivated by these observations, researchers have started investigating network-based estimation methods. In recent years, deep learning networks have been widely used in trajectory tracking because of their powerful modeling capabilities and their ability to extract latent features from data. Recurrent neural networks (RNNs) [21] are commonly used for analyzing time-series data, as they can capture the dependencies between data points. The long short-term memory (LSTM) network, a variant of the RNN, was introduced by Hochreiter and Schmidhuber (1997) [22]. In addition to the hidden state, an LSTM maintains a memory cell whose contents are regulated by gates (input, forget, and output gates) that determine how much information is written, retained, and exposed at each step. This gated, additive update of the cell state mitigates the vanishing gradient problem frequently encountered in regular RNNs, enabling the network to model complex, long-term sequences with greater accuracy. LSTMs have become popular in fields such as natural language processing, speech recognition, and image recognition.

Chang-hao Chen et al. [23] proposed IONet, a Bi-LSTM-based model for motion estimation from IMUs that tracks more accurately than traditional pedestrian dead reckoning (PDR). The extended nine-axis IONet proposed by Won-Yeol Kim et al. [24] improves trajectory tracking by using both gravitational acceleration and geomagnetic data from the IMU; compared with the original six-axis IONet input, this improves the estimation accuracy of position changes. Rui-peng Gao et al. [25] proposed a method that applies a temporal convolutional network (TCN) to historical IMU data from a mobile phone to track a vehicle in real time when GPS signals are unavailable.

Deep learning methods have strong learning capability, but in the era of big data, neural networks often need a large amount of input information [26]. This information usually contains much useless or low-value data, which makes learning difficult, reduces the learning efficiency of deep learning methods, and can lead to overfitting; this remains a challenge for complex target tracking problems. Recently, the attention mechanism [27] has been used to address this problem. The attention mechanism can be seen as a simulation of human attention: humans focus on valuable information while ignoring useless information. The transformer, a sequence-to-sequence (Seq2seq) architecture based on self-attention [28], has demonstrated powerful capabilities in sequential data processing, such as natural language processing [29], audio processing [30], and even computer vision [31]. Unlike an RNN, the transformer allows the model to access any part of the history regardless of distance, which makes it more suitable for capturing repeated patterns with long-term dependencies and for preventing overfitting. Applying this framework to tracking has recently attracted extensive attention from researchers.

In summary, traditional estimation methods are usually grounded in statistical principles, with relatively low computational requirements and fewer required samples. However, they may not account for nonlinear relationships among multiple variables. Deep learning methods, in contrast, can process large-scale datasets with neural networks, discover more complex patterns, and extract critical features. Moreover, deep learning models learn relationship patterns from the dataset adaptively, reducing dependence on hand-crafted rules and prior knowledge. Combining deep learning with traditional estimation methods can therefore balance model interpretability and accuracy, improving performance and robustness and making the approach better suited to practical problems.

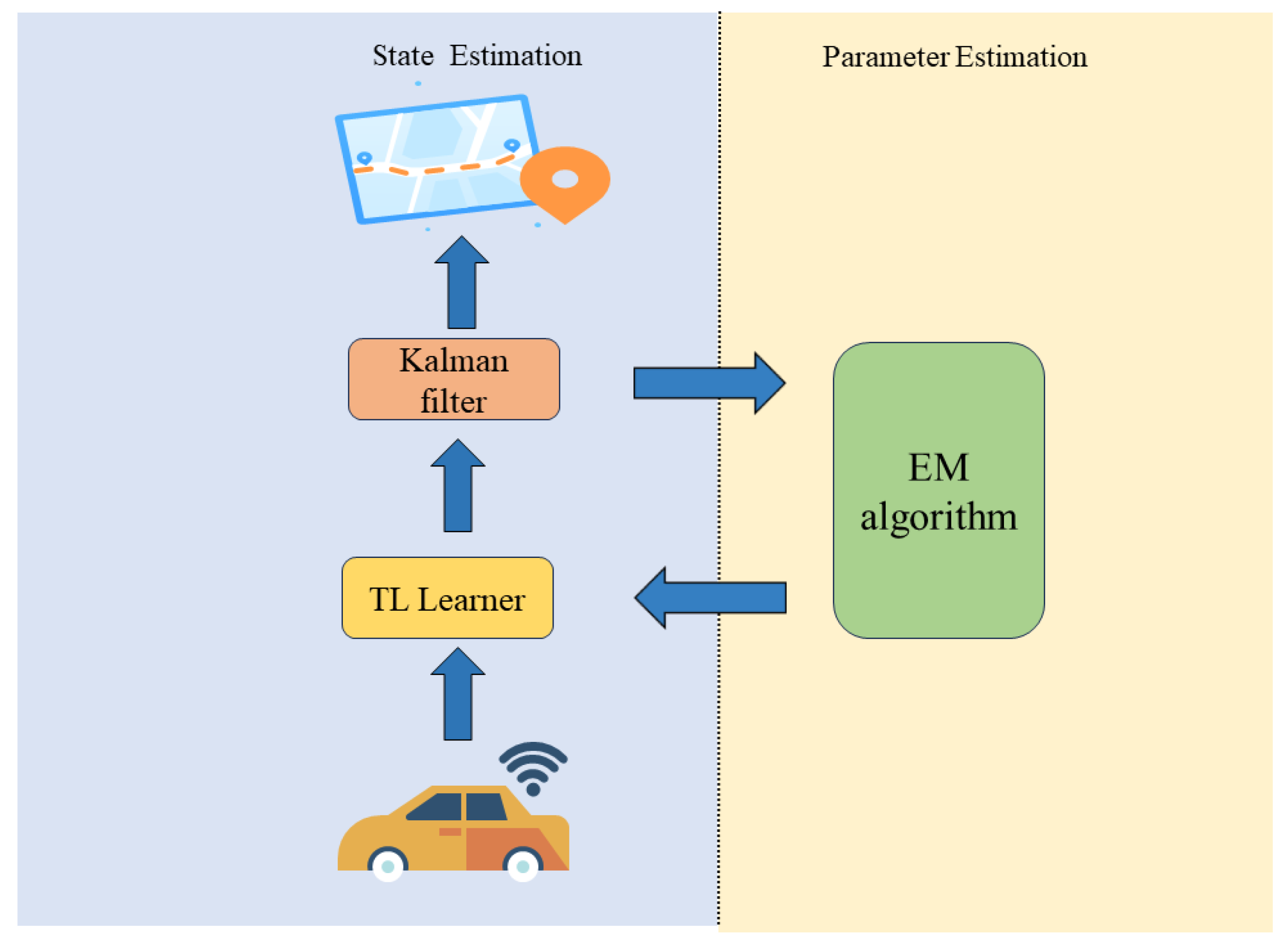

However, existing methods rely on predefined parameters and struggle with complex nonlinear models. This paper uses neural networks to learn these parameters and thereby address the limitations of traditional methods. To better estimate the trajectory of maneuvering targets, we propose a new tracking method that addresses the practical problems faced by estimation methods. First, the measurement data are learned by a network that combines transformer multi-head self-attention with an LSTM, to obtain the statistical properties of the target's complex motion. Second, based on the network output, the EM method estimates the dynamics and measurement characteristics of the moving target, and the system parameters are modeled in real time during estimation, providing more accurate model parameters for the Kalman filter. With the learned network, the EM algorithm obtains more timely and accurate system parameters and improves the accuracy of trajectory estimation.

The remainder of this paper is structured as follows. Section 2 describes the proposed model in detail, including the network model structure, the mathematical background of the EM algorithm and the Kalman filter, and the overall model framework. Specifically, we present the design of the different components, including the input, hidden, and output layers, as well as the training process of the network. We also describe the EM algorithm, which estimates the Kalman filter parameters from the network output, and the Kalman filter, which recursively estimates the hidden states of the dynamic system. Section 3 presents the dataset used in the experiments (its characteristics, size, and sources), the experimental setup (software, hardware, and preprocessing steps), and the experimental contents and evaluation metrics, and then analyzes the results. Section 4 concludes the paper and outlines directions for future research.

2. Parameter-Free State Estimation

Generally, a linear system model is written as

$$
\begin{aligned}
x_k &= A x_{k-1} + w_{k-1}, & w_{k-1} &\sim \mathcal{N}(0, Q),\\
y_k &= C x_k + v_k, & v_k &\sim \mathcal{N}(0, R),\\
x_0 &\sim \mathcal{N}(\mu_0, \Sigma_0),
\end{aligned}
\tag{1}
$$

where $x_k$ is the state, $y_k$ is the measurement, $A$ is the state-transition matrix, $w_k$ is the state noise with covariance $Q$, $C$ is the measurement matrix, $v_k$ is the measurement noise with covariance $R$, and $\mu_0$ and $\Sigma_0$ are the mean and covariance of the initial state, respectively. Usually, these parameters are modeled from historical knowledge or the system mechanism. (Linear models reduce model complexity, simplify the derivation and computation of the algorithm, and make the problem easier to model and solve, which also helps explain the principles of the algorithm.)
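As a concrete instance of Eq. (1), the sketch below samples a trajectory from a two-dimensional constant-velocity model, a common choice for GPS-style position tracking. The state layout `[px, py, vx, vy]`, the sampling period, and the noise levels are illustrative assumptions, not values from this paper.

```python
import numpy as np

def simulate_lds(A, C, Q, R, mu0, Sigma0, steps, seed=0):
    """Sample states x_1..x_N and measurements y_1..y_N from the linear model in Eq. (1)."""
    rng = np.random.default_rng(seed)
    n, m = A.shape[0], C.shape[0]
    xs, ys = np.zeros((steps, n)), np.zeros((steps, m))
    x = rng.multivariate_normal(mu0, Sigma0)                     # x_0 ~ N(mu0, Sigma0)
    for k in range(steps):
        x = A @ x + rng.multivariate_normal(np.zeros(n), Q)      # x_k = A x_{k-1} + w_{k-1}
        xs[k] = x
        ys[k] = C @ x + rng.multivariate_normal(np.zeros(m), R)  # y_k = C x_k + v_k
    return xs, ys

dt = 1.0                                    # sampling period (assumed)
A = np.array([[1, 0, dt, 0],
              [0, 1, 0, dt],
              [0, 0, 1, 0],
              [0, 0, 0, 1]], dtype=float)   # constant-velocity transition for [px, py, vx, vy]
C = np.array([[1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)   # GPS observes position only
Q = 0.01 * np.eye(4)                        # state noise covariance (illustrative)
R = 4.0 * np.eye(2)                         # measurement noise covariance (illustrative)
xs, ys = simulate_lds(A, C, Q, R, np.zeros(4), np.eye(4), steps=200)
```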

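The Kalman filter is the optimal recursive data processing algorithm for linear systems with Gaussian noise, and the Kalman filtering process is as follows:

$$
\hat{x}_{k|k-1} = A\hat{x}_{k-1|k-1},\qquad P_{k|k-1} = A P_{k-1|k-1} A^{\top} + Q,
\tag{2}
$$

and

$$
\begin{aligned}
K_k &= P_{k|k-1} C^{\top}\left(C P_{k|k-1} C^{\top} + R\right)^{-1},\\
\hat{x}_{k|k} &= \hat{x}_{k|k-1} + K_k\left(y_k - C\hat{x}_{k|k-1}\right),\\
P_{k|k} &= \left(I - K_k C\right) P_{k|k-1}.
\end{aligned}
\tag{3}
$$

In the above equations, $K_k$ is the Kalman filter gain and $P$ denotes the estimation error covariance. The Kalman filtering algorithm therefore consists of two steps. Equation (2) is the prediction step, in which the estimate $\hat{x}_{k|k-1}$ is predicted from the previous estimate $\hat{x}_{k-1|k-1}$. Equation (3) is the update step, in which the prediction is corrected by the measurement, weighted by the filter gain.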
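The prediction and update steps (2) and (3) translate directly into code. The NumPy sketch below assumes the linear Gaussian model of Eq. (1); the scalar random-walk example and its noise values are illustrative assumptions. In the proposed method, `A`, `C`, `Q`, and `R` would be the estimates from (24) to (27) rather than the fixed values used here.

```python
import numpy as np

def kf_predict(x, P, A, Q):
    """Prediction step, Eq. (2): propagate the mean and covariance through the model."""
    return A @ x, A @ P @ A.T + Q

def kf_update(x_pred, P_pred, y, C, R):
    """Update step, Eq. (3): correct the prediction with the measurement y."""
    S = C @ P_pred @ C.T + R                      # innovation covariance
    K = P_pred @ C.T @ np.linalg.inv(S)           # Kalman gain
    x = x_pred + K @ (y - C @ x_pred)             # corrected state estimate
    P = (np.eye(len(x_pred)) - K @ C) @ P_pred    # corrected error covariance
    return x, P, K

def kalman_filter(ys, A, C, Q, R, x0, P0):
    """Run predict/update recursively over a measurement sequence ys of shape (N, m)."""
    x, P, estimates = x0, P0, []
    for y in ys:
        x, P = kf_predict(x, P, A, Q)
        x, P, _ = kf_update(x, P, y, C, R)
        estimates.append(x)
    return np.array(estimates)

# Usage: a scalar random walk observed in unit-variance noise (illustrative values).
rng = np.random.default_rng(0)
A = C = np.eye(1)
Q, R = 0.1 * np.eye(1), np.eye(1)
x_true = np.cumsum(rng.normal(0.0, np.sqrt(0.1), 300))[:, None]
ys = x_true + rng.normal(0.0, 1.0, (300, 1))
x_hat = kalman_filter(ys, A, C, Q, R, x0=np.zeros(1), P0=np.eye(1))
rmse_kf = np.sqrt(np.mean((x_hat - x_true) ** 2))
rmse_meas = np.sqrt(np.mean((ys - x_true) ** 2))
```

Explicitly inverting `S` is acceptable for the small innovation covariances of GPS tracking; `np.linalg.solve` is the more numerically robust choice for larger systems.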

The classic Kalman filter relies on accurate model parameters, which can be difficult to obtain in practice. In addition, because real-world systems are inherently nonlinear, the simplified linear model used in the Kalman filter may differ significantly from the actual system, and this discrepancy is reflected in the measurement data collected from the system.

In this paper, we address this issue by using measured data to complement the ideal linear model in Equation (1). Specifically, a deep neural network is trained offline to learn the relationship between the measured data and the reference state. This captures the nonlinearities present in the system and bridges the gap between the simplified linear model and the actual system. The overall system model and the integration of the deep neural network are illustrated in Figure 1.

The proposed approach consists of two stages. (1) Offline training: a system model learner based on LSTM with attention is trained using the system's initial parameters (Figure 2). By combining the attention-based encoder of a transformer with an LSTM, the model learns from observation data without explicit models of the system dynamics and measurement characteristics, capturing the motion characteristics of the system through network training. (2) Online estimation: measurement data are fed into the trained attention LSTM module to obtain accurate dynamic characteristics, the EM algorithm updates the model parameters in real time, and the Kalman filter then performs online recursive estimation (Figure 3).

2.1. Offline Training System Learner

The transformer captures long-term dependencies through multi-head self-attention, and its residual connections allow deeper models to be trained while reducing the risk of overfitting. Combining an LSTM with the transformer encoder further strengthens the sequence modeling ability of the encoder (Figure 2). The resulting model can exploit the known information of the system's mechanistic model while also learning the complex dependencies of the system from historical measurement data.

Multi-headed self-attention [28] generates multiple attention heads that act on the features separately and in parallel. The input to the module is the observed data $Y$; the task-related query is represented as $Q$, and the input features are represented as key-value pairs $K$ and $V$ (these attention symbols are distinct from the noise covariance $Q$ and the Kalman gain $K$). $Q$, $K$, and $V$ are obtained by linear transformations of the input measurements $Y$, and the attention operation can be written as

$$
Q = YW^{Q},\qquad K = YW^{K},\qquad V = YW^{V},
$$

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V,
$$

where $W^{Q}$, $W^{K}$, and $W^{V}$ are trainable projection matrices and $d_k$ is the feature dimension of $K$. The dot products between $Q$ and $K$ are passed through the softmax function to obtain the weights of the elements of the input data $V$. The factor $\sqrt{d_k}$ scales the dot products to prevent them from becoming too large, which would saturate the softmax and slow down learning.
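The attention computation described above can be sketched in NumPy as follows. The dimensions, the random projection matrices, and the treatment of `Y` as already-embedded measurements are illustrative assumptions; in the trained network, `Wq`, `Wk`, and `Wv` are learned.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Y, Wq, Wk, Wv):
    """Self-attention over a sequence Y of shape (T, d_model)."""
    Q, K, V = Y @ Wq, Y @ Wk, Y @ Wv            # linear projections of the same input
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))   # (T, T); each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
T, d_model, d_k = 10, 8, 4
Y = rng.normal(size=(T, d_model))               # stand-in for embedded GPS measurements
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = scaled_dot_product_attention(Y, Wq, Wk, Wv)
```

Row `t` of `weights` shows how strongly time step `t` attends to every other step in the window, regardless of how far apart they are.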

In essence, multi-head self-attention concatenates the results of several attention computations and applies a linear transformation. This lets the model use feature information from different representation subspaces and positions, increasing the diversity of the features. Multi-head self-attention is computed as

$$
\mathrm{head}_i = \mathrm{Attention}\!\left(YW_i^{Q},\, YW_i^{K},\, YW_i^{V}\right),\qquad i = 1, \ldots, t,
$$

$$
\mathrm{MultiHead}(Y) = \mathrm{Concat}\!\left(\mathrm{head}_1, \ldots, \mathrm{head}_t\right) W^{O},
$$

where $t$ is the total number of heads, $W^{O}$ is the weight matrix that maps the result to the target dimension, $\mathrm{Concat}(\cdot)$ denotes vector concatenation, and $\mathrm{head}_i$ denotes the features of the $i$th head.
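Building on single-head attention, the multi-head combination can be sketched as below. The head count `t`, the per-head width `d_model // t` (a common convention), and the random weights are illustrative assumptions.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(Y, Wq, Wk, Wv, Wo):
    """Wq, Wk, Wv: lists of t per-head projection matrices; Wo: output projection W^O."""
    heads = []
    for Wq_i, Wk_i, Wv_i in zip(Wq, Wk, Wv):
        Q, K, V = Y @ Wq_i, Y @ Wk_i, Y @ Wv_i
        attn = softmax(Q @ K.T / np.sqrt(K.shape[-1]))   # attention weights of head i
        heads.append(attn @ V)                           # head_i, shape (T, d_k)
    return np.concatenate(heads, axis=-1) @ Wo           # Concat(head_1..head_t) W^O

rng = np.random.default_rng(0)
T, d_model, t = 12, 16, 4
d_k = d_model // t                                       # per-head width (assumed convention)
Y = rng.normal(size=(T, d_model))
Wq = [rng.normal(size=(d_model, d_k)) for _ in range(t)]
Wk = [rng.normal(size=(d_model, d_k)) for _ in range(t)]
Wv = [rng.normal(size=(d_model, d_k)) for _ in range(t)]
Wo = rng.normal(size=(t * d_k, d_model))
Z = multi_head_self_attention(Y, Wq, Wk, Wv, Wo)
```

With a single head and $W^{O}$ equal to the identity, this reduces to plain scaled dot-product attention.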

In this paper, a transformer encoder is used to encode and learn the underlying dynamical properties of the observed data, with the multi-head attention mechanism constructing higher-dimensional, multi-channel attention information that uncovers rich features. The attention-based learning module feeds the observed data into the transformer encoder, which adds positional encodings to the observed data and passes the result to the multi-head self-attention layer. To prevent network degradation and accelerate convergence, the encoder also includes residual connections, layer normalization, and feed-forward neural networks. The transformer encoder outputs latent encoded features.
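The encoder block described above (positional encoding, self-attention, residual connections, layer normalization, and a feed-forward network) can be sketched as a single NumPy layer. The paper does not state which positional encoding is used; the sinusoidal encoding of the original transformer is assumed here. The layer uses one attention head for brevity, and `W_in` is a hypothetical embedding that lifts 2-D measurements to the model width.

```python
import numpy as np

def sinusoidal_positions(T, d):
    """Sinusoidal positional encoding: sin on even, cos on odd feature indices."""
    pos = np.arange(T)[:, None]
    i = np.arange(d)[None, :]
    angle = pos / np.power(10000.0, (2 * (i // 2)) / d)
    return np.where(i % 2 == 0, np.sin(angle), np.cos(angle))

def layer_norm(x, eps=1e-5):
    """Normalize each time step to zero mean and unit variance (no learned gain or bias)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    s = Q @ K.T / np.sqrt(K.shape[-1])
    w = np.exp(s - s.max(axis=-1, keepdims=True))
    return (w / w.sum(axis=-1, keepdims=True)) @ V

def encoder_layer(X, p):
    """Post-norm encoder layer: each sublayer has a residual connection plus layer norm."""
    H = layer_norm(X + self_attention(X, p["Wq"], p["Wk"], p["Wv"]))
    F = np.maximum(0.0, H @ p["W1"] + p["b1"]) @ p["W2"] + p["b2"]   # ReLU feed-forward
    return layer_norm(H + F)

rng = np.random.default_rng(0)
T, m, d = 20, 2, 8                  # window length, measurement dimension, model width
ys = rng.normal(size=(T, m))        # stand-in for a window of GPS measurements
W_in = rng.normal(size=(m, d))      # hypothetical input embedding
X = ys @ W_in + sinusoidal_positions(T, d)
shapes = {"Wq": (d, d), "Wk": (d, d), "Wv": (d, d), "W1": (d, 4 * d), "W2": (4 * d, d)}
p = {name: rng.normal(size=s) / np.sqrt(s[0]) for name, s in shapes.items()}
p["b1"], p["b2"] = np.zeros(4 * d), np.zeros(d)
Z = encoder_layer(X, p)             # latent encoded features, shape (T, d)
```

A trained encoder would stack several such layers with learned weights and multiple heads; the random weights here only demonstrate the data flow and shapes.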

After the transformer encoder, an LSTM learns longer dependencies in the measurement data:

$$
h_t = \mathrm{LSTM}\left(z_t, h_{t-1}\right),
$$

where $z_t$ is the encoder output at time $t$ and $h_{t-1}$ indicates the hidden state at the previous moment.
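The LSTM update applied after the encoder can be sketched with the standard gate equations. The stacked gate order, the weight shapes, and the random stand-in `Z` for the encoder features are assumptions for illustration; deep learning frameworks may order the gates differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(z_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,).

    Gates are stacked in the (assumed) order [input, forget, output, candidate].
    """
    H = h_prev.shape[0]
    a = W @ z_t + U @ h_prev + b
    i = sigmoid(a[:H])              # input gate: how much new information to write
    f = sigmoid(a[H:2 * H])         # forget gate: how much of the old cell state to keep
    o = sigmoid(a[2 * H:3 * H])     # output gate: how much of the cell state to expose
    g = np.tanh(a[3 * H:])          # candidate cell content
    c_t = f * c_prev + i * g        # additive cell update
    h_t = o * np.tanh(c_t)          # new hidden state
    return h_t, c_t

def lstm(Z, H, W, U, b):
    """Run the cell over encoder features Z of shape (T, D); return all hidden states."""
    h, c = np.zeros(H), np.zeros(H)
    hs = []
    for z_t in Z:
        h, c = lstm_step(z_t, h, c, W, U, b)
        hs.append(h)
    return np.array(hs)

rng = np.random.default_rng(0)
T, D, H = 20, 8, 16
Z = rng.normal(size=(T, D))         # stand-in for transformer-encoder features
W = rng.normal(size=(4 * H, D)) * 0.1
U = rng.normal(size=(4 * H, H)) * 0.1
b = np.zeros(4 * H)
hs = lstm(Z, H, W, U, b)
```

The forget gate multiplies the previous cell state instead of repeatedly squashing it through a nonlinearity, which is what lets information persist over long horizons.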

The system model learner (as shown in Figure 2) achieves the learning of system dynamics through offline training. The input–output pairs for learning are the observed data and the reference state of the system. The transformer encoder structure with multi-head self-attention and LSTM networks are utilized to learn the latent features of the data. This approach eliminates the need for modeling system dynamics and measurement characteristics. First, the transformer encoder learns the long-term dependencies of the observed data through a self-attention mechanism. Then, the LSTM network further enhances the modeling ability of the sequence data. The combination of the two can effectively extract the features required for parameter estimation. The goal of this deep neural network is to learn the dynamic characteristics of the system, and its output will be input into the EM algorithm as observation data.
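The paper does not specify how the observed data and reference states are segmented into input–output pairs. One common option, shown below purely as an assumption, is a sliding window in which each window of measurements is labeled with the reference state at its last time step; the function name and the labeling rule are hypothetical.

```python
import numpy as np

def make_training_pairs(measurements, reference_states, window):
    """Slice time-aligned sequences into (input window, target) pairs.

    measurements:     (N, m) observed data, e.g. noisy GPS positions
    reference_states: (N, n) reference states at the same time steps
    Returns inputs of shape (N - window + 1, window, m) and targets of shape
    (N - window + 1, n); each target is the reference state at the last step
    of its window (an assumed labeling choice).
    """
    N = len(measurements)
    if len(reference_states) != N:
        raise ValueError("measurements and reference states must be time-aligned")
    starts = range(N - window + 1)
    inputs = np.stack([measurements[s:s + window] for s in starts])
    targets = np.stack([reference_states[s + window - 1] for s in starts])
    return inputs, targets

ys = np.arange(20, dtype=float).reshape(10, 2)    # 10 time steps of 2-D measurements
xs = np.arange(40, dtype=float).reshape(10, 4)    # matching 4-D reference states
inputs, targets = make_training_pairs(ys, xs, window=4)
```

Labeling with the last step keeps the learner causal, which matches online use where only past measurements are available.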

2.2. Online Kalman Filtering Based on EM Algorithm

The EM algorithm uses the observation data output by the deep neural network to estimate the parameters required by the Kalman filter, such as the state-transition matrix, the observation matrix, and the noise covariance matrices.

After offline training, the network model still deviates from the actual system. During estimation, the online part therefore uses the EM algorithm to update the model parameters in real time. Specifically, the measurement data are fed into the trained attention LSTM learning module to obtain accurate dynamic characteristics of the motion sequence. Online recursive estimation is then performed with the Kalman filter, and the EM algorithm updates the current state-transition matrix, measurement matrix, state noise covariance, measurement noise covariance, and other parameters (as shown in Figure 3).

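The left side of Figure 3 shows the EM algorithm. For any probability density $q(X)$ over the latent variables, Jensen's inequality gives

$$
\log p(Y\mid\theta) = \log\int q(X)\,\frac{p(X,Y\mid\theta)}{q(X)}\,dX \;\ge\; \int q(X)\log\frac{p(X,Y\mid\theta)}{q(X)}\,dX,
\tag{12}
$$

where $X$ is the hidden variable (the state sequence), $Y$ denotes the measurements, $\theta$ is the unknown parameter to be estimated, and $\theta^{(n)}$ denotes the parameter at the $n$th iteration. The PDF of the latent variables is chosen as

$$
q(X) = p\left(X\mid Y,\theta^{(n)}\right).
\tag{13}
$$

Substituting (13) into (12), we obtain

$$
\log p(Y\mid\theta) \;\ge\; \int p\left(X\mid Y,\theta^{(n)}\right)\log p(X,Y\mid\theta)\,dX \;-\; \int p\left(X\mid Y,\theta^{(n)}\right)\log p\left(X\mid Y,\theta^{(n)}\right)dX.
\tag{14}
$$

The second term on the right-hand side does not depend on $\theta$ and can be omitted. For state estimation, maximizing the PDF of the measurements $Y$ is therefore replaced by maximizing the expected log-likelihood of the complete data, $\mathbb{E}_{X\mid Y,\theta^{(n)}}\left[\log p(X,Y\mid\theta)\right]$.

The EM algorithm starts with an initial estimate $\theta^{(0)}$ and then iterates the E- and M-steps of Algorithm 1.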

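| Algorithm 1 EM algorithm |
|---|
| **Input:** initial parameter estimate $\theta^{(0)}$, error tolerance $\varepsilon$, maximum iteration number $n_{\max}$ |
| **Output:** parameter estimate $\hat{\theta}$ |
| 1: **repeat** |
| 2: E-step: compute $\mathbb{E}_{X\mid Y,\theta^{(n)}}\left[\log p(X,Y\mid\theta)\right]$ |
| 3: M-step: $\theta^{(n+1)} = \arg\max_{\theta}\,\mathbb{E}_{X\mid Y,\theta^{(n)}}\left[\log p(X,Y\mid\theta)\right]$ |
| 4: **until** $\lVert\theta^{(n+1)}-\theta^{(n)}\rVert < \varepsilon$ or $n = n_{\max}$; **return** $\hat{\theta} = \theta^{(n+1)}$ |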

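Let the state to be estimated be $x_k$ and the measurement be $y_k$, with the probability distributions

$$
p(x_k\mid x_{k-1}) = \mathcal{N}\left(x_k;\,Ax_{k-1},\,Q\right),\qquad p(y_k\mid x_k) = \mathcal{N}\left(y_k;\,Cx_k,\,R\right).
$$

Writing these Gaussian distributions in exponential form, we obtain

$$
p(x_k\mid x_{k-1}) = (2\pi)^{-u/2}\lvert Q\rvert^{-1/2}\exp\!\Big(-\tfrac{1}{2}\left(x_k-Ax_{k-1}\right)^{\top}Q^{-1}\left(x_k-Ax_{k-1}\right)\Big),
$$

$$
p(y_k\mid x_k) = (2\pi)^{-v/2}\lvert R\rvert^{-1/2}\exp\!\Big(-\tfrac{1}{2}\left(y_k-Cx_k\right)^{\top}R^{-1}\left(y_k-Cx_k\right)\Big),
$$

where $u$ and $v$ are the dimensions of the state and measurement vectors, respectively, so that $A$ is $u\times u$ and $C$ is $v\times u$.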

Under the assumptions that the states are Markov and the measurements are conditionally independent, the current state depends only on the previous state, and the current observation depends only on the current state. Under these assumptions, the joint probability distribution over $N$ time steps is

$$
p(X,Y\mid\theta) = p(x_0)\prod_{k=1}^{N}p(x_k\mid x_{k-1})\prod_{k=1}^{N}p(y_k\mid x_k).
\tag{21}
$$

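Combining (21) and (15), substituting the probability density function of the hidden variable, $p(X\mid Y,\theta^{(n)})$, into Jensen's inequality, and omitting the terms that do not depend on $A$, $C$, $Q$, and $R$, we obtain the expected form

$$
\mathbb{E}\left[\log p(X,Y\mid\theta)\right] = -\frac{N}{2}\log\lvert Q\rvert - \frac{1}{2}\sum_{k=1}^{N}\mathbb{E}\!\left[\left(x_k-Ax_{k-1}\right)^{\top}Q^{-1}\left(x_k-Ax_{k-1}\right)\right] - \frac{N}{2}\log\lvert R\rvert - \frac{1}{2}\sum_{k=1}^{N}\mathbb{E}\!\left[\left(y_k-Cx_k\right)^{\top}R^{-1}\left(y_k-Cx_k\right)\right] + \text{const},
\tag{22}
$$

where $\mathbb{E}[\cdot]$ is taken with respect to $p(X\mid Y,\theta^{(n)})$. Setting the partial derivatives of (22) with respect to each parameter to zero yields

$$
A = \Big(\sum_{k=1}^{N}\mathbb{E}\left[x_kx_{k-1}^{\top}\right]\Big)\Big(\sum_{k=1}^{N}\mathbb{E}\left[x_{k-1}x_{k-1}^{\top}\right]\Big)^{-1},\qquad
C = \Big(\sum_{k=1}^{N}y_k\,\mathbb{E}\left[x_k\right]^{\top}\Big)\Big(\sum_{k=1}^{N}\mathbb{E}\left[x_kx_k^{\top}\right]\Big)^{-1},
$$

$$
Q = \frac{1}{N}\sum_{k=1}^{N}\mathbb{E}\!\left[\left(x_k-Ax_{k-1}\right)\left(x_k-Ax_{k-1}\right)^{\top}\right],\qquad
R = \frac{1}{N}\sum_{k=1}^{N}\mathbb{E}\!\left[\left(y_k-Cx_k\right)\left(y_k-Cx_k\right)^{\top}\right].
\tag{23}
$$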
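Algorithm 1, together with the closed-form M-step obtained by setting the partial derivatives of (22) to zero, can be sketched as follows. This is a textbook EM for a linear Gaussian state-space model, not the authors' code: the E-step uses a Kalman filter followed by a Rauch-Tung-Striebel (RTS) smoother to obtain the required expectations, `mu0` and `S0` are held fixed, the stopping rule checks the largest parameter change as in Algorithm 1, and the data-generating values are illustrative. Because each M-step maximizes the expected log-likelihood exactly, the observed-data log-likelihood recorded in `lls` should never decrease.

```python
import numpy as np

def e_step(ys, A, C, Q, R, mu0, S0):
    """Kalman filter + RTS smoother for Eq. (1); index 0 is x_0, ys[k - 1] is y_k.

    Returns smoothed means ms and covariances Ps of x_0..x_N, lag-one covariances
    Pc[k] = Cov(x_k, x_{k-1} | Y) for k >= 1, and the log-likelihood log p(Y | theta).
    """
    N, n = len(ys), len(mu0)
    mf, Pf = np.zeros((N + 1, n)), np.zeros((N + 1, n, n))   # filtered moments
    mp, Pp = np.zeros((N + 1, n)), np.zeros((N + 1, n, n))   # predicted moments
    mf[0], Pf[0], ll = mu0, S0, 0.0
    for k in range(1, N + 1):                                 # forward pass
        mp[k], Pp[k] = A @ mf[k - 1], A @ Pf[k - 1] @ A.T + Q
        S = C @ Pp[k] @ C.T + R                               # innovation covariance
        e = ys[k - 1] - C @ mp[k]                             # innovation
        K = Pp[k] @ C.T @ np.linalg.inv(S)
        mf[k], Pf[k] = mp[k] + K @ e, (np.eye(n) - K @ C) @ Pp[k]
        ll -= 0.5 * (np.linalg.slogdet(2 * np.pi * S)[1] + e @ np.linalg.solve(S, e))
    ms, Ps, Pc = mf.copy(), Pf.copy(), np.zeros((N + 1, n, n))
    for k in range(N - 1, -1, -1):                            # backward (RTS) pass
        J = Pf[k] @ A.T @ np.linalg.inv(Pp[k + 1])            # smoother gain
        ms[k] = mf[k] + J @ (ms[k + 1] - mp[k + 1])
        Ps[k] = Pf[k] + J @ (Ps[k + 1] - Pp[k + 1]) @ J.T
        Pc[k + 1] = Ps[k + 1] @ J.T
    return ms, Ps, Pc, ll

def m_step(ys, ms, Ps, Pc):
    """Closed-form maximizers of the expected log-likelihood (22)."""
    N = len(ys)
    Exx = Ps + np.einsum("ki,kj->kij", ms, ms)                     # E[x_k x_k^T], k = 0..N
    Exx_lag = Pc[1:] + np.einsum("ki,kj->kij", ms[1:], ms[:-1])    # E[x_k x_{k-1}^T]
    S11, S00, S10 = Exx[1:].sum(0), Exx[:-1].sum(0), Exx_lag.sum(0)
    A = S10 @ np.linalg.inv(S00)
    Q = (S11 - A @ S10.T - S10 @ A.T + A @ S00 @ A.T) / N
    Syx = ys.T @ ms[1:]                                            # sum_k y_k E[x_k]^T
    C = Syx @ np.linalg.inv(S11)
    R = (ys.T @ ys - C @ Syx.T - Syx @ C.T + C @ S11 @ C.T) / N
    return A, C, (Q + Q.T) / 2, (R + R.T) / 2                      # symmetrize round-off

def em_lds(ys, A, C, Q, R, mu0, S0, max_iter=50, tol=1e-5):
    """Algorithm 1: alternate E- and M-steps until the parameters stop changing."""
    lls = []
    for _ in range(max_iter):
        ms, Ps, Pc, ll = e_step(ys, A, C, Q, R, mu0, S0)
        lls.append(ll)                                             # log p(Y | theta^(n))
        new = m_step(ys, ms, Ps, Pc)
        delta = max(np.abs(a - b).max() for a, b in zip(new, (A, C, Q, R)))
        A, C, Q, R = new
        if delta < tol:
            break
    return A, C, Q, R, lls

# Usage: scalar AR(1) state observed in noise (true A = 0.9, C = 1, Q = R = 1; illustrative).
rng = np.random.default_rng(1)
N = 300
x, ys = np.zeros(1), np.zeros((N, 1))
for k in range(N):
    x = 0.9 * x + rng.normal(0.0, 1.0, 1)
    ys[k] = x + rng.normal(0.0, 1.0, 1)
A, C, Q, R, lls = em_lds(ys, A=0.5 * np.eye(1), C=np.eye(1), Q=np.eye(1), R=2.0 * np.eye(1),
                         mu0=np.zeros(1), S0=np.eye(1))
```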

Using the offline training system learner described in Section 2.1, we can capture the long-term dependencies and latent correlations in the data, which helps estimate the parameters of the filter model. Based on the linear least squares method, the observations and the state estimates output by the neural network are arranged into linear regression models, and the state-transition matrix $A$ and observation matrix $C$ are obtained from the covariance matrices of the estimates:

$$
A = \Big(\sum_{k=1}^{N}\hat{x}_k\hat{x}_{k-1}^{\top}\Big)\Big(\sum_{k=1}^{N}\hat{x}_{k-1}\hat{x}_{k-1}^{\top}\Big)^{-1},
\tag{24}
$$

$$
C = \Big(\sum_{k=1}^{N}y_k\hat{x}_k^{\top}\Big)\Big(\sum_{k=1}^{N}\hat{x}_k\hat{x}_k^{\top}\Big)^{-1},
\tag{25}
$$

where $\hat{x}_k$ denotes the estimate of the filter at step $k$.

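Then, based on $\hat{x}_k$, the covariance matrix of the state noise $Q$ is computed from a statistical model of the state-estimate errors, and the covariance matrix of the observation noise $R$ is computed from the covariance matrix of the measurement residuals:

$$
Q = \frac{1}{N}\sum_{k=1}^{N}\left(\hat{x}_k - A\hat{x}_{k-1}\right)\left(\hat{x}_k - A\hat{x}_{k-1}\right)^{\top},
\tag{26}
$$

$$
R = \frac{1}{N}\sum_{k=1}^{N}\left(y_k - C\hat{x}_k\right)\left(y_k - C\hat{x}_k\right)^{\top}.
\tag{27}
$$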

The Kalman filter then outputs the states recursively, yielding the estimated trajectory. In the Kalman filter, $A$ is the state-transition matrix calculated using (24), $C$ is the measurement matrix calculated using (25), $Q$ is the state noise covariance calculated using (26), and $R$ is the measurement noise covariance calculated using (27).
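The least-squares estimates (24) to (27) reduce to a few matrix products once a sequence of state estimates $\hat{x}_0,\ldots,\hat{x}_N$ and measurements $y_1,\ldots,y_N$ is available. In the sketch below, the state sequence is simulated from a known model and stands in for the network or filter output; this is an assumption made only to check that the estimator recovers known parameters. The $1/N$ normalization is also an assumption and may differ from the authors' choice.

```python
import numpy as np

def estimate_parameters(x_hat, ys):
    """Least-squares estimates of A, C, Q, R from state estimates and measurements.

    x_hat: (N + 1, n) state estimates x_0..x_N; ys: (N, m) measurements y_1..y_N.
    """
    X0, X1 = x_hat[:-1], x_hat[1:]                  # x_{k-1} and x_k for k = 1..N
    N = len(X1)
    A = (X1.T @ X0) @ np.linalg.inv(X0.T @ X0)      # regress x_k on x_{k-1}
    C = (ys.T @ X1) @ np.linalg.inv(X1.T @ X1)      # regress y_k on x_k
    W = X1 - X0 @ A.T                               # state residuals x_k - A x_{k-1}
    V = ys - X1 @ C.T                               # measurement residuals y_k - C x_k
    return A, C, W.T @ W / N, V.T @ V / N

rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [0.0, 0.8]])
C_true = np.array([[1.0, 0.0]])
N = 20000
x_hat = np.zeros((N + 1, 2))
for k in range(1, N + 1):
    x_hat[k] = A_true @ x_hat[k - 1] + rng.normal(0.0, np.sqrt(0.1), 2)   # Q = 0.1 I
ys = x_hat[1:] @ C_true.T + rng.normal(0.0, np.sqrt(0.5), (N, 1))        # R = 0.5
A, C, Q, R = estimate_parameters(x_hat, ys)
```

With real network outputs, $\hat{x}_k$ contains estimation error, so (26) absorbs part of that error into $Q$; the estimates are best refreshed over a sliding window as new data arrive.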