A Normalized Absolute Values Adaptive Evaluation Function of Image Clarity

Abstract

1. Introduction

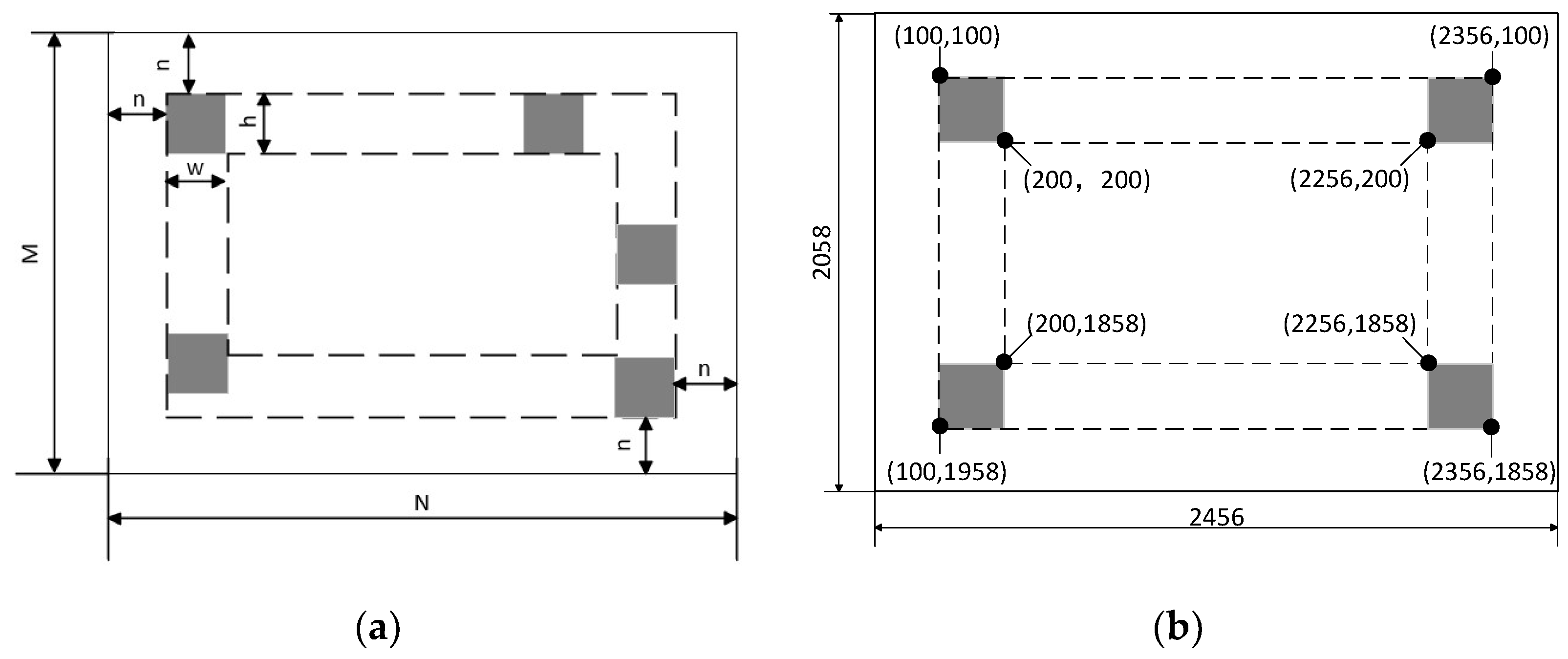

2. Principle of the Clarity Evaluation Function

2.1. Classical Clarity Evaluation Functions
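This excerpt does not reproduce the specific classical functions the authors compare. As an illustrative assumption, the sketch below implements three widely used gradient-based clarity (sharpness) measures on a grayscale image stored as nested Python lists: the Brenner gradient, the energy of gradient (EOG), and the sum-modulus-difference (SMD). It is not the authors' implementation. Published variants differ in difference direction and boundary handling, and the test images `sharp` and `blurred` are hypothetical.

```python
from typing import List

# Assumed representation: grayscale image as rows of pixel intensities.
Image = List[List[float]]


def brenner(img: Image) -> float:
    """Brenner gradient: squared difference between pixels two columns apart."""
    total = 0.0
    for row in img:
        for x in range(len(row) - 2):
            d = row[x + 2] - row[x]
            total += d * d
    return total


def energy_of_gradient(img: Image) -> float:
    """Energy of gradient (EOG): squared forward differences in x and y."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = img[y][x + 1] - img[y][x]
            dy = img[y + 1][x] - img[y][x]
            total += dx * dx + dy * dy
    return total


def smd(img: Image) -> float:
    """Sum-modulus-difference (SMD): absolute forward differences in x and y."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            total += abs(img[y][x + 1] - img[y][x]) + abs(img[y + 1][x] - img[y][x])
    return total


# Hypothetical test images: a thin bright line, and the same line box-blurred
# over three pixels (total intensity per row is preserved: 255 = 3 * 85).
sharp = [[0, 0, 255, 0, 0, 0] for _ in range(4)]
blurred = [[0, 85, 85, 85, 0, 0] for _ in range(4)]

for name, fn in [("Brenner", brenner), ("EOG", energy_of_gradient), ("SMD", smd)]:
    print(name, fn(sharp), fn(blurred))
```

In this toy case all three scores are larger for the sharp line than for the blurred one. Contrast-detection autofocus relies on this property: the lens position that maximizes the score is taken as the in-focus position.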

2.2. NAVA Function

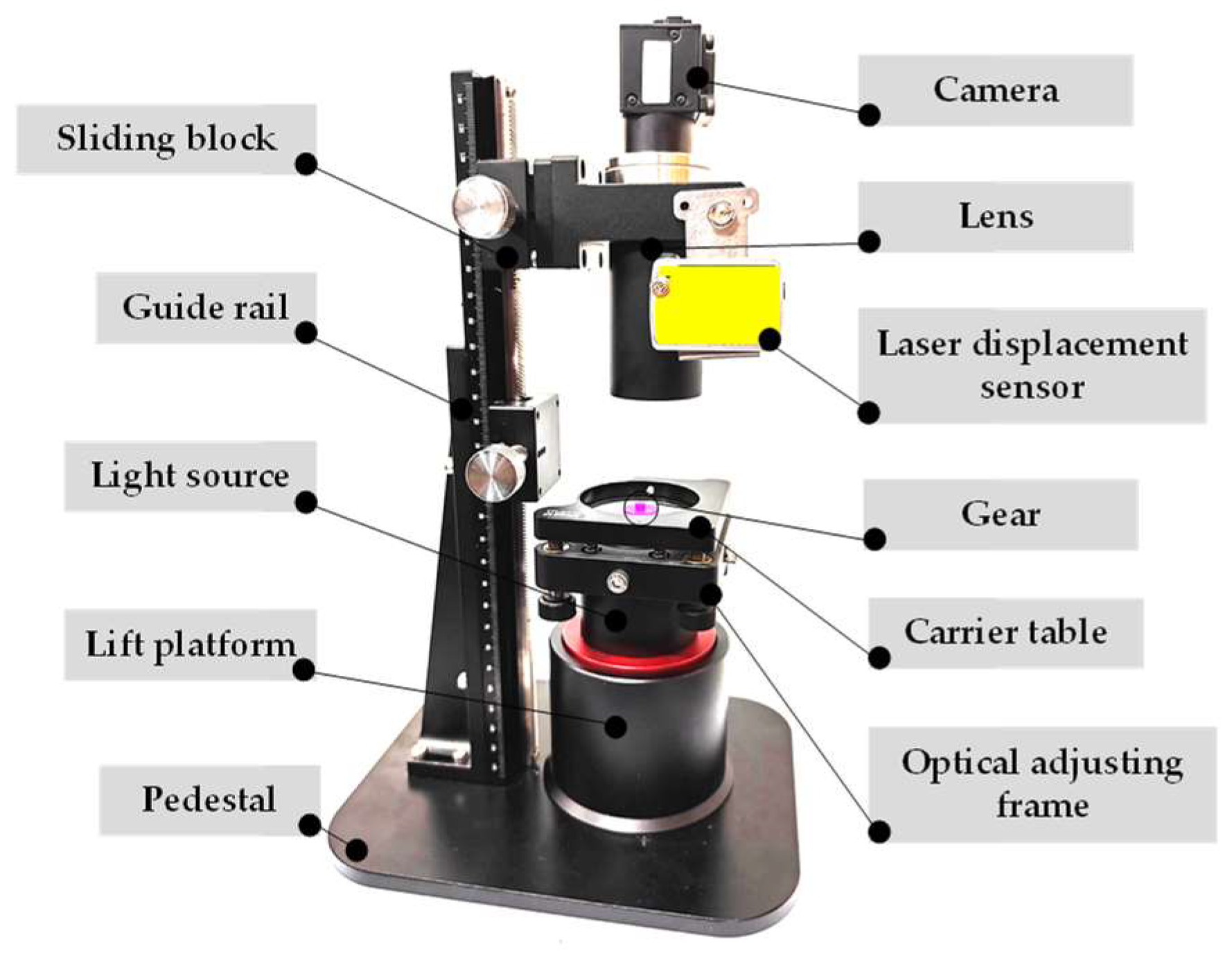

3. Testing and Analysis

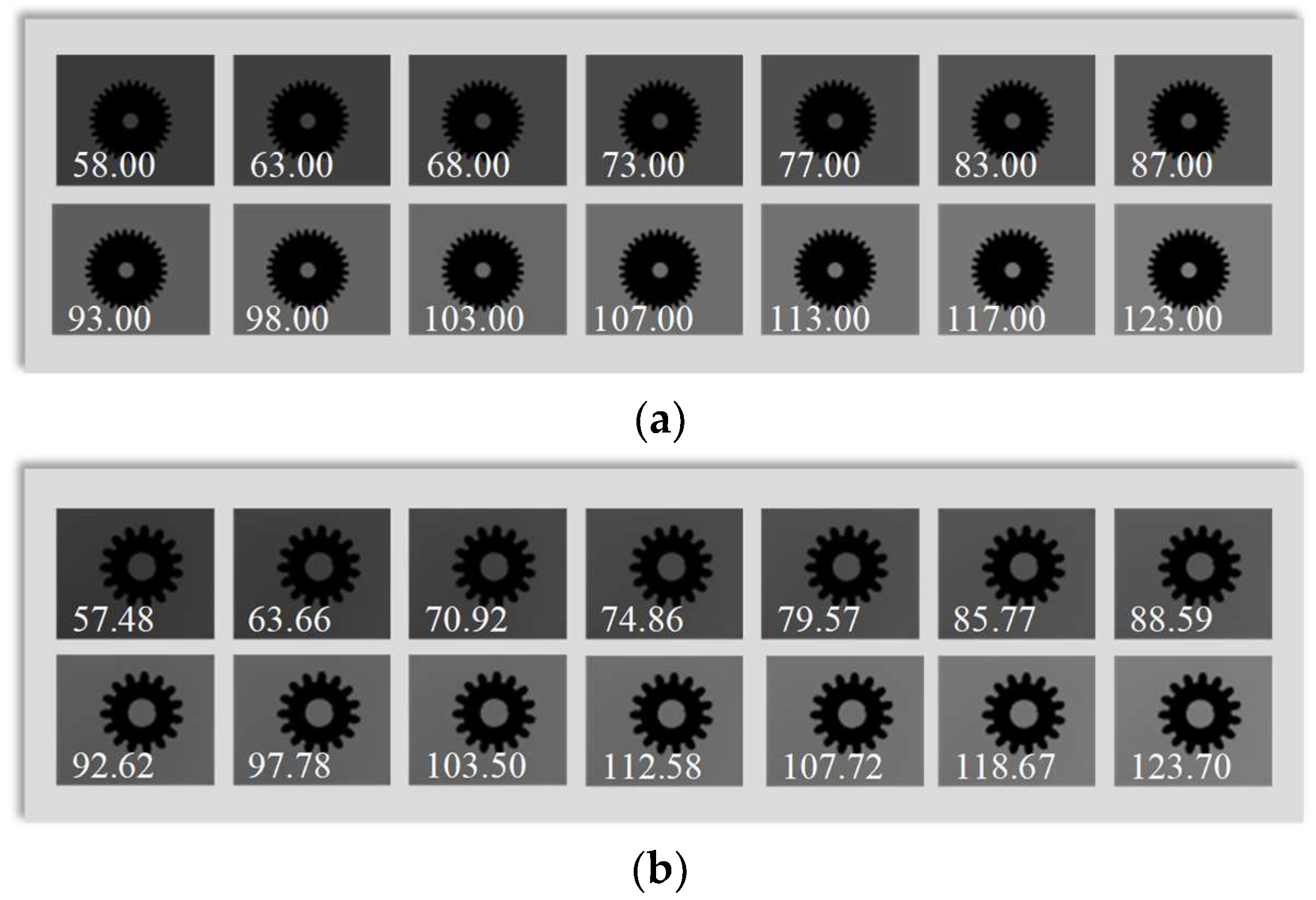

3.1. Experiment on Changing Background Brightness

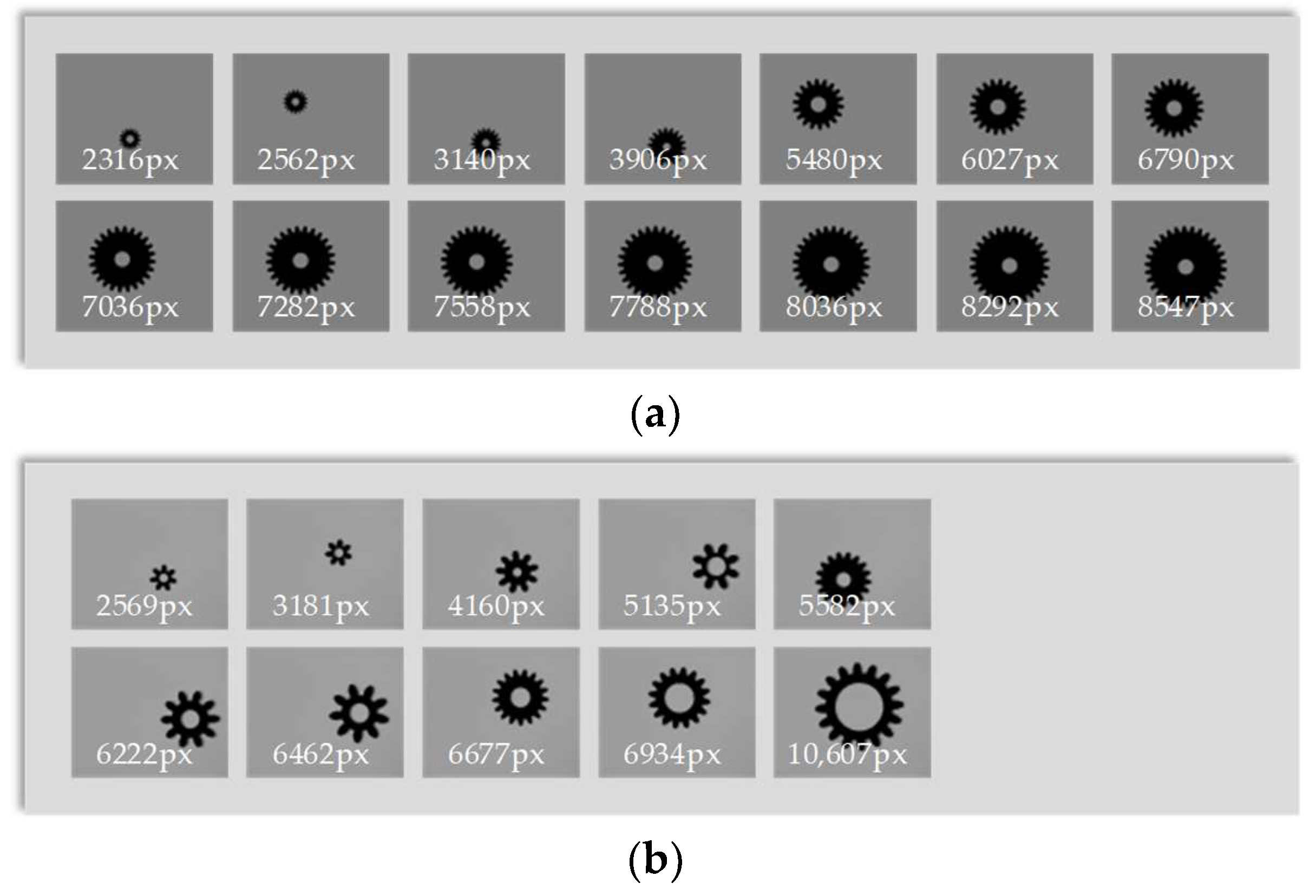

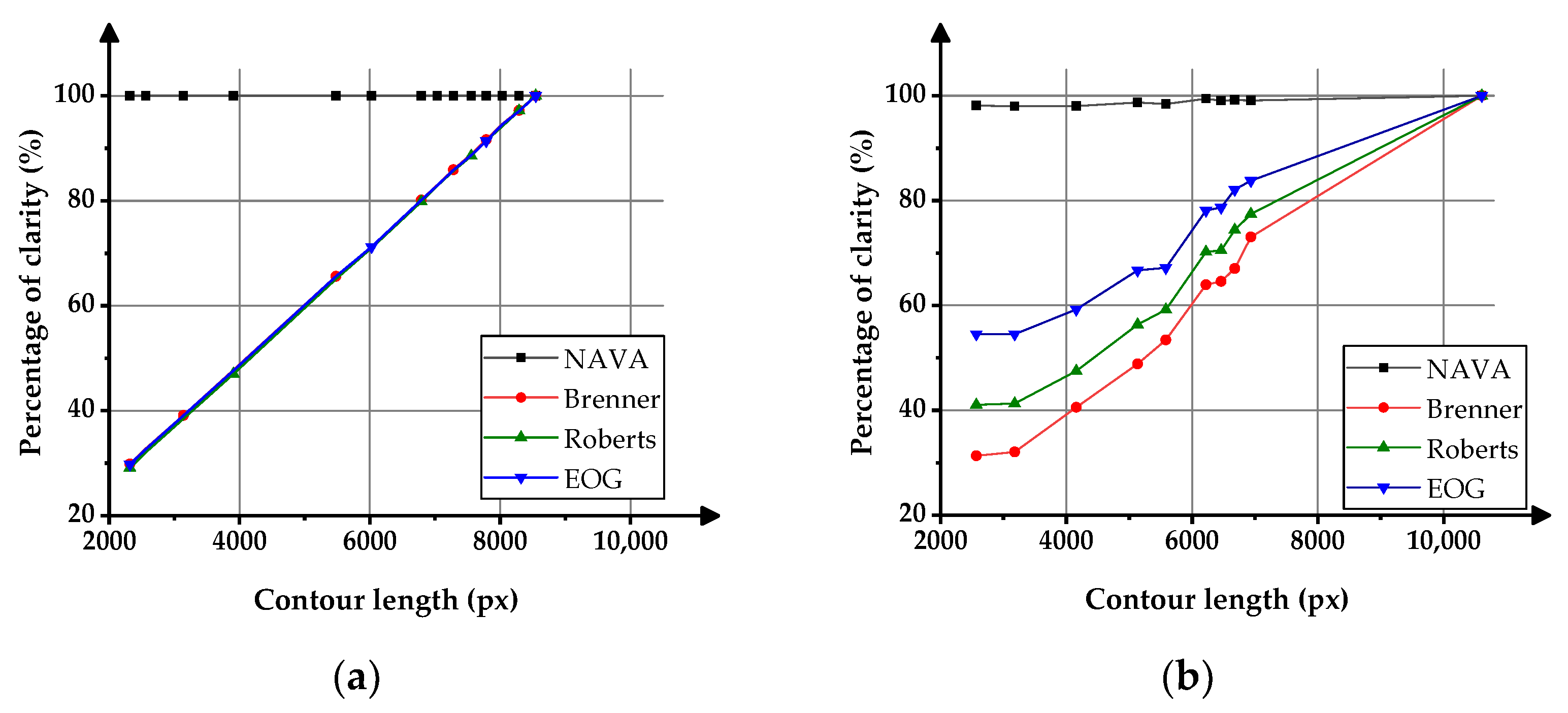

3.2. Experiment on Changing the Contour Length of the Measured Object

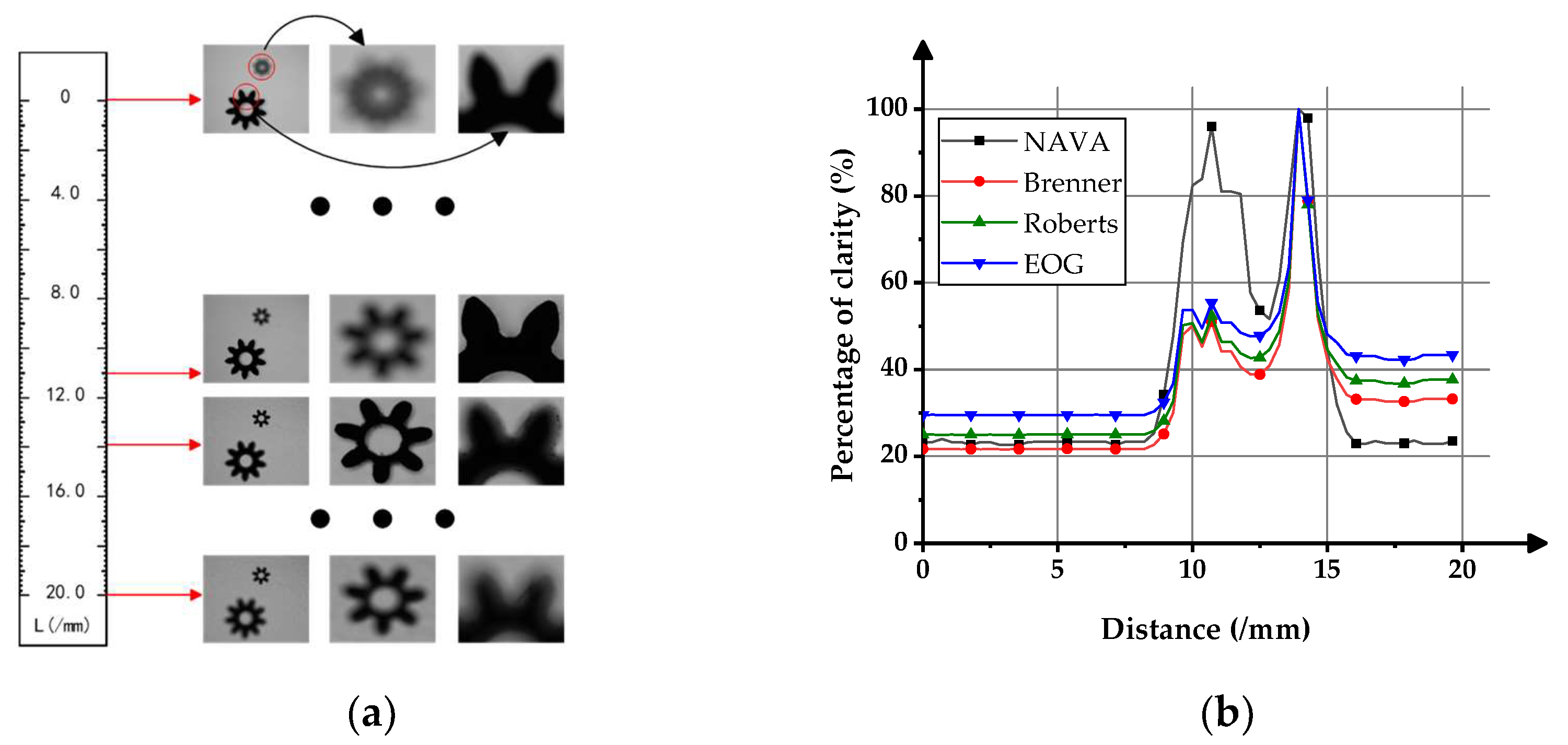

3.3. Experiment on Two Objects with Different Object Distances

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Yao, T.; Liu, M.; Zheng, K.; Zhao, C.; Xiao, L.; Zhu, D. A Normalized Absolute Values Adaptive Evaluation Function of Image Clarity. Sensors 2023, 23, 9017. https://doi.org/10.3390/s23229017
