Low-Pass Image Filtering to Achieve Adversarial Robustness

Abstract

1. Introduction

- Defensive distillation [24] involves training two or more networks; it is effective against some unspecified threats but weak against fine-tuned high-frequency attacks;

- Denoisers are used mostly for visual image enhancement or upscaling and have not been proven effective against gradient-based attacks; little quantitative evaluation of them is available [26];

- An approach that uses a generator to synthesize images has been proposed [37], but its authors use a CNN model and datasets that are not comparable with those considered here;

- Generative adversarial networks [27] are effective for detecting adversarial noise, but the discriminator (a key component of a GAN) is itself vulnerable to the same adversarial attacks;

- Low-level transformations [38] are simple and effective techniques; however, the available results are not directly comparable, since they were obtained with a different CNN model and datasets.
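Low-pass filtering, named in this article's title, is one such low-level transformation. The minimal sketch below makes some illustrative assumptions that are not taken from the article:

- the filter is a spatial k × k mean (box) filter;
- it is applied to a single-channel image stored as nested Python lists;
- border pixels are replicated at the edges;
- the function is named `box_low_pass`.

Averaging each pixel with its neighbours attenuates rapid pixel-to-pixel variation, which is the high-frequency content of the image.

```python
def box_low_pass(image, k):
    """Return a k x k mean-filtered copy of a 2-D single-channel image.

    Illustrative sketch (assumed box filter, not the article's exact filter).
    image: list of equal-length rows of floats; k: window size >= 1.
    Pixels outside the image are replaced by the nearest edge pixel.
    For even k the window covers k // 2 pixels before the centre and
    k // 2 - 1 pixels after it.
    """
    if k < 1:
        raise ValueError("window size k must be >= 1")
    h, w = len(image), len(image[0])
    before = k // 2
    after = k - 1 - before
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-before, after + 1):
                yy = min(max(y + dy, 0), h - 1)  # replicate top/bottom rows
                row = image[yy]
                for dx in range(-before, after + 1):
                    xx = min(max(x + dx, 0), w - 1)  # replicate left/right columns
                    total += row[xx]
            out[y][x] = total / (k * k)  # mean over the k x k window
    return out


# One-pixel checkerboard: the highest spatial frequency a pixel grid can hold.
checkerboard = [[float((x + y) % 2) for x in range(5)] for y in range(5)]
smoothed = box_low_pass(checkerboard, 2)
for row in smoothed:
    print(row)
```

With k = 2 the checkerboard becomes a uniform 0.5 everywhere except the top-left corner pixel. That pixel's window falls entirely on replicated copies of a single 0-valued pixel.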

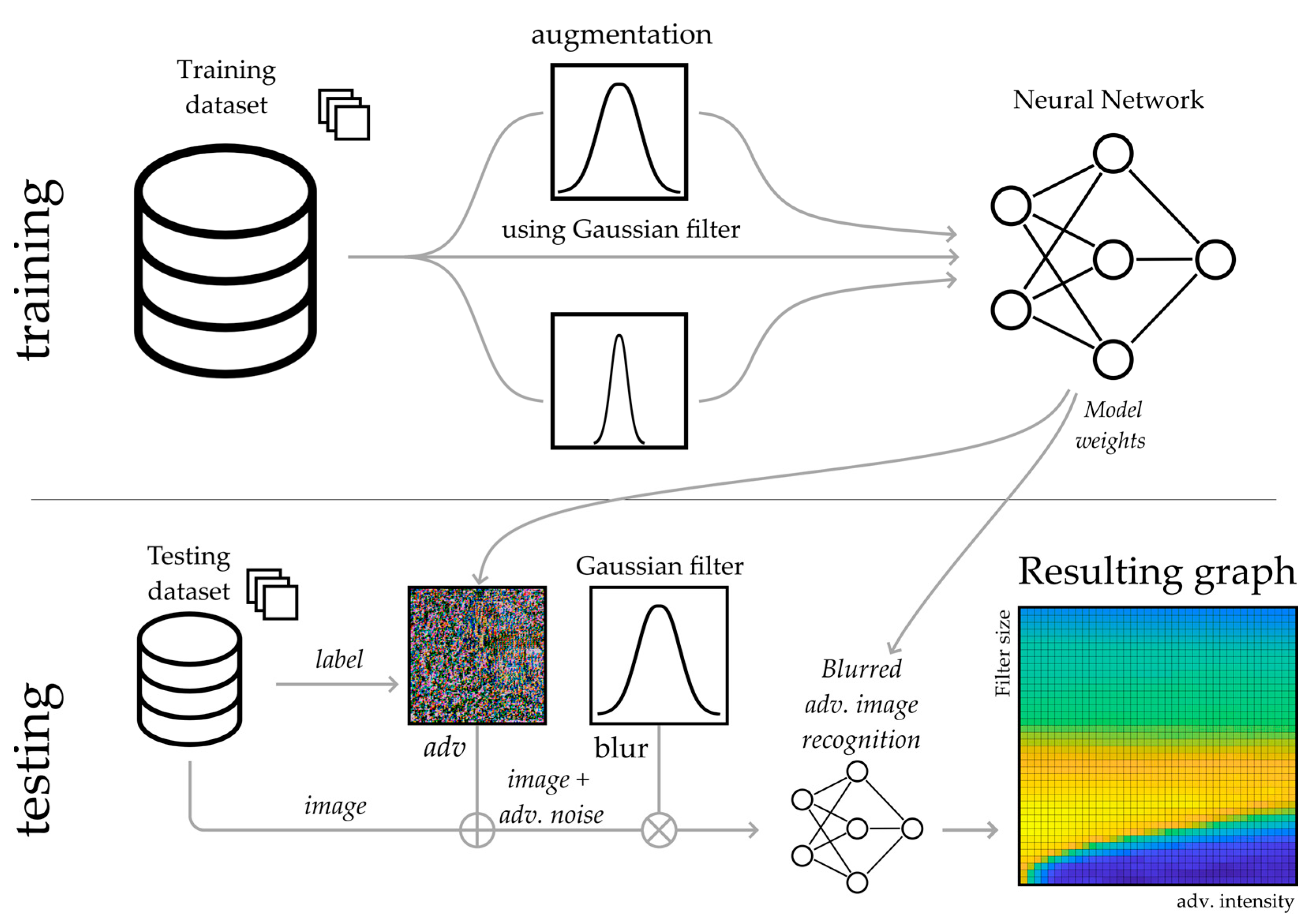

2. Materials and Methods

2.1. Datasets

2.2. Convolutional Nets

- A simplified high-speed CNN called SimConvNet, defined below;

- The commonly used EfficientNetB3 [43].

2.3. Adversarial Attacks

2.4. The Theoretical Approach to the Problem Solution

2.5. The Proposed Technique

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, F.; Lin, G.; Shen, C. CRF Learning with CNN Features for Image Segmentation. Pattern Recognit. 2015, 48, 2983–2992.

- Yang, L.; Liu, R.; Zhang, D.; Zhang, L. Deep Location-Specific Tracking. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 19 October 2017; pp. 1309–1317.

- Ren, Y.; Yu, X.; Chen, J.; Li, T.H.; Li, G. Deep Image Spatial Transformation for Person Image Generation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 7687–7696.

- Borji, A. Generated Faces in the Wild: Quantitative Comparison of Stable Diffusion, Midjourney and DALL-E 2. arXiv 2022.

- Jasim, H.A.; Ahmed, S.R.; Ibrahim, A.A.; Duru, A.D. Classify Bird Species Audio by Augment Convolutional Neural Network. In Proceedings of the 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 9–11 June 2022; pp. 1–6.

- Mustaqeem; Kwon, S. A CNN-Assisted Enhanced Audio Signal Processing for Speech Emotion Recognition. Sensors 2019, 20, 183.

- Huang, H.; Wang, Y.; Erfani, S.M.; Gu, Q.; Bailey, J.; Ma, X. Exploring Architectural Ingredients of Adversarially Robust Deep Neural Networks. In Proceedings of the Thirty-Fifth Annual Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; Volume 34, pp. 5545–5559.

- Wu, B.; Chen, J.; Cai, D.; He, X.; Gu, Q. Do Wider Neural Networks Really Help Adversarial Robustness? In Proceedings of the Thirty-Fifth Annual Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; Volume 34, pp. 7054–7067.

- Akrout, M. On the Adversarial Robustness of Neural Networks without Weight Transport. arXiv 2019.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing Properties of Neural Networks. arXiv 2014.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014.

- Moosavi-Dezfooli, S.-M.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

- Su, J.; Vargas, D.V.; Sakurai, K. One Pixel Attack for Fooling Deep Neural Networks. IEEE Trans. Evol. Computat. 2019, 23, 828–841.

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The Limitations of Deep Learning in Adversarial Settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrucken, Germany, 21–24 March 2016; pp. 372–387.

- Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout Networks. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. 1319–1327.

- Hu, Y.; Kuang, W.; Qin, Z.; Li, K.; Zhang, J.; Gao, Y.; Li, W.; Li, K. Artificial Intelligence Security: Threats and Countermeasures. ACM Comput. Surv. 2023, 55, 1–36.

- Chakraborty, A.; Alam, M.; Dey, V.; Chattopadhyay, A.; Mukhopadhyay, D. A Survey on Adversarial Attacks and Defences. CAAI Trans Intel Tech 2021, 6, 25–45.

- Xu, H.; Ma, Y.; Liu, H.-C.; Deb, D.; Liu, H.; Tang, J.-L.; Jain, A.K. Adversarial Attacks and Defenses in Images, Graphs and Text: A Review. Int. J. Autom. Comput. 2020, 17, 151–178.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Pereira, F. Analysis of Representations for Domain Adaptation. In Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems (NIPS 2006), Vancouver, BC, Canada, 4–7 December 2006; Volume 19.

- Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. PMLR 2018, 80, 284–293.

- Hendrycks, D.; Zhao, K.; Basart, S.; Steinhardt, J.; Song, D. Natural Adversarial Examples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15262–15271.

- Shaham, U.; Yamada, Y.; Negahban, S. Understanding Adversarial Training: Increasing Local Stability of Supervised Models through Robust Optimization. Neurocomputing 2018, 307, 195–204.

- Samangouei, P.; Kabkab, M.; Chellappa, R. Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015.

- Xu, W.; Evans, D.; Qi, Y. Feature Squeezing: Detecting Adversarial Examples in Deep Neural Networks. In Proceedings of the 2018 Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018.

- Liao, F.; Liang, M.; Dong, Y.; Pang, T.; Hu, X.; Zhu, J. Defense Against Adversarial Attacks Using High-Level Representation Guided Denoiser. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Creswell, A.; Bharath, A.A. Denoising Adversarial Autoencoders. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 968–984.

- Rahimi, N.; Maynor, J.; Gupta, B. Adversarial Machine Learning: Difficulties in Applying Machine Learning to Existing Cybersecurity Systems. In Proceedings of the 35th International Conference on Computers and Their Applications, CATA 2020, San Francisco, CA, USA, 23–25 March 2020; Volume 69, pp. 40–47.

- Xu, H.; Li, Y.; Jin, W.; Tang, J. Adversarial Attacks and Defenses: Frontiers, Advances and Practice. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, 6–10 July 2020; pp. 3541–3542.

- Rebuffi, S.-A.; Gowal, S.; Calian, D.A.; Stimberg, F.; Wiles, O.; Mann, T. Fixing Data Augmentation to Improve Adversarial Robustness. arXiv 2021.

- Wang, D.; Jin, W.; Wu, Y.; Khan, A. Improving Global Adversarial Robustness Generalization with Adversarially Trained GAN. arXiv 2021.

- Zhang, H.; Chen, H.; Song, Z.; Boning, D.; Dhillon, I.S.; Hsieh, C.-J. The Limitations of Adversarial Training and the Blind-Spot Attack. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Lee, H.; Kang, S.; Chung, K. Robust Data Augmentation Generative Adversarial Network for Object Detection. Sensors 2022, 23, 157.

- Xiao, L.; Xu, J.; Zhao, D.; Shang, E.; Zhu, Q.; Dai, B. Adversarial and Random Transformations for Robust Domain Adaptation and Generalization. Sensors 2023, 23, 5273.

- Ross, A.; Doshi-Velez, F. Improving the Adversarial Robustness and Interpretability of Deep Neural Networks by Regularizing Their Input Gradients. Proc. AAAI Conf. Artif. Intell. 2018, 32, 1660–1669.

- Ross, A.S.; Hughes, M.C.; Doshi-Velez, F. Right for the Right Reasons: Training Differentiable Models by Constraining Their Explanations. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2662–2670.

- Li, H.; Zeng, Y.; Li, G.; Lin, L.; Yu, Y. Online Alternate Generator Against Adversarial Attacks. IEEE Trans. Image Process. 2020, 29, 9305–9315.

- Yin, Z.; Wang, H.; Wang, J.; Tang, J.; Wang, W. Defense against Adversarial Attacks by Low-level Image Transformations. Int. J. Intell. Syst. 2020, 35, 1453–1466.

- Ito, K.; Xiong, K. Gaussian Filters for Nonlinear Filtering Problems. IEEE Trans. Automat. Contr. 2000, 45, 910–927.

- Blinchikoff, H.J.; Zverev, A.I. Filtering in the Time and Frequency Domains, revised ed.; SciTech Publishing: Raleigh, NC, USA, 2001; ISBN 978-1-884932-17-5.

- Ziyadinov, V.V.; Tereshonok, M.V. Neural Network Image Recognition Robustness with Different Augmentation Methods. In Proceedings of the 2022 Systems of Signal Synchronization, Generating and Processing in Telecommunications (SYNCHROINFO), Arkhangelsk, Russia, 29 June–1 July 2022; pp. 1–4.

- Ziyadinov, V.; Tereshonok, M. Noise Immunity and Robustness Study of Image Recognition Using a Convolutional Neural Network. Sensors 2022, 22, 1241.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; Volume 97, pp. 6105–6114.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009.

- Roy, P.; Ghosh, S.; Bhattacharya, S.; Pal, U. Effects of Degradations on Deep Neural Network Architectures. arXiv 2023.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Kaggle. Rock-Paper-Scissors Images. Available online: https://www.kaggle.com/drgfreeman/rockpaperscissors (accessed on 9 June 2023).

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv 2017.

- Tramèr, F.; Papernot, N.; Goodfellow, I.; Boneh, D.; McDaniel, P. The Space of Transferable Adversarial Examples. arXiv 2017.

- Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

- Chen, P.-Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.-J. ZOO: Zeroth Order Optimization Based Black-Box Attacks to Deep Neural Networks without Training Substitute Models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 15–26.

- Chen, J.; Jordan, M.I.; Wainwright, M.J. HopSkipJumpAttack: A Query-Efficient Decision-Based Attack. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–20 May 2020; pp. 1277–1294.

- Wang, J.; Yin, Z.; Hu, P.; Liu, A.; Tao, R.; Qin, H.; Liu, X.; Tao, D. Defensive Patches for Robust Recognition in the Physical World. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 2446–2455.

- Andriushchenko, M.; Croce, F.; Flammarion, N.; Hein, M. Square Attack: A Query-Efficient Black-Box Adversarial Attack via Random Search. In Proceedings of the 16th European Conference on Computer Vision, ECCV 2020, Glasgow, UK, 23–28 August 2020; Volume 12368, pp. 484–501, ISBN 978-3-030-58591-4.

- Wang, H.; Wu, X.; Huang, Z.; Xing, E.P. High-Frequency Component Helps Explain the Generalization of Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8681–8691.

- Bradley, A.; Skottun, B.C.; Ohzawa, I.; Sclar, G.; Freeman, R.D. Visual Orientation and Spatial Frequency Discrimination: A Comparison of Single Neurons and Behavior. J. Neurophysiol. 1987, 57, 755–772.

- Zhou, Y.; Hu, X.; Han, J.; Wang, L.; Duan, S. High Frequency Patterns Play a Key Role in the Generation of Adversarial Examples. Neurocomputing 2021, 459, 131–141.

- Zhang, Z.; Jung, C.; Liang, X. Adversarial Defense by Suppressing High-Frequency Components. arXiv 2019.

- Thang, D.D.; Matsui, T. Automated Detection System for Adversarial Examples with High-Frequency Noises Sieve. In Cyberspace Safety and Security; Vaidya, J., Zhang, X., Li, J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11982, pp. 348–362. ISBN 978-3-030-37336-8.

- Ziyadinov, V.V.; Tereshonok, M.V. Mathematical Models and Recognition Methods For Mobile Subscribers Mutual Placement. T-Comm 2021, 15, 49–56.

| CNN (Dataset) | FGSM Intensity | Accuracy without Filter | Accuracy with Filter | Optimal Low-Pass Filter Size | Accuracy Gain G |
|---|---|---|---|---|---|
| SimConvNet (Natural Dataset) | 5 | 0.206 | 0.913 | 10 | 9.1 |
| SimConvNet (Natural Dataset) | 10 | 0.206 | 0.9 | 10 | 7.9 |
| SimConvNet (Natural Dataset) | 20 | 0.1875 | 0.894 | 10 | 6.7 |
| SimConvNet (RPS) | 5 | 0.738 | 0.947 | 8 | 4.9 |
| SimConvNet (RPS) | 10 | 0.66 | 0.879 | 8 | 2.8 |
| SimConvNet (RPS) | 20 | 0.576 | 0.738 | 8 | 1.6 |
| EfficientNetB3 (ImageNet) | 15 | 0.699 | 0.781 | 7 | 1.4 |
| EfficientNetB3 (ImageNet) | 20 | 0.481 | 0.72 | 7 | 1.9 |
| EfficientNetB3 (Natural Dataset) | 5 | 0.977 | 1 | 7 | ∞ |
| EfficientNetB3 (Natural Dataset) | 10 | 0.814 | 0.996 | 7 | 46.5 |
| EfficientNetB3 (Natural Dataset) | 20 | 0.25 | 0.881 | 7 | 6.3 |
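The accuracy gain G in the table is not defined in this excerpt. Its values are consistent with G being a ratio of two error rates: the error under FGSM attack without filtering, divided by the error with the optimal low-pass filter. That is, G = (1 − A_without)/(1 − A_with), which gives G = ∞ when the filtered accuracy reaches 1.

This definition is inferred from the numbers, not quoted from the article. It reproduces every tabulated G to one decimal place except one row: SimConvNet (Natural Dataset) at FGSM intensity 20, where it gives 7.7 rather than 6.7.

A minimal sketch of the check, using a hypothetical function name `accuracy_gain`:

```python
import math


def accuracy_gain(acc_without_filter, acc_with_filter):
    """Hypothetical accuracy gain G (definition inferred from the table).

    Assumed: G is the error rate under attack without filtering divided
    by the error rate under attack with the low-pass filter applied.
    Returns math.inf when the filtered error rate is exactly zero.
    """
    err_without = 1.0 - acc_without_filter
    err_with = 1.0 - acc_with_filter
    if err_with == 0.0:
        return math.inf
    return err_without / err_with


# (CNN and dataset, FGSM intensity, accuracy without filter, accuracy with filter),
# values copied from the table of results.
ROWS = [
    ("SimConvNet (Natural Dataset)", 5, 0.206, 0.913),
    ("SimConvNet (RPS)", 10, 0.66, 0.879),
    ("EfficientNetB3 (ImageNet)", 15, 0.699, 0.781),
    ("EfficientNetB3 (Natural Dataset)", 5, 0.977, 1.0),
    ("EfficientNetB3 (Natural Dataset)", 10, 0.814, 0.996),
]

for name, intensity, acc0, acc1 in ROWS:
    g = accuracy_gain(acc0, acc1)
    print(f"{name}, FGSM intensity {intensity}: G = {g:.1f}")
```

Because this reading of G divides by the residual error, it grows sharply as filtered accuracy approaches 1. That explains the large value (46.5) and the infinite value in the EfficientNetB3 (Natural Dataset) rows.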

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ziyadinov, V.; Tereshonok, M. Low-Pass Image Filtering to Achieve Adversarial Robustness. Sensors 2023, 23, 9032. https://doi.org/10.3390/s23229032
