This paper proposes that the accuracy of CNN-based automated cow body condition scoring can be improved by making use of multiple cameras viewing the cow from different angles and exposing the CNN models to different anatomical features compared to the simple top view used in most approaches.

3.1. Data Collection

This study made use of three Microsoft Kinect™ v2 cameras. These cameras can capture RGB, infrared, and depth images, but only the depth images were captured and used for this study. The RGB and infrared images were not used since the only useful information they provide in this use case is the outline of the cow, which can also be produced from a depth image. The depth sensor has a resolution of 512 × 424 pixels and can capture depth images at 30 fps with millimetre precision [26]. The depth images are saved in an array where each pixel value represents the distance from the camera in millimetres.

A camera mounting frame was needed to mount the three cameras and hold them steady while cows passed through the crush. The frame was built in order to be installed over the crush to prevent the cows from bumping the frame or injuring themselves.

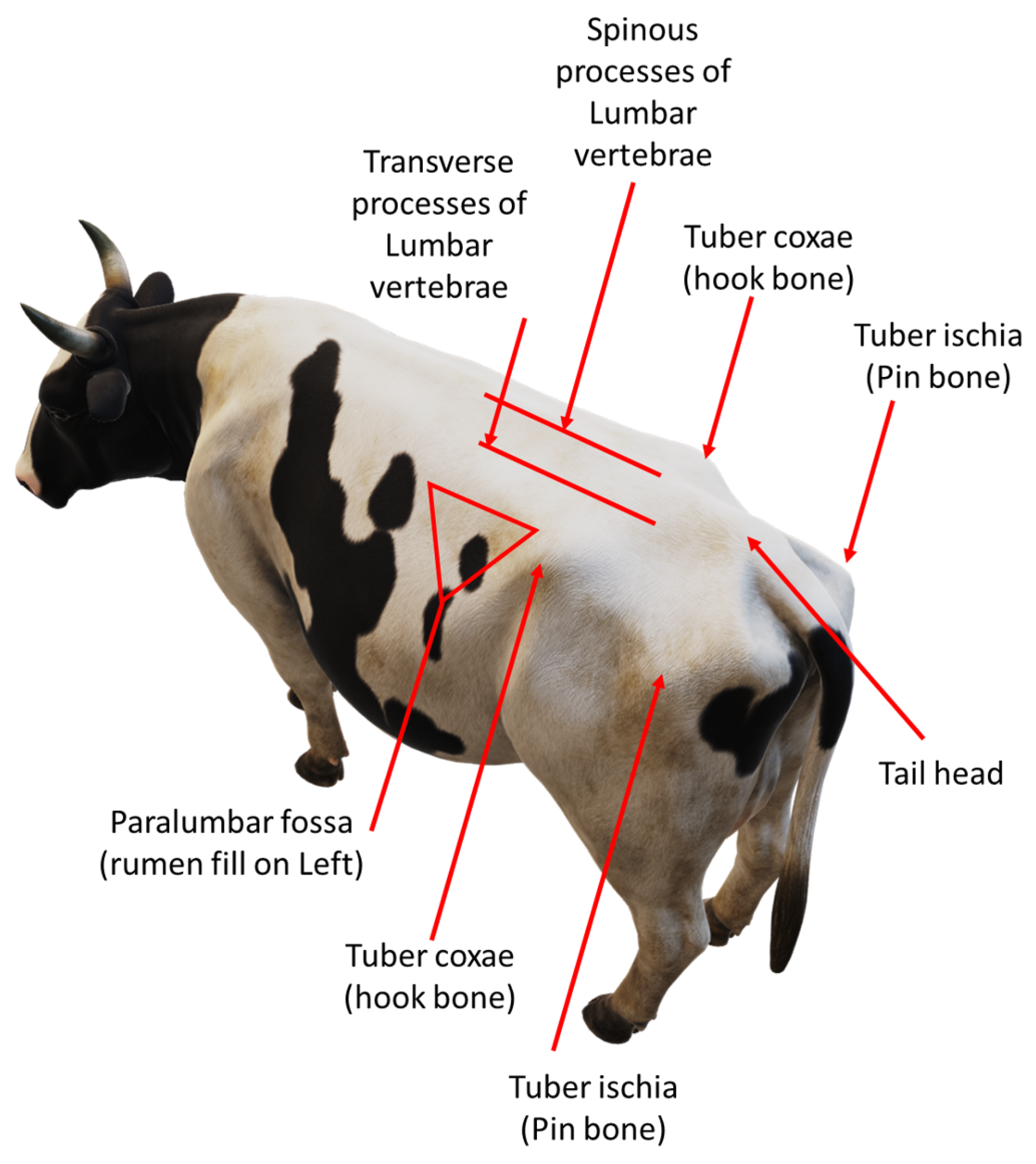

Figure 2a shows the frame with the cameras standing over the crush through which the cows would pass. The three camera angles chosen for this study are a top view, a rear view, and an angled view. These three camera angles captured the most important anatomical regions used for body condition scoring, which can be seen in Figure 1. The rear, top and side regions of the cow are used in body condition scoring and are widely accepted as the most important regions for visually scoring a cow.

Figure 2b shows the placement of the three Kinect cameras on the frame.

The system made use of three Jetson Nano™ (Nvidia, Santa Clara, CA, USA) single-board computers to connect to the cameras. This ensured that the system did not encounter any USB bandwidth limits and meant that there was individual control over the cameras. The single-board computers were connected to a laptop using an ethernet LAN with gigabit connections. This setup meant that start and stop commands could be sent to the single-board computers for when to capture depth images. After each stop command, the depth images were sent to the laptop and backed up on an external hard drive.

The data collection for this study took place at a single dairy farm in Rayton near Pretoria, South Africa. The farm has a large dairy parlour in which over six hundred Holstein cows from the host farm and surrounding farms are milked three times a day. This variety meant that there was a large variation in size and body condition score amongst the cows.

Two experienced scorers performed the manual body condition scoring on all the cows used in this study according to the five-point BCS system. The distribution of the BCS scores for the animals in this study is illustrated in Figure 3. The scores followed a similar distribution to related studies [7, 9, 13].

Class imbalance is a common problem in machine learning. It is particularly pronounced in automated body condition scoring research, where the majority of the training samples sit in the middle classes and only a small number of samples fall in the edge classes, as can be seen in Figure 3. There are numerous techniques available for dealing with imbalanced classes, such as oversampling under-represented classes, undersampling over-represented classes, cost-sensitive learning, and even transfer learning [27]. The technique chosen for this study was cost-sensitive learning, and the approach is explained in detail in Section 3.5.3.

Data from a total of 462 cows were used for this study. For the purposes of training the models, approximately 70% of the cows were used for training and 30% were used for testing. Since the Kinect cameras capture at 30 fps, multiple depth frames were captured for each cow that passed the cameras. This helped increase the amount of training data for the models. Since some cows passed quickly and some slowly underneath the camera frame, some cows ended up with a low number of frames captured and some had dozens of frames captured. In order to prevent a large difference in the amount of training data presented to the models from each camera, the number of depth frames per cow was limited to seven. This meant that each cow had at most seven depth frames captured per camera angle. The angled camera captured 2053 depth frames, the rear camera captured 2211 frames, and the top camera captured 2016 frames. A slightly different approach was used for splitting the training and testing data for the CNN models. The typical 70/30 split was performed on the cows and not the overall collected depth frames. In addition, only a single depth frame was used for each cow during testing. This approach was followed to prevent the models from producing the same results for each cow several times over. This meant that, while over 2000 frames were captured for training the models, only 137 unprocessed depth frames were used for testing. Due to data augmentation, which is explained later in this paper, this number was increased to 548.
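The cow-level split and per-cow frame cap described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_by_cow`, the choice of keeping the first seven frames, and the choice of the first frame as the single test frame are all assumptions, since the text does not state how frames were selected.

```python
import random
from collections import defaultdict


def split_by_cow(frames, train_fraction=0.7, max_frames_per_cow=7, seed=0):
    """Split (cow_id, frame) pairs for one camera at the cow level.

    Assumptions (not stated in the text): the first `max_frames_per_cow`
    frames of each cow are kept, and the first kept frame is the test frame.
    """
    per_cow = defaultdict(list)
    for cow_id, frame in frames:
        # Cap the number of frames kept for each cow.
        if len(per_cow[cow_id]) < max_frames_per_cow:
            per_cow[cow_id].append(frame)

    # Shuffle the cows, not the frames, so no cow appears in both sets.
    cow_ids = sorted(per_cow)
    random.Random(seed).shuffle(cow_ids)
    n_train = round(train_fraction * len(cow_ids))
    train_ids, test_ids = cow_ids[:n_train], cow_ids[n_train:]

    train = [(c, f) for c in train_ids for f in per_cow[c]]
    # Only a single frame per cow is used for testing.
    test = [(c, per_cow[c][0]) for c in test_ids]
    return train, test


# Toy data: 10 cows with 10 frames each (frames are just labels here).
toy_frames = [(cow, f"frame{i}") for cow in range(10) for i in range(10)]
train_set, test_set = split_by_cow(toy_frames)
```

Splitting on cows rather than frames keeps near-identical consecutive frames of the same animal from leaking between the training and testing sets.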

3.2. Image Pre-Processing

The Kinect cameras can accurately determine the distance to an object over a range of 0.5 m to 4.5 m [26]. While this range is well suited to this study, the depth images from the cameras contain large amounts of background noise in the form of background objects, which may hinder the performance of the CNN models. Before the depth images are processed and converted into the different CNN channels, the background noise must first be removed.

Figure 4a shows the raw depth image from the angled Kinect camera. The floor of the inspection pen can be seen behind the cow, and the crush can be seen surrounding the cow. A general approach to removing the background is background subtraction. However, Figure 4a, Figure 5a, and Figure 6a show that the cows often cover these background objects, making background subtraction difficult without removing portions of the cow. Considering the top camera view in Figure 6a, an above-average-sized cow occasionally passes the camera and may touch the top bars of the crush. Therefore, instead of using background subtraction, the depth images were distance-limited: any object further than a certain distance from the camera was removed from the image by zeroing all pixels whose depth value exceeded a threshold. The threshold distance was determined experimentally and was chosen to minimise background pixels whilst ensuring that all important anatomical features of the cow remained present in the image. Unfortunately, this method does not remove all the background pixels. In principle, the CNN models should learn that these pixels can be ignored and do not affect the BCS of the cows.
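The distance-limiting step can be sketched in a few lines of NumPy. The function name `distance_limit` and the 2000 mm threshold in the example are illustrative assumptions; the paper's thresholds were determined experimentally and are not given here.

```python
import numpy as np


def distance_limit(depth_mm, max_distance_mm):
    """Zero every pixel that is farther from the camera than the threshold.

    `depth_mm` is a 2-D array of distances in millimetres. A copy is
    returned so the raw frame is left untouched.
    """
    limited = depth_mm.copy()
    limited[limited > max_distance_mm] = 0
    return limited


# Tiny example frame; 2000 mm is a hypothetical threshold.
raw = np.array([[500, 1500], [2500, 0]], dtype=np.uint16)
limited = distance_limit(raw, 2000)
```

Any background object that is nearer than the threshold, such as the top bars of the crush, survives this step, which is consistent with the observation that not all background pixels are removed.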

Another pre-processing step which was performed is cropping. The depth images were cropped to a specific section of the image, which was found to be ideal for capturing the important regions of the cows as they pass the camera. This region of the image was also determined experimentally. For each camera, the cropped images have the same size and the same position in the image for every training and testing sample. The size and position of the cropped portion of the image are different for each camera, which is why Figure 4b is square, Figure 5b is a vertical rectangle, and Figure 6b is a horizontal rectangle.
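A fixed per-camera crop of this kind can be sketched as below. The crop boxes in `CROP_BOXES` are hypothetical values chosen only to reproduce the square, vertical, and horizontal shapes described above; the actual boxes used in the study are not given in the text.

```python
import numpy as np

# Hypothetical crop boxes as (top, left, height, width) in pixels.
# They only mimic the shapes described in the text.
CROP_BOXES = {
    "angled": (100, 150, 200, 200),  # square
    "rear": (50, 180, 300, 150),     # vertical rectangle
    "top": (120, 60, 150, 400),      # horizontal rectangle
}


def crop(depth, camera):
    """Crop a depth frame to the fixed region assigned to `camera`."""
    top, left, height, width = CROP_BOXES[camera]
    return depth[top:top + height, left:left + width]


# A Kinect v2 depth frame has 424 rows and 512 columns.
frame = np.zeros((424, 512), dtype=np.uint16)
shapes = {name: crop(frame, name).shape for name in CROP_BOXES}
```

Because the box is fixed per camera, every sample from a given camera has the same shape, which is what a CNN with a fixed input size requires.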

The angled camera perspective gives a clear view of the hook bones, pin bones, tail head, rumen-fill, and spinous processes. All of these anatomical regions are crucial to determining the body condition score of a cow. The result of the distance limiting and the cropping for the angled camera is shown in Figure 4b.

The raw depth image from the rear camera, shown in Figure 5a, has more background noise than that of the angled camera. The rear camera gives a clear view of the tail head, hook bones, pin bones and spinous processes of the cow. Figure 5b shows the result of the pre-processing of the rear camera depth image.

The top camera angle is the typical camera angle used by many automated body condition scoring studies. Figure 6b shows that most of the important anatomical regions for body condition scoring are visible from the top camera angle. Figure 6a shows that the raw depth image coming from the top camera also contains a substantial amount of background noise and objects. The top camera gives a clear view of the tail head, hook bones, pin bones, and spinous processes of the cow. Figure 6b shows the result of the pre-processing of the top camera depth image. The cow in Figure 6b is fairly small and thin, which is why there is so much space on either side of it.

Even though the three cameras observe similar anatomical regions of the cows, the angles at which these regions are observed are different. This allows the cameras to gather different visual information about each cow which is then presented to the CNN models.

3.3. CNN Channel Image Processing

After the depth images were pre-processed, each depth image was transformed to produce two additional images that would be used as additional input channels to the models. The objective is to provide the models with additional information in order for the CNN models to determine which information is most useful. The two transformations that were chosen are binarization and a first derivative filter.

The derivative transform has not been used in previous studies; however, Alvarez et al. [13] made use of the Fourier transform. The derivative transform was chosen with the idea that an over-conditioned cow would produce smaller gradient values, since fewer bones protrude and the cow is more rounded, whilst an under-conditioned cow would produce larger gradient values, since protruding bones lead to steeper gradients on the surface of the cow.

The outline of a cow has already been used in previous studies for BCS predictions [4] and has been shown to produce good results. However, the binarized depth image provides the same information in a more pronounced form than a single thin edge and is likely to be more useful to the CNN model; therefore, binarization is used instead of edge detection. Binarization is an image processing technique in which a set threshold value is used to decide whether a pixel should be set to one or zero. Any pixels further away than this threshold are set to 0 and any pixels closer than this threshold are set to 1. The threshold value used in this process was determined experimentally and was chosen such that a silhouette of the cow is formed from the depth image.
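A minimal sketch of the binarization step is given below. The function name `binarize` and the example threshold are assumptions. The sketch also assumes that zero-valued pixels (no reading, or background already zeroed during pre-processing) belong to the background, a detail the text does not spell out.

```python
import numpy as np


def binarize(depth_mm, threshold_mm):
    """Return a 0/1 silhouette mask from a depth frame.

    Pixels closer than `threshold_mm` become 1 and farther pixels become 0.
    Assumption: a depth of 0 means "no data / removed background" and is
    therefore also mapped to 0 rather than being treated as very close.
    """
    mask = (depth_mm > 0) & (depth_mm < threshold_mm)
    return mask.astype(np.uint8)


# Example with a hypothetical 1800 mm threshold.
depth = np.array([[0, 1200, 1900], [1500, 1700, 2400]], dtype=np.uint16)
silhouette = binarize(depth, 1800)
```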

The first derivative process calculates the first derivative of the depth image. This process produces an array with the contour gradients over the cow. The gradients were calculated from left to right. Once the gradients were calculated, any gradients above a threshold value were set to zero since some gradients were relatively large along the edge of objects in the depth image compared to the gradients across the body of the cow.
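The first derivative channel can be sketched with a simple left-to-right finite difference. This is an illustration under stated assumptions: the paper does not specify the exact filter kernel, whether the threshold applies to the signed gradient or its magnitude (magnitude is assumed here), or the threshold value; `horizontal_gradient` and `max_gradient` are hypothetical names.

```python
import numpy as np


def horizontal_gradient(depth_mm, max_gradient):
    """Left-to-right first difference of a depth frame.

    Gradients whose magnitude exceeds `max_gradient` are set to zero, which
    suppresses the large jumps at object edges while keeping the gentler
    surface gradients across the body of the cow.
    """
    depth = depth_mm.astype(np.float32)
    grad = np.zeros_like(depth)
    # Difference between each pixel and its left neighbour.
    grad[:, 1:] = np.diff(depth, axis=1)
    grad[np.abs(grad) > max_gradient] = 0
    return grad


# One row: a gentle slope, then a large jump at an object edge.
row = np.array([[1000, 1010, 1500, 1505]], dtype=np.uint16)
gradient = horizontal_gradient(row, max_gradient=100)
```

Casting to a floating-point type before differencing avoids wrap-around when an unsigned depth value decreases from one pixel to the next.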

3.4. Data Augmentation

Data augmentation is a common technique used in machine learning to increase the number of training and/or testing samples available by introducing slight changes to these samples [28]. There are many forms of data augmentation. In the field of image-based machine learning, techniques such as geometric transformations, sub-sampling and even filters can be used to slightly change or augment images. In the specific use case of CNN-based BCS models, techniques such as flipping or rotating the images are often used [29]. Unfortunately, rotating the depth image can only be performed if perfect background subtraction has been performed, resulting in only the cow being present in the image. Similarly, flipping the image can only be performed for the top and rear cameras; it would not be possible for the angled camera due to the asymmetry of its view. Therefore, the chosen data augmentation technique for this study was sub-sampling. Image sub-sampling, also known as image down-sampling or image decimation, is a technique used in image processing to reduce the size or resolution of an image [30]. It involves reducing the number of pixels in an image while attempting to preserve the important visual information and overall appearance of the image. For this study, each depth image was sub-sampled by a factor of 2, meaning that every second pixel across and every second pixel down was taken to form a new image. Starting from each of the four possible pixel offsets yielded four new images with slightly different information, each at half the resolution of the original depth image. This was performed both to increase the number of training samples and to decrease the size of the image being fed into the CNN.
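The factor-of-2 sub-sampling can be sketched with strided NumPy slicing, taking every second pixel from each of the four possible starting offsets. The function name `subsample_by_two` is illustrative.

```python
import numpy as np


def subsample_by_two(image):
    """Return the four half-resolution images obtained by taking every
    second pixel down and across, one for each (row, column) offset."""
    return [image[r::2, c::2] for r in (0, 1) for c in (0, 1)]


# 4 x 4 example image whose pixel values are 0..15 in row-major order.
image = np.arange(16).reshape(4, 4)
subsampled = subsample_by_two(image)
```

The four outputs have identical shapes only when the image height and width are both even; for odd dimensions, the offset-1 images are one pixel smaller and would need trimming or padding before being batched together.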

Due to data augmentation, the number of training and testing samples was increased by a factor of four. Table 1 and Figure 10 show the distribution of training data across the different cameras and BCS values.

3.6. Model Performance Evaluation

When evaluating the performance of any model, it is important to use the correct analysis for the specific experiment. In the case of automated body condition scoring, model accuracy and F1-scores are often used. The accuracy is often given in tolerance bands, where the accuracy is calculated by observing how often the model estimates the exact BCS value or estimates within 0.25 or 0.5 of the actual BCS value. Other metrics such as the mean absolute error (MAE) are also often used; however, since the current state-of-the-art generally evaluates the model accuracy, precision, recall, and F1-score, this paper does the same.
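Accuracy within a tolerance band can be sketched as follows. The function name `tolerance_accuracy` and the example scores are illustrative and are not results from this study.

```python
import numpy as np


def tolerance_accuracy(y_true, y_pred, tolerance=0.0):
    """Fraction of predictions within `tolerance` BCS units of the truth.

    A tolerance of 0.0 gives the exact accuracy; 0.25 and 0.5 give the
    tolerance bands mentioned in the text.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # A small epsilon guards against floating-point rounding at the band edge.
    return float(np.mean(np.abs(y_true - y_pred) <= tolerance + 1e-9))


# Made-up scores for four cows.
actual = [3.0, 3.25, 2.5, 4.0]
predicted = [3.0, 3.0, 3.0, 3.0]
exact = tolerance_accuracy(actual, predicted)
within_quarter = tolerance_accuracy(actual, predicted, 0.25)
within_half = tolerance_accuracy(actual, predicted, 0.5)
```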

Precision is defined as the ratio of true positive predictions to the total positive predictions. In the context of BCS, where multiple classes are present, this is the ratio of true positive predictions to the total positive predictions for a specific class. For example, the precision of a model for the BCS class 3.0 is calculated by dividing the number of times the model correctly predicts a cow to be a 3.0 (true positives) by the total number of times the model predicts a cow to be a 3.0, whether correctly or incorrectly (true positives plus false positives).

Recall measures the ratio of true positive predictions to the total actual positives. For example, the recall of a model for the BCS class 3.0 is calculated by dividing the number of times the model correctly predicts a cow to be a 3.0 (true positives) by the total number of cows with an actual BCS of 3.0, that is, the true positives plus the number of times the model incorrectly predicts such a cow to have a BCS other than 3.0 (false negatives).

The F1-score is the harmonic mean of precision and recall, balancing the trade-off between these two metrics. The goal of the F1-score is to provide a single metric that weights the two ratios (precision and recall) in a balanced way, requiring both to have a higher value for the F1-score value to rise. This means that, if, for example, one model has ten times the precision compared to a second model, the F1-score will not increase ten-fold. The F1-score is a valuable metric for identifying models with both good precision and recall, and since the F1-score can be calculated on a per-class basis, it is possible to see which models have a greater or poorer performance for specific classes.

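The accuracy of a typical model can be calculated as

$$\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}}$$

and is generally a good indicator of the performance of a model and serves as a good metric to compare various models.

The precision, recall, and F1-score of a model for a single class can be calculated with

$$\text{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \qquad \text{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \qquad F1_c = 2 \cdot \frac{\text{Precision}_c \cdot \text{Recall}_c}{\text{Precision}_c + \text{Recall}_c}$$

where $TP_c$ is the number of true positives, $FP_c$ is the number of false positives, and $FN_c$ is the number of false negatives for the model with $c$ as the class for which the F1-score is calculated.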
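The per-class definitions of precision, recall, and F1-score can be sketched in plain Python as below. The function name `per_class_metrics` and the example labels are illustrative; returning 0.0 when a denominator is zero is a common convention assumed here, not something stated in the text.

```python
def per_class_metrics(y_true, y_pred, c):
    """Precision, recall, and F1-score for a single class `c`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == c and p == c)  # true positives
    fp = sum(1 for t, p in pairs if t != c and p == c)  # false positives
    fn = sum(1 for t, p in pairs if t == c and p != c)  # false negatives

    # Assumed convention: a metric with a zero denominator is reported as 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Made-up labels: class 3.0 has 2 true positives, 1 false positive,
# and 1 false negative.
actual_bcs = [3.0, 3.0, 3.0, 2.5, 3.5]
predicted_bcs = [3.0, 3.0, 2.5, 3.0, 3.5]
precision_3, recall_3, f1_3 = per_class_metrics(actual_bcs, predicted_bcs, 3.0)
```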

Values for precision, recall, and F1-scores are usually calculated for a single class. However, in this paper, the models produce estimations for numerous unbalanced classes. Therefore, instead of producing precision, recall, and F1-score values for each class, a single weighted F1-score can be calculated with the following equation:

where