Frontier Research on Low-Resource Speech Recognition Technology

Abstract

1. Introduction

- (1) The research status of low-resource speech recognition is reviewed from three aspects: feature extraction, acoustic modeling, and resource expansion.

- (2) The technical challenges of recognizing low-resource languages are analyzed.

- (3) Future research directions for speech recognition under low-resource conditions are discussed, and possible solutions are proposed.

2. Speech Recognition Technology

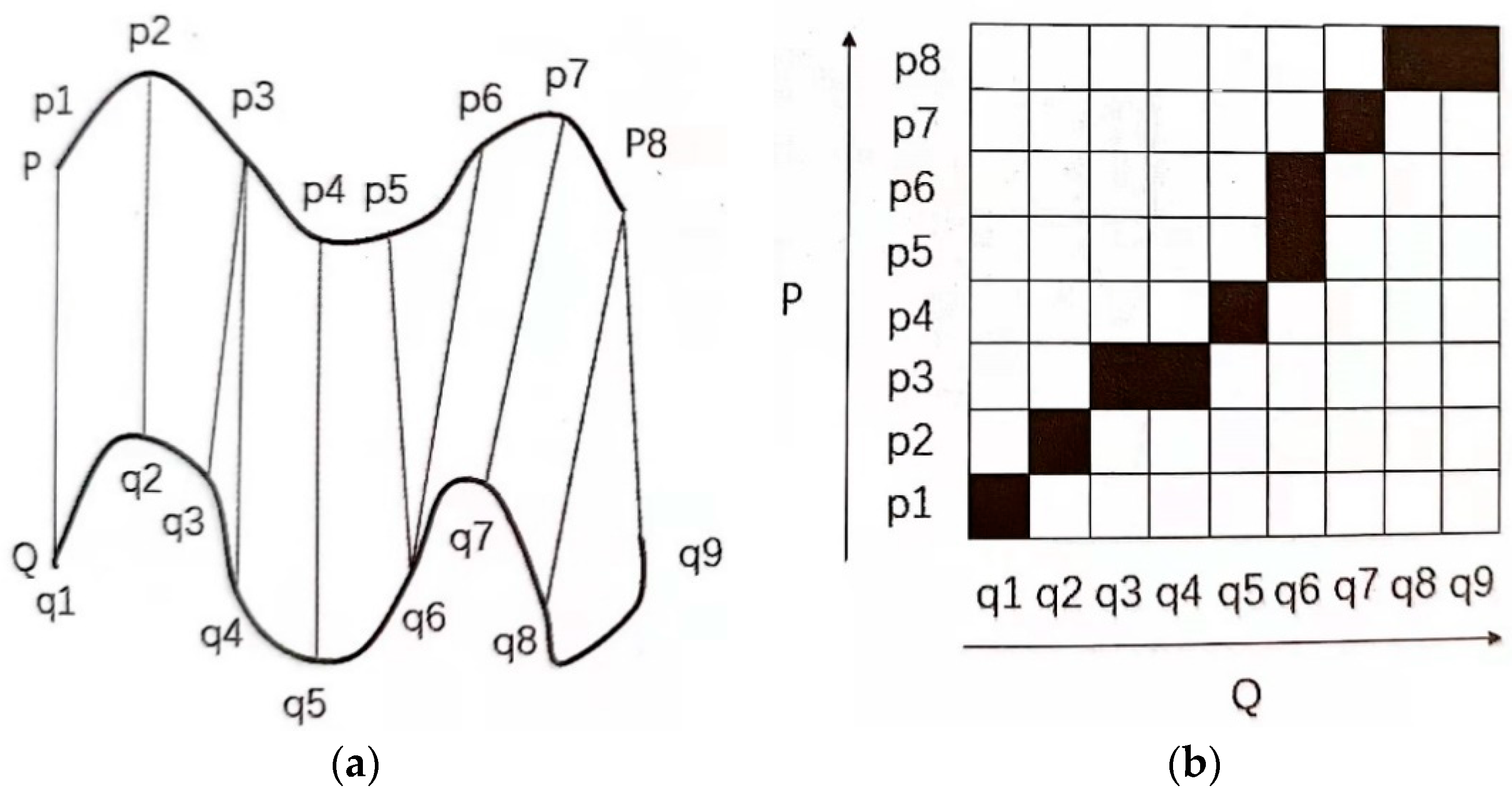

2.1. DTW
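
Dynamic time warping (DTW) recognizes an isolated word by aligning the test utterance with each stored template and choosing the template with the lowest accumulated frame distance. Below is a minimal illustrative sketch. It assumes that each utterance is already a list of feature vectors (for example MFCC frames), that frames are compared with Euclidean distance, and that the basic symmetric step pattern is used. `dtw_distance` and `recognize` are hypothetical names, not taken from any surveyed system.

```python
import math
from typing import Dict, List, Sequence

Frame = Sequence[float]


def euclidean(a: Frame, b: Frame) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(ref: List[Frame], test: List[Frame]) -> float:
    """Accumulated DTW cost using match, insertion and deletion steps."""
    n, m = len(ref), len(test)
    inf = float("inf")
    # cost[i][j]: minimal accumulated distance aligning ref[:i] with test[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = euclidean(ref[i - 1], test[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # advance in ref only
                                 cost[i][j - 1],      # advance in test only
                                 cost[i - 1][j - 1])  # advance in both
    return cost[n][m]


def recognize(test: List[Frame], templates: Dict[str, List[Frame]]) -> str:
    """Isolated-word recognition: label of the template with the lowest DTW cost."""
    return min(templates, key=lambda label: dtw_distance(templates[label], test))


if __name__ == "__main__":
    templates = {"yes": [[0.0], [1.0], [2.0]], "no": [[5.0], [4.0], [5.0]]}
    print(recognize([[0.0], [1.0], [1.0], [2.0]], templates))  # yes
```

The warping path may repeat frames on either side. As a result, a slower rendition of the same word (the repeated `[1.0]` frame above) still aligns with its template at zero cost.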

2.2. GMM-HMM/DNN-HMM
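
In both GMM-HMM and DNN-HMM systems, decoding searches for the most likely HMM state sequence given per-frame emission scores. The two families differ mainly in how those scores are produced: GMM likelihoods in one case, scaled DNN posteriors in the other. The following is a minimal log-domain Viterbi sketch. It assumes that the emission log-scores are already computed and that the model is a small, dense HMM. Real recognizers instead use beam search over much larger decoding graphs. `viterbi` and `safe_log` are illustrative names.

```python
import math
from typing import List, Sequence, Tuple

NEG_INF = float("-inf")


def safe_log(p: float) -> float:
    """log(p) that maps zero probability to -inf instead of raising."""
    return math.log(p) if p > 0 else NEG_INF


def viterbi(log_init: Sequence[float],
            log_trans: Sequence[Sequence[float]],
            log_emis: Sequence[Sequence[float]]) -> Tuple[float, List[int]]:
    """Best state path.

    log_init[s]     : log P(state s at t = 0)
    log_trans[p][s] : log P(s | p)
    log_emis[t][s]  : log emission score of frame t in state s
    """
    num_states = len(log_init)
    delta = [log_init[s] + log_emis[0][s] for s in range(num_states)]
    backptrs: List[List[int]] = []
    for t in range(1, len(log_emis)):
        new_delta, ptrs = [], []
        for s in range(num_states):
            # best predecessor of state s at time t
            prev = max(range(num_states), key=lambda p: delta[p] + log_trans[p][s])
            new_delta.append(delta[prev] + log_trans[prev][s] + log_emis[t][s])
            ptrs.append(prev)
        delta = new_delta
        backptrs.append(ptrs)
    # trace back from the best final state
    state = max(range(num_states), key=lambda s: delta[s])
    best_score = delta[state]
    path = [state]
    for ptrs in reversed(backptrs):
        state = ptrs[state]
        path.append(state)
    path.reverse()
    return best_score, path


if __name__ == "__main__":
    # Two-state left-to-right HMM; frames 0-1 favour state 0, frames 2-3 state 1.
    init = [safe_log(1.0), safe_log(0.0)]
    trans = [[safe_log(0.5), safe_log(0.5)], [safe_log(0.0), safe_log(1.0)]]
    emis = [[safe_log(a), safe_log(b)] for a, b in [(0.9, 0.1), (0.9, 0.1), (0.1, 0.9), (0.1, 0.9)]]
    print(viterbi(init, trans, emis)[1])  # [0, 0, 1, 1]
```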

2.3. End-to-End Model
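
End-to-end models trained with connectionist temporal classification (CTC) output a distribution over tokens plus a special blank symbol for every frame. The simplest decoder works in three steps. It takes the best token per frame, merges consecutive repeats, and then drops blanks. The sketch below assumes that the blank has index 0 and that per-frame probabilities are already available. Prefix beam search or WFST-based decoding with a language model usually performs better, so this is only the greedy baseline.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = 0  # assumed index of the CTC blank symbol


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], blank: int = BLANK) -> List[int]:
    """Best-path CTC decoding: argmax per frame, merge repeats, remove blanks."""
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    merged = [token for token, _ in groupby(best)]  # collapse runs such as 1, 1 -> 1
    return [token for token in merged if token != blank]


if __name__ == "__main__":
    # Vocabulary: 0 = blank, 1 = "a", 2 = "b"; frame-level argmax is 1 1 0 1 2 2 0
    probs = [
        [0.1, 0.8, 0.1],
        [0.2, 0.7, 0.1],
        [0.9, 0.05, 0.05],
        [0.1, 0.8, 0.1],
        [0.1, 0.1, 0.8],
        [0.2, 0.1, 0.7],
        [0.6, 0.2, 0.2],
    ]
    print(ctc_greedy_decode(probs))  # [1, 1, 2], i.e. "aab"
```

The blank between the two runs of token 1 is what allows CTC to emit a genuinely doubled symbol. Without it, the runs would be merged into a single "a".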

3. Feature Extraction

3.1. General Approach to Feature Extraction
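
The conventional MFCC front-end chains six steps: pre-emphasis, framing with a window, a power spectrum, a triangular mel filterbank, a logarithm, and a discrete cosine transform. The pure-Python sketch below follows those steps under common but assumed settings. These are 16 kHz audio, 25 ms frames with a 10 ms hop, a 512-point spectrum, 26 filters and 13 coefficients. For readability it uses a naive DFT, so it is slow. Production toolkits also differ in details such as liftering, energy handling and filter normalization. The function names are illustrative.

```python
import math
from typing import List, Sequence


def hz_to_mel(f: float) -> float:
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sample_rate: int) -> List[List[float]]:
    """Triangular filters spaced evenly on the mel scale from 0 Hz to Nyquist."""
    n_bins = n_fft // 2 + 1
    top = hz_to_mel(sample_rate / 2)
    mel_points = [i * top / (n_mels + 1) for i in range(n_mels + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mel_points]
    fbank = [[0.0] * n_bins for _ in range(n_mels)]
    for j in range(n_mels):
        left, center, right = bins[j], bins[j + 1], bins[j + 2]
        for k in range(left, center):   # rising edge
            fbank[j][k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge
            fbank[j][k] = (right - k) / (right - center)
    return fbank


def mfcc(signal: Sequence[float], sample_rate: int = 16000, frame_len: int = 400,
         hop: int = 160, n_fft: int = 512, n_mels: int = 26, n_ceps: int = 13,
         preemph: float = 0.97) -> List[List[float]]:
    """MFCC vectors, one per frame (requires frame_len <= n_fft)."""
    # 1. Pre-emphasis boosts high frequencies: y[n] = x[n] - a * x[n-1]
    x = [signal[0]] + [signal[n] - preemph * signal[n - 1] for n in range(1, len(signal))]
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1)) for n in range(frame_len)]
    fbank = mel_filterbank(n_mels, n_fft, sample_rate)
    n_bins = n_fft // 2 + 1
    features = []
    for start in range(0, len(x) - frame_len + 1, hop):
        # 2. Framing and Hamming window
        frame = [x[start + n] * window[n] for n in range(frame_len)]
        # 3. Power spectrum via a naive DFT (frame implicitly zero-padded to n_fft)
        power = []
        for k in range(n_bins):
            re = sum(frame[n] * math.cos(2 * math.pi * k * n / n_fft) for n in range(frame_len))
            im = sum(frame[n] * math.sin(2 * math.pi * k * n / n_fft) for n in range(frame_len))
            power.append((re * re + im * im) / n_fft)
        # 4. Log mel filterbank energies (a small floor avoids log(0))
        log_mel = [math.log(max(sum(w * p for w, p in zip(filt, power)), 1e-10)) for filt in fbank]
        # 5. DCT-II decorrelates the log energies; keep the first n_ceps coefficients
        features.append([
            sum(log_mel[j] * math.cos(math.pi * i * (j + 0.5) / n_mels) for j in range(n_mels))
            for i in range(n_ceps)
        ])
    return features


if __name__ == "__main__":
    tone = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(800)]  # 50 ms, 440 Hz
    feats = mfcc(tone)
    print(len(feats), len(feats[0]))  # 3 13
```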

3.2. DNN-Based Approach to Extract Deep Acoustic Features
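
Deep acoustic features are commonly read from a narrow hidden layer, the bottleneck, of a DNN trained to classify phones or HMM states. That DNN may be trained on several languages at once. The bottleneck activations then replace conventional features or are appended to them. The sketch below illustrates only the forward extraction step, under several assumptions. It uses a small sigmoid MLP with hypothetical layer sizes and random, untrained weights, and it omits training by backpropagation. Taking the bottleneck's linear (pre-activation) outputs is one common choice rather than the only one.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights [out][in], biases [out])


def sigmoid(z: float) -> float:
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(x: Sequence[float], layer: Layer) -> List[float]:
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def init_mlp(dims: Sequence[int], seed: int = 0) -> List[Layer]:
    """Random layers dims[0] -> dims[1] -> ... (stand-in for a trained network)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(dims[:-1], dims[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def stack_context(frames: Sequence[Sequence[float]], t: int, width: int) -> List[float]:
    """Concatenate frames t-width..t+width, repeating edge frames at the borders."""
    out: List[float] = []
    for k in range(t - width, t + width + 1):
        out.extend(frames[min(max(k, 0), len(frames) - 1)])
    return out


def bottleneck_features(x: Sequence[float], layers: List[Layer], bn_index: int) -> List[float]:
    """Run the MLP up to the bottleneck layer and return its linear outputs."""
    h = list(x)
    for idx, layer in enumerate(layers):
        z = affine(h, layer)
        if idx == bn_index:
            return z  # bottleneck activations, taken before the nonlinearity
        h = [sigmoid(v) for v in z]
    raise ValueError("bn_index is beyond the last layer")


if __name__ == "__main__":
    # 13-dim frames with +/-2 context -> 65 inputs; hypothetical sizes 65-128-40-128-100
    frames = [[0.01 * (t + d) for d in range(13)] for t in range(5)]
    net = init_mlp([65, 128, 40, 128, 100])
    bn = bottleneck_features(stack_context(frames, 2, 2), net, bn_index=1)
    print(len(bn))  # 40
```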

4. Acoustic Model

4.1. General Approach to Acoustic Modeling
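
In the conventional approach, each HMM state's emission density is a Gaussian mixture, usually with diagonal covariances. Decoding therefore needs the state log-likelihood log p(x | s) for every frame. The sketch below computes it, assuming diagonal covariances and using the log-sum-exp trick for numerical stability. The parameters in the example are illustrative only.

```python
import math
from typing import Sequence


def log_gaussian_diag(x: Sequence[float], mean: Sequence[float], var: Sequence[float]) -> float:
    """log N(x; mean, diag(var)) for a diagonal-covariance Gaussian."""
    return -0.5 * sum(math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def gmm_log_likelihood(x: Sequence[float], weights: Sequence[float],
                       means: Sequence[Sequence[float]],
                       variances: Sequence[Sequence[float]]) -> float:
    """log sum_k w_k N(x; mu_k, diag(var_k)), evaluated with log-sum-exp."""
    comps = [math.log(w) + log_gaussian_diag(x, m, v)
             for w, m, v in zip(weights, means, variances)]
    top = max(comps)  # factor out the largest term to avoid underflow
    return top + math.log(sum(math.exp(c - top) for c in comps))


if __name__ == "__main__":
    # Toy 2-component, 2-dimensional state model (illustrative parameters)
    w = [0.6, 0.4]
    mu = [[0.0, 0.0], [3.0, 3.0]]
    var = [[1.0, 1.0], [0.5, 0.5]]
    print(gmm_log_likelihood([0.2, -0.1], w, mu, var))
```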

4.2. DNN-Based Approach to Building an Acoustic Model
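
Two ideas recur in DNN-based acoustic modeling for low-resource languages. The first is the hybrid DNN-HMM, in which the network's state posteriors P(s | x) are divided by the state priors P(s) to give scaled likelihoods for the HMM decoder. The second is the shared-hidden-layer multilingual DNN (SHL-MDNN), in which the hidden layers are shared across languages while each language keeps its own softmax output layer. The sketch below combines both ideas in a forward pass only. The class name, layer sizes, ReLU activations, language keys and random untrained weights are all assumptions for illustration. Training is omitted; in training, the shared layers would receive gradients from every language.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights [out][in], biases [out])


def affine(x: Sequence[float], layer: Layer) -> List[float]:
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def softmax(z: Sequence[float]) -> List[float]:
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class SharedHiddenLayerDNN:
    """Hidden layers shared by all languages; one softmax head per language."""

    def __init__(self, in_dim: int, hidden_dims: Sequence[int],
                 states_per_lang: Dict[str, int], seed: int = 0) -> None:
        rng = random.Random(seed)

        def layer(n_in: int, n_out: int) -> Layer:
            scale = 1.0 / math.sqrt(n_in)
            return ([[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)],
                    [0.0] * n_out)

        self.shared: List[Layer] = []
        dim = in_dim
        for h in hidden_dims:
            self.shared.append(layer(dim, h))
            dim = h
        self.heads = {lang: layer(dim, n) for lang, n in states_per_lang.items()}

    def posteriors(self, x: Sequence[float], lang: str) -> List[float]:
        h = list(x)
        for lyr in self.shared:
            h = [max(0.0, v) for v in affine(h, lyr)]  # ReLU
        return softmax(affine(h, self.heads[lang]))


def scaled_log_likelihoods(posteriors: Sequence[float], priors: Sequence[float]) -> List[float]:
    """Hybrid trick: log p(x | s) = log P(s | x) - log P(s) + const."""
    return [math.log(p) - math.log(q) for p, q in zip(posteriors, priors)]


if __name__ == "__main__":
    net = SharedHiddenLayerDNN(40, [64, 64], {"swahili": 30, "cantonese": 50})
    post = net.posteriors([0.1] * 40, "swahili")
    print(len(post), round(sum(post), 6))  # 30 1.0
```

Under this structure, supporting another low-resource language would mean attaching a new output head and fine-tuning it, while the shared layers carry over what was learned from the other languages. This describes the intuition rather than a training recipe.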

4.3. GMM-Based Approach to Building an Acoustic Model
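
Among GMM-based approaches, the subspace GMM (SGMM) is attractive for low-resource languages because most of its parameters are shared. In the standard SGMM formulation, each state j has a low-dimensional vector v_j. Its Gaussian means and mixture weights are derived from globally shared parameters: mu_ji = M_i v_j and w_ji = exp(w_i . v_j) / sum_k exp(w_k . v_j), with the covariances Sigma_i also shared. In cross-lingual use, the shared parameters can be estimated on data-rich languages, so that only the state vectors need to be trained on the target language. The sketch below computes one state's means and weights from those shared parameters. The dimensions and values are made up for illustration, and substates, speaker projections and training are omitted.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sgmm_state_params(v_j: Sequence[float], projections: Sequence[Matrix],
                      weight_vectors: Sequence[Sequence[float]]) -> Tuple[List[List[float]], List[float]]:
    """Means mu_ji = M_i v_j and weights softmax_i(w_i . v_j) for one SGMM state."""
    means = [matvec(m_i, v_j) for m_i in projections]
    logits = [sum(a * b for a, b in zip(w_i, v_j)) for w_i in weight_vectors]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return means, [e / total for e in exps]


if __name__ == "__main__":
    # I = 2 shared Gaussians, feature dim D = 2, subspace dim S = 2 (toy values)
    M = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]]
    w = [[0.0, 0.0], [0.0, 0.0]]
    means, weights = sgmm_state_params([1.0, 2.0], M, w)
    print(means, weights)  # [[1.0, 2.0], [2.0, 4.0]] [0.5, 0.5]
```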

5. Low-Resource Speech Recognition Resource Expansion

5.1. Data Resource Expansion
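
Speed perturbation (SP) is a common way to expand scarce training audio. Each utterance is resampled so that it plays faster or slower, which changes both duration and pitch, and the perturbed copies are added to the training set. The sketch below uses plain linear interpolation on a list of samples. The factors 0.9, 1.0 and 1.1 are a widely used choice but are an assumption here. Toolkits normally rely on a proper band-limited resampler (for example SoX's `speed` effect) rather than this simplified interpolation. The function names and the key format are hypothetical.

```python
from typing import Dict, List, Sequence


def speed_perturb(signal: Sequence[float], factor: float) -> List[float]:
    """Resample so the utterance plays `factor` times faster (about len / factor samples)."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_out = int(round(len(signal) / factor))
    out = []
    for n in range(n_out):
        pos = n * factor  # position in the original signal
        i = int(pos)
        frac = pos - i
        nxt = signal[i + 1] if i + 1 < len(signal) else signal[i]
        out.append((1.0 - frac) * signal[i] + frac * nxt)  # linear interpolation
    return out


def augment(utterances: Dict[str, Sequence[float]],
            factors: Sequence[float] = (0.9, 1.0, 1.1)) -> Dict[str, List[float]]:
    """Perturbed copies keyed by a hypothetical 'sp<factor>-<id>' naming scheme."""
    return {f"sp{f}-{uid}": speed_perturb(sig, f)
            for uid, sig in utterances.items() for f in factors}


if __name__ == "__main__":
    data = augment({"utt1": [0.0] * 1100})
    print({key: len(samples) for key, samples in data.items()})
```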

5.2. Pronunciation Dictionary Extension
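
When no complete pronunciation dictionary exists, a grapheme-to-phoneme (G2P) converter can generate pronunciations for words missing from the lexicon. Data-driven G2P models, such as joint-sequence or neural models, are the usual choice. The sketch below instead uses a tiny hand-written rule table with greedy longest-match, which may be adequate for languages with fairly transparent orthography. The rule table, phone symbols and function names are hypothetical.

```python
from typing import Dict, Iterable, List


def g2p(word: str, rules: Dict[str, List[str]]) -> List[str]:
    """Greedy longest-match conversion of a word into a phone sequence."""
    longest = max(len(g) for g in rules)
    phones: List[str] = []
    i = 0
    while i < len(word):
        for size in range(min(longest, len(word) - i), 0, -1):
            chunk = word[i:i + size]
            if chunk in rules:
                phones.extend(rules[chunk])
                i += size
                break
        else:
            raise KeyError(f"no rule covers {word[i]!r} in {word!r}")
    return phones


def extend_lexicon(lexicon: Dict[str, List[List[str]]], words: Iterable[str],
                   rules: Dict[str, List[str]]) -> Dict[str, List[List[str]]]:
    """Add G2P pronunciations for words not already in the lexicon."""
    extended = {w: list(prons) for w, prons in lexicon.items()}
    for w in words:
        if w not in extended:
            extended[w] = [g2p(w, rules)]
    return extended


if __name__ == "__main__":
    rules = {"sh": ["S"], "ng": ["N"], "a": ["a"], "i": ["i"], "m": ["m"], "b": ["b"], "s": ["s"]}
    lexicon = {"mama": [["m", "a", "m", "a"]]}
    print(extend_lexicon(lexicon, ["mama", "shamba"], rules)["shamba"])  # [['S', 'a', 'm', 'b', 'a']]
```

Existing entries are never overwritten, so hand-verified pronunciations take precedence over generated ones.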

6. Technical Challenges and Prospects

7. Discussion

8. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| WER | Word Error Rate |
| SGMM | Subspace Gaussian Mixture Model |
| LDA | Linear Discriminant Analysis |
| HDA | Heteroscedastic Discriminant Analysis |
| GLRDA | Generalized Likelihood Ratio Discriminant Analysis |
| MLLR | Maximum Likelihood Linear Regression |
| FMLLR | Feature-space Maximum Likelihood Linear Regression |
| VTLN | Vocal Tract Length Normalization |
| HMM | Hidden Markov Model |
| MFCC | Mel-Frequency Cepstral Coefficient |
| PLP | Perceptual Linear Predictive |
| MLP | Multi-Layer Perceptron |
| DNN | Deep Neural Network |
| BN | Bottleneck |
| GMM-HMM | Gaussian Mixture Model Hidden Markov Model |
| DNN-HMM | Deep Neural Network Hidden Markov Model |
| SHL-MDNN | Shared-Hidden-Layer Multilingual Deep Neural Network |
| LRMF | Low-Rank Matrix Factorization |
| CNN | Convolutional Neural Network |
| AM | Amplitude Modulation |
| TDNN | Time-Delay Neural Network |
| CTC | Connectionist Temporal Classification |
| AF | Articulatory Feature |
| SBF | Stacked Bottleneck Feature |
| BLSTM | Bidirectional Long Short-Term Memory |
| CD-DNN-HMM | Context-Dependent Deep Neural Network Hidden Markov Model |
| KLD | Kullback-Leibler Divergence |
| KL-HMM | Kullback-Leibler Hidden Markov Model |
| SHL-MLSTM | Shared-Hidden-Layer Multilingual Long Short-Term Memory |
| G2P | Grapheme-to-Phoneme |
| VTLP | Vocal Tract Length Perturbation |
| SP | Speed Perturbation |
| SFM | Stochastic Feature Mapping |

References

- Besacier, L.; Barnard, E.; Karpov, A.; Schultz, T. Automatic Speech Recognition for Under-Resourced Languages: A Survey. Speech Commun. 2014, 56, 85–100. [Google Scholar] [CrossRef]

- Thomas, S. Data-Driven Neural Network-Based Feature Front-Ends for Automatic Speech Recognition. Ph.D. Thesis, The Johns Hopkins University, Baltimore, MD, USA, 2012. [Google Scholar]

- Imseng, D.; Bourlard, H.; Dines, J.; Garner, P.N.; Doss, M.M. Applying multi-and cross-lingual stochastic phone space transformations to non-native speech recognition. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 1713–1726. [Google Scholar] [CrossRef]

- Burget, L.; Schwarz, P.; Agarwal, M.; Akyazi, P.; Feng, K.; Ghoshal, A.; Glembek, O.; Goel, N.; Karafiát, M.; Povey, D.; et al. Multilingual acoustic modeling for speech recognition based on subspace Gaussian mixture models. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 4334–4337. [Google Scholar]

- Le, V.B.; Besacier, L. Automatic Speech Recognition for Under-Resourced Languages: Application to Vietnamese Language. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 1471–1482. [Google Scholar]

- IARPA. The Babel Program. Available online: http://www.iarpa.gov/index.php/research-programs/babel (accessed on 1 December 2021).

- Cui, J.; Cui, X.; Ramabhadran, B.; Kim, J.; Kingsbury, B.; Mamou, J.; Mangu, L.; Picheny, M.; Sainath, T.N.; Sethy, A. Developing speech recognition systems for corpus indexing under the IARPA BABEL program. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 6753–6757. [Google Scholar]

- Miao, Y.; Metze, F.; Rawat, S. Deep maxout networks for low-resource speech recognition. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Olomouc, Czech Republic, 8–12 December 2013; pp. 398–403. [Google Scholar]

- Chen, G.; Khudanpur, S.; Povey, D.; Trmal, J.; Yarowsky, D.; Yilmaz, O. Quantifying the value of pronunciation lexicons for keyword search in low-resource languages. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 8560–8564. [Google Scholar]

- Karafiát, M.; Grézl, F.; Hannemann, M.; Veselý, K.; Cernocký, J. BUT BABEL system for spontaneous Cantonese. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Lyon, France, 25–29 August 2013; pp. 2589–2593. [Google Scholar]

- Yanmin, Q.; Jia, L. Acoustic modeling of unsupervised speech recognition based on optimized data selection strategy under low data resource conditions. J. Tsinghua Univ. 2013, 7, 1001–1004. [Google Scholar]

- Cai, M.; Lv, Z.; Lu, C.; Kang, J.; Hui, L.; Zhang, Z.; Liu, J. High-performance Swahili keyword search with very limited language pack: The THUEE system for the OpenKWS15 evaluation. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; pp. 215–222. [Google Scholar]

- Jia, L.; Zhang, W. Research progress on several key technologies of low-resource speech recognition. Data Acquis. Process. 2017, 32, 205–220. [Google Scholar]

- Canavan, A.; Graff, D.; Zipperlen, G. CALLHOME American English Speech. Available online: https://catalog.ldc.upenn.edu/LDC97S42 (accessed on 15 December 2021).

- Lu, L.; Ghoshal, A.; Renals, S. Cross-lingual subspace gaussian mixture models for low-resource speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 17–27. [Google Scholar] [CrossRef]

- Do, V.H.; Xiao, X.; Chng, E.S.; Li, H. Context-dependent phone mapping for LVCSR of under-resourced languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Hanoi, Vietnam, 13–15 November 2012; pp. 500–504. [Google Scholar]

- Zhang, W.; Fung, P. Sparse inverse covariance matrices for low resource speech recognition. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 3862–3866. [Google Scholar] [CrossRef]

- Qian, Y.; Yu, K.; Liu, J. Combination of data borrowing strategies for low-resource LVCSR. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Olomouc, Czech Republic, 8–12 December 2013; pp. 404–409. [Google Scholar]

- Davis, S.; Mermelstein, P. Comparison of Parametric Representations for Monosyllabic Word Recognition in Continuously Spoken Sentences. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 357–366. [Google Scholar] [CrossRef]

- Hermansky, H. Perceptual linear predictive (PLP) analysis of speech. J. Acoust. Soc. Am. 1990, 87, 1738. [Google Scholar] [CrossRef]

- Ripley, B.D. Pattern Recognition and Neural Networks; Cambridge University Press: Cambridge, UK, 1996. [Google Scholar]

- Haeb-Umbach, R.; Ney, H. Linear discriminant analysis for improved large vocabulary continuous speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), San Francisco, CA, USA, 23–26 March 1992; pp. 13–16. [Google Scholar]

- Doddington, G.R. Phonetically sensitive discriminants for improved speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Glasgow, UK, 23–26 May 1989; pp. 556–559. [Google Scholar]

- Zahorian, S.A.; Qian, D.; Jagharghi, A.J. Acoustic-phonetic transformations for improved speaker-independent isolated word recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 14–17 April 1991; pp. 561–564. [Google Scholar]

- Hunt, M.J.; Lefebvre, C. A comparison of several acoustic representations for speech recognition with degraded and undegraded speech. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Glasgow, UK, 23–26 May 1989; pp. 262–265. [Google Scholar]

- Kumar, N.; Andreou, A.G. Heteroscedastic discriminant analysis and reduced rank HMMs for improved speech recognition. Speech Commun. 1998, 26, 283–297. [Google Scholar] [CrossRef]

- Lee, H.S.; Chen, B. Generalised likelihood ratio discriminant analysis. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Merano, Italy, 13–17 December 2009; pp. 158–163. [Google Scholar]

- Leggetter, C.J.; Woodland, P.C. Maximum likelihood linear regression for speaker adaptation of continuous density hidden Markov models. Comput. Speech Lang. 1995, 9, 171–185. [Google Scholar] [CrossRef]

- Varadarajan, B.; Povey, D.; Chu, S.M. Quick FMLLR for speaker adaptation in speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Las Vegas, NV, USA, 31 March–4 April 2008; pp. 4297–4300. [Google Scholar]

- Ghoshal, A.; Povey, D.; Agarwal, M.; Akyazi, P.; Burget, L.; Feng, K.; Glembek, O.; Goel, N.; Karafiát, M.; Rastrow, A.; et al. A novel estimation of feature space MLLR for full-covariance models. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 4310–4313. [Google Scholar]

- Lee, L.; Rose, R. A Frequency Warping Approach to Speaker Normalization. IEEE Trans. Speech Audio Process. 1998, 6, 49–59. [Google Scholar] [CrossRef]

- Rath, S.P.; Umesh, S. Acoustic class specific VTLN-warping using regression class trees. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brighton, UK, 6–10 September 2009; pp. 556–559. [Google Scholar]

- Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188. [Google Scholar] [CrossRef]

- Fisher, R.A. The statistical utilization of multiple measurements. Ann. Eugen. 1938, 8, 376–386. [Google Scholar] [CrossRef]

- Chistyakov, V.P. Linear statistical inference and its applications. USSR Comput. Math. Math. Phys. 1966, 6, 780. [Google Scholar] [CrossRef]

- Woodland, P.C. Speaker adaptation for continuous density HMMs: A review. In Proceedings of the ISCA Tutorial and Research Workshop (ITRW) on Adaptation Methods for Speech Recognition, Sophia Antipolis, France, 29–30 August 2001; pp. 29–30. [Google Scholar]

- Hermansky, H.; Ellis, D.P.W.; Sharma, S. Tandem connectionist feature extraction for conventional HMM systems. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Istanbul, Turkey, 5–9 June 2000; pp. 1635–1638. [Google Scholar]

- Grezl, F.; Karafiat, M.; Kontar, S.; Cernocky, J. Probabilistic and Bottle-neck Features for LVCSR of Meetings. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Honolulu, HI, USA, 15–20 April 2007; pp. 757–760. [Google Scholar]

- Veselý, K.; Karafiát, M.; Grézl, F.; Janda, M.; Egorova, E. The language-independent bottleneck features. In Proceedings of the IEEE Workshop on Spoken Language Technology (SLT), Miami, FL, USA, 2–5 December 2012; pp. 336–341. [Google Scholar]

- Bao, Y.; Jiang, H.; Dai, L.; Liu, C. Incoherent training of deep neural networks to de-correlate bottleneck features for speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 6980–6984. [Google Scholar]

- Sivadas, S.; Hermansky, H. On use of task independent training data in tandem feature extraction. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Montreal, QC, Canada, 17–21 May 2004; pp. 541–544. [Google Scholar]

- Pinto, J. Multilayer Perceptron Based Hierarchical Acoustic Modeling for Automatic Speech Recognition. Available online: https://infoscience.epfl.ch/record/145889 (accessed on 10 December 2021).

- Stolcke, A.; Grezl, F.; Hwang, M.; Lei, X.; Morgan, N.; Vergyri, D. Cross-domain and cross-language portability of acoustic features estimated by multilayer perceptrons. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toulouse, France, 14–19 May 2006; pp. 321–324. [Google Scholar]

- Huang, J.T.; Li, J.; Yu, D.; Deng, L.; Gong, Y.F. Cross-language knowledge transfer using multilingual deep neural network with shared hidden layers. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 7304–7308. [Google Scholar]

- Karafiát, M.; Veselý, K.; Szöke, I.; Burget, L.; Grézl, F.; Hannemann, M.; Černocký, J. BUT ASR system for BABEL Surprise evaluation 2014. In Proceedings of the IEEE Workshop on Spoken Language Technology (SLT), South Lake Tahoe, NV, USA, 7–10 December 2014; pp. 501–506. [Google Scholar]

- Thomas, S.; Ganapathy, S.; Hermansky, H. Multilingual MLP features for low-resource LVCSR systems. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 4269–4272. [Google Scholar]

- Thomas, S.; Ganapathy, S.; Hermansky, H. Cross-lingual and multistream posterior features for low resource LVCSR systems. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Makuhari, Chiba, Japan, 26–30 September 2010; pp. 877–880. [Google Scholar]

- Zhang, Y.; Chuangsuwanich, E.; Glass, J.R. Extracting deep neural network bottleneck features using low-rank matrix factorization. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 185–189. [Google Scholar]

- Miao, Y.J.; Metze, F. Improving language-universal feature extraction with deep maxout and convolutional neural networks. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Singapore, 14–18 September 2014; pp. 800–804. [Google Scholar]

- Sailor, H.B.; Maddala, V.S.K.; Chhabra, D.; Patil, A.P.; Patil, H. DA-IICT/IIITV System for Low Resource Speech Recognition Challenge 2018. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 3187–3191. [Google Scholar]

- Yi, J.; Tao, J.; Bai, Y. Language-invariant bottleneck features from adversarial end-to-end acoustic models for low resource speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6071–6075. [Google Scholar]

- Shetty, V.M.; Sharon, R.A.; Abraham, B.; Seeram, T.; Prakash, A. Articulatory and Stacked Bottleneck Features for Low Resource Speech Recognition. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 3202–3206. [Google Scholar]

- Yi, J.; Tao, J.; Wen, Z.; Bai, Y. Adversarial multilingual training for low-resource speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4899–4903. [Google Scholar]

- Graves, A.; Mohamed, A.R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649. [Google Scholar]

- Miao, Y.; Gowayyed, M.; Metze, F. EESEN: End-to-end speech recognition using deep RNN models and WFST-based decoding. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; pp. 167–174. [Google Scholar]

- Kim, S.; Hori, T.; Watanabe, S. Joint CTC-attention based end-to-end speech recognition using multi-task learning. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 4835–4839. [Google Scholar]

- Schmidbauer, O. Robust statistic modelling of systematic variabilities in continuous speech incorporating acoustic-articulatory relations. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Glasgow, UK, 23–26 May 1989; pp. 616–619. [Google Scholar]

- Elenius, K.; Takács, G. Phoneme recognition with an artificial neural network. In Proceedings of the Second European Conference on Speech Communication and Technology, Amsterdam, The Netherlands, 25–27 September 1991; pp. 121–124. [Google Scholar]

- Eide, E.; Rohlicek, J.R.; Gish, H.; Mitter, S. A linguistic feature representation of the speech waveform. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Minneapolis, MN, USA, 27–30 April 1993; pp. 483–486. [Google Scholar]

- Deng, L.; Sun, D. Phonetic classification and recognition using HMM representation of overlapping articulatory features for all classes of English sounds. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Adelaide, South Australia, Australia, 19–22 April 1994; p. I-45. [Google Scholar]

- Erler, K.; Freeman, G.H. An HMM-based speech recognizer using overlapping articulatory features. J. Acoust. Soc. Am. 1996, 100, 2500–2513. [Google Scholar] [CrossRef]

- Kirchhoff, K.; Fink, G.A.; Sagerer, G. Combining acoustic and articulatory feature information for robust speech recognition. Speech Commun. 2002, 37, 303–319. [Google Scholar] [CrossRef]

- Lal, P.; King, S. Cross-lingual automatic speech recognition using tandem features. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 2506–2515. [Google Scholar] [CrossRef]

- Huybrechts, G.; Merritt, T.; Comini, G.; Perz, B.; Shah, R.; Lorenzo-Trueba, J. Low-resource expressive text-to-speech using data augmentation. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6593–6597. [Google Scholar]

- Tóth, L.; Frankel, J.; Gosztolya, G.; King, S. Cross-lingual portability of MLP-based tandem features: a case study for English and Hungarian. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brisbane, Australia, 22–26 September 2008; pp. 22–26. [Google Scholar]

- Cui, J.; Kingsbury, B.; Ramabhadran, B.; Sethy, A.; Audhkhasi, K.; Kislal, E.; Mangu, L.; Picheny, M.; Golik, P.; Schluter, R.; et al. Multilingual representations for low resource speech recognition and keyword search. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; pp. 259–266. [Google Scholar]

- Mohamed, A.; Dahl, G.E.; Hinton, G. Acoustic modeling using deep belief networks. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 14–22. [Google Scholar] [CrossRef]

- Dahl, G.E.; Yu, D.; Deng, L.; Acero, A. Context-dependent pre-trained deep neural networks for large vocabulary speech recognition. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 30–42. [Google Scholar] [CrossRef]

- Imseng, D.; Bourlard, H.; Garner, P.N. Using KL-divergence and multilingual information to improve ASR for under-resourced languages. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 4869–4872. [Google Scholar]

- Imseng, D.; Bourlard, H.; Dines, J.; Garner, P.N.; Magimai, M. Improving non-native ASR through stochastic multilingual phoneme space transformations. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Florence, Italy, 27–31 August 2011; pp. 537–540. [Google Scholar]

- Shen, J.; Pang, R.; Weiss, R.J.; Schuster, M.; Jaitly, N.; Yang, Z.H.; Chen, Z.F.; Zhang, Y.; Wang, Y.X.; Agiomyrgiannakis, Y.; et al. Natural TTS synthesis by conditioning wavenet on MEL spectrogram predictions. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4779–4783. [Google Scholar]

- Chan, W.; Lane, I. Deep convolutional neural networks for acoustic modeling in low resource languages. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, Australia, 19–24 April 2015; pp. 2056–2060. [Google Scholar]

- Karafiát, M.; Baskar, M.K.; Vesely, K.; Grezl, F.; Burget, L.; Cernocky, J. Analysis of Multilingual Blstm Acoustic Model on Low and High Resource Languages. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5789–5793. [Google Scholar]

- Zhou, S.; Zhao, Y.; Xu, S.; Xu, B. Multilingual Recurrent Neural Networks with Residual Learning for Low-Resource Speech Recognition. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Stockholm, Sweden, 20–24 August 2017; pp. 704–708. [Google Scholar]

- Fathima, N.; Patel, T.; Mahima, C.; Iyengar, A. TDNN-based Multilingual Speech Recognition System for Low Resource Indian Languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 3197–3201. [Google Scholar]

- Pulugundla, B.; Baskar, M.K.; Kesiraju, S.; Egorova, E.; Karafiat, M.; Burget, L.; Cernocky, J. BUT System for Low Resource Indian Language ASR. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 3182–3186. [Google Scholar]

- Abraham, B.; Seeram, T.; Umesh, S. Transfer Learning and Distillation Techniques to Improve the Acoustic Modeling of Low Resource Languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Stockholm, Sweden, 20–24 August 2017; pp. 2158–2162. [Google Scholar]

- Zhao, J.; Lv, Z.; Han, A.; Wang, G.B.; Shi, G.; Kang, J.; Yan, J.H.; Hu, P.F.; Huang, S.; Zhang, W.Q. The TNT Team System Descriptions of Cantonese and Mongolian for IARPA OpenASR20. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4344–4348. [Google Scholar]

- Alumäe, T.; Kong, J. Combining Hybrid and End-to-end Approaches for the OpenASR20 Challenge. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4349–4353. [Google Scholar]

- Lin, H.; Zhang, Y.; Chen, C. Systems for Low-Resource Speech Recognition Tasks in Open Automatic Speech Recognition and Formosa Speech Recognition Challenges. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4339–4343. [Google Scholar]

- Madikeri, S.; Motlicek, P.; Bourlard, H. Multitask adaptation with Lattice-Free MMI for multi-genre speech recognition of low resource languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4329–4333. [Google Scholar]

- Morris, E.; Jimerson, R.; Prud’hommeaux, E. One size does not fit all in resource-constrained ASR. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4354–4358. [Google Scholar]

- Zhang, W.; Fung, P. Low-resource speech representation learning and its applications. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 3862–3866. [Google Scholar]

- Povey, D.; Burget, L.; Agarwal, M.; Akyazi, P.; Feng, K.; Ghoshal, A.; Glembek, O.; Karafiát, M.; Rastrow, A.; Rose, R.; et al. Subspace Gaussian mixture models for speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 4330–4333. [Google Scholar]

- Battenberg, E.; Skerry-Ryan, R.J.; Mariooryad, S.; Stanton, D.; Kao, D.; Shannon, M.; Bagby, T. Location-relative attention mechanisms for robust long-form speech synthesis. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6194–6198. [Google Scholar]

- Qian, Y.; Povey, D.; Liu, J. State-Level Data Borrowing for Low-Resource Speech Recognition Based on Subspace GMMs. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Florence, Italy, 27–31 August 2011; pp. 553–560. [Google Scholar]

- Miao, Y.; Metze, F.; Waibel, A. Subspace mixture model for low-resource speech recognition in cross-lingual settings. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 7339–7343. [Google Scholar]

- Abdel-Hamid, O.; Mohamed, A.; Jiang, H.; Penn, G. Applying convolutional neural networks concepts to hybrid NN-HMM model for speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 4277–4280. [Google Scholar]

- Grézl, F.; Karafiát, M.; Janda, M. Study of probabilistic and bottle-neck features in multilingual environment. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Waikoloa, HI, USA, 11–15 December 2011; pp. 359–364. [Google Scholar]

- Grézl, F.; Karafiát, M. Adapting multilingual neural network hierarchy to a new language. In Proceedings of the 4th International Workshop on Spoken Language Technologies for Under-Resourced Languages SLTU-2014, St. Petersburg, Russia, 14–16 May 2014; pp. 39–45. [Google Scholar]

- Müller, M.; Stüker, S.; Sheikh, Z.; Metze, F.; Waibel, A. Multilingual deep bottleneck features: A study on language selection and training techniques. In Proceedings of the 11th International Workshop on Spoken Language Translation (IWSLT), Lake Tahoe, CA, USA, 4–5 December 2014; pp. 4–5. [Google Scholar]

- Tuske, Z.; Nolden, D.; Schluter, R.; Ney, H. Multilingual MRASTA features for low-resource keyword search and speech recognition systems. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 5607–5611. [Google Scholar]

- Weiss, R.J.; Skerry-ryan, R.J.; Battenberg, E.; Mariooryad, S.; Kingma, D. Wave-tacotron: Spectrogram-free end-to-end text-to-speech synthesis. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 5679–5683. [Google Scholar]

- Marcos, L.M.; Richardson, F. Multi-lingual deep neural networks for language recognition. In Proceedings of the IEEE Workshop on Spoken Language Technology (SLT), San Diego, CA, USA, 13–16 December 2016; pp. 330–334. [Google Scholar]

- Peterson, A.K.; Tong, A.; Yu, Y. OpenASR20: An Open Challenge for Automatic Speech Recognition of Conversational Telephone Speech in Low-Resource Languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4324–4328. [Google Scholar]

- Wang, A.Y.; Snyder, D.; Xu, H.; Manohar, V.; Khudanpur, S. The JHU ASR system for VOiCES from a distance challenge 2019. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Graz, Austria, 15–19 September 2019; pp. 2488–2492. [Google Scholar]

- Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. SpecAugment: A simple data augmentation method for automatic speech recognition. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Graz, Austria, 15–19 September 2019; pp. 2613–2617. [Google Scholar]

- Kharitonov, E.; Rivière, M.; Synnaeve, G.; Wolf, L.; Mazare, P.E.; Douze, M.; Dupoux, E. Data augmenting contrastive learning of speech representations in the time domain. In Proceedings of the IEEE Workshop on Spoken Language Technology (SLT), Shenzhen, China, 13–16 December 2020; pp. 1–5. [Google Scholar]

- Liao, Y.F.; Chang, C.Y.; Tiun, H.K.; Su, H.L.; Khoo, H.L.; Tsay, J.S.; Tan, L.K.; Kang, P.; Thiann, T.G.; Iunn, U.G.; et al. Formosa speech recognition challenge 2020 and Taiwanese across Taiwan corpus. In Proceedings of the 2020 23rd Conference of the Oriental COCOSDA International Committee for the Co-ordination and Standardisation of Speech Databases and Assessment Techniques (O-COCOSDA), Yangon, Myanmar, 5–7 November 2020; pp. 65–70. [Google Scholar]

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y. Conformer: Convolution-augmented Transformer for Speech Recognition. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Shanghai, China, 25–29 October 2020; pp. 5036–5040. [Google Scholar]

- Lu, Y.; Li, Z.; He, D.; Sun, Z.; Dong, B.; Qin, T.; Wang, L.; Liu, T. Understanding and improving transformer from a multi-particle dynamic system point of view. arXiv 2019, arXiv:1906.02762. [Google Scholar]

- Medennikov, I.; Khokhlov, Y.; Romanenko, A.; Sorokin, I.; Zatvornitskiy, A. The STC ASR system for the voices from a distance challenge 2019. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Graz, Austria, 15–19 September 2019; pp. 2453–2457. [Google Scholar]

- Thai, B.; Jimerson, R.; Ptucha, R.; Prud’hommeaux, E. Fully convolutional ASR for less-resourced endangered languages. In Proceedings of the 1st Joint Workshop on Spoken Language Technologies for Under-Resourced Languages (SLTU) and Collaboration and Computing for Under-Resourced Languages (CCURL), Marseille, France, 11–12 May 2020; pp. 126–130. [Google Scholar]

- Sivaram, G.; Hermansky, H. Sparse multilayer perceptron for phoneme recognition. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 23–29. [Google Scholar] [CrossRef]

- Chen, S.; Gopinath, R. Model Selection in Acoustic Modeling. In Proceedings of the European Conference on Speech Communication and Technology (EUROSPEECH’99), Budapest, Hungary, 5–9 September 1999; pp. 1087–1090. [Google Scholar]

- Bilmes, J. Factored sparse inverse covariance matrices. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Istanbul, Turkey, 5–9 June 2000; p. 2. [Google Scholar]

- Gales, M. Semi-tied covariance matrices for hidden Markov models. IEEE Trans. Speech Audio Process. 1999, 7, 272–281. [Google Scholar] [CrossRef]

- Jaitly, N.; Hinton, G.E. Vocal tract length perturbation (VTLP) improves speech recognition. In Proceedings of the ICML Workshop on Deep Learning for Audio, Speech and Language, Atlanta, GA, USA, 16–21 June 2013. [Google Scholar]

- Tuske, Z.; Golik, P.; Nolden, D.; Schluter, R.; Ney, H. Data augmentation, feature combination, and multilingual neural networks to improve ASR and KWS performance for low-resource languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Singapore, 14–18 September 2014; pp. 1420–1424.

- Deng, L.; Acero, A.; Plumpe, M.; Huang, X. Large-vocabulary speech recognition under adverse acoustic environments. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Beijing, China, 16–20 October 2000; pp. 806–809.

- Ko, T.; Peddinti, V.; Povey, D. A study on data augmentation of reverberant speech for speech recognition. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5220–5224.

- Gauthier, E.; Besacier, L.; Voisin, S. Speed perturbation and vowel duration modeling for ASR in Hausa and Wolof languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), San Francisco, CA, USA, 8–12 September 2016; pp. 3529–3533.

- Pandia, D.S.K.; Prakash, A.; Kumar, M.R.; Murthy, H.A. Exploration of End-to-end Synthesisers for Zero Resource Speech Challenge 2020. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Shanghai, China, 25–29 October 2020.

- Morita, T.; Koda, H. Exploring TTS without T Using Biologically/Psychologically Motivated Neural Network Modules (ZeroSpeech 2020). In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Shanghai, China, 25–29 October 2020; pp. 4856–4860.

- Vesely, K.; Hannemann, M.; Burget, L. Semi-supervised training of Deep Neural Networks. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Olomouc, Czech Republic, 8–12 December 2013; pp. 267–272.

- Cui, X.; Goel, V.; Kingsbury, B. Data augmentation for deep neural network acoustic modeling. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 1469–1477.

- Ragni, A.; Knill, K.M.; Rath, S.P.; Gales, M.J.F. Data augmentation for low resource languages. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Singapore, 14–18 September 2014; pp. 810–814.

- Schlippe, T.; Ochs, S.; Schultz, T. Web-based tools and methods for rapid pronunciation dictionary creation. Speech Commun. 2014, 56, 101–118.

- Bisani, M.; Ney, H. Joint-sequence models for grapheme-to-phoneme conversion. Speech Commun. 2008, 50, 434–451.

- Rao, K.; Peng, F.; Sak, H.; Beaufays, F. Grapheme-to-phoneme conversion using long short-term memory recurrent neural networks. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, Australia, 19–24 April 2015; pp. 4225–4229.

- Seneff, S. Reversible sound-to-letter modeling based on syllable structure. In Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics, Rochester, NY, USA, 22–27 April 2007; pp. 153–156.

- Schlippe, T.; Ochs, S.; Vu, N.T.; Schultz, T. Automatic error recovery for pronunciation dictionaries. In Proceedings of the Annual Conference of International Speech Communication Association (INTERSPEECH), Portland, OR, USA, 9–13 September 2012; pp. 2298–2301.

- Lu, L.; Ghoshal, A.; Renals, S. Acoustic data-driven pronunciation lexicon for large vocabulary speech recognition. In Proceedings of the IEEE Automatic Speech Recognition & Understanding Workshop (ASRU), Olomouc, Czech Republic, 8–12 December 2013; pp. 374–379.

- Prenger, R.; Valle, R.; Catanzaro, B. Waveglow: A Flow-based Generative Network for Speech Synthesis. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3617–3621.

- Chen, G.; Povey, D.; Khudanpur, S. Acoustic data-driven pronunciation lexicon generation for logographic languages. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5350–5354.

- Vozila, P.; Adams, J.; Lobacheva, Y.; Thomas, R. Grapheme to Phoneme Conversion and Dictionary Verification using Graphonemes. In Proceedings of the 8th European Conference on Speech Communication and Technology, Geneva, Switzerland, 1–4 September 2003; pp. 2469–2472.

- Wiktionary—A Wiki-Based Open Content Dictionary. Available online: https://www.wiktionary.org (accessed on 9 December 2021).

- Schlippe, T.; Djomgang, K.; Vu, T.; Ochs, S.; Schultz, T. Hausa Large Vocabulary Continuous Speech Recognition. In Proceedings of the 3rd Workshop on Spoken Language Technologies for Under-Resourced Languages (SLTU), Cape Town, South Africa, 7–9 May 2012.

- Vu, T.; Lyu, D.C.; Weiner, J.; Telaar, D.; Schlippe, T.; Blaicher, F.; Chng, E.S.; Schultz, T.; Li, H.Z. A First Speech Recognition System For Mandarin-English Code-Switch Conversational Speech. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 4889–4892.

- Choueiter, G.F.; Ohannessian, M.I.; Seneff, S. A turbo-style algorithm for lexical baseforms estimation. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), Las Vegas, NV, USA, 31 March–4 April 2008; pp. 4313–4316.

- McGraw, I.; Badr, I.; Glass, J.R. Learning lexicons from speech using a pronunciation mixture model. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 357–366.

| Method | Database | Principle | Improved Characteristic | Ref |
|---|---|---|---|---|
| LDA | ARPA RM1 | Statistical pattern-classification technique that uses a linear transformation to improve the discriminability of feature vectors while compressing the classification-relevant information into fewer dimensions | Discrimination and dimensionality reduction | [22] |
| | TI/NBS connected digit database | | | [23] |
| | A 30-word highly confusable monosyllabic vocabulary | | | [24] |
| | CVC syllable database | | | [25] |
| HDA | TI-DIGITS | Generalizes LDA to handle heteroscedastic (class-dependent) covariances | Discrimination and dimensionality reduction | [26] |
| GLRDA | 200 h of MATBN Mandarin television news | Finds a low-dimensional feature subspace that avoids, as far as possible, the most confusable situation described by the null hypothesis | Discrimination and dimensionality reduction | [27] |
| MLLR | ARPA RM1 | Uses a set of regression-based linear transformations to adapt the HMM mean parameters | Robustness | [28] |
| FMLLR | A broadcast transcription task | Constrained MLLR: the Gaussian means and variances share one linear transformation matrix | Robustness | [29] |
| | English in the Callhome database | | | [30] |
| VTLN | Two telephone-based connected-digit databases | Warps the frequency axis of each speech frame's spectrum to reduce spectral variation between speakers | Robustness | [31] |
| | Wall Street Journal | | | [32] |
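All of the LDA rows above depend on one computation: a projection that maximizes between-class scatter relative to within-class scatter. Below is a minimal NumPy sketch of that textbook formulation. It is not the exact configuration of [22]–[25]. The function name `lda_projection`, the small ridge term added to the within-class scatter, and the toy two-class data are illustrative assumptions.

```python
import numpy as np

def lda_projection(features, labels, out_dim, ridge=1e-6):
    """Return a (dim, out_dim) LDA projection matrix (illustrative sketch).

    features: (num_frames, dim) array of feature vectors
    labels:   (num_frames,) class labels, e.g. aligned HMM state ids
    ridge:    small diagonal term added to S_w for numerical stability (assumption)
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    dim = features.shape[1]
    global_mean = features.mean(axis=0)

    s_within = np.zeros((dim, dim))   # scatter of frames around their class mean
    s_between = np.zeros((dim, dim))  # scatter of class means around the global mean
    for c in np.unique(labels):
        class_feats = features[labels == c]
        class_mean = class_feats.mean(axis=0)
        centered = class_feats - class_mean
        s_within += centered.T @ centered
        diff = (class_mean - global_mean)[:, None]
        s_between += class_feats.shape[0] * (diff @ diff.T)

    s_within += ridge * np.eye(dim)
    # Generalized eigenproblem S_b v = lambda S_w v, solved through S_w^{-1} S_b
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(s_within, s_between))
    order = np.argsort(eigvals.real)[::-1]  # most discriminative directions first
    return eigvecs[:, order[:out_dim]].real

# Toy data: two classes separated only along the first axis
rng = np.random.default_rng(0)
x = np.vstack([rng.normal(0, 1, (100, 3)), rng.normal(0, 1, (100, 3)) + [4, 0, 0]])
y = np.array([0] * 100 + [1] * 100)
w = lda_projection(x, y, out_dim=1)
projected = x @ w  # (200, 1) discriminant feature
```

In an ASR front end, the class labels would typically come from HMM state alignments and the inputs would be spliced frames. Those details vary by system.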

| Model | Feature | Approach | Database | Ref |
|---|---|---|---|---|
| MLP | Tandem feature | Deep feature | Aurora multi-condition database | [37] |
| MLP | BN feature | Deep feature | Combined NIST, ISL, AMI, and ICSI meeting data | [38] |
| MLP | BN feature | Deep feature and multilingual corpus assistance | GlobalPhone database | [39] |
| DNN | BN feature | Deep feature | 70 h Mandarin transcription task and 309 h Switchboard task | [40] |
| MLP | Posterior feature | High-resource corpus assistance | OGI-Stories and OGI-Numbers | [41] |
| MLP | Posterior feature | High-resource corpus assistance | DARPA GALE Mandarin database | [42] |
| MLP | Posterior feature | High-resource corpus assistance | English, Mandarin, and Mediterranean Arabic | [43] |
| DNN | - | Multilingual corpus assistance | 138 h French, 195 h German, 63 h Spanish, and 63 h Italian | [44] |
| DNN | Mel filterbank, three different pitch features, and fundamental frequency variance | Feature stitching | Tamil | [45] |
| MLP | BN features from long- and short-term complementary features, each trained with a separate MLP | Feature stitching | English, German, and Spanish in the Callhome database | [46] |
| MLP | High-resource posterior features and low-resource acoustic features | High-resource corpus assistance and feature stitching | English, Spanish, and German in the Callhome database | [47] |
| DNN | BN features extracted from the decomposed DNN weight matrix | Deep feature | Turkish, Assamese, and Bengali in the Babel project | [48] |
| CNN | Convolutional network neurons | Multilingual corpus assistance | Tagalog in the Babel project | [49] |
| Standard and data-driven auditory filterbanks | AM feature | - | Gujarati | [50] |
| Adversarial end-to-end acoustic model | Language-independent BN feature | Multilingual corpus assistance | Assamese, Bengali, Kurmanji, Lithuanian, Pashto, Turkish, and Vietnamese in IARPA Babel | [51] |
| BLSTM and TDNN | AF and SBF | Multilingual corpus assistance and feature stitching | Gujarati, Tamil, and Telugu | [52] |
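Several rows above append MLP posteriors or related features to conventional acoustic features, which the table calls feature stitching. The sketch below follows the classic tandem recipe and rests on a few assumptions:

- The posteriors come from an already-trained MLP. Random stand-ins are used here.
- The posteriors are log-compressed and decorrelated with PCA before use.
- The result is concatenated frame by frame with the acoustic features.

The function name `tandem_features` and the PCA size are hypothetical choices, not the settings of any cited work.

```python
import numpy as np

def tandem_features(acoustic, posteriors, pca_dim, eps=1e-10):
    """Stitch decorrelated log-posterior (tandem) features onto acoustic features.

    acoustic:   (num_frames, d_a) e.g. MFCC or PLP frames
    posteriors: (num_frames, num_phones) MLP softmax outputs, rows sum to 1
    pca_dim:    number of decorrelated log-posterior dimensions to keep
    """
    log_post = np.log(posteriors + eps)          # compress the highly skewed posteriors
    centered = log_post - log_post.mean(axis=0)  # PCA operates on zero-mean data
    # Rows of vt are the principal axes of the centered log-posteriors
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    reduced = centered @ vt[:pca_dim].T          # (num_frames, pca_dim)
    return np.hstack([acoustic, reduced])        # frame-wise concatenation

rng = np.random.default_rng(0)
mfcc = rng.normal(size=(50, 13))                 # stand-in 13-dim acoustic frames
logits = rng.normal(size=(50, 40))
post = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)  # stand-in softmax output
stitched = tandem_features(mfcc, post, pca_dim=25)
print(stitched.shape)  # (50, 38)
```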

| Year | Database | Acoustic Model/Main Content | Approach | Ref |
|---|---|---|---|---|
| 2012 | TIMIT | DNN-HMM | - | [67] |
| 2012 | Business search | CD-DNN-HMM | - | [68] |
| 2012 | Greek SpeechDat(II) | KL-HMM | Data borrowing | [69] |
| 2011 | HIWIRE | Random phoneme space conversion | Data borrowing | [70] |
| 2012 | Callhome | Phone mapping that resolves the mismatch between the phone posteriors of different languages' modeling units in multilingual training | Data borrowing | [71] |
| 2015 | Babel Bengali | CNN-HMM | - | [72] |
| 2018 | Javanese and Amharic in IARPA Babel | Multilingual BLSTM | Data borrowing | [73] |
| 2013 | French, German, Spanish, Italian, English, and Chinese | SHL-MDNN | Data borrowing | [44] |
| 2017 | Callhome | SHL-MLSTM | Data borrowing | [74] |
| 2018 | Tamil, Telugu, and Gujarati | Multilingual TDNN | Data borrowing | [75] |
| 2018 | Tamil, Telugu, and Gujarati | DTNN / low-rank DTNN / low-rank TDNN with transfer learning | Data borrowing | [76] |
| 2017 | Hindi, Tamil, and Kannada in MANDI | KLD-MDNN | Data borrowing | [77] |
| 2021 | Cantonese and Mongolian | CNN-TDNN-F-A | - | [78] |
| 2021 | Restricted training conditions for all 10 languages of OpenASR20 | CNN-TDNN-F | Data borrowing | [79] |
| 2021 | Taiwanese Hokkien | TRSA-Transformer / TRSA-Transformer + TDNN-F / TRSA-Transformer + TDNN-F + Macaron | - | [80] |
| 2021 | MATERIAL | CNN-TDNN-F | Data borrowing | [81] |
| 2021 | Seneca, Wolof, Amharic, Iban, and Bemba | SGMM / DNN / WireNet | Model compression and data borrowing | [82] |
| 2013 | Wall Street Journal | Regularized GMM | Model compression | [83] |
| 2010 | Callhome | SGMM | Model compression | [84] |
| 2010 | Callhome | Multilingual SGMM training | Model compression and data borrowing | [85] |
| 2011 | Callhome | Data borrowing combined with SGMM | Model compression and data borrowing | [86] |
| 2013 | GlobalPhone | SMM | Model compression and data borrowing | [87] |

| Parameter Name | Number of Parameters |
|---|---|
| Gaussian mean | D × J × I |
| Covariance matrix | D × J × I |
| Gaussian weight | J × I |
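The sketch below evaluates these counts for concrete sizes. The symbols are defined elsewhere in the paper, so it assumes the following meanings:

- D is the feature dimension.
- J is the number of HMM states.
- I is the number of Gaussians per state.

Because the table gives the covariances the same count as the means, it is also assumed that the covariances are diagonal. The example sizes are arbitrary.

```python
def gmm_hmm_param_count(d, j, i):
    """Parameter counts for a diagonal-covariance GMM-HMM (assumed symbol meanings)."""
    means = d * j * i        # one D-dimensional mean vector per Gaussian
    covariances = d * j * i  # diagonal covariance: D variances per Gaussian
    weights = j * i          # one mixture weight per Gaussian
    return {"means": means, "covariances": covariances,
            "weights": weights, "total": means + covariances + weights}

counts = gmm_hmm_param_count(d=39, j=2000, i=16)
print(counts["total"])  # 2528000
```

With full covariance matrices, each Gaussian would instead need D(D + 1)/2 covariance parameters. That growth is one reason diagonal covariances are common when training data are scarce.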

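| Activation Function | Mathematical Formula |
|---|---|
| Sigmoid | $\sigma(x) = \frac{1}{1 + e^{-x}}$ |
| Tanh | $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ |
| ReLU | $\mathrm{ReLU}(x) = \max(0, x)$ |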
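These three formulas translate directly into code. The following plain-Python sketch includes one implementation choice that the table does not cover: the sigmoid branches on the sign of x so that very negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^-x), output in (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # for x < 0 use the equivalent form e^x / (1 + e^x)
    return z / (1.0 + z)

def tanh(x):
    """Hyperbolic tangent, output in (-1, 1); equals 2 * sigmoid(2x) - 1."""
    return math.tanh(x)

def relu(x):
    """Rectified linear unit: keeps positive inputs, maps negative inputs to 0."""
    return max(0.0, x)
```

For positive inputs, ReLU's gradient is constant rather than saturating as the sigmoid and tanh gradients do. This is commonly cited as a reason it eases training of deeper networks.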

| Parameter Name | Number of Parameters |
|---|---|
| Number of input layers | 1 |
| Number of hidden layers | B |
| Number of output layers | 1 |
| Number of input layer nodes | A |
| Number of hidden layer nodes | B × C |
| Number of output layer nodes | D × E |
| Weights between the input layer and the hidden layer | B × C × A |
| Weights between hidden layers | B × C × B × C |
| Weights between the hidden layer and the output layer | D × E × B × C |
| Biases between the input layer and the hidden layer | B × C |
| Biases between hidden layers | B × C |
| Biases between the hidden layer and the output layer | D × E |
| Hidden layer activation function | |

| Database | Extended Resource | Existing Resources | Generated Resources | Means of Extension | Ref |
|---|---|---|---|---|---|
| TIMIT | Data resource | Text | Speech | VTLP | [108] |
| Limited data packages for Assamese and Zulu in the IARPA Babel project | Data resource | Text | Speech | VTLP | [109] |
| Wall Street Journal | Data resource | Text | Speech | Noise addition | [110] |
| Switchboard | Data resource | Text | Speech | Noise addition | [111] |
| Hausa from GlobalPhone and a collected Wolof corpus | Data resource | Text | Speech | Speed perturbation (SP) | [112] |
| English and Indonesian | Data resource | Without text | Speech | Text-to-speech (TTS) | [113] |
| English and the Surprise language | Data resource | Without text | Speech | Text-to-speech (TTS) | [114] |
| Vietnamese in the IARPA project | Data resource | Speech | Text | Semi-supervised training | [115] |
| Limited data packages for Assamese and Haitian Creole in the IARPA Babel project | Data resource | Text | Speech | VTLP and stochastic feature mapping (SFM) | [116] |
| Limited data packages for Assamese and Zulu in the IARPA Babel project | Data resource | Speech; text | Text; speech | Semi-supervised training and VTLP | [117] |
| Word pronunciations on the Wiktionary website | Pronunciation dictionary | Text | Speech | Crawl word pronunciations from Wiktionary to build a pronunciation dictionary | [118] |
| Various English pronunciation databases | Pronunciation dictionary | Text | Speech | Joint-sequence model | [119] |
| CMU U.S. English dictionary | Pronunciation dictionary | Text | Speech | LSTM-based G2P model | [120] |
| English version of the CELEX lexical database | Pronunciation dictionary | Text | Speech | Use a stochastic G2P model to detect erroneous entries in the newly generated dictionary | [121] |
| Wiktionary, the GlobalPhone Hausa dictionary, and an English-Mandarin code-switch dictionary | Pronunciation dictionary | Text | Speech | Fully automatic error recovery for pronunciation dictionaries | [122] |
| Switchboard | Pronunciation dictionary | Text | Speech | Generate the pronunciation dictionary from acoustic data | [123] |
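VTLP, which appears in several rows above, perturbs each training utterance with a randomly drawn frequency-warp factor. The sketch below implements the piecewise-linear warping function as we understand it from the VTLP work [108]. Frequencies below a boundary are scaled by alpha. A second linear segment above the boundary keeps the Nyquist frequency fixed.

Several values are assumptions, not confirmed settings of the cited experiments:

- the 16 kHz sampling rate;
- the 4800 Hz boundary frequency `f_hi`;
- the 0.9 to 1.1 range for alpha.

Applying the warp to a real filterbank is left out.

```python
import random

def vtlp_warp(freq, alpha, sample_rate=16000.0, f_hi=4800.0):
    """Piecewise-linear VTLP frequency warp (sketch; sample_rate and f_hi are assumed)."""
    nyquist = sample_rate / 2.0
    if f_hi >= nyquist:
        raise ValueError("f_hi must lie below the Nyquist frequency")
    boundary = f_hi * min(alpha, 1.0) / alpha
    if freq <= boundary:
        return freq * alpha  # linear scaling of the lower band
    # Straight line from (boundary, f_hi * min(alpha, 1)) up to (nyquist, nyquist)
    knee = f_hi * min(alpha, 1.0)
    return nyquist - (nyquist - knee) / (nyquist - boundary) * (nyquist - freq)

# One warp factor per utterance (assumed range), applied e.g. to filterbank centre frequencies
alpha = random.uniform(0.9, 1.1)
centers = [250.0, 1000.0, 4000.0, 7000.0]
warped = [vtlp_warp(f, alpha) for f in centers]
```

The two segments meet at the boundary, so the warp is continuous. With alpha = 1 it reduces to the identity.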

| Acoustic Model | Feature | Feature Transformation | Whether the Feature Is Spliced |
|---|---|---|---|
| GMM-HMM | MFCC | LDA + MLLT | Yes |
| DNN-HMM | Fbank | - | No |
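In the last column, "spliced" means that each frame is concatenated with its neighbouring frames before the LDA + MLLT transform. The following NumPy sketch assumes a symmetric context of ±3 frames, a common choice in Kaldi-style recipes that the table does not state. It also assumes that edge frames are padded by repetition.

```python
import numpy as np

def splice_frames(feats, left=3, right=3):
    """Stack each frame with `left` preceding and `right` following frames.

    feats: (num_frames, dim) array -> (num_frames, dim * (left + 1 + right)).
    Edge frames are repeated so every output row has a full context window.
    """
    padded = np.pad(feats, ((left, right), (0, 0)), mode="edge")
    n = feats.shape[0]
    # Column block k holds frame t + k - left for every frame t
    return np.hstack([padded[k:k + n] for k in range(left + 1 + right)])

mfcc = np.arange(10 * 13, dtype=float).reshape(10, 13)  # stand-in 13-dim MFCC frames
spliced = splice_frames(mfcc)
print(spliced.shape)  # (10, 91)
```

With 13-dimensional MFCCs this yields 91-dimensional vectors. LDA then projects them down to a lower dimension.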

| Database | Feature | Acoustic Model | Best WER (%) (1)/Improvement (2) | Ref |
|---|---|---|---|---|
| Aurora multi-condition database | Tandem feature | Hybrid connectionist-HMM | 35 (1) | [37] |
| Combined NIST, ISL, AMI, and ICSI meeting data | BN feature | GMM-HMM | 24.9 (1) | [38] |
| GlobalPhone database | BN feature | Multilingual artificial neural network (ANN) | 1–2 (2) | [39] |
| 70 h Mandarin transcription task and 309 h Switchboard task | BN feature | GMM-HMM | 2–3 (2) | [40] |
| OGI-Stories and OGI-Numbers | Posterior feature | GMM-HMM | 11 (2) | [41] |
| 138 h French, 195 h German, 63 h Spanish, and 63 h Italian | - | SHL-MDNN | 3–5 (2) | [44] |
| Tamil | Mel filterbank, three different pitch features, and fundamental frequency variance | DNN-HMM | 1.4 (2) | [45] |
| English, German, and Spanish in the Callhome database | BN features from long- and short-term complementary features, each trained with a separate MLP | MLP | 30 (2) | [46] |
| English, Spanish, and German in the Callhome database | High-resource posterior features and low-resource acoustic features | MLP | 11 (2) | [47] |
| Turkish, Assamese, and Bengali in the Babel project | BN features extracted from the decomposed DNN weight matrix | Stacked DNN | 10.6 (2) | [48] |
| Tagalog in the Babel project | Convolutional network neurons | CNN-HMM | 2.5 (2) | [49] |
| Gujarati | AM feature | TDNN | 1.89 (2) | [50] |
| Assamese, Bengali, Kurmanji, Lithuanian, Pashto, Turkish, and Vietnamese in IARPA Babel | Language-independent BN feature | Adversarial E2E | 9.7 (2) | [51] |
| Gujarati, Tamil, and Telugu | AF and SBF | TDNN | 3.4 (2) | [52] |
| TIMIT | MFCC | DNN-HMM | 12.3 (2) | [67] |
| Business search | MFCC | CD-DNN-HMM | 5.8–9.2 (2) | [68] |
| Greek SpeechDat(II) | MFCC-PLP | KL-HMM | 77 (1) | [69] |
| Babel Bengali | Fbank | CNN-HMM | 2–3 (2) | [72] |
| Javanese and Amharic in IARPA Babel | Fbank | Multilingual BLSTM | 5.5 (2) | [73] |
| Callhome | PLP | SHL-MLSTM | 2.1–6.8 (2) | [74] |
| Tamil, Telugu, and Gujarati | MFCC | Multilingual TDNN | 0.97–2.38 (2) | [75] |
| Tamil, Telugu, and Gujarati | Fbank | DTNN / low-rank DTNN / low-rank TDNN with transfer learning | 13.92/14.71/14.06 (1) | [76] |
| Hindi, Tamil, and Kannada in MANDI | Fbank | KLD-MDNN | 1.06–3.64 (2) | [77] |
| Cantonese and Mongolian | i-vectors and hires-MFCC | CNN-TDNN-F-A | 48.3/40.2/52.4/44.9 (1) | [78] |
| Restricted training conditions for all 10 languages of OpenASR20 | Fbank | CNN-TDNN-F | 5–10 (2) | [79] |
| Taiwanese Hokkien | Fbank | TRSA-Transformer / TRSA-Transformer + TDNN-F / TRSA-Transformer + TDNN-F + Macaron | 43.4 (1) | [80] |
| MATERIAL | Fbank | CNN-TDNN-F | 7.1 (2) | [81] |
| Wall Street Journal | MFCC | Regularized GMM | 10.9–16.5 (2) | [83] |
| Callhome | MFCC | Multilingual SGMM training | 8 (2) | [85] |
| Callhome | MFCC | Data borrowing combined with SGMM | 1.19–1.72 (2) | [86] |
| GlobalPhone | MFCC | SMM | 1.0–5.2 (2) | [87] |
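Because the results above are reported as WER, a short sketch of the usual computation may help. WER is the word-level Levenshtein distance (substitutions + deletions + insertions) divided by the number of reference words. The function below is a plain dynamic-programming illustration, not the scoring tool used in the cited works.

```python
def word_error_rate(reference, hypothesis):
    """WER = (S + D + I) / N, computed via word-level edit distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of ref[i - 1]
                          curr[j - 1] + 1,     # insertion of hyp[j - 1]
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

# One substitution (sat -> sit) and one deletion (the): 2 errors / 6 words
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))  # 0.333...
```

An absolute WER reduction (baseline minus new WER) and a relative one (that difference divided by the baseline WER) can differ substantially. It is therefore worth checking which one a reported improvement refers to.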

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Slam, W.; Li, Y.; Urouvas, N. Frontier Research on Low-Resource Speech Recognition Technology. Sensors 2023, 23, 9096. https://doi.org/10.3390/s23229096
