Physical Exertion Recognition Using Surface Electromyography and Inertial Measurements for Occupational Ergonomics

Abstract

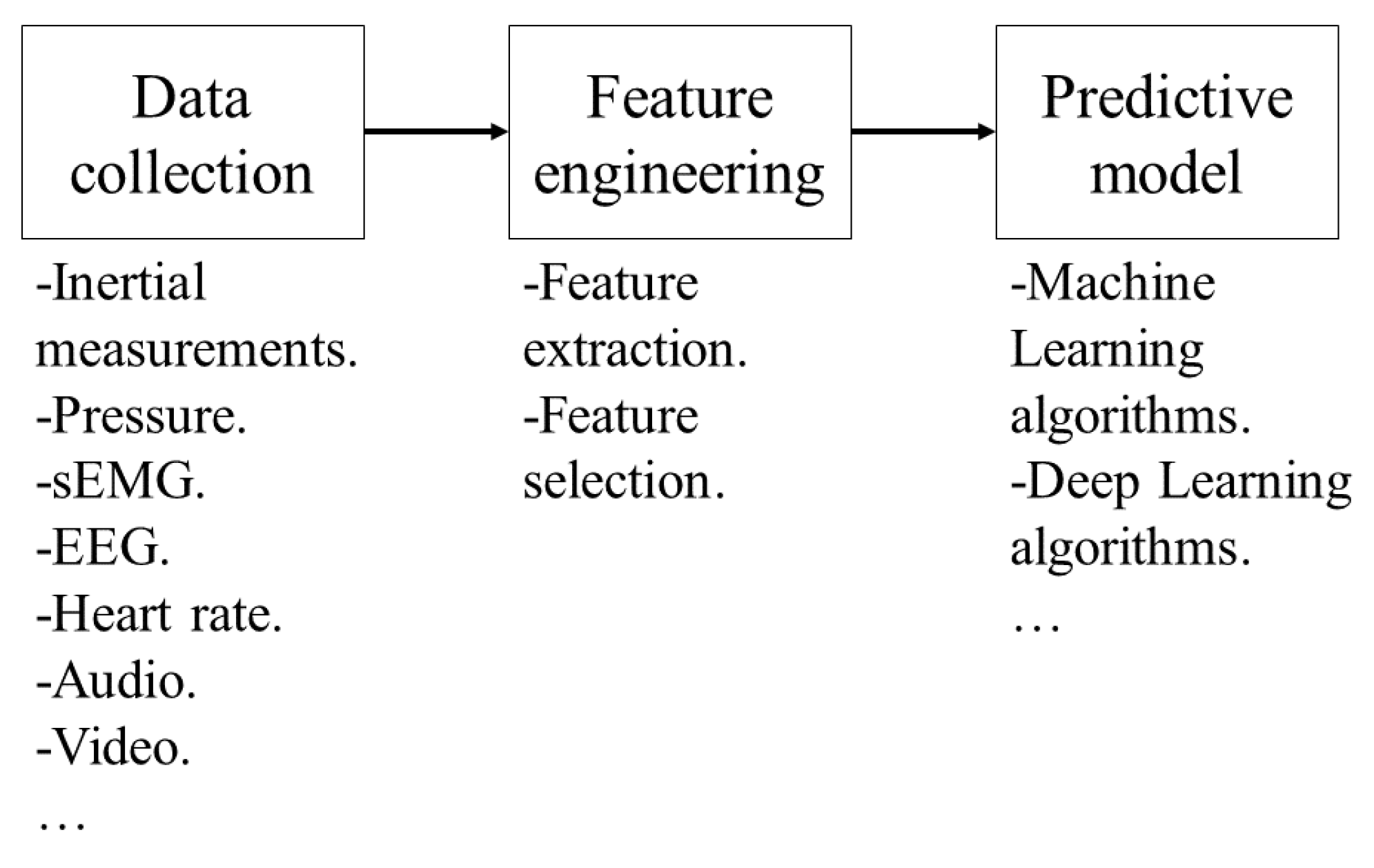

1. Introduction

2. Materials and Methods

2.1. Materials

2.1.1. MindRove Armband

- EMG LSB: 0.045 μV.

- Gyroscope LSB: 0.015267 dps.

- Accelerometer LSB: 0.061035 × 10⁻³ g.
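
The LSB values above can be used to scale raw sensor counts to physical units. The following is a minimal sketch, assuming the armband delivers signed integer counts and that the mapping is linear with no offset; the function name `counts_to_physical` and the sample values are hypothetical and are not part of any MindRove API.

```python
# LSB values quoted in the list above for the MindRove armband.
EMG_LSB_UV = 0.045         # microvolts per count
GYRO_LSB_DPS = 0.015267    # degrees per second per count
ACC_LSB_G = 0.061035e-3    # g per count


def counts_to_physical(raw_counts, lsb):
    """Scale raw integer counts by the LSB.

    Assumption: a linear, offset-free mapping from counts to units.
    """
    return [count * lsb for count in raw_counts]


# Hypothetical raw readings, for illustration only.
emg_uv = counts_to_physical([0, 1000, -2000], EMG_LSB_UV)
gyro_dps = counts_to_physical([100], GYRO_LSB_DPS)
acc_g = counts_to_physical([16384], ACC_LSB_G)
```

With these constants, 1000 EMG counts correspond to 45 μV and 16384 accelerometer counts to approximately 1 g.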

2.1.2. Video Recording Cameras

2.1.3. Host PC

2.1.4. MindRove Visualizer on Desktop

2.2. Methods

2.2.1. MindRove Armband Setup

- Channel 1 coincided with the flexor carpi radialis muscle in 93% of users.

- Channel 2 coincided with the palmaris longus muscle in 93% of users.

- Channel 3 coincided with the flexor carpi ulnaris muscle in 96% of users.

- Channel 4 coincided with the extensor carpi ulnaris muscle in 53% of users.

- Channel 5 coincided with the extensor digitorum muscle or with the extensor carpi ulnaris muscle in 50% of users.

- Channel 6 coincided with the extensor carpi radialis in 53% of users, and in the rest with the extensor digitorum muscle.

- Channel 7 coincided with the brachioradialis muscle or with the extensor carpi radialis in 50% of users.

- Channel 8 coincided with the brachioradialis muscle in 50% of users.

2.2.2. VoD Setup

2.2.3. Participants’ Demographics

2.2.4. Class Labels

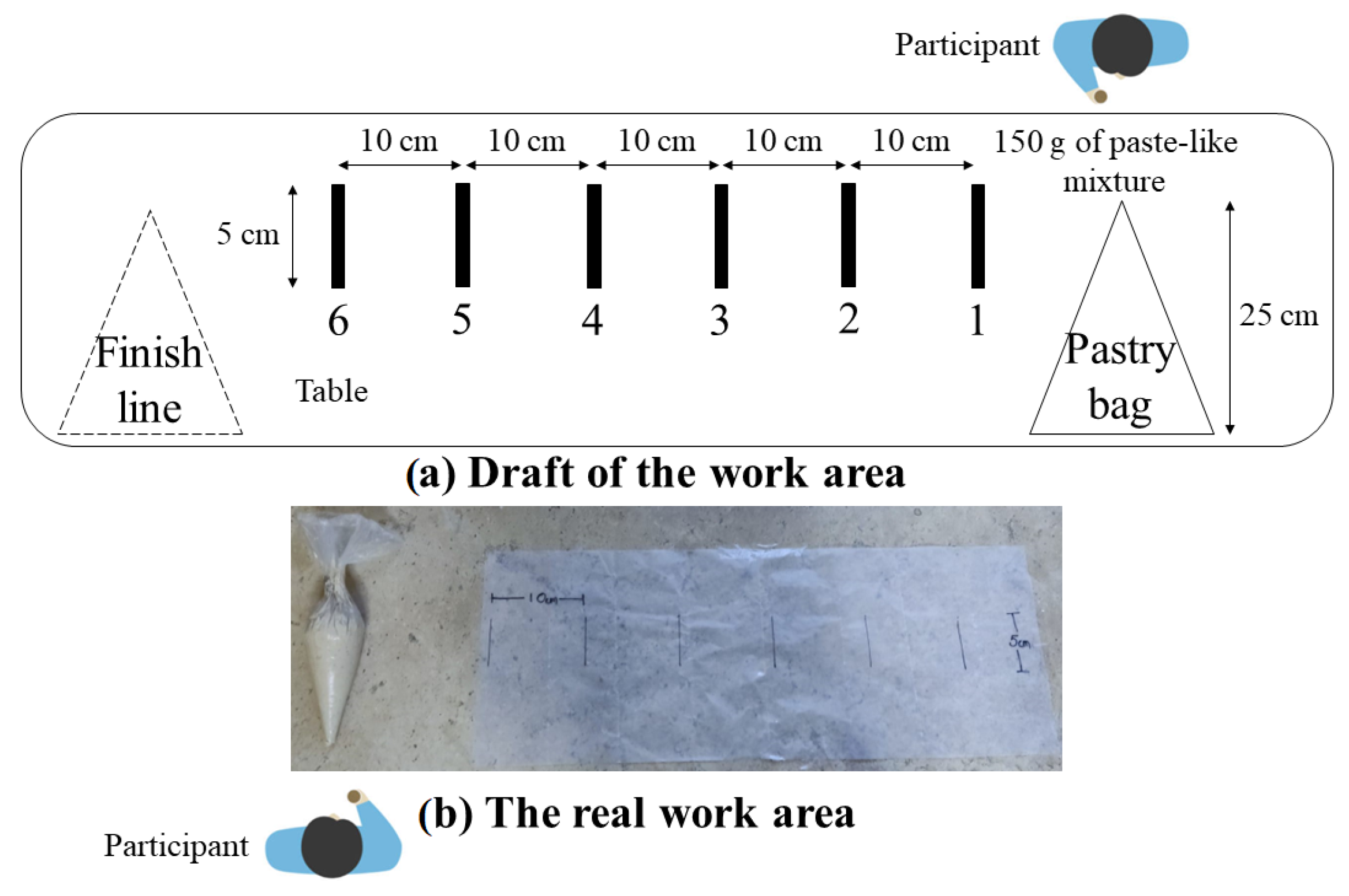

2.2.5. Preliminary Test

2.2.6. The Calibration Activity

2.2.7. The Main Test

2.2.8. Visual Data Analysis

- sEMG behavior: the signal amplitude moves away from zero each time there is an exertion, and the resulting burst is well defined.

- Activity periods: amplitudes remain constant at a certain level while the exertion of force is sustained; otherwise, the device is probably misplaced. At least 5 of the 8 channels of the MindRove armband must show a clear amplitude to obtain relevant muscle information and avoid introducing noise.

- Inactivity periods: when the forearm muscles are inactive, the baseline of the raw sEMG remains at zero. If the baseline has an offset, magnitude-based computations are not valid, so the offset must be identified and corrected: the amplitudes of the rest periods should be averaged and the result subtracted from each data point. Random spikes can appear during periods of muscle inactivity; however, they should not exceed 15 μV, and the mean baseline noise varies between 1 and 3.5 μV [44]. It is recommended to average 500 samples, or one second, of the inactivity period to estimate the baseline noise.

- Amplitude range: normal amplitudes can range from −5000 to 5000 μV, limits that athletes easily reach [44]. Sharp peaks are probably noise that can be mitigated with a digital filter. If the peaks still have a considerable amplitude after filtering, it is recommended to treat them as outliers and remove them.

- Peak frequency: this is often located between 50 and 80 Hz [48]. As the 60 Hz notch filter was applied, the amplitude at that frequency and its harmonics will be zero.

- Noise analysis: most of the sEMG power lies between 10 and 250 Hz, with the largest share between 20 and 150 Hz. The amplitude rises rapidly above 10 Hz and decays to zero beyond 200 Hz [44]. Power peaks outside this band are considered noise due to electrode motion artifacts, and power peaks with substantial amplitude at 50 Hz (Europe) or 60 Hz (USA and Mexico) represent power line interference; this noise can be attenuated by applying digital filters [49].
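
The inactivity-period checks in the list above can be sketched in a few lines. This is an illustrative sketch only, assuming the signal is already expressed in μV and that a rest segment of at least 500 samples is available. The function names are hypothetical, and "mean baseline noise" is interpreted here as the mean absolute deviation from the estimated offset, which is an assumption rather than a definition taken from the text.

```python
from statistics import fmean

REST_SAMPLES = 500      # "500 samples or one second" of inactivity
MAX_SPIKE_UV = 15.0     # rest-period spikes should not exceed this value
MAX_NOISE_UV = 3.5      # upper end of the 1 to 3.5 uV baseline noise range [44]


def check_rest_period(rest_uv):
    """Estimate the baseline offset and noise from a rest segment (in uV)."""
    segment = rest_uv[:REST_SAMPLES]
    offset = fmean(segment)
    centered = [x - offset for x in segment]
    # Assumption: noise measured as mean absolute deviation from the offset.
    mean_noise = fmean(abs(x) for x in centered)
    largest_spike = max(abs(x) for x in centered)
    return {
        "offset_uv": offset,
        "mean_noise_uv": mean_noise,
        "spikes_ok": largest_spike <= MAX_SPIKE_UV,
        "noise_ok": mean_noise <= MAX_NOISE_UV,
    }


def correct_baseline(signal_uv, rest_uv):
    """Subtract the rest-period mean from every data point."""
    offset = fmean(rest_uv[:REST_SAMPLES])
    return [x - offset for x in signal_uv], offset


# Synthetic rest segment: a 12 uV offset with +/-2 uV alternating noise.
rest = [12.0 + (2.0 if i % 2 else -2.0) for i in range(500)]
report = check_rest_period(rest)
corrected, offset = correct_baseline([12.0, 62.0], rest)
```

On the synthetic segment, the estimated offset is 12 μV and the corrected samples are 0 and 50 μV.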

2.2.9. Data Processing

3. Results

4. Discussion

- With the device off, palpate the superficial muscles of the forearm in a neutral posture; try to match the widest part of the muscle with at least five channels; the reference electrode must be over a bony region, for example, the radius.

- Turn on the device and connect it to the host PC via WiFi, open the VoD, and wait one minute for the signal to stabilize.

- Apply all the filters available in the VoD, and wait one minute for the signal to stabilize.

- Sustain three medium-force grips in neutral posture, each held for five seconds and separated by five-second pauses, starting and ending in neutral posture.

- Check whether at least five channels show a well-defined muscular signal; otherwise, reposition the armband and repeat the exercise until well-defined muscular signals are obtained.

- Apply filters and wait a minute in neutral posture before starting any recording.
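
The channel check in the procedure above could be automated along the following lines. This is a rough sketch under stated assumptions: the text does not define "well-defined" numerically, so the ratio of grip RMS to rest RMS and the threshold of 3 used here are hypothetical choices, as are the function names.

```python
import math

MIN_GOOD_CHANNELS = 5   # "at least five channels" from the procedure above
RATIO_THRESHOLD = 3.0   # hypothetical: grip RMS must be 3x the rest RMS


def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def well_defined_channels(grip_by_channel, rest_by_channel,
                          threshold=RATIO_THRESHOLD):
    """Return the 1-based channel numbers whose grip activity stands out."""
    good = []
    pairs = zip(grip_by_channel, rest_by_channel)
    for channel, (grip, rest) in enumerate(pairs, start=1):
        grip_rms = rms(grip)
        if grip_rms > 0 and grip_rms >= threshold * rms(rest):
            good.append(channel)
    return good


def placement_ok(grip_by_channel, rest_by_channel):
    """True if enough channels show a clear signal; else reposition."""
    good = well_defined_channels(grip_by_channel, rest_by_channel)
    return len(good) >= MIN_GOOD_CHANNELS


# Synthetic example: channels 1 to 5 respond strongly, 6 to 8 barely.
rest_data = [[2.0, -2.0] * 50 for _ in range(8)]
grip_data = ([[50.0, -50.0] * 50 for _ in range(5)]
             + [[3.0, -3.0] * 50 for _ in range(3)])
good_channels = well_defined_channels(grip_data, rest_data)
ok = placement_ok(grip_data, rest_data)
```

In practice the threshold would need to be tuned against the visual criteria described in Section 2.2.8.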

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| WRMSDs | Work-related musculoskeletal disorders |

| CTS | Carpal tunnel syndrome |

| OCRA | Occupational repetitive action |

| JSI | Job Strain Index |

| ACGIH TLV | American Conference of Governmental Industrial Hygienists Threshold Limit Value |

| HAL | Hand activity level |

| DOFs | Degrees of freedom |

| IMU | Inertial measurement unit |

| LSBs | Least significant bits |

| VoD | MindRove Visualizer on Desktop |

| PSD | Power spectral density |

| sEMG | Surface electromyography |

| MVC | Maximum voluntary contraction |

| RMS | Root mean square |

| ML | Machine learning |

| SVM | Support vector machine |

| QSVM | Quadratic support vector machine |

| FFT | Fast Fourier transform |

| KNN | K-nearest neighbor |

| RF | Random forest |

| ANN | Artificial neural network |

| GB | Gradient boost |

| MLP | Multi-layer perceptron neural network |

| DT | Decision tree |

| CNN | Convolutional neural network |

| HMM | Hidden Markov model |

| LDA | Linear discriminant analysis |

| LSTM | Long short-term memory algorithm |

| ANOVA | Analysis of variance |

References

1. National Institute for Occupational Safety and Health. How to Prevent Musculoskeletal Disorders. 2012. Available online: https://www.cdc.gov/niosh/docs/2012-120/default.html (accessed on 28 June 2021).

2. Podniece, Z.; Heuvel, S.; Blatter, B. Work-Related Musculoskeletal Disorders: Prevention Report; European Agency for Safety and Health at Work: Bilbao, Spain, 2008.

3. ISO 11228-3:2007; Ergonomics—Manual Handling—Part 3: Handling of Low Loads at High Frequency. International Organization for Standardization: Geneva, Switzerland, 2007.

4. Moore, J.S.; Garg, A. The strain index: A proposed method to analyze jobs for risk of distal upper extremity disorders. Am. Ind. Hyg. Assoc. J. 1995, 56, 443–458.

5. Antwi-Afari, M.F.; Li, H.; Umer, W.; Yu, Y.; Xing, X. Construction activity recognition and ergonomic risk assessment using a wearable insole pressure system. J. Constr. Eng. Manag. 2020, 146, 04020077.

6. Rybnikár, F.; Kačerová, I.; Hořejší, P.; Šimon, M. Ergonomics Evaluation Using Motion Capture Technology—Literature Review. Appl. Sci. 2022, 13, 162.

7. Bortolini, M.; Gamberi, M.; Pilati, F.; Regattieri, A. Automatic assessment of the ergonomic risk for manual manufacturing and assembly activities through optical motion capture technology. Procedia CIRP 2018, 72, 81–86.

8. Aiello, G.; Certa, A.; Abusohyon, I.; Longo, F.; Padovano, A. Machine Learning approach towards real time assessment of hand-arm vibration risk. In Proceedings of the 17th IFAC Symposium on Information Control Problems in Manufacturing INCOM 2021, Budapest, Hungary, 7–9 June 2021; Volume 54, pp. 1187–1192.

9. Cerqueira, S.M.; Moreira, L.; Alpoim, L.; Siva, A.; Santos, C.P. An inertial data-based upper body posture recognition tool: A machine learning study approach. In Proceedings of the 2020 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Ponta Delgada, Portugal, 15–17 April 2020; pp. 4–9.

10. Wang, M.; Zhao, C.; Barr, A.; Yu, S.; Kapellusch, J.; Harris Adamson, C. Hand Posture and Force Estimation Using Surface Electromyography and an Artificial Neural Network. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications Sage CA: Los Angeles, CA, USA, 2020; Volume 64, pp. 1247–1248.

11. Lim, S.; D’Souza, C. A narrative review on contemporary and emerging uses of inertial sensing in occupational ergonomics. Int. J. Ind. Ergon. 2020, 76, 102937.

12. Bangaru, S.S.; Wang, C.; Busam, S.A.; Aghazadeh, F. ANN-based automated scaffold builder activity recognition through wearable EMG and IMU sensors. Autom. Constr. 2021, 126, 103653.

13. Sherafat, B.; Ahn, C.R.; Akhavian, R.; Behzadan, A.H.; Golparvar-Fard, M.; Kim, H.; Lee, Y.C.; Rashidi, A.; Azar, E.R. Automated methods for activity recognition of construction workers and equipment: State-of-the-art review. J. Constr. Eng. Manag. 2020, 146, 03120002.

14. Jung, M.; Chi, S. Human activity classification based on sound recognition and residual convolutional neural network. Autom. Constr. 2020, 114, 103177.

15. Sers, R.; Forrester, S.; Moss, E.; Ward, S.; Ma, J.; Zecca, M. Validity of the Perception Neuron inertial motion capture system for upper body motion analysis. Measurement 2020, 149, 107024.

16. Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical Human Activity Recognition Using Wearable Sensors. Sensors 2015, 15, 31314–31338.

17. Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

18. Maurer-Grubinger, C.; Holzgreve, F.; Fraeulin, L.; Betz, W.; Erbe, C.; Brueggmann, D.; Wanke, E.M.; Nienhaus, A.; Groneberg, D.A.; Ohlendorf, D. Combining Ergonomic Risk Assessment (RULA) with Inertial Motion Capture Technology in Dentistry—Using the Benefits from Two Worlds. Sensors 2021, 21, 4077.

19. Conforti, I.; Mileti, I.; Del Prete, Z.; Palermo, E. Measuring biomechanical risk in lifting load tasks through wearable system and machine-learning approach. Sensors 2020, 20, 1557.

20. Olivas-Padilla, B.E.; Manitsaris, S.; Menychtas, D.; Glushkova, A. Stochastic-Biomechanic Modeling and Recognition of Human Movement Primitives, in Industry, Using Wearables. Sensors 2021, 21, 2497.

21. Côté-Allard, U.; Gagnon-Turcotte, G.; Laviolette, F.; Gosselin, B. A low-cost, wireless, 3-d-printed custom armband for semg hand gesture recognition. Sensors 2019, 19, 2811.

22. Manjarres, J.; Narvaez, P.; Gasser, K.; Percybrooks, W.; Pardo, M. Physical workload tracking using human activity recognition with wearable devices. Sensors 2020, 20, 39.

23. Rodríguez-Vega, G.; Rodríguez-Vega, D.A.; Zaldívar-Colado, X.P.; Zaldívar-Colado, U.; Castillo-Ortega, R. A Motion Capture System for Hand Movement Recognition. In Proceedings of the 21st Congress of the International Ergonomics Association (IEA 2021); Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2021; Volume 223, pp. 114–121.

24. Zare, M.; Bodin, J.; Sagot, J.C.; Roquelaure, Y. Quantification of Exposure to Risk Postures in Truck Assembly Operators: Neck, Back, Arms and Wrists. Int. J. Environ. Res. Public Health 2020, 17, 6062.

25. Donisi, L.; Cesarelli, G.; Coccia, A.; Panigazzi, M.; Capodaglio, E.M.; D’Addio, G. Work-Related Risk Assessment According to the Revised NIOSH Lifting Equation: A Preliminary Study Using a Wearable Inertial Sensor and Machine Learning. Sensors 2021, 21, 2593.

26. Giannini, P.; Bassani, G.; Avizzano, C.A.; Filippeschi, A. Wearable sensor network for biomechanical overload assessment in manual material handling. Sensors 2020, 20, 3877.

27. Jahanbanifar, S.; Akhavian, R. Evaluation of wearable sensors to quantify construction workers muscle force: An ergonomic analysis. In Proceedings of the 2018 Winter Simulation Conference (WSC), Gothenburg, Sweden, 9–12 December 2018; pp. 3921–3929.

28. Malaisé, A.; Maurice, P.; Colas, F.; Ivaldi, S. Activity recognition for ergonomics assessment of industrial tasks with automatic feature selection. IEEE Robot. Autom. Lett. 2019, 4, 1132–1139.

29. Hosseinian, S.M.; Zhu, Y.; Mehta, R.K.; Erraguntla, M.; Lawley, M.A. Static and dynamic work activity classification from a single accelerometer: Implications for ergonomic assessment of manual handling tasks. IISE Trans. Occup. Ergon. Hum. Factors 2019, 7, 59–68.

30. Mudiyanselage, S.E.; Nguyen, P.H.D.; Rajabi, M.S.; Akhavian, R. Automated workers’ ergonomic risk assessment in manual material handling using sEMG wearable sensors and machine learning. Electronics 2021, 10, 2558.

31. Tao, W.; Lai, Z.H.; Leu, M.C.; Yin, Z. Worker activity recognition in smart manufacturing using IMU and sEMG signals with convolutional neural networks. Procedia Manuf. 2018, 26, 1159–1166.

32. Taborri, J.; Bordignon, M.; Marcolin, F.; Donati, M.; Rossi, S. Automatic identification and counting of repetitive actions related to an industrial worker. In Proceedings of the 2019 II Workshop on Metrology for Industry 4.0 and IoT (MetroInd4.0&IoT), Naples, Italy, 4–6 June 2019; pp. 394–399.

33. Tsao, L.; Nussbaum, M.A.; Kim, S.; Ma, L. Modelling performance during repetitive precision tasks using wearable sensors: A data-driven approach. Ergonomics 2020, 63, 831–849.

34. Yang, K.; Ahn, C.R.; Kim, H. Deep learning-based classification of work-related physical load levels in construction. Adv. Eng. Inform. 2020, 45, 101104.

35. Zhao, J.; Obonyo, E. Convolutional long short-term memory model for recognizing construction workers’ postures from wearable inertial measurement units. Adv. Eng. Inform. 2020, 46, 101177.

36. Feix, T.; Romero, J.; Schmiedmayer, H.B.; Dollar, A.M.; Kragic, D. The grasp taxonomy of human grasp types. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 66–77.

37. MindRove. Armband, Emg-Based Gesture Control Armband. 2021. Available online: https://mindrove.com/armband/ (accessed on 25 August 2021).

38. Xiaomi. POCO X3 Pro. 2022. Available online: https://www.mi.com/mx/product/poco-x3-pro/specs (accessed on 14 October 2022).

39. Stegeman, D.; Hermens, H. Standards for surface electromyography: The European project Surface EMG for non-invasive assessment of muscles (SENIAM). Enschede Roessingh Res. Dev. 2007, 10, 8–12.

40. Anastasiev, A.; Kadone, H.; Marushima, A.; Watanabe, H.; Zaboronok, A.; Watanabe, S.; Matsumura, A.; Suzuki, K.; Matsumaru, Y.; Ishikawa, E. Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides. Sensors 2022, 22, 8733.

41. Brull, S.J.; Silverman, D.G.; Naguib, M. Chapter 15—Monitoring Neuromuscular Blockade. In Anesthesia Equipment, 2nd ed.; Ehrenwerth, J., Eisenkraft, J.B., Berry, J.M., Eds.; W.B. Saunders: Philadelphia, PA, USA, 2013; pp. 307–327.

42. Takala, E.P.; Toivonen, R. Placement of forearm surface EMG electrodes in the assessment of hand loading in manual tasks. Ergonomics 2013, 56, 1159–1166.

43. De Luca, C.J. The use of surface electromyography in biomechanics. J. Appl. Biomech. 1997, 13, 135–163.

44. Konrad, P. The abc of emg. A Pract. Introd. Kinesiol. Electromyogr. 2005, 1, 30–35.

45. Chuang, T.D.; Acker, S.M. Comparing functional dynamic normalization methods to maximal voluntary isometric contractions for lower limb EMG from walking, cycling and running. J. Electromyogr. Kinesiol. 2019, 44, 86–93.

46. Tabard-Fougère, A.; Rose-Dulcina, K.; Pittet, V.; Dayer, R.; Vuillerme, N.; Armand, S. EMG normalization method based on grade 3 of manual muscle testing: Within- and between-day reliability of normalization tasks and application to gait analysis. Gait Posture 2018, 60, 6–12.

47. Salvendy, G. Handbook of Human Factors and Ergonomics; John Wiley & Sons: Hoboken, NJ, USA, 2012.

48. Motion Lab Systems. EMG Analysis Software. 2022. Available online: https://www.motion-labs.com/software_emg_analysis.html (accessed on 15 November 2022).

49. McManus, L.; De Vito, G.; Lowery, M.M. Analysis and biophysics of surface EMG for physiotherapists and kinesiologists: Toward a common language with rehabilitation engineers. Front. Neurol. 2020, 11, 576729.

50. Nath, N.D.; Chaspari, T.; Behzadan, A.H. Automated ergonomic risk monitoring using body-mounted sensors and machine learning. Adv. Eng. Inform. 2018, 38, 514–526.

51. Erickson, B.J.; Kitamura, F. Magician’s corner: 9. Performance metrics for machine learning models. Radiol. Artif. Intell. 2021, 3, e200126.

| Study | Data Type | Activities Recognized | Inertial Measurements Procedures | sEMG Procedures | Model and Accuracy |

|---|---|---|---|---|---|

| [8] | Acceleration. | Six operations performed by rotating tools. | Vibration spectrum extracted by means of FFT. | - | KNN, 94%. |

| [5] | Acceleration and foot plantar pressure. | Four manual material handling (MMH) tasks. | - | - | RF, 97.6%. |

| [12] | Inertial measurements and sEMG. | Fifteen scaffold builder activities. | Fusing via concatenation. Annotation. Z-score standardization. | EMG reshaping. Fusing via concatenation. Annotation. Z-score standardization. | ANN, 93.29%. |

| [9] | Acceleration. | Upper body postures (six static and ten transitional). | Low-pass filtering. Normalization with Z-Score and min–max. | - | Quadratic SVM, 97.3%. |

| [19] | Inertial measurements. | Four MMH tasks. | Low-pass filtering. | - | Quadratic SVM, 99.4%. |

| [25] | Acceleration and angular velocity. | Risk and non-risk lifting tasks. | - | - | GB, 95%. |

| [26] | Inertial measurements and sEMG. | MMH tasks. | Low-pass filtering. | Band-pass and notch filtering. | MLP, 92.1%. |

| [27] | Acceleration. | Pushing and pulling activities. | - | - | ANN, 87.5%. |

| [28] | Inertial measurements and force. | Seven activities in a pick-and-place task. | - | - | ANN, 94%. |

| [29] | Acceleration. | Fifteen activities in a MMH task. | First-order differencing transformation. | - | RF, 98.2%. |

| [30] | sEMG. | Weightlifting as MMH tasks. | - | - | DT, 99.98%. |

| [31] | Acceleration, angular velocity, and sEMG. | Six common activities in assembly tasks. | Resampling. Samples were stacked and shuffled. Transformation into an activity image by 2D discrete Fourier transform. Normalization. | Resampling. Averaging along each channel. | CNN, 97.6%. |

| [20] | Inertial measurements. | 28 general postures. | Low-pass filtering. | - | HMM, 95.05%. |

| [23] | Inertial measurements and force. | Five wrist postures. | Zero calibration. | - | DT, 95.9%. |

| [32] | Inertial measurements. | Eleven manual technical actions. | Low-pass filtering. Signal envelope extraction. | - | Quadratic SVM, 89.5%. |

| [33] | Inertial measurements, sEMG, and heart rate. | Errors while performing two assembly tasks. | Down-sampling. | Band-pass filtering. RMS amplitude. Normalization. | LDA, 94.1%. |

| [10] | sEMG. | Different gripping and pinching loads. | - | - | ANN, 82%. |

| [34] | Inertial measurements. | Different lifting loads in a masonry task. | Low-pass filtering. Resampling. | - | LSTM, 98.6%. |

| [35] | Acceleration and angular velocity. | Six construction workers’ postures. | Down-sampling. | - | Convolutional LSTM, 0.87 (F1 score). |

| Abbreviation | Treatment |

|---|---|

| A | Hampel identifier to remove the outliers of inertial measurements with 3 standard deviations and a window length of 1001. |

| B | Labeling. |

| C | Merging datasets from 28 subjects to train the model, i.e., 56 different datasets. The remaining four datasets were used to test the model, one at a time. |

| D | Feature extraction with the sliding window technique. A window size of 125 samples was used with 50% overlapping. The features extracted in the time domain were mean, minimum, maximum, standard deviation, variance, median, range (maximum–minimum), RMS value, and kurtosis. |

| E | Removal of the offset in the normalized sEMG signal by setting its baseline at the lowest data point in the time series. |

| F | Zero calibration for inertial measurements by calculating the mean of the first 500 samples and subtracting it from the rest of the data points. |
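
Treatments A, D, E, and F in the table above can be sketched with the Python standard library. This is an illustrative sketch, not the authors' implementation. Several details are assumptions: the Hampel threshold uses the common 1.4826 × MAD estimate of the standard deviation, windows are truncated at the signal edges, the 50% overlap of a 125-sample window is rounded to a step of 62 samples, the standard deviation and variance are population estimates, and kurtosis is the non-excess form m4/m2². All function names are hypothetical.

```python
import math
from statistics import fmean, median, pvariance


def hampel(x, window_length=1001, n_sigmas=3.0):
    """Treatment A: replace outliers with the local median."""
    half = window_length // 2
    out = list(x)
    for i, value in enumerate(x):
        window = x[max(0, i - half): i + half + 1]  # truncated at the edges
        med = median(window)
        mad = median(abs(v - med) for v in window)
        if abs(value - med) > n_sigmas * 1.4826 * mad:
            out[i] = med
    return out


def remove_offset(x):
    """Treatment E: set the baseline at the lowest data point."""
    lowest = min(x)
    return [v - lowest for v in x]


def zero_calibrate(x, n_ref=500):
    """Treatment F: subtract the mean of the first n_ref samples."""
    ref = fmean(x[:n_ref])
    return [v - ref for v in x]


def window_features(x, size=125, overlap=0.5):
    """Treatment D: time-domain features over sliding windows."""
    step = max(1, int(size * (1 - overlap)))
    rows = []
    for start in range(0, len(x) - size + 1, step):
        w = x[start:start + size]
        mu = fmean(w)
        var = pvariance(w, mu)
        m4 = fmean((v - mu) ** 4 for v in w)
        rows.append({
            "mean": mu, "min": min(w), "max": max(w),
            "std": math.sqrt(var), "var": var, "median": median(w),
            "range": max(w) - min(w),
            "rms": math.sqrt(fmean(v * v for v in w)),
            "kurtosis": m4 / var ** 2 if var > 0 else float("nan"),
        })
    return rows


# Synthetic gyroscope trace with one spike; a short window keeps the demo fast.
gyro = [0.5] * 600 + [40.0] + [0.5] * 600
cleaned = hampel(gyro, window_length=11)
features = window_features(zero_calibrate(cleaned))
```

Following the order given for the case where both E and F are applied (E, F, A, B, C, D), `remove_offset` would run on the sEMG channels and `zero_calibrate` on the inertial channels before the Hampel step.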

| Treatment E | Treatment F | Order of Treatments |

|---|---|---|

| Not applied | Not applied | A, B, C, D |

| Applied | Not applied | E, A, B, C, D |

| Not applied | Applied | F, A, B, C, D |

| Applied | Applied | E, F, A, B, C, D |

| Solution | E | F | Training Accuracy Fit | 95% CI |

|---|---|---|---|---|

| 1 | Applied | Applied | 0.9310 | (0.930460; 0.931540) |

| Subject | Run | Testing Accuracy (Best) | Testing Accuracy (Worst) | Precision (Best) | Precision (Worst) | Recall (Best) | Recall (Worst) | F1 Score (Best) | F1 Score (Worst) |
|---|---|---|---|---|---|---|---|---|---|
| 4 | 1 | 95.13% | 75.13% | 91.10% | 90.38% | 95.68% | 33.81% | 93.33% | 49.21% |
| 4 | 2 | 94.82% | 75.91% | 91.47% | 65.1% | 95.16% | 78.23% | 93.28% | 71.06% |
| 5 | 1 | 90.38% | 78.73% | 91.52% | 70.97% | 86.29% | 88% | 88.82% | 78.73% |
| 5 | 2 | 93.37% | 78.92% | 98.58% | 73.3% | 87.42% | 88.05% | 92.67% | 80% |
| Mean | | 93.42% | 77.17% | 93.17% | 74.94% | 91.14% | 72.02% | 92.03% | 69.71% |
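
As a quick consistency check on the table above, the F1 score can be recomputed from precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall, which this section does not state explicitly; the function name is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Subject 4, run 1, values in percent taken from the table above.
best_f1 = round(f1_score(91.10, 95.68), 2)    # reported: 93.33%
worst_f1 = round(f1_score(90.38, 33.81), 2)   # reported: 49.21%
```

Both recomputed values match the reported ones, which also illustrates why the worst case has a low F1 score despite a precision above 90%: the harmonic mean is dominated by the low recall.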

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Concha-Pérez, E.; Gonzalez-Hernandez, H.G.; Reyes-Avendaño, J.A. Physical Exertion Recognition Using Surface Electromyography and Inertial Measurements for Occupational Ergonomics. Sensors 2023, 23, 9100. https://doi.org/10.3390/s23229100
