Instance Segmentation and Ensemble Learning for Automatic Temperature Detection in Multiparous Sows

Abstract

1. Introduction

- (1) For segmentation of the thermal window region, an instance segmentation algorithm based on YOLOv5s and DeepLabv3+ is proposed. It incorporates the Convolutional Block Attention Module (CBAM) and the MobileNetv2 network to enable precise localization and rapid segmentation of the vulva region.

- (2) For temperature prediction, a random forest algorithm optimized by an adaptive genetic algorithm is used to build an inversion model that relates environmental parameters and vulvar temperature to rectal temperature, with the aim of predicting sow body temperature accurately.

- (3) Under practical farming conditions, test results show that the proposed sow temperature monitoring method can accurately predict the rectal temperature of sows in sheltered scenarios. This lays a foundation for health monitoring and estrus detection in sows.
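Contribution (1) combines a detector (YOLOv5s) with a segmenter (DeepLabv3+). One plausible reading is that the detector localizes the vulva and the segmenter delineates it inside the detected box. The sketch below shows only the glue step that turns such a crop-level mask into a temperature reading. It rests on assumptions not stated in this excerpt:

- the segmenter's mask has already been resized to the box size;
- the thermal frame is available as a per-pixel temperature matrix in °C;
- the region is summarized by its maximum and mean temperature.

The function names `paste_crop_mask` and `region_temperature` are hypothetical.

```python
import numpy as np


def paste_crop_mask(crop_mask, box, image_shape):
    """Place a mask predicted on a detector crop back into full-frame coordinates.

    crop_mask:   boolean array of shape (y2 - y1, x2 - x1), already resized to the box
    box:         (x1, y1, x2, y2) pixel box from the detector; x2 and y2 are exclusive
    image_shape: (H, W) of the full thermal frame
    """
    x1, y1, x2, y2 = box
    if crop_mask.shape != (y2 - y1, x2 - x1):
        raise ValueError("crop mask does not match the box size")
    full = np.zeros(image_shape, dtype=bool)
    full[y1:y2, x1:x2] = crop_mask
    return full


def region_temperature(temps, mask):
    """Return (max, mean) of the per-pixel temperatures selected by `mask`."""
    values = temps[mask]
    if values.size == 0:
        raise ValueError("mask selects no pixels")
    return float(values.max()), float(values.mean())


# Toy 6x8 thermal frame (deg C) with a warm patch where the detector fired.
temps = np.full((6, 8), 30.0)
temps[2:4, 3:6] = [[37.0, 37.5, 36.8],
                   [37.2, 38.1, 36.9]]
box = (3, 2, 6, 4)  # x1, y1, x2, y2
crop_mask = np.array([[True, True, False],
                      [True, True, True]])
full_mask = paste_crop_mask(crop_mask, box, temps.shape)
t_max, t_mean = region_temperature(temps, full_mask)
print(t_max, round(t_mean, 2))  # 38.1 37.34
```

Keeping detection and segmentation separate means the segmenter only sees a small crop, which is one way such a pipeline can stay fast. Whether the paper uses the maximum, the mean or another statistic as "vulvar temperature" is not stated here.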
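Contribution (1) also adds CBAM. This excerpt does not say where the module sits inside YOLOv5s or DeepLabv3+, so the sketch below shows only the module itself as defined by Woo et al. (2018):

1. **Channel attention.** Average- and max-pooled channel descriptors pass through a shared two-layer MLP and a sigmoid.
2. **Spatial attention.** A 7×7 convolution runs over channel-wise average and max maps.

This is a NumPy forward pass with random stand-in weights. There is no training, biases are omitted, and a small reduction ratio `r` is used for the toy input. It is not the authors' implementation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w0, w1):
    """Channel weights of shape (C, 1, 1) for a feature map `feat` of shape (C, H, W).

    w0 (C/r x C) and w1 (C x C/r) form the MLP shared by both pooled descriptors.
    """
    def mlp(v):
        return w1 @ np.maximum(w0 @ v, 0.0)  # W1(ReLU(W0 v)), biases omitted

    avg = feat.mean(axis=(1, 2))  # (C,) global average pooling
    mx = feat.max(axis=(1, 2))    # (C,) global max pooling
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def conv2d_same(x, kernel):
    """Zero-padded 'same' cross-correlation of x (2, H, W) with kernel (2, k, k), odd k."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out


def spatial_attention(feat, kernel):
    """Spatial weights of shape (1, H, W) from channel-wise average and max maps."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    return sigmoid(conv2d_same(pooled, kernel))[None, :, :]


def cbam(feat, w0, w1, kernel):
    """Channel attention followed by spatial attention."""
    refined = feat * channel_attention(feat, w0, w1)     # F' = Mc(F) * F
    return refined * spatial_attention(refined, kernel)  # F'' = Ms(F') * F'


rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 6, 2  # channels, height, width, reduction ratio
feat = rng.standard_normal((C, H, W))
w0 = 0.1 * rng.standard_normal((C // r, C))
w1 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, 7, 7))  # 7x7 kernel as in the CBAM paper
out = cbam(feat, w0, w1, kernel)
print(out.shape)  # (8, 5, 6)
```

Both attention maps lie in (0, 1), so CBAM only rescales features and never changes their shape. This is why it can be dropped into an existing backbone or decoder without altering the surrounding layers.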
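Contribution (2) tunes a random forest with an adaptive genetic algorithm. This excerpt does not specify the chromosome encoding, which hyperparameters are searched, or the adaptation rule. The sketch below therefore makes these assumptions:

- integer-valued hyperparameters within fixed bounds;
- binary tournament selection;
- uniform crossover;
- single-gene reset mutation;
- elitism;
- adaptive crossover and mutation probabilities in the style of Srinivas and Patnaik (1994).

A toy objective stands in for the model-validation score. `adaptive_ga` and `toy_fitness` are hypothetical names.

```python
import numpy as np


def adaptive_ga(fitness, bounds, pop_size=20, generations=40,
                k1=1.0, k2=0.5, k3=1.0, k4=0.5, seed=0):
    """Maximize `fitness` over integer vectors inside inclusive `bounds`.

    Crossover (pc) and mutation (pm) probabilities adapt per individual:
    above-average individuals get rates that shrink toward 0 as they approach
    the current best, while below-average ones get the fixed rates k3 and k4.
    """
    rng = np.random.default_rng(seed)
    lo = np.array([b[0] for b in bounds])
    hi = np.array([b[1] for b in bounds])
    n_genes = len(bounds)
    pop = rng.integers(lo, hi + 1, size=(pop_size, n_genes))
    best, best_f = None, -np.inf

    def evaluate(population):
        nonlocal best, best_f
        scores = np.array([fitness(ind) for ind in population], dtype=float)
        if scores.max() > best_f:
            best_f, best = scores.max(), population[scores.argmax()].copy()
        return scores

    for _ in range(generations):
        f = evaluate(pop)
        f_max, f_avg = f.max(), f.mean()
        spread = max(f_max - f_avg, 1e-12)  # avoid division by zero

        # Binary tournament selection builds the mating pool.
        pairs = rng.integers(0, pop_size, size=(pop_size, 2))
        winners = np.where(f[pairs[:, 0]] >= f[pairs[:, 1]], pairs[:, 0], pairs[:, 1])
        parents, pf = pop[winners], f[winners]
        children = parents.copy()

        # Adaptive uniform crossover on consecutive pairs.
        for i in range(0, pop_size - 1, 2):
            f_pair = max(pf[i], pf[i + 1])
            pc = k1 * (f_max - f_pair) / spread if f_pair >= f_avg else k3
            if rng.random() < pc:
                swap = rng.random(n_genes) < 0.5
                children[i, swap] = parents[i + 1, swap]
                children[i + 1, swap] = parents[i, swap]

        # Adaptive mutation: reset one random gene; parent fitness is a proxy.
        for i in range(pop_size):
            pm = k2 * (f_max - pf[i]) / spread if pf[i] >= f_avg else k4
            if rng.random() < pm:
                g = rng.integers(n_genes)
                children[i, g] = rng.integers(lo[g], hi[g] + 1)

        children[-1] = best  # elitism: carry the best-so-far individual forward
        pop = children

    evaluate(pop)  # score the final generation as well
    return best, best_f


# Toy objective standing in for a validation score (peak at 120, 8).
def toy_fitness(x):
    return -((x[0] - 120) / 100) ** 2 - ((x[1] - 8) / 10) ** 2


best, best_f = adaptive_ga(toy_fitness, bounds=[(10, 300), (2, 20)])
print(best, best_f)
```

To apply this to the inversion model, the fitness could return the mean of scikit-learn's `cross_val_score(RandomForestRegressor(n_estimators=int(x[0]), max_depth=int(x[1])), X, y, scoring="neg_mean_absolute_error")`. Here `X` would hold environmental parameters and vulvar temperature, and `y` would hold rectal temperature. This choice of hyperparameters and features is illustrative, not taken from the paper.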

2. Materials and Methods

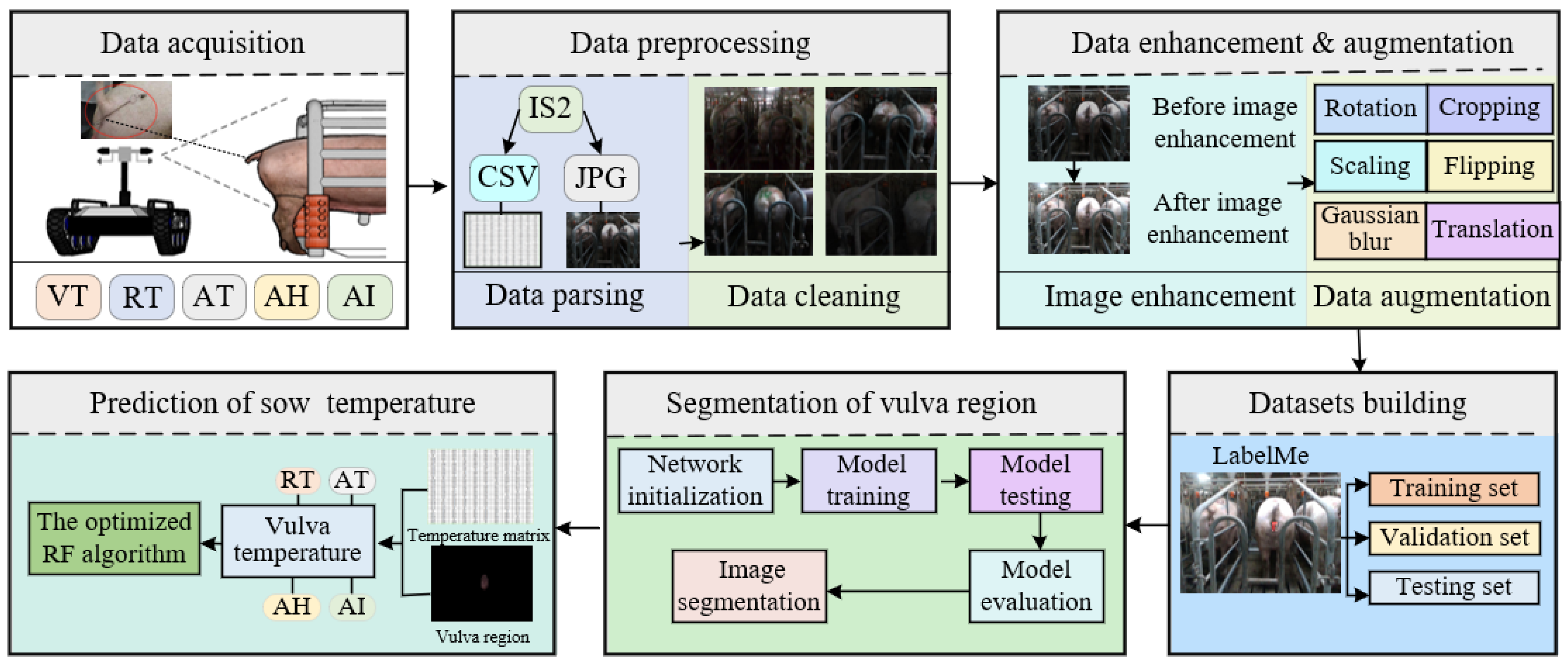

2.1. Overall Workflow

2.2. Animals, Housing, and Data Collection

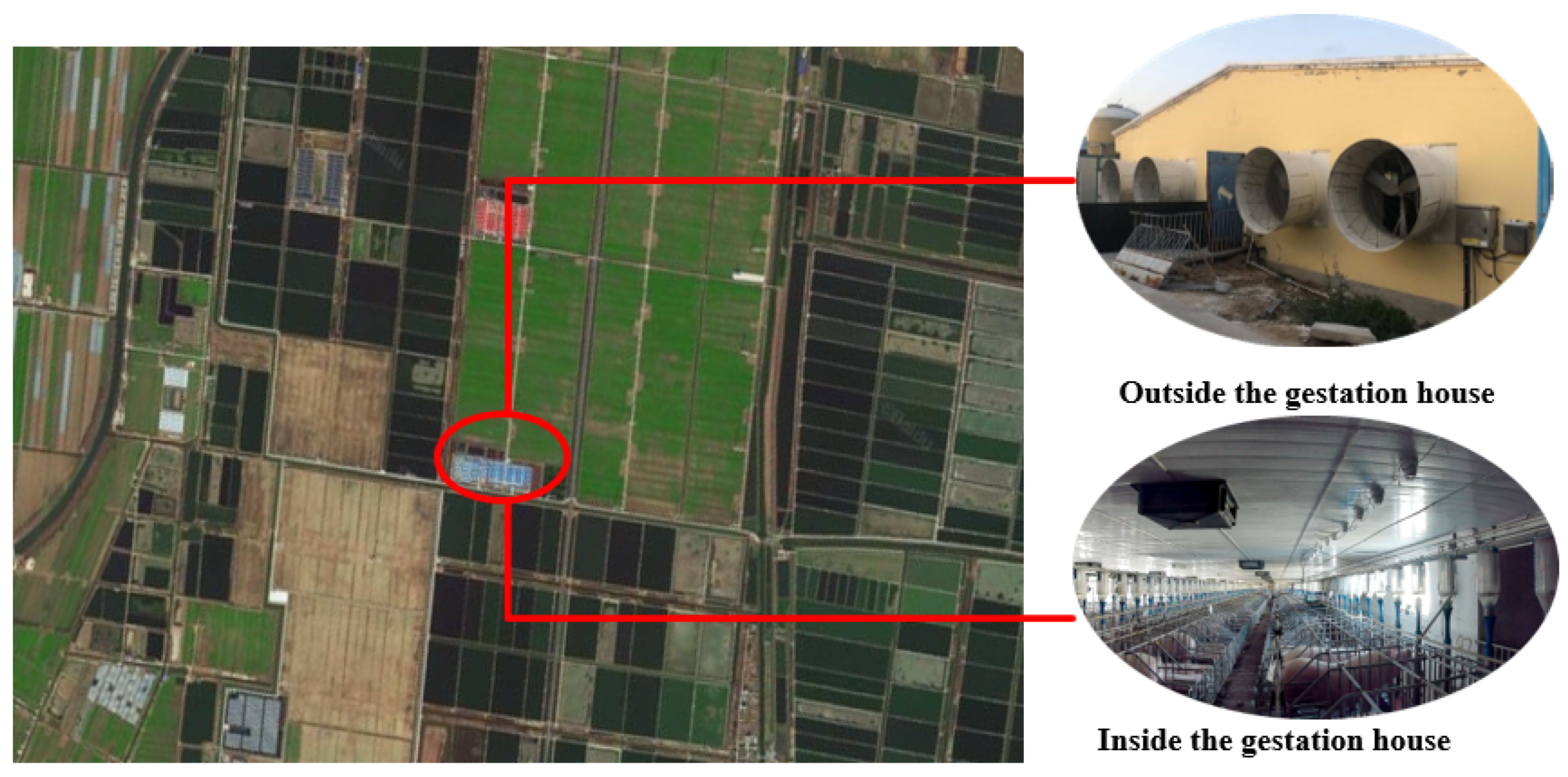

2.2.1. Experimental Animals, Site, and Time

2.2.2. Data Acquisition

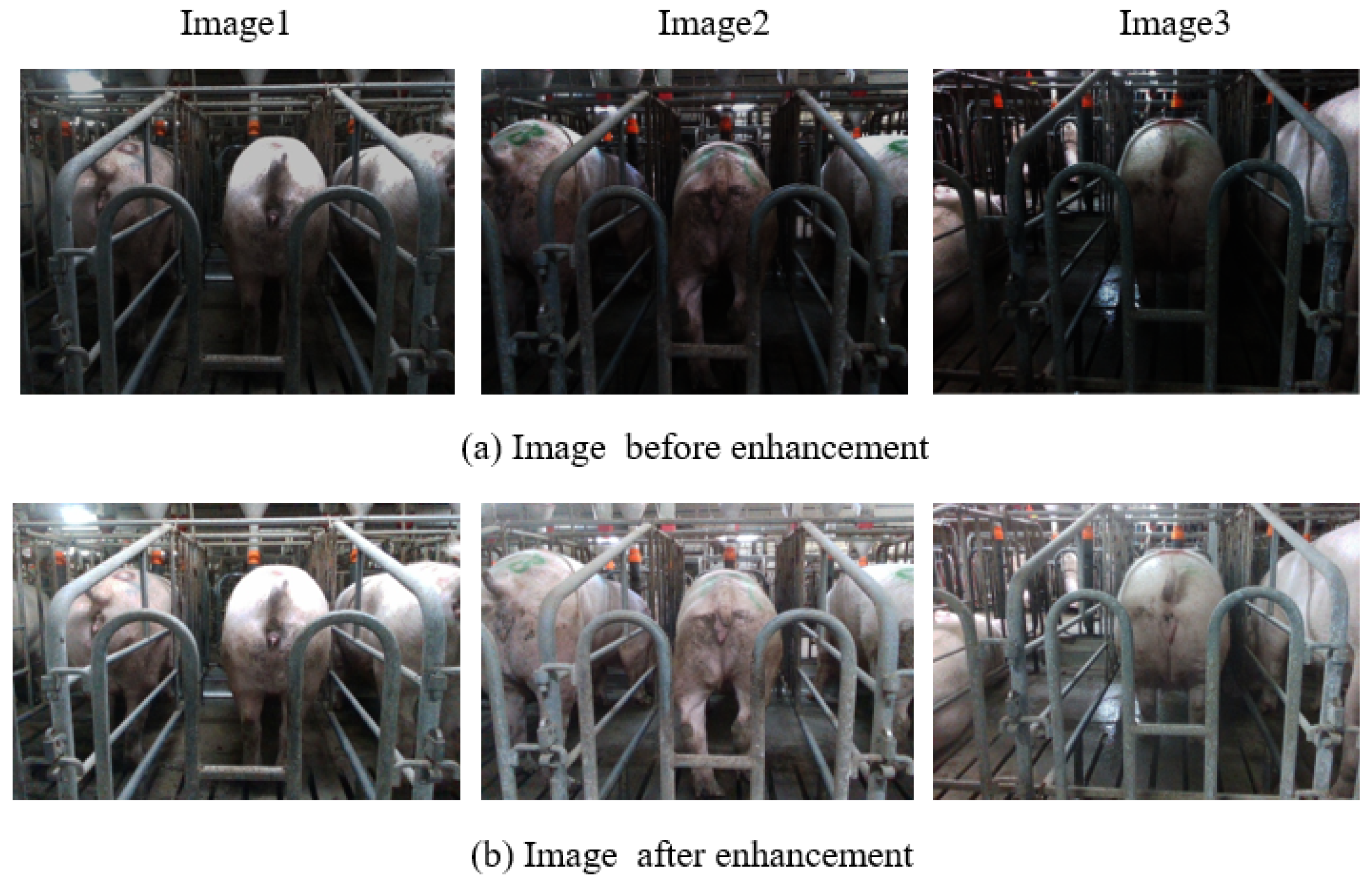

2.2.3. Data Preprocessing and Dataset Building

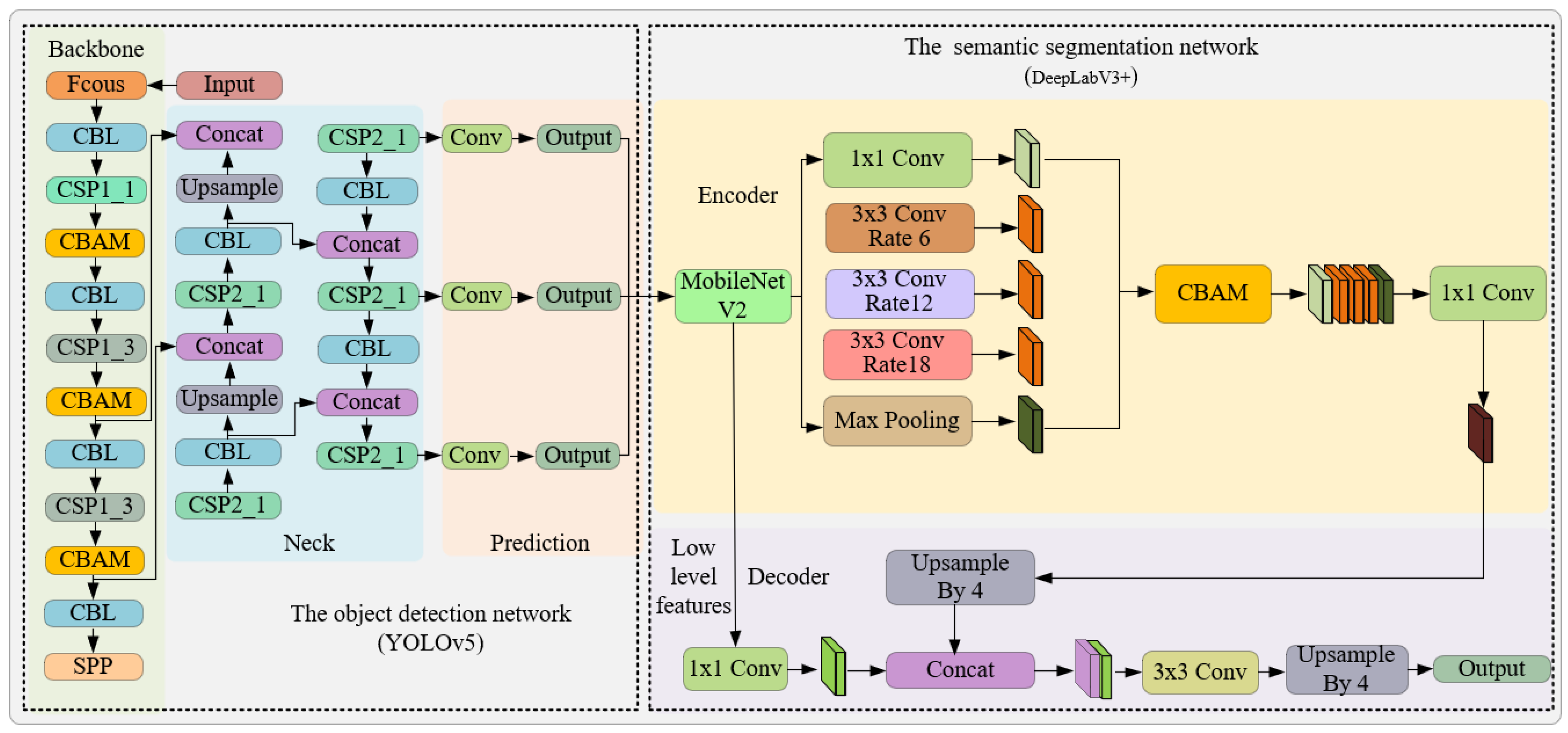

2.3. Sow Vulva Region Segmentation Model Construction

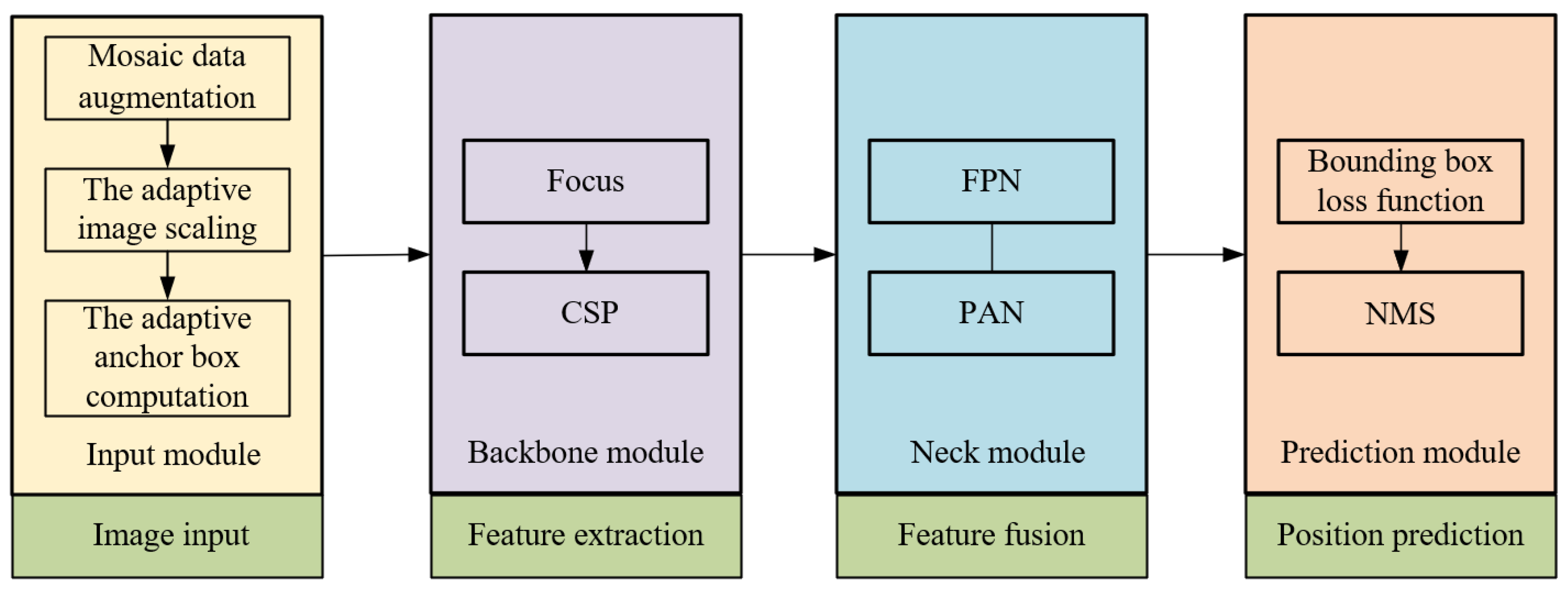

2.3.1. YOLOv5 Network Model

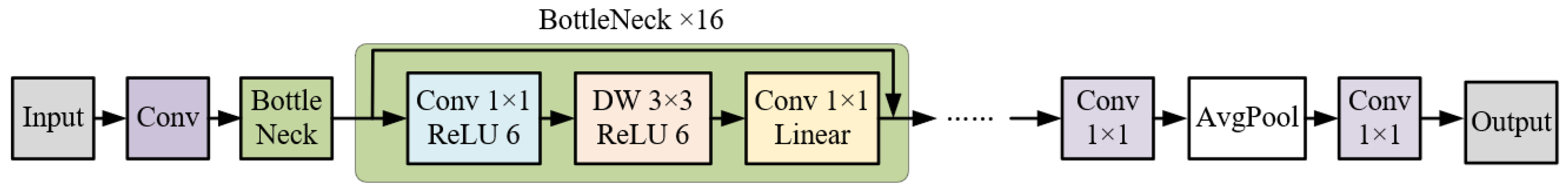

2.3.2. DeepLabv3+ Network Model

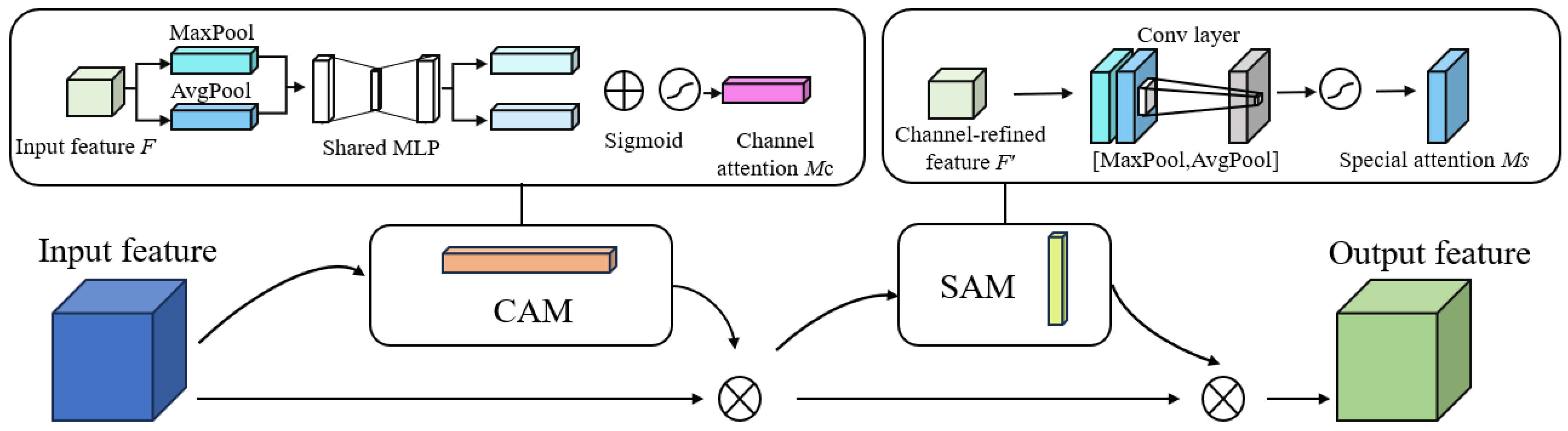

2.3.3. CBAM Module

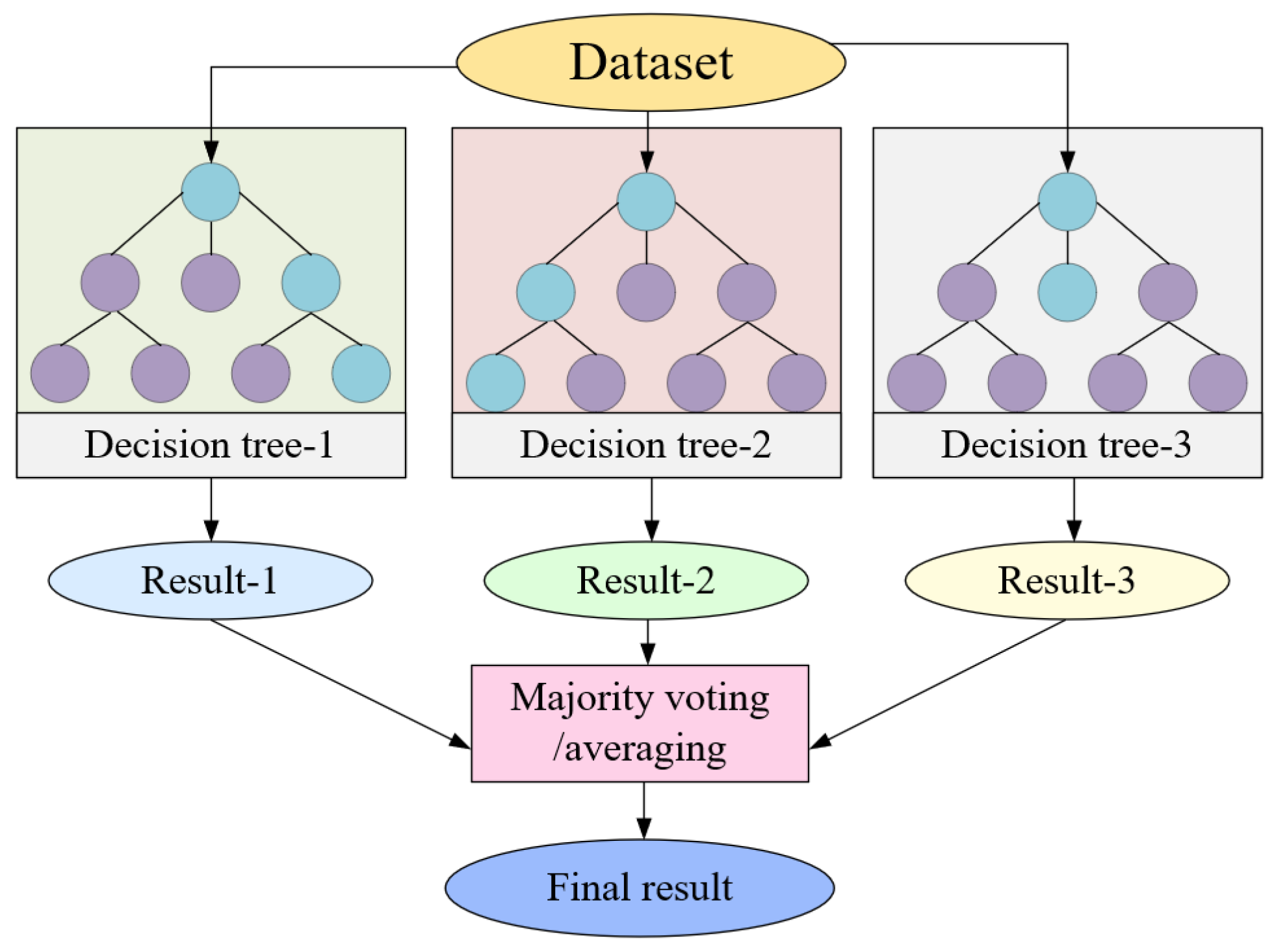

2.4. Model Construction for Prediction of Sow Temperature

2.5. Model Evaluation Metrics

3. Results

3.1. Segmentation Model Analysis

3.1.1. Model Training

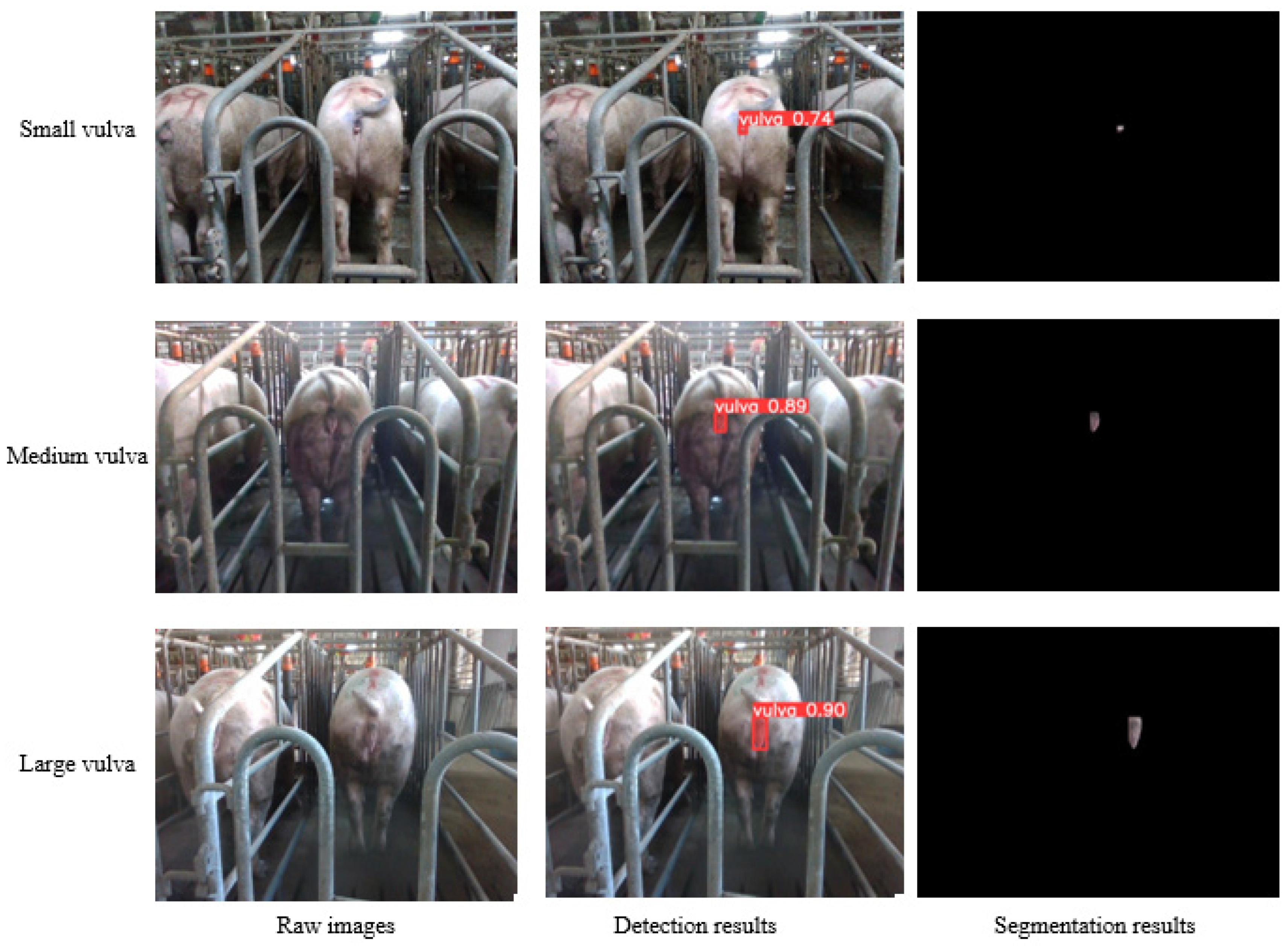

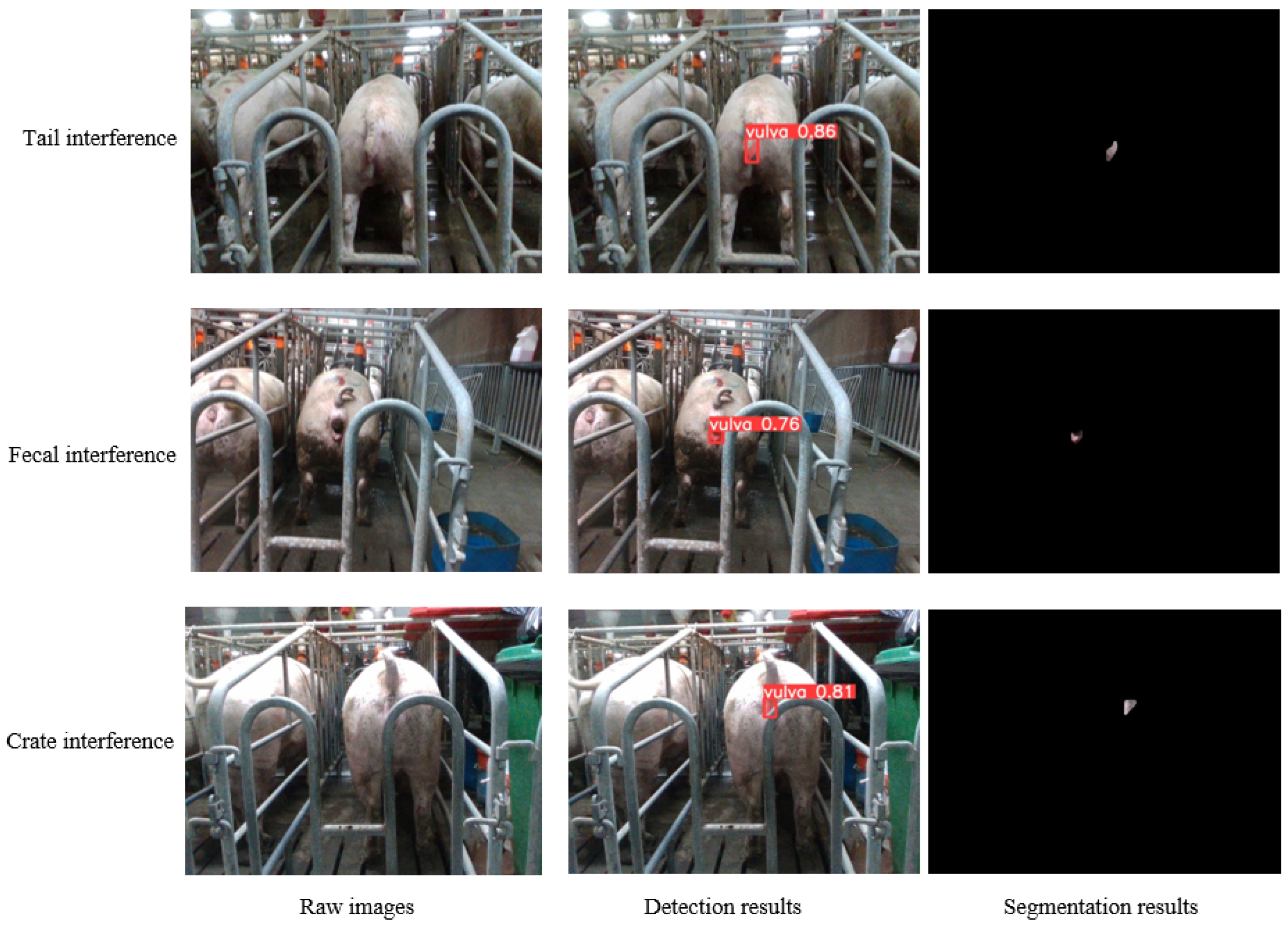

3.1.2. Model Prediction Tests

3.1.3. Comparison of Different Segmentation Algorithms

3.2. Analysis of Temperature Prediction Models for Sows

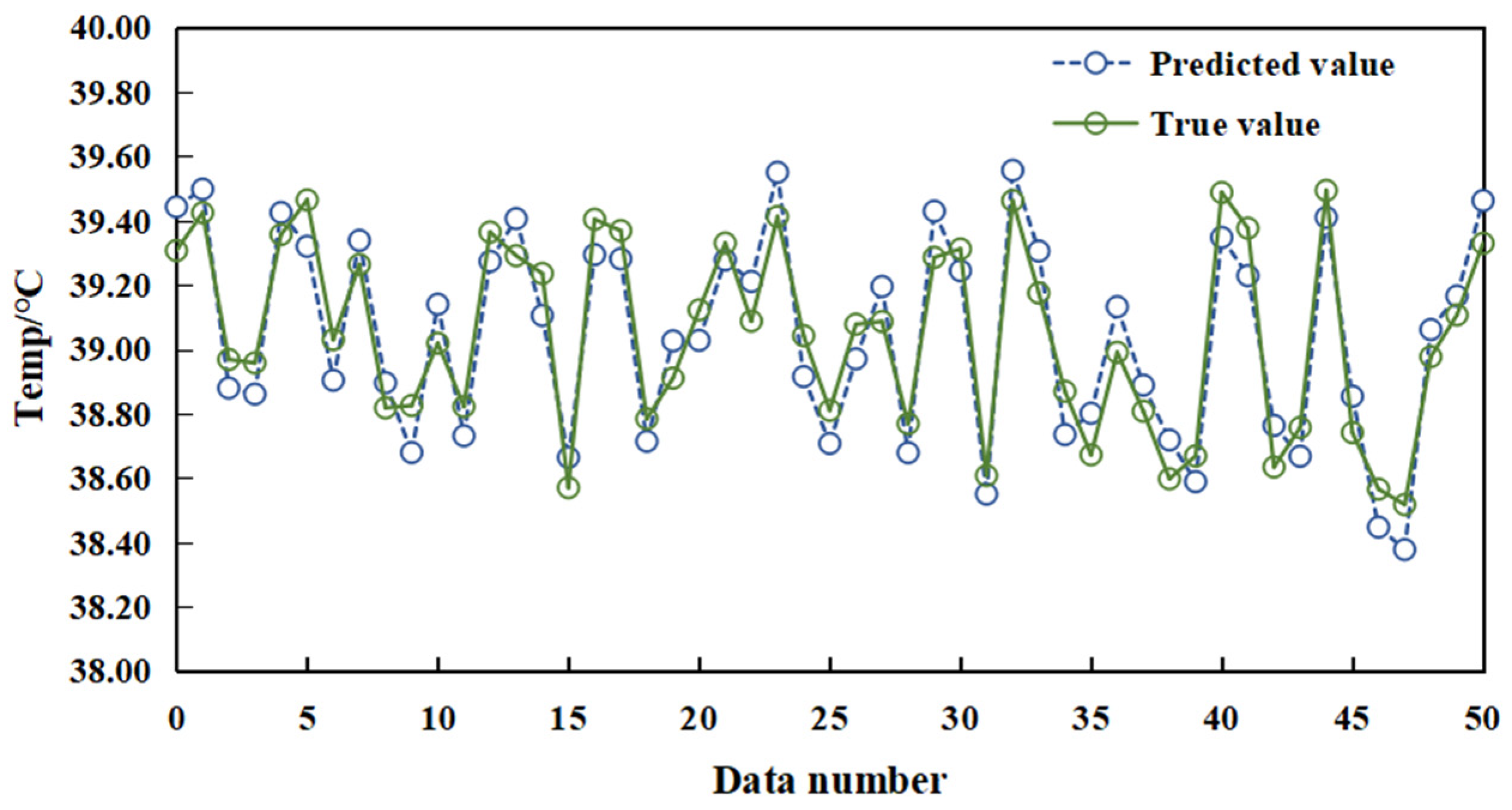

3.2.1. Sow Temperature Prediction Tests

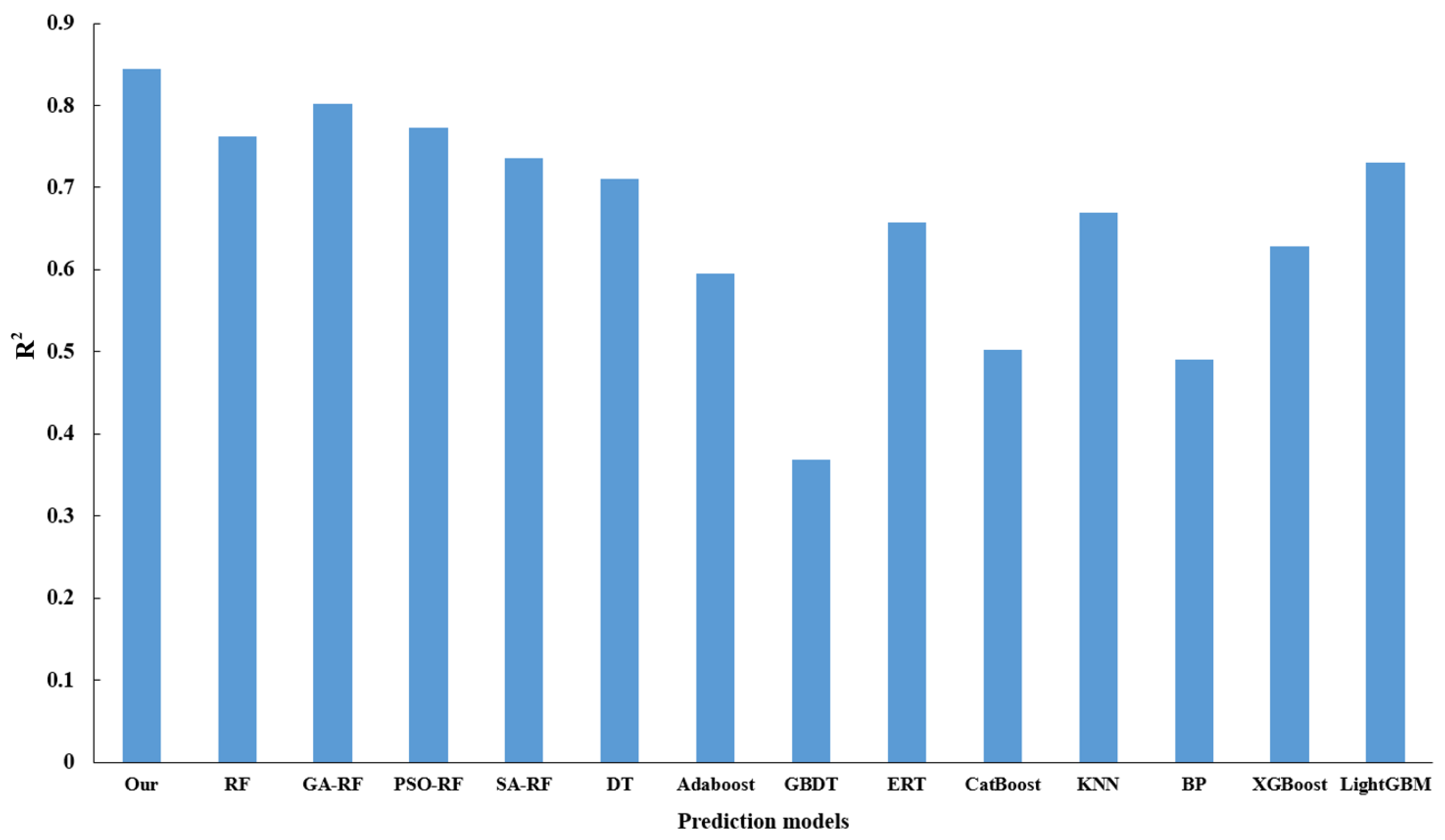

3.2.2. Comparison of Different Prediction Algorithms

4. Discussion

4.1. Comparison of Different Methods of Temperature Monitoring

4.2. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Comparison of different segmentation algorithms.

| Model | IoU (%) | Speed (fps) |
|---|---|---|
| Ours | 91.50 | 49.26 |
| FCN | 88.28 | 33.81 |
| Mask R-CNN | 89.06 | 11.26 |
| YOLACT | 80.86 | 37.76 |
| U-Net | 86.38 | 17.20 |
| ISANet | 89.30 | 37.78 |
| ANN | 88.89 | 32.84 |
| GCNet | 89.33 | 33.53 |
| EncNet | 88.74 | 36.56 |
| PSANet | 88.82 | 26.22 |
| DANet | 87.99 | 30.97 |
| NonLocalNet | 89.35 | 31.00 |
| UPerNet | 88.30 | 34.72 |
| CCNet | 89.52 | 32.84 |
| PSPNet | 89.29 | 34.38 |
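The table reports segmentation quality as IoU and speed in frames per second. For a predicted mask P and a ground-truth mask G, IoU is |P ∩ G| / |P ∪ G|.

The sketch below computes both quantities, assuming equal-shape binary masks and fps measured as frames processed per second of wall-clock inference time. This excerpt does not state three things:

- whether the reported IoU is averaged per image or pooled over the test set;
- whether the timing includes pre- and post-processing;
- which hardware was used.

`mask_iou` and `frames_per_second` are hypothetical helper names.

```python
import time

import numpy as np


def mask_iou(pred, truth):
    """Intersection over union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty; this convention is an assumption
    return float(np.logical_and(pred, truth).sum() / union)


def frames_per_second(infer, frames):
    """Wall-clock throughput of `infer` over a sequence of frames."""
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return len(frames) / elapsed


pred = np.array([[0, 1, 1],
                 [0, 1, 1],
                 [0, 0, 0]])
truth = np.array([[0, 1, 1],
                  [0, 1, 0],
                  [0, 1, 0]])
print(f"IoU = {100 * mask_iou(pred, truth):.2f}%")  # IoU = 60.00%
fps = frames_per_second(lambda frame: frame.sum(), [pred] * 100)
```

A per-image average over a test set would be `np.mean([mask_iou(p, t) for p, t in pairs])`. Pooling intersections and unions over all images first gives a different, area-weighted figure.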

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xue, H.; Shen, M.; Sun, Y.; Tian, H.; Liu, Z.; Chen, J.; Xu, P. Instance Segmentation and Ensemble Learning for Automatic Temperature Detection in Multiparous Sows. Sensors 2023, 23, 9128. https://doi.org/10.3390/s23229128
