A Novel ST-YOLO Network for Steel-Surface-Defect Detection

Abstract

:1. Introduction

2. Related Work

2.1. Deep-Learning-Based Defect Detection

2.2. Advances in Object Detection

3. Methodology

3.1. Shunt Fusion Network

3.2. Adaptive Core Prior

3.3. Self-Tuning Transport Assignment

4. Experiments

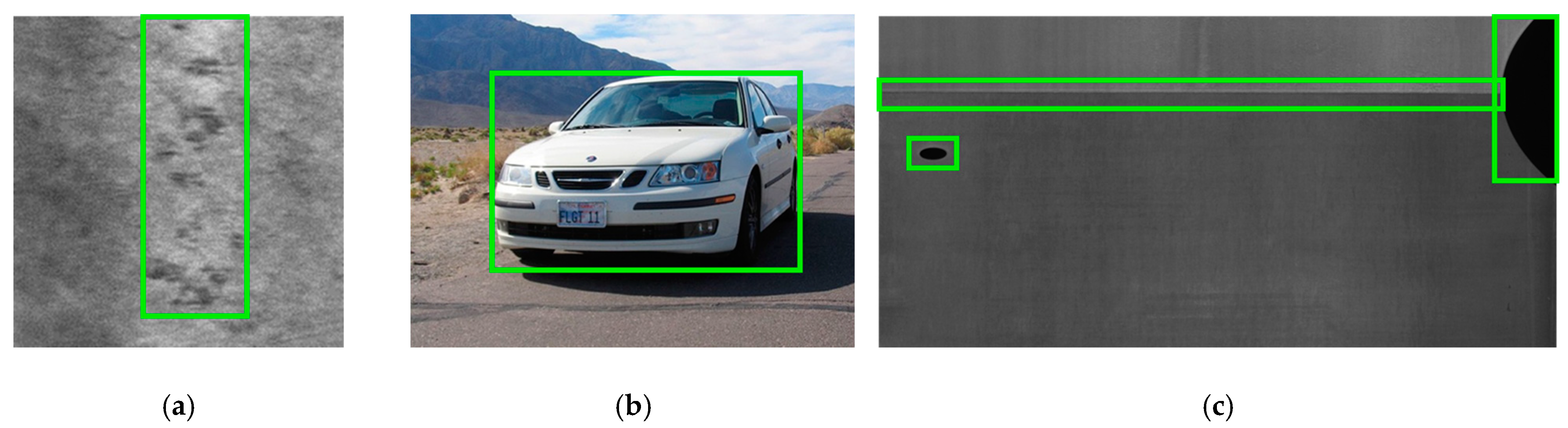

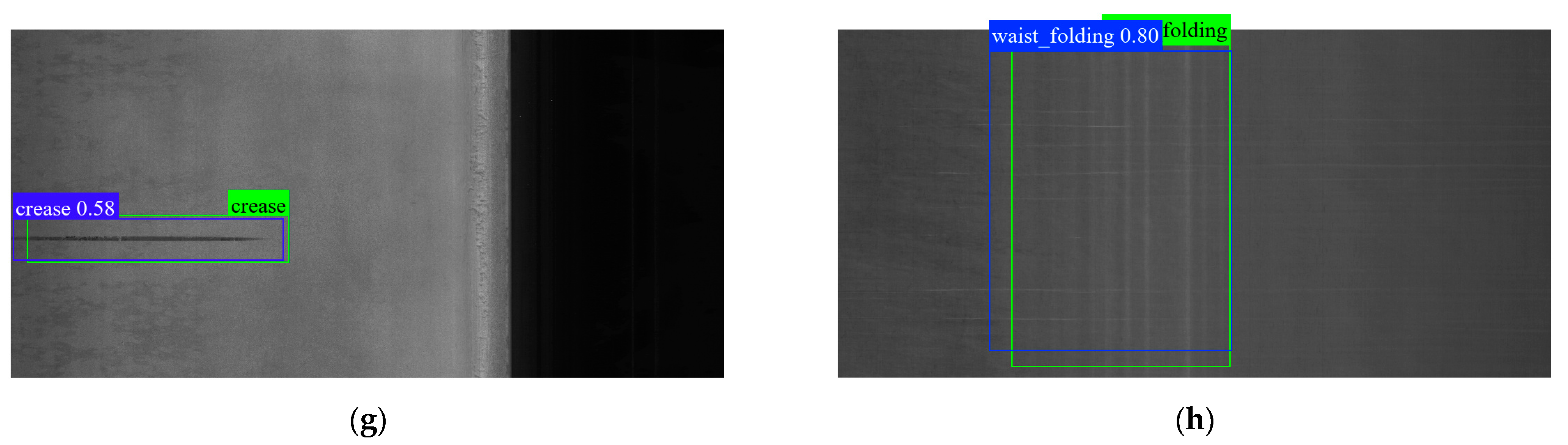

4.1. Datasets and Metrics

4.2. Implementation Details

4.3. Experiment Results

4.4. Comparison with the State-of-the-Art Methods

5. Discussion and Analysis

5.1. Ablation Studies

5.1.1. Shunt Fusion Network Structure

5.1.2. Self-Tuning Label Assignment for Training

5.2. Limitations and Future Works

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Song, G.; Song, K.; Yan, Y. EDRNet: Encoder-Decoder Residual Network for Salient Object Detection of Strip Steel Surface Defects. IEEE Trans. Instrum. Meas. 2020, 69, 9709–9719. [Google Scholar] [CrossRef]

- Ahmad, H.M.; Rahimi, A. Deep learning methods for object detection in smart manufacturing: A survey. J. Manuf. Syst. 2022, 64, 181–196. [Google Scholar] [CrossRef]

- Tao, X.; Hou, W.; Xu, D. A Survey of Surface Defect Detection Methods Based on Deep Learning. Acta Autom. Sin. 2021, 47, 1017–1034. [Google Scholar] [CrossRef]

- Chu, M.; Gong, R.; Gao, S.; Zhao, J. Steel surface defects recognition based on multi-type statistical features and enhanced twin support vector machine. Chemom. Intell. Lab. Syst. 2017, 171, 140–150. [Google Scholar] [CrossRef]

- Wang, J.; Li, Q.; Gan, J.; Yu, H.; Yang, X. Surface defect detection via entity sparsity pursuit with intrinsic priors. IEEE Trans. Industr. Inform. 2020, 16, 141–150. [Google Scholar] [CrossRef]

- Liu, X.; Xu, K.; Zhou, P.; Zhou, D.; Zhou, Y. Surface defect identification of aluminium strips with non-subsampled shearlet transform. Opt. Lasers Eng. 2020, 127, 105986. [Google Scholar] [CrossRef]

- Yu, H.; Li, Q.; Tan, Y.; Gan, J.; Wang, J.; Geng, Y.-A.; Jia, L. A Coarse-to-Fine Model for Rail Surface Defect Detection. IEEE Trans. Instrum. Meas. 2019, 68, 656–666. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, H.; Tian, Y.; Liu, K. An accurate fuzzy measure-based detection method for various types of defects on strip steel surfaces. Comput. Ind. 2020, 122, 103231. [Google Scholar] [CrossRef]

- Liu, K.; Wang, H.; Chen, H.; Qu, E.; Tian, Y.; Sun, H. Steel Surface Defect Detection Using a New Haar–Weibull-Variance Model in Unsupervised Manner. IEEE Trans. Instrum. Meas. 2017, 66, 2585–2596. [Google Scholar] [CrossRef]

- Gao, Y.; Li, X.; Wang, X.V.; Wang, L.; Gao, L. A Review on Recent Advances in Vision-based Defect Recognition towards Industrial Intelligence. J. Manuf. Syst. 2022, 62, 753–766. [Google Scholar] [CrossRef]

- Tulbure, A.-A.; Dulf, E.-H. A review on modern defect detection models using DCNNs—Deep convolutional neural networks. J. Adv. Res. 2022, 35, 33–48. [Google Scholar] [CrossRef]

- Usamentiaga, R.; Lema, D.G.; Pedrayes, O.D.; Garcia, D.F. Automated Surface Defect Detection in Metals: A Comparative Review of Object Detection and Semantic Segmentation Using Deep Learning. IEEE Trans. Ind. Appl. 2022, 58, 4203–4213. [Google Scholar] [CrossRef]

- Zhou, T.; Zhang, J.; Su, H.; Zou, W.; Zhang, B. EDDs: A series of Efficient Defect Detectors for fabric quality inspection. Measurement 2021, 172, 108885. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. 2021. Available online: http://arxiv.org/abs/2107.08430 (accessed on 18 July 2022).

- Liu, Z.; Tang, R.; Duan, G.; Tan, J. TruingDet: Towards high-quality visual automatic defect inspection for mental surface. Opt. Lasers Eng. 2021, 138, 106423. [Google Scholar] [CrossRef]

- Cheng, X.; Yu, J. RetinaNet with Difference Channel Attention and Adaptively Spatial Feature Fusion for Steel Surface Defect Detection. IEEE Trans. Instrum. Meas. 2021, 70, 2503911. [Google Scholar] [CrossRef]

- Ma, Z.; Li, Y.; Huang, M.; Huang, Q.; Cheng, J.; Tang, S. A lightweight detector based on attention mechanism for aluminum strip surface defect detection. Comput. Ind. 2022, 136, 103585. [Google Scholar] [CrossRef]

- Kou, X.; Liu, S.; Cheng, K.; Qian, Y. Development of a YOLO-V3-based model for detecting defects on steel strip surface. Measurement 2021, 182, 109454. [Google Scholar] [CrossRef]

- Tian, R.; Jia, M. DCC-CenterNet: A rapid detection method for steel surface defects. Measurement 2022, 187, 110211. [Google Scholar] [CrossRef]

- Yu, J.; Cheng, X.; Li, Q. Surface Defect Detection of Steel Strips Based on Anchor-Free Network With Channel Attention and Bidirectional Feature Fusion. IEEE Trans. Instrum. Meas. 2022, 71, 5000710. [Google Scholar] [CrossRef]

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance Problems in Object Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3388–3415. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015. [Google Scholar] [CrossRef]

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22 October 2017. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. Available online: https://arxiv.org/abs/1804.02767 (accessed on 18 July 2022).

- Song, G.; Liu, Y.; Wang, X. Revisiting the Sibling Head in Object Detector. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020. [Google Scholar] [CrossRef]

- Wu, Y.; Chen, Y.; Yuan, L.; Liu, Z.; Wang, L.; Li, H.; Fu, Y. Rethinking Classification and Localization for Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020. [Google Scholar] [CrossRef]

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned One-stage Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021. [Google Scholar] [CrossRef]

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: A simple and strong anchor-free object detector. arXiv 2020, arXiv:2006.09214. [Google Scholar] [CrossRef]

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. FoveaBox: Beyound Anchor-Based Object Detection. IEEE Trans. Image Process 2020, 29, 7389–7398. [Google Scholar] [CrossRef]

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seoul, Republic of Korea, 16–18 June 2020. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002. [Google Scholar] [CrossRef]

- Kim, K.; Lee, H.S. Probabilistic Anchor Assignment with IoU Prediction for Object Detection. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2020. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Li, Z.; Yoshie, O.; Sun, J. OTA: Optimal Transport Assignment for Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934v1. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020. [Google Scholar] [CrossRef]

- He, Y.; Song, K.; Meng, Q.; Yan, Y. An End-to-End Steel Surface Defect Detection Approach via Fusing Multiple Hierarchical Features. IEEE Trans. Instrum. Meas. 2020, 69, 1493–1504. [Google Scholar] [CrossRef]

- Lv, X.; Duan, F.; Jiang, J.-J.; Fu, X.; Gan, L. Deep Metallic Surface Defect Detection: The New Benchmark and Detection Network. Sensors 2020, 20, 1562. [Google Scholar] [CrossRef]

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the International Conference on Systems, Signals, and Image Processing, Niteroi, Brazil, 1–3 July 2020. [Google Scholar] [CrossRef]

- Li, Z.; Tian, X.; Liu, X.; Liu, Y.; Shi, X. A Two-Stage Industrial Defect Detection Framework Based on Improved-YOLOv5 and Optimized-Inception-ResnetV2 Models. Appl. Sci. 2022, 12, 834. [Google Scholar] [CrossRef]

- Chen, X.; Lv, J.; Fang, Y.; Du, S. Online Detection of Surface Defects Based on Improved YOLOV3. Sensors 2022, 22, 817. [Google Scholar] [CrossRef]

- Liu, X.; Gao, J. Surface Defect Detection Method of Hot Rolling Strip Based on Improved SSD Model. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2021. [Google Scholar]

- Tang, M.; Li, Y.; Yao, W.; Hou, L.; Sun, Q.; Chen, J. A strip steel surface defect detection method based on attention mechanism and multi-scale maxpooling. Meas. Sci. Technol. 2021, 32, 115401. [Google Scholar] [CrossRef]

- Bustillo, A.; Urbikain, G.; Perez, J.M.; Pereira, O.M.; de Lacalle, L.N.L. Smart optimization of a friction-drilling process based on boosting ensembles. J. Manuf. Syst. 2018, 48, 108–121. [Google Scholar] [CrossRef]

- Wang, G.; Liu, Z.; Sun, H.; Zhu, C.; Yang, Z. Yolox-BTFPN: An anchor-free conveyor belt damage detector with a biased feature extraction network. Measurement 2022, 200, 111675. [Google Scholar] [CrossRef]

- Tapia, E.; Sastoque-Pinilla, L.; Lopez-Novoa, U.; Bediaga, I.; de Lacalle, N.L. Assessing Industrial Communication Protocols to Bridge the Gap between Machine Tools and Software Monitoring. Sensors 2023, 23, 5694. [Google Scholar] [CrossRef] [PubMed]

- Fernández, B.; González, B.; Artola, G.; De Lacalle, N.L.; Angulo, C. A Quick Cycle Time Sensitivity Analysis of Boron Steel Hot Stamping. Metals 2019, 9, 235. [Google Scholar] [CrossRef]

| Model | mAP@0.5 | Crazing | Inclusion | Patches | Pitted Surface | Rolled-in Scale | Scratches |

|---|---|---|---|---|---|---|---|

| YOLOX | 77.1 | 46.6 | 83.1 | 88.6 | 83.5 | 64.8 | 95.7 |

| S-YOLO | 79.3 | 48.8 | 83.2 | 90.7 | 87.4 | 69.9 | 96.0 |

| ST-YOLO | 80.3 | 54.6 | 83.0 | 89.2 | 84.7 | 73.2 | 97.0 |

| Model | mAP@0.5 | Pu | Wl | Cg | Ws | Os | Ss | In | Rp | Cr | Wf |

|---|---|---|---|---|---|---|---|---|---|---|---|

| YOLOX | 70.1 | 91.9 | 92.0 | 99.2 | 72.9 | 72.1 | 54.7 | 36.6 | 44.4 | 60.4 | 77.0 |

| S-YOLO | 71.6 | 87.1 | 92.1 | 99.6 | 75.3 | 69.7 | 58.2 | 36.0 | 49.0 | 74.5 | 74.5 |

| ST-YOLO | 72.8 | 93.4 | 97.3 | 99.6 | 73.5 | 72.5 | 59.3 | 37.9 | 44.6 | 70.5 | 79.7 |

| Model | Backbone | mAP@[0.5:0.95] (%) | mAP@0.5(%) | F1 Score(%) | FPS | Params |

|---|---|---|---|---|---|---|

| Faster-RCNN w FPN | ResNet-50 | 38.5 | 76.5 | 72 | 28.7 | 41.38 M |

| RetinaNet | ResNet-50 | 34.8 | 69.6 | 67 | 42.6 | 36.43 M |

| YOLOv4 | CSPDarkNet-53 | 35.8 | 76.2 | 72 | 40.3 | 63.96 M |

| FCOS | ResNet-50 | 40.1 | 77.5 | 73 | 45.9 | 32.13 M |

| CenterNet | ResNet-50 | 38.5 | 75.1 | 71 | 78.3 | 32.66 M |

| YOLOX | CSPDarkNet-53 | 40.3 | 77.1 | 74 | 48.9 | 54.15 M |

| YOLOX w boosting | CSPDarkNet-53 | 40.9 | 78.2 | 74 | 48.7 | 57.96 M |

| S-YOLO (Ours) | CSPDarkNet-53 | 40.8 | 79.3 | 76 | 46.0 | 55.82 M |

| ST-YOLO (Ours) | CSPDarkNet-53 | 40.9 | 80.3 | 78 | 46.0 | 55.82 M |

| Model | Backbone | mAP@[0.5:0.95] (%) | mAP@0.5(%) | F1 Score(%) | FPS | Params |

|---|---|---|---|---|---|---|

| Faster-RCNN w FPN | ResNet-50 | 32.7 | 67.4 | 65 | 26.1 | 41.40 M |

| RetinaNet | ResNet-50 | 25.7 | 54.4 | 56 | 39.3 | 36.52 M |

| YOLOv4 | CSPDarkNet-53 | 28.0 | 67.0 | 65 | 41.0 | 63.99 M |

| FCOS | ResNet-50 | 32.0 | 69.3 | 67 | 41.8 | 32.14 M |

| CenterNet | ResNet-50 | 29.1 | 63.8 | 62 | 68.8 | 32.66 M |

| YOLOX | CSPDarkNet-53 | 31.4 | 70.1 | 69 | 48.5 | 54.15 M |

| S-YOLO (Ours) | CSPDarkNet-53 | 32.0 | 71.6 | 68 | 44.7 | 55.83 M |

| ST-YOLO (Ours) | CSPDarkNet-53 | 32.9 | 72.8 | 71 | 44.7 | 55.83 M |

| Related Researches | mAP@0.5 (%) | FPS |

|---|---|---|

| Li’s Optimized-Inception-ResnetV2 [39] | 78.1 | 24.0 |

| Chen’s FRCN [40] | 77.9 | 27.5 |

| Cheng’s DE_RetinaNet [16] | 78.25 | 30.0 |

| Liu’s RAF-SSD [41] | 75.1 | 35.5 |

| Tang’s ECA+MSMP [42] | 80.86 | 27.9 |

| YOLOX w boosting [43] | 78.2 | 40.7 |

| S-YOLO (Ours) | 79.3 | 46.0 |

| ST-YOLO (Ours) | 80.3 | 46.0 |

| Fusion Structures | mAP@0.5 on NEU-DET | Crazing | Inclusion | Patches | Pitted Surface | Rolled-in Scale | Scratches |

|---|---|---|---|---|---|---|---|

| FPN-PAN | 77.1 | 46.6 | 83.1 | 88.6 | 83.5 | 64.8 | 95.7 |

| single FPN | 77.9 | 48.9 | 83.2 | 88.6 | 84.9 | 66.0 | 95.9 |

| double FPN | 78.7 | 48.8 | 82.2 | 91.0 | 87.8 | 66.3 | 96.2 |

| BTFPN | 78.1 | 49.7 | 81.2 | 90.4 | 86.6 | 65.9 | 94.6 |

| S-Cls-Loc | 77.2 | 44.1 | 82.6 | 91.4 | 84.8 | 64.6 | 95.4 |

| S-Loc-Cls (Ours) | 79.3 | 48.8 | 83.2 | 90.7 | 87.4 | 69.9 | 96.0 |

| Model Structure | Label Assignment Method | mAP@0.5 on NEU-DET | FPS in Training on NEU-DET | mAP@0.5 on GC10-DET | FPS in Training on GC10-DET |

|---|---|---|---|---|---|

| YOLOX | SimOTA | 77.1 | 53.7 | 70.1 | 47.9 |

| YOLOX | STTA | 77.9 | 49.6 | 71.9 | 44.5 |

| S-YOLO | SimOTA | 79.3 | 51.2 | 71.6 | 46.3 |

| S-YOLO | STTA | 80.3 | 47.7 | 72.8 | 43.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, H.; Zhang, Z.; Zhao, J. A Novel ST-YOLO Network for Steel-Surface-Defect Detection. Sensors 2023, 23, 9152. https://doi.org/10.3390/s23229152

Ma H, Zhang Z, Zhao J. A Novel ST-YOLO Network for Steel-Surface-Defect Detection. Sensors. 2023; 23(22):9152. https://doi.org/10.3390/s23229152

Chicago/Turabian StyleMa, Hongtao, Zhisheng Zhang, and Junai Zhao. 2023. "A Novel ST-YOLO Network for Steel-Surface-Defect Detection" Sensors 23, no. 22: 9152. https://doi.org/10.3390/s23229152