Comparative Analysis of Resident Space Object (RSO) Detection Methods

Abstract

1. Introduction

1.1. Overview of RSO Imaging Technologies

1.1.1. LiDAR and RADAR Systems

1.1.2. Optical Systems

1.2. Research Objectives

2. Survey of Object Detection Algorithms in SSA Applications

3. RSO Detection Methods

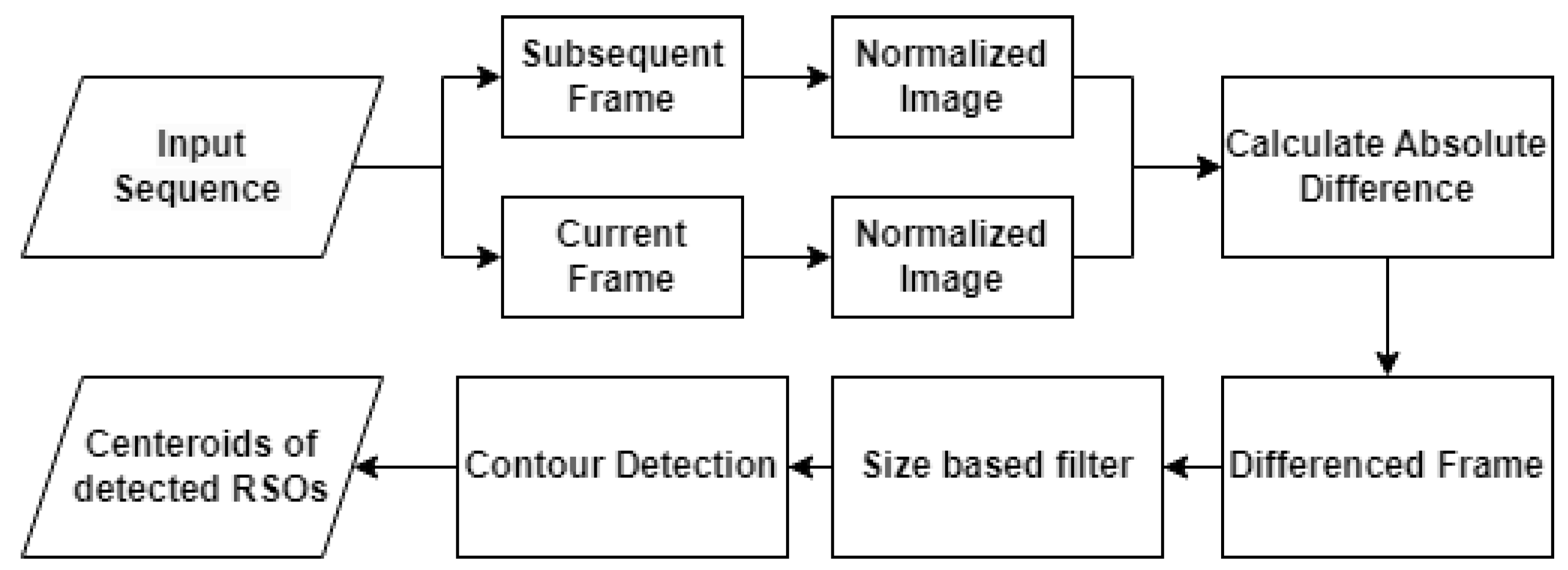

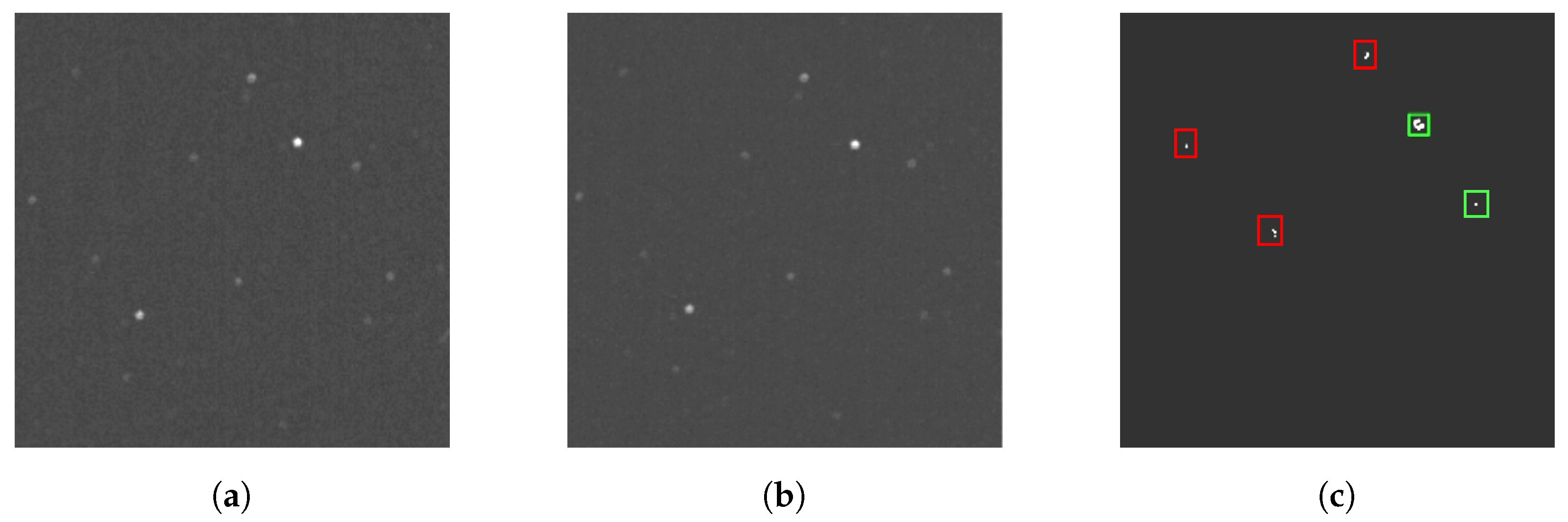

3.1. Adjacent Frame Differencing (AFD)

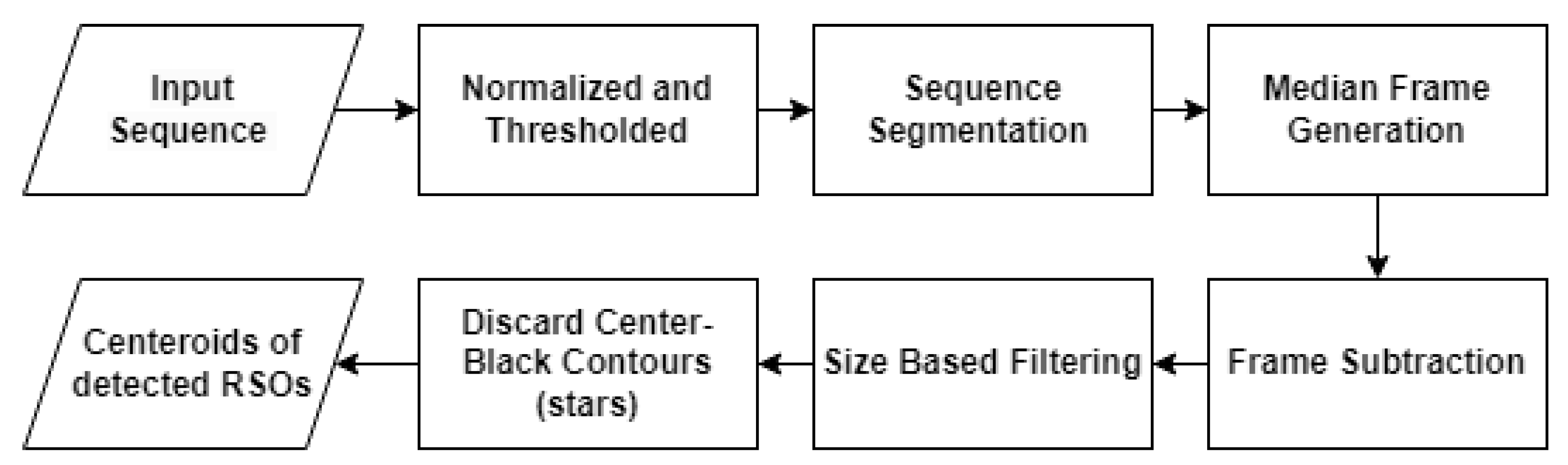
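The following is a minimal sketch of adjacent frame differencing. It assumes two consecutive frames that are already co-registered, so that stars fall on the same pixels: each frame is subtracted from the next and the absolute difference is thresholded. The function name and the `threshold` parameter are hypothetical. This is a generic illustration of the technique, not the implementation used in the study.

```python
import numpy as np


def adjacent_frame_difference(prev_frame: np.ndarray, next_frame: np.ndarray,
                              threshold: float) -> np.ndarray:
    """Hypothetical AFD helper. Assumes both frames are co-registered.

    Returns a boolean mask of pixels whose brightness changed by more than
    `threshold` between the two frames.
    """
    # Convert to float so that unsigned 16-bit subtraction cannot wrap around.
    diff = next_frame.astype(np.float64) - prev_frame.astype(np.float64)
    # Static stars cancel out; a moving RSO leaves a bright/dark pair.
    return np.abs(diff) > threshold


# Synthetic 8x8 frames: a fixed star at (2, 2) and an RSO moving from (5, 1) to (5, 3).
prev = np.zeros((8, 8), dtype=np.uint16)
nxt = np.zeros((8, 8), dtype=np.uint16)
prev[2, 2] = nxt[2, 2] = 1000  # star, unchanged between frames
prev[5, 1] = 800               # RSO position in frame k
nxt[5, 3] = 800                # RSO position in frame k + 1

mask = adjacent_frame_difference(prev, nxt, threshold=100)
print(np.argwhere(mask).tolist())  # [[5, 1], [5, 3]]
```

A moving RSO is present in both frames, so this form of differencing flags both its old and its new position. A later step has to decide which pixel of the pair is the current detection.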

3.2. Median Frame Differencing (MFD)

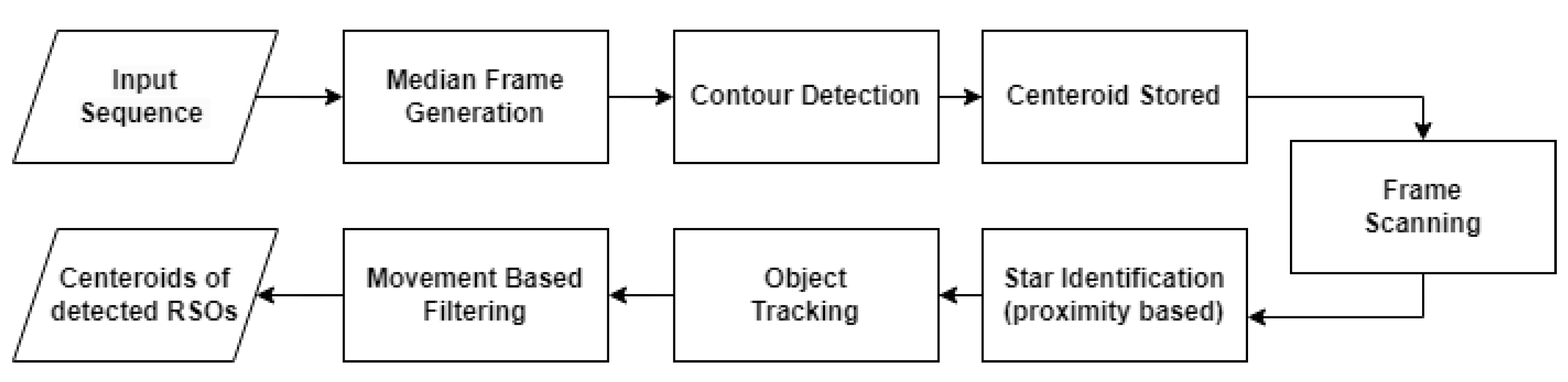
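The next sketch illustrates median frame differencing. It assumes a short stack of co-registered frames and uses the per-pixel temporal median as the background estimate. Pixels that are brighter than this median by more than a hypothetical `threshold` are flagged. The helper name and parameters are illustrative and do not come from the study.

```python
import numpy as np


def median_frame_difference(stack: np.ndarray, threshold: float) -> np.ndarray:
    """Hypothetical MFD helper.

    stack: co-registered frames with shape (n_frames, height, width).
    Returns a boolean mask of the same shape, flagging pixels that exceed the
    per-pixel median background by more than `threshold`.
    """
    frames = stack.astype(np.float64)
    # The temporal median keeps static stars and sky background but rejects a
    # source that occupies a given pixel in fewer than half of the frames.
    background = np.median(frames, axis=0)
    return (frames - background) > threshold


# Five frames: a fixed star at (1, 1) and an RSO moving one pixel per frame along row 4.
stack = np.zeros((5, 6, 6), dtype=np.uint16)
stack[:, 1, 1] = 1000
for k in range(5):
    stack[k, 4, k] = 800

mask = median_frame_difference(stack, threshold=100)
print([np.argwhere(m).tolist() for m in mask])
# [[[4, 0]], [[4, 1]], [[4, 2]], [[4, 3]], [[4, 4]]]
```

Unlike adjacent differencing, each frame flags only the RSO's current position. However, a slow-moving object that stays on the same pixels for most of the stack would be absorbed into the median background.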

3.3. Proximity Filtering and Tracking (PFT)

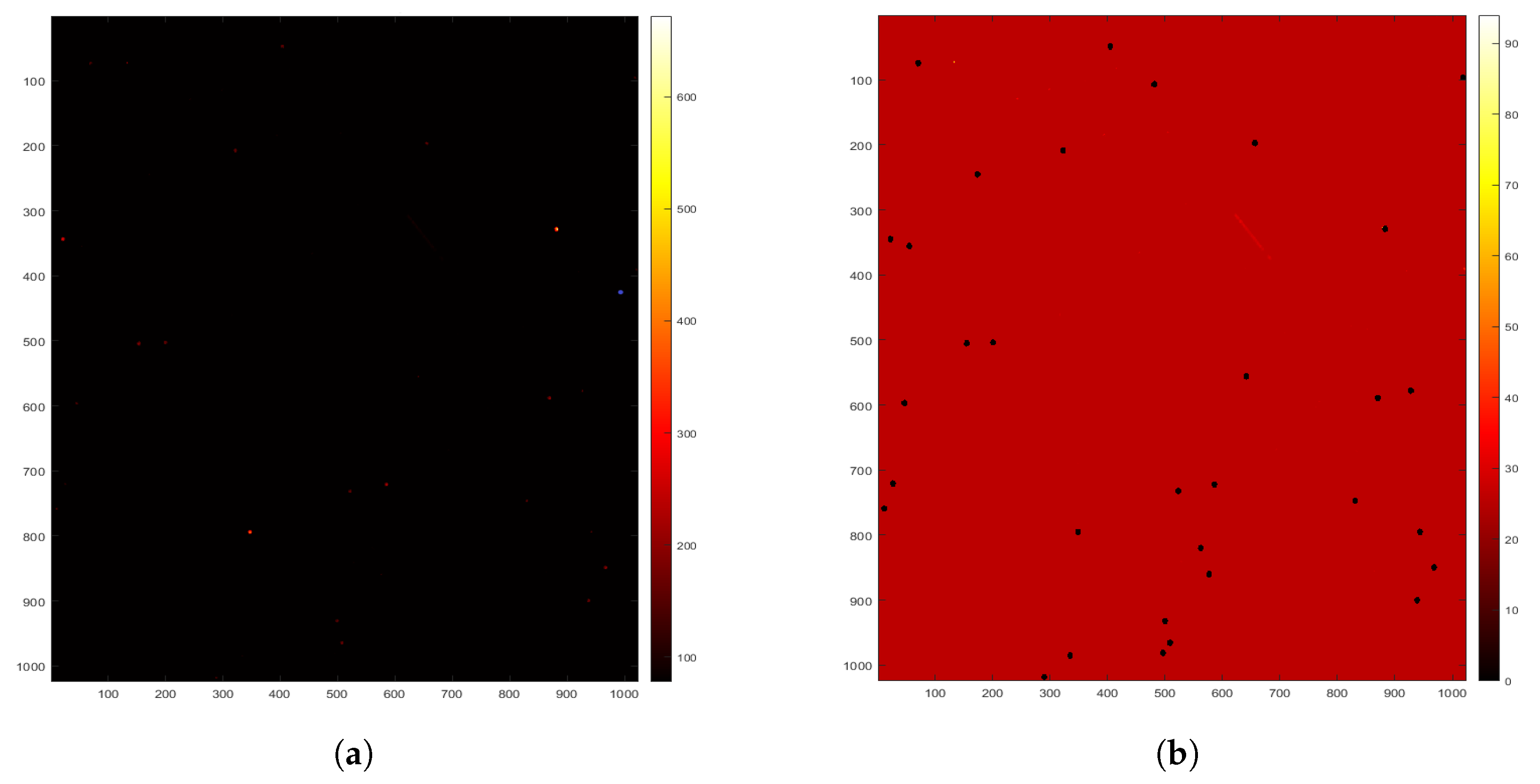

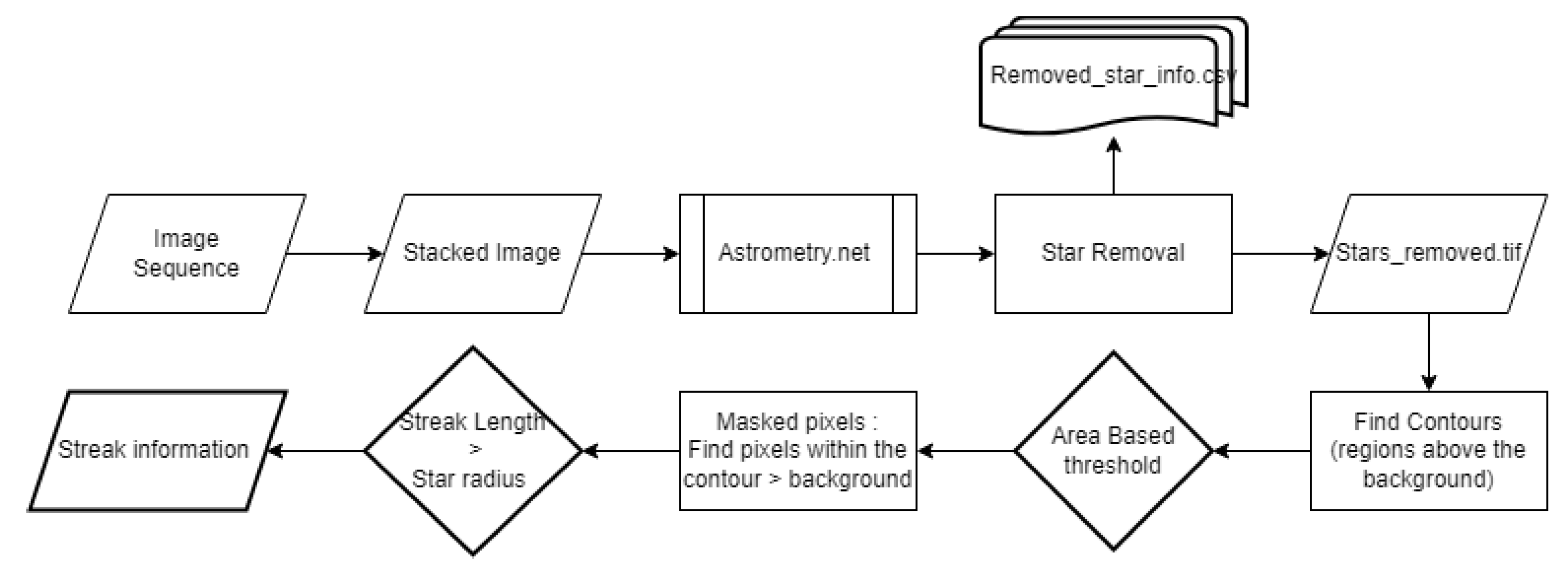
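Proximity-based tracking can be illustrated with greedy nearest-neighbor association between detections in consecutive frames. This sketch assumes that detections have already been reduced to (x, y) centroids. It uses a hypothetical gating radius `max_dist` and a hypothetical 1-pixel-per-frame motion rule. It should not be read as the PFT algorithm used in the study.

```python
import math


def associate_by_proximity(prev_pts, next_pts, max_dist):
    """Hypothetical greedy nearest-neighbor association of (x, y) centroids.

    Returns (i, j, distance) links matching prev_pts[i] to next_pts[j]. Each
    point is used at most once, and pairs farther apart than max_dist pixels
    are never linked.
    """
    candidates = []
    for i, (x0, y0) in enumerate(prev_pts):
        for j, (x1, y1) in enumerate(next_pts):
            d = math.hypot(x1 - x0, y1 - y0)
            if d <= max_dist:  # proximity gate
                candidates.append((d, i, j))
    links, used_prev, used_next = [], set(), set()
    for d, i, j in sorted(candidates):  # closest pairs first
        if i not in used_prev and j not in used_next:
            links.append((i, j, d))
            used_prev.add(i)
            used_next.add(j)
    return links


# Point 0 moves 3 px between frames (RSO-like); point 1 moves about 0.2 px (star-like).
prev_pts = [(10.0, 10.0), (40.0, 25.0)]
next_pts = [(40.2, 25.1), (13.0, 10.0)]
links = associate_by_proximity(prev_pts, next_pts, max_dist=5.0)

# Hypothetical rule: displacement above 1 px per frame marks an RSO candidate.
moving = [i for i, j, d in links if d > 1.0]
print([(i, j) for i, j, _ in links], moving)  # [(1, 0), (0, 1)] [0]
```

Greedy matching is a simplification. It shares the nearest-neighbor weaknesses listed in the comparison table, such as overlapping objects and fast movers that leave the gate.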

3.4. Streak Detection from Stacked Short-Exposure RSO Images
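One way to turn short exposures into a streak is to stack co-registered frames with a maximum-intensity projection. A moving RSO then traces a line, while stars remain point-like. The sketch below makes several simplifying assumptions: stars have already been removed (for example, after plate solving), the thresholded image contains a single streak, and a maximum is used rather than a sum. These choices, and the helper names, are for illustration only.

```python
import numpy as np


def stack_frames(frames: np.ndarray) -> np.ndarray:
    """Hypothetical helper: maximum-intensity projection, (n, h, w) -> (h, w)."""
    return frames.max(axis=0)


def streak_length(image: np.ndarray, threshold: float) -> float:
    """Distance in pixels between the two most distant above-threshold pixels.

    Assumes stars are already removed and only one streak remains.
    """
    pts = np.argwhere(image > threshold).astype(np.float64)
    if len(pts) < 2:
        return 0.0
    # All pairwise distances; adequate for the few pixels of a single streak.
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    return float(d.max())


# Ten short exposures of an RSO moving one pixel per frame along row 5.
frames = np.zeros((10, 16, 16), dtype=np.uint16)
for k in range(10):
    frames[k, 5, 2 + k] = 800

stacked = stack_frames(frames)
print(streak_length(stacked, threshold=100))  # 9.0
```

Measuring between pixel centers is the simplest length estimate. A fuller pipeline might instead fit a line to the streak pixels.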

4. Dataset Used in the Current Study

RSONAR Mission Overview

5. Results

5.1. Metrics Used

5.2. Results of Object Detection

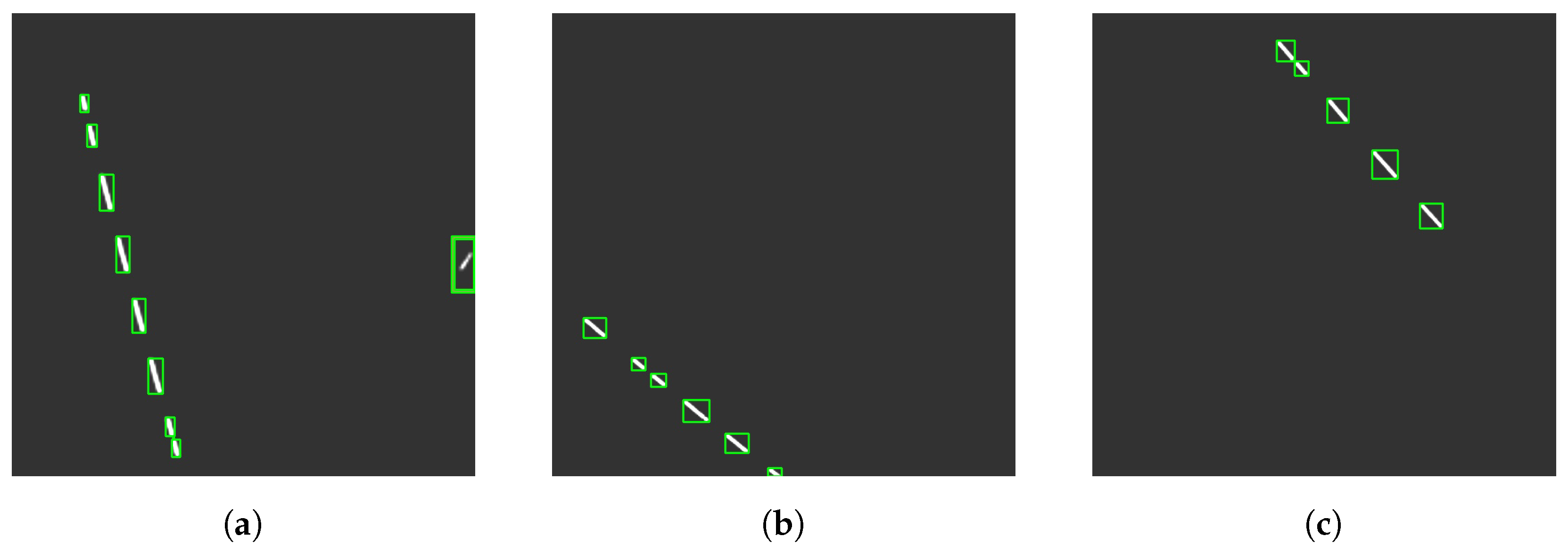

5.3. Results of Streak Detection

6. Conclusions

6.1. Summary

6.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | Description | Pros | Cons |
|---|---|---|---|
| Frame differencing and background subtraction | Models the background using a running average. The background is subtracted from each frame in sequence, and the remaining pixels are treated as moving. | Simple implementation. Computationally inexpensive. Adapts to changing backgrounds. | Does not account for uninteresting motion (i.e., motion of background objects). Limited to a fixed camera; relies on frames aligning with the background. |
| Optical flow | The 2D motion vector of each pixel is computed by comparing the image with the next one. | Accurate; measures motion at the pixel level. | Requires detailed features to be effective; RSOs may not be detailed enough. |
| Nearest neighbor | Objects are associated between images by finding the closest object in the next image. | Position and velocity can be analyzed further to differentiate the movements of RSOs and stars. | Not robust to overlapping RSOs/stars, illumination changes, or fast-moving RSOs. |
| Streak detection | Uses a plate solver to identify and remove stars before detecting RSO streaks. | Avoids additional blurring. Accounts for uncalibrated images. | Requires Astrometry.net. The observer’s motion can heavily influence the output. |
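The running-average background model in the first row of the table can be sketched as an exponential moving average, B ← (1 - alpha) * B + alpha * F. In this form, a pixel is flagged when it differs from B by more than a threshold. The class name and the `alpha` and `threshold` parameters are hypothetical values chosen for illustration.

```python
import numpy as np


class RunningAverageBackground:
    """Hypothetical running-average model: B <- (1 - alpha) * B + alpha * F."""

    def __init__(self, alpha: float, threshold: float):
        self.alpha = alpha
        self.threshold = threshold
        self.background = None

    def apply(self, frame: np.ndarray) -> np.ndarray:
        f = frame.astype(np.float64)
        if self.background is None:
            # Initialize the model with the first frame; nothing is flagged yet.
            self.background = f.copy()
            return np.zeros(f.shape, dtype=bool)
        # Foreground: pixels that differ from the current background estimate.
        mask = np.abs(f - self.background) > self.threshold
        # Update after testing so a newly arrived object is not partly absorbed
        # into the background before it is detected.
        self.background = (1.0 - self.alpha) * self.background + self.alpha * f
        return mask


# Frame 0 contains only a fixed star; an RSO then moves along row 3.
model = RunningAverageBackground(alpha=0.1, threshold=100.0)
masks = []
for k in range(4):
    frame = np.zeros((6, 6), dtype=np.uint16)
    frame[0, 0] = 1000
    if k > 0:
        frame[3, k] = 800
    masks.append(model.apply(frame))

print([np.argwhere(m).tolist() for m in masks])  # [[], [[3, 1]], [[3, 2]], [[3, 3]]]
```

In this example the faint residue that the RSO leaves in the model stays below the threshold. If an RSO were present in the initializing frame, however, it would leave a temporary ghost detection at its starting position until the model adapts.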

| Characteristic | Value |
|---|---|
| Aperture | 12.5 mm |
| Bit depth | 16 bits |
| Chromaticity | Monochrome |
| Exposure time | 100 ms |
| Field of view | 29.7° |
| Focal length | 25 mm |
| Pixel size | 6.5 µm |
| Pixel scale | 104 arcsec/pixel |
| Quantum efficiency | 82% |
| Resolution | 1024 pixels |
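The listed pixel scale can be checked against the field of view and resolution. This check assumes that the 29.7° field spans the 1024-pixel dimension and treats the mapping from angle to pixels as linear. The listed aperture and focal length also correspond to a focal ratio of f/2:

$$
\theta_{\text{pix}} \approx \frac{29.7^\circ \times 3600\ \text{arcsec}/^\circ}{1024\ \text{pixels}} = \frac{106\,920\ \text{arcsec}}{1024\ \text{pixels}} \approx 104.4\ \text{arcsec/pixel},
\qquad
N = \frac{f}{D} = \frac{25\ \text{mm}}{12.5\ \text{mm}} = 2
$$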

| Method | Precision | Recall | F1 Score | TP | FP | FN |
|---|---|---|---|---|---|---|
| AFD | 73% | 63% | 68% | 387 | 143 | 226 |
| MFD | 95% | 65% | 77% | 397 | 22 | 216 |
| PFT | 95% | 73% | 82% | 447 | 25 | 166 |
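The percentages in the table follow from the TP, FP, and FN counts through the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2PR / (P + R). The short check below reproduces them, and the same function also reproduces the streak-detection percentages from their counts. The function name is illustrative.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall, and F1 score from detection counts."""
    precision = tp / (tp + fp)  # fraction of reported detections that are real RSOs
    recall = tp / (tp + fn)     # fraction of real RSOs that were detected
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Counts copied from the object-detection table above.
for name, tp, fp, fn in [("AFD", 387, 143, 226), ("MFD", 397, 22, 216), ("PFT", 447, 25, 166)]:
    p, r, f1 = detection_metrics(tp, fp, fn)
    print(f"{name}: precision={p:.0%} recall={r:.0%} F1={f1:.0%}")
```

This prints `AFD: precision=73% recall=63% F1=68%`, `MFD: precision=95% recall=65% F1=77%`, and `PFT: precision=95% recall=73% F1=82%`, matching the table. The total number of ground-truth RSOs, TP + FN = 613, is the same for all three methods.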

| Sequence Order | Mean Streak Length (Pixels) | SBR (dB) |
|---|---|---|
| First | 56.51 | 27.32 |
| Second | 49.8 | 27.67 |
| Third | 61.04 | 26.64 |

| Sequence Order | Precision | Recall | F1 Score | TP | FP | FN |
|---|---|---|---|---|---|---|
| First | 100% | 48% | 65% | 158 | 0 | 169 |
| Second | 100% | 71% | 83% | 117 | 0 | 47 |
| Third | 100% | 79% | 88% | 99 | 0 | 27 |

| Criterion | Optimal Algorithm |
|---|---|
| Onboard Processing | MFD |
| Real-time Processing | PFT |
| Least False Negatives | Streak Detection |
| FPGA Implementation | MFD |
| Changing Light Conditions | Streak Detection |
| Best Accuracy | Streak Detection |
| Most Flexible | MFD |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Suthakar, V.; Sanvido, A.A.; Qashoa, R.; Lee, R.S.K. Comparative Analysis of Resident Space Object (RSO) Detection Methods. Sensors 2023, 23, 9668. https://doi.org/10.3390/s23249668

Suthakar V, Sanvido AA, Qashoa R, Lee RSK. Comparative Analysis of Resident Space Object (RSO) Detection Methods. Sensors. 2023; 23(24):9668. https://doi.org/10.3390/s23249668

Chicago/Turabian Style: Suthakar, Vithurshan, Aiden Alexander Sanvido, Randa Qashoa, and Regina S. K. Lee. 2023. "Comparative Analysis of Resident Space Object (RSO) Detection Methods." Sensors 23, no. 24: 9668. https://doi.org/10.3390/s23249668

APA Style: Suthakar, V., Sanvido, A. A., Qashoa, R., & Lee, R. S. K. (2023). Comparative Analysis of Resident Space Object (RSO) Detection Methods. Sensors, 23(24), 9668. https://doi.org/10.3390/s23249668