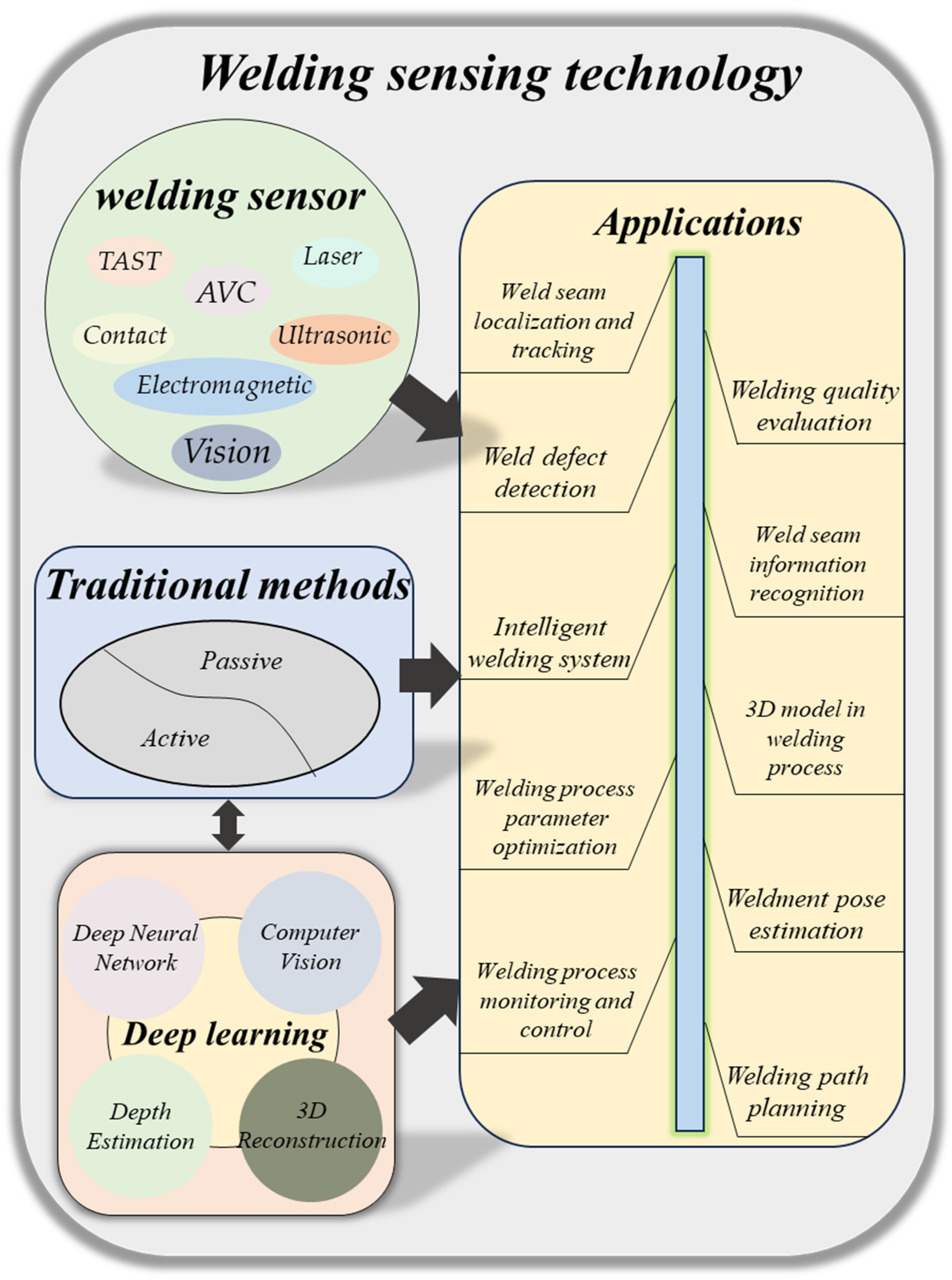

Visual Sensing and Depth Perception for Welding Robots and Their Industrial Applications

Abstract

1. Introduction

2. Research Method

- Relevance to visual sensor technologies for welding robots.

- Sensors used in the welding process.

- Depth perception methods based on computer vision.

- Welding robot sensors used in industry.

3. Sensors for Welding Process

3.1. Thru-Arc Seam Tracking (TAST) Sensors

3.2. Arc Voltage Control (AVC) Sensors

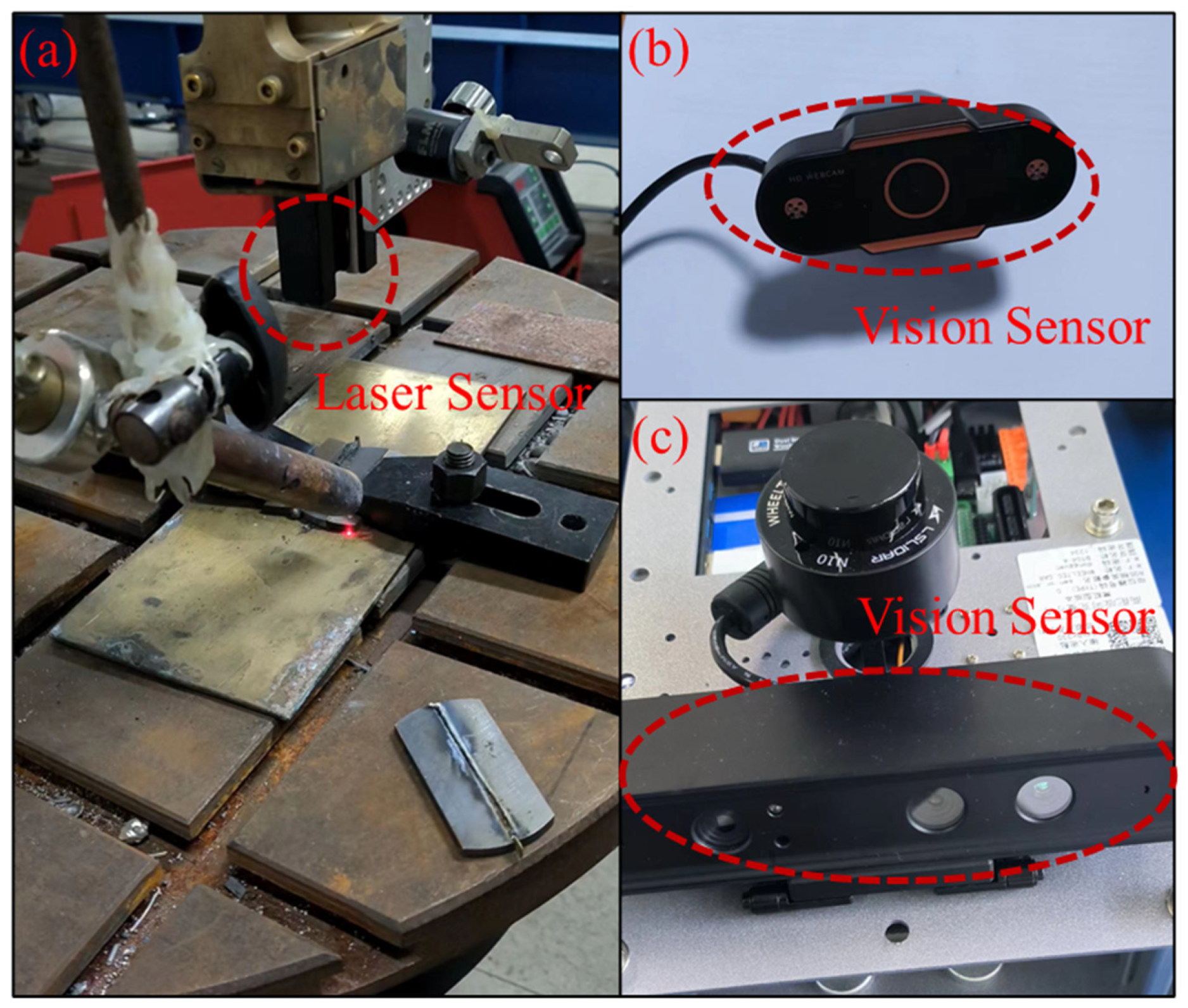

3.3. Laser Sensors

3.4. Contact Sensing

3.5. Ultrasonic Sensing

3.6. Electromagnetic Sensing

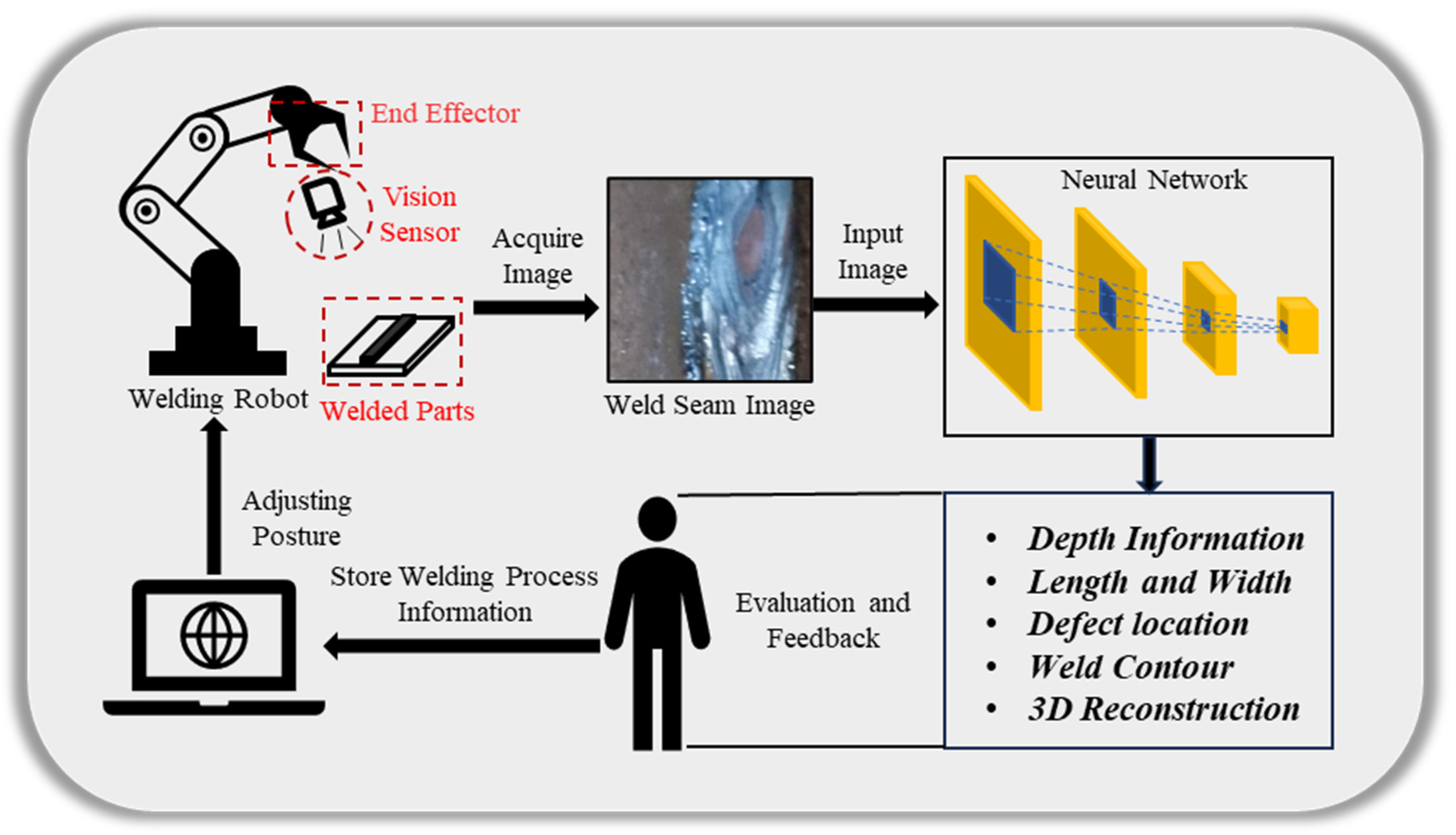

3.7. Vision Sensor

4. Depth Perception Method Based on Computer Vision

4.1. Traditional Methods for 3D Reconstruction Algorithms

4.1.1. Active Methods

| Year | Method | Description | References |
|---|---|---|---|
| 2019 | Structured light | A new active light field depth estimation method is proposed. | [75] |
| 2015 | Structured light | A structured light system for enhancing the surface texture of objects is proposed. | [76] |
| 2021 | Structured light | A global cost minimization framework is proposed for depth estimation using the phase light field and re-formatted phase epipolar plane images. | [77] |
| 2024 | Structured light | A novel active stereo depth perception method based on adaptive structured light is proposed. | [78] |
| 2023 | Structured light | A parallel CNN-transformer network is proposed to improve depth estimation from single structured light images in complex scenes. | [79] |
| 2022 | Time-of-Flight (ToF) | DELTAR is proposed to let lightweight ToF sensors measure high-resolution, accurate depth in combination with color images. | [80] |
| 2020 | Time-of-Flight (ToF) | Based on the principle and imaging characteristics of ToF cameras, each pixel is modeled as a continuous Gaussian source, and its differential entropy is proposed as a parameter for evaluating depth accuracy. | [81] |
| 2014 | Time-of-Flight (ToF) | ToF cameras for active vision in robotics are presented, and common acquisition errors are described. | [82] |
| 2003 | Triangulation | A unifying framework based on the principle of triangulation is proposed to address various depth recovery problems. | [83] |
| 2021 | Triangulation | Laser power is controlled through an optical triangulation loop to regulate penetration depth in a remote laser welding system. | [84] |
| 2020 | Triangulation | A data acquisition system based on the differential laser triangulation method is assembled to detect defects on corrugated plate surfaces. | [85] |
| 2017 | Laser scanning | The accuracy of monocular depth estimation is improved by adding 2D planar observations from a laser rangefinder already present on the platform, at no additional cost. | [86] |
| 2021 | Laser scanning | An online melt pool depth estimation technique is developed for the directed energy deposition (DED) process using a coaxial infrared (IR) camera, a laser line scanner, and an artificial neural network (ANN). | [87] |
| 2018 | Laser scanning | An automatic method for measuring the depth of steel cracks using image processing and laser techniques is developed. | [88] |
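
Two of the active principles in the table above reduce to short closed-form relations. The Python sketch below uses only the standard library. It shows laser-stripe triangulation as the intersection of a pixel's viewing ray with a calibrated laser plane, and continuous-wave ToF ranging, in which depth follows from the phase shift of the modulated light. The sketch assumes a zero-skew pinhole camera, a laser plane already expressed in the camera frame, and phase-based (not pulsed) ToF. The function names are illustrative and are not taken from the cited works.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def backproject(u, v, fx, fy, cx, cy):
    """Viewing ray (x, y, 1) of pixel (u, v) for a zero-skew pinhole camera."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def triangulate_on_laser_plane(u, v, intrinsics, normal, offset):
    """Intersect the pixel's viewing ray with the laser plane n . X = offset.

    The plane is given in the camera frame and is assumed to come from a
    prior camera/laser calibration; intrinsics is (fx, fy, cx, cy).
    """
    ray = backproject(u, v, *intrinsics)
    denom = sum(n * r for n, r in zip(normal, ray))
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    t = offset / denom  # distance along the ray, in units of the ray's z
    return tuple(t * r for r in ray)


def cw_tof_depth(phase_shift, mod_freq):
    """Depth from the phase shift (rad) of a continuous-wave ToF signal.

    Light travels to the target and back, hence 4*pi instead of 2*pi.
    """
    return SPEED_OF_LIGHT * phase_shift / (4.0 * math.pi * mod_freq)


def cw_tof_unambiguous_range(mod_freq):
    """Largest depth before the measured phase wraps around (shift = 2*pi)."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq)
```

Take intrinsics `(800.0, 800.0, 320.0, 240.0)` and the plane y + z = 0.5. Pixel (320, 320) then maps to a point on that plane at z ≈ 0.455. A 20 MHz continuous-wave ToF camera wraps its phase beyond about 7.5 m, which is one reason multi-frequency modulation is used in practice.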

4.1.2. Passive Methods

| Year | Method | Description | References |
|---|---|---|---|
| 2010 | Monocular vision | Photometric stereo | [89] |
| 2004 | Monocular vision | Shape from texture | [90] |
| 2000 | Monocular vision | Shape from shading | [91] |
| 2018 | Monocular vision | Depth from defocus | [92] |
| 2003 | Monocular vision | Concentric mosaics | [93] |
| 2014 | Monocular vision | Bayesian estimation and convex optimization are combined for probabilistic, real-time dense reconstruction (REMODE). | [94] |
| 2020 | Monocular vision | Deep learning-based monocular depth estimation with a contextual conditional random field network | [95] |
| 2023 | Binocular/multi-view vision | The baseline distance between the two cameras is increased to improve the accuracy of a binocular vision system. | [96] |
| 2018 | Multi-view vision | Deep learning-based multi-view stereo (MVSNet) | [97] |
| 2020 | Multi-view vision | A sparse-to-dense, coarse-to-fine framework for fast and accurate depth estimation in multi-view stereo (MVS) | [98] |
| 2011 | RGB-D camera-based | KinectFusion | [99] |
| 2019 | RGB-D camera-based | ReFusion | [100] |
| 2015 | RGB-D camera-based | DynamicFusion | [101] |
| 2017 | RGB-D camera-based | BundleFusion | [102] |
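
Two rows in the table above rest on simple relations. The binocular row, where a wider baseline improves accuracy, depends on depth from disparity in a rectified stereo pair: Z = fB/d, with a first-order error of ΔZ ≈ Z²Δd/(fB). The RGB-D fusion rows (KinectFusion and its successors) depend on a running weighted average of truncated signed distances per voxel. The sketch below illustrates both under stated assumptions: focal length and disparity in pixels, a constant observation weight, and a single voxel rather than a full GPU volume with camera tracking. It is a simplified illustration with hypothetical function names, not the implementation of any cited system.

```python
def stereo_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (Z in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(depth_m: float, focal_px: float, baseline_m: float,
                       disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty dZ ~= Z**2 * dd / (f * B).

    The error grows with the square of the depth and shrinks in proportion
    to the baseline, which is why widening the baseline helps.
    """
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


def tsdf_update(tsdf: float, weight: float, sdf_obs: float, trunc: float,
                obs_weight: float = 1.0, max_weight: float = 100.0):
    """Fuse one signed-distance observation into a single voxel.

    sdf_obs is the distance from the voxel to the observed surface along the
    camera ray (positive in front of the surface). Returns (tsdf, weight).
    """
    if sdf_obs < -trunc:
        # Voxel lies far behind the observed surface (possibly occluded): skip.
        return tsdf, weight
    d = min(1.0, sdf_obs / trunc)  # normalise and truncate to [-1, 1]
    new_tsdf = (weight * tsdf + obs_weight * d) / (weight + obs_weight)
    # Capping the weight keeps the volume responsive to later changes.
    return new_tsdf, min(weight + obs_weight, max_weight)
```

For instance, take f = 800 px and B = 0.1 m. A disparity of 40 px gives Z = 2.0 m, with a one-pixel disparity error of about 0.05 m. Doubling the baseline to 0.2 m halves that error to about 0.025 m.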

4.2. Deep Learning-Based 3D Reconstruction Algorithms

4.2.1. Voxel-Based 3D Reconstruction

4.2.2. Point Cloud-Based 3D Reconstruction

4.2.3. Mesh-Based 3D Reconstruction

5. Robotic Welding Sensors in Industrial Applications

6. Existing Issues, Proposed Solutions, and Possible Future Work

- Adaptation to changing environmental conditions: robotic welding vision systems often struggle to swiftly adjust to varying lighting, camera angles, and other environmental factors that impact the welding process.

- Limited detection and recognition capabilities: the conventional computer vision techniques used in these systems have a limited ability to detect and recognize objects, which can cause errors during welding.

- Vulnerability to noise and interference: robotic welding vision systems are sensitive to noise and interference from the welding process itself, robot motion, and external factors such as dust and smoke.

- Challenges in depth estimation and 3D reconstruction: variations in material properties and welding techniques contribute to discrepancies in the welding process, leading to difficulties in accurately estimating depth and achieving precise 3D reconstruction.

- Complexity of multi-modal sensor integration: existing welding setups are tightly interconnected and often space-limited, so integrating a multi-modal sensor fusion system requires modifying them to meet new demands. Handling large volumes of data and extracting the relevant information is also challenging and calls for preprocessing and fusion algorithms. Finally, integration requires system-level calibration so that hardware and software communicate seamlessly and the data remain accurate and reliable.

- Develop deep learning for object detection and recognition: The integration of deep learning techniques, like convolutional neural networks (CNNs), can significantly enhance the detection and recognition capabilities of robotic welding vision systems. This empowers them to accurately identify objects and adapt to dynamic environmental conditions.

- Transfer learning for welding robot adaptation: fine-tuning pre-trained deep learning models to the specifics of robotic welding enables the vision system to learn and recognize welding-related objects and features, improving its performance and robustness.

- Develop multi-modal sensor fusion: The fusion of visual data from cameras with other sensors such as laser radar and ultrasonic sensors creates a more comprehensive understanding of the welding environment. This synthesis improves the accuracy and reliability of the vision system.

- Integrate models and hardware: Utilizing diverse sensors to gather depth information and integrating this data into a welding-specific model enhances the precision of depth estimation and 3D reconstruction.

- Systematic design of the fusion system: perform a comprehensive requirements analysis and system evaluation with welding experts to design a multi-modal sensor fusion architecture, and select appropriate algorithms for data extraction and fusion to ensure accurate and reliable results. Carry out data calibration and system integration, including hardware configuration and software interface design. Then calibrate the sensors and assess system performance to ensure stable and reliable welding operations.

- Enhancing robustness in deep learning models: advancing deep learning models to withstand noise and interference will broaden the operational scope of robotic welding vision systems across diverse environmental conditions.

- Infusing domain knowledge into deep learning models: integrating welding-specific expertise into deep learning models can elevate their performance and adaptability within robotic welding applications.

- Real-time processing and feedback: developing mechanisms for real-time processing and feedback empowers robotic welding vision systems to promptly respond to welding environment changes, enhancing weld quality and consistency.

- Autonomous welding systems: integrating deep learning with robotic welding vision systems paves the way for autonomous welding systems capable of executing complex welding tasks without human intervention.

- Multi-modal fusion for robotic welding: merging visual and acoustic signals with welding process parameters can provide a comprehensive understanding of the welding process, enabling the robotic welding system to make more precise decisions and improve weld quality.

- Establishing a welding knowledge base: creating a repository of diverse welding methods and materials enables robotic welding systems to learn and enhance their welding performance and adaptability from this knowledge base.
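
The multi-modal fusion and real-time feedback items above can be illustrated with a scalar Kalman filter. The filter tracks a single quantity, assumed here to be the lateral torch-to-seam offset, and fuses readings from two sensors with different noise levels, for example a laser vision sensor and through-arc sensing. The random-walk process model, the variances, the readings, and the class name are all illustrative assumptions, not a design from the reviewed literature. With a nearly uninformative prior, fusing the two readings this way reduces to the inverse-variance weighted mean.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1D Kalman filter with a random-walk process model.

    Illustrative use: tracking the lateral torch-to-seam offset (an assumed
    state) while fusing readings from several sensors.
    """
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process-noise variance added per control cycle

    def predict(self) -> None:
        # Random walk: the expected state is unchanged, uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        """Fuse one measurement z whose noise variance is r."""
        k = self.p / (self.p + r)           # Kalman gain in [0, 1)
        self.x += k * (z - self.x)          # move toward the measurement
        self.p = self.p * r / (self.p + r)  # equals (1 - k) * p, numerically safer


if __name__ == "__main__":
    # Hypothetical readings (mm): a precise laser vision sensor and a
    # noisier through-arc sensor observing the same offset.
    kf = ScalarKalman(x=0.0, p=1e6, q=0.0)  # nearly uninformative prior
    kf.update(1.0, r=0.01)  # laser vision reading
    kf.update(2.0, r=0.04)  # through-arc reading
    # Approximately the inverse-variance weighted mean
    # (100 * 1.0 + 25 * 2.0) / 125 = 1.2, with variance 1 / 125 = 0.008.
    print(f"fused offset = {kf.x:.4f} mm, variance = {kf.p:.4f}")
```

In a seam-tracking loop, `predict()` would be called once per control cycle and `update()` once per available sensor reading. The sensor with the smaller variance `r` automatically receives more weight.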

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Christensen, N.; Davies, V.L.; Gjermundsen, K. Distribution of Temperature in Arc Welding. Brit. Weld. J. 1965, 12, 54–75.

2. Chin, B.A.; Madsen, N.H.; Goodling, J.S. Infrared Thermography for Sensing the Arc Welding Process. Weld. J. 1983, 62, 227s–234s.

3. Soori, M.; Arezoo, B.; Dastres, R. Artificial Intelligence, Machine Learning and Deep Learning in Advanced Robotics, a Review. Cogn. Robot. 2023, 3, 54–70.

4. Sun, A.; Kannatey-Asibu, E., Jr.; Gartner, M. Sensor Systems for Real-Time Monitoring of Laser Weld Quality. J. Laser Appl. 1999, 11, 153–168.

5. Vilkas, E.P. Automation of the Gas Tungsten Arc Welding Process. Weld. J. 1966, 45, 410s–416s.

6. Wang, J.; Huang, L.; Yao, J.; Liu, M.; Du, Y.; Zhao, M.; Su, Y.; Lu, D. Weld Seam Tracking and Detection Robot Based on Artificial Intelligence Technology. Sensors 2023, 23, 6725.

7. Ramsey, P.W.; Chyle, J.J.; Kuhr, J.N.; Myers, P.S.; Weiss, M.; Groth, W. Infrared Temperature Sensing Systems for Automatic Fusion Welding. Weld. J. 1963, 42, 337–346.

8. Chen, S.B.; Lou, Y.J.; Wu, L.; Zhao, D.B. Intelligent Methodology for Sensing, Modeling and Control of Pulsed GTAW: Part 1-Bead-on-Plate Welding. Weld. J. 2000, 79, 151–263.

9. Kim, E.W.; Allem, C.; Eagar, T.W. Visible Light Emissions during Gas Tungsten Arc Welding and Its Application to Weld Image Improvement. Weld. J. 1987, 66, 369–377.

10. Rai, V.K. Temperature Sensors and Optical Sensors. Appl. Phys. B 2007, 88, 297–303.

11. Romrell, D. Acoustic Emission Weld Monitoring of Nuclear Components. Weld. J. 1973, 52, 81–87.

12. Li, P.; Zhang, Y.-M. Robust Sensing of Arc Length. IEEE Trans. Instrum. Meas. 2001, 50, 697–704.

13. Lebosse, C.; Renaud, P.; Bayle, B.; de Mathelin, M. Modeling and Evaluation of Low-Cost Force Sensors. IEEE Trans. Robot. 2011, 27, 815–822.

14. Kurada, S.; Bradley, C. A Review of Machine Vision Sensors for Tool Condition Monitoring. Comput. Ind. 1997, 34, 55–72.

15. Braggins, D. Oxford Sensor Technology—A Story of Perseverance. Sens. Rev. 1998, 18, 237–241.

16. Jia, X.; Ma, P.; Tarwa, K.; Wang, Q. Machine Vision-Based Colorimetric Sensor Systems for Food Applications. J. Agric. Food Res. 2023, 11, 100503.

17. Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones 2023, 7, 89.

18. Kah, P.; Shrestha, M.; Hiltunen, E.; Martikainen, J. Robotic Arc Welding Sensors and Programming in Industrial Applications. Int. J. Mech. Mater. Eng. 2015, 10, 13.

19. Xu, P.; Xu, G.; Tang, X.; Yao, S. A Visual Seam Tracking System for Robotic Arc Welding. Int. J. Adv. Manuf. Technol. 2008, 37, 70–75.

20. Walk, R.D.; Gibson, E.J. A Comparative and Analytical Study of Visual Depth Perception. Psychol. Monogr. Gen. Appl. 1961, 75, 1–44.

21. Julesz, B. Binocular Depth Perception without Familiarity Cues. Science 1964, 145, 356–362.

22. Julesz, B. Binocular Depth Perception of Computer-Generated Patterns. Bell Syst. Tech. J. 1960, 39, 1125–1162.

23. Cumming, B.; Parker, A. Responses of Primary Visual Cortical Neurons to Binocular Disparity without Depth Perception. Nature 1997, 389, 280–283.

24. Tyler, C.W. Depth Perception in Disparity Gratings. Nature 1974, 251, 140–142.

25. Langlands, N.M.S. Experiments on Binocular Vision. Trans. Opt. Soc. 1926, 28, 45.

26. Livingstone, M.S.; Hubel, D.H. Psychophysical Evidence for Separate Channels for the Perception of Form, Color, Movement, and Depth. J. Neurosci. 1987, 7, 3416–3468.

27. Wheatstone, C. XVIII. Contributions to the Physiology of Vision.—Part the First. On Some Remarkable, and Hitherto Unobserved, Phenomena of Binocular Vision. Philos. Trans. R. Soc. Lond. 1838, 128, 371–394.

28. Parker, A.J. Binocular Depth Perception and the Cerebral Cortex. Nat. Rev. Neurosci. 2007, 8, 379–391.

29. Roberts, L. Machine Perception of Three-Dimensional Solids. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1963.

30. Ban, Y.; Liu, M.; Wu, P.; Yang, B.; Liu, S.; Yin, L.; Zheng, W. Depth Estimation Method for Monocular Camera Defocus Images in Microscopic Scenes. Electronics 2022, 11, 2012.

31. Luo, R.C.; Kay, M.G. Multisensor Integration and Fusion in Intelligent Systems. IEEE Trans. Syst. Man Cybern. 1989, 19, 901–931.

32. Attalla, A.; Attalla, O.; Moussa, A.; Shafique, D.; Raean, S.B.; Hegazy, T. Construction Robotics: Review of Intelligent Features. Int. J. Intell. Robot. Appl. 2023, 7, 535–555.

33. Schlett, T.; Rathgeb, C.; Busch, C. Deep Learning-Based Single Image Face Depth Data Enhancement. Comput. Vis. Image Underst. 2021, 210, 103247.

34. Bloom, L.C.; Mudd, S.A. Depth of Processing Approach to Face Recognition: A Test of Two Theories. J. Exp. Psychol. Learn. Mem. Cogn. 1991, 17, 556–565.

35. Abreu de Souza, M.; Alka Cordeiro, D.C.; Oliveira, J.d.; Oliveira, M.F.A.d.; Bonafini, B.L. 3D Multi-Modality Medical Imaging: Combining Anatomical and Infrared Thermal Images for 3D Reconstruction. Sensors 2023, 23, 1610.

36. Gehrke, S.R.; Phair, C.D.; Russo, B.J.; Smaglik, E.J. Observed Sidewalk Autonomous Delivery Robot Interactions with Pedestrians and Bicyclists. Transp. Res. Interdiscip. Perspect. 2023, 18, 100789.

37. Yang, Y.; Siau, K.; Xie, W.; Sun, Y. Smart Health: Intelligent Healthcare Systems in the Metaverse, Artificial Intelligence, and Data Science Era. J. Organ. End User Comput. 2022, 34, 1–14.

38. Singh, A.; Bankiti, V. Surround-View Vision-Based 3D Detection for Autonomous Driving: A Survey. arXiv 2023, arXiv:2302.06650.

39. Korkut, E.H.; Surer, E. Visualization in Virtual Reality: A Systematic Review. Virtual Real. 2023, 27, 1447–1480.

40. Mirzaei, B.; Nezamabadi-pour, H.; Raoof, A.; Derakhshani, R. Small Object Detection and Tracking: A Comprehensive Review. Sensors 2023, 23, 6887.

41. Onnasch, L.; Roesler, E. A Taxonomy to Structure and Analyze Human–Robot Interaction. Int. J. Soc. Robot. 2021, 13, 833–849.

42. Haug, K.; Pritschow, G. Robust Laser-Stripe Sensor for Automated Weld-Seam-Tracking in the Shipbuilding Industry. In Proceedings of the IECON ’98, Aachen, Germany, 31 August–4 September 1998; pp. 1236–1241.

43. Zhang, Z.; Chen, S. Real-Time Seam Penetration Identification in Arc Welding Based on Fusion of Sound, Voltage and Spectrum Signals. J. Intell. Manuf. 2017, 28, 207–218.

44. Wang, B.; Hu, S.J.; Sun, L.; Freiheit, T. Intelligent Welding System Technologies: State-of-the-Art Review and Perspectives. J. Manuf. Syst. 2020, 56, 373–391.

45. Zhang, K.; Yan, M.; Huang, T.; Zheng, J.; Li, Z. 3D Reconstruction of Complex Spatial Weld Seam for Autonomous Welding by Laser Structured Light Scanning. J. Manuf. Process. 2019, 39, 200–207.

46. Yang, L.; Liu, Y.; Peng, J. Advances in Techniques of the Structured Light Sensing in Intelligent Welding Robots: A Review. Int. J. Adv. Manuf. Technol. 2020, 110, 1027–1046.

47. Lei, T.; Rong, Y.; Wang, H.; Huang, Y.; Li, M. A Review of Vision-Aided Robotic Welding. Comput. Ind. 2020, 123, 103326.

48. Yang, J.; Wang, C.; Jiang, B.; Song, H.; Meng, Q. Visual Perception Enabled Industry Intelligence: State of the Art, Challenges and Prospects. IEEE Trans. Ind. Inform. 2021, 17, 2204–2219.

49. Ottoni, A.L.C.; Novo, M.S.; Costa, D.B. Deep Learning for Vision Systems in Construction 4.0: A Systematic Review. Signal Image Video Process. 2023, 17, 1821–1829.

50. Siores, E. Self Tuning Through-the-Arc Sensing for Robotic M.I.G. Welding. In Control 90: The Fourth Conference on Control Engineering; Control Technology for Australian Industry; Preprints of Papers; Institution of Engineers: Barton, ACT, Australia, 1990; pp. 146–149.

51. Fridenfalk, M.; Bolmsjö, G. Design and Validation of a Universal 6D Seam-Tracking System in Robotic Welding Using Arc Sensing. Adv. Robot. 2004, 18, 1–21.

52. Lu, B. Basics of Welding Automation; Huazhong Institute of Technology Press: Wuhan, China, 1985.

53. Available online: www.abb.com (accessed on 1 November 2023).

54. Fujimura, H. Joint Tracking Control Sensor of GMAW: Development of Method and Equipment for Position Sensing in Welding with Electric Arc Signals (Report 1). Trans. Jpn. Weld. Soc. 1987, 18, 32–40.

55. Zhu, B.; Xiong, J. Increasing Deposition Height Stability in Robotic GTA Additive Manufacturing Based on Arc Voltage Sensing and Control. Robot. Comput.-Integr. Manuf. 2020, 65, 101977.

56. Mao, Y.; Xu, G. A Real-Time Method for Detecting Weld Deviation of Corrugated Plate Fillet Weld by Laser Vision Sensor. Optik 2022, 260, 168786.

57. Ushio, M.; Mao, W. Sensors for Arc Welding: Advantages and Limitations. Trans. JWRI 1994, 23, 135–141.

58. Fenn, R. Ultrasonic Monitoring and Control during Arc Welding. Weld. J. 1985, 9, 18–22.

59. Graham, G.M. On-Line Laser Ultrasonic for Control of Robotic Welding Quality. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 1995.

60. Abdullah, B.M.; Mason, A.; Al-Shamma’a, A. Defect Detection of the Weld Bead Based on Electromagnetic Sensing. J. Phys. Conf. Ser. 2013, 450, 012039.

61. You, B.-H.; Kim, J.-W. A Study on an Automatic Seam Tracking System by Using an Electromagnetic Sensor for Sheet Metal Arc Welding of Butt Joints. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2002, 216, 911–920.

62. Kim, J.-W.; Shin, J.-H. A Study of a Dual-Electromagnetic Sensor System for Weld Seam Tracking of I-Butt Joints. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2003, 217, 1305–1313.

63. Xu, F.; Xu, Y.; Zhang, H.; Chen, S. Application of Sensing Technology in Intelligent Robotic Arc Welding: A Review. J. Manuf. Process. 2022, 79, 854–880.

64. Boillot, J.P.; Noruk, J. The Benefits of Laser Vision in Robotic Arc Welding. Weld. J. 2002, 81, 32–34.

65. Zhang, P.; Wang, J.; Zhang, F.; Xu, P.; Li, L.; Li, B. Design and Analysis of Welding Inspection Robot. Sci. Rep. 2022, 12, 22651.

66. Wexler, M.; Boxtel, J.J.A.v. Depth Perception by the Active Observer. Trends Cogn. Sci. 2005, 9, 431–438.

67. Wikle, H.C., III; Zee, R.H.; Chin, B.A. A Sensing System for Weld Process Control. J. Mater. Process. Technol. 1999, 89, 254–259.

68. Rout, A.; Deepak, B.B.V.L.; Biswal, B.B. Advances in Weld Seam Tracking Techniques for Robotic Welding: A Review. Robot. Comput.-Integr. Manuf. 2019, 56, 12–37.

69. Griffin, B.; Florence, V.; Corso, J. Video Object Segmentation-Based Visual Servo Control and Object Depth Estimation on A Mobile Robot. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–8 January 2022; pp. 1647–1657.

70. Jiang, S.; Wang, S.; Yi, Z.; Zhang, M.; Lv, X. Autonomous Navigation System of Greenhouse Mobile Robot Based on 3D Lidar and 2D Lidar SLAM. Front. Plant Sci. 2022, 13, 815218.

71. Nomura, K.; Fukushima, K.; Matsumura, T.; Asai, S. Burn-through Prediction and Weld Depth Estimation by Deep Learning Model Monitoring the Molten Pool in Gas Metal Arc Welding with Gap Fluctuation. J. Manuf. Process. 2021, 61, 590–600.

72. Garcia, F.; Aouada, D.; Abdella, H.K.; Solignac, T.; Mirbach, B.; Ottersten, B. Depth Enhancement by Fusion for Passive and Active Sensing. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 506–515.

73. Yang, A.; Scott, G.J. Efficient Passive Sensing Monocular Relative Depth Estimation. In Proceedings of the 2019 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 15–17 October 2019; pp. 1–9.

74. Li, Q.; Biswas, M.; Pickering, M.R.; Frater, M.R. Accurate Depth Estimation Using Structured Light and Passive Stereo Disparity Estimation. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 969–972.

75. Cai, Z.; Liu, X.; Pedrini, G.; Osten, W.; Peng, X. Accurate Depth Estimation in Structured Light Fields. Opt. Express 2019, 27, 13532–13546.

76. Nguyen, T.T.; Slaughter, D.C.; Max, N.; Maloof, J.N.; Sinha, N. Structured Light-Based 3D Reconstruction System for Plants. Sensors 2015, 15, 18587–18612.

77. Xiang, S.; Liu, L.; Deng, H.; Wu, J.; Yang, Y.; Yu, L. Fast Depth Estimation with Cost Minimization for Structured Light Field. Opt. Express 2021, 29, 30077–30093.

78. Jia, T.; Li, X.; Yang, X.; Lin, S.; Liu, Y.; Chen, D. Adaptive Stereo: Depth Estimation from Adaptive Structured Light. Opt. Laser Technol. 2024, 169, 110076.

79. Zhu, X.; Han, Z.; Zhang, Z.; Song, L.; Wang, H.; Guo, Q. PCTNet: Depth Estimation from Single Structured Light Image with a Parallel CNN-Transformer Network. Meas. Sci. Technol. 2023, 34, 085402.

80. Li, Y.; Liu, X.; Dong, W.; Zhou, H.; Bao, H.; Zhang, G.; Zhang, Y.; Cui, Z. DELTAR: Depth Estimation from a Light-Weight ToF Sensor and RGB Image. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 619–636.

81. Fang, Y.; Wang, X.; Sun, Z.; Zhang, K.; Su, B. Study of the Depth Accuracy and Entropy Characteristics of a ToF Camera with Coupled Noise. Opt. Lasers Eng. 2020, 128, 106001.

82. Alenyà, G.; Foix, S.; Torras, C. ToF Cameras for Active Vision in Robotics. Sens. Actuators A Phys. 2014, 218, 10–22.

83. Davis, J.; Ramamoorthi, R.; Rusinkiewicz, S. Spacetime Stereo: A Unifying Framework for Depth from Triangulation. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; pp. II–359.

84. Kos, M.; Arko, E.; Kosler, H.; Jezeršek, M. Penetration-Depth Control in a Remote Laser-Welding System Based on an Optical Triangulation Loop. Opt. Lasers Eng. 2021, 139, 106464.

85. Wu, C.; Chen, B.; Ye, C. Detecting Defects on Corrugated Plate Surfaces Using a Differential Laser Triangulation Method. Opt. Lasers Eng. 2020, 129, 106064.

86. Liao, Y.; Huang, L.; Wang, Y.; Kodagoda, S.; Yu, Y.; Liu, Y. Parse Geometry from a Line: Monocular Depth Estimation with Partial Laser Observation. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5059–5066.

87. Jeon, I.; Yang, L.; Ryu, K.; Sohn, H. Online Melt Pool Depth Estimation during Directed Energy Deposition Using Coaxial Infrared Camera, Laser Line Scanner, and Artificial Neural Network. Addit. Manuf. 2021, 47, 102295.

88. Shehata, H.M.; Mohamed, Y.S.; Abdellatif, M.; Awad, T.H. Depth Estimation of Steel Cracks Using Laser and Image Processing Techniques. Alex. Eng. J. 2018, 57, 2713–2718.

89. Vogiatzis, G.; Hernández, C. Practical 3D Reconstruction Based on Photometric Stereo. In Computer Vision: Detection, Recognition and Reconstruction; Cipolla, R., Battiato, S., Farinella, G.M., Eds.; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2010; pp. 313–345.

90. Yemez, Y.; Schmitt, F. 3D Reconstruction of Real Objects with High Resolution Shape and Texture. Image Vis. Comput. 2004, 22, 1137–1153.

- Quartucci Forster, C.H.; Tozzi, C.L. Towards 3D Reconstruction of Endoscope Images Using Shape from Shading. In Proceedings of the 13th Brazilian Symposium on Computer Graphics and Image Processing (Cat. No.PR00878), Gramado, Brazil, 17–20 October 2000; pp. 90–96. [Google Scholar]

- Carvalho, M.; Le Saux, B.; Trouve-Peloux, P.; Almansa, A.; Champagnat, F. Deep Depth from Defocus: How Can Defocus Blur Improve 3D Estimation Using Dense Neural Networks? In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Feldmann, I.; Kauff, P.; Eisert, P. Image Cube Trajectory Analysis for 3D Reconstruction of Concentric Mosaics. In Proceedings of the VMV, Munich, Germany, 19–21 November 2003; pp. 569–576. [Google Scholar]

- Pizzoli, M.; Forster, C.; Scaramuzza, D. REMODE: Probabilistic, Monocular Dense Reconstruction in Real Time. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2609–2616. [Google Scholar]

- Liu, J.; Li, Q.; Cao, R.; Tang, W.; Qiu, G. A Contextual Conditional Random Field Network for Monocular Depth Estimation. Image Vision Comput. 2020, 98, 103922. [Google Scholar] [CrossRef]

- Liu, X.; Yang, L.; Chu, X.; Zhou, L. A Novel Phase Unwrapping Method for Binocular Structured Light 3D Reconstruction Based on Deep Learning. Optik 2023, 279, 170727. [Google Scholar] [CrossRef]

- Yao, Y.; Luo, Z.; Li, S.; Fang, T.; Quan, L. MVSNet: Depth Inference for Unstructured Multi-View Stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 767–783. [Google Scholar]

- Yu, Z.; Gao, S. Fast-MVSNet: Sparse-to-Dense Multi-View Stereo with Learned Propagation and Gauss-Newton Refinement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020; pp. 1949–1958. [Google Scholar]

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-Time 3D Reconstruction and Interaction Using a Moving Depth Camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA, 16 October 2011; pp. 559–568. [Google Scholar]

- Palazzolo, E.; Behley, J.; Lottes, P.; Giguère, P.; Stachniss, C. ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 7855–7862. [Google Scholar]

- Newcombe, R.A.; Fox, D.; Seitz, S.M. DynamicFusion: Reconstruction and Tracking of Non-Rigid Scenes in Real-Time. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 580–587. [Google Scholar]

- Dai, A.; Nießner, M.; Zollhöfer, M.; Izadi, S.; Theobalt, C. BundleFusion: Real-Time Globally Consistent 3D Reconstruction Using On-the-Fly Surface Reintegration. ACM Trans. Graph. 2017, 36, 76a. [Google Scholar] [CrossRef]

- Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the Art on 3D Reconstruction with RGB-D Cameras. Comput. Graph. Forum. 2018, 37, 625–652. [Google Scholar] [CrossRef]

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image Using a Multi-Scale Deep Network. Adv. Neural Inf. Process. Syst. 2014, 27, 2366–2374. [Google Scholar]

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A Deep Representation for Volumetric Shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 1912–1920. [Google Scholar]

- Choy, C.B.; Xu, D.; Gwak, J.; Chen, K.; Savarese, S. 3D-R2N2: A Unified Approach for Single and Multi-View 3D Object Reconstruction. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 628–644.

- Girdhar, R.; Fouhey, D.F.; Rodriguez, M.; Gupta, A. Learning a Predictable and Generative Vector Representation for Objects. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 484–499.

- Yan, X.; Yang, J.; Yumer, E.; Guo, Y.; Lee, H. Perspective Transformer Nets: Learning Single-View 3D Object Reconstruction without 3D Supervision. In Proceedings of the 30th International Conference on Neural Information Processing Systems (NIPS’16), Barcelona, Spain, 5–10 December 2016; pp. 1696–1704.

- Fan, H.; Su, H.; Guibas, L.J. A Point Set Generation Network for 3D Object Reconstruction from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 605–613.

- Lin, C.-H.; Kong, C.; Lucey, S. Learning Efficient Point Cloud Generation for Dense 3D Object Reconstruction. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Chen, R.; Han, S.; Xu, J.; Su, H. Point-Based Multi-View Stereo Network. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1538–1547.

- Wang, Y.; Ran, T.; Liang, Y.; Zheng, G. An attention-based and deep sparse priori cascade multi-view stereo network for 3D reconstruction. Comput. Graph. 2023, 116, 383–392.

- Chen, J.; Kira, Z.; Cho, Y.K. Deep Learning Approach to Point Cloud Scene Understanding for Automated Scan to 3D Reconstruction. J. Comput. Civ. Eng. 2019, 33, 04019027.

- Mandikal, P.; Navaneet, K.L.; Agarwal, M.; Babu, R.V. 3D-LMNet: Latent Embedding Matching for Accurate and Diverse 3D Point Cloud Reconstruction from a Single Image. arXiv 2018, arXiv:1807.07796.

- Ren, S.; Hou, J.; Chen, X.; He, Y.; Wang, W. GeoUDF: Surface Reconstruction from 3D Point Clouds via Geometry-Guided Distance Representation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 14214–14224.

- Kato, H.; Ushiku, Y.; Harada, T. Neural 3D Mesh Renderer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3907–3916.

- Piazza, E.; Romanoni, A.; Matteucci, M. Real-Time CPU-Based Large-Scale Three-Dimensional Mesh Reconstruction. IEEE Robot. Autom. Lett. 2018, 3, 1584–1591.

- Pan, J.; Han, X.; Chen, W.; Tang, J.; Jia, K. Deep Mesh Reconstruction from Single RGB Images via Topology Modification Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9964–9973.

- Choi, H.; Moon, G.; Lee, K.M. Pose2Mesh: Graph Convolutional Network for 3D Human Pose and Mesh Recovery from a 2D Human Pose. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 769–787.

- Henderson, P.; Ferrari, V. Learning Single-Image 3D Reconstruction by Generative Modelling of Shape, Pose and Shading. Int. J. Comput. Vis. 2020, 128, 835–854.

- Wang, N.; Zhang, Y.; Li, Z.; Fu, Y.; Yu, H.; Liu, W.; Xue, X.; Jiang, Y.-G. Pixel2Mesh: 3D Mesh Model Generation via Image Guided Deformation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3600–3613.

- Wei, X.; Chen, Z.; Fu, Y.; Cui, Z.; Zhang, Y. Deep Hybrid Self-Prior for Full 3D Mesh Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 10 March 2021; pp. 5805–5814.

- Majeed, T.; Wahid, M.A.; Ali, F. Applications of Robotics in Welding. Int. J. Emerg. Res. Manag. Technol. 2018, 7, 30–36.

- Eren, B.; Demir, M.H.; Mistikoglu, S. Recent Developments in Computer Vision and Artificial Intelligence Aided Intelligent Robotic Welding Applications. Int. J. Adv. Manuf. Technol. 2023, 126, 4763–4809.

- Lin, T.; Chen, H.B.; Li, W.H.; Chen, S.B. Intelligent Methodology for Sensing, Modeling, and Control of Weld Penetration in Robotic Welding System. Ind. Robot Int. J. 2009, 36, 585–593.

- Jones, J.E.; Rhoades, V.L.; Beard, J.; Arner, R.M.; Dydo, J.R.; Fast, K.; Bryant, A.; Gaffney, J.H. Development of a Collaborative Robot (COBOT) for Increased Welding Productivity and Quality in the Shipyard. In Proceedings of the SNAME Maritime Convention, Providence, RI, USA, 4–6 November 2015; p. D011S001R005.

- Ang, M.H.; Lin, W.; Lim, S. A Walk-through Programmed Robot for Welding in Shipyards. Ind. Robot Int. J. 1999, 26, 377–388.

- Ferreira, L.A.; Figueira, Y.L.; Iglesias, I.F.; Souto, M.Á. Offline CAD-Based Robot Programming and Welding Parametrization of a Flexible and Adaptive Robotic Cell Using Enriched CAD/CAM System for Shipbuilding. Procedia Manuf. 2017, 11, 215–223.

- Lee, D. Robots in the Shipbuilding Industry. Robot. Comput.-Integr. Manuf. 2014, 30, 442–450.

- Pellegrinelli, S.; Pedrocchi, N.; Tosatti, L.M.; Fischer, A.; Tolio, T. Multi-Robot Spot-Welding Cells for Car-Body Assembly: Design and Motion Planning. Robot. Comput.-Integr. Manuf. 2017, 44, 97–116.

- Walz, D.; Werz, M.; Weihe, S. A New Concept for Producing High Strength Aluminum Line-Joints in Car Body Assembly by a Robot Guided Friction Stir Welding Gun. In Advances in Automotive Production Technology–Theory and Application: Stuttgart Conference on Automotive Production (SCAP2020); Springer: Berlin/Heidelberg, Germany, 2021; pp. 361–368.

- Chai, X.; Zhang, N.; He, L.; Li, Q.; Ye, W. Kinematic Sensitivity Analysis and Dimensional Synthesis of a Redundantly Actuated Parallel Robot for Friction Stir Welding. Chin. J. Mech. Eng. 2020, 33, 1.

- Liu, Z.; Bu, W.; Tan, J. Motion Navigation for Arc Welding Robots Based on Feature Mapping in a Simulation Environment. Robot. Comput.-Integr. Manuf. 2010, 26, 137–144.

- Jin, Z.; Li, H.; Zhang, C.; Wang, Q.; Gao, H. Online Welding Path Detection in Automatic Tube-to-Tubesheet Welding Using Passive Vision. Int. J. Adv. Manuf. Technol. 2017, 90, 3075–3084.

- Yao, T.; Gai, Y.; Liu, H. Development of a robot system for pipe welding. In Proceedings of the 2010 International Conference on Measuring Technology and Mechatronics Automation, Changsha, China, 13–14 March 2010; pp. 1109–1112.

- Luo, H.; Zhao, F.; Guo, S.; Yu, C.; Liu, G.; Wu, T. Mechanical Performance Research of Friction Stir Welding Robot for Aerospace Applications. Int. J. Adv. Robot. Syst. 2021, 18, 1729881421996543.

- Haitao, L.; Tingke, W.; Jia, F.; Fengqun, Z. Analysis of Typical Working Conditions and Experimental Research of Friction Stir Welding Robot for Aerospace Applications. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2021, 235, 1045–1056.

- Bres, A.; Monsarrat, B.; Dubourg, L.; Birglen, L.; Perron, C.; Jahazi, M.; Baron, L. Simulation of robotic friction stir welding of aerospace components. Ind. Robot Int. J. 2010, 37, 36–50.

- Caggiano, A.; Nele, L.; Sarno, E.; Teti, R. 3D Digital Reconfiguration of an Automated Welding System for a Railway Manufacturing Application. Procedia CIRP 2014, 25, 39–45.

- Wu, W.; Kong, L.; Liu, W.; Zhang, C. Laser Sensor Weld Beads Recognition and Reconstruction for Rail Weld Beads Grinding Robot. In Proceedings of the 2017 5th International Conference on Mechanical, Automotive and Materials Engineering (CMAME), Guangzhou, China, 1–3 August 2017; pp. 143–148.

- Kochan, A. Automating the construction of railway carriages. Ind. Robot Int. J. 2000, 27, 108–110.

- Luo, Y.; Tao, J.; Sun, Q.; Deng, Z. A New Underwater Robot for Crack Welding in Nuclear Power Plants. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 77–82.

- French, R.; Marin-Reyes, H.; Benakis, M. Advanced Real-Time Weld Monitoring Evaluation Demonstrated with Comparisons of Manual and Robotic TIG Welding Used in Critical Nuclear Industry Fabrication. In Advances in Ergonomics of Manufacturing: Managing the Enterprise of the Future: Proceedings of the AHFE 2017 International Conference on Human Aspects of Advanced Manufacturing, Los Angeles, CA, USA, 17–21 July 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 3–13.

- Gao, Y.; Lin, J.; Chen, Z.; Fang, M.; Li, X.; Liu, Y.H. Deep-Learning Based Robotic Manipulation of Flexible PCBs. In Proceedings of the 2020 IEEE International Conference on Real-Time Computing and Robotics (IEEE RCAR 2020), Hokkaido, Japan, 28–29 September 2020; IEEE: Washington, DC, USA, 2020; pp. 164–170.

- Liu, F.; Shang, W.; Chen, X.; Wang, Y.; Kong, X. Using Deep Reinforcement Learning to Guide PCBS Welding Robot to Solve Multi-Objective Optimization Tasks. In Proceedings of the Third International Conference on Advanced Algorithms and Neural Networks (AANN 2023), Qingdao, China, 5–7 May 2023; SPIE: Bellingham, WA, USA, 2023; Volume 12791, pp. 560–566.

- Nagata, M.; Baba, N.; Tachikawa, H.; Shimizu, I.; Aoki, T. Steel Frame Welding Robot Systems and Their Application at the Construction Site. Comput.-Aided Civ. Infrastruct. Eng. 1997, 12, 15–30.

- Heimig, T.; Kerber, E.; Stumm, S.; Mann, S.; Reisgen, U.; Brell-Cokcan, S. Towards Robotic Steel Construction through Adaptive Incremental Point Welding. Constr. Robot. 2020, 4, 49–60.

- Prusak, Z.; Tadeusiewicz, R.; Jastrzębski, R.; Jastrzębska, I. Advances and Perspectives in Using Medical Informatics for Steering Surgical Robots in Welding and Training of Welders Applying Long-Distance Communication Links. Weld. Technol. Rev. 2020, 92, 37–49.

- Wu, Y.; Yang, M.; Zhang, J. Open-Closed-Loop Iterative Learning Control with the System Correction Term for the Human Soft Tissue Welding Robot in Medicine. Math. Probl. Eng. 2020, 2020, 1–9.

- Hatwig, J.; Reinhart, G.; Zaeh, M.F. Automated Task Planning for Industrial Robots and Laser Scanners for Remote Laser Beam Welding and Cutting. Prod. Eng. 2010, 4, 327–332.

- Lu, X.; Liu, W.; Wu, Y. Review of Sensors and It’s Applications in the Welding Robot. In Proceedings of the 2014 International Conference on Robotic Welding, Intelligence and Automation (RWIA’2014), Shanghai, China, 25 October 2014; pp. 337–349.

- Shah, H.N.M.; Sulaiman, M.; Shukor, A.Z.; Jamaluddin, M.H.; Rashid, M.Z.A. A Review Paper on Vision Based Identification, Detection and Tracking of Weld Seams Path in Welding Robot Environment. Mod. Appl. Sci. 2016, 10, 83–89.

| Year | Method | Description | References |
|---|---|---|---|
| 2014 | Voxel | A supervised coarse-to-fine deep learning network consisting of two sub-networks is proposed for depth estimation. | [104] |
| 2015 | Voxel | A method is proposed that represents geometric 3D shapes as a probability distribution of binary variables on a 3D voxel grid. | [105] |
| 2016 | Voxel | The 3D-R2N2 model uses an encoder–3D-LSTM–decoder architecture to map 2D images to 3D voxel models, enabling voxel-based single-view and multi-view 3D reconstruction. | [106] |
| 2016 | Voxel | A learned vector representation makes it feasible to predict voxels from 2D images and to perform 3D model retrieval. | [107] |
| 2016 | Voxel | A novel encoder–decoder network is proposed that incorporates a new projection loss defined by projection transformations. | [108] |
| 2017 | Point cloud | 3D geometry generation networks based on point cloud representations are explored. | [109] |
| 2018 | Point cloud | A novel 3D generation framework is proposed to effectively generate target shapes in the form of dense point clouds. | [110] |
| 2019 | Point cloud | A novel point cloud-based multi-view stereo network is proposed that directly processes the target scene as a point cloud, providing a more efficient representation, especially in high-resolution scenarios. | [111] |
| 2023 | Point cloud | An attention-based deep sparse prior cascade multi-view stereo network is proposed for 3D reconstruction. | [112] |
| 2019 | Point cloud | A data-driven deep learning framework is proposed to automatically detect and classify building elements in point cloud scenes obtained by laser scanning. | [113] |
| 2019 | Point cloud | 3D-LMNet is proposed as a latent embedding matching method for 3D point cloud reconstruction from a single image. | [114] |
| 2023 | Point cloud | A learning-based method called GeoUDF is proposed to address the long-standing and challenging problem of reconstructing discrete surfaces from sparse point clouds. | [115] |
| 2018 | Mesh | 2D supervision is used to perform gradient-based 3D mesh editing operations. | [116] |
| 2018 | Mesh | The state-of-the-art incremental manifold mesh algorithm proposed by Litvinov and Lhuillier is improved and extended by Romanoni and Matteucci. | [117] |
| 2019 | Mesh | A passive translation-based method is proposed for single-view mesh reconstruction that can generate high-quality meshes with complex topological structures from a single genus-zero template mesh. | [118] |
| 2020 | Mesh | Pose2Mesh is proposed as a novel system based on graph convolutional neural networks that directly estimates the 3D coordinates of human body mesh vertices from a 2D human pose. | [119] |
| 2020 | Mesh | Different mesh parameterizations are employed so that useful modeling priors, such as smoothness or composition from primitives, can be incorporated. | [120] |
| 2021 | Mesh | A novel end-to-end deep learning architecture is proposed that generates 3D shapes from a single color image. It represents the 3D mesh in a graph neural network and generates accurate geometry by progressively deforming an ellipsoid. | [121] |
| 2021 | Mesh | A deep learning method based on network self-priors is proposed to recover complete 3D models, consisting of triangulated meshes and texture maps, from colored 3D point clouds. | [122] |
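The voxel-based entries above ([105], [106]) describe a shape as binary occupancy variables on a 3D grid. As a minimal illustration, assuming a point cloud that is already expressed in a fixed camera or robot frame, the sketch below converts points into a sparse binary occupancy grid and compares two grids with intersection-over-union (IoU), a common overlap measure for voxel reconstructions. The function names and parameters (`voxelize`, `to_dense`, `voxel_iou`, `voxel_size`, `dims`) are hypothetical and do not reproduce any of the cited networks.

```python
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], origin: Point, voxel_size: float,
             dims: Tuple[int, int, int]) -> Set[Voxel]:
    """Map 3D points to the set of occupied voxel indices (a sparse binary grid).

    Points outside the grid of `dims` cells starting at `origin` are discarded.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    occupied: Set[Voxel] = set()
    for x, y, z in points:
        # Floor division assigns each coordinate to the cell that contains it.
        i = int((x - origin[0]) // voxel_size)
        j = int((y - origin[1]) // voxel_size)
        k = int((z - origin[2]) // voxel_size)
        if 0 <= i < dims[0] and 0 <= j < dims[1] and 0 <= k < dims[2]:
            occupied.add((i, j, k))
    return occupied


def to_dense(occupied: Set[Voxel], dims: Tuple[int, int, int]) -> List[List[List[int]]]:
    """Expand the sparse set into a dense nested-list grid of 0/1 values."""
    nx, ny, nz = dims
    grid = [[[0] * nz for _ in range(ny)] for _ in range(nx)]
    for i, j, k in occupied:
        grid[i][j][k] = 1
    return grid


def voxel_iou(a: Set[Voxel], b: Set[Voxel]) -> float:
    """Intersection-over-union of two occupancy grids."""
    union = a | b
    if not union:
        return 1.0  # convention: two empty grids agree perfectly
    return len(a & b) / len(union)


if __name__ == "__main__":
    scan = [(0.5, 0.5, 0.5), (1.5, 0.2, 0.3), (1.7, 0.9, 0.1), (10.0, 0.0, 0.0)]
    occ = voxelize(scan, origin=(0.0, 0.0, 0.0), voxel_size=1.0, dims=(4, 4, 4))
    print(sorted(occ))                             # [(0, 0, 0), (1, 0, 0)]
    print(voxel_iou(occ, {(1, 0, 0), (2, 0, 0)}))  # 0.3333333333333333
```

Storing only occupied indices keeps memory proportional to the surface rather than to the full grid; `to_dense` is provided for methods that expect a fixed-size tensor-like input.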

| Year | Area | Key Technology | Description | References |
|---|---|---|---|---|
| 2015 | Shipyard | Human–robot interaction mobile welding robot | A mobile welding robot with human–robot interaction successfully produced welds remotely. | [126] |
| 1999 | Shipyard | Ship welding robot system | A walk-through programmed welding robot system was developed for shipyards. | [127] |
| 2017 | Shipyard | Super flexible welding robot | A super flexible welding robot module with 9 degrees of freedom was developed. | [128] |
| 2014 | Shipyard | Welding vehicle and six-axis robotic arm | A new type of welding robot system was developed. | [129] |
| 2017 | Automobile | Multi-robot welding system | An extended formulation of the design and motion planning problems for a multi-robot welding system was proposed. | [130] |
| 2021 | Automobile | Robot-guided friction stir welding gun | A new type of robot-guided friction stir welding gun was developed. | [131] |
| 2020 | Automobile | Friction stir welding robot | A redundantly actuated 2UPR-2RPU parallel robotic system for friction stir welding was proposed. | [132] |
| 2010 | Automobile | Arc welding robot | A motion navigation method based on feature mapping in a simulated environment was proposed, covering initial position guidance and weld seam tracking. | [133] |
| 2017 | Machinery | Visual system calibration program | A calibration procedure for the visual system was proposed, yielding the position relationship between the camera and the robot. | [134] |
| 2010 | Machinery | Robot system for welding seawater desalination pipes | A robotic system for welding and cutting seawater desalination pipes was introduced. | [135] |
| 2021 | Aerospace | Aerospace friction stir welding robot | Based on an analysis of the robot's system composition and configuration, the loading conditions on the robot arm during welding were simulated, and the results were used for strength and fatigue checks. | [136] |
| 2021 | Aerospace | New type of friction stir welding robot | An iterative closest point (ICP) algorithm was used to plan the welding trajectory for the most complex petal welding conditions. | [137] |
| 2010 | Aerospace | Industrial robot | Industrial robots were used for the friction stir welding (FSW) of metal structures, with a focus on the assembly of aluminum alloy aircraft parts. | [138] |
| 2014 | Railway | Industrial robot | The system was developed and implemented based on a three-axis motion device and a visual system composed of a camera, a laser head, and a band-pass filter. | [139] |
| 2017 | Railway | Rail weld bead grinding robot | A method for measuring and reconstructing steel rail weld beads was proposed. | [140] |
| 2000 | Railway | Industrial robot | Automated welding production of railroad car bodies was introduced, involving friction stir welding, laser welding, and other advanced welding techniques. | [141] |
| 2018 | Nuclear | New type of underwater welding robot | An underwater robot was developed for welding cracks in nuclear power plants and other underwater scenarios. | [142] |
| 2017 | Nuclear | Robot TIG welding | Manual and robotic TIG welding used in critical nuclear industry fabrication were compared. | [143] |
| 2020 | PCB | Flexible PCB welding robot | A deep learning-based automatic welding scheme for flexible PCBs was proposed. | [144] |
| 2023 | PCB | Soldering robot | Deep reinforcement learning was used to optimize the PCB welding sequence, which is crucial for improving the welding speed and safety of robots. | [145] |
| 1997 | Construction | Steel frame structure welding robot | Two welding robot systems were developed to rationalize the welding of steel frame structures. | [146] |
| 2020 | Construction | Steel frame structure welding robot | The adaptive tool path of the robot system enabled welds at complex approach angles, increasing the potential of the process. | [147] |
| 2020 | Medical equipment | Surgical robot performing remote welding | The challenges of using surgical robots for remote welding, with digital cameras observing the welding area, were described, especially the difficulty of detecting weld pool boundaries. | [148] |
| 2020 | Medical equipment | Intelligent welding system for human soft tissue | By combining manual welding machines with automatic welding systems, intelligent welding systems for human soft tissue welding could be developed in medicine. | [149] |
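Entry [137] notes that an iterative closest point (ICP) algorithm was used to plan welding trajectories. ICP alternates two steps: match each point of one set to its nearest neighbour in the other, then solve for the rigid motion that best aligns the matched pairs, repeating until the error stops decreasing. The sketch below is a simplified 2D point-to-point version with brute-force matching and a closed-form rotation estimate; it is not the implementation used in [137]. The seam model, the function names (`rigid_fit`, `transform`, `icp`), and the tolerance values are hypothetical. A practical 3D system would typically use an SVD-based (Kabsch) fit, a k-d tree for matching, and a reasonable initial pose, because ICP converges only locally.

```python
import math
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]


def rigid_fit(src: Sequence[Point2], dst: Sequence[Point2]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    c_sum = s_sum = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        c_sum += ax * bx + ay * by  # sum of dot products   -> cosine term
        s_sum += ax * by - ay * bx  # sum of cross products -> sine term
    theta = math.atan2(s_sum, c_sum)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(theta: float, tx: float, ty: float, pts: Sequence[Point2]) -> List[Point2]:
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def nearest(p: Point2, pts: Sequence[Point2]) -> Point2:
    """Brute-force nearest neighbour (a k-d tree would be used for large clouds)."""
    return min(pts, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def mean_sq_error(a: Sequence[Point2], b: Sequence[Point2]) -> float:
    return sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p, q in zip(a, b)) / len(a)


def icp(src: Sequence[Point2], dst: Sequence[Point2], max_iter: int = 50,
        tol: float = 1e-12) -> Tuple[float, float, float, float]:
    """Align src to dst; return accumulated (theta, tx, ty) and the final mean squared error."""
    theta_acc, tx_acc, ty_acc = 0.0, 0.0, 0.0
    cur = list(src)
    prev_err = math.inf
    for _ in range(max_iter):
        matches = [nearest(p, dst) for p in cur]
        err = mean_sq_error(cur, matches)
        if prev_err - err < tol:  # stop once the error no longer decreases
            break
        prev_err = err
        th, tx, ty = rigid_fit(cur, matches)
        cur = transform(th, tx, ty, cur)
        # Compose the step with the running transform: t_acc <- R_step * t_acc + t_step.
        c, s = math.cos(th), math.sin(th)
        tx_acc, ty_acc = c * tx_acc - s * ty_acc + tx, s * tx_acc + c * ty_acc + ty
        theta_acc += th
    final = [nearest(p, dst) for p in cur]
    return theta_acc, tx_acc, ty_acc, mean_sq_error(cur, final)


if __name__ == "__main__":
    # Hypothetical L-shaped seam model sampled at unit spacing.
    model = [(float(x), 0.0) for x in range(5)] + [(4.0, float(y)) for y in range(1, 4)]
    # Simulated measured seam: the model rotated by 0.02 rad and shifted.
    measured = transform(0.02, 0.05, -0.03, model)
    print(icp(model, measured))
```

Here the simulated misalignment is small relative to the point spacing, so the first nearest-neighbour matches are already correct and the recovered transform should be close to (0.02, 0.05, -0.03); larger misalignments may converge to a wrong local minimum.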

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Li, L.; Xu, P. Visual Sensing and Depth Perception for Welding Robots and Their Industrial Applications. Sensors 2023, 23, 9700. https://doi.org/10.3390/s23249700