Quaternary Categorization Strategy for Reconstructing High-Reflectivity Surface in Structured Light Illumination

Abstract

1. Introduction

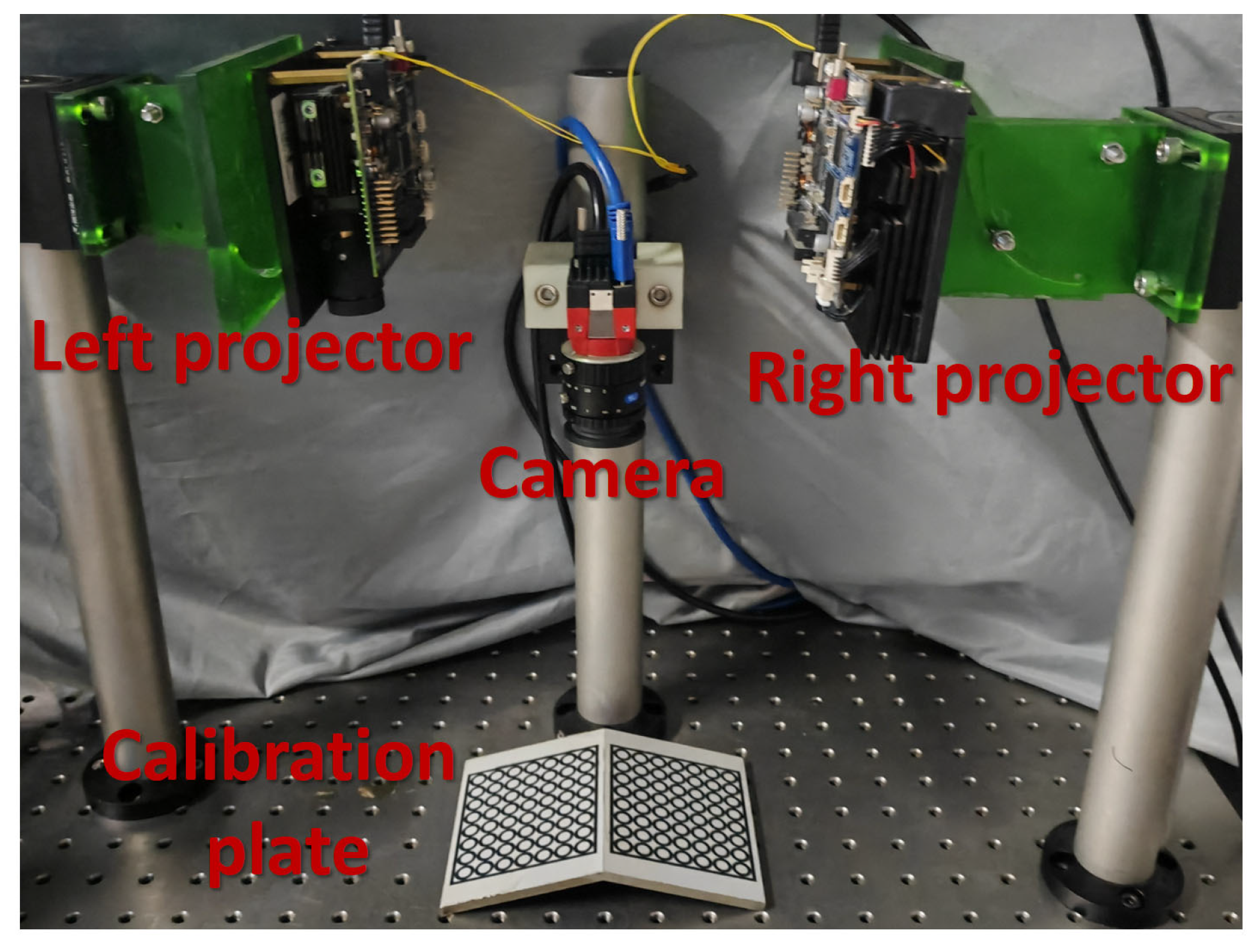

2. Methods

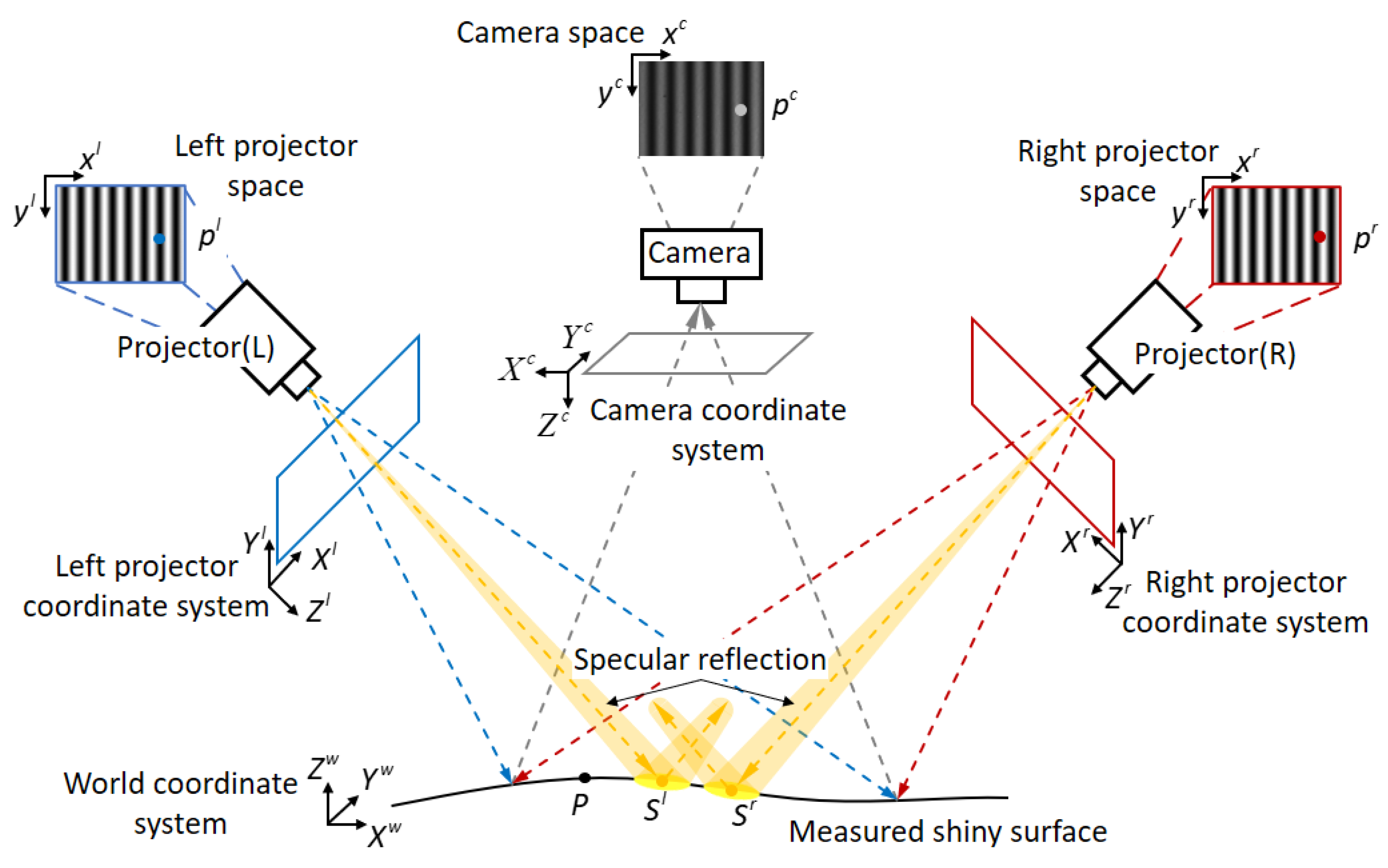

2.1. DSLI Model for 3D Reconstruction

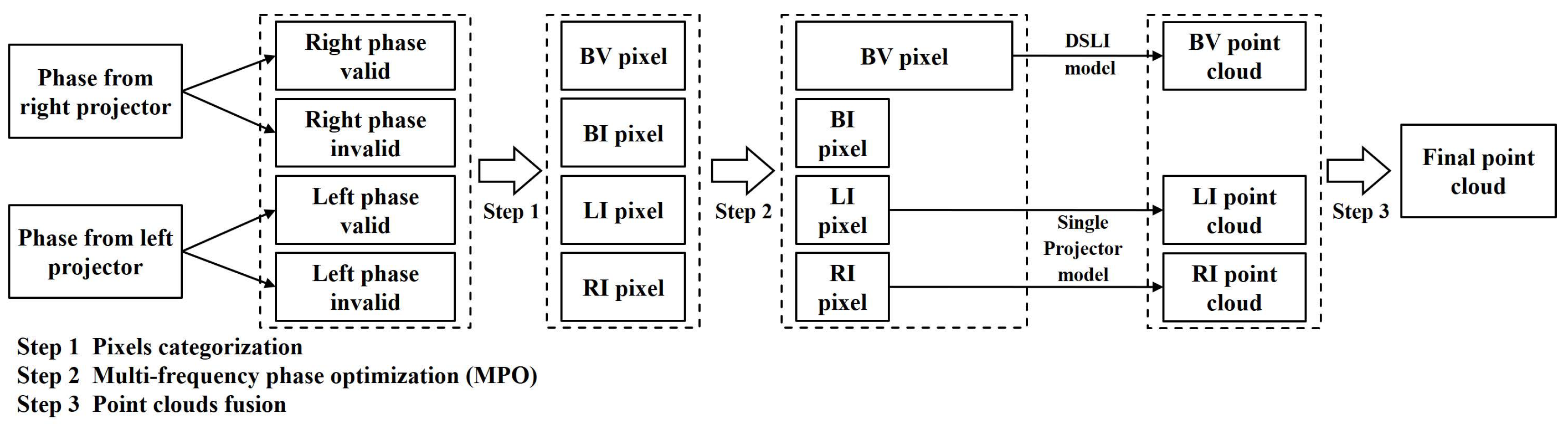

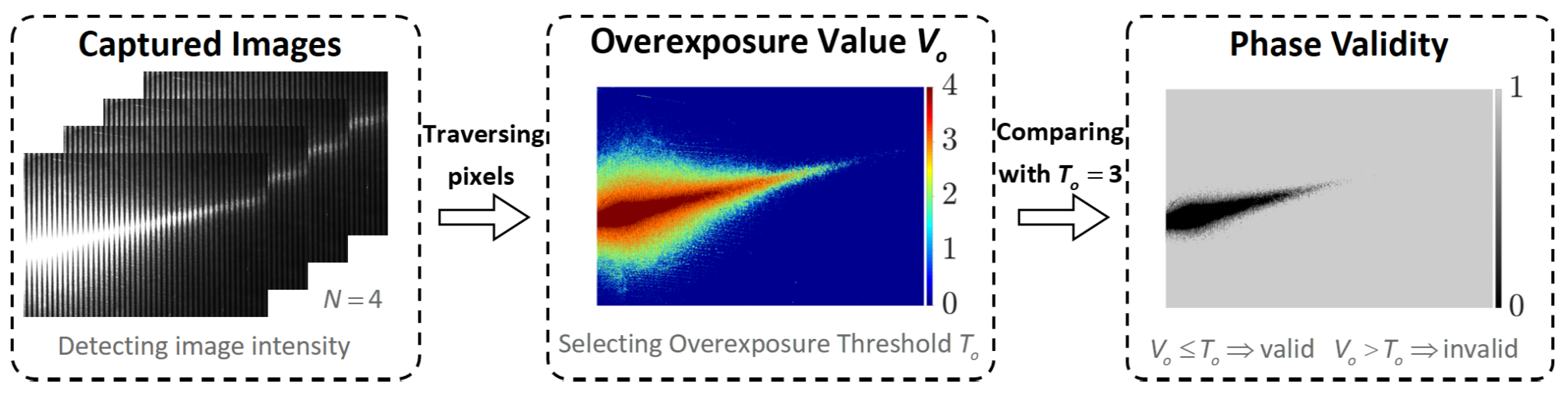

2.2. Pixel Categorization Based on Phase Validity Detection

- (1) Pixels with are classified as BV pixels, and their 3D coordinates are calculated using the DSLI model.

- (2) Pixels with and are classified as LI pixels, and their 3D coordinates are calculated using the single-projector model.

- (3) Pixels with and are classified as RI pixels, and their 3D coordinates are calculated using the single-projector model.

- (4) Pixels with are classified as BI pixels, and their 3D coordinates cannot be calculated.
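The four-way split above can be sketched as a lookup on two per-pixel validity masks. This is only an illustrative sketch: the validity conditions themselves are not legible in the text, so the boolean masks `valid_left` and `valid_right`, the integer label codes, and the reading of LI and RI as "left-projector phase invalid" and "right-projector phase invalid" are all assumptions, not the authors' definitions.

```python
import numpy as np

# Hypothetical integer codes for the four categories named in the text.
BV, LI, RI, BI = 0, 1, 2, 3


def categorize_pixels(valid_left, valid_right):
    """Label each pixel from two boolean phase-validity masks.

    Assumption: valid_left / valid_right are True where the phase obtained
    from the left / right projector passed validity detection.
    """
    valid_left = np.asarray(valid_left, dtype=bool)
    valid_right = np.asarray(valid_right, dtype=bool)

    # Start with "both invalid", then overwrite the other three cases.
    labels = np.full(valid_left.shape, BI, dtype=np.uint8)
    labels[valid_left & valid_right] = BV    # both phases usable: DSLI model
    labels[~valid_left & valid_right] = LI   # assumed: only the right phase usable
    labels[valid_left & ~valid_right] = RI   # assumed: only the left phase usable
    return labels


# Tiny 2x2 example covering all four combinations.
valid_left = np.array([[True, False], [True, False]])
valid_right = np.array([[True, True], [False, False]])
labels = categorize_pixels(valid_left, valid_right)
print(labels)
```

Pixels labelled `BV` would then go to the DSLI model, `LI` and `RI` pixels to the single-projector model with whichever phase remains usable, and `BI` pixels would be dropped.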

2.3. MPO Based on Phase Validity Detection

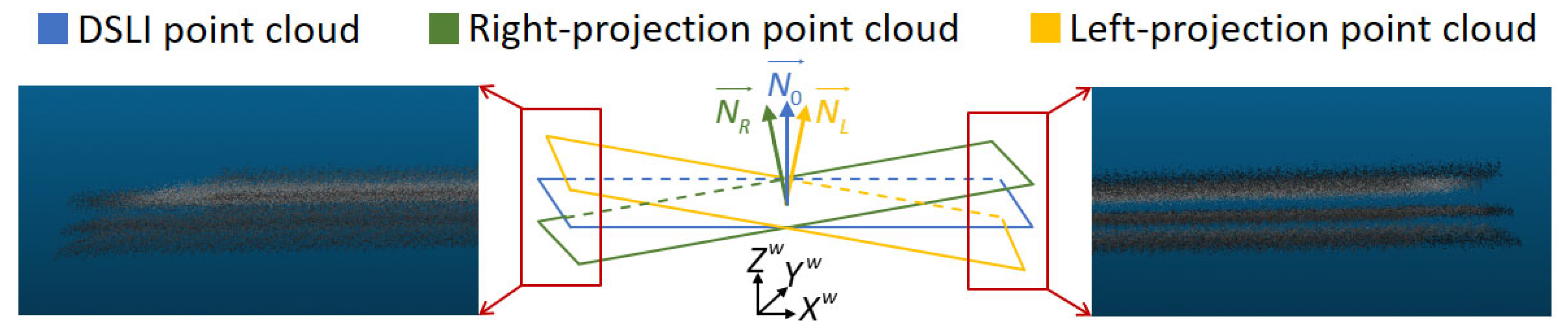

2.4. Point Cloud Fusion Based on Pixel Categorization

3. Experiments

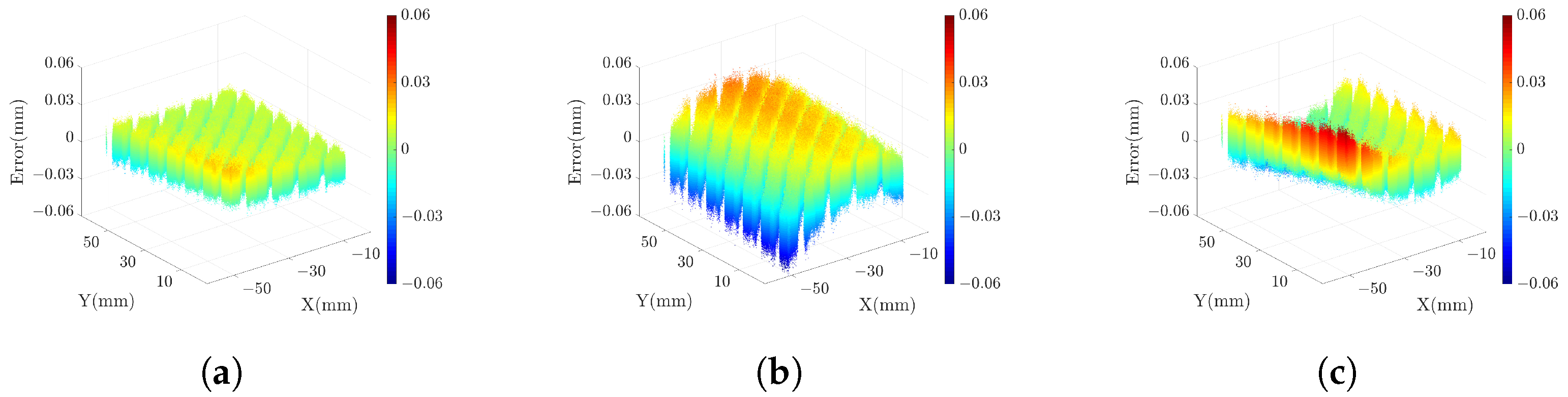

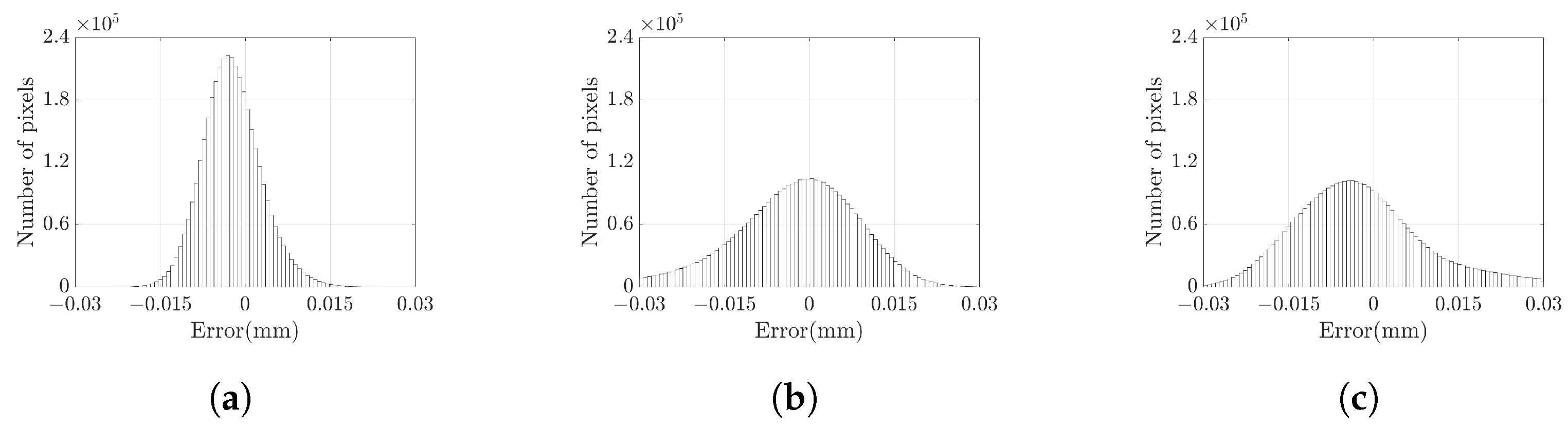

3.1. Measuring Standard Plane

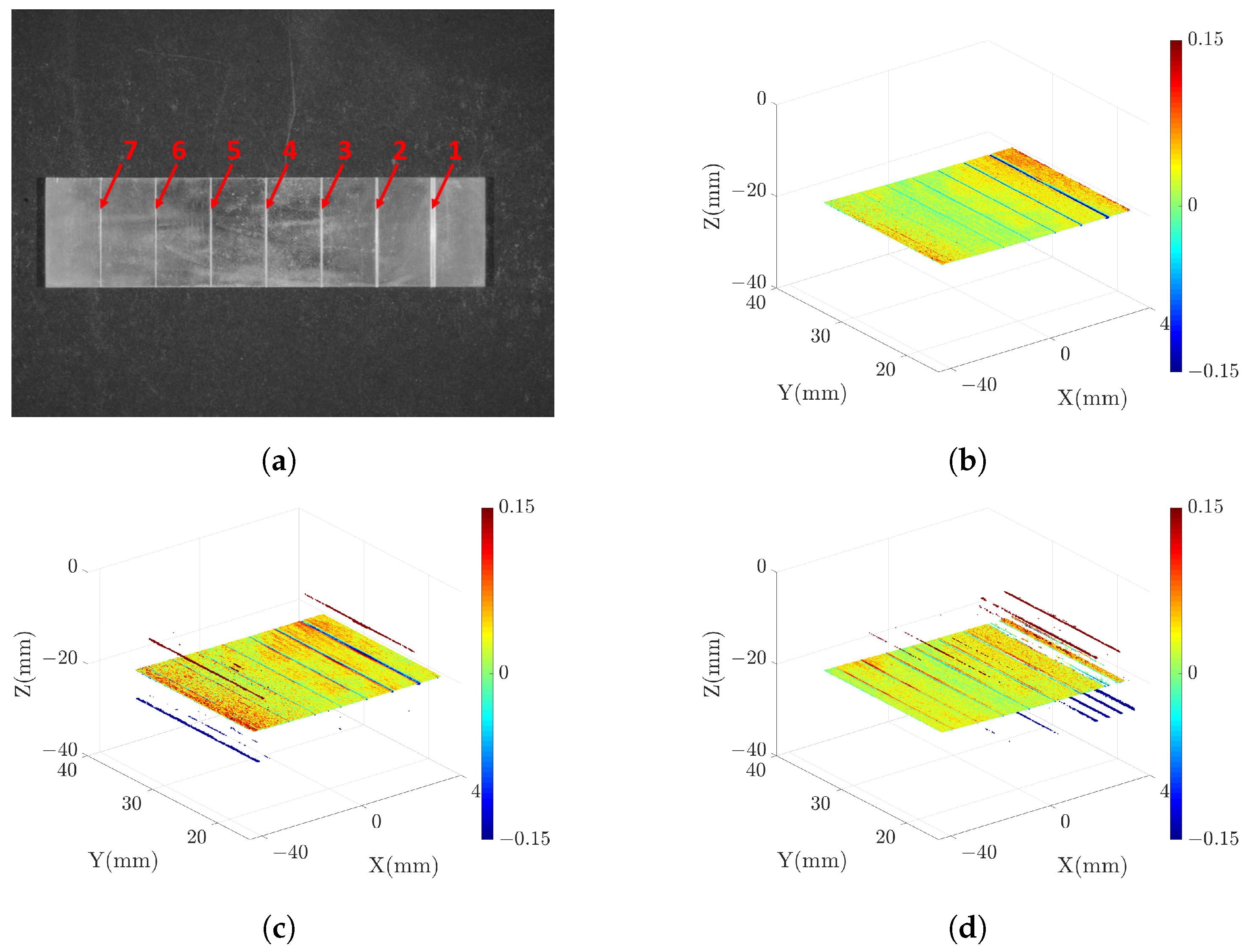

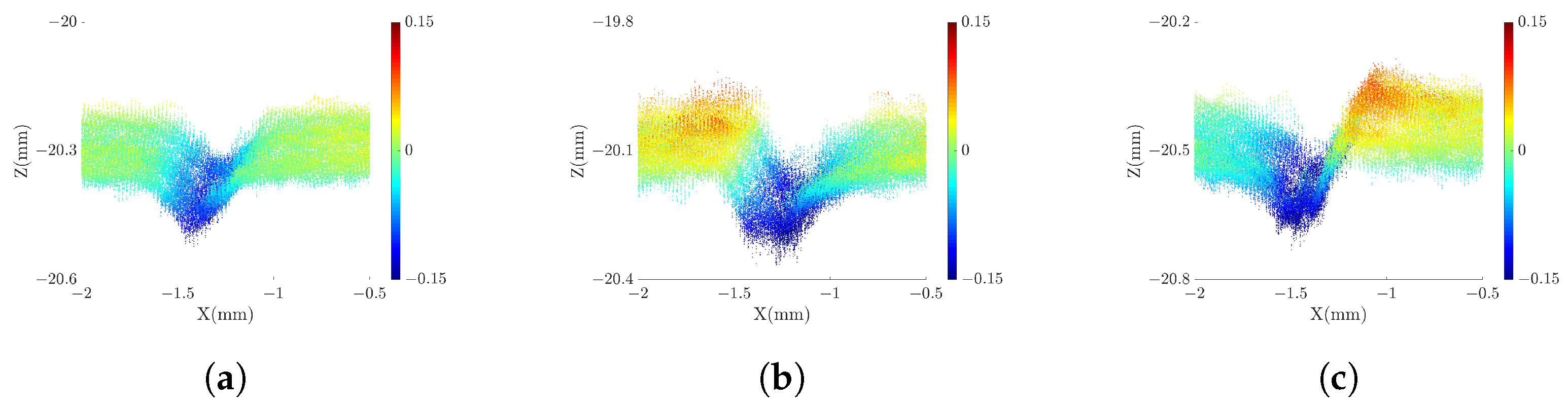

3.2. Measuring Precision Microgrooves

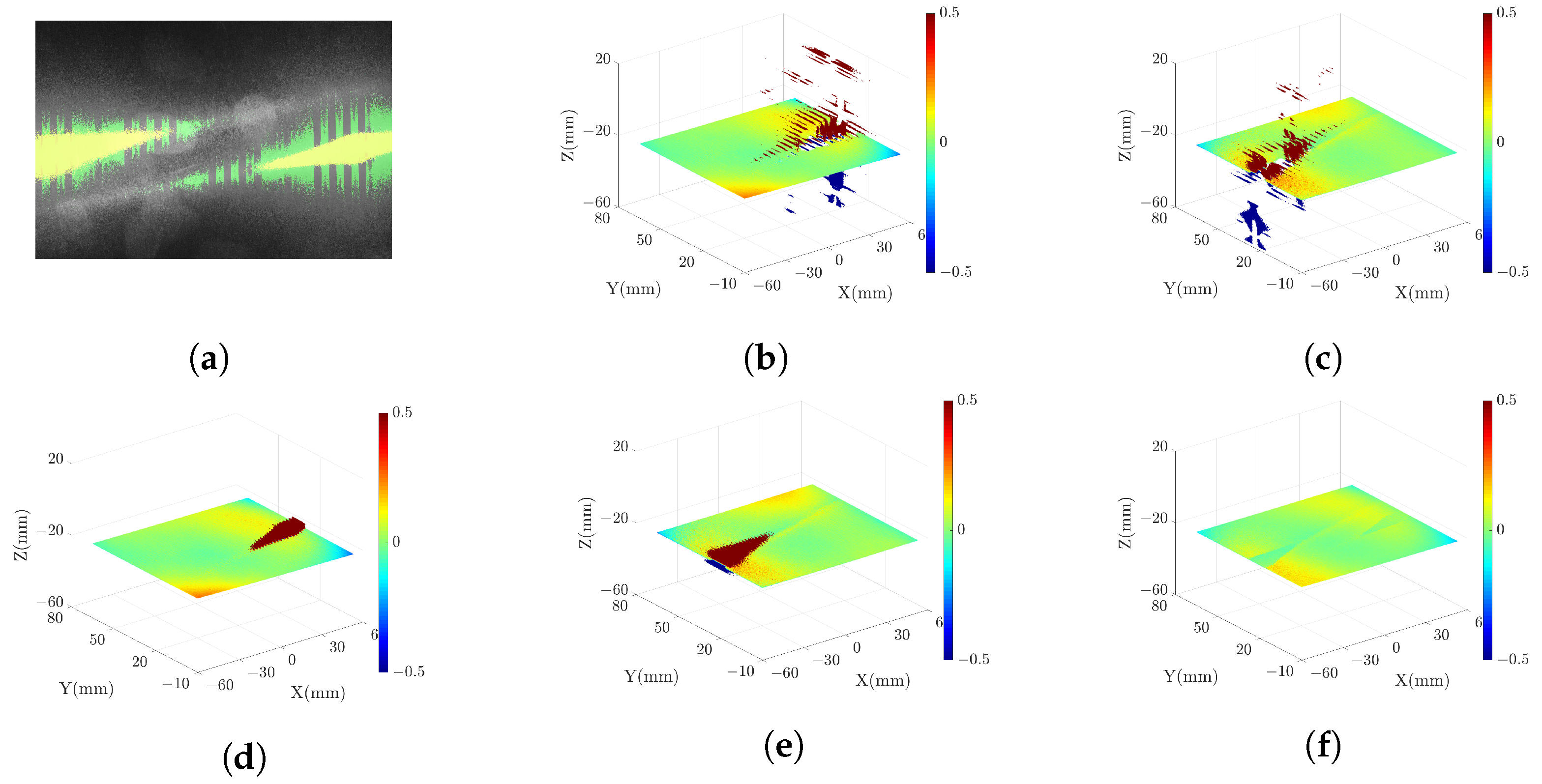

3.3. Measuring High-Reflectivity Metal Plate

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Projection | DSLI Model (μm) | Single-Projector SRP Model (μm) | Single-Projector SLP Model (μm) |
|---|---|---|---|
| MAE | 4.55 | 8.83 | 9.7 |
| PV | 57.04 | 111.6 | 108.13 |
| RMSE | 5.65 | 11.53 | 12.38 |
| Improvement a | | 51.00% b | 54.36% c |
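The improvement figures in the table above are numerically consistent with the relative reduction in RMSE of the DSLI model against each single-projector model. The footnotes that define them are not available here, so the formula below is an inference from the numbers rather than the authors' stated definition; the function name `relative_reduction` is hypothetical.

```python
# RMSE values (in micrometres) copied from the standard-plane table.
rmse = {"DSLI": 5.65, "SRP": 11.53, "SLP": 12.38}


def relative_reduction(proposed, baseline):
    """Percentage by which `proposed` is smaller than `baseline`."""
    return 100.0 * (1.0 - proposed / baseline)


improvement_vs_srp = relative_reduction(rmse["DSLI"], rmse["SRP"])
improvement_vs_slp = relative_reduction(rmse["DSLI"], rmse["SLP"])

print(f"vs SRP: {improvement_vs_srp:.2f}%")  # vs SRP: 51.00%
print(f"vs SLP: {improvement_vs_slp:.2f}%")  # vs SLP: 54.36%
```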

| Microgroove Number | Ground Truth Depth (μm) | Ground Truth Width (μm) | Ground Truth D/W | Proposed Strategy DMV (μm) | Proposed Strategy Error (μm) | SRP Model DMV (μm) | SRP Model Error (μm) | SLP Model DMV (μm) | SLP Model Error (μm) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 297 | 1002 | 29.6% | 290.8 | | 265.5 | | UM a | \ |
| 2 | 198 | 551 | 35.9% | 189.1 | | 196.9 | | UM a | \ |
| 3 | 148 | 399 | 37.1% | 146.1 | | 139.9 | | UM a | \ |
| 4 | 109 | 280 | 38.9% | 97.5 | | 127.3 | 18.3 | 127 | 18.0 |
| 5 | 79 | 191 | 41.4% | 63.9 | | 101.5 | 22.5 | 53.3 | |
| 6 | 59 | 130 | 45.9% | 46.7 | | 81.2 | 22.2 | 72.2 | 13.2 |
| 7 | 49 | 100 | 49.0% | 33.5 | | UM a | \ | 37.4 | |
| PV | \ | \ | \ | \ | 13.6 | \ | 54.0 | \ | 38.9 |
| MAE | \ | \ | \ | \ | 10.2 | \ | 17.3 | \ | 17.1 |
| Improvement b | | | | | 40.70% | | | | |
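The summary rows for the proposed strategy in the microgroove table can be reproduced from the depth columns alone. The sketch below assumes, from the numbers and not from any stated definition, that the error is DMV minus ground-truth depth, that PV is the spread of the signed errors, that MAE is the mean of their absolute values, and that the improvement compares the proposed MAE with the mean of the two reported single-projector MAEs. All function and variable names are hypothetical.

```python
# Ground-truth depths and proposed-strategy DMV values (micrometres),
# copied from the microgroove table, keyed by microgroove number.
depth = {1: 297, 2: 198, 3: 148, 4: 109, 5: 79, 6: 59, 7: 49}
dmv_proposed = {1: 290.8, 2: 189.1, 3: 146.1, 4: 97.5, 5: 63.9, 6: 46.7, 7: 33.5}


def signed_errors(measured, truth):
    """Assumed error definition: measured value minus ground truth."""
    return [measured[k] - truth[k] for k in sorted(measured)]


def mae(errors):
    """Mean absolute error."""
    return sum(abs(e) for e in errors) / len(errors)


def pv(errors):
    """Peak-to-valley: largest signed error minus smallest signed error."""
    return max(errors) - min(errors)


errors = signed_errors(dmv_proposed, depth)
print(f"MAE = {mae(errors):.1f} um")  # MAE = 10.2 um
print(f"PV  = {pv(errors):.1f} um")   # PV  = 13.6 um

# Improvement computed from the MAE values as reported in the table.
mae_proposed, mae_srp, mae_slp = 10.2, 17.3, 17.1
improvement = 100.0 * (1.0 - mae_proposed / ((mae_srp + mae_slp) / 2.0))
print(f"Improvement = {improvement:.2f}%")  # Improvement = 40.70%
```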


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, B.; Qu, S.; Li, J.; Deng, Z.; Li, H.; Zhang, B.; Zhang, G.; Liu, K. Quaternary Categorization Strategy for Reconstructing High-Reflectivity Surface in Structured Light Illumination. Sensors 2023, 23, 9740. https://doi.org/10.3390/s23249740
