InertialNet: Inertial Measurement Learning for Simultaneous Localization and Mapping

Abstract

1. Introduction

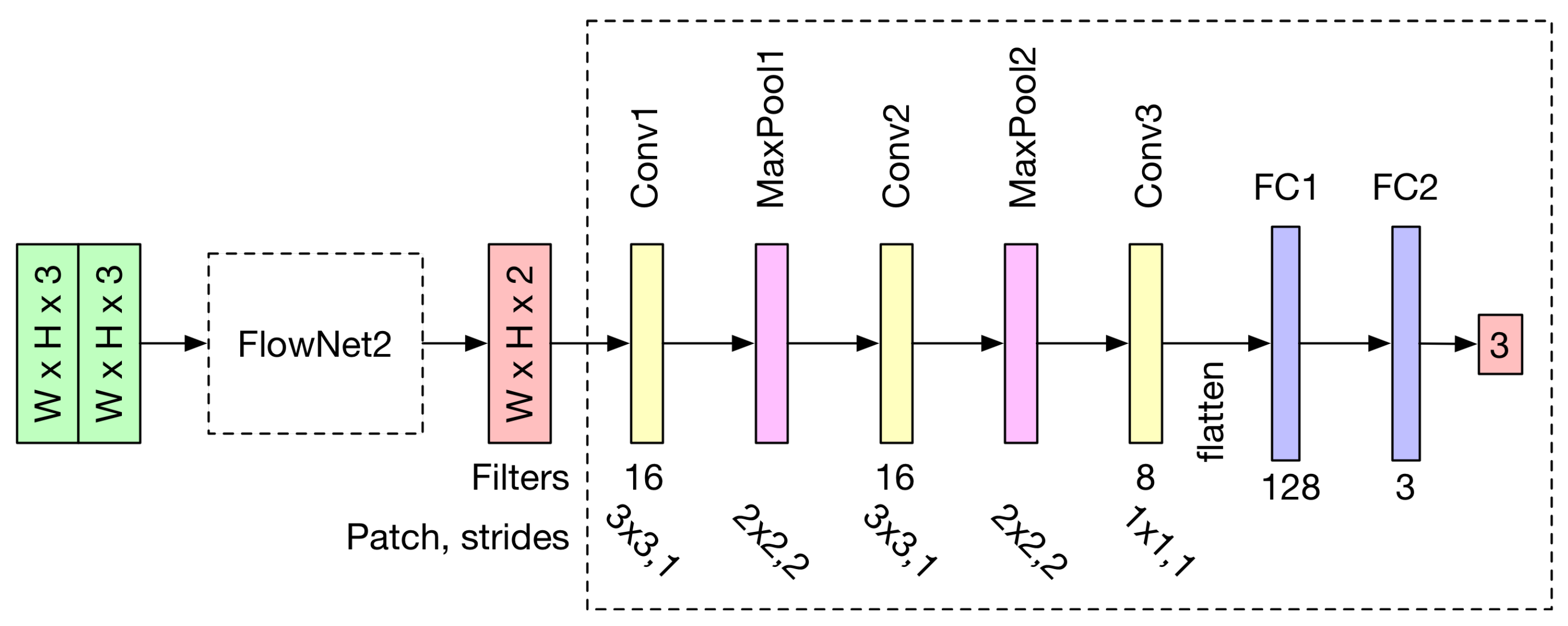

- A new neural network structure, InertialNet, is proposed. It is designed to predict camera rotation from image sequences, and the architecture converges quickly and stably during training.

- The model generalizes to new environment scenes through an architecture design that includes an optical flow substructure.

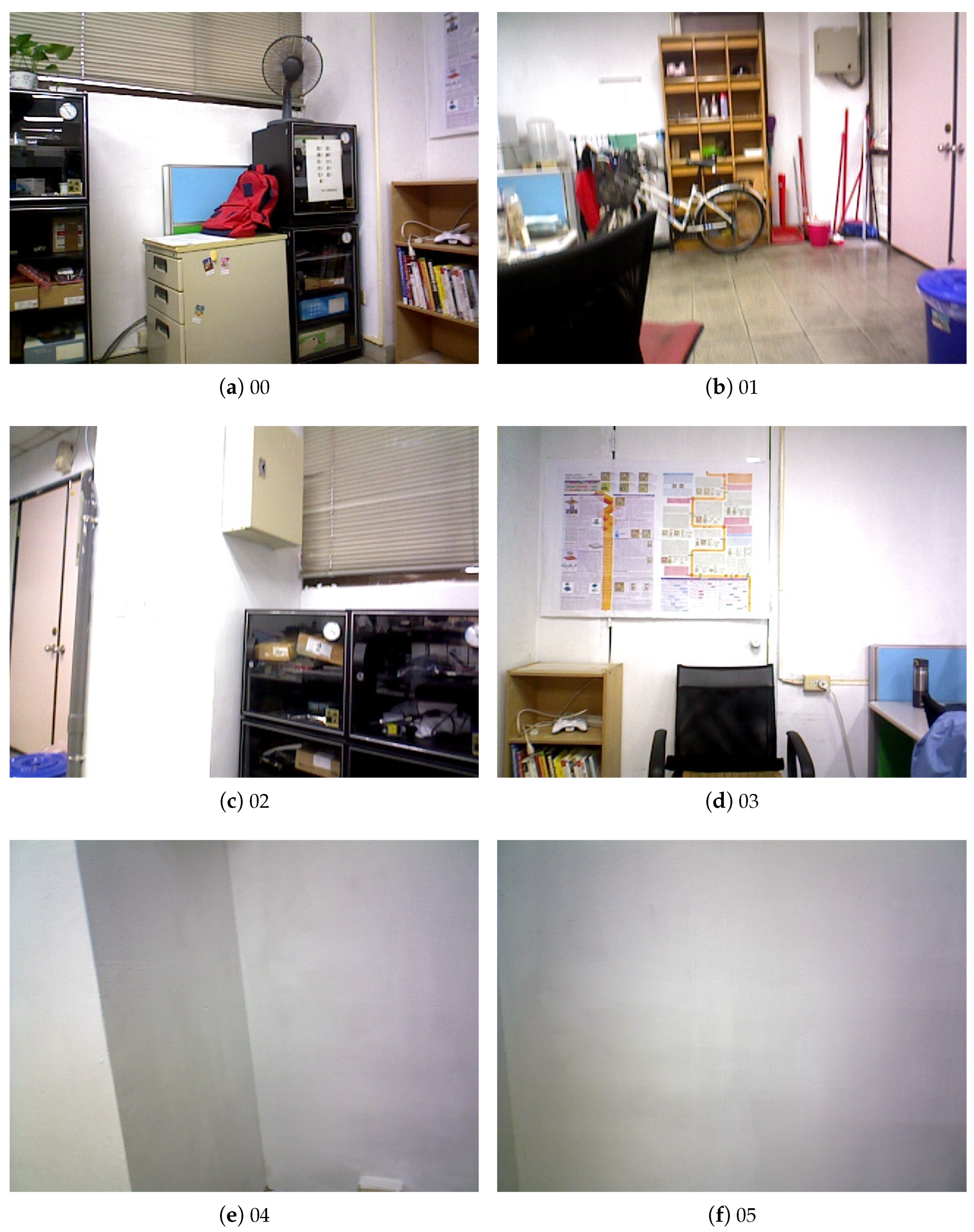

- The proposed system provides stable predictions under image blur, illumination changes, and low-texture environments.

2. Related Work

3. Approach

4. Experiments

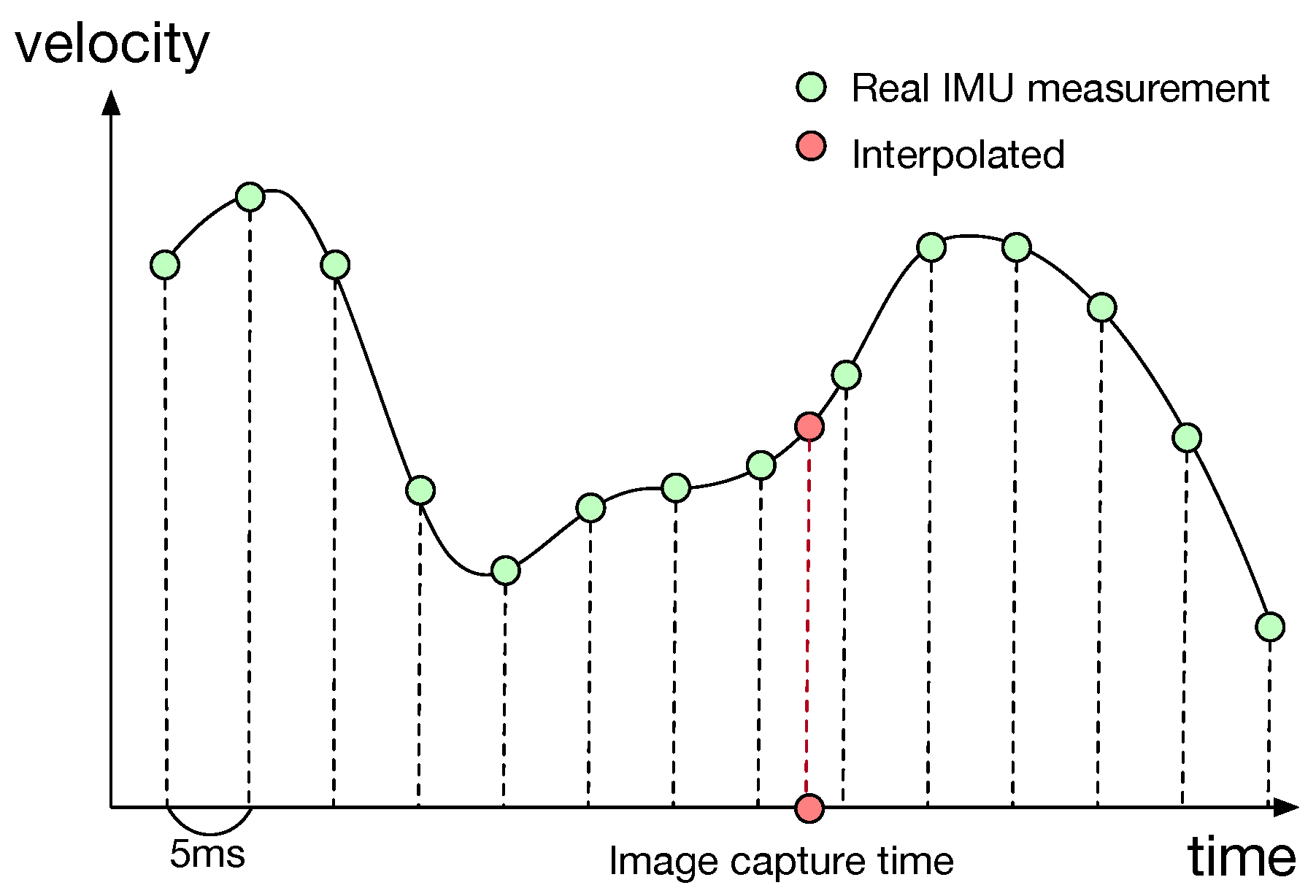

4.1. IMU and Image Synchronization

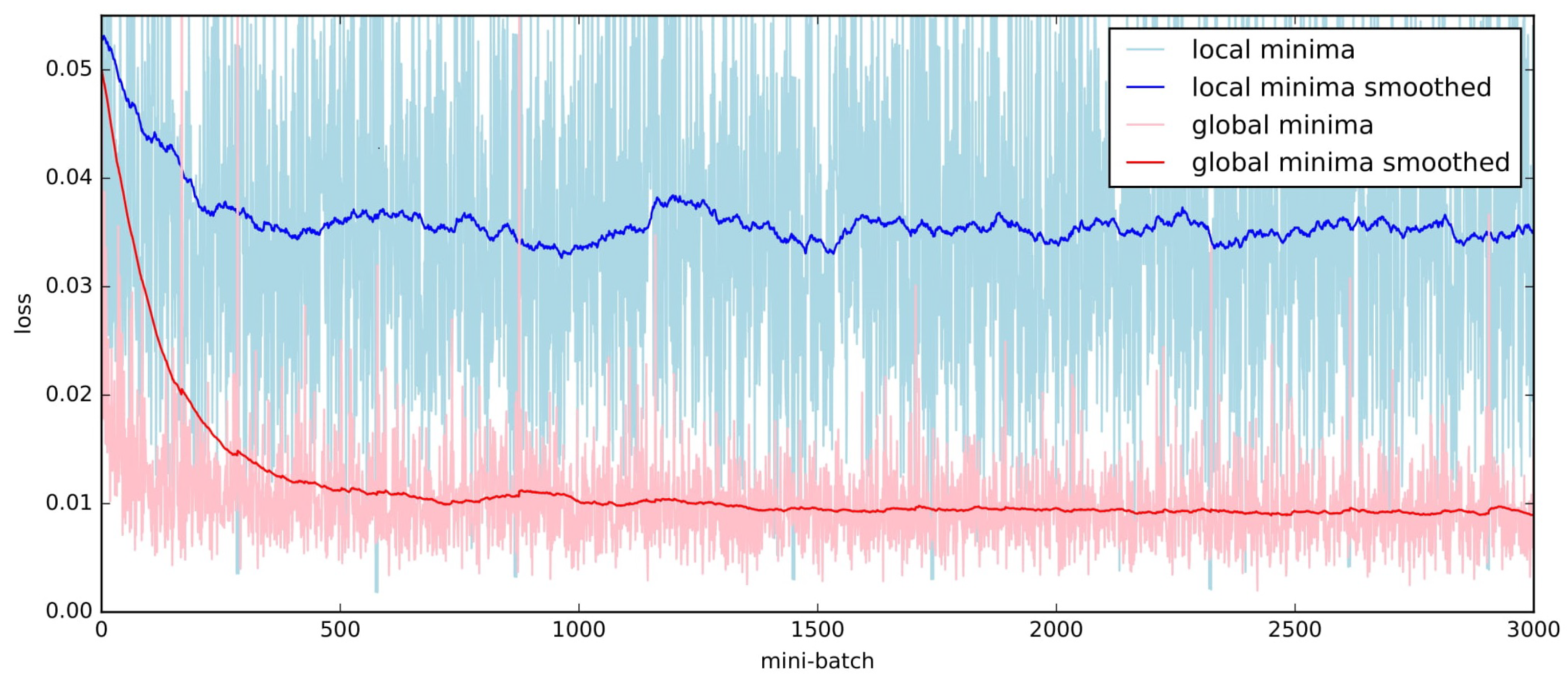

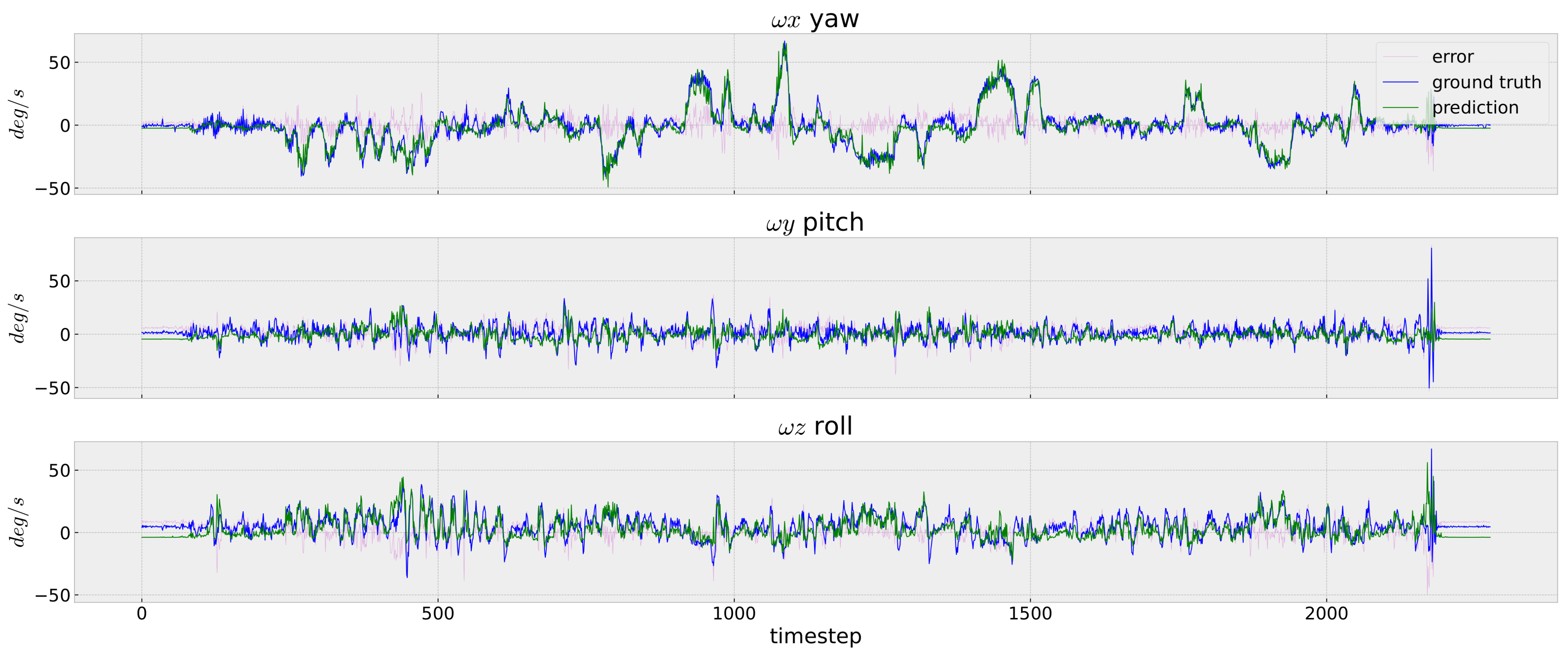

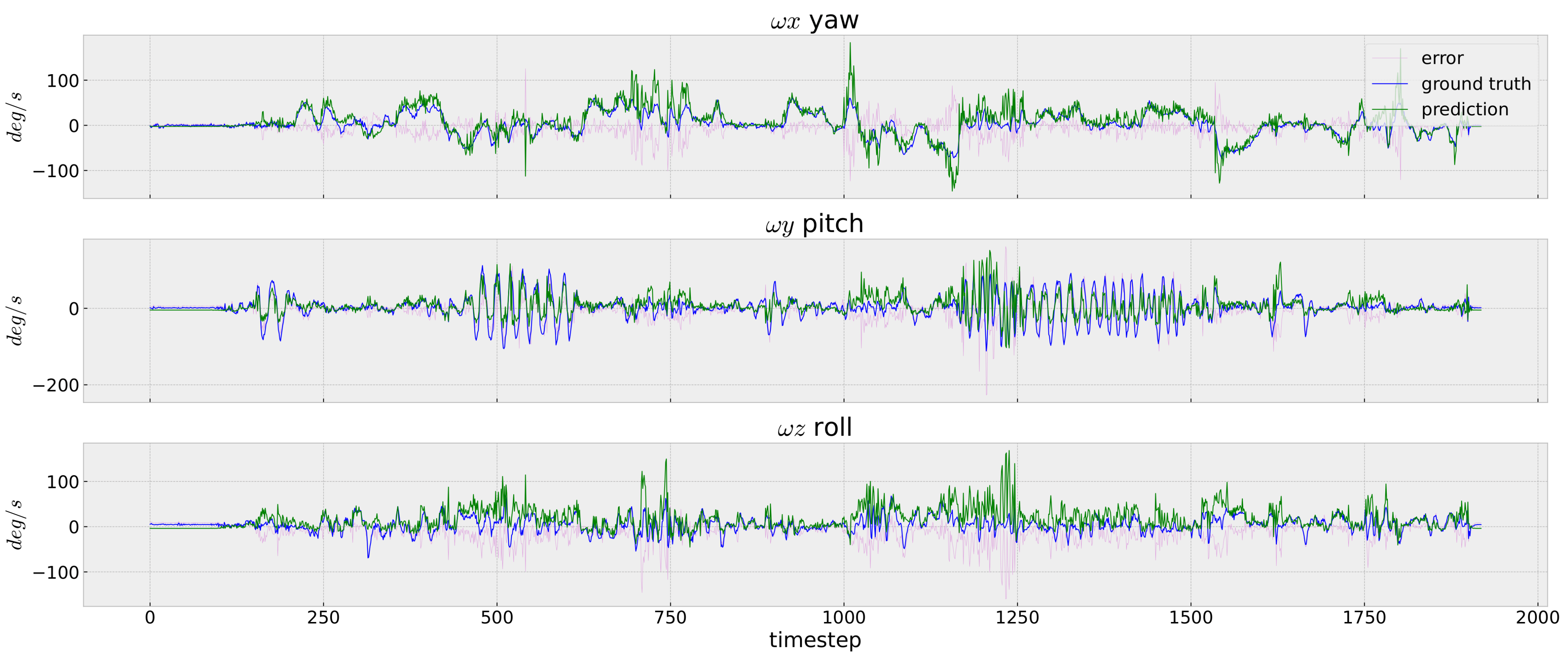

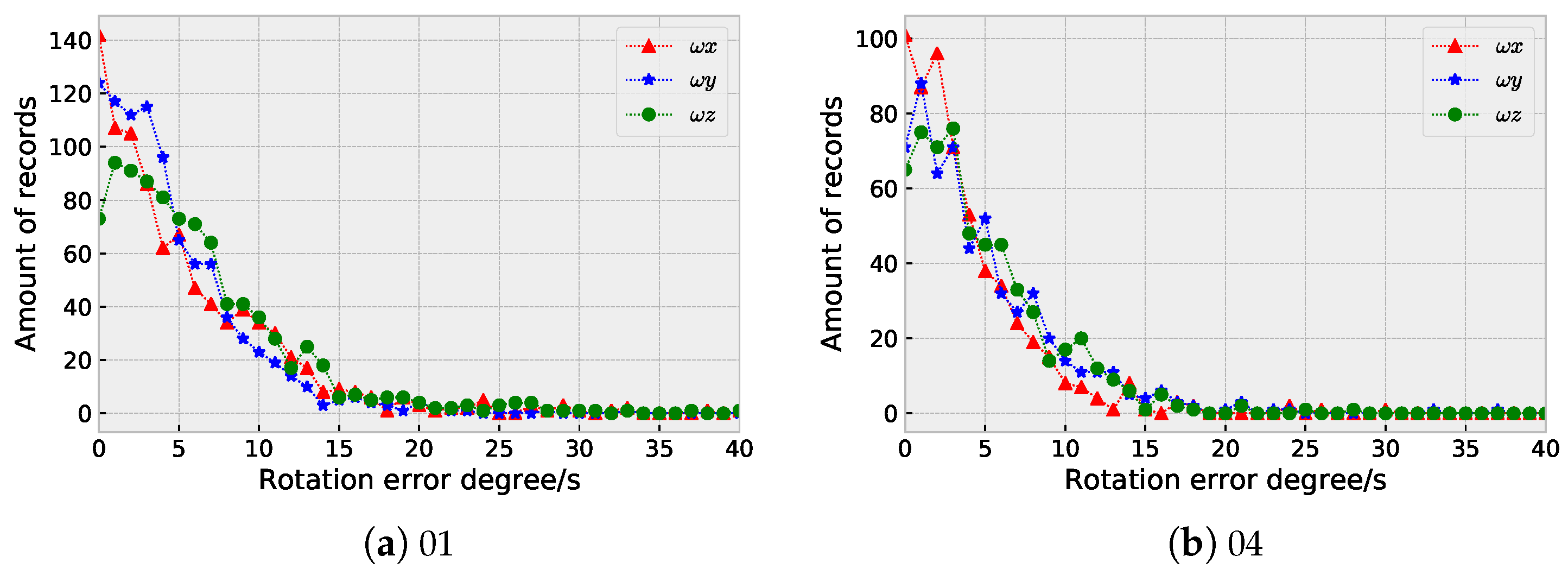
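As an illustration of what IMU and image synchronization involves, the following minimal sketch pairs each interval between consecutive images with the IMU samples that fall inside it, then averages the gyroscope readings to obtain one rotation-rate label per image pair. It is not the authors' procedure: the function names, the millisecond timestamps, and the averaging step are assumptions made for illustration. The 10:1 sample ratio mirrors the per-sequence counts reported in this paper (for example, 2912 images against 29,120 IMU records for V1_01).

```python
from bisect import bisect_left

def group_imu_by_frame(image_ts, imu_ts):
    """For each consecutive image pair (t0, t1), return the (start, end) index
    range of IMU samples with t0 <= t < t1. Both lists must be sorted."""
    ranges = []
    for t0, t1 in zip(image_ts, image_ts[1:]):
        ranges.append((bisect_left(imu_ts, t0), bisect_left(imu_ts, t1)))
    return ranges

def mean_rate(gyro, start, end):
    """Average the (wx, wy, wz) gyro samples in gyro[start:end]; None if empty."""
    n = end - start
    if n <= 0:
        return None
    return tuple(sum(s[i] for s in gyro[start:end]) / n for i in range(3))

# Hypothetical timestamps in integer milliseconds (assumed, not from the paper):
# one image every 50 ms and one IMU sample every 5 ms, giving a 10:1 ratio.
image_ts = [0, 50, 100]
imu_ts = list(range(0, 100, 5))
gyro = [(0.1, 0.0, -0.2)] * len(imu_ts)  # constant made-up readings

ranges = group_imu_by_frame(image_ts, imu_ts)
labels = [mean_rate(gyro, s, e) for s, e in ranges]
print(ranges)  # index range of IMU samples assigned to each image pair
```

Binary search keeps the grouping cheap for long sequences, and half-open intervals ensure that no IMU sample is assigned to two image pairs.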

4.2. Prediction Results

- The rotation error at time t.

- The root-mean-square (RMS) error of the predictions.

- The distribution of the prediction errors.
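The three measures listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' evaluation code: the error is taken as the per-axis difference between prediction and ground truth, the function names are hypothetical, and the sample values are made up.

```python
import math

def rotation_errors(pred, truth):
    """Per-axis error at each time step: prediction minus ground truth."""
    return [tuple(p - g for p, g in zip(p_t, g_t)) for p_t, g_t in zip(pred, truth)]

def rmse_per_axis(errors):
    """Root-mean-square error over all time steps, one value per axis."""
    n = len(errors)
    return tuple(math.sqrt(sum(e[i] ** 2 for e in errors) / n) for i in range(3))

def histogram(values, bin_width):
    """Error distribution: bin k counts values in [k*bin_width, (k+1)*bin_width)."""
    counts = {}
    for v in values:
        k = math.floor(v / bin_width)
        counts[k] = counts.get(k, 0) + 1
    return dict(sorted(counts.items()))

# Made-up (wx, wy, wz) values in degrees, for illustration only.
pred = [(1.0, 2.0, 3.0), (2.0, 2.0, 1.0)]
truth = [(0.0, 2.0, 5.0), (4.0, 2.0, 1.0)]

errors = rotation_errors(pred, truth)
rmse = rmse_per_axis(errors)
hist_wx = histogram([e[0] for e in errors], bin_width=1.0)
print(errors, rmse, hist_wx)
```

Reporting the RMSE separately for each axis matches the (wx, wy, wz) layout used in the result tables of this paper.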

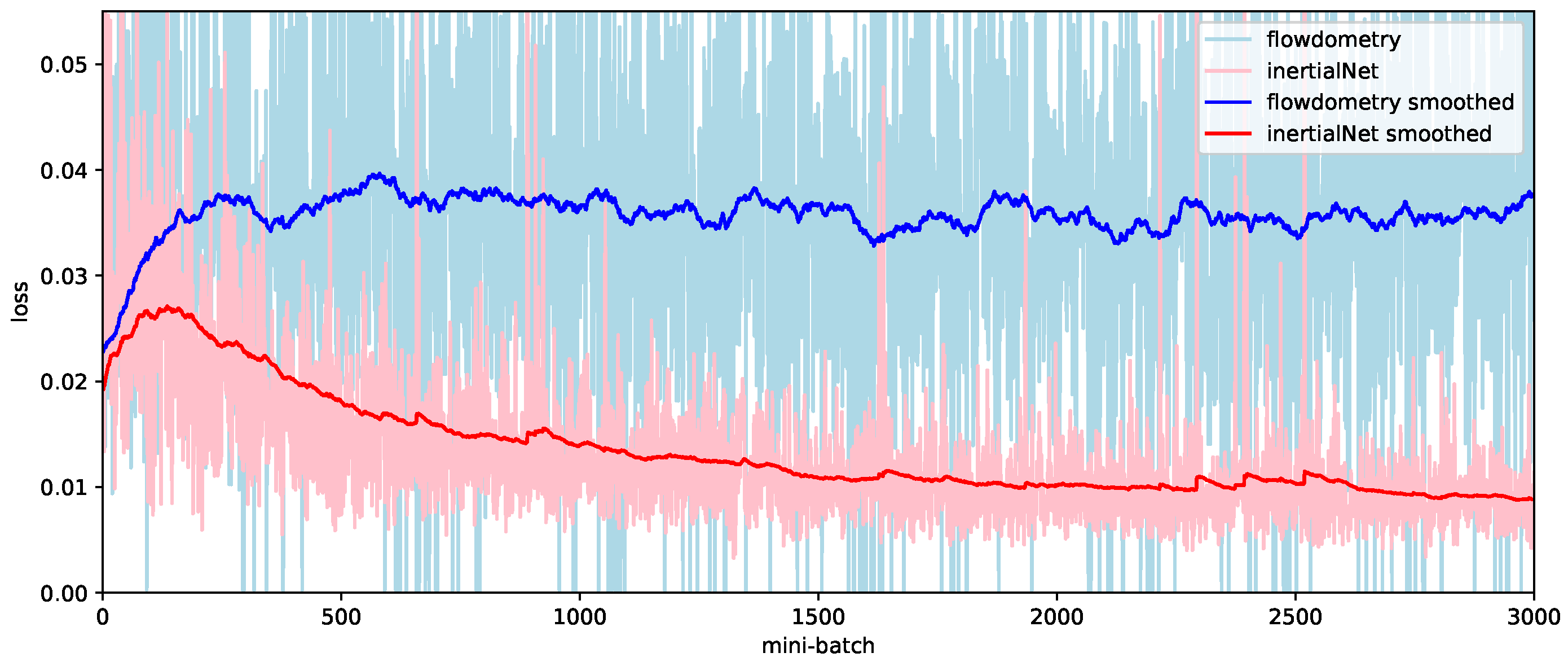

4.3. Comparison with Similar Approaches

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Seq. Name | # of Images | # of IMU Records | RMSE (deg) (wx, wy, wz) |
|---|---|---|---|
| V1_01 | 2912 | 29,120 | for training |
| V1_02 | 1710 | 17,100 | 10.16, 12.92, 15.41 |
| V1_03 | 2149 | 21,500 | 10.85, 20.12, 18.95 |
| V2_01 | 2280 | 22,800 | 5.97, 7.52, 7.64 |
| V2_02 | 2348 | 23,490 | 10.50, 17.88, 17.32 |
| V2_03 | 1922 | 23,370 | 18.52, 26.81, 25.10 |
| MH_01 | 3682 | 36,820 | 5.93, 8.75, 8.50 |
| MH_02 | 3040 | 30,400 | 7.09, 8.91, 9.36 |
| MH_03 | 2700 | 27,008 | 7.61, 9.79, 9.21 |
| MH_04 | 2033 | 20,320 | 6.51, 7.92, 7.91 |
| MH_05 | 2273 | 22,721 | 6.06, 7.72, 6.71 |
| Total | 27,049 | 274,649 | |

| Seq. | Records | Content | RMSE (deg) (wx, wy, wz) |
|---|---|---|---|
| 00 | 1140 | all rotation and translation | for training |
| 01 | 905 | all rotation and translation | 9.11, 6.46, 9.14 |
| 02 | 1126 | pure rotation | 3.95, 6.09, 4.48 |
| 03 | 1183 | pure rotation | 5.01, 8.72, 4.94 |
| 04 | 578 | white wall | 5.64, 7.01, 6.59 |
| 05 | 1094 | white wall | 5.25, 7.24, 6.47 |
| 06 | 858 | all rotation and translation | 8.45, 9.35, 6.75 |
| Total | 6884 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, H.-Y.; Liu, T.-A.; Lin, W.-Y. InertialNet: Inertial Measurement Learning for Simultaneous Localization and Mapping. Sensors 2023, 23, 9812. https://doi.org/10.3390/s23249812
