1. Introduction

In recent years, deep learning and intelligent devices have become increasingly widespread in marine bioengineering, marine environmental protection, and underwater target detection. Underwater detection research is gaining importance because the underwater environment is complex and underwater species are diverse. Active vision techniques are particularly important for underwater detection, and there is a growing need for high-quality research that develops them further and advances the state of the art in underwater detection. Line structured light is a typical active vision measurement method [1], widely used for non-contact precision measurement of geometric parameters because of its flexibility and accuracy.

Depending on the measurement distance and equipment, line-structured laser stripes are usually several to tens of pixels wide when acquired, so center extraction is an important step in the measurement process [2]. According to Steger [3], for a lens with a focal length of 12 mm at a distance of 50 cm from the workpiece, each deviation of 0.1 pixels in the image results in a shift of 50 μm in practice. Center extraction at the pixel level therefore struggles to meet the requirements of high-accuracy measurement. Many researchers have investigated center extraction methods with the goal of achieving better accuracy, higher efficiency, greater robustness, and lower sensitivity to noise. According to the resolution of the extracted coordinates, these algorithms can be divided into two categories: pixel-level center extraction and sub-pixel-level center extraction. Pixel-level methods include the extreme-value method, the threshold method, and the directional-template method. Sub-pixel-level methods include the grayscale gravity method, curve fitting, and the Hessian-matrix method.

The extreme-value method [4] selects the pixel with the maximum gray value on each cross-section as the center point; it has low accuracy and is sensitive to noise. When the cross-section of a laser stripe is extracted with threshold methods [5], identification errors can occur because of asymmetric grayscale distributions or the influence of noise. The improved adaptive grayscale threshold method [6] and the variable-threshold centroid method [7] alleviate the limitations of the conventional approaches, but the extraction accuracy still falls short of expectations. The direction-template method and its improved variants [8,9] also obtain the stripe center at the pixel level and effectively suppress the influence of noise on center line extraction, but they do not yield satisfactory results on irregular laser stripes and require a large amount of computation because of the cross-correlation operations.
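As a rough illustration of the extreme-value idea, the following minimal sketch picks the brightest row in every image column. It assumes a roughly horizontal stripe, so that each column is one cross-section; the function name `extreme_value_centers` and the `min_peak` parameter are hypothetical and not taken from the cited work.

```python
import numpy as np

def extreme_value_centers(gray: np.ndarray, min_peak: float = 0.0) -> np.ndarray:
    """Pixel-level stripe centers: the brightest row of every column.

    Assumes the stripe runs roughly horizontally, so each image column is
    one cross-section. Columns whose peak is below `min_peak` get -1.
    """
    gray = np.asarray(gray, dtype=float)
    rows = np.argmax(gray, axis=0)                    # first maximum per column
    peaks = gray[rows, np.arange(gray.shape[1])]      # gray value at that maximum
    return np.where(peaks >= min_peak, rows, -1)

# Synthetic 7x4 image: stripe centered on row 3, plus one bright noise pixel.
img = np.zeros((7, 4))
img[3, :] = 200
img[2, :] = img[4, :] = 120
img[0, 2] = 255                                       # isolated noise pixel
centers = extreme_value_centers(img, min_peak=50)
print(centers)
```

In the synthetic image, the single noise pixel in the third column pulls the detected center away from the stripe, which illustrates the noise sensitivity mentioned above.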

The grayscale gravity method [10] is based on the grayscale distribution within each cross-section of the laser stripe: it computes the grayscale centroid of the stripe region line by line along the line coordinate direction. This reduces the error caused by uneven grayscale in the stripe and is fast, but it requires the grayscale to be Gaussian-distributed and is susceptible to noise. The curve fitting method [11] fits a Gaussian curve or a parabola to the grayscale distribution of the stripe cross-section and takes the local maximum of the fitted curve as the center of the cross-section; it is only applicable to wide laser stripes with a constant normal direction. In addition, the actual grayscale distribution of the pixels in the laser line is not strictly symmetrical, so the extremum found by curve fitting is often not the actual center of the laser line. In the Hessian-matrix method [12,13], the normal direction of the laser stripe is derived from the eigenvectors of the Hessian matrix, and the sub-pixel center coordinates of the laser line are calculated by applying a Taylor expansion along the normal direction. The Hessian-matrix method is characterized by high noise insensitivity, accurate extraction, and good robustness, and it has obvious advantages in complex environments with high precision requirements. However, it generates redundant center points in wider cross-sections, and its high computational cost makes it less suitable for real-time use.
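The grayscale gravity computation can be sketched as an intensity-weighted mean of the row coordinate in each cross-section. This is a minimal illustration under the assumption that the stripe runs roughly horizontally; the function name and the optional `threshold` parameter are hypothetical.

```python
import numpy as np

def gray_gravity_centers(gray: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Sub-pixel stripe centers as the gray-weighted mean row of each column.

    Pixels at or below `threshold` get zero weight. Columns without any
    contributing pixel return NaN.
    """
    gray = np.asarray(gray, dtype=float)
    weights = np.where(gray > threshold, gray, 0.0)
    rows = np.arange(gray.shape[0], dtype=float)[:, None]
    mass = weights.sum(axis=0)                 # total gray value per column
    moment = (weights * rows).sum(axis=0)      # gray-weighted row sum per column
    centers = np.full(gray.shape[1], np.nan)
    valid = mass > 0
    centers[valid] = moment[valid] / mass[valid]
    return centers

# Two cross-sections: a symmetric profile and a skewed one, both peaking at row 3.
profile_sym = np.array([0, 10, 50, 100, 50, 10, 0], dtype=float)
profile_skew = np.array([0, 10, 50, 100, 80, 40, 0], dtype=float)
img = np.stack([profile_sym, profile_skew], axis=1)
centers = gray_gravity_centers(img)
print(centers)
```

For the symmetric profile the centroid coincides with the peak row, while the skewed profile shifts the centroid toward the heavier side. This is the kind of bias that asymmetric grayscale distributions introduce.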

In complex underwater measurement environments, it is difficult to ensure that the energy distribution and morphological characteristics of the stripes remain stable; the stripes are often influenced by the reflectance properties of objects, so their energy and morphology vary between locations. Therefore, building on the traditional methods, this paper proposes a grayscale normalization method. First, the structured light stripe is extracted by semantic segmentation to determine the region of interest, which effectively avoids the interference of Fresnel scattering outside the light stripe and the noise that thresholding cannot remove. Then, the sub-pixel center of the light stripe within the region of interest is determined by normalizing the grayscale values.

2. Background

In complex underwater environments, when the laser illuminates an object’s surface, speckles (Fresnel scattering [14]) are generated in the nearby free space: the light field at any point on the screen is the superposition of the wavelets generated by all scattering sources on the diffusing surface, and this superposition adds noise to the original laser stripe. Depending on its reflective properties, the surface of an object can be classed as a Lambertian surface, a mirror surface, or a mixed reflective surface (weakly scattering surface) whose reflective properties lie between the two. If the light reflected from the scattering surface has multiple components, the observed surface scattering is a superposition of these partial light fields, according to the Phong [15] hybrid model:

where

I is the brightness of the received reflected light,

is the reflected ambient component,

is the diffuse component,

is the specularly reflected component,

,

, and

are the reflection coefficients of ambient, diffuse, and specular light, respectively,

is the viewing angle and direction of the specularly reflected light,

n is the specular refractive index, and

is the intensity of the illumination source.
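For reference, a common textbook form of the Phong model is sketched below. The symbol names are assumptions chosen for this illustration: $I_{\mathrm{amb}}$ is the ambient light intensity, $I_l$ the intensity of the illumination source, $\theta_i$ the angle between the incident light and the surface normal, $\alpha$ the angle between the viewing direction and the direction of specular reflection, and $k_a$, $k_d$, $k_s$ the ambient, diffuse, and specular reflection coefficients.

$$I = I_a + I_d + I_s = k_a I_{\mathrm{amb}} + k_d I_l \cos\theta_i + k_s I_l \cos^{n}\alpha$$

In this form, a larger exponent $n$ narrows the specular lobe, so mirror-like surfaces concentrate the reflected laser energy around the specular direction, whereas the diffuse term depends only on the angle of incidence.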

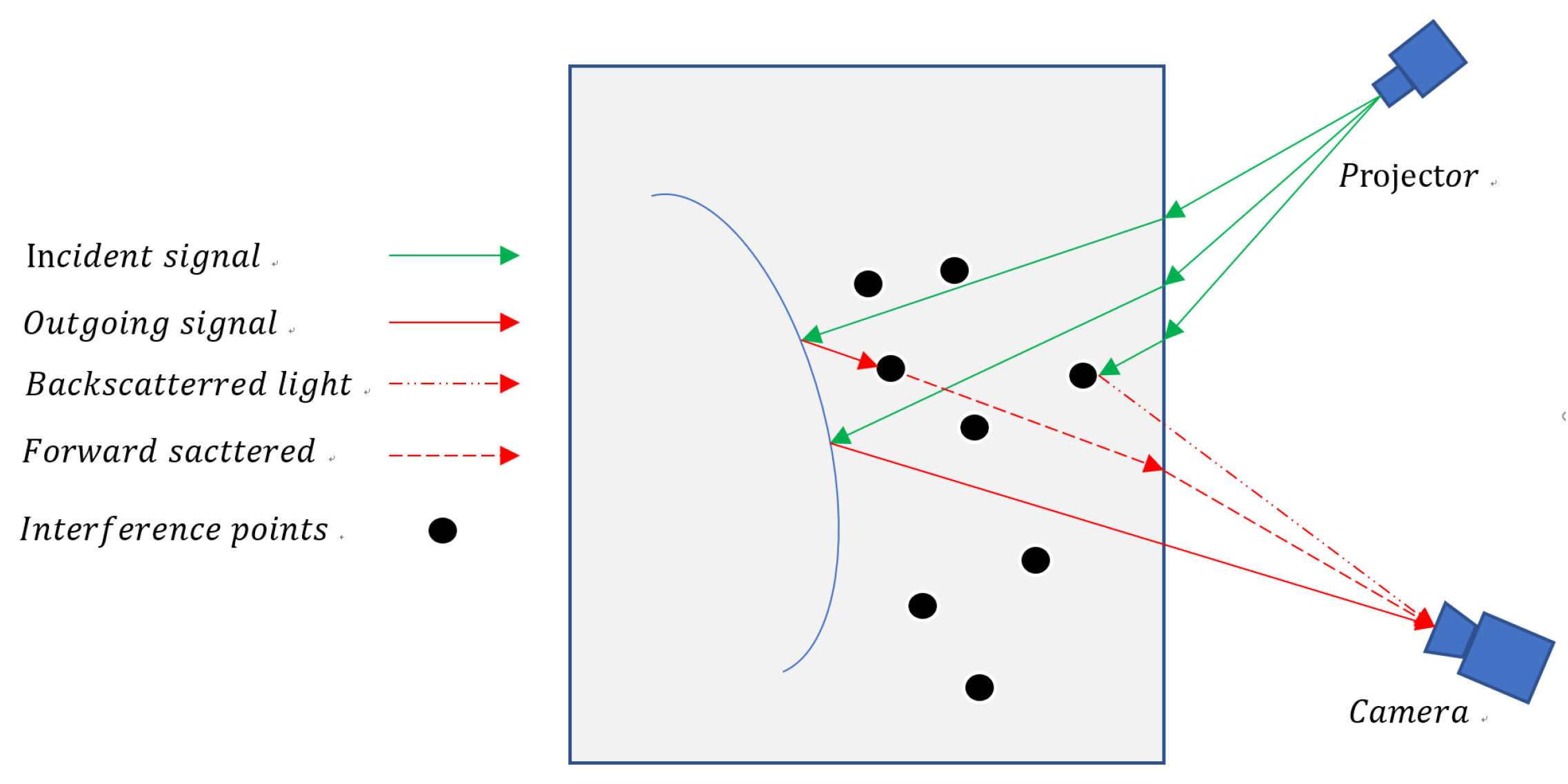

Images taken underwater lose considerable contrast and brightness because of floating matter in the water and similar effects. The underwater light transmission model is shown in Figure 1. The light received by the detector consists of direct light, back-scattered light, and forward-scattered light. The captured intensity of the camera becomes:

where

refers to the medium transmittance of turbid water.

denotes the observed value of the color image or the observed value of a particular channel of the image (e.g., red, green, or blue channel) at pixel

[

16].

The forward scattering of light describes the direct light scattered by particles before reaching the detector; the forward scattering will blur the direct light. The captured intensity of the camera becomes:

where

is the camera resolution, and

denotes the underwater point spread function (PSF).

Since PSF is influenced by water-suspended particles, the PSF models are usually parameterized by choosing various empirical constants as:

where

,

is an empirical constant [

17] (i.e.,

),

Q is an empirical damping factor related to the water turbidity,

denotes the inverse Fourier transform, and

is the spatial frequency of the captured image in the image plane [

18,

19].

The backscattering of light describes the direct light scattered by particles before reaching the object’s surface. The backscattering adds strongly correlated noise to the direct light and the forward-scattered light. The captured intensity of the camera should be:

where

is the white noise with zero mean and variance.

When analyzing the underwater image, the gray levels of different columns along the cross-sectional direction of the laser stripe are extracted and compared; the gray levels of the image vary significantly when Fresnel scattering is present, as shown in Figure 2.

We extracted the grayscale values of three columns along the cross-sectional direction of the laser stripes, namely the 168th, 344th, and 400th columns, which lie on a rough surface, a transition, and a smooth surface, respectively. We analyzed the imaging characteristics of the different reflective surfaces, and the grayscale plots for these columns are shown in Figure 2c. The plots show that surfaces with high absorption have small gray level variations (168th column) that resemble a Gaussian distribution. Fresnel diffraction occurs at the transitions between different objects (344th column), leading to strong scattering at the edges of the stripes and significant distortion of the grayscale distribution. On surfaces with high reflectance (400th column), the grayscale distribution is strongly affected by noise, resulting in larger fluctuations of the grayscale values. On specularly reflective surfaces, strong reflections usually occur when the laser illuminates the area. The resulting image shows central spots and extended spots in the surrounding regions, as shown in Figure 2a. The size and contrast of the spots are determined by several factors, including illumination, illumination distribution, scattering angle, and distances between surfaces of the medium [20,21].

Conventional approaches, such as noise reduction by filtering, threshold segmentation, the difference method, or fitting a structured-light-specific filter to the waterproof cover of the viewing device to eliminate ambient light, cannot avoid the influence of speckles. As shown in Figure 3, the image is segmented by a grayscale threshold, chosen from the grayscale distribution, after median filtering. When the threshold is set to 170 (Figure 3a), most of the scattered spots are eliminated, but most of the laser lines on the surfaces of objects with low reflectivity are masked out as well. When the threshold is set to 155 (Figure 3b), the laser lines on the surfaces of objects with low reflectivity are segmented more completely. However, because of specular reflection, the segmentation error on objects with smooth surfaces is large, and the interference caused by the scattered light cannot be eliminated.
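The trade-off between the two thresholds can be reproduced on a small synthetic image. The sketch below is only an illustration: the 3×3 median window, the helper names, and all gray values of the synthetic scene are assumptions, not the settings used for Figure 3.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_filter3(gray: np.ndarray) -> np.ndarray:
    """3x3 median filter with edge replication (pure NumPy)."""
    padded = np.pad(np.asarray(gray, dtype=float), 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))    # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1))

def threshold_mask(gray: np.ndarray, threshold: float) -> np.ndarray:
    """Binary stripe mask: median-filter, then keep pixels above the threshold."""
    return median_filter3(gray) > threshold

# Synthetic scene; every gray value here is an illustrative assumption.
img = np.zeros((12, 12))
img[3:6, :6] = 160      # stripe on a low-reflectivity surface
img[3:6, 6:] = 220      # stripe on a high-reflectivity surface
img[8:11, 8:11] = 165   # scattering speckle near the bright surface
img[0, 10] = 255        # isolated noise pixel

mask_170 = threshold_mask(img, 170)
mask_155 = threshold_mask(img, 155)
print(int(mask_170.sum()), int(mask_155.sum()))
```

With the higher threshold the speckle disappears together with the dim part of the stripe; with the lower threshold the dim stripe is recovered, but part of the speckle survives. The median filter removes the isolated noise pixel in both cases.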

Among the most popular light stripe center extraction methods, both the directional-template method and the grayscale gravity method require image pre-processing, including threshold segmentation, when applied to images with scattering, although the details of the processing differ. Figure 4 shows the center extraction results of the two methods after segmentation with a threshold of 150.

With a threshold of 150, valid light stripe information in pixels whose gray value is below the threshold is ignored. At the same time, speckles at the edges of the light stripes directly affect the extracted center position after processing with the conventional threshold algorithm. This leads to detection errors and a rapid increase in measurement inaccuracy.

3. Segmentation of Regions of Interest

The regions of interest, as the name implies, are the areas that actually need to be processed. In this paper, they are the structured light stripes on the surface of the object to be measured. By effectively extracting the regions of interest, unnecessary computation of grayscale values in other areas is avoided, which speeds up the extraction of the light stripe centers. When the normalized grayscale gravity method is used to calculate the light stripe center, the influence of noise in the background region is avoided; in addition, the speckle points around the specular reflections are effectively eliminated, which increases the accuracy of the center extraction.

Semantic segmentation is a well-studied problem in robot vision and deep learning [22,23] because it is practical and effective for estimating scene geometry, inferring interactions and spatial relationships between objects, and detecting target objects; it therefore plays an important role in extracting structured light regions of interest in underwater machine vision. Manually labeled datasets such as ImageNet [24], ADE20K [25], PASCAL [26], and COCO [27] play a significant role in improving image processing tasks and driving research in new directions. Existing underwater image datasets, such as SUIM [28] or Seagrass [29], are intended for semantic segmentation and classification of fish or other marine life.

In this paper, a large number of underwater images of green light stripes were acquired. Each image contains the test object, the green light stripe projected on the test object, the background, and the green light stripe projected on the background; however, only the light stripe on the test object is the actual region of interest. As shown in Figure 5, we created the underwater structured light dataset (USLD) from multiple viewpoints under different underwater turbidities, lighting environments, and targets, and labeled the foreground structured light as the region of interest. The dataset contains a total of 860 RGB images, and the percentages of the individual classes are shown in Table 1. For ease of description, the test object and the light stripe on the test object are referred to as the foreground and the foreground light stripe, respectively. The area outside the test object is the background, which is divided into pure background and background light stripes. The laser light stripes on the target are labeled GS (Green_stripe), and everything else is labeled BG (Background). The purpose of semantic segmentation is to extract the laser stripes on the target effectively and to determine the region of interest for the subsequent extraction of the laser stripe’s centroid.

In this article, several state-of-the-art deep convolutional network models are evaluated. CNN segmentation models are generally divided into an encoder and a decoder. The encoder typically uses a backbone network to extract features and generate feature maps that contain semantic information about the input image, which is then used in the subsequent decoding and segmentation. In the evaluation, some segmentation models were used several times with different backbone networks. The complete list of models can be found in Table 2.

During training, additional training data were generated by rotating, flipping, and scaling the images in the dataset, which helps the network learn more robustly and improves its generalization ability. To compare the segmentation performance of the different network structures, all models were implemented in Python using the PyTorch library, and a server equipped with two NVIDIA 3090 GPUs was used to ensure a consistent hardware configuration across all models.
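A minimal sketch of geometric augmentation for segmentation data is given below. It is an illustration only: the rotation angles (multiples of 90°), the helper name `augment_pairs`, and the omission of scaling are assumptions. The point it shows is that the image and its label mask must receive the same transform so that the stripe labels stay aligned.

```python
import numpy as np

def augment_pairs(image: np.ndarray, mask: np.ndarray):
    """Yield 8 (image, mask) variants: 4 rotations, each with a horizontal flip.

    `image` has shape (H, W, 3) and `mask` has shape (H, W). The same
    transform is applied to both so that the labels stay aligned.
    """
    for k in range(4):
        img_r = np.rot90(image, k)               # rotates the first two axes
        msk_r = np.rot90(mask, k)
        yield img_r, msk_r
        yield img_r[:, ::-1], msk_r[:, ::-1]     # horizontal flip

# Toy example: a 4x6 RGB image with a 3-pixel "stripe" label.
image = np.arange(4 * 6 * 3).reshape(4, 6, 3)
mask = np.zeros((4, 6), dtype=bool)
mask[1, 2:5] = True
pairs = list(augment_pairs(image, mask))
print(len(pairs), pairs[2][0].shape)
```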

To evaluate the correctness of the pixel-by-pixel classification, two supervised evaluation metrics were used: the intersection over union (IoU) and the $F_1$ score. The former, also known as the Jaccard index, is one of the most widely used metrics for semantic segmentation tasks; it is the area of overlap between the predicted mask and the ground truth divided by the area of their union:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

Here, TP denotes the true positives, where the model correctly predicts a positive case; TN denotes the true negatives, where the model correctly predicts a negative case; FP denotes the false positives, where the model falsely predicts a negative case as positive; and FN denotes the false negatives, where the model falsely predicts a positive case as negative. IoU is also regarded as a region similarity metric.

The latter is also called the Dice coefficient and provides the contour accuracy:

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

It is defined as the harmonic mean of the precision and recall of the model. Precision is the ratio of the number of correctly classified positive samples to the number of samples predicted as positive, $TP/(TP + FP)$, and recall is the ratio of the number of correctly classified positive samples to the actual number of positive samples, $TP/(TP + FN)$.
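Both metrics can be computed directly from a predicted binary mask and its ground truth. The following is a minimal sketch; the function name and the conventions for empty masks are assumptions made for this illustration.

```python
import numpy as np

def iou_and_f1(pred: np.ndarray, truth: np.ndarray) -> tuple[float, float]:
    """Return (IoU, F1) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)       # stripe pixels found
    fp = np.count_nonzero(pred & ~truth)      # background labeled as stripe
    fn = np.count_nonzero(~pred & truth)      # stripe pixels missed
    union = tp + fp + fn
    iou = tp / union if union else 1.0        # convention: two empty masks agree
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return iou, f1

# Ground-truth stripe on rows 1-2, prediction shifted down by one row.
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, :] = True
pred = np.zeros((4, 4), dtype=bool)
pred[2:4, :] = True
iou, f1 = iou_and_f1(pred, truth)
print(iou, f1)
```

In this example half of the predicted pixels overlap the ground truth, so precision and recall are both 0.5, while the IoU is lower because the union counts both kinds of error.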

In addition, practical light stripe center extraction is subject to a time limit, so the inference time must be taken into account; real-time operation requires at least 15 frames per second (FPS).

Baseline evaluations with state-of-the-art deep learning segmentation models show that good results (Table 3) can be obtained for all selected models. Moreover, all models show similar convergence behavior, as shown in Figure 6.

Table 3 presents the results of the benchmark evaluation. According to the table, PSPNet with a ResNet101 backbone provides the best results for underwater laser line segmentation, with an average IoU of 88.95 and an average $F_1$ score of 93.80. The FastSCNN model has the fastest inference, at 43.8 FPS. Segmentation results on the test set for the above model are shown in Figure 7.

In determining the region of interest, we attempted to circumvent certain limitations by providing a dataset for semantic segmentation of underwater laser line images. The benchmark evaluation showed that the PSPNet segmentation model with a ResNet101 backbone gave the best overall performance in terms of segmentation results and inference time, making it a good candidate for the next step of the work. Determining the region of interest through semantic segmentation effectively excludes background light stripes, which reduces the computational effort, and avoids the underwater scattering spots and noise interference that traditional segmentation cannot handle.

Accurate and complete segmentation of the region of interest is crucial to prepare for subsequent matching and measurement tasks.

5. Experiments and Results

Structured light 3D measurement is a scanning, non-contact survey technique with the advantages of a simple system and high accuracy. An experimental platform for underwater scanning measurements with structured light was designed, as shown in Figure 13. It mainly consists of a CMOS camera, a lens, a line laser emitter, an oscillator, a D/A converter card, an oscillator controller, and other hardware. The camera was an acA1300-200uc industrial camera from Basler, Germany, with a resolution of 1280 (H) × 1024 (V), a pixel size of

, a 1/2-inch CMOS chip, a frame rate of 200 fps, and an industrial lens with a focal length of 12 mm. A TS8720 optical scanning oscillator with a lens thickness of 1 mm was selected, and its input voltage was controlled using a D/A converter card from ADLINK (model 6208) with an input voltage range of

corresponding to an oscillator rotation angle of

. A green line laser with a wavelength of 532 nm was selected because water absorbs blue-green light the least. In this section, the proposed method is implemented on the experimental platform.

With the platform, several comparative experiments were carried out.

Experiment 1: Light stripe collection and processing for an uneven-scattering surface.

We used the platform to collect laser stripe images of inhomogeneous surfaces, applied the proposed method to extract the laser stripe centers, and displayed the extracted centers on grayscale images, as shown in Figure 14. The results indicate that the proposed method can accurately detect laser stripe centers on the underwater surfaces of different objects and is highly robust under inhomogeneous surface conditions.

Experiment 2: Light stripe center extraction and comparison of different methods.

Figure 4 shows the inadequacy of conventional methods for laser stripe center extraction when flares or scattering spots are present. In Figure 15, we extract more centers without the need for grayscale thresholding after effectively determining the region of interest by semantic segmentation, and the center extraction via the normalized grayscale gravity method is more convergent and robust than the traditional method.

In Figure 15, we extract the light stripe centers for a 365 × 520 pixel image. Table 4 compares the three methods. It shows that determining the region of interest by semantic segmentation instead of threshold segmentation significantly increases the number of extracted center points of the light stripe. The template method takes noticeably longer and has a larger computational cost, while the other two methods perform well.

Experiment 3: Linear comparison of smooth light stripe center extraction.

We used the grayscale gravity method and the normalized grayscale gravity method to extract the center of the laser stripes on the surface of planar underwater objects, as shown in Figure 16a. Since the regular surface has good flatness, the coordinates of the line centroids on it can be used to compare the accuracy of the different algorithms. Figure 16b,c show the centers extracted with the normalized grayscale gravity method and the grayscale gravity method, respectively; both run quickly and efficiently.

Figure 17 compares the results of the two methods for light stripe center extraction. Both the proposed algorithm and the grayscale gravity method operate at the sub-pixel level. Compared with the traditional grayscale gravity method, the proposed algorithm yields a more stable laser stripe center with less variance, is insensitive to small gray values at the edges, and converges better in the extreme-value region, whereas the grayscale gravity method produces larger errors because of noise and the gray values at the edges.
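One simple way to quantify such a comparison on a flat surface is to fit a straight line to the extracted centers and report the root-mean-square residual. The sketch below illustrates this idea only; it is not the evaluation procedure behind Figure 17, and the function name, the synthetic data, and the noise levels are assumptions.

```python
import numpy as np

def line_fit_rms(cols: np.ndarray, centers: np.ndarray) -> float:
    """RMS deviation (in pixels) of stripe centers from their best-fit line."""
    cols = np.asarray(cols, dtype=float)
    centers = np.asarray(centers, dtype=float)
    slope, intercept = np.polyfit(cols, centers, 1)      # least-squares line
    residuals = centers - (slope * cols + intercept)
    return float(np.sqrt(np.mean(residuals ** 2)))

# Synthetic centers on a planar target: same true line, two noise levels.
rng = np.random.default_rng(0)
cols = np.arange(50)
true_line = 0.02 * cols + 100.0
centers_stable = true_line + rng.normal(0.0, 0.05, cols.size)   # assumed noise level
centers_noisy = true_line + rng.normal(0.0, 0.30, cols.size)    # assumed noise level

rms_stable = line_fit_rms(cols, centers_stable)
rms_noisy = line_fit_rms(cols, centers_noisy)
print(rms_stable, rms_noisy)
```

A smaller residual indicates that the extracted centers follow the flat surface more closely.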

Experiment 4: Repeatability error experiment

The repeatability error of an algorithm refers to the deviation of the center points extracted at different moments, which reflects the ability of the algorithm to resist random noise over time. It can be represented as:

where

p is the total number of laser stripe images taken by the CMOS camera at different moments in time. The error

can be obtained by

where

is the coordinate of the

ith center point of the

jth laser image, and

n is the number of extracted center points of the laser stripe.
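A repeatability measure of this kind can be sketched as follows. The exact formula is an assumption made for illustration: each image's error is taken as the RMS deviation of its n center points from the per-point mean over all p images, and the overall error is the average of these per-image errors. The function name is hypothetical.

```python
import numpy as np

def repeatability_error(centers: np.ndarray) -> tuple[float, np.ndarray]:
    """Assumed repeatability measure for repeated center extractions.

    `centers` has shape (p, n): p images taken at different moments, each
    with n extracted center coordinates. Returns the mean per-image error
    and the array of per-image errors.
    """
    centers = np.asarray(centers, dtype=float)
    reference = centers.mean(axis=0)                          # mean center per point
    per_image = np.sqrt(np.mean((centers - reference) ** 2, axis=1))
    return float(per_image.mean()), per_image

# Two repeated extractions of a two-point stripe, jittering by +/-0.1 pixel.
centers = np.array([[10.0, 10.2],
                    [10.2, 10.0]])
overall, per_image = repeatability_error(centers)
print(overall, per_image)
```

With this definition, identical extractions in every image give an error of zero, and the error grows with the frame-to-frame jitter of the extracted centers.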

For laser stripe images acquired at different time points, the repeatability error was calculated for the two extraction methods, as shown in Table 5. Compared with the grayscale gravity method, the proposed method has a smaller repeatability error, so it can extract the centroid of the laser stripe with higher accuracy and reliability.

We also captured images of the extracted stripes at different laser powers to verify the robustness of the method and conducted repeatability error experiments with medium- and low-luminance laser images, using the center of the stripe at the highest luminance image as the reference point.

Table 6 shows that the center of the laser stripe shifts to some extent at medium and low laser power. Compared with the grayscale gravity method, the normalized grayscale gravity method is less sensitive to the laser intensity. This is because the normalization function is extremely sensitive to changes in the gray value, so the extreme values carry a large weight in the center point coordinates, while the stripe edge information and pixels with low gray values carry negligible weight. This greatly improves the robustness and adaptability of the method.