Unsupervised Stereo Matching with Surface Normal Assistance for Indoor Depth Estimation

Abstract

1. Introduction

2. Related Work

2.1. Surface Normal Estimation

2.2. Stereo Matching

2.3. Indoor Depth Estimation

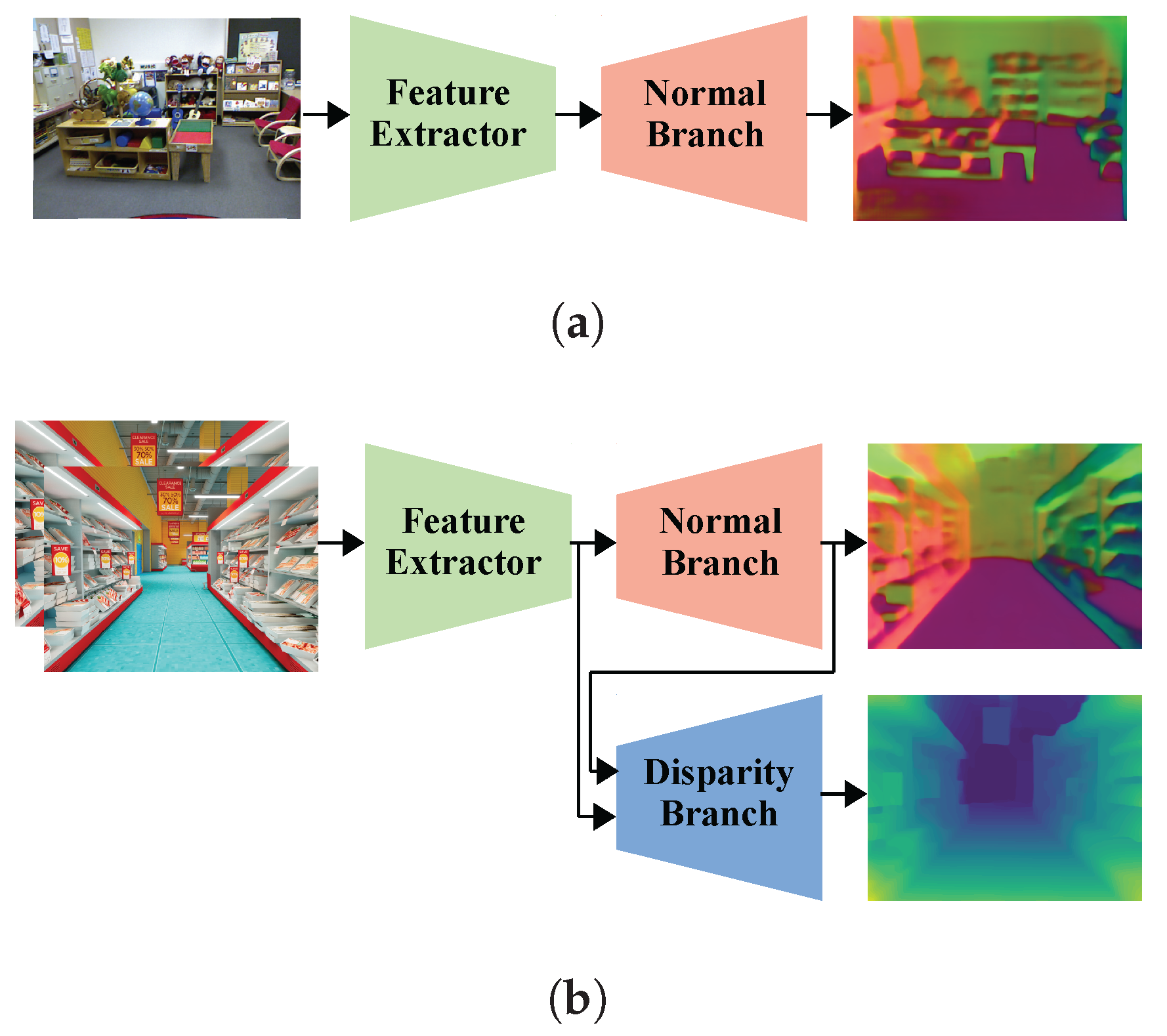

3. Proposed Neural Network Design

3.1. Feature Extraction

3.2. Normal-Estimation Branch

3.3. Disparity-Estimation Branch

3.3.1. Normal Integration

3.3.2. Matching Cost Construction

3.3.3. Cost Aggregation

3.3.4. Disparity Refinement

4. Training Strategy

4.1. Training for Normal Estimation

4.2. Training for Disparity Estimation

4.2.1. Photometric Loss

4.2.2. Disparity Smoothness Loss

4.2.3. Normal Consistency Loss

4.2.4. Left–Right Consistency Loss

5. Experimental Results

5.1. Implementation Details

5.2. NYU v2 Dataset

5.3. IRS Dataset

5.4. InStereo2K Dataset

5.5. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lee, Z.; Juang, J.; Nguyen, T.Q. Local Disparity Estimation With Three-Moded Cross Census and Advanced Support Weight. IEEE Trans. Multimed. 2013, 15, 1855–1864.
2. Kolmogorov, V.; Zabih, R. Computing visual correspondence with occlusions using graph cuts. In Proceedings of the IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 508–515.
3. Hirschmuller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.
4. Mei, X.; Sun, X.; Zhou, M.; Jiao, S.; Wang, H.; Zhang, X. On building an accurate stereo matching system on graphics hardware. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–13 November 2011; pp. 467–474.
5. Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P.; Kennedy, R.; Bachrach, A.; Bry, A. End-to-End Learning of Geometry and Context for Deep Stereo Regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 66–75.
6. Chang, J.R.; Chen, Y.S. Pyramid Stereo Matching Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5410–5418.
7. Zhang, F.; Prisacariu, V.; Yang, R.; Torr, P.H. GA-Net: Guided Aggregation Net for End-To-End Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 185–194.
8. Cheng, X.; Zhong, Y.; Harandi, M.; Dai, Y.; Chang, X.; Li, H.; Drummond, T.; Ge, Z. Hierarchical Neural Architecture Search for Deep Stereo Matching. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 22158–22169.
9. Wang, L.; Guo, Y.; Wang, Y.; Liang, Z.; Lin, Z.; Yang, J.; An, W. Parallax Attention for Unsupervised Stereo Correspondence Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2108–2125.
10. Mayer, N.; Ilg, E.; Häusser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.
11. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.
12. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.
13. Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image Using a Multi-Scale Deep Network. In Proceedings of the International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2366–2374.
14. Wofk, D.; Ma, F.; Yang, T.; Karaman, S.; Sze, V. FastDepth: Fast Monocular Depth Estimation on Embedded Systems. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 6101–6108.
15. Farooq Bhat, S.; Alhashim, I.; Wonka, P. AdaBins: Depth Estimation Using Adaptive Bins. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4008–4017.
16. Zhou, J.; Wang, Y.; Qin, K.; Zeng, W. Moving Indoor: Unsupervised Video Depth Learning in Challenging Environments. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8617–8626.
17. Yu, Z.; Jin, L.; Gao, S. P2Net: Patch-Match and Plane-Regularization for Unsupervised Indoor Depth Estimation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 206–222.
18. Wang, Q.; Zheng, S.; Yan, Q.; Deng, F.; Zhao, K.; Chu, X. IRS: A Large Naturalistic Indoor Robotics Stereo Dataset to Train Deep Models for Disparity and Surface Normal Estimation. In Proceedings of the IEEE International Conference on Multimedia and Expo, Shenzhen, China, 5–9 July 2021; pp. 1–6.
19. Kusupati, U.; Cheng, S.; Chen, R.; Su, H. Normal Assisted Stereo Depth Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2186–2196.
20. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor Segmentation and Support Inference from RGBD Images. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 746–760.
21. Wei, B.; Wang, W.; Xu, Y.; Guo, Y.; Hong, S.; Zhang, X. InStereo2K: A large real dataset for stereo matching in indoor scenes. Sci. China Inf. Sci. 2020, 63, 1869–1919.
22. Fouhey, D.F.; Gupta, A.; Hebert, M. Data-Driven 3D Primitives for Single Image Understanding. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3392–3399.
23. Fouhey, D.F.; Gupta, A.; Hebert, M. Unfolding an Indoor Origami World. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 687–702.
24. Ladický, L.; Zeisl, B.; Pollefeys, M. Discriminatively Trained Dense Surface Normal Estimation. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 468–484.
25. Wang, X.; Fouhey, D.F.; Gupta, A. Designing deep networks for surface normal estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 539–547.
26. Eigen, D.; Fergus, R. Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-scale Convolutional Architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658.
27. Wang, P.; Shen, X.; Russell, B.; Cohen, S.; Price, B.; Yuille, A.L. SURGE: Surface Regularized Geometry Estimation from a Single Image. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29, pp. 172–180.
28. Bansal, A.; Russell, B.; Gupta, A. Marr Revisited: 2D-3D Alignment via Surface Normal Prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5965–5974.
29. Qi, X.; Liao, R.; Liu, Z.; Urtasun, R.; Jia, J. GeoNet: Geometric Neural Network for Joint Depth and Surface Normal Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 283–291.
30. Qi, X.; Liu, Z.; Liao, R.; Torr, P.H.S.; Urtasun, R.; Jia, J. GeoNet++: Iterative Geometric Neural Network with Edge-Aware Refinement for Joint Depth and Surface Normal Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 969–984.
31. Zhang, Z.; Cui, Z.; Xu, C.; Yan, Y.; Sebe, N.; Yang, J. Pattern-Affinitive Propagation Across Depth, Surface Normal and Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4101–4110.
32. Liao, S.; Gavves, E.; Snoek, C.G.M. Spherical Regression: Learning Viewpoints, Surface Normals and 3D Rotations on N-Spheres. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9751–9759.
33. Do, T.; Vuong, K.; Roumeliotis, S.I.; Park, H.S. Surface Normal Estimation of Tilted Images via Spatial Rectifier. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 265–280.
34. Bae, G.; Budvytis, I.; Cipolla, R. Estimating and Exploiting the Aleatoric Uncertainty in Surface Normal Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13117–13126.
35. Piccinelli, L.; Sakaridis, C.; Yu, F. iDisc: Internal Discretization for Monocular Depth Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21477–21487.
36. Ikehata, S. PS-Transformer: Learning Sparse Photometric Stereo Network using Self-Attention Mechanism. In Proceedings of the British Machine Vision Conference, Virtual, 22–25 November 2021.
37. Ju, Y.; Jian, M.; Wang, C.; Zhang, C.; Dong, J.; Lam, K.M. Estimating High-resolution Surface Normals via Low-resolution Photometric Stereo Images. IEEE Trans. Circuits Syst. Video Technol. 2023. Available online: https://ieeexplore.ieee.org/document/10208243 (accessed on 3 November 2023).
38. Scharstein, D.; Szeliski, R.; Zabih, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. In Proceedings of the IEEE Workshop on Stereo and Multi-Baseline Vision, Kauai, HI, USA, 9–10 December 2001; pp. 131–140.
39. Zbontar, J.; LeCun, Y. Computing the stereo matching cost with a convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1592–1599.
40. Li, Z.; Liu, X.; Drenkow, N.; Ding, A.; Creighton, F.X.; Taylor, R.H.; Unberath, M. Revisiting Stereo Depth Estimation From a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6177–6186.
41. Zhao, H.; Zhou, H.; Zhang, Y.; Chen, J.; Yang, Y.; Zhao, Y. High-Frequency Stereo Matching Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1327–1336.
42. Li, J.; Wang, P.; Xiong, P.; Cai, T.; Yan, Z.; Yang, L.; Liu, J.; Fan, H.; Liu, S. Practical Stereo Matching via Cascaded Recurrent Network with Adaptive Correlation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16242–16251.
43. Tankovich, V.; Häne, C.; Zhang, Y.; Kowdle, A.; Fanello, S.; Bouaziz, S. HITNet: Hierarchical Iterative Tile Refinement Network for Real-time Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14357–14367.
44. Wang, Q.; Xing, H.; Ying, Y.; Zhou, M. CGFNet: 3D Convolution Guided and Multi-scale Volume Fusion Network for fast and robust stereo matching. Pattern Recognit. Lett. 2023, 173, 38–44.
45. Zhou, C.; Zhang, H.; Shen, X.; Jia, J. Unsupervised Learning of Stereo Matching. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1576–1584.
46. Li, A.; Yuan, Z. Occlusion Aware Stereo Matching via Cooperative Unsupervised Learning. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; pp. 197–213.
47. Liu, P.; King, I.; Lyu, M.R.; Xu, J. Flow2Stereo: Effective Self-Supervised Learning of Optical Flow and Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6647–6656.
48. Song, T.; Kim, S.; Sohn, K. Unsupervised Deep Asymmetric Stereo Matching with Spatially-Adaptive Self-Similarity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13672–13680.
49. Li, B.; Shen, C.; Dai, Y.; van den Hengel, A.; He, M. Depth and surface normal estimation from monocular images using regression on deep features and hierarchical CRFs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1119–1127.
50. Roy, A.; Todorovic, S. Monocular Depth Estimation Using Neural Regression Forest. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5506–5514.
51. Jung, H.; Kim, Y.; Min, D.; Oh, C.; Sohn, K. Depth prediction from a single image with conditional adversarial networks. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 1717–1721.
52. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
53. Khamis, S.; Fanello, S.; Rhemann, C.; Kowdle, A.; Valentin, J.; Izadi, S. StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 573–590.
54. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
55. Nekrasov, V.; Dharmasiri, T.; Spek, A.; Drummond, T.; Shen, C.; Reid, I. Real-Time Joint Semantic Segmentation and Depth Estimation Using Asymmetric Annotations. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 7101–7107.
56. Guo, X.; Yang, K.; Yang, W.; Wang, X.; Li, H. Group-Wise Correlation Stereo Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3268–3277.

| Method | Mean error (°) | Median error (°) | RMSE (°) | Accuracy 11.25° (%) | Accuracy 22.5° (%) | Accuracy 30° (%) |
|---|---|---|---|---|---|---|
| Wang et al. [25] | 26.9 | 14.8 | - | 42.0 | 61.2 | 68.2 |
| Multi-scale [26] | 20.9 | 13.2 | - | 44.4 | 67.2 | 75.9 |
| SURGE [27] | 20.6 | 12.2 | - | 47.3 | 68.9 | 76.6 |
| Bansal et al. [28] | 19.8 | 12.0 | 28.2 | 47.9 | 70.0 | 77.8 |
| GeoNet [29] | 19.0 | 11.8 | 26.9 | 48.4 | 71.5 | 79.5 |
| Nekrasov et al. [55] | 24.0 | 17.7 | - | - | - | - |
| PAP [31] | 18.6 | 11.7 | 25.5 | 48.8 | 72.2 | 79.8 |
| Liao et al. [32] | 19.7 | 12.5 | - | 45.8 | 72.1 | 80.6 |
| Bae et al. [34] | 14.9 | 7.5 | 23.5 | 62.2 | 79.3 | 85.2 |
| GeoNet++ [30] | 18.5 | 11.2 | 26.7 | 50.2 | 73.2 | 80.7 |
| iDisc [35] | 14.6 | 7.3 | 22.8 | 63.8 | 79.8 | 85.6 |
| Ours | 17.8 | 11.3 | 25.4 | 52.1 | 74.2 | 81.6 |

| Method | Training | EPE-a (px) | >3 px-a (%) | EPE-t (px) | >3 px-t (%) |
|---|---|---|---|---|---|
| FADNet [18] | Sup. | 0.75 | - | - | - |
| GwcNet [56] | Sup. | 3.01 | - | - | - |
| PASMnet [9] | Unsup. | 2.91 | 15.08 | 2.97 | 14.96 |
| Ours | Unsup. | 2.89 | 14.33 | 2.92 | 14.43 |

| Method | EPE-a (px) | >3 px-a (%) | EPE-t (px) | >3 px-t (%) | FPS |
|---|---|---|---|---|---|
| PASMnet [9] | 3.13 | 15.18 | 3.90 | 19.32 | 7.20 |
| Ours | 2.90 | 13.02 | 3.67 | 16.80 | 14.01 |

| Configuration | Pre-Train | Normal Integration | | EPE-a (px) | >3 px-a (%) |
|---|---|---|---|---|---|
| I | | | | 5.080 | 28.45 |
| II | ✓ | | | 2.996 | 15.49 |
| III | ✓ | ✓ | | 2.894 | 14.64 |
| IV | ✓ | ✓ | ✓ | 2.885 | 14.33 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, X.; Amiri, A.J.; Fidan, B.; Jeon, S. Unsupervised Stereo Matching with Surface Normal Assistance for Indoor Depth Estimation. Sensors 2023, 23, 9850. https://doi.org/10.3390/s23249850