Modern Image-Guided Surgery: A Narrative Review of Medical Image Processing and Visualization

Abstract

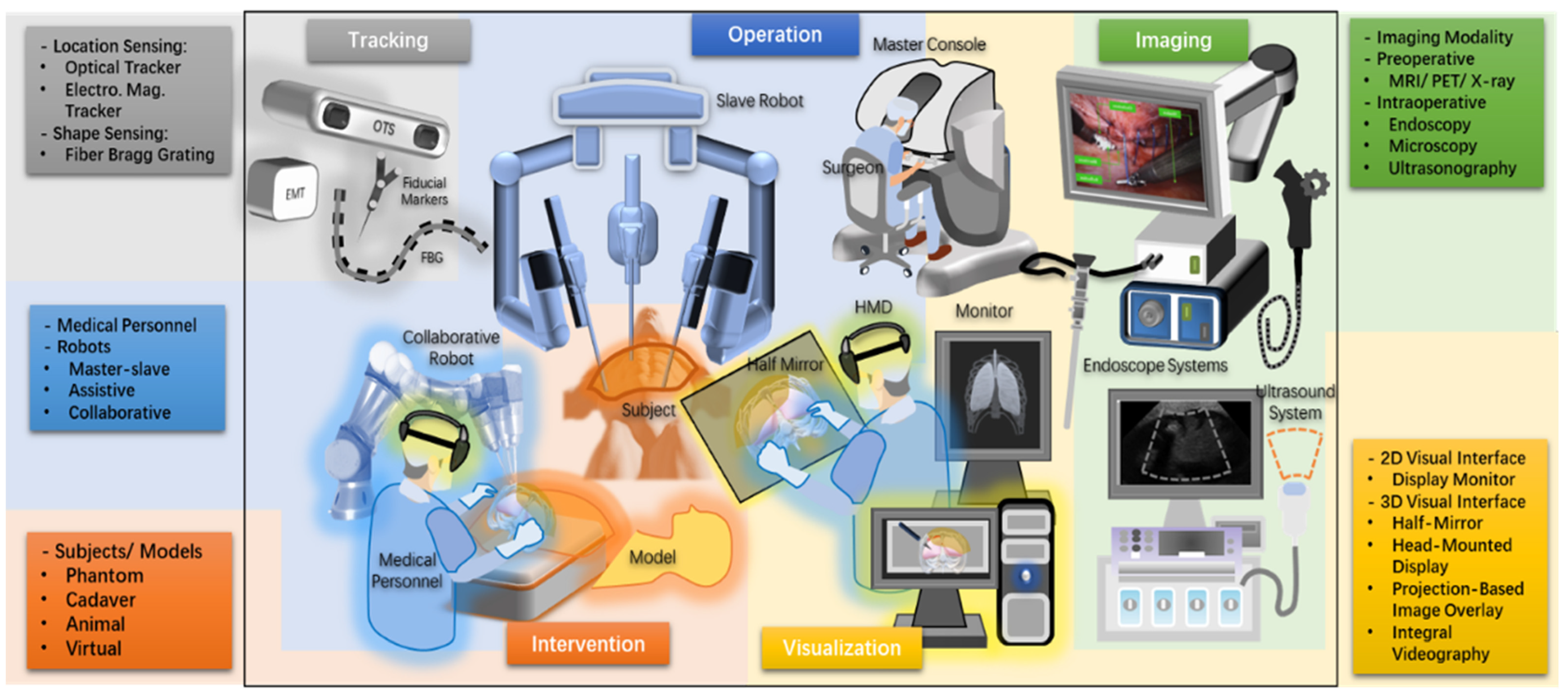

1. Introduction

2. Materials and Methods

3. Results

3.1. Medical Image Processing

3.1.1. Segmentation

- A lack of annotated data: Medical images are often scarce and expensive for experts to label, which limits the training data available to supervised learning methods.

- Inhomogeneous intensity: Medical images may differ in contrast, brightness, noise, and artifacts depending on the imaging modality, device, and acquisition settings, which makes it hard to apply a single threshold or feature-extraction method across different images.

- Vast memory usage: Medical images are often high-resolution and 3D, so processing and storing them requires large amounts of memory and computational resources.
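The intensity and memory challenges above can be made concrete with a small sketch. The Python below uses synthetic, made-up pixel values: the same hypothetical "lesion" is imaged once dark and once 80 grey levels brighter. A fixed threshold tuned on the first image labels every pixel of the second as foreground, while Otsu's method (a classic per-image threshold, swapped in here purely for illustration and not drawn from the reviewed works) adapts to each image. The last line estimates raw storage for a hypothetical 512 x 512 x 300 float32 volume; the helper names (`make_image`, `segment`, `volume_bytes`) are illustrative only.

```python
# Illustrative sketch only: synthetic intensities, not real scans.
# Otsu's method is used here as one classic per-image thresholding
# technique; it is not claimed to be the method of any cited work.


def otsu_threshold(pixels, levels=256):
    """Return the threshold t (pixels >= t are foreground) that maximizes
    the between-class variance of an integer-valued image."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_score = 0, -1.0
    w_bg = 0    # number of pixels with value < t
    sum_bg = 0  # summed intensity of pixels with value < t
    for t in range(1, levels):
        w_bg += hist[t - 1]
        sum_bg += (t - 1) * hist[t - 1]
        w_fg = total - w_bg
        if w_bg == 0 or w_fg == 0:
            continue
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        # Between-class variance up to a constant factor of 1 / total**2.
        score = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if score > best_score:
            best_score, best_t = score, t
    return best_t


def make_image(offset):
    """Hypothetical 1000-pixel 'scan': background 40..60 and lesion 140..160,
    both shifted by `offset` to mimic a brighter acquisition."""
    background = [40 + (i % 21) + offset for i in range(900)]
    lesion = [140 + (i % 21) + offset for i in range(100)]
    return background + lesion


def segment(pixels, t):
    """Binary mask: True where the pixel is at or above the threshold."""
    return [p >= t for p in pixels]


def foreground_fraction(mask):
    return sum(mask) / len(mask)


def volume_bytes(shape, bytes_per_voxel):
    """Raw storage for a dense voxel grid, ignoring headers and compression."""
    n = 1
    for s in shape:
        n *= s
    return n * bytes_per_voxel


if __name__ == "__main__":
    dark, bright = make_image(0), make_image(80)
    fixed_t = 100  # a single threshold tuned on the dark image
    print(foreground_fraction(segment(dark, fixed_t)))    # 0.1 (lesion only)
    print(foreground_fraction(segment(bright, fixed_t)))  # 1.0 (everything)
    print(otsu_threshold(dark), otsu_threshold(bright))   # 61 141
    print(foreground_fraction(segment(bright, otsu_threshold(bright))))  # 0.1
    # Hypothetical 512 x 512 x 300 volume stored as float32 (4 bytes/voxel).
    print(volume_bytes((512, 512, 300), 4) / 2**20, "MiB")  # 300.0 MiB
```

Otsu's method still assumes a roughly bimodal histogram, so it does not remove intensity inhomogeneity within a single image; it only shows why a threshold has to depend on the image. Likewise, the 300 MiB figure covers the raw volume alone, before any intermediate network activations are counted.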

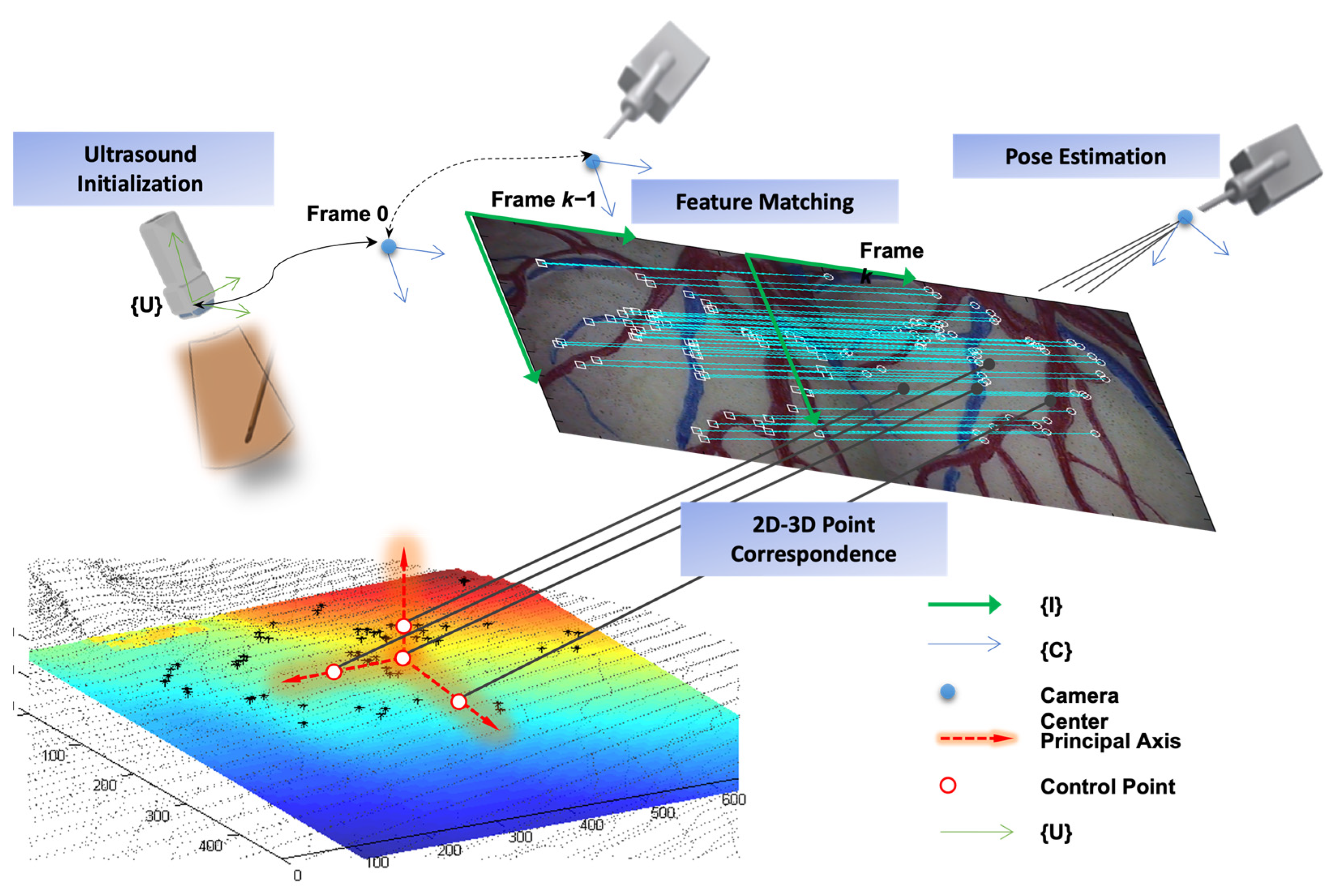

3.1.2. Object Tracking

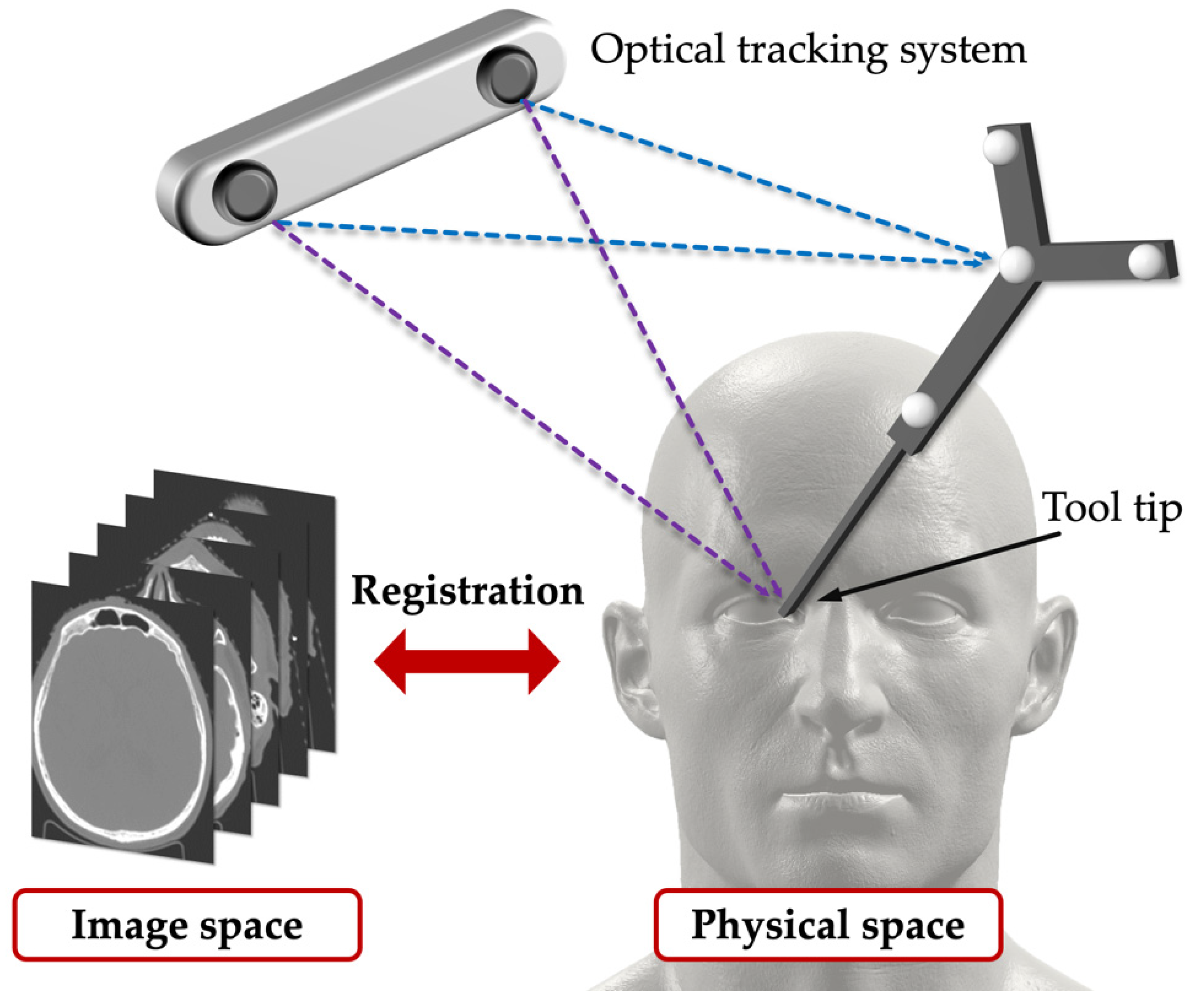

3.1.3. Registration and Fusion

3.1.4. Planning

3.2. Visualization

3.2.1. 3D Reconstruction and Rendering

3.2.2. User Interface and Medium of Visualization

3.2.3. Media of Visualization: VR/AR/MR

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wijsmuller, A.R.; Romagnolo, L.G.C.; Consten, E.; Melani, A.E.F.; Marescaux, J. Navigation and Image-Guided Surgery. In Digital Surgery; Atallah, S., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 137–144. ISBN 978-3-030-49100-0. [Google Scholar]

- ReportLinker Global Image-Guided Therapy Systems Market Size, Share & Industry Trends Analysis Report by Application, by End User, by Product, by Regional Outlook and Forecast, 2022–2028. Available online: https://www.reportlinker.com/p06315020/?utm_source=GNW (accessed on 24 May 2023).

- Wang, K.; Du, Y.; Zhang, Z.; He, K.; Cheng, Z.; Yin, L.; Dong, D.; Li, C.; Li, W.; Hu, Z.; et al. Fluorescence Image-Guided Tumour Surgery. Nat. Rev. Bioeng. 2023, 1, 161–179. [Google Scholar] [CrossRef]

- Monterubbianesi, R.; Tosco, V.; Vitiello, F.; Orilisi, G.; Fraccastoro, F.; Putignano, A.; Orsini, G. Augmented, Virtual and Mixed Reality in Dentistry: A Narrative Review on the Existing Platforms and Future Challenges. Appl. Sci. 2022, 12, 877. [Google Scholar] [CrossRef]

- Flavián, C.; Ibáñez-Sánchez, S.; Orús, C. The Impact of Virtual, Augmented and Mixed Reality Technologies on the Customer Experience. J. Bus. Res. 2019, 100, 547–560. [Google Scholar] [CrossRef]

- Kochanski, R.B.; Lombardi, J.M.; Laratta, J.L.; Lehman, R.A.; O’Toole, J.E. Image-Guided Navigation and Robotics in Spine Surgery. Neurosurgery 2019, 84, 1179–1189. [Google Scholar] [CrossRef] [PubMed]

- Eu, D.; Daly, M.J.; Irish, J.C. Imaging-Based Navigation Technologies in Head and Neck Surgery. Curr. Opin. Otolaryngol. Head Neck Surg. 2021, 29, 149–155. [Google Scholar] [CrossRef] [PubMed]

- DeLong, M.R.; Gandolfi, B.M.; Barr, M.L.; Datta, N.; Willson, T.D.; Jarrahy, R. Intraoperative Image-Guided Navigation in Craniofacial Surgery: Review and Grading of the Current Literature. J. Craniofac Surg. 2019, 30, 465–472. [Google Scholar] [CrossRef]

- Du, J.P.; Fan, Y.; Wu, Q.N.; Zhang, J.; Hao, D.J. Accuracy of Pedicle Screw Insertion among 3 Image-Guided Navigation Systems: Systematic Review and Meta-Analysis. World Neurosurg. 2018, 109, 24–30. [Google Scholar] [CrossRef]

- Mezger, U.; Jendrewski, C.; Bartels, M. Navigation in Surgery. Langenbeck’s Arch. Surg. 2013, 398, 501–514. [Google Scholar] [CrossRef]

- Preim, B.; Baer, A.; Cunningham, D.; Isenberg, T.; Ropinski, T. A Survey of Perceptually Motivated 3D Visualization of Medical Image Data. Comput. Graph. Forum 2016, 35, 501–525. [Google Scholar] [CrossRef]

- Zhou, L.; Fan, M.; Hansen, C.; Johnson, C.R.; Weiskopf, D. A Review of Three-Dimensional Medical Image Visualization. Health Data Sci. 2022, 2022, 9840519. [Google Scholar] [CrossRef]

- Srivastava, A.K.; Singhvi, S.; Qiu, L.; King, N.K.K.; Ren, H. Image Guided Navigation Utilizing Intra-Operative 3D Surface Scanning to Mitigate Morphological Deformation of Surface Anatomy. J. Med. Biol. Eng. 2019, 39, 932–943. [Google Scholar] [CrossRef]

- Shams, R.; Picot, F.; Grajales, D.; Sheehy, G.; Dallaire, F.; Birlea, M.; Saad, F.; Trudel, D.; Menard, C.; Leblond, F. Pre-Clinical Evaluation of an Image-Guided in-Situ Raman Spectroscopy Navigation System for Targeted Prostate Cancer Interventions. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 867–876. [Google Scholar] [CrossRef] [PubMed]

- Wang, T.; He, T.; Zhang, Z.; Chen, Q.; Zhang, L.; Xia, G.; Yang, L.; Wang, H.; Wong, S.T.C.; Li, H. A Personalized Image-Guided Intervention System for Peripheral Lung Cancer on Patient-Specific Respiratory Motion Model. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1751–1764. [Google Scholar] [CrossRef] [PubMed]

- Feng, Y.; Fan, J.C.; Tao, B.X.; Wang, S.G.; Mo, J.Q.; Wu, Y.Q.; Liang, Q.H.; Chen, X.J. An Image-Guided Hybrid Robot System for Dental Implant Surgery. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 15–26. [Google Scholar] [CrossRef] [PubMed]

- Rüger, C.; Feufel, M.A.; Moosburner, S.; Özbek, C.; Pratschke, J.; Sauer, I.M. Ultrasound in Augmented Reality: A Mixed-Methods Evaluation of Head-Mounted Displays in Image-Guided Interventions. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1895–1905. [Google Scholar] [CrossRef] [PubMed]

- Sugino, T.; Nakamura, R.; Kuboki, A.; Honda, O.; Yamamoto, M.; Ohtori, N. Comparative Analysis of Surgical Processes for Image-Guided Endoscopic Sinus Surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 93–104. [Google Scholar] [CrossRef] [PubMed]

- Chaplin, V.; Phipps, M.A.; Jonathan, S.V.; Grissom, W.A.; Yang, P.F.; Chen, L.M.; Caskey, C.F. On the Accuracy of Optically Tracked Transducers for Image-Guided Transcranial Ultrasound. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1317–1327. [Google Scholar] [CrossRef] [PubMed]

- Richey, W.L.; Heiselman, J.S.; Luo, M.; Meszoely, I.M.; Miga, M.I. Impact of Deformation on a Supine-Positioned Image-Guided Breast Surgery Approach. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 2055–2066. [Google Scholar] [CrossRef]

- Glossop, N.; Bale, R.; Xu, S.; Pritchard, W.F.; Karanian, J.W.; Wood, B.J. Patient-Specific Needle Guidance Templates Drilled Intraprocedurally for Image Guided Intervention: Feasibility Study in Swine. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 537–544. [Google Scholar] [CrossRef]

- Dong, Y.; Zhang, C.; Ji, D.; Wang, M.; Song, Z. Regional-Surface-Based Registration for Image-Guided Neurosurgery: Effects of Scan Modes on Registration Accuracy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1303–1315. [Google Scholar] [CrossRef]

- Shapey, J.; Dowrick, T.; Delaunay, R.; Mackle, E.C.; Thompson, S.; Janatka, M.; Guichard, R.; Georgoulas, A.; Pérez-Suárez, D.; Bradford, R.; et al. Integrated Multi-Modality Image-Guided Navigation for Neurosurgery: Open-Source Software Platform Using State-of-the-Art Clinical Hardware. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1347–1356. [Google Scholar] [CrossRef] [PubMed]

- Fauser, J.; Stenin, I.; Bauer, M.; Hsu, W.H.; Kristin, J.; Klenzner, T.; Schipper, J.; Mukhopadhyay, A. Toward an Automatic Preoperative Pipeline for Image-Guided Temporal Bone Surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 967–976. [Google Scholar] [CrossRef] [PubMed]

- Romaguera, L.V.; Mezheritsky, T.; Mansour, R.; Tanguay, W.; Kadoury, S. Predictive Online 3D Target Tracking with Population-Based Generative Networks for Image-Guided Radiotherapy. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1213–1225. [Google Scholar] [CrossRef] [PubMed]

- Ruckli, A.C.; Schmaranzer, F.; Meier, M.K.; Lerch, T.D.; Steppacher, S.D.; Tannast, M.; Zeng, G.; Burger, J.; Siebenrock, K.A.; Gerber, N.; et al. Automated Quantification of Cartilage Quality for Hip Treatment Decision Support. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 2011–2021. [Google Scholar] [CrossRef] [PubMed]

- Teatini, A.; Kumar, R.P.; Elle, O.J.; Wiig, O. Mixed Reality as a Novel Tool for Diagnostic and Surgical Navigation in Orthopaedics. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 407–414. [Google Scholar] [CrossRef] [PubMed]

- Léger, É.; Reyes, J.; Drouin, S.; Popa, T.; Hall, J.A.; Collins, D.L.; Kersten-Oertel, M. MARIN: An Open-Source Mobile Augmented Reality Interactive Neuronavigation System. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1013–1021. [Google Scholar] [CrossRef]

- Sun, Q.; Mai, Y.; Yang, R.; Ji, T.; Jiang, X.; Chen, X. Fast and Accurate Online Calibration of Optical See-through Head-Mounted Display for AR-Based Surgical Navigation Using Microsoft HoloLens. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1907–1919. [Google Scholar] [CrossRef]

- Ma, C.; Cui, X.; Chen, F.; Ma, L.; Xin, S.; Liao, H. Knee Arthroscopic Navigation Using Virtual-Vision Rendering and Self-Positioning Technology. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 467–477. [Google Scholar] [CrossRef]

- Shao, L.; Fu, T.; Zheng, Z.; Zhao, Z.; Ding, L.; Fan, J.; Song, H.; Zhang, T.; Yang, J. Augmented Reality Navigation with Real-Time Tracking for Facial Repair Surgery. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 981–991. [Google Scholar] [CrossRef]

- Mellado, N.; Aiger, D.; Mitra, N.J. Super 4PCS Fast Global Pointcloud Registration via Smart Indexing. Computer graphics forum 2014, 33, 205–215. [Google Scholar] [CrossRef]

- Ma, L.; Liang, H.; Han, B.; Yang, S.; Zhang, X.; Liao, H. Augmented Reality Navigation with Ultrasound-Assisted Point Cloud Registration for Percutaneous Ablation of Liver Tumors. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1543–1552. [Google Scholar] [CrossRef] [PubMed]

- Ter Braak, T.P.; Brouwer de Koning, S.G.; van Alphen, M.J.A.; van der Heijden, F.; Schreuder, W.H.; van Veen, R.L.P.; Karakullukcu, M.B. A Surgical Navigated Cutting Guide for Mandibular Osteotomies: Accuracy and Reproducibility of an Image-Guided Mandibular Osteotomy. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1719–1725. [Google Scholar] [CrossRef] [PubMed]

- Kokko, M.A.; Van Citters, D.W.; Seigne, J.D.; Halter, R.J. A Particle Filter Approach to Dynamic Kidney Pose Estimation in Robotic Surgical Exposure. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1079–1089. [Google Scholar] [CrossRef] [PubMed]

- Peoples, J.J.; Bisleri, G.; Ellis, R.E. Deformable Multimodal Registration for Navigation in Beating-Heart Cardiac Surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 955–966. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.; Hayashi, Y.; Oda, M.; Kitasaka, T.; Takabatake, H.; Mori, M.; Honma, H.; Natori, H.; Mori, K. Depth-Based Branching Level Estimation for Bronchoscopic Navigation. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1795–1804. [Google Scholar] [CrossRef] [PubMed]

- Oda, M.; Tanaka, K.; Takabatake, H.; Mori, M.; Natori, H.; Mori, K. Realistic Endoscopic Image Generation Method Using Virtual-to-Real Image-Domain Translation. Healthc. Technol. Lett. 2019, 6, 214–219. [Google Scholar] [CrossRef] [PubMed]

- Hammami, H.; Lalys, F.; Rolland, Y.; Petit, A.; Haigron, P. Catheter Navigation Support for Liver Radioembolization Guidance: Feasibility of Structure-Driven Intensity-Based Registration. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1881–1894. [Google Scholar] [CrossRef]

- Tibamoso-Pedraza, G.; Amouri, S.; Molina, V.; Navarro, I.; Raboisson, M.J.; Miró, J.; Lapierre, C.; Ratté, S.; Duong, L. Navigation Guidance for Ventricular Septal Defect Closure in Heart Phantoms. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1947–1956. [Google Scholar] [CrossRef]

- Chan, A.; Parent, E.; Mahood, J.; Lou, E. 3D Ultrasound Navigation System for Screw Insertion in Posterior Spine Surgery: A Phantom Study. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 271–281. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, J.; Wang, T.; Ji, X.; Shen, Y.; Sun, Z.; Zhang, X. A Markerless Automatic Deformable Registration Framework for Augmented Reality Navigation of Laparoscopy Partial Nephrectomy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1285–1294. [Google Scholar] [CrossRef]

- Wang, C.; Oda, M.; Hayashi, Y.; Villard, B.; Kitasaka, T.; Takabatake, H.; Mori, M.; Honma, H.; Natori, H.; Mori, K. A Visual SLAM-Based Bronchoscope Tracking Scheme for Bronchoscopic Navigation. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1619–1630. [Google Scholar] [CrossRef] [PubMed]

- Lee, L.K.; Liew, S.C.; Thong, W.J. A Review of Image Segmentation Methodologies in Medical Image. In Advanced Computer and Communication Engineering Technology: Proceedings of the 1st International Conference on Communication and Computer Engineering; Springer: Cham, Switzerland, 2015; pp. 1069–1080. [Google Scholar]

- Sharma, N.; Aggarwal, L. Automated Medical Image Segmentation Techniques. J. Med. Phys. 2010, 35, 3–14. [Google Scholar] [CrossRef] [PubMed]

- Dobbe, J.G.G.; Peymani, A.; Roos, H.A.L.; Beerens, M.; Streekstra, G.J.; Strackee, S.D. Patient-Specific Plate for Navigation and Fixation of the Distal Radius: A Case Series. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 515–524. [Google Scholar] [CrossRef] [PubMed]

- Chitsaz, M.; Seng, W.C. A Multi-Agent System Approach for Medical Image Segmentation. In Proceedings of the 2009 International Conference on Future Computer and Communication, Kuala Lumpur, Malaysia, 3–5 April 2009; pp. 408–411. [Google Scholar]

- Bennai, M.T.; Guessoum, Z.; Mazouzi, S.; Cormier, S.; Mezghiche, M. A Stochastic Multi-Agent Approach for Medical-Image Segmentation: Application to Tumor Segmentation in Brain MR Images. Artif. Intell. Med. 2020, 110, 101980. [Google Scholar] [CrossRef] [PubMed]

- Moussa, R.; Beurton-Aimar, M.; Desbarats, P. Multi-Agent Segmentation for 3D Medical Images. In Proceedings of the 2009 9th International Conference on Information Technology and Applications in Biomedicine, Larnaka, Cyprus, 4–7 November 2009; pp. 1–5. [Google Scholar]

- Nachour, A.; Ouzizi, L.; Aoura, Y. Multi-Agent Segmentation Using Region Growing and Contour Detection: Syntetic Evaluation in MR Images with 3D CAD Reconstruction. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2016, 8, 115–124. [Google Scholar]

- Bennai, M.T.; Guessoum, Z.; Mazouzi, S.; Cormier, S.; Mezghiche, M. Towards a Generic Multi-Agent Approach for Medical Image Segmentation. In Proceedings of the PRIMA 2017: Principles and Practice of Multi-Agent Systems, Nice, France, 30 October–3 November 2017; An, B., Bazzan, A., Leite, J., Villata, S., van der Torre, L., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 198–211. [Google Scholar]

- Nachour, A.; Ouzizi, L.; Aoura, Y. Fuzzy Logic and Multi-Agent for Active Contour Models. In Proceedings of the Third International Afro-European Conference for Industrial Advancement—AECIA 2016, Marrakech, Morocco, 21–23 November 2016; Abraham, A., Haqiq, A., Ella Hassanien, A., Snasel, V., Alimi, A.M., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 229–237. [Google Scholar]

- Benchara, F.Z.; Youssfi, M.; Bouattane, O.; Ouajji, H.; Bensalah, M.O. A New Distributed Computing Environment Based on Mobile Agents for SPMD Applications. In Proceedings of the Mediterranean Conference on Information & Communication Technologies 2015, Saidia, Morocco, 7–9 May 2015; El Oualkadi, A., Choubani, F., El Moussati, A., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 353–362. [Google Scholar]

- Allioui, H.; Sadgal, M.; Elfazziki, A. Intelligent Environment for Advanced Brain Imaging: Multi-Agent System for an Automated Alzheimer Diagnosis. Evol. Intell. 2021, 14, 1523–1538. [Google Scholar] [CrossRef]

- Liao, X.; Li, W.; Xu, Q.; Wang, X.; Jin, B.; Zhang, X.; Wang, Y.; Zhang, Y. Iteratively-Refined Interactive 3D Medical Image Segmentation With Multi-Agent Reinforcement Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9394–9402. [Google Scholar]

- Allioui, H.; Mohammed, M.A.; Benameur, N.; Al-Khateeb, B.; Abdulkareem, K.H.; Garcia-Zapirain, B.; Damaševičius, R.; Maskeliūnas, R. A Multi-Agent Deep Reinforcement Learning Approach for Enhancement of COVID-19 CT Image Segmentation. J. Pers. Med. 2022, 12, 309. [Google Scholar] [CrossRef]

- Du, G.; Cao, X.; Liang, J.; Chen, X.; Zhan, Y. Medical Image Segmentation Based on U-Net: A Review. J. Imaging Sci. Technol. 2020, 64, 020508-1. [Google Scholar] [CrossRef]

- Huang, R.; Lin, M.; Dou, H.; Lin, Z.; Ying, Q.; Jia, X.; Xu, W.; Mei, Z.; Yang, X.; Dong, Y. Boundary-Rendering Network for Breast Lesion Segmentation in Ultrasound Images. Med. Image Anal. 2022, 80, 102478. [Google Scholar] [CrossRef]

- Silva-Rodríguez, J.; Naranjo, V.; Dolz, J. Constrained Unsupervised Anomaly Segmentation. Med. Image Anal. 2022, 80, 102526. [Google Scholar] [CrossRef]

- Pace, D.F.; Dalca, A.V.; Brosch, T.; Geva, T.; Powell, A.J.; Weese, J.; Moghari, M.H.; Golland, P. Learned Iterative Segmentation of Highly Variable Anatomy from Limited Data: Applications to Whole Heart Segmentation for Congenital Heart Disease. Med. Image Anal. 2022, 80, 102469. [Google Scholar] [CrossRef] [PubMed]

- Ding, Y.; Member, I.; Yang, Q.; Wang, Y.; Chen, D.; Qin, Z.; Zhang, J. MallesNet: A Multi-Object Assistance Based Network for Brachial Plexus Segmentation in Ultrasound Images. Med. Image Anal. 2022, 80, 102511. [Google Scholar] [CrossRef] [PubMed]

- Han, C.; Lin, J.; Mai, J.; Wang, Y.; Zhang, Q.; Zhao, B.; Chen, X.; Pan, X.; Shi, Z.; Xu, Z. Multi-Layer Pseudo-Supervision for Histopathology Tissue Semantic Segmentation Using Patch-Level Classification Labels. Med. Image Anal. 2022, 80, 102487. [Google Scholar] [CrossRef] [PubMed]

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net Architecture for Multimodal Biomedical Image Segmentation. Neural networks 2020, 121, 74. [Google Scholar] [CrossRef] [PubMed]

- Punn, N.S.; Agarwal, S. Modality Specific U-Net Variants for Biomedical Image Segmentation: A Survey. Artif. Intell. Rev. 2022, 55, 5845–5889. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Song, L.; Liu, S.; Zhang, Y. A Review of Deep-Learning-Based Medical Image Segmentation Methods. Sustainability 2021, 13, 1224. [Google Scholar] [CrossRef]

- Gibson, E.; Li, W.; Sudre, C.; Fidon, L.; Shakir, D.I.; Wang, G.; Eaton-Rosen, Z.; Gray, R.; Doel, T.; Hu, Y. NiftyNet: A Deep-Learning Platform for Medical Imaging. Comput. Methods Programs Biomed. 2018, 158, 113–122. [Google Scholar] [CrossRef]

- Müller, D.; Kramer, F. MIScnn: A Framework for Medical Image Segmentation with Convolutional Neural Networks and Deep Learning. BMC Med. Imaging 2021, 21, 1–11. [Google Scholar] [CrossRef]

- de Geer, A.F.; van Alphen, M.J.A.; Zuur, C.L.; Loeve, A.J.; van Veen, R.L.P.; Karakullukcu, M.B. A Hybrid Registration Method Using the Mandibular Bone Surface for Electromagnetic Navigation in Mandibular Surgery. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1343–1353. [Google Scholar] [CrossRef]

- Strzeletz, S.; Hazubski, S.; Moctezuma, J.L.; Hoppe, H. Fast, Robust, and Accurate Monocular Peer-to-Peer Tracking for Surgical Navigation. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 479–489. [Google Scholar] [CrossRef]

- Smit, J.N.; Kuhlmann, K.F.D.; Ivashchenko, O.V.; Thomson, B.R.; Langø, T.; Kok, N.F.M.; Fusaglia, M.; Ruers, T.J.M. Ultrasound-Based Navigation for Open Liver Surgery Using Active Liver Tracking. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1765–1773. [Google Scholar] [CrossRef] [PubMed]

- Ivashchenko, O.V.; Kuhlmann, K.F.D.; van Veen, R.; Pouw, B.; Kok, N.F.M.; Hoetjes, N.J.; Smit, J.N.; Klompenhouwer, E.G.; Nijkamp, J.; Ruers, T.J.M. CBCT-Based Navigation System for Open Liver Surgery: Accurate Guidance toward Mobile and Deformable Targets with a Semi-Rigid Organ Approximation and Electromagnetic Tracking of the Liver. Med. Phys. 2021, 48, 2145–2159. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Hu, C.; He, Z.; Fu, Z.; Xu, L.; Ding, G.; Wang, P.; Zhang, H.; Ye, X. Shape Estimation of the Anterior Part of a Flexible Ureteroscope for Intraoperative Navigation. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1787–1799. [Google Scholar] [CrossRef] [PubMed]

- Attivissimo, F.; Lanzolla, A.M.L.; Carlone, S.; Larizza, P.; Brunetti, G. A Novel Electromagnetic Tracking System for Surgery Navigation. Comput. Assist. Surg. 2018, 23, 42–52. [Google Scholar] [CrossRef] [PubMed]

- Yilmaz, A.; Javed, O.; Shah, M. Object Tracking: A Survey. ACM Comput. Surv. 2006, 38, 13-es. [Google Scholar] [CrossRef]

- Luo, W.; Xing, J.; Anton, M.; Zhang, X.; Liu, W.; Kim, T.-K. Multiple Object. Tracking: A Literature Review. Artif. Intell. 2021, 293, 103448. [Google Scholar] [CrossRef]

- Ciaparrone, G.; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88. [Google Scholar] [CrossRef]

- Li, X.; Hu, W.; Shen, C.; Zhang, Z.; Dick, A.; Hengel, A.V.D. A Survey of Appearance Models in Visual Object Tracking. ACM Trans. Intell. Syst. Technol. 2013, 4, 1–48. [Google Scholar] [CrossRef]

- Soleimanitaleb, Z.; Keyvanrad, M.A.; Jafari, A. Object Tracking Methods: A Review. In Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 24–25 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 282–288. [Google Scholar]

- Zhang, Y.; Wang, T.; Liu, K.; Zhang, B.; Chen, L. Recent Advances of Single-Object Tracking Methods: A Brief Survey. Neurocomputing 2021, 455, 1–11. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, Q.; Liu, Z.; Gu, L. Visual Detection and Tracking Algorithms for Minimally Invasive Surgical Instruments: A Comprehensive Review of the State-of-the-Art. Rob. Auton. Syst. 2022, 149, 103945. [Google Scholar] [CrossRef]

- Bouget, D.; Allan, M.; Stoyanov, D.; Jannin, P. Vision-Based and Marker-Less Surgical Tool Detection and Tracking: A Review of the Literature. Med. Image Anal. 2017, 35, 633–654. [Google Scholar] [CrossRef] [PubMed]

- Yang, L.; Etsuko, K. Review on vision-based tracking in surgical navigation. IET Cyber-Syst. Robot. 2020, 2, 107–121. [Google Scholar] [CrossRef]

- Teske, H.; Mercea, P.; Schwarz, M.; Nicolay, N.H.; Sterzing, F.; Bendl, R. Real-time markerless lung tumor tracking in fluoroscopic video: Handling overlapping of projected structures. Med Phys. 2015, 42, 2540–2549. [Google Scholar] [CrossRef] [PubMed]

- Hirai, R.; Sakata, Y.; Tanizawa, A.; Mori, S. Real-time tumor tracking using fluoroscopic imaging with deep neural network analysis. Phys. Medica 2019, 59, 22–29. [Google Scholar] [CrossRef] [PubMed]

- De Luca, V.; Banerjee, J.; Hallack, A.; Kondo, S.; Makhinya, M.; Nouri, D.; Royer, L.; Cifor, A.; Dardenne, G.; Goksel, O.; et al. Evaluation of 2D and 3D ultrasound tracking algorithms and impact on ultrasound-guided liver radiotherapy margins. Med Phys. 2018, 45, 4986–5003. [Google Scholar] [CrossRef] [PubMed]

- Konh, B.; Padasdao, B.; Batsaikhan, Z.; Ko, S.Y. Integrating robot-assisted ultrasound tracking and 3D needle shape prediction for real-time tracking of the needle tip in needle steering procedures. Int. J. Med Robot. Comput. Assist. Surg. 2021, 17, e2272. [Google Scholar] [CrossRef] [PubMed]

- Yang, L.; Wang, J.; Ando, T.; Kubota, A.; Yamashita, H.; Sakuma, I.; Chiba, T.; Kobayashi, E. Vision-based endoscope tracking for 3D ultrasound image-guided surgical navigation. Comput. Med. Imaging Graph. 2015, 40, 205–216. [Google Scholar] [CrossRef]

- Yang, L.; Wang, J.; Ando, T.; Kubota, A.; Yamashita, H.; Sakuma, I.; Chiba, T.; Kobayashi, E. Self-contained image mapping of placental vasculature in 3D ultrasound-guided fetoscopy. Surg. Endosc. 2016, 90, 4136–4149. [Google Scholar] [CrossRef]

- Chen, Z.; Zhao, Z.; Cheng, X. Surgical Instruments Tracking Based on Deep Learning with Lines Detection and Spatio-Temporal Context. In Proceedings of the 2017 Chinese Automation Congress, CAC 2017, Jinan, China, 20–22 October 2017; pp. 2711–2714. [Google Scholar] [CrossRef]

- Choi, B.; Jo, K.; Choi, S.; Choi, J. Surgical-Tools Detection Based on Convolutional Neural Network in Laparoscopic Robot-Assisted Surgery. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Jeju Island, Republic of Korea, 11–15 July 2017; pp. 1756–1759. [Google Scholar] [CrossRef]

- Li, Y.; Richter, F.; Lu, J.; Funk, E.K.; Orosco, R.K.; Zhu, J.; Yip, M.C. Super: A Surgical Perception Framework for Endoscopic Tissue Manipulation with Surgical Robotics. IEEE Robot. Autom. Lett. 2020, 5, 2294–2301. [Google Scholar] [CrossRef]

- Haskins, G.; Kruger, U.; Yan, P. Deep Learning in Medical Image Registration: A Survey. Mach. Vis. Appl. 2020, 31, 8. [Google Scholar] [CrossRef]

- Fu, Y.; Lei, Y.; Wang, T.; Curran, W.J.; Liu, T.; Yang, X. Deep Learning in Medical Image Registration: A Review. Phys. Med. Biol. 2020, 65, 20TR01. [Google Scholar] [CrossRef] [PubMed]

- Balakrishnan, G.; Zhao, A.; Sabuncu, M.R.; Guttag, J.; Dalca, A.V. VoxelMorph: A Learning Framework for Deformable Medical Image Registration. IEEE Trans. Med. Imaging 2019, 38, 1788–1800. [Google Scholar] [CrossRef] [PubMed]

- de Vos, B.D.; Berendsen, F.F.; Viergever, M.A.; Sokooti, H.; Staring, M.; Išgum, I. A Deep Learning Framework for Unsupervised Affine and Deformable Image Registration. Med. Image Anal. 2019, 52, 128–143. [Google Scholar] [CrossRef] [PubMed]

- Chen, C.; Li, Y.; Liu, W.; Huang, J. SIRF: Simultaneous Satellite Image Registration and Fusion in a Unified Framework. IEEE Trans. Image Process. 2015, 24, 4213–4224. [Google Scholar] [CrossRef] [PubMed]

- Mankovich, N.J.; Samson, D.; Pratt, W.; Lew, D.; Beumer, J., III. Surgical Planning Using Three-Dimensional Imaging and Computer Modeling. Otolaryngol. Clin. N. Am. 1994, 27, 875. [Google Scholar] [CrossRef]

- Selle, D.; Preim, B.; Schenk, A.; Peitgen, H.-. Analysis of Vasculature for Liver Surgical Planning. IEEE Trans. Med. Imaging 2002, 21, 1344–1357. [Google Scholar] [CrossRef] [PubMed]

- Byrd, H.S.; Hobar, P.C. Rhinoplasty: A Practical Guide for Surgical Planning. Plast. Reconstr. Surg. (1963) 1993, 91, 642–654. [Google Scholar] [CrossRef]

- Han, R.; Uneri, A.; De Silva, T.; Ketcha, M.; Goerres, J.; Vogt, S.; Kleinszig, G.; Osgood, G.; Siewerdsen, J.H. Atlas-Based Automatic Planning and 3D–2D Fluoroscopic Guidance in Pelvic Trauma Surgery. Phys. Med. Biol. 2019, 64, 095022. [Google Scholar] [CrossRef]

- Li, H.; Xu, J.; Zhang, D.; He, Y.; Chen, X. Automatic Surgical Planning Based on Bone Density Assessment and Path Integral in Cone Space for Reverse Shoulder Arthroplasty. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1017–1027. [Google Scholar] [CrossRef]

- Sternheim, A.; Rotman, D.; Nayak, P.; Arkhangorodsky, M.; Daly, M.J.; Irish, J.C.; Ferguson, P.C.; Wunder, J.S. Computer-Assisted Surgical Planning of Complex Bone Tumor Resections Improves Negative Margin Outcomes in a Sawbones Model. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 695–701. [Google Scholar] [CrossRef]

- Hammoudeh, J.A.; Howell, L.K.; Boutros, S.; Scott, M.A.; Urata, M.M. Current Status of Surgical Planning for Orthognathic Surgery: Traditional Methods versus 3D Surgical Planning. Plast. Reconstr. Surg. Glob. Open 2015, 3, e307. [Google Scholar] [CrossRef] [PubMed]

- Chim, H.; Wetjen, N.; Mardini, S. Virtual Surgical Planning in Craniofacial Surgery. Semin. Plast. Surg. 2014, 28, 150–158. [Google Scholar] [CrossRef] [PubMed]

- Regodić, M.; Bárdosi, Z.; Diakov, G.; Galijašević, M.; Freyschlag, C.F.; Freysinger, W. Visual Display for Surgical Targeting: Concepts and Usability Study. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1565–1576. [Google Scholar] [CrossRef] [PubMed]

- Mazzola, F.; Smithers, F.; Cheng, K.; Mukherjee, P.; Low, T.-H.H.; Ch’ng, S.; Palme, C.E.; Clark, J.R. Time and Cost-Analysis of Virtual Surgical Planning for Head and Neck Reconstruction: A Matched Pair Analysis. Oral. Oncol. 2020, 100, 104491. [Google Scholar] [CrossRef] [PubMed]

- Tang, X. The role of artificial intelligence in medical imaging research. BJR|Open 2020, 2, 20190031. [Google Scholar] [CrossRef] [PubMed]

- Wagner, J.B. Artificial Intelligence in Medical Imaging. Radiol. Technol. 2019, 90, 489–501. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Cao, G.; Wang, Y.; Liao, S.; Wang, Q.; Shi, J.; Li, C.; Shen, D. Review and Prospect: Artificial Intelligence in Advanced Medical Imaging. Front. Radiol. 2021, 1, 781868. [Google Scholar] [CrossRef]

- Wolterink, J.M.; Leiner, T.; Viergever, M.A.; Išgum, I. Generative Adversarial Networks for Noise Reduction in Low-Dose CT. IEEE Trans. Med. Imaging 2017, 36, 2536–2545. [Google Scholar] [CrossRef]

- Chen, H.; Zhang, Y.; Kalra, M.K.; Lin, F.; Chen, Y.; Liao, P.; Zhou, J.; Wang, G. Low-Dose CT with a Residual Encoder-Decoder Convolutional Neural Network. IEEE Trans. Med. Imaging 2017, 36, 2524–2535. [Google Scholar] [CrossRef]

- Lu, S.; Yang, B.; Xiao, Y.; Liu, S.; Liu, M.; Yin, L.; Zheng, W. Iterative Reconstruction of Low-Dose CT Based on Differential Sparse. Biomed. Signal Process. Control. 2023, 79, 104204. [Google Scholar] [CrossRef]

- Wang, J.; Tang, Y.; Wu, Z.; Tsui, B.M.W.; Chen, W.; Yang, X.; Zheng, J.; Li, M. Domain-Adaptive Denoising Network for Low-Dose CT via Noise Estimation and Transfer Learning. Med Phys. 2023, 50, 74–88. [Google Scholar] [CrossRef] [PubMed]

- Jiang, M.; Zhi, M.; Wei, L.; Yang, X.; Zhang, J.; Li, Y.; Wang, P.; Huang, J.; Yang, G. FA-GAN: Fused Attentive Generative Adversarial Networks for MRI Image Super-Resolution. Comput. Med Imaging Graph. 2021, 92, 101969. [Google Scholar] [CrossRef] [PubMed]

- Guo, P.; Wang, P.; Zhou, J.; Jiang, S.; Patel, V.M. Multi-Institutional Collaborations for Improving Deep Learning-Based Magnetic Resonance Image Reconstruction Using Federated Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2423–2432. [Google Scholar]

- Dhengre, N.; Sinha, S. Multiscale U-Net-Based Accelerated Magnetic Resonance Imaging Reconstruction. Signal, Image Video Process. 2022, 16, 881–888. [Google Scholar] [CrossRef]

- Maken, P.; Gupta, A. 2D-to-3D: A Review for Computational 3D Image Reconstruction from X-Ray Images. Arch. Comput. Methods Eng. 2023, 30, 85–114. [Google Scholar] [CrossRef]

- Gobbi, D.G.; Peters, T.M. Interactive intra-operative 3D ultrasound reconstruction and visualization. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Tokyo, Japan, 25–28 September 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 156–163. [Google Scholar]

- Solberg, O.V.; Lindseth, F.; Torp, H.; Blake, R.E.; Hernes, T.A.N. Freehand 3D Ultrasound Reconstruction Algorithms—A Review. Ultrasound Med. Biol. 2007, 33, 991–1009. [Google Scholar] [CrossRef]

- Yang, L.; Wang, J.; Kobayashi, E.; Ando, T.; Yamashita, H.; Sakuma, I.; Chiba, T. Image mapping of untracked free-hand endoscopic views to an ultrasound image-constructed 3D placenta model. Int. J. Med. Robot. Comput. Assist. Surg. 2015, 11, 223–234.

- Chen, X.; Chen, H.; Peng, Y.; Liu, L.; Huang, C. A Freehand 3D Ultrasound Reconstruction Method Based on Deep Learning. Electronics 2023, 12, 1527.

- Luo, M.; Yang, X.; Wang, H.; Dou, H.; Hu, X.; Huang, Y.; Ravikumar, N.; Xu, S.; Zhang, Y.; Xiong, Y.; et al. RecON: Online learning for sensorless freehand 3D ultrasound reconstruction. Med. Image Anal. 2023, 87, 102810.

- Lin, B.; Sun, Y.; Qian, X.; Goldgof, D.; Gitlin, R.; You, Y. Video-based 3D reconstruction, laparoscope localization and deformation recovery for abdominal minimally invasive surgery: A survey. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 158–178.

- Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S.; et al. Optical techniques for 3D surface reconstruction in computer-assisted laparoscopic surgery. Med. Image Anal. 2013, 17, 974–996.

- Mahmoud, N.; Cirauqui, I.; Hostettler, A.; Doignon, C.; Soler, L.; Marescaux, J.; Montiel, J.M.M. ORBSLAM-based endoscope tracking and 3D reconstruction. In Proceedings of the Computer-Assisted and Robotic Endoscopy: Third International Workshop, CARE 2016, Held in Conjunction with MICCAI 2016, Athens, Greece, 17 October 2016; Revised Selected Papers 3. Springer International Publishing: Cham, Switzerland, 2017; pp. 72–83.

- Grasa, O.G.; Civera, J.; Guemes, A.; Munoz, V.; Montiel, J.M.M. EKF monocular SLAM 3D modeling, measuring and augmented reality from endoscope image sequences. In Proceedings of the 5th Workshop on Augmented Environments for Medical Imaging including Augmented Reality in Computer-Aided Surgery (AMI-ARCS), London, UK, 24 September 2009; Volume 2, pp. 102–109.

- Widya, A.R.; Monno, Y.; Okutomi, M.; Suzuki, S.; Gotoda, T.; Miki, K. Whole Stomach 3D Reconstruction and Frame Localization from Monocular Endoscope Video. IEEE J. Transl. Eng. Health Med. 2019, 7, 1–10.

- Chen, L.; Tang, W.; John, N.W.; Wan, T.R.; Zhang, J.J. SLAM-based dense surface reconstruction in monocular Minimally Invasive Surgery and its application to Augmented Reality. Comput. Methods Programs Biomed. 2018, 158, 135–146.

- Hayashibe, M.; Suzuki, N.; Nakamura, Y. Laser-scan endoscope system for intraoperative geometry acquisition and surgical robot safety management. Med. Image Anal. 2006, 10, 509–519.

- Sui, C.; Wu, J.; Wang, Z.; Ma, G.; Liu, Y.-H. A Real-Time 3D Laparoscopic Imaging System: Design, Method, and Validation. IEEE Trans. Biomed. Eng. 2020, 67, 2683–2695.

- Ciuti, G.; Visentini-Scarzanella, M.; Dore, A.; Menciassi, A.; Dario, P.; Yang, G.-Z. Intra-operative monocular 3D reconstruction for image-guided navigation in active locomotion capsule endoscopy. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 768–774.

- Fan, Y.; Meng, M.Q.-H.; Li, B. 3D reconstruction of wireless capsule endoscopy images. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 5149–5152.

- Yang, L.; Wang, J.; Kobayashi, E.; Liao, H.; Sakuma, I.; Yamashita, H.; Chiba, T. Ultrasound image-guided mapping of endoscopic views on a 3D placenta model: A tracker-less approach. In Proceedings of the Augmented Reality Environments for Medical Imaging and Computer-Assisted Interventions: 6th International Workshop, MIAR 2013 and 8th International Workshop, AE-CAI 2013, Nagoya, Japan, 22 September 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 107–116.

- Yang, L. Development of a Self-Contained Image Mapping Framework for Ultrasound-Guided Fetoscopic Procedures via Three-Dimensional Dynamic View Expansion. Ph.D. Thesis, The University of Tokyo, Tokyo, Japan, 2014.

- Fan, Z.; Ma, L.; Liao, Z.; Zhang, X.; Liao, H. Three-Dimensional Image-Guided Techniques for Minimally Invasive Surgery. In Handbook of Robotic and Image-Guided Surgery; Elsevier: Amsterdam, The Netherlands, 2020; pp. 575–584.

- Nishino, H.; Hatano, E.; Seo, S.; Nitta, T.; Saito, T.; Nakamura, M.; Hattori, K.; Takatani, M.; Fuji, H.; Taura, K.; et al. Real-Time Navigation for Liver Surgery Using Projection Mapping with Indocyanine Green Fluorescence: Development of the Novel Medical Imaging Projection System. Ann. Surg. 2018, 267, 1134–1140.

- Deng, H.; Wang, Q.H.; Xiong, Z.L.; Zhang, H.L.; Xing, Y. Magnified Augmented Reality 3D Display Based on Integral Imaging. Optik 2016, 127, 4250–4253.

- He, C.; Liu, Y.; Wang, Y. Sensor-Fusion Based Augmented-Reality Surgical Navigation System. In Proceedings of the IEEE Instrumentation and Measurement Technology Conference, Taipei, Taiwan, 23–26 May 2016.

- Suenaga, H.; Tran, H.H.; Liao, H.; Masamune, K.; Dohi, T.; Hoshi, K.; Takato, T. Vision-Based Markerless Registration Using Stereo Vision and an Augmented Reality Surgical Navigation System: A Pilot Study. BMC Med. Imaging 2015, 15, 1–11.

- Zhang, X.; Chen, G.; Liao, H. High-Quality See-through Surgical Guidance System Using Enhanced 3-D Autostereoscopic Augmented Reality. IEEE Trans. Biomed. Eng. 2017, 64, 1815–1825.

- Zhang, X.; Chen, G.; Liao, H. A High-Accuracy Surgical Augmented Reality System Using Enhanced Integral Videography Image Overlay. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS 2015, Milan, Italy, 25–29 August 2015; pp. 4210–4213.

- Gavaghan, K.A.; Peterhans, M.; Oliveira-Santos, T.; Weber, S. A Portable Image Overlay Projection Device for Computer-Aided Open Liver Surgery. IEEE Trans. Biomed. Eng. 2011, 58, 1855–1864.

- Wen, R.; Chui, C.K.; Ong, S.H.; Lim, K.B.; Chang, S.K.Y. Projection-Based Visual Guidance for Robot-Aided RF Needle Insertion. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 1015–1025.

- Yu, J.; Wang, T.; Zong, Z.; Yang, L. Immersive Human-Robot Interaction for Dexterous Manipulation in Minimally Invasive Procedures. In Proceedings of the 4th WRC Symposium on Advanced Robotics and Automation 2022, WRC SARA 2022, Beijing, China, 20 August 2022.

- Nishihori, M.; Izumi, T.; Nagano, Y.; Sato, M.; Tsukada, T.; Kropp, A.E.; Wakabayashi, T. Development and Clinical Evaluation of a Contactless Operating Interface for Three-Dimensional Image-Guided Navigation for Endovascular Neurosurgery. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 663–671.

- Chen, L.; Day, T.W.; Tang, W.; John, N.W. Recent Developments and Future Challenges in Medical Mixed Reality. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2017, Nantes, France, 9–13 October 2017.

- Hu, H.; Feng, X.; Shao, Z.; Xie, M.; Xu, S.; Wu, X.; Ye, Z. Application and Prospect of Mixed Reality Technology in Medical Field. Curr. Med. Sci. 2019, 39, 1–6.

- Yamaguchi, S.; Ohtani, T.; Yatani, H.; Sohmura, T. Augmented Reality System for Dental Implant Surgery. In Virtual and Mixed Reality; Springer: Berlin/Heidelberg, Germany, 2009; pp. 633–638. ISSN 0302-9743.

- Burström, G.; Nachabe, R.; Persson, O.; Edström, E.; Elmi Terander, A. Augmented and Virtual Reality Instrument Tracking for Minimally Invasive Spine Surgery: A Feasibility and Accuracy Study. Spine 2019, 44, 1097–1104.

- Schijven, M.; Jakimowicz, J. Virtual Reality Surgical Laparoscopic Simulators: How to Choose. Surg. Endosc. 2003, 17, 1943–1950.

- Khalifa, Y.M.; Bogorad, D.; Gibson, V.; Peifer, J.; Nussbaum, J. Virtual Reality in Ophthalmology Training. Surv. Ophthalmol. 2006, 51, 259.

- Jaramaz, B.; Eckman, K. Virtual Reality Simulation of Fluoroscopic Navigation. Clin. Orthop. Relat. Res. 2006, 442, 30–34.

- Ayoub, A.; Pulijala, Y. The Application of Virtual Reality and Augmented Reality in Oral & Maxillofacial Surgery. BMC Oral Health 2019, 19, 238.

- Haluck, R.S.; Webster, R.W.; Snyder, A.J.; Melkonian, M.G.; Mohler, B.J.; Dise, M.L.; Lefever, A. A Virtual Reality Surgical Trainer for Navigation in Laparoscopic Surgery. Stud. Health Technol. Inform. 2001, 81, 171.

- Barber, S.R.; Jain, S.; Son, Y.-J.; Chang, E.H. Virtual Functional Endoscopic Sinus Surgery Simulation with 3D-Printed Models for Mixed-Reality Nasal Endoscopy. Otolaryngol.–Head Neck Surg. 2018, 159, 933–937.

- Martel, A.L.; Abolmaesumi, P.; Stoyanov, D.; Mateus, D.; Zuluaga, M.A.; Zhou, S.K.; Racoceanu, D.; Joskowicz, L. An Interactive Mixed Reality Platform for Bedside Surgical Procedures. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020; Springer International Publishing AG: Cham, Switzerland, 2020; Volume 12263, pp. 65–75. ISSN 0302-9743.

- Zhou, Z.; Yang, Z.; Jiang, S.; Zhang, F.; Yan, H. Design and Validation of a Surgical Navigation System for Brachytherapy Based on Mixed Reality. Med. Phys. 2019, 46, 3709–3718.

- Mehralivand, S.; Kolagunda, A.; Hammerich, K.; Sabarwal, V.; Harmon, S.; Sanford, T.; Gold, S.; Hale, G.; Romero, V.V.; Bloom, J.; et al. A Multiparametric Magnetic Resonance Imaging-Based Virtual Reality Surgical Navigation Tool for Robotic-Assisted Radical Prostatectomy. Turk. J. Urol. 2019, 45, 357–365.

- Frangi, A.F.; Schnabel, J.A.; Davatzikos, C.; Alberola-López, C.; Fichtinger, G. A Novel Mixed Reality Navigation System for Laparoscopy Surgery. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018; Springer International Publishing AG: Cham, Switzerland, 2018; Volume 11073, pp. 72–80. ISSN 0302-9743.

- Incekara, F.; Smits, M.; Dirven, C.; Vincent, A. Clinical Feasibility of a Wearable Mixed-Reality Device in Neurosurgery. World Neurosurg. 2018, 118, e422.

- McJunkin, J.L.; Jiramongkolchai, P.; Chung, W.; Southworth, M.; Durakovic, N.; Buchman, C.A.; Silva, J.R. Development of a Mixed Reality Platform for Lateral Skull Base Anatomy. Otol. Neurotol. 2018, 39, e1137–e1142.

- Zhou, Z.; Jiang, S.; Yang, Z.; Xu, B.; Jiang, B. Surgical Navigation System for Brachytherapy Based on Mixed Reality Using a Novel Stereo Registration Method. Virtual Real. 2021, 25, 975–984.

- Li, J.; Zhang, H.; Li, Q.; Yu, S.; Chen, W.; Wan, S.; Chen, D.; Liu, R.; Ding, F. Treating Lumbar Fracture Using the Mixed Reality Technique. Biomed. Res. Int. 2021, 2021, 6620746.

- Holi, G.; Murthy, S.K. An Overview of Image Security Techniques. Int. J. Comput. Appl. 2016, 154. ISSN 0975-8887.

- Magdy, M.; Hosny, K.M.; Ghali, N.I.; Ghoniemy, S. Security of Medical Images for Telemedicine: A Systematic Review. Multimed. Tools Appl. 2022, 81, 25101–25145.

- Lungu, A.J.; Swinkels, W.; Claesen, L.; Tu, P.; Egger, J.; Chen, X. A Review on the Applications of Virtual Reality, Augmented Reality and Mixed Reality in Surgical Simulation: An Extension to Different Kinds of Surgery. Expert Rev. Med. Devices 2021, 18, 47–62.

- Hussain, R.; Lalande, A.; Guigou, C.; Bozorg-Grayeli, A. Contribution of Augmented Reality to Minimally Invasive Computer-Assisted Cranial Base Surgery. IEEE J. Biomed. Health Inform. 2020, 24, 2093–2106.

- Moody, L.; Waterworth, A.; McCarthy, A.D.; Harley, P.J.; Smallwood, R.H. The Feasibility of a Mixed Reality Surgical Training Environment. Virtual Real. 2008, 12, 77–86.

- Zuo, Y.; Jiang, T.; Dou, J.; Yu, D.; Ndaro, Z.N.; Du, Y.; Li, Q.; Wang, S.; Huang, G. A Novel Evaluation Model for a Mixed-Reality Surgical Navigation System: Where Microsoft HoloLens Meets the Operating Room. Surg. Innov. 2020, 27, 193–202.

| Database | Search String | Number of Records |
|---|---|---|
| Web of Science™ | (TS = (surgical navigation)) AND ((KP = (surgical navigation system)) OR (KP = (image guided surgery)) OR (KP = (computer assisted surgery)) OR (KP = (virtual reality)) OR (KP = (augmented reality)) OR (KP = (mixed reality)) OR (KP = (3D))) and Preprint Citation Index (Exclude – Database) | 597 |
| Scopus | ((TITLE-ABS-KEY("surgical navigation")) AND ((KEY("surgical navigation system")) OR (KEY("image guided surgery")) OR (KEY("computer assisted surgery")) OR (KEY("virtual reality")) OR (KEY("augmented reality")) OR (KEY("mixed reality")) OR (KEY("3D"))) AND PUBYEAR > 2012 AND PUBYEAR < 2024 AND (LIMIT-TO (SUBJAREA,"MEDI") OR LIMIT-TO (SUBJAREA,"COMP") OR LIMIT-TO (SUBJAREA,"ENGI")) AND (LIMIT-TO (DOCTYPE,"ar") OR LIMIT-TO (DOCTYPE,"cp"))) | 1594 |
| IEEE Xplore® | (("surgical navigation") AND (("surgical navigation system") OR ("image guided surgery") OR ("computer assisted surgery") OR ("virtual reality") OR ("augmented reality") OR ("mixed reality") OR ("3D"))) | 146 |

| Paper | Segmentation | Tracking | Registration |
|---|---|---|---|
| [13] | No | EMT¹ | Rigid landmark |
| [14] | 3D Slicer⁶ | EMT | PDM² |
| [15] | Threshold | EMT | ICP³/B-spline |
| [16,17,18,19,20] | No | OTS⁴ | Fiducial markers |
| [21] | 3D Slicer | No | Fiducial markers |
| [22] | Yes | No | ICP |
| [23] | 3D Slicer | OTS | Surface-matching |
| [24] | Learning-based | No | ICP |
| [25] | No | Learning-based | Learning-based |
| [26] | Learning-based | No | No |
| [27,28] | Yes | OTS | Fiducial markers |
| [29] | Threshold/Region growing | OTS | ICP |
| [30] | Region growing | Visual–inertial stereo SLAM | ICP |
| [31] | Threshold | Learning-based | Super4PCS [32] |
| [33] | Yes | OTS | ICP |
| [34] | 3D Slicer | EMT | Fiducial markers |
| [35] | Mimics⁶ | OTS | Anatomical landmark |
| [36] | Manual | No | ICP |
| [37] | No | Depth estimation | Learning-based [38] |
| [39] | EndoSize⁶ | No | Rigid intensity-based |
| [40] | Yes | EMT | ICP |
| [41] | Threshold | OTS | ICP |
| [42] | 3D Slicer/Learning-based | No | ICP and CPD⁵ |
| [43] | Yes | Visual SLAM | Visual SLAM |
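ICP (iterative closest point) is the most frequent registration entry in the table above, and fiducial-marker registration relies on the same closed-form paired-point fit that sits inside each ICP iteration. The sketch below illustrates both under a simplifying assumption: it works on 2D point sets for readability, whereas the cited systems register 3D data, where the single-angle step is replaced by an SVD-based rotation estimate (e.g. the Kabsch/Arun method). The function names `apply_rigid`, `fit_rigid_2d`, `sq_dist` and `icp_2d`, the brute-force nearest-neighbour search, and the stopping tolerance are hypothetical illustrations, not taken from any of the cited papers.

```python
import math

Point = tuple[float, float]


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two 2D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def apply_rigid(theta: float, tx: float, ty: float, pts: list[Point]) -> list[Point]:
    """Rotate each point by theta (radians) about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def fit_rigid_2d(src: list[Point], dst: list[Point]) -> tuple[float, float, float]:
    """Least-squares rigid transform (theta, tx, ty) mapping src[i] onto dst[i].

    Paired-point step, as used with fiducial markers: centre both sets,
    choose the angle that best aligns them, then solve for translation.
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0  # correlation terms of the centred point sets
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(moving: list[Point], fixed: list[Point],
           max_iter: int = 50, tol: float = 1e-12) -> tuple[float, float, float]:
    """Point-to-point ICP: alternate nearest-neighbour matching and rigid fitting."""
    current = list(moving)
    prev_err = math.inf
    for _ in range(max_iter):
        # Correspondence step: brute-force nearest neighbour in the fixed set.
        matched = [min(fixed, key=lambda q: sq_dist(p, q)) for p in current]
        # Alignment step: best rigid fit for the current correspondences.
        current = apply_rigid(*fit_rigid_2d(current, matched), current)
        err = sum(sq_dist(a, b) for a, b in zip(current, matched)) / len(current)
        if prev_err - err < tol:  # stop once the mean error stops improving
            break
        prev_err = err
    # Accumulated transform from the original moving points to their final pose.
    return fit_rigid_2d(moving, current)


# Hypothetical example: a small known misalignment that ICP should undo.
fixed = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 3.0), (2.0, 5.0)]
moving = apply_rigid(0.05, 0.2, -0.1, fixed)
theta, tx, ty = icp_2d(moving, fixed)
```

Here `theta` should come out close to -0.05, the inverse of the simulated rotation. Plain ICP only converges to the right pose when the initial misalignment is small relative to point spacing, which is one reason several systems in the table first perform a coarse landmark or fiducial alignment, or use a global method such as Super4PCS, before refining.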

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Z.; Lei, C.; Yang, L. Modern Image-Guided Surgery: A Narrative Review of Medical Image Processing and Visualization. Sensors 2023, 23, 9872. https://doi.org/10.3390/s23249872