Deep Learning Accelerators’ Configuration Space Exploration Effect on Performance and Resource Utilization: A Gemmini Case Study

Abstract

1. Introduction

- Detailing the software and hardware components generated by the Gemmini framework, to make Gemmini easier to understand and implement, since its documentation has a steep learning curve.

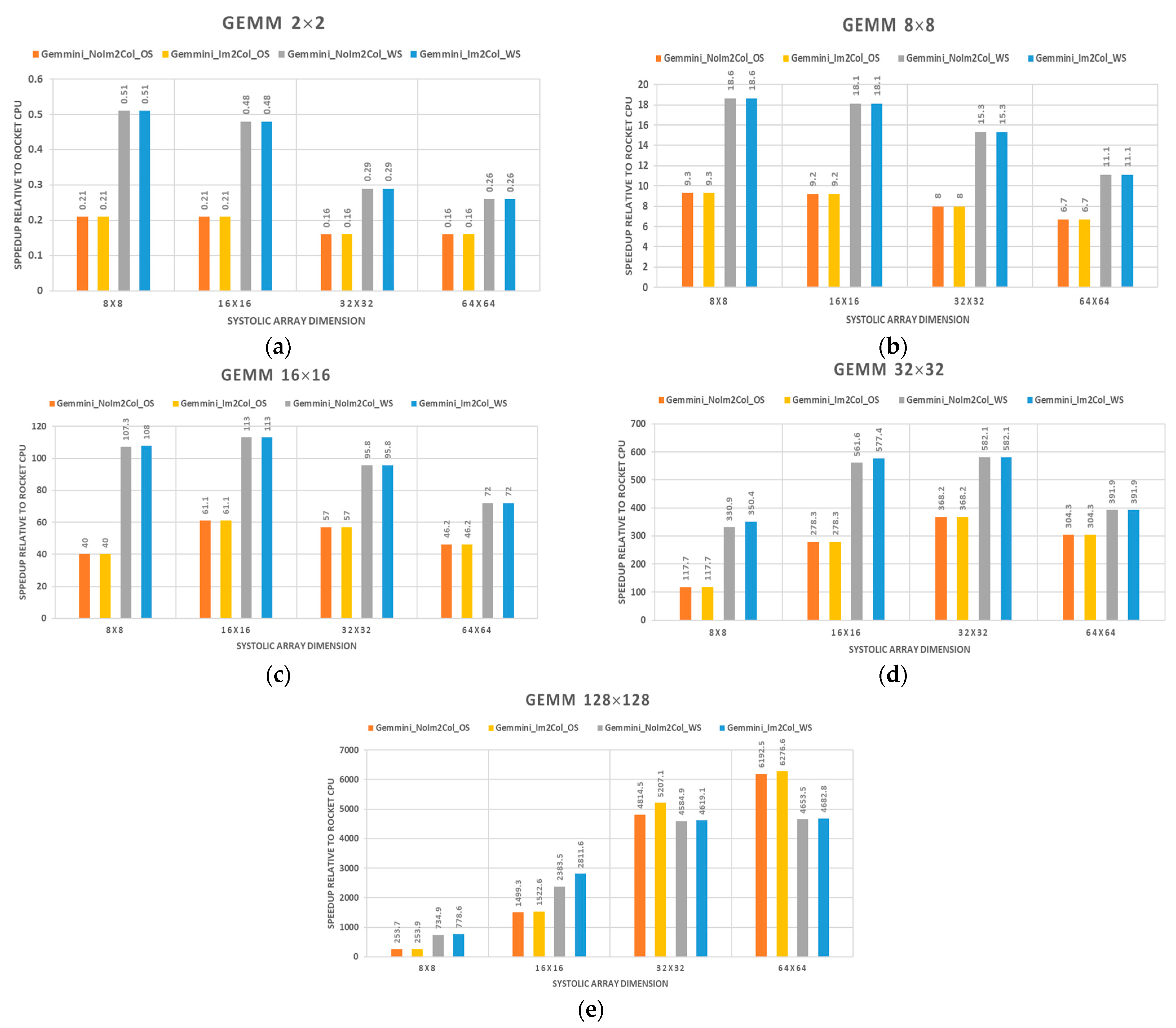

- Exploring various GEMM dimensions and dataflow options on different configurations of the Gemmini accelerator to measure performance across configurations and workloads.

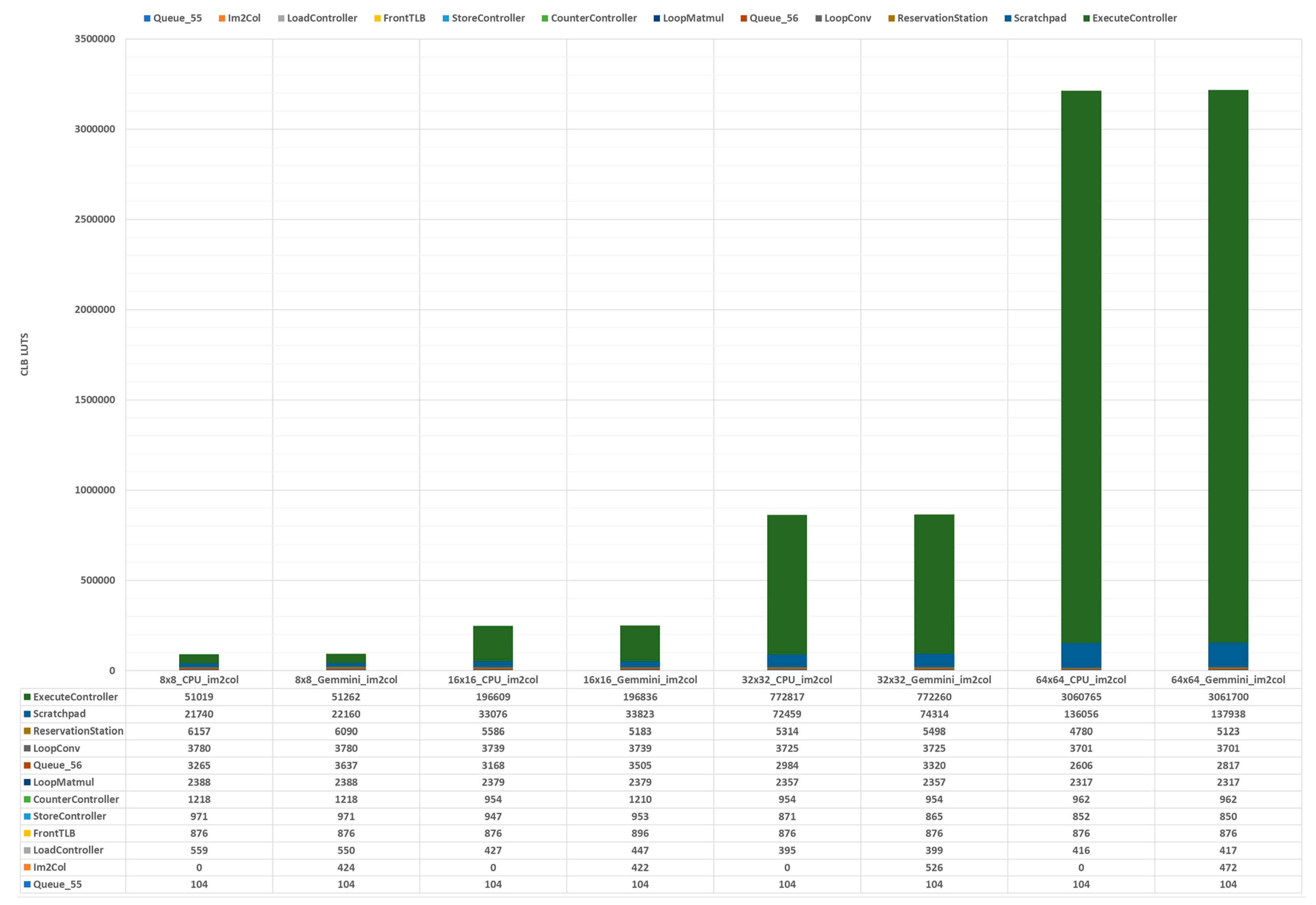

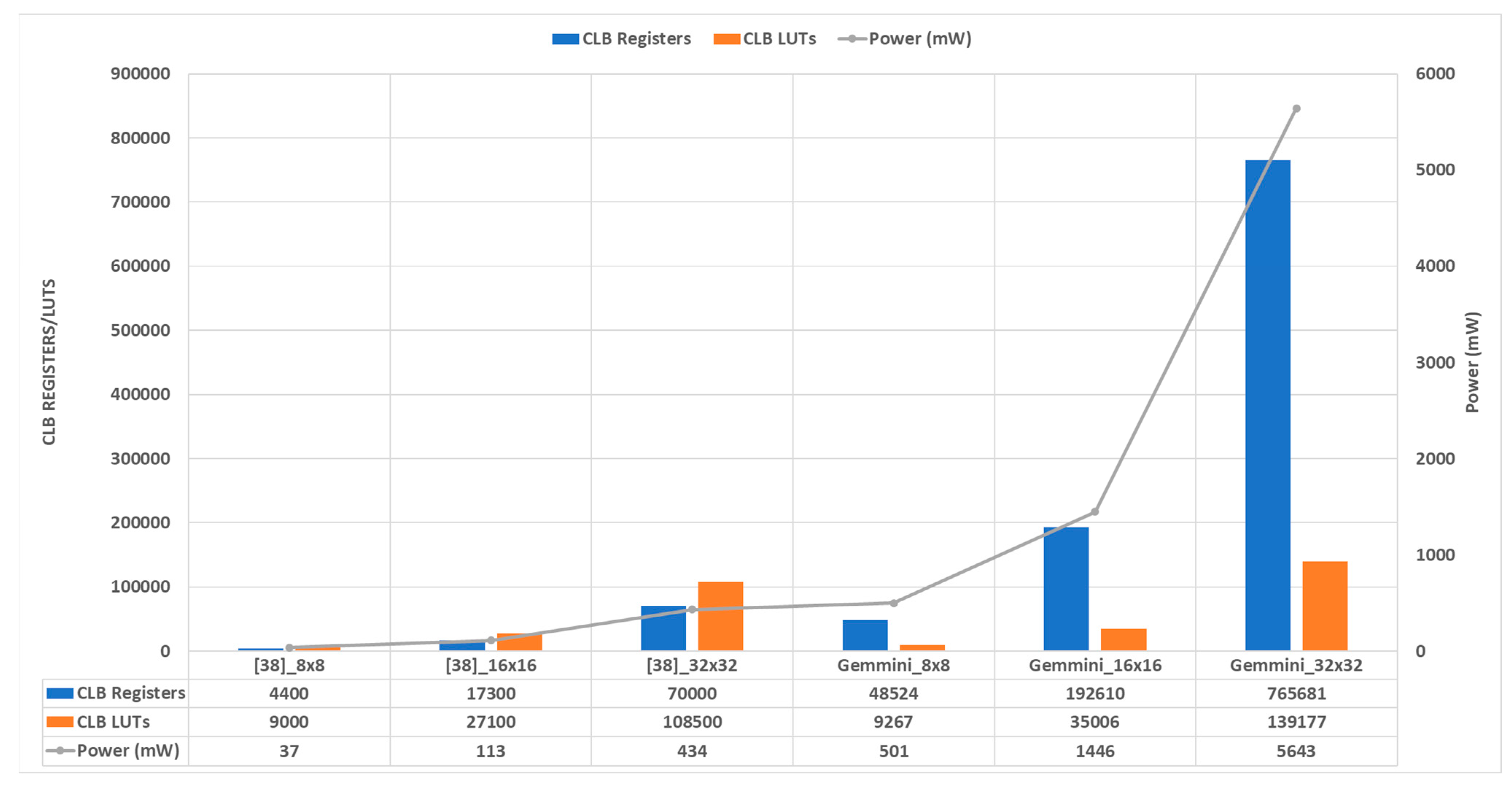

- Mapping the generated register-transfer level (RTL) code of various Gemmini configurations onto a field-programmable gate array (FPGA) device to extract synthesis results, such as area, frequency, and power.

- Demonstrating the effects of various Gemmini configuration options on performance and hardware resource utilization metrics.

2. Background

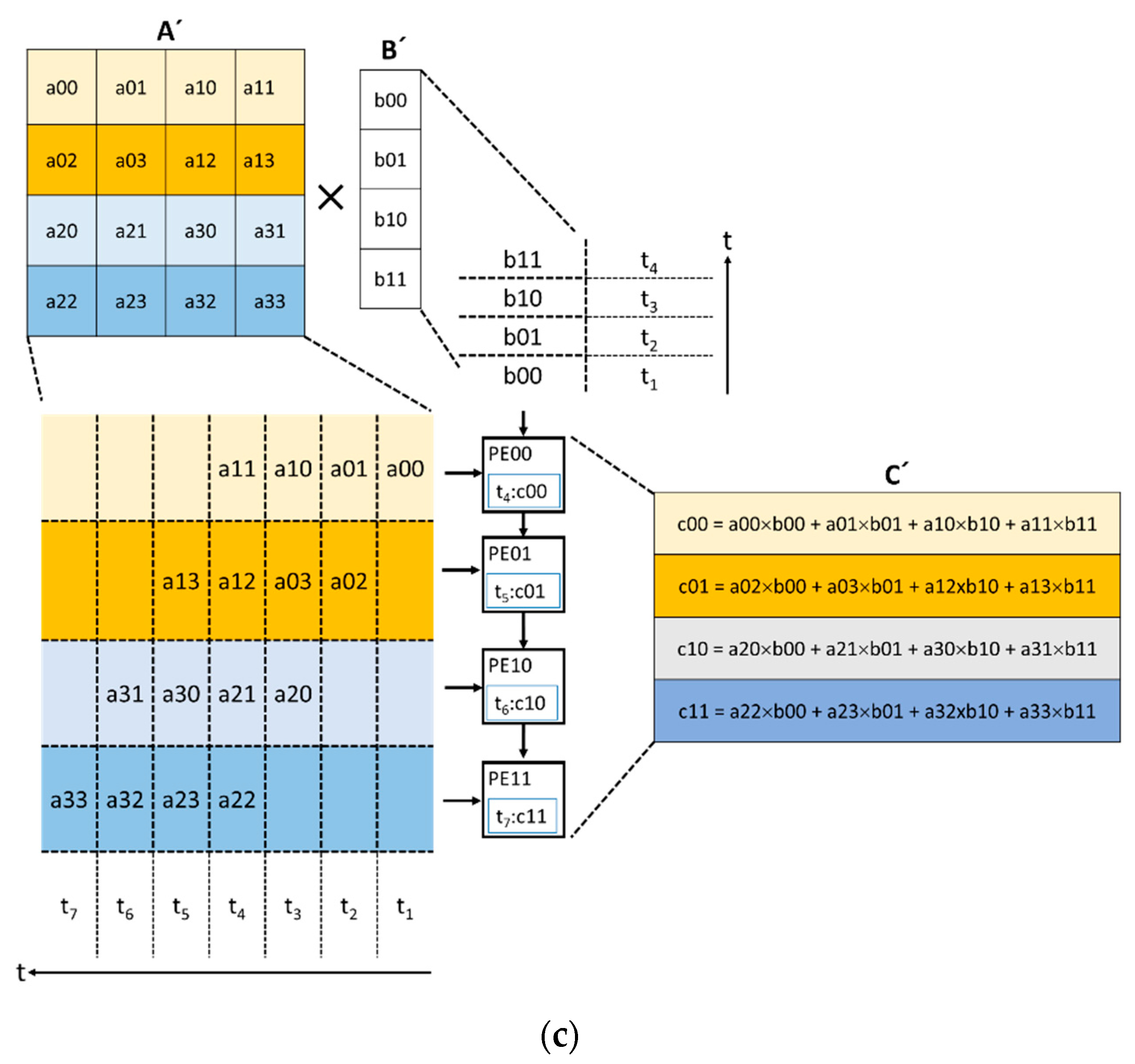

2.1. From Convolution to GEMM
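
As an illustration of the transformation named in this heading, the following is a minimal sketch of how a convolution can be lowered to a GEMM with im2col. It assumes a single-channel 2D input, stride 1, no padding, and the cross-correlation convention common in DNN frameworks. The function names (`im2col`, `matmul`, `conv2d_direct`, `conv2d_as_gemm`) are hypothetical helpers written for this example and are not part of Gemmini.

```python
def im2col(image, kh, kw):
    """Unroll every kh x kw patch of a 2D image into one row (stride 1, no padding)."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            rows.append([image[i + di][j + dj]
                         for di in range(kh) for dj in range(kw)])
    return rows


def matmul(a, b):
    """Plain matrix multiplication on nested lists (the GEMM step)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def conv2d_direct(image, kernel):
    """Reference sliding-window convolution (cross-correlation form)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]


def conv2d_as_gemm(image, kernel):
    """Same convolution, expressed as im2col followed by one matrix product."""
    kh, kw = len(kernel), len(kernel[0])
    patches = im2col(image, kh, kw)                 # (out_h * out_w) x (kh * kw)
    weights = [[v] for row in kernel for v in row]  # (kh * kw) x 1
    flat = matmul(patches, weights)                 # (out_h * out_w) x 1
    out_w = len(image[0]) - kw + 1
    out_h = len(flat) // out_w
    return [[flat[r * out_w + c][0] for c in range(out_w)]
            for r in range(out_h)]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d_as_gemm(image, kernel) == conv2d_direct(image, kernel))
```

With several output channels, each kernel would become one column of the weight matrix, so the whole layer is still a single matrix product.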

2.2. Related Work

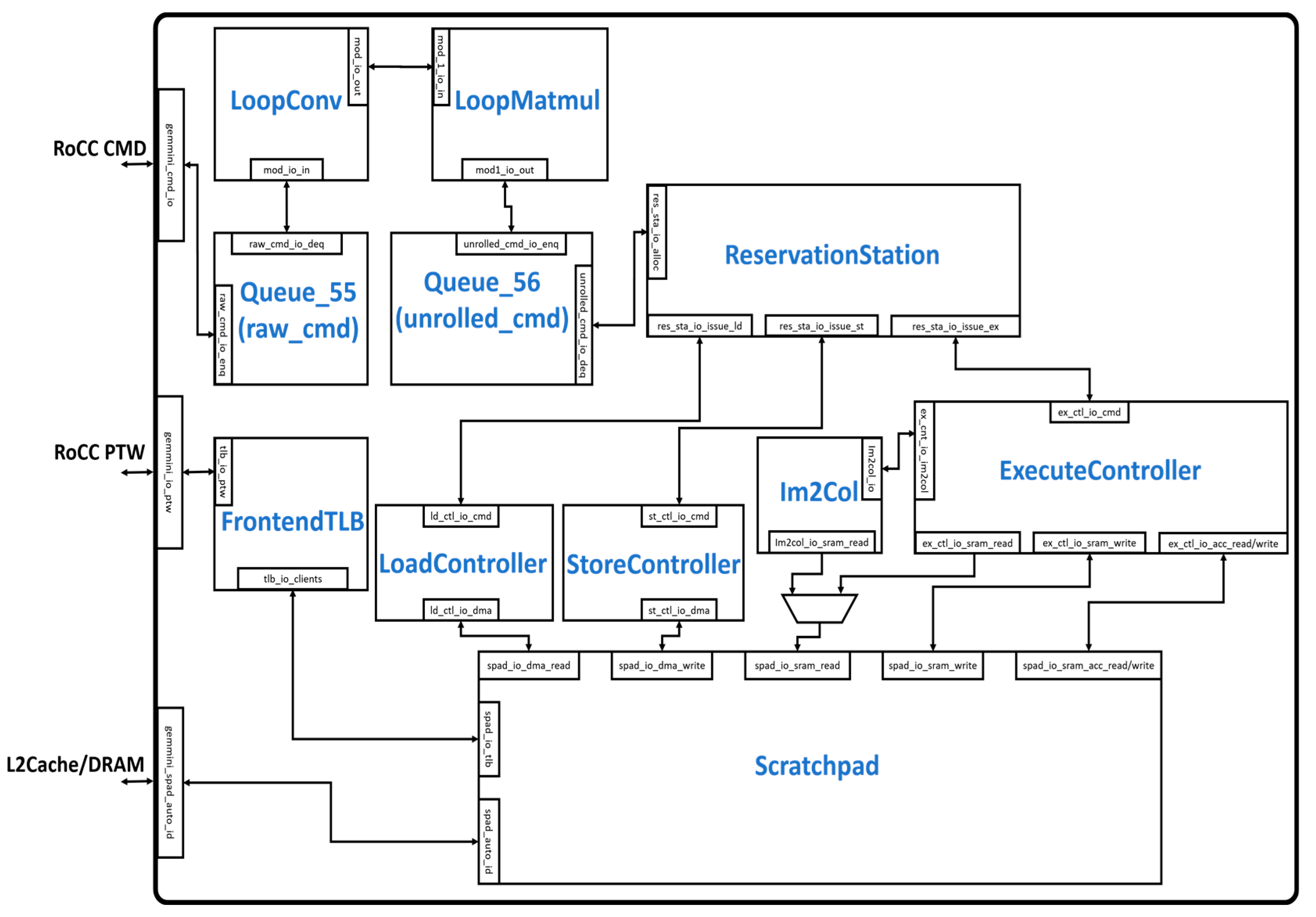

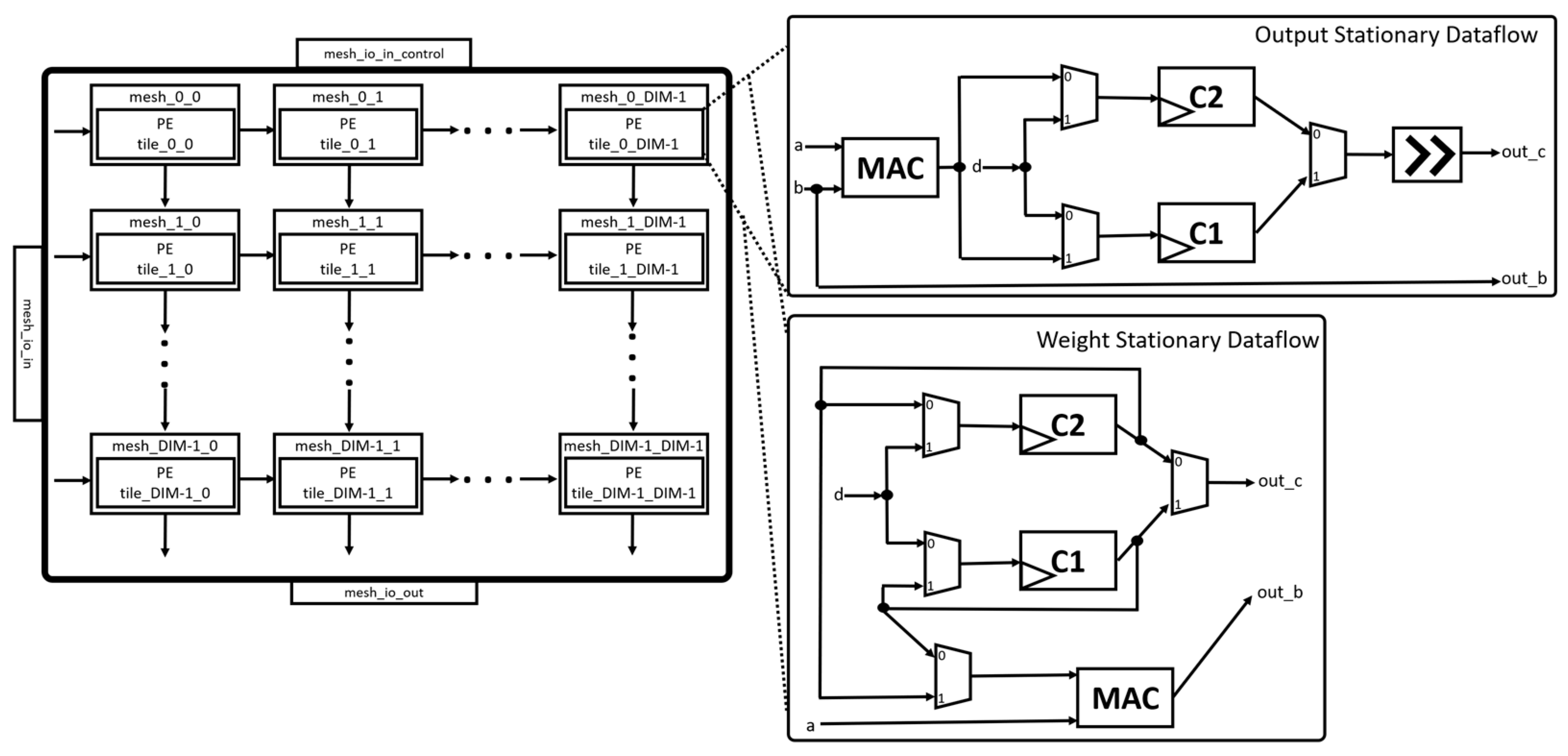

3. The Gemmini Hardware Architecture Template

3.1. The RoCC Interface

3.2. LoopConv and LoopMatmul Modules

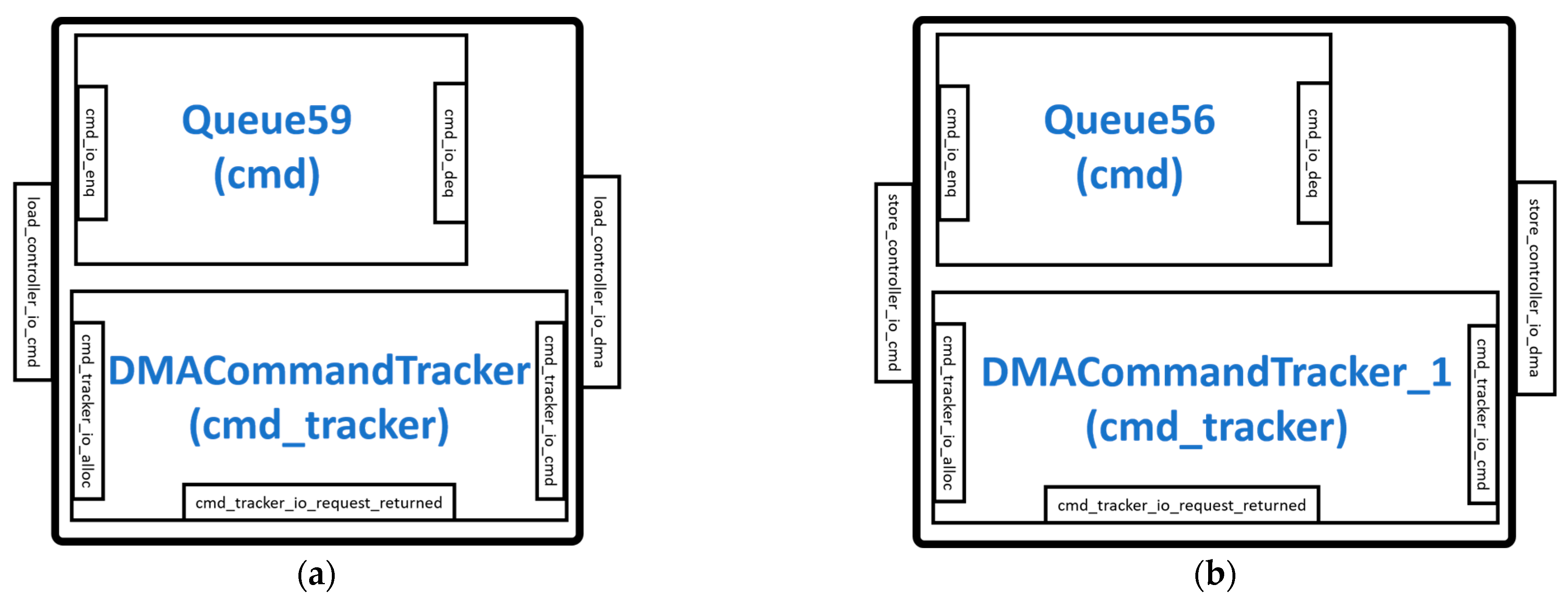

3.3. LoadController and StoreController Modules

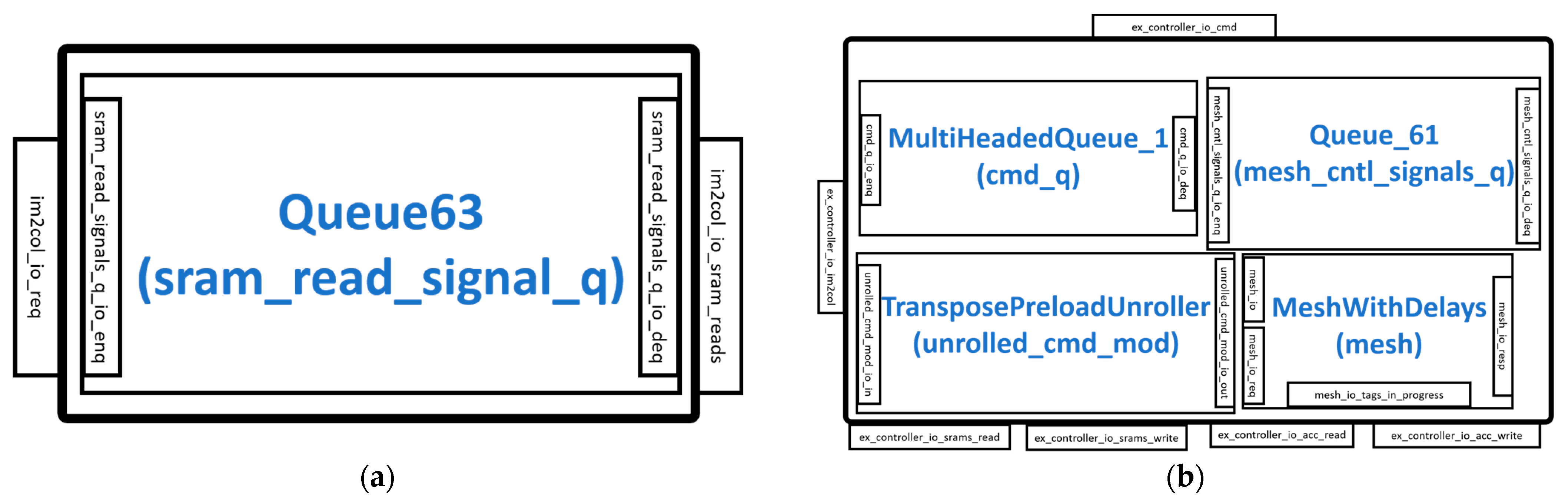

3.4. im2col and ExecuteController Modules

3.5. Scratchpad Module

4. Methodology

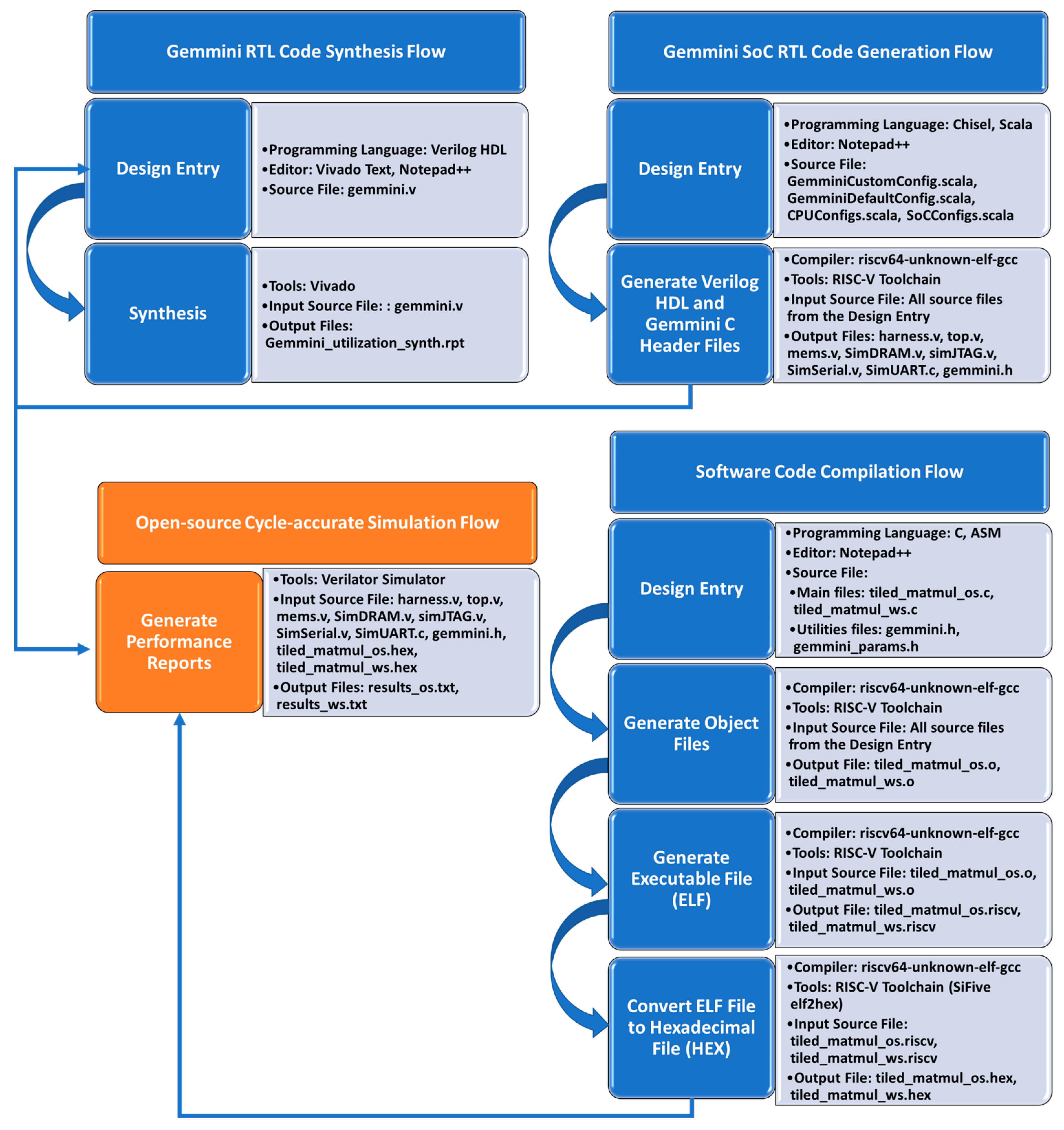

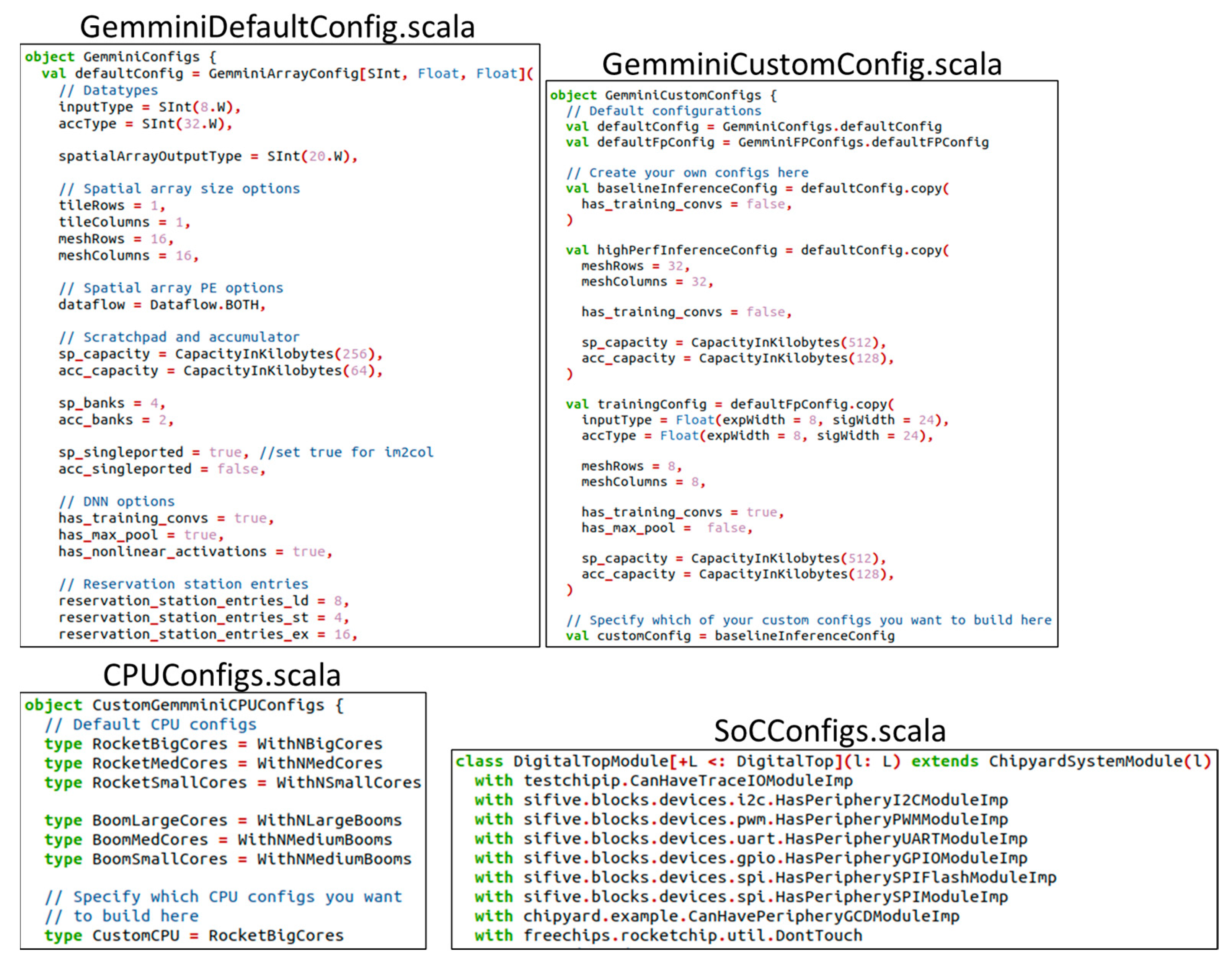

4.1. Gemmini SoC RTL Code Generation Flow

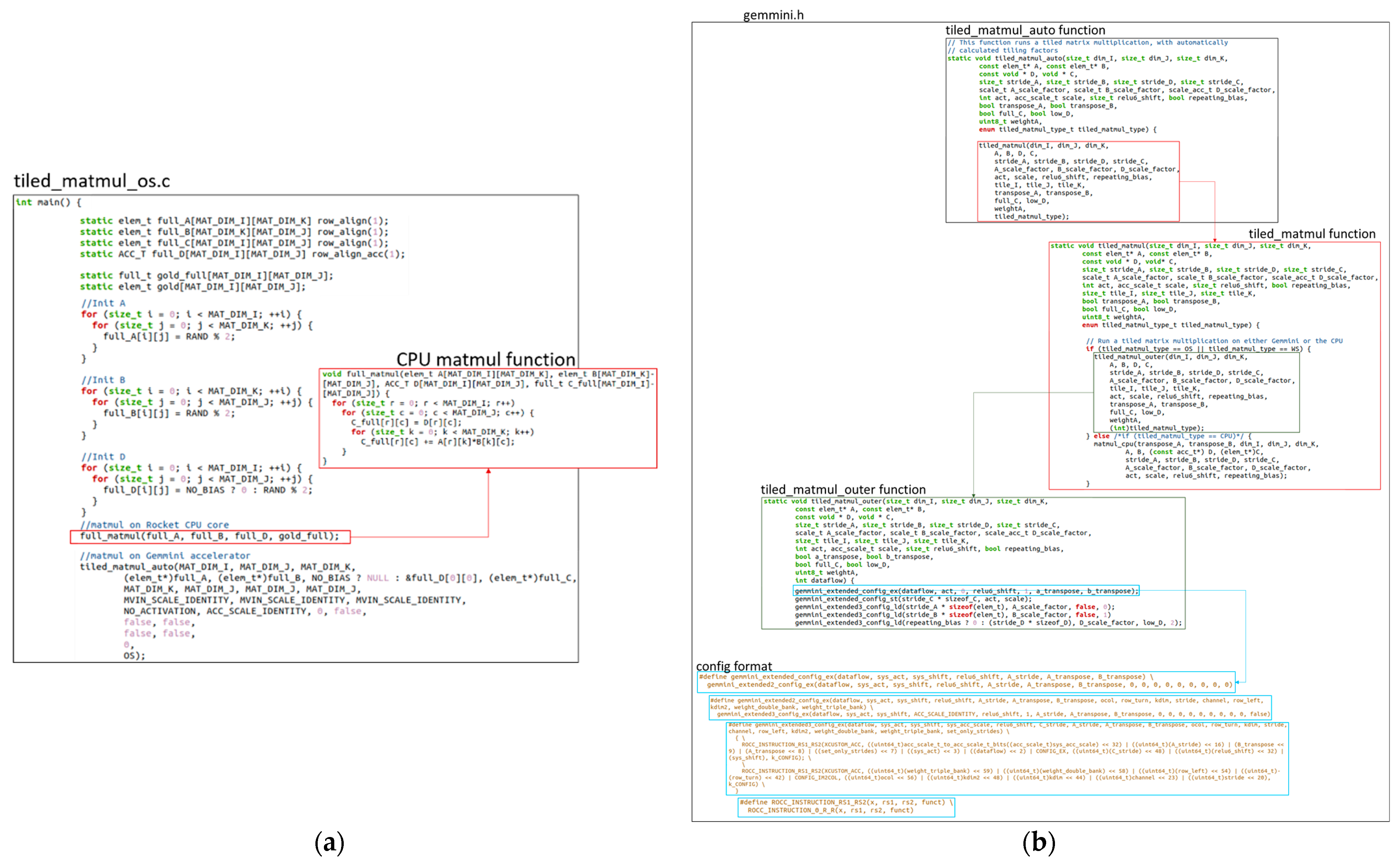

4.2. Software Code Compilation Flow

4.3. Gemmini RTL Code Synthesis Flow

5. Experimental Results

5.1. Gemmini Performance Analysis

5.2. Gemmini Hardware Resource Utilization Analysis

Hardware Resource Comparison

5.3. Gemmini Performance-Per-Area Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Spatial Array Dimension | Matrix Dimension | Gemmini, OS Dataflow, im2col in CPU (Cycles) | Gemmini, OS Dataflow, im2col in Hardware (Cycles) | Gemmini, WS Dataflow, im2col in CPU (Cycles) | Gemmini, WS Dataflow, im2col in Hardware (Cycles) | Rocket CPU (Cycles) |
|---|---|---|---|---|---|---|
| 8 × 8 | 2 × 2 | 809 | 809 | 329 | 329 | 167 |
| 8 × 8 | 8 × 8 | 777 | 777 | 388 | 388 | 7227 |
| 8 × 8 | 16 × 16 | 1341 | 1341 | 500 | 497 | 53,662 |
| 8 × 8 | 32 × 32 | 3491 | 3491 | 1242 | 1173 | 410,974 |
| 8 × 8 | 128 × 128 | 104,957 | 104,863 | 36,228 | 34,196 | 26,624,924 |
| 16 × 16 | 2 × 2 | 815 | 815 | 358 | 358 | 171 |
| 16 × 16 | 8 × 8 | 785 | 785 | 401 | 401 | 7252 |
| 16 × 16 | 16 × 16 | 879 | 879 | 475 | 475 | 53,671 |
| 16 × 16 | 32 × 32 | 1477 | 1477 | 732 | 712 | 411,120 |
| 16 × 16 | 128 × 128 | 17,761 | 17,489 | 11,172 | 9471 | 26,628,902 |
| 32 × 32 | 2 × 2 | 891 | 891 | 482 | 482 | 140 |
| 32 × 32 | 8 × 8 | 901 | 901 | 472 | 472 | 7220 |
| 32 × 32 | 16 × 16 | 942 | 942 | 560 | 560 | 53,672 |
| 32 × 32 | 32 × 32 | 1116 | 1116 | 706 | 706 | 410,955 |
| 32 × 32 | 128 × 128 | 5531 | 5114 | 5808 | 5765 | 26,628,857 |
| 64 × 64 | 2 × 2 | 1002 | 1002 | 604 | 604 | 157 |
| 64 × 64 | 8 × 8 | 1021 | 1021 | 619 | 619 | 6844 |
| 64 × 64 | 16 × 16 | 1068 | 1068 | 686 | 686 | 49,375 |
| 64 × 64 | 32 × 32 | 1248 | 1248 | 969 | 969 | 379,797 |
| 64 × 64 | 128 × 128 | 3955 | 3902 | 5263 | 5230 | 24,491,236 |
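
To make the cycle counts above easier to compare, the following short sketch computes the speedup of Gemmini over the Rocket CPU as the ratio of their cycle counts. The numbers are copied from the table (WS dataflow, im2col in hardware); the `speedup` helper and the dictionary layout are illustrative choices made for this example, not part of the original study.

```python
# Cycle counts copied from the execution-time table above.
# Key: spatial array dimension (N for an N x N array).
# Value: (Gemmini cycles, WS dataflow, im2col in hardware; Rocket CPU cycles).
cycles_128x128 = {
    8: (34_196, 26_624_924),
    16: (9_471, 26_628_902),
    32: (5_765, 26_628_857),
    64: (5_230, 24_491_236),
}
cycles_2x2 = {
    8: (329, 167),
    16: (358, 171),
    32: (482, 140),
    64: (604, 157),
}


def speedup(gemmini_cycles, cpu_cycles):
    """Speedup of the accelerator over the CPU; values below 1 mean the CPU is faster."""
    return cpu_cycles / gemmini_cycles


for label, table in (("128 x 128", cycles_128x128), ("2 x 2", cycles_2x2)):
    for dim, (gemmini, cpu) in table.items():
        print(f"matrix {label}, array {dim} x {dim}: {speedup(gemmini, cpu):.2f}x")
```

For the 2 × 2 matrices the ratio falls below 1 for every array size, which reflects that the Rocket CPU needs fewer cycles than Gemmini on very small workloads, whereas for 128 × 128 matrices the accelerator is faster by two to three orders of magnitude.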

| Gemmini Configuration | CLB LUTs (Utilization) | CLB Registers (Utilization) | BRAM Tile (Utilization) | DSPs (Utilization) | Frequency (MHz) | Power (W) |
|---|---|---|---|---|---|---|
| 8 × 8_CPU_im2col | 90,165 (2.21%) | 34,298 (0.42%) | 96 (4.44%) | 149 (3.88%) | 52.6 | 1.010 |
| 8 × 8_Gemmini_im2col | 91,695 (2.24%) | 34,414 (0.42%) | 96 (4.44%) | 149 (3.88%) | 52.6 | 1.064 |
| 16 × 16_CPU_im2col | 246,108 (6.02%) | 65,545 (0.80%) | 128 (5.93%) | 171 (4.45%) | 47.6 | 2.073 |
| 16 × 16_Gemmini_im2col | 248,239 (6.08%) | 65,237 (0.80%) | 128 (5.93%) | 171 (4.45%) | 47.6 | 2.141 |
| 32 × 32_CPU_im2col | 861,560 (21.09%) | 180,311 (2.21%) | 64 (2.96%) | 204 (5.31%) | 47.6 | 6.963 |
| 32 × 32_Gemmini_im2col | 863,585 (21.14%) | 179,171 (2.20%) | 64 (2.96%) | 204 (5.31%) | 47.6 | 6.581 |
| 64 × 64_CPU_im2col | 3,212,384 (78.62%) | 602,962 (7.38%) | 128 (5.93%) | 267 (6.95%) | 55.6 | 25.854 |
| 64 × 64_Gemmini_im2col | 3,215,870 (78.71%) | 600,044 (7.34%) | 128 (5.93%) | 267 (6.95%) | 55.6 | 31.240 |
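
The cycle counts and synthesis results reported in this article can be combined into rough latency and performance-per-area figures, as sketched below. This rests on two assumptions that the tables themselves do not confirm: that the reported cycle counts apply unchanged at the synthesized FPGA clock frequency, and that CLB LUT count is an acceptable proxy for area. The metric `runs_per_second_per_lut` is a hypothetical figure of merit defined for this example and may differ from the one used in Section 5.3.

```python
# Values copied from the tables in this article.
# Key: spatial array dimension (N for an N x N array).
# Value: (cycles for a 128 x 128 matrix with WS dataflow and im2col in hardware,
#         frequency in MHz and CLB LUTs of the matching *_Gemmini_im2col configuration).
configs = {
    8: (34_196, 52.6, 91_695),
    16: (9_471, 47.6, 248_239),
    32: (5_765, 47.6, 863_585),
    64: (5_230, 55.6, 3_215_870),
}


def latency_us(cycles, freq_mhz):
    """Estimated latency in microseconds: cycles divided by cycles per microsecond."""
    return cycles / freq_mhz


def runs_per_second_per_lut(cycles, freq_mhz, luts):
    """Hypothetical performance-per-area metric: throughput divided by LUT count."""
    runs_per_second = freq_mhz * 1e6 / cycles
    return runs_per_second / luts


for dim, (cycles, freq, luts) in configs.items():
    print(f"{dim} x {dim}: {latency_us(cycles, freq):.1f} us, "
          f"{runs_per_second_per_lut(cycles, freq, luts):.5f} runs/s per LUT")
```

Under these assumptions, latency keeps falling as the array grows, but LUT usage grows faster than throughput, so the per-LUT figure is highest for the smallest array.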


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gookyi, D.A.N.; Lee, E.; Kim, K.; Jang, S.-J.; Lee, S.-S. Deep Learning Accelerators’ Configuration Space Exploration Effect on Performance and Resource Utilization: A Gemmini Case Study. Sensors 2023, 23, 2380. https://doi.org/10.3390/s23052380
