1. Introduction

In-bed posture monitoring has become an area of interest for many researchers seeking to minimize the risk of pressure sore development and to improve sleep quality. Pressure sores can develop when constant pressure is applied to a region of the body, restricting blood flow to that region and, over time, potentially injuring the surrounding skin and/or tissue. As of 2020, approximately 60,000 people die worldwide each year due to pressure sores [1]. People with pressure sores have a 4.5 times higher risk of death than those with similar health factors but no pressure sores [1]. Caregivers and hospital staff are often tasked with monitoring patients with pressure sores, which can be laborious and can strain hospital resources [2].

In addition, sleep quality can be assessed by evaluating body postures and movement during sleep [3,4,5]. Good sleep quality benefits a person’s health, as it can improve work efficiency, strengthen the immune system, and help maintain physical health [6]. Conversely, poor sleep quality can be very harmful, as it can cause extreme fatigue and emotional exhaustion, and increase the risk of cardiovascular disease, obesity, and diabetes [6,7].

Currently, in-bed posture monitoring relies on either infrared video cameras or wearable devices, both of which have limitations. Infrared video cameras are highly sensitive to environmental changes, such as blanket movement, and raise privacy concerns. Wearable devices, such as rings and wristbands, can be obtrusive during sleep, reducing a person’s sleep quality, and are susceptible to motion artifacts [6]. Therefore, an unobtrusive, privacy-preserving, contactless system that can remind caregivers to change a patient’s body position frequently is needed. Smart mats have been investigated as a way to monitor people in and outside of hospitals, reduce the strain on caregivers, eliminate privacy concerns, and increase the accuracy of in-bed posture detection.

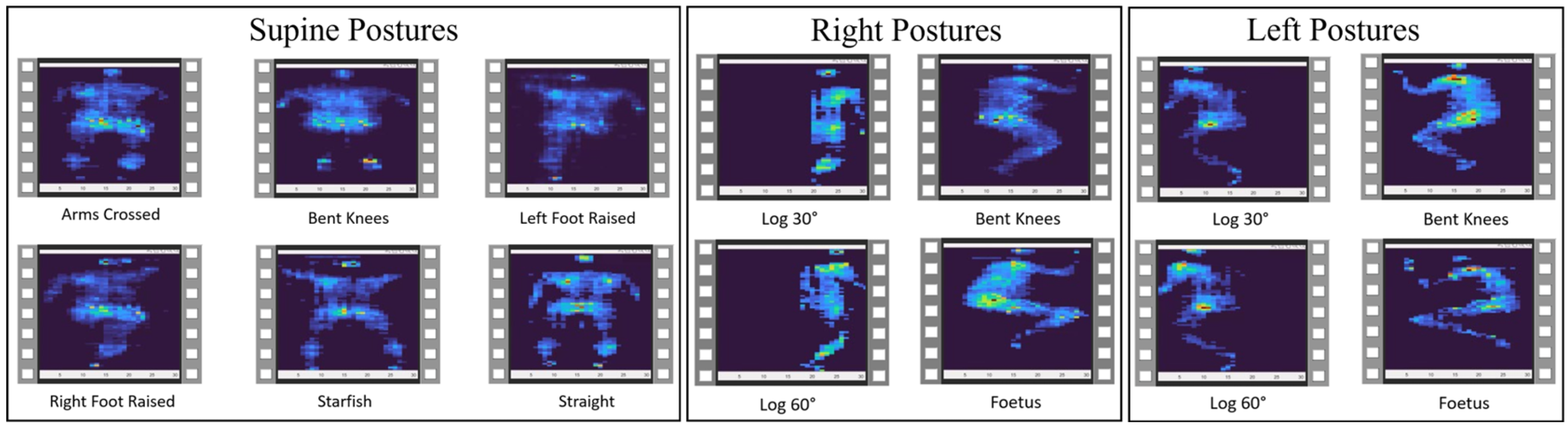

A variety of studies have investigated in-bed posture detection using a smart mat composed of either pressure or force sensors. Many of these studies either classified the supine and prone positions as one class or ignored the prone position entirely, as these two positions can be difficult to distinguish. Additionally, these studies examined their subjects either in a simulated environment, where the investigator instructs the subject to lie in different positions, or in a clinical environment, where the subjects can lie in any position and the investigation is typically performed overnight. Poyuan et al. conducted a simulated study on 13 participants with three sleeping postures (supine, right, and left). This study used a mat with 1048 pressure sensors and a deep neural network to classify each position, achieving an accuracy of 82.70% using 10-fold cross validation [8]. Similarly, Ostadabbas et al. conducted a simulated study on nine subjects with three sleeping postures: supine, right, and left. This study used heat map images from a mat with 1728 resistive sensors to train a k-nearest neighbor algorithm and achieved an overall accuracy of 98.4% using hold-out validation [9]. This study reported only accuracy values and did not provide details about the amount of data used, in particular whether the dataset was imbalanced. Metis et al. conducted a simulated study on three subjects with three sleeping postures. This study used a mat with 1024 pressure sensors, with principal component analysis and a Hidden Markov Model to classify the three postures, resulting in an accuracy of 90.40% using 10-fold cross validation [10]. None of these previous studies evaluated their models with leave-one-subject-out (LOSO) validation, which is necessary to obtain unbiased performance estimates for new subjects. This is a very important factor in body position monitoring, since the final model is intended to be used with new users. In addition, previous studies in other applications have shown that accuracy usually drops when LOSO is used instead of 10-fold or hold-out validation [11,12].
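
The difference between k-fold and LOSO validation lies in how samples are grouped into folds. A minimal sketch, assuming each recording is tagged with a hypothetical subject ID, of how LOSO folds can be generated so that every test fold contains only data from one subject never seen during training:

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence, Tuple


def loso_splits(subject_ids: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held-out subject, train indices, test indices): one fold per subject."""
    by_subject: Dict[str, List[int]] = defaultdict(list)
    for idx, subject in enumerate(subject_ids):
        by_subject[subject].append(idx)
    for held_out in sorted(by_subject):
        test_idx = by_subject[held_out]
        # Every sample from the other subjects goes to training; none from held_out.
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train_idx, test_idx


# Hypothetical subject IDs, one per recording (three subjects, three postures each).
subjects = ["S1", "S1", "S1", "S2", "S2", "S2", "S3", "S3", "S3"]
for held_out, train_idx, test_idx in loso_splits(subjects):
    print(held_out, sorted({subjects[i] for i in train_idx}), test_idx)
# S1 ['S2', 'S3'] [0, 1, 2]
# S2 ['S1', 'S3'] [3, 4, 5]
# S3 ['S1', 'S2'] [6, 7, 8]
```

In scikit-learn, `LeaveOneGroupOut` performs equivalent splitting when the subject IDs are passed as the `groups` argument.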

In this study, we aim to classify heat map videos of three main in-bed postures (supine, left, and right) using a 3D Convolutional Neural Network (CNN) model, and to compare the results with image classification using four 2D CNN models. We evaluate our models using both 5-fold and LOSO cross validation after addressing class imbalance, to compensate for the smaller number of data points in the minority classes.
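
I3D (Inflated 3D ConvNet) networks are typically initialized from 2D CNNs pre-trained on images by "inflating" each 2D filter along a new temporal axis. The sketch below shows this inflation step in NumPy, assuming an (out, in, height, width) kernel layout; the function name `inflate_kernel` is hypothetical, and the code illustrates the general technique rather than this study’s training code. Repeating the weights over T frames and dividing by T means that a video of identical frames produces the same response as the original 2D filter applied to one frame:

```python
import numpy as np


def inflate_kernel(w2d: np.ndarray, time_depth: int) -> np.ndarray:
    """Inflate a 2D kernel (out, in, kh, kw) into a 3D kernel (out, in, t, kh, kw)."""
    # Repeat the 2D weights along a new temporal axis, then rescale by 1/T.
    w3d = np.repeat(w2d[:, :, np.newaxis, :, :], time_depth, axis=2)
    return w3d / time_depth


rng = np.random.default_rng(0)
w2d = rng.standard_normal((4, 3, 3, 3))        # 4 filters, 3 input channels, 3 x 3
w3d = inflate_kernel(w2d, time_depth=3)
patch = rng.standard_normal((3, 3, 3))          # one (channels, h, w) input patch
video_patch = np.stack([patch] * 3, axis=1)     # same patch over 3 frames: (c, t, h, w)
resp_2d = np.einsum("ocij,cij->o", w2d, patch)
resp_3d = np.einsum("octij,ctij->o", w3d, video_patch)
print(np.allclose(resp_2d, resp_3d))  # True
```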

3. Results

The classification metrics, namely accuracy, sensitivity, specificity, and F1-Score, shown in Equations (2)–(4), were calculated for different models with different hyper-parameters, and the best models for LOSO and 5-fold cross validation were selected.

Here, TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. Hyper-parameter tuning yielded 10 epochs, 60 iterations for 5-fold and 120 iterations for LOSO, and a learning rate of 0.001.
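
For a three-class problem, these metrics are commonly computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below assumes that convention (how the study averaged across classes is an assumption here) and uses a hypothetical confusion matrix; the misclassification rate is taken as FN / (TP + FN) for each true class:

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest metrics per class; cm rows are true classes, columns are predictions."""
    total = sum(sum(row) for row in cm)
    results: Dict[str, Dict[str, float]] = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                  # true class k, predicted as another class
        fp = sum(row[k] for row in cm) - tp   # predicted k, but the true class differs
        tn = total - tp - fn - fp             # samples neither labelled nor predicted as k
        results[label] = {
            "accuracy": (tp + tn) / total,
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
            "f1": 2 * tp / (2 * tp + fp + fn),
            "misclassification_rate": fn / (tp + fn),
        }
    return results


def overall_accuracy(cm: List[List[int]]) -> float:
    """Multiclass accuracy: correctly classified samples over all samples."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)


# Hypothetical confusion matrix for (supine, left, right).
labels = ["supine", "left", "right"]
cm = [[50, 1, 1],
      [2, 48, 2],
      [0, 1, 51]]
metrics = per_class_metrics(cm, labels)
print(round(metrics["left"]["sensitivity"], 4))  # 0.9231
print(round(overall_accuracy(cm), 4))            # 0.9551
```

Note that the per-class one-vs-rest accuracy differs from the overall multiclass accuracy, so the convention should be stated when comparing results across studies.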

Table 1 displays the classification metrics for each method for the I3D model.

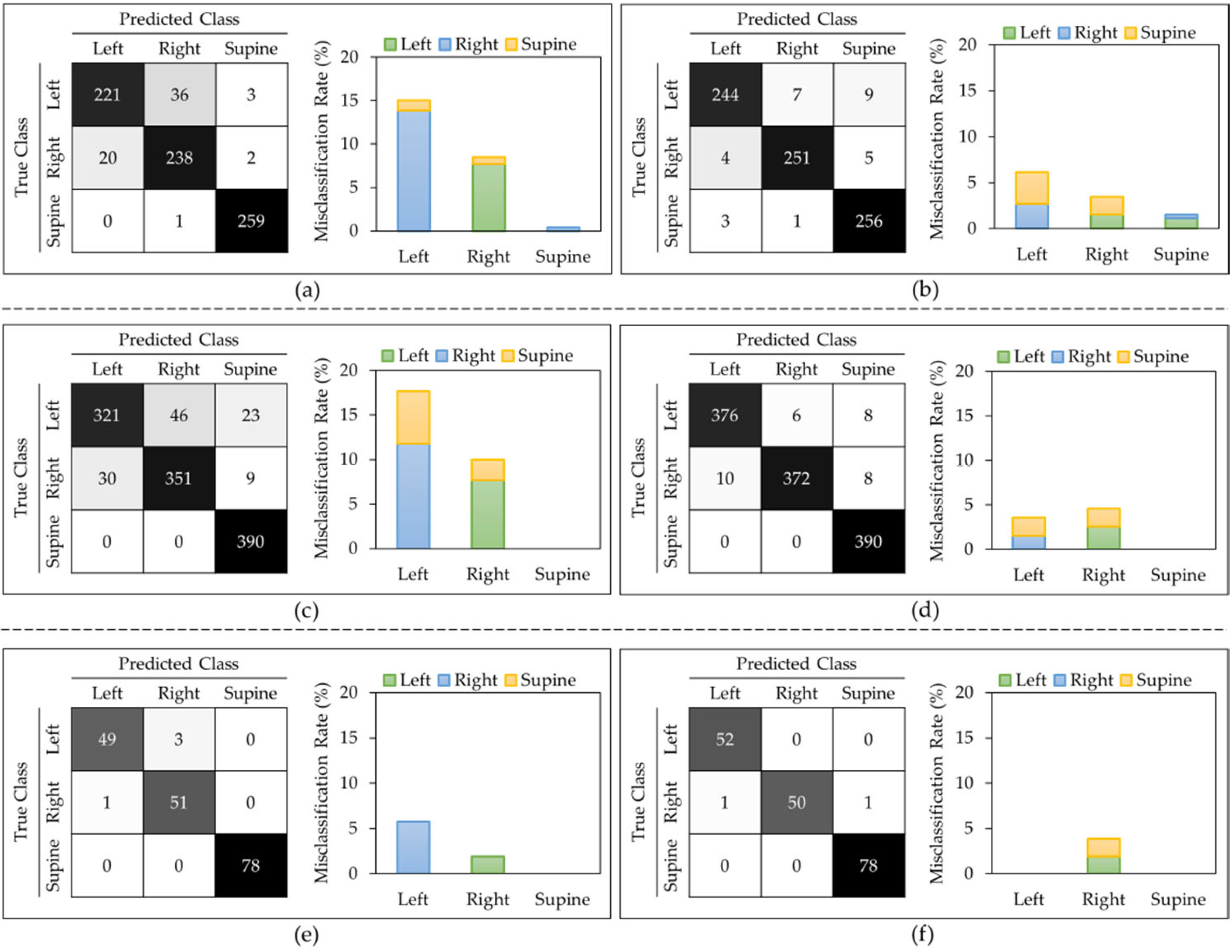

Figure 2 shows the confusion matrices and misclassification rates for all five down-sampling repetitions (52 videos per class), all five over-sampling repetitions (78 videos per class), and the class-weight model (52 videos each for the right-side and left-side classes, 78 videos for the supine class), with both LOSO and 5-fold cross validation. Of the three approaches evaluated for the I3D model, the weighted model consistently provided the highest accuracies: 97.80 ± 4.50% for LOSO and 98.90 ± 1.50% for 5-fold. As shown in Table 1, all other performance metrics followed the same trend. Therefore, assigning weights to each class yielded the best I3D model for classifying sleeping positions. In addition, not only did average performance drop in LOSO compared to 5-fold, but all models also had higher standard deviations in LOSO, which may reflect differences between subjects’ data.
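
The three imbalance-handling strategies can be sketched as follows. This is a minimal illustration, assuming random sampling without replacement for down-sampling, random duplication for over-sampling, and the common inverse-frequency ("balanced") weighting n / (k · n_c); the exact weighting formula used in the study is an assumption here, and all function names are hypothetical. The example labels use 78 supine and 52 left and right samples, mirroring the per-class counts reported above:

```python
import random
from collections import Counter
from typing import Dict, List, Sequence


def _indices_by_class(labels: Sequence[str]) -> Dict[str, List[int]]:
    by_class: Dict[str, List[int]] = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    return by_class


def downsample(labels: Sequence[str], seed: int = 0) -> List[int]:
    """Randomly keep, without replacement, as many samples per class as the smallest class has."""
    rng = random.Random(seed)
    by_class = _indices_by_class(labels)
    n_min = min(len(idx) for idx in by_class.values())
    kept: List[int] = []
    for idx in by_class.values():
        kept.extend(rng.sample(idx, n_min))
    return sorted(kept)


def oversample(labels: Sequence[str], seed: int = 0) -> List[int]:
    """Keep every sample and randomly duplicate minority-class samples up to the largest class size."""
    rng = random.Random(seed)
    by_class = _indices_by_class(labels)
    n_max = max(len(idx) for idx in by_class.values())
    out: List[int] = []
    for idx in by_class.values():
        out.extend(idx)
        out.extend(rng.choices(idx, k=n_max - len(idx)))
    return sorted(out)


def balanced_class_weights(labels: Sequence[str]) -> Dict[str, float]:
    """Inverse-frequency weights n / (k * n_c), so rarer classes weigh more in the loss."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {y: n / (k * c) for y, c in counts.items()}


labels = ["supine"] * 78 + ["left"] * 52 + ["right"] * 52
print(Counter(labels[i] for i in downsample(labels)))  # 52 per class
print(Counter(labels[i] for i in oversample(labels)))  # 78 per class
print(balanced_class_weights(labels))
```

Calling `downsample` or `oversample` with a different `seed` draws a different random subset, which is one way to produce repeated runs such as the five repetitions described above. Class weights would typically be passed to a weighted cross-entropy loss rather than changing the data itself.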

Figure 2 shows that, in most of the models, the left-side class was misclassified more often than the right-side class, while the supine class typically had the lowest misclassification rate.

Figure 3 displays sample frames of two misclassified videos. This figure suggests that the algorithm classified positions based on where most of the subject’s body was located on the mat. For example, when the participant lay on their left side (true class) but centered themselves on the mat, as in Figure 3b, the model predicted this position as supine.
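
The observation that predictions follow where most of the body lies can be checked with a simple pressure-weighted centroid. This is a hypothetical diagnostic, not part of the models above; it assumes each frame is a 2D array of sensor readings with columns running across the width of the mat (which edge corresponds to the subject’s left depends on the mat orientation). A side-lying subject who is centered on the mat yields a centroid near 0.5, as a supine subject would:

```python
import numpy as np


def lateral_centroid(frame: np.ndarray) -> float:
    """Pressure-weighted mean column index, scaled to [0, 1] across the mat width.

    0 is the first column, 1 the last; a value near 0.5 means the load is centred.
    """
    total = frame.sum()
    if total <= 0:
        return 0.5  # empty mat: report the centre by convention
    col_mass = frame.sum(axis=0)  # total pressure in each column
    cols = np.arange(frame.shape[1])
    return float((cols * col_mass).sum() / total / (frame.shape[1] - 1))


# Hypothetical 4 x 5 frames: load near one edge vs. load in the middle column.
edge = np.zeros((4, 5))
edge[:, 1] = 1.0
centred = np.zeros((4, 5))
centred[:, 2] = 1.0
print(lateral_centroid(edge), lateral_centroid(centred))  # 0.25 0.5
```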

For the four pre-trained 2D models, classification metrics were likewise calculated for different hyper-parameter settings, and the best models for LOSO and 5-fold cross validation were selected. All hyper-parameters were kept the same as those of the I3D model for a fair comparison.

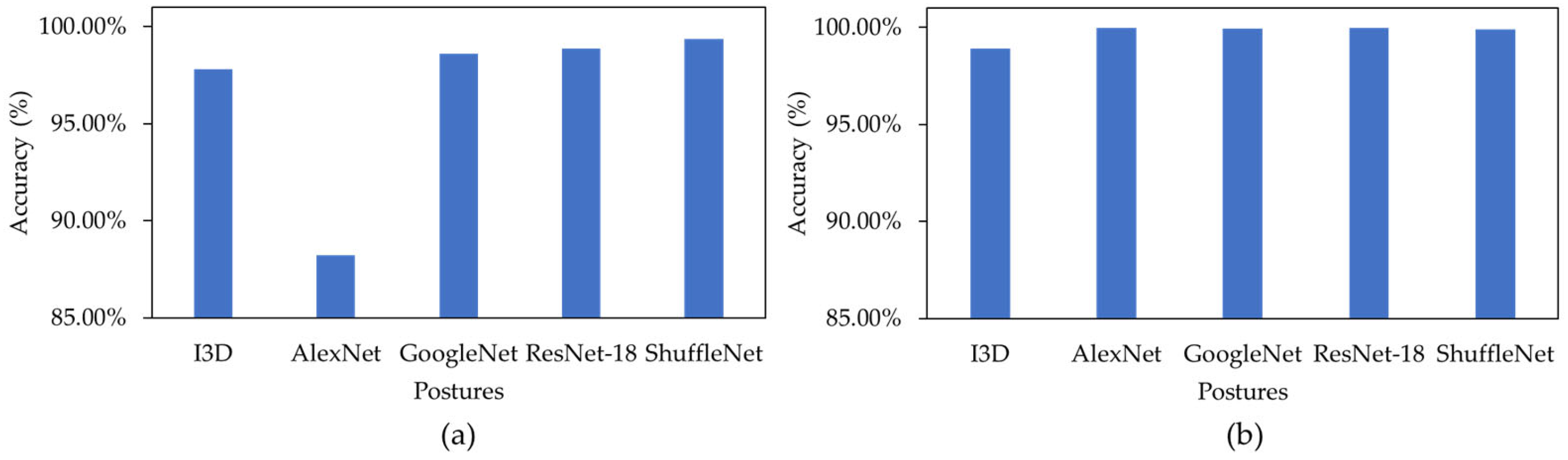

Figure 4 displays the classification metrics for all four pre-trained models under both LOSO and 5-fold cross validation. The ResNet-18 model provided the highest overall performance with the down-sampling method. As expected, performance dropped slightly with LOSO compared to 5-fold validation, regardless of model type.

Figure 5 shows the confusion matrices and misclassification rates of the best pre-trained 2D models for the five down-sampling repetitions (832 images per class), the five over-sampling repetitions (1248 images per class), and the class-weight model (832 images each for the right-side and left-side classes, 1248 images for the supine class), with both LOSO and 5-fold cross validation. The best down-sampled and over-sampled models were obtained with ResNet-18, and the best weighted models with ShuffleNet. Overall, the ResNet-18 model achieved the highest accuracy in predicting the correct postures using the down-sampling method, with an accuracy of 99.62 ± 0.37% for LOSO and 99.97 ± 0.03% for 5-fold, as shown in Figure 4a.

Figure 5 shows that in all LOSO models, every class had some misclassification, with the left-side class typically having the highest misclassification rate and the supine class the lowest. In the 5-fold models, however, the right-side class was never misclassified, whereas the supine class always had some misclassification, with a maximum rate of 0.08%.

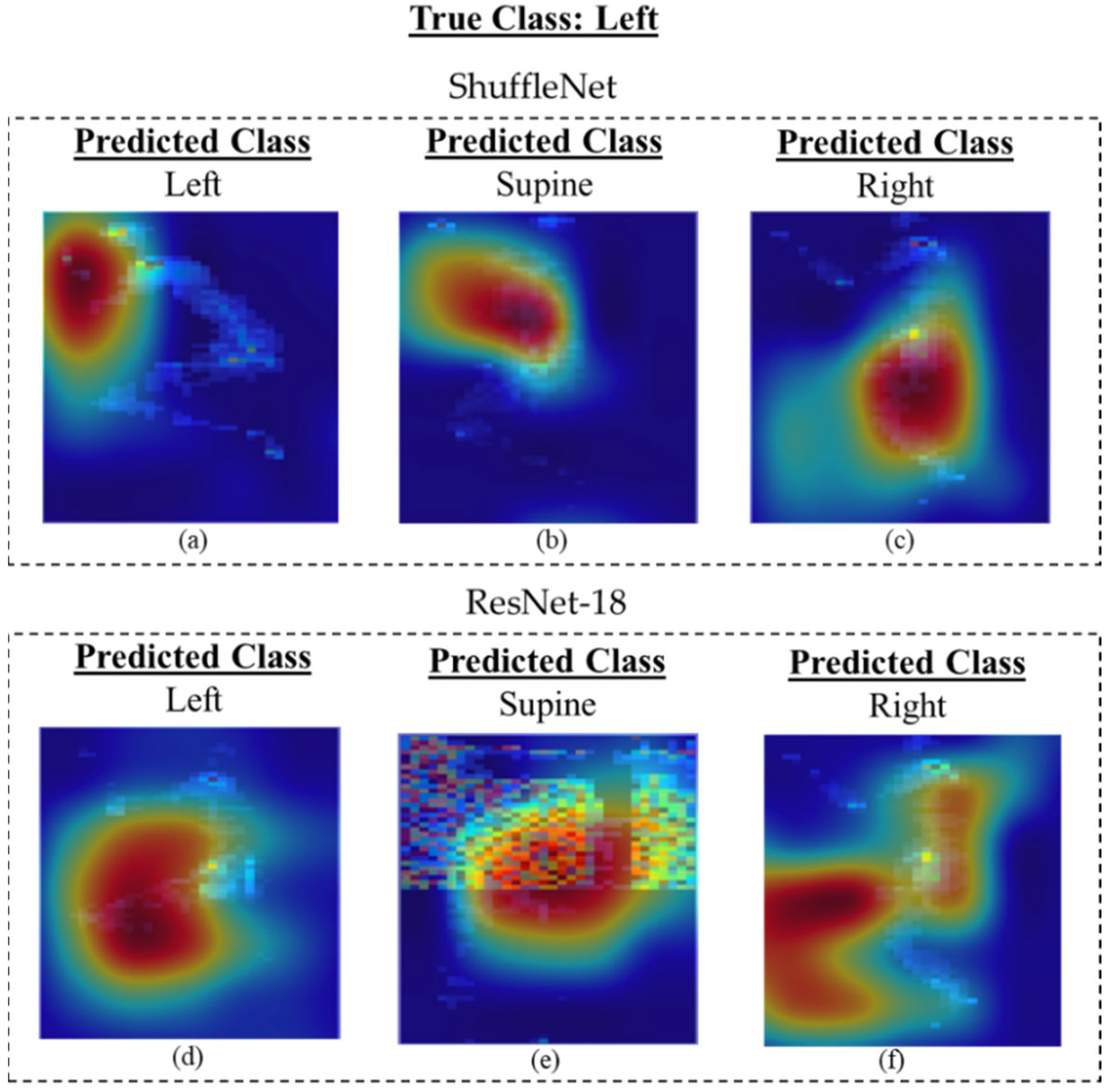

To further evaluate the best models, ResNet-18 and ShuffleNet, gradient-weighted class activation mapping (Grad-CAM) was used to highlight the image regions most important for each prediction. Grad-CAM shows which regions a model attends to when classifying an image. For the ShuffleNet model, misclassification typically occurred when the model attended to regions of the image that did not align with the location of the body in that position. For example, in Figure 6b, the model appears to focus on the center of the mattress, which would be the correct focus for the supine class; a similar case occurs in Figure 6c, where the body was inclined toward the right side of the mat. For the ResNet-18 model, misclassification occurred when the bulk of the body was not located in the region of interest. For example, in Figure 6f, the model was primarily looking for the posture toward the left side of the image, whereas most of the body was located toward the middle right of the image.
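
Grad-CAM weights each feature map of a convolutional layer by the spatially averaged gradient of the class score and keeps the positive part of the weighted sum. A minimal NumPy sketch of that final step, assuming the activations and gradients of the chosen layer (typically the last convolutional layer) have already been captured, e.g., with framework hooks; the function name is hypothetical, and upsampling the map to the input resolution for overlay is omitted:

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one layer's feature maps and the class-score gradients.

    activations, gradients: arrays of shape (K, H, W) holding the K feature maps A_k
    and dy_c/dA_k. Returns an (H, W) map scaled to [0, 1].
    """
    alphas = gradients.mean(axis=(1, 2))             # alpha_k: spatially averaged gradients
    cam = np.tensordot(alphas, activations, axes=1)  # sum_k alpha_k * A_k -> (H, W)
    cam = np.maximum(cam, 0.0)                       # ReLU: keep regions that raise the class score
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical 2-channel, 2 x 2 example: channel 0 supports the class, channel 1 opposes it.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.array([np.ones((2, 2)), -np.ones((2, 2))])
print(grad_cam(acts, grads))
```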

All 2D weighted models provided approximately 1–2% higher accuracies than the I3D model under 5-fold cross validation. Notably, to compare the 2D and 3D algorithms, the exact frames present in the 3D data were extracted as single-frame inputs for the 2D CNN models. As a result, some images in the dataset were very similar to each other, and under 5-fold cross validation, near-identical frames from the same video could appear in both the training and test sets. Therefore, it is more informative to compare the LOSO results of the 2D and 3D models, since holding out all data from a subject prevents this kind of data leakage. ShuffleNet, ResNet-18, and GoogleNet achieved approximately 1–2% higher accuracies with the weighted method than the I3D model under LOSO cross validation, as shown in Figure 7a.
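
The leakage concern can be made concrete by counting videos that contribute frames to both sides of a split. In this hypothetical sketch (4 subjects, 3 videos each, 16 frames per video; all identifiers invented), a random frame-level split shares at least one video between the training and test sets, while a subject-level split shares none:

```python
import random
from typing import List, Set, Tuple

Frame = Tuple[str, str, int]  # (subject, video, frame index)


def make_frames(n_subjects: int, videos_per_subject: int, frames_per_video: int = 16) -> List[Frame]:
    """Hypothetical frame table: every video contributes frames_per_video frames."""
    return [(f"S{s}", f"S{s}_V{v}", f)
            for s in range(n_subjects)
            for v in range(videos_per_subject)
            for f in range(frames_per_video)]


def shared_videos(train: List[Frame], test: List[Frame]) -> Set[str]:
    """Videos with frames in both the training and the test set."""
    return {video for _, video, _ in train} & {video for _, video, _ in test}


frames = make_frames(n_subjects=4, videos_per_subject=3)  # 192 frames

# One fold of a random frame-level 5-fold split.
shuffled = frames[:]
random.Random(0).shuffle(shuffled)
cut = len(shuffled) // 5
random_test, random_train = shuffled[:cut], shuffled[cut:]

# One LOSO fold: every frame of subject S0 is held out.
loso_test = [row for row in frames if row[0] == "S0"]
loso_train = [row for row in frames if row[0] != "S0"]

print(len(shared_videos(random_train, random_test)) > 0)  # True
print(len(shared_videos(loso_train, loso_test)))          # 0
```

Because the 38 test frames are not a multiple of 16, at least one video must be split across the two sets in the random case.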

Two factors may explain why the 2D models achieved slightly higher accuracy on this dataset. First, the dataset used to train, validate, and test the 2D models was substantially larger than that of the I3D model, because the 2D models used individual frames from each video (16 frames per video), whereas the I3D model treated each video as a single sample. The larger training and validation sets gave the 2D models more information to learn from. Second, when testing the 2D models, only individual frames were misclassified rather than an entire sequence of frames from a single video, whereas if specific frames within a video caused the I3D model to misclassify that video during testing, the entire video was counted as misclassified. Compared with the previous work presented in the Introduction, our proposed algorithms achieved higher performance in classifying the three postures. This could be due to a variety of reasons, such as the larger number of subjects, hyper-parameter tuning, and the use of pre-trained models.
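
The second factor is a matter of scoring granularity. As a rough illustration only, the sketch below scores the same hypothetical predictions per frame and per video, using a majority vote over frames as a stand-in for a single video-level decision (the I3D model does not vote over frames; this proxy and the function names are assumptions):

```python
from collections import Counter
from typing import Dict, List


def frame_accuracy(preds: Dict[str, List[str]], truth: Dict[str, str]) -> float:
    """Each frame is scored on its own, as when the 2D models are tested."""
    correct = sum(p == truth[v] for v, frame_preds in preds.items() for p in frame_preds)
    total = sum(len(frame_preds) for frame_preds in preds.values())
    return correct / total


def video_accuracy_majority(preds: Dict[str, List[str]], truth: Dict[str, str]) -> float:
    """Each video gets one label (majority vote over its frames) and is scored once."""
    correct = sum(Counter(frame_preds).most_common(1)[0][0] == truth[v]
                  for v, frame_preds in preds.items())
    return correct / len(preds)


# Hypothetical test set: four 16-frame videos; in V1 the subject lies on the left
# side but is centred on the mat, so 9 of its frames are predicted as supine.
truth = {"V1": "left", "V2": "left", "V3": "right", "V4": "supine"}
preds = {
    "V1": ["supine"] * 9 + ["left"] * 7,
    "V2": ["left"] * 16,
    "V3": ["right"] * 16,
    "V4": ["supine"] * 16,
}
print(frame_accuracy(preds, truth))           # 0.859375
print(video_accuracy_majority(preds, truth))  # 0.75
```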

Although these algorithms achieved high scores in distinguishing between three in-bed sleeping postures, some limitations should be recognized. First, only three postures were considered; the prone position, another common sleeping posture, was not included. Many papers exclude the prone position because it is often confused with the supine position, so including it would likely lower the accuracy. Additionally, the data were collected from healthy participants with no history of pressure injuries, aged between 19 and 34 years, with heights between 169 and 186 cm and weights between 63 and 100 kg. Therefore, the study sample may not represent the population most likely to use a pressure mat for pressure injury monitoring. Furthermore, participants held prescribed sleeping positions for 2 min, which is not a realistic use case for this system. In the future, it would be more beneficial to collect data in an overnight study, where participants can lie in their normal sleeping postures, to train a model on more realistic positions. It should also be noted that each class contained different sub-classes corresponding to sub-positions, which were not considered in the models of this study; we plan to address this in future work. Therefore, a more diverse dataset is required, one that includes the prone position and participants from the aging population in an overnight study.

Table 2 compares previous studies that used smart mats to classify three main in-bed postures with various algorithms and cross validation techniques. As shown in Table 2, all previous studies validated their models with either k-fold or hold-out techniques, even though the final models are expected to predict the postures of new individuals; it is therefore important to use a subject-independent validation technique such as LOSO. Although the model proposed in [19] achieved higher accuracy than all other previous studies in this comparison, this may not be a fair comparison, as different studies used different validation techniques and datasets. For example, results obtained with the hold-out method depend directly on the type and quality of the data (50%) used to test the model. Among the k-fold results, our proposed models provided the best performance for both the 2D and 3D networks.

4. Conclusions

An Inflated 3D (I3D) model was used to classify in-bed sleeping posture videos into supine, right-side, and left-side classes. The 5-fold and leave-one-subject-out cross validation methods were used to evaluate the models. Because the dataset was imbalanced, down-sampling, over-sampling, and class weighting were applied with each cross validation method to determine the best way to handle the data. The weighted model achieved the highest accuracies, 98.90 ± 1.05% and 97.80 ± 2.14% for 5-fold and LOSO, respectively. For comparison with the I3D model, four pre-trained 2D deep learning models were used: AlexNet, ResNet-18, GoogleNet, and ShuffleNet. ResNet-18 achieved the highest accuracy, 99.62 ± 0.37% for LOSO, with down-sampling. However, for a more direct comparison among weighted models, ShuffleNet achieved the highest accuracy, 99.35 ± 0.43% for LOSO. Although these models provide a strong basis for classifying various postures, several research gaps remain to be addressed, primarily regarding the dataset, which could then support a more in-depth analysis. These include (1) collecting data from participants in various settings, such as at home, in hospitals, and in long-term care facilities, to better represent realistic data; (2) collecting data on mattresses of varying stiffness, to evaluate the efficacy of a smart mat and ensure that the collected data are consistent across environments; (3) adding common bedding items, such as blankets and pillows, to create a more realistic database; and (4) collecting overnight data to obtain a more holistic dataset that includes non-standardized positions as well as transitions between positions. This would enable better analyses and, ultimately, models that can be used in practice.
Eventually, these models could serve as a preventive measure for pressure injuries and as a monitoring tool for sleep quality. They could be used either to notify caregivers when it is time to change a patient’s position or to monitor overnight sleep and determine how much a person moves, thereby helping to evaluate the person’s sleep quality.
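
A caregiver reminder could be built on top of the posture classifier by tracking how long the predicted posture stays unchanged. A minimal sketch, assuming chronologically ordered predictions with timestamps in minutes; the function name is hypothetical and the 120-minute default is an illustrative threshold, not a value from this study:

```python
from typing import List, Optional, Tuple


def reposition_alerts(predictions: List[Tuple[float, str]],
                      max_minutes: float = 120.0) -> List[float]:
    """Return the times (minutes) at which a caregiver reminder would fire.

    predictions: chronologically ordered (time_in_minutes, posture) pairs.
    The timer restarts whenever the predicted posture changes, and at most one
    reminder fires per uninterrupted stretch in the same posture.
    """
    alerts: List[float] = []
    current: Optional[str] = None
    since = 0.0
    alerted = False
    for t, posture in predictions:
        if posture != current:
            current, since, alerted = posture, t, False
        elif not alerted and t - since >= max_minutes:
            alerts.append(t)
            alerted = True
    return alerts


# Hypothetical predictions every 30 min: supine for 2.5 h, then a turn to the left side.
stream = [(float(t), "supine") for t in range(0, 180, 30)] + [(180.0, "left"), (210.0, "left")]
print(reposition_alerts(stream))  # [120.0]
```

Because a single misclassified prediction would restart the timer in this sketch, a deployed system would presumably smooth the predictions (e.g., require a posture to persist over several readings) before treating it as a real position change.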