Robot Programming from a Single Demonstration for High Precision Industrial Insertion

Abstract

1. Introduction

- We propose a novel Programming by Demonstration (PbD) framework for high-precision robotic industrial insertion tasks. The framework first generates an imitated trajectory from human hand movements and then fine-tunes the target positioning using visual servoing.

- We reduce the object localization problem to a moving object detection problem. This allows our method to identify the image features on the object automatically, without tedious handcrafted image feature selection or any prior knowledge of the object.

- We introduce a new line feature matching approach for identifying association constraints between the demonstrator image and the imitator image. It replaces traditional feature descriptors, which are often affected by changes in lighting or background.
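To make the second contribution concrete, the following is a minimal sketch of treating localization as moving object detection. It uses plain frame differencing on tiny synthetic grayscale frames, which is a simplified stand-in and not necessarily the detector used in this work. The names `moving_region`, `make_frame`, and the `threshold` value are hypothetical and chosen only for illustration.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def moving_region(prev: Image, curr: Image, threshold: int = 25
                  ) -> Optional[Tuple[int, int, int, int]]:
    """Return the bounding box (row_min, col_min, row_max, col_max) of pixels
    whose intensity changed by more than `threshold`, or None if nothing moved.

    Assumption: a static camera and background, so any large intensity
    change between frames is attributed to the manipulated object.
    """
    rows, cols = [], []
    for r, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(row_prev, row_curr)):
            if abs(q - p) > threshold:
                rows.append(r)
                cols.append(c)
    if not rows:
        return None
    return min(rows), min(cols), max(rows), max(cols)


def make_frame(col_start: int) -> Image:
    """Synthetic 6x8 frame with a bright 2x2 'object' on a dark background."""
    frame = [[10] * 8 for _ in range(6)]
    for r in (2, 3):
        for c in (col_start, col_start + 1):
            frame[r][c] = 200
    return frame


frame_a = make_frame(1)  # object at columns 1-2
frame_b = make_frame(4)  # object moved to columns 4-5
print(moving_region(frame_a, frame_b))  # (2, 1, 3, 5)
```

Note that simple differencing marks both the old and the new object position, so the box spans columns 1 to 5. In this sketch, image features such as line segments would then be searched only inside the returned region, which is what removes the need for hand-picked features or an object model.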

2. Related Works

3. Proposed Approach

3.1. Human Demonstration Phase

3.1.1. Imitated Trajectory Generation

3.1.2. Line Feature Generation

3.2. Robot Execution Phase

3.2.1. Fine-Tuned Trajectory Generation

3.2.2. Line Feature Matching

Algorithm 1 Line Feature Matching

3.2.3. Visual Servoing

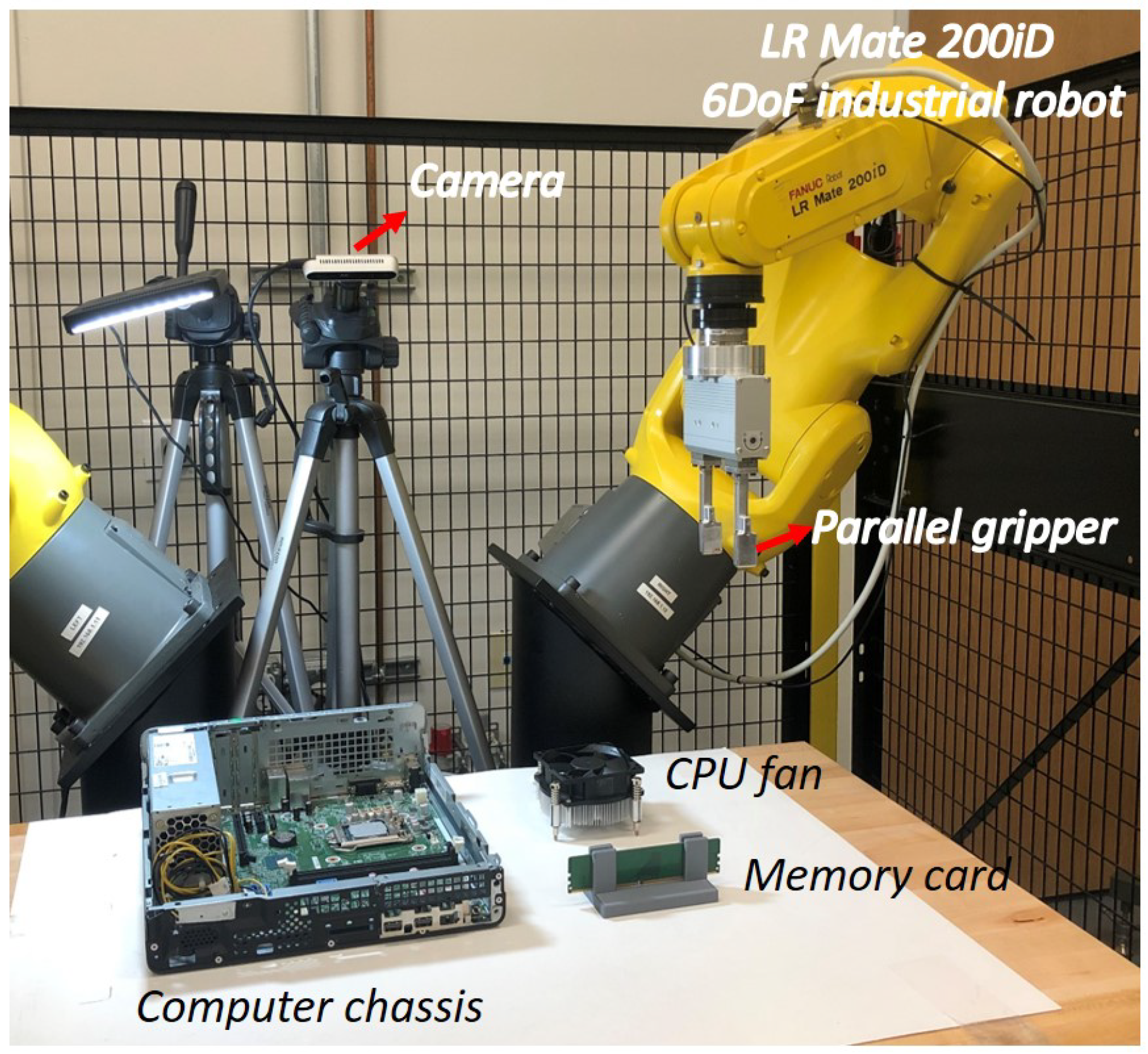

4. Experiment

4.1. Line Feature Matching

4.2. Experiments with Real Robot

- CPU fan installation: Align the four pins of the CPU fan with the four holes on the motherboard within ±1 mm.

- Memory card insertion: Insert the memory card into the slot on the motherboard within ±0.8 mm.

- Connector insertion: Insert the connector into the base within ±1.7 mm.

- Controller packing: Place the controller into the slot inside the bin within ±1 mm.
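The tolerances listed above can be read as a pass/fail criterion on the final alignment error. The sketch below encodes them that way, assuming each ± value is a symmetric bound on a scalar positioning error in millimetres. The dictionary keys and the function name `within_tolerance` are hypothetical, and the error values in the usage lines are made up for illustration rather than measured.

```python
# Tolerances in mm, copied from the task list above.
TOLERANCE_MM = {
    "cpu_fan": 1.0,
    "memory_card": 0.8,
    "connector": 1.7,
    "controller": 1.0,
}


def within_tolerance(task: str, error_mm: float) -> bool:
    """True if the signed alignment error fits the task's +/- tolerance.

    Assumption: the error is a single scalar offset; a real evaluation
    might instead check each axis or the Euclidean distance.
    """
    return abs(error_mm) <= TOLERANCE_MM[task]


# Illustrative (not measured) errors:
print(within_tolerance("memory_card", 0.9))  # False: exceeds 0.8 mm
print(within_tolerance("connector", -1.5))   # True: within 1.7 mm
```

The memory card task has the tightest bound, so an error that would still pass for the connector can fail there.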

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Task | CPU Fan | Memory Card | Connector | Controller |
|---|---|---|---|---|
| | Success Rate | Success Rate | Success Rate | Success Rate |
| Visual servoing | | | | |
| Ours | | | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, K.; Fan, Y.; Sakuma, I. Robot Programming from a Single Demonstration for High Precision Industrial Insertion. Sensors 2023, 23, 2514. https://doi.org/10.3390/s23052514
