4.1.1. Dataset Description and Data Processing

The XJTU-SY bearing dataset was collected on the bearing test rig shown in Figure 7. The rig mainly consists of an AC motor, a hydraulic loading device, a tested bearing, a motor speed controller, and two accelerometers that measure vertical and horizontal vibration [34]. The vibration signals were sampled at 25.6 kHz. The dataset contains complete run-to-failure data acquired through a series of accelerated degradation experiments. In each sampling, 32,768 data points (i.e., 1.28 s) are recorded, and sampling continues until serious failure occurs.

In this paper, the data collected at a rotational speed of 2250 rpm and a radial force of 11 kN are selected for experimental verification. Four health states are considered for fault diagnosis: normal (Nor), inner race fault (IRF), outer race fault (ORF), and bearing cage fault (BCF). Since the dataset covers the full life cycle of each bearing, the time at which each fault first occurs must be determined from the whole record. Because the RMS-3σ rule is reported to be a smooth and more accurate fault identification method [36], it is adopted to identify the initial failure time of each fault. The initial failure times of bearings 2–1, 2–2, and 2–3 (i.e., IRF, ORF, and BCF) are identified as 468, 52, and 334, respectively. Only the vibration signals recorded after each initial failure point are used to construct the fault samples. The RMS-3σ results and the corresponding initial failure points are shown in Figure 8.
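
The onset detection described above can be sketched as follows. This is a minimal illustration of one common reading of the RMS-3σ rule, not the exact procedure of reference [36]: the baseline length `n_baseline`, the consecutive-exceedance count `n_consecutive`, and the function names are assumptions introduced here, and the demo RMS curve is synthetic.

```python
import numpy as np

def rms_per_record(records):
    """Root-mean-square value of each vibration record (one row per record)."""
    records = np.asarray(records, dtype=float)
    return np.sqrt(np.mean(records ** 2, axis=1))

def first_failure_index(rms, n_baseline=30, n_consecutive=3):
    """Return the first index where the RMS stays above mu + 3*sigma, or None.

    mu and sigma are estimated from the first n_baseline records, which are
    assumed to be healthy (a hypothetical choice, not taken from the paper).
    """
    rms = np.asarray(rms, dtype=float)
    mu = rms[:n_baseline].mean()
    sigma = rms[:n_baseline].std()
    above = rms > mu + 3.0 * sigma
    for i in range(n_baseline, len(rms) - n_consecutive + 1):
        # Require several consecutive exceedances to ignore isolated spikes.
        if above[i:i + n_consecutive].all():
            return i
    return None

# Synthetic RMS curve: 60 healthy records with small ripple, then a step increase.
rms_demo = np.concatenate([1.0 + 0.01 * np.sin(np.arange(60)), np.full(40, 1.5)])
onset = first_failure_index(rms_demo)
print("detected onset index:", onset)
```

Requiring several consecutive exceedances is a design choice that trades a short detection delay for robustness against single noisy records.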

Each sample should cover at least one full revolution of the shaft. In this dataset, about 683 sampling points are collected per revolution according to the following equation:

$$N = \frac{60 f_z}{n} = \frac{60 \times 25600}{2250} \approx 683$$

where $N$ is the number of sampling points per revolution, $f_z$ represents the sampling frequency (Hz), and $n$ represents the rotating speed (rpm).

Therefore, a sliding window with a constant length of 1280 points is adopted to construct samples. Z-score normalization is then applied to the time series samples, which are converted to time-frequency images with a size of 64 × 64 × 3 based on CWT and subsequent feature fusion. Finally, 750 samples of each class are obtained, of which 250 are randomly selected to form the testing dataset. From the remaining 500 samples of each class, different numbers of minority samples are selected for IDCGAN model training according to the experiment settings. For example, if 500 samples are used as the majority class, 100 minority samples are selected for IDCGAN model training, giving an imbalance ratio of 0.2.
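
The segmentation and normalization steps can be sketched as below. This is an illustrative sketch only: the window step (non-overlapping here) is an assumption because the text does not state the overlap, the function names are hypothetical, the input is a placeholder sine wave rather than XJTU-SY data, and the CWT and feature fusion stages are omitted.

```python
import numpy as np

def points_per_revolution(fs_hz, speed_rpm):
    """Number of samples recorded during one shaft revolution."""
    return 60.0 * fs_hz / speed_rpm

def sliding_windows(signal, length=1280, step=1280):
    """Cut a 1-D signal into fixed-length windows (step is an assumption)."""
    signal = np.asarray(signal, dtype=float)
    n = (len(signal) - length) // step + 1
    return np.stack([signal[i * step:i * step + length] for i in range(n)])

def zscore(windows, eps=1e-12):
    """Normalize each window to zero mean and unit standard deviation."""
    mean = windows.mean(axis=1, keepdims=True)
    std = windows.std(axis=1, keepdims=True)
    return (windows - mean) / (std + eps)

# Placeholder record: 32,768 points sampled at 25.6 kHz, 37.5 Hz shaft frequency.
record = np.sin(2 * np.pi * 37.5 * np.arange(32768) / 25600.0)
samples = zscore(sliding_windows(record))
print(round(points_per_revolution(25600, 2250)), samples.shape)
```

A window of 1280 points spans slightly less than two revolutions at 2250 rpm, so each sample satisfies the one-revolution requirement.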

4.1.2. Model Training and Sample Generation

In the training process of IDCGAN, the batch size and the number of iteration epochs are set to 32 and 2000 for each class, respectively. Because the discriminative ability of the discriminator is significantly stronger than the generative ability of the generator at the beginning of training, the TTUR strategy is adopted to balance the learning speeds of the generator and the discriminator, which improves training stability. In this paper, the learning rates of the generator and the discriminator are 0.0001 and 0.0003, respectively.

The generator and discriminator losses, the Wasserstein distance, and visualizations of the generated samples are used to show the training process. Taking the IRF class with a limited sample number of 100 as an example, 100 real IRF samples are randomly selected from the training database as the minority class and used to train the IDCGAN model. The training process is shown in Figure 9. It can be seen that the discriminator loss, the generator loss, and the Wasserstein distance converge quickly, and the quality of the generated samples improves as the iteration number increases. The Nash equilibrium is almost reached when the iteration number increases to 1750, at which point the generated samples are very close to the real samples.

4.1.3. Evaluation of the Generated Samples

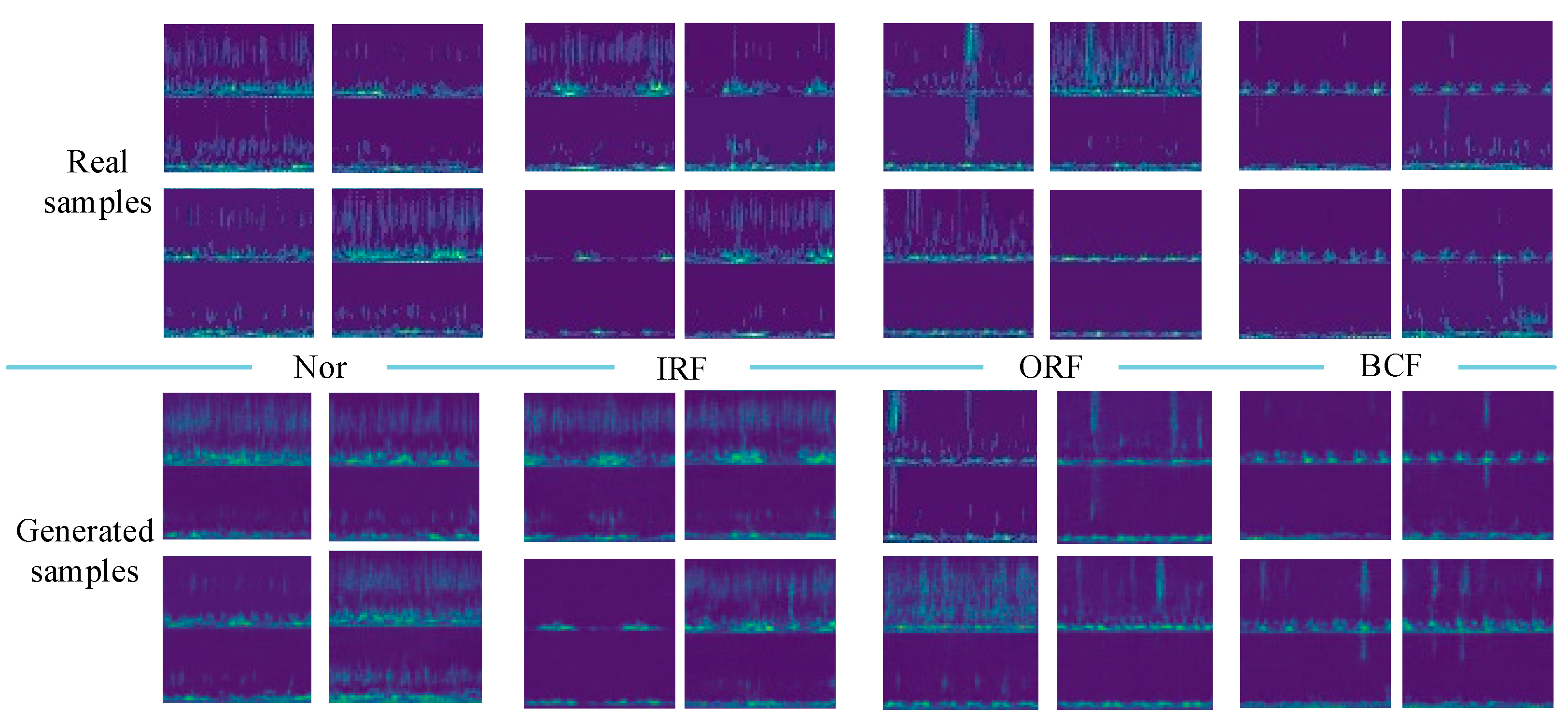

To evaluate the quality of the generated samples, visualization results of the real samples and the generated samples are presented. Taking the limited sample number of 100 as an example, 100 real samples of each health state are adopted to train IDCGANs and then generate new synthetic samples.

The comparison between the generated samples and the corresponding real samples is shown in Figure 10. The generated samples of all classes are consistent with the real samples in their time-frequency features while retaining some diversity, which indicates that the IDCGANs have captured the distribution of the initial training data and can generate high-quality synthetic samples.

To quantitatively evaluate the quality of the generated samples and show the superiority of the proposed feature fusion strategy, the experiments in Table 1 are conducted based on the GAN-train strategy [37]. In all of these experiments, IDCGAN is used for sample generation and CBAM-ResNet is used for fault classification. In experiment A, time-frequency images of vibration signals from a single sensor are used to train the classifier, while the other experiments are based on fused time-frequency images from multiple sensors. In experiment B, real samples are used for fault identification; the generated samples in the other experiments are obtained from IDCGANs trained with the same real samples as in experiment B. Following reference [37], the diagnosis accuracies of experiments B and C are called GAN-base and GAN-train, respectively. The closer the GAN-train value is to the GAN-base value, the more similar the distribution of the synthetic samples is to that of the real samples. Experiments D and E are adopted to show the similarity and diversity between real and generated samples and can be regarded as an extension of the GAN-train strategy.

As shown in Table 1, the diagnosis accuracy with information from a single sensor (experiment A) is only 89.78% with a large standard deviation of 2.51%. Adopting the feature fusion method increases the accuracy to 96.89% and reduces the standard deviation to 1.21%. This indicates that the proposed fusion method provides more effective features, which helps the classifier learn more important information. Comparing experiments B and C, the GAN-train value obtained with all generated samples is about 92.35%, which is close to the GAN-base value of 96.89% obtained with real samples. In addition, in experiment D, which uses half real samples and half generated samples of all classes, both the diagnosis accuracy and the standard deviation are almost equal to the GAN-base values. This further demonstrates the high similarity between the generated and real samples. In experiment E, the diagnosis accuracy is further improved to 98.83% and the standard deviation is reduced to 0.61%, which indicates the diversity of the samples generated by IDCGAN. From the above, it is reasonable to believe that IDCGAN can generate samples that are both similar to the real samples and diverse, and that these samples can be used to supplement minority classes.

4.1.4. Single-Class Imbalanced Fault Diagnosis

Different evaluation criteria have been adopted to evaluate the similarity between the synthetic samples and the real samples. However, relying on such criteria can lead to excessive similarity between the synthetic samples and the real samples, which ignores sample diversity and causes information redundancy [38]. Therefore, the generated samples are directly applied to expand the initially limited dataset, and the diagnosis accuracy is used as an indirect criterion to evaluate the generation ability of different generative models.

To verify the effectiveness of the proposed method in cases with limited fault samples, single-class and multi-class imbalanced fault diagnosis experiments are established. Different numbers of initial fault samples are adopted to train the generative models, which are then used to generate new samples for data augmentation and achieve balanced data. Considering that the ORF occurs more frequently than other faults in the full life cycle bearing experiments of the XJTU-SY dataset, ORF is selected as the minority class in the single-class imbalanced experiments. The majority classes contain 500 samples each. The initial minority samples are selected with imbalance ratios of 0.5, 0.2, and 0.1, corresponding to 250, 100, and 50 samples, and are used to train the generative models, which then expand the minority class to 500 samples. To demonstrate the superiority of the proposed method, different data augmentation methods, i.e., SMOTE, adaptive synthetic sampling (ADASYN), SN-assisted DCGAN (SN-DCGAN), and DCGAN based on Wasserstein distance with GP (WGANGP), are adopted for comparison. Meanwhile, different classifiers, i.e., CNN, ResNet, and CBAM-ResNet, are used in comparative experiments to reduce the impact of the classifier on the results and verify the superiority of the improved classifier.
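
The relation between the imbalance ratio, the number of real minority samples, and the number of synthetic samples needed to reach balance can be checked with a short calculation. The helper name `augmentation_plan` is hypothetical; the majority size of 500 and the three ratios are taken from the text.

```python
MAJORITY_SIZE = 500  # samples per majority class, as stated in the text

def augmentation_plan(imbalance_ratio, majority_size=MAJORITY_SIZE):
    """Return (real minority samples, synthetic samples needed for balance)."""
    n_real = round(imbalance_ratio * majority_size)
    return n_real, majority_size - n_real

plan = {ratio: augmentation_plan(ratio) for ratio in (0.5, 0.2, 0.1)}
for ratio, (n_real, n_synthetic) in plan.items():
    print(f"ratio {ratio}: {n_real} real + {n_synthetic} synthetic")
```

At a ratio of 0.1, nine out of ten minority training samples are synthetic, which is why the quality of the generative model matters most in that setting.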

The CNN model is the CBAM-ResNet structure without the skip connections and CBAM, while the ResNet is the CBAM-ResNet structure without CBAM only. Since the generation mechanisms of SMOTE and ADASYN are quite different from those of GAN-based methods and these methods cannot generate synchronized signals, experiments based on a single sensor were also conducted. The diagnosis results are detailed in Table 2, where "initial samples" means that the imbalanced samples are used directly to train the classifiers.

As shown in Table 2, the diagnosis accuracy based on the initial imbalanced samples declines markedly as the degree of imbalance increases. For example, the accuracy based on multiple sensors decreases from 97.38% to 94.3% when the imbalance ratio decreases from 0.5 to 0.1, while the accuracy based on a single sensor shows a similar trend at a much lower level, falling from 94.83% to 89.8%. Moreover, the accuracy with multiple sensors declines more slowly (3.08 percentage points) than that with a single sensor (5.03 percentage points).

The accuracies increase significantly once the initial imbalanced data are expanded by the different synthesis methods, regardless of whether data from a single sensor or from multiple sensors are adopted. It can be concluded that imbalanced samples have a great influence on classifier performance and that data augmentation can attenuate this influence. Among the generative methods, the proposed IDCGAN obtains the best diagnosis performance with any of the adopted classifiers. Taking the imbalance ratio of 0.1 and the ResNet classifier as an example, the proposed IDCGAN with multiple sensors achieves a diagnostic accuracy of 98.49%, which is much higher than the 94.3% obtained with the initial samples. It is also higher than the accuracies of SN-DCGAN and WGANGP, which are 98.12% and 97.04%, respectively. Taking an imbalance ratio of 0.2 and IDCGAN as an example, the accuracy based on CBAM-ResNet is 99.54%, which is higher than the accuracies of CNN and ResNet, 98.52% and 98.61%, respectively. Furthermore, the proposed IDCGAN with CBAM-ResNet achieves the best diagnostic accuracy at almost all imbalance ratios.

The confusion matrix is adopted to better illustrate the influence of different generative models on classification. Figure 11 shows the confusion matrices based on the ResNet classifier under limited ORF conditions with an imbalance ratio of 0.1. With the initial imbalanced samples, a large number of ORF samples are misclassified as other classes. For example, 80 and 76 ORF samples are misclassified as Nor and IRF, respectively, while the majority class samples are classified correctly. This indicates that the small number of minority samples prevents the classifier from learning enough valuable information about that class. The misclassification of ORF is reduced when the synthetic samples generated by SN-DCGAN and WGANGP are adopted for data augmentation, and the number of misidentified samples is further reduced when the proposed IDCGAN is adopted.
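
For readers who want to reproduce this kind of analysis, a confusion matrix and the per-class recall can be computed as sketched below. The label vectors are toy values chosen only to illustrate a minority class being confused with other classes; they are not results from the paper, and the function names are hypothetical.

```python
import numpy as np

CLASSES = ["Nor", "IRF", "ORF", "BCF"]

def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly when the same cell is hit repeatedly.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def per_class_recall(cm):
    """Fraction of each true class that is predicted correctly."""
    return cm.diagonal() / cm.sum(axis=1)

# Toy labels (not paper data): two ORF samples are confused with Nor and IRF.
y_true = [0, 0, 1, 1, 2, 2, 2, 2, 3, 3]
y_pred = [0, 0, 1, 1, 2, 0, 1, 2, 3, 3]
cm = confusion_matrix(y_true, y_pred)
recall = per_class_recall(cm)
for name, value in zip(CLASSES, recall):
    print(f"{name}: recall = {value:.2f}")
```

Reading the ORF row of such a matrix shows directly which classes absorb the misclassified minority samples.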

In addition, single-class imbalanced fault diagnosis experiments based on IRF or BCF with an initial imbalance ratio of 0.2 are also performed. The results are shown in Table 3. The diagnosis results are consistent with those based on ORF imbalanced data. For the single sensor, IDCGAN has higher diagnostic accuracy than the other data synthesis methods, i.e., SMOTE and ADASYN, whichever fault is limited. Moreover, the diagnosis accuracy of all experiments based on IDCGAN is above 98%, while the initial accuracy with imbalanced data is less than 93.5%. For multiple sensors, IDCGAN also achieves the highest accuracy compared with the other data synthesis methods, i.e., SN-DCGAN and WGANGP, with the same classifier. In addition, the diagnosis accuracy based on CBAM-ResNet is the highest among the three classifiers. For example, the accuracy based on IDCGAN and CBAM-ResNet reaches 99.41% after data augmentation in the BCF-limited case, which is 4.32% higher than that of CNN and 1.45% higher than that of ResNet. In conclusion, the proposed IDCGAN captures the time-frequency sample distribution well and generates high-quality samples for data augmentation, and the proposed CBAM-ResNet model further enhances the diagnosis accuracy.

4.1.5. Multi-Class Imbalanced Fault Diagnosis

In engineering practice, several fault classes are often imbalanced at the same time. Thus, experiments based on multi-class imbalanced faults are performed to demonstrate the effectiveness of the proposed method. In this section, different imbalance ratios of multiple fault classes are selected as the initial training data to simulate multi-class imbalance cases, which are detailed in Table 4. The majority classes have 500 samples each, and the presented results are based on expanded balanced data except for those labeled as initial samples. The corresponding experimental results are shown in Figure 12. It can be seen that the accuracies with three limited fault classes (i.e., experiments A, B, and C in Table 4) are worse than those with only two limited fault classes (i.e., experiments D, E, and F in Table 4), especially when the initial samples are adopted for training the classifiers. Misclassification therefore decreases as the number of minority classes decreases. Furthermore, the results of all multi-class imbalanced fault diagnosis experiments in Figure 12 indicate that the IDCGAN with CBAM-ResNet proposed in this paper achieves the best diagnosis results, which is consistent with the results of the single-class imbalanced experiments. These results show that the proposed method also has excellent diagnosis performance on multi-class imbalanced datasets.

To better present the features of different generative methods and different classifiers, t-Distributed Stochastic Neighbor Embedding (t-SNE) is used as a qualitative method to evaluate the extracted high-dimensional sample features. The t-SNE visualization results of the features extracted before the fully connected layer in experiment A of Table 4 are shown in Figure 13. Each health state is represented by a distinct color. With the initial samples, the clusters of different health states clearly overlap, meaning that the classifier trained with imbalanced data cannot identify samples of different health states well. Comparing Figure 13a–c, it can be seen that the boundaries become clearer once the classifier is trained with expanded balanced data. However, many overlaps remain when the CNN and the ResNet are adopted. The overlap is reduced by using the enhanced CBAM-ResNet, as shown in Figure 13d,e, which also indicates the superiority of the proposed CBAM-ResNet. Compared with SN-DCGAN and WGANGP, the feature clusters are more compact when IDCGAN is applied for data augmentation. This indicates that self-attention helps to improve the quality of the generated samples, which in turn helps the classifier to better learn the deep features of the samples.