Abstract

Using force myography (FMG) to monitor volumetric changes in limb muscles is a promising and effective alternative for controlling bio-robotic prosthetic devices. In recent years, research has focused on developing new methods to improve the performance of FMG technology in the control of bio-robotic devices. This study aimed to design and evaluate a novel low-density FMG (LD-FMG) armband for controlling upper limb prostheses. The study investigated the effect of the number of sensors and the sampling rate on the performance of the newly developed LD-FMG band. The band was evaluated by detecting nine hand, wrist, and forearm gestures at varying elbow and shoulder positions. Six subjects, including both fit and amputated individuals, participated in this study and completed two experimental protocols: static and dynamic. The static protocol measured volumetric changes in the forearm muscles with the elbow and shoulder in fixed positions, whereas the dynamic protocol included continuous motion of the elbow and shoulder joints. The results showed that the number of sensors significantly affects gesture prediction accuracy, with the best accuracy achieved by the 7-sensor FMG band. The sampling rate had a smaller influence on prediction accuracy than the number of sensors. Additionally, variations in limb position greatly affect the classification accuracy of gestures. The static protocol achieved an accuracy above 90% for nine gestures. Among the dynamic conditions, shoulder movement showed the lowest classification error, compared with elbow and elbow–shoulder (ES) movements.

1. Introduction

The human upper limb is a dexterous organ through which activities of daily living (ADLs), such as handshaking, driving, and grasping or lifting objects, can be performed [1]. It is also important for communicating information or emotions, for example through sign language. Losing the use of an upper limb can be devastating, as it limits a person’s ability to perform ADLs. Various upper limb prosthetic devices, including body-powered and externally powered prostheses, have been developed to restore functionality. Body-powered prostheses use harness systems and are operated by movements of intact body parts; however, they offer limited functionality and require considerable physical effort from the user [2]. Externally powered prostheses, particularly myoelectric devices, provide greater dexterity, better cosmesis, and easier operation than body-powered prostheses. Various research-based and commercially available prosthetic devices with multiple degrees of freedom (mDOFs) have been developed recently. Among these, the best-known commercially available upper limb prosthetic hands are Otto Bock’s Michelangelo hand [3], Steeper Group’s Bebionic V3 [4], and Touch Bionics’ i-Limb [5].

Myoelectric prosthetic devices require an interface that converts user intent into control signals. Commonly adopted sensing mechanisms include surface electromyography (sEMG) [6], ultrasound imaging [7], and force myography (FMG). Of these, sEMG is the most widely used, whereas FMG is relatively new. FMG is also known as topographic pressure map (TPM) [8], residual kinetic imaging (RKI) [9], and surface pressure mapping (SPM) [10]. It is considered a potential replacement for sEMG because of its cost-effectiveness, high signal-to-noise ratio (SNR), and low sensitivity to muscle fatigue, hair, and sweat [11,12,13]. Force myography is a non-invasive technique that uses pressure sensors to monitor volumetric changes in the musculotendinous complex during gesture contractions. These pressure sensors are either piezoresistive [14], piezoelectric [15], or capacitive [16]; resistive sensors such as force-sensitive resistors (FSRs) are the most popular and widely used in this technique [16]. The technique provides a user-friendly, cost-effective, and unobtrusive way to monitor volumetric muscle changes. Furthermore, FMG signals can be generated using commercially available pressure sensors and do not require complex signal-processing circuitry [17]. In addition, FMG sensors do not need to be placed on specific anatomical points of the muscles to acquire adequate signals [8]. For these reasons, many studies have examined FMG for monitoring muscle activity, recognizing gestures, and detecting functional tasks. Combined with machine learning and pattern recognition, this sensing mechanism has gained importance over the last decade for detecting limb activities, hand gestures, and grip repetitions.

No consistent standard has been adopted for the sensor quantity and sampling rate that an FMG system requires to detect upper limb movements effectively. Some studies [18,19,20] used low-density pressure mapping (three to eight sensors), whereas others developed customized high-density sensor arrays for FMG applications [21,22]. High-density arrays give more promising results than low-density FMG bands; however, they add complexity, weight, cost, and computational time to the system, increasing the maintenance and manufacturing costs of prostheses. Low-density FMG bands are therefore typically used to recognize a limited number of gestures. Sampling rates vary among studies from 6 Hz to 1 kHz. A sampling rate of 6 Hz is sufficient for static gesture recognition, but higher rates are recommended for dynamic gestures involving multiple hand, forearm, and elbow movements or their combinations [16].

FMG technology has been used to detect upper limb activities, including finger forces [23], fine finger movements [24], grip strength [25], hand gesture classification [26], and monitoring of wrist and forearm gestures [27]. Most of these studies were performed under static limb conditions; for example, subjects were seated in a comfortable chair with their elbow and shoulder in fixed positions. Such static conditions yield higher gesture classification accuracies but are far from real-life scenarios. Users must move the limb in space to perform ADLs, and because the same gesture produces different volumetric muscle patterns at different limb positions and orientations, classification accuracy degrades when a classifier trained under static conditions is tested at other orientations or during dynamic movements. Dynamic protocols were therefore developed to train the classifier on multiple arm positions or orientations so that gesture patterns can be recognized across them. Radmand et al. [28] were the first to develop a dynamic protocol for FMG-based techniques; their study classified a set of eight hand and wrist gestures using static and dynamic protocols. Later, Ferigo et al. [29] introduced a dynamic protocol covering generic humeral and transverse planes.

Ahmadizadeh et al. [26] similarly used a dynamic protocol to train their classifier on ten hand, wrist, and forearm gestures. All these studies showed that limb position variation affects gesture classification accuracy. Table 1 summarizes previous FMG-based studies.

Table 1.

Literature review of FMG-based studies.

This study investigates the impact of sensor quantity and sampling rate on the performance of a novel low-density force myography (LD-FMG) armband for controlling upper limb prostheses. It also examines the effect of limb position variation on the classification accuracy of upper limb gestures, including fine finger movements and hand movements. A dynamic protocol was designed to assess the impact of elbow, shoulder, and combined elbow–shoulder movements on gesture classification. The results are intended to provide insights into the fundamental design parameters of FMG technology and its potential for use in bio-robotic devices.

2. Materials and Methods

2.1. FMG Sensors

FMG technology, which measures volumetric changes in muscles, often uses force-sensitive resistors (FSRs) as the sensing element. Their cost-efficiency, light weight, precision, and small size make them suitable for bio-robotic applications [40]. This study used seven commercially available FSRs from Interlink (model No. 402) to develop the FMG armband. These FSRs have a 12.07 mm² sensing area and a force-sensing range of 0.2 to 20 N [41]. However, FSRs are flexible and can bend when placed on human skin, leading to uneven pressure distribution [16,42,43]. To address this issue, a stiff mechanical housing was designed for each FSR to prevent bending and to ensure that muscle pressure is focused solely on the sensitive area of the sensor.

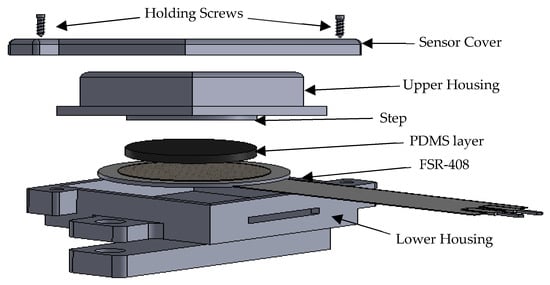

The housing consists of two halves, with the sensor embedded in the lower half.

The upper housing has a circular step 12 mm in diameter with a 1.2 mm polydimethylsiloxane (PDMS) layer at its lower end. Its upper end is the force-sensing tip that transmits muscle–tendon force to the FSR. The housing is 3D printed in polylactic acid (PLA), which provides rigidity and, being biocompatible, is safe for direct skin contact [44]. The assembled housing measures 2.3 cm × 2 cm × 1 cm. As in previous studies [19,27], the sensors were mounted on a Velcro strap and spaced evenly around the subject’s forearm. Figure 1 shows an exploded view of the sensor assembly.

Figure 1.

Exploded view of FSR sensor with housing.

2.2. Data Acquisition and Transfer Setup

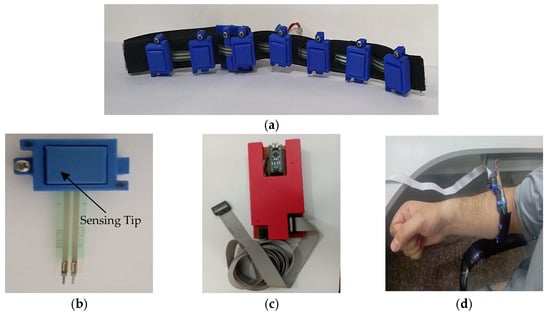

In this study, a voltage divider circuit, which has proven effective in previous research [31,45], was used to extract the signals from the FSR sensors. The circuit includes a ground resistor (Rg) that sets the sensitivity of the FSR, i.e., it determines the relationship between the maximum force before saturation and the maximum output voltage. An Rg of 20 kΩ was selected in this study. The FSR signals were passed through an operational amplifier (op-amp) and quantified by an Arduino Nano board, which features a 16 MHz ATmega328 microcontroller and a 10-bit analog-to-digital converter (ADC). The complete hardware setup is shown in Figure 2a–d.
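The divider relationship can be made concrete with a short sketch. It assumes a topology that the text does not spell out: the FSR forms the upper leg of the divider between the supply and the ADC input, and Rg forms the lower leg to ground, so the output rises as applied force lowers the FSR resistance. The 5 V supply/reference voltage, the ideal ADC transfer function, and the example FSR resistances are also assumptions; only Rg = 20 kΩ and the 10-bit resolution come from the text.

```python
R_G_OHM = 20_000.0  # ground resistor Rg reported in the text
VCC_V = 5.0         # assumed supply / ADC reference voltage (not stated in the text)
ADC_BITS = 10       # 10-bit ADC of the ATmega328


def divider_output(r_fsr_ohm: float, r_g_ohm: float = R_G_OHM, vcc_v: float = VCC_V) -> float:
    """Voltage across Rg when the FSR is the upper leg of the divider (assumed topology).

    Higher force -> lower FSR resistance -> higher output voltage.
    """
    return vcc_v * r_g_ohm / (r_g_ohm + r_fsr_ohm)


def adc_code(v_in: float, vref_v: float = VCC_V, bits: int = ADC_BITS) -> int:
    """Ideal single-ended conversion, floor(Vin * 2^bits / Vref), clipped to the valid code range."""
    levels = 2 ** bits
    code = int(v_in * levels / vref_v)
    return max(0, min(code, levels - 1))


if __name__ == "__main__":
    # Illustrative FSR resistances only; real values depend on the sensor and the applied force.
    for r_fsr in (1e6, 100e3, 20e3, 2e3):
        v = divider_output(r_fsr)
        print(f"R_FSR = {r_fsr:>9.0f} ohm -> Vout = {v:.3f} V -> ADC code = {adc_code(v)}")
```

Under these assumptions, a larger Rg raises the output voltage for a given FSR resistance, so the output reaches full scale at a lower force; this is the sensitivity versus saturation trade-off that the choice of Rg controls.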

Figure 2.

(a) FMG armband with seven sensors (7S), (b) 3D-printed sensor, (c) data acquisition hardware, and (d) LD-FMG band fastened on a participant’s forearm.

2.3. Experimental Setup

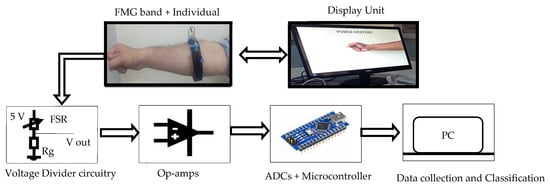

An experimental setup comprising the FMG band and the data collection and classification setup was developed. Figure 3 shows the flowchart of the experimental setup, from data recording to classification. The sensors on the FMG band detect volumetric changes in the muscles, and the resulting FMG signals are digitized by the Arduino Nano board.

Figure 3.

Flow chart of the experimental setup.

2.4. Subjects

Six adult volunteers participated in this experimental study: three were fit, and three had transradial amputations. Participants were aged 28 to 45 years; all were physically active and had no skin-related issues. The FMG band and the experimental procedures were explained thoroughly to the participants. The research was approved by the ethical committee of the University of Engineering and Technology Peshawar, Pakistan (UET Peshawar), and all participants signed an informed consent form before the experimental procedure began.

2.5. Experimental Protocol

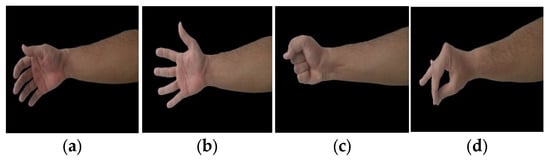

Two protocols were developed for data collection: one for static and one for dynamic limb positions. Data were collected for nine upper extremity gestures (Figure 4): five hand gestures that are commonly performed during activities of daily living (ADLs) [29,34,46], namely relax, fingers extended, power, tripod, and finger point; two wrist movements, wrist flexion and wrist extension [47]; and two forearm movements, forearm supination and forearm pronation [48]. For both protocols, the FMG band was positioned on the upper portion of the forearm. Participants could record data while standing or while sitting on an armless chair, and they were asked for feedback on the tightness and comfort of the band during the experiment. The gestures to be performed and their names were displayed on the host device, and participants were instructed to perform each gesture with consistent force. Data for both protocols were recorded on the same day for each participant.

Figure 4.

Upper limb gestures: (a) Relax, (b) fingers extended, (c) power, (d) tripod, (e) finger point, (f) wrist flexion, (g) wrist extension, (h) forearm supination, and (i) forearm pronation.

2.5.1. Static Limb Position Protocol

In the static limb position protocol, the elbow and shoulder positions were fixed while the hand, wrist, and forearm gestures described above were recorded. Subjects were asked to flex their elbow to position 2 and hold their shoulder at position 1 (Figure 5). This pose was selected because it is the elbow and shoulder position most commonly adopted during ADLs such as handshaking, reaching, and grasping objects. Data for the different sensor sets and sampling rates were collected in the sequence shown in Table 2. Each gesture was displayed on the host device for 5 s, during which subjects performed the corresponding contraction, and a 3 s rest phase was given between gestures to avoid muscle fatigue. Data were recorded in two sessions; in each session, the sequence steps in Table 2 were repeated three times. The gesture order remained the same throughout the experiment to minimize confusion. Both sessions for a participant were completed on the same day, with a 20 min break between them.
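The cue timing of a single sequence step can be sketched as follows. The 5 s hold, the 3 s rest, the fixed gesture order, and the three repetitions per session come from the text; the assumption that a rest also follows the last gesture of each repetition, and the names `Cue` and `session_schedule`, are illustrative. The sensor-set and sampling-rate sequence of Table 2 is not reproduced here.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Gesture order as listed in the text (Figure 4); kept fixed across repetitions.
GESTURES = (
    "relax", "fingers extended", "power", "tripod", "finger point",
    "wrist flexion", "wrist extension", "forearm supination", "forearm pronation",
)


@dataclass(frozen=True)
class Cue:
    repetition: int  # 1-based repetition index within the session
    gesture: str
    start_s: float   # cue onset, seconds from session start
    end_s: float     # cue offset; the rest phase starts here


def session_schedule(gestures: Sequence[str] = GESTURES, repetitions: int = 3,
                     hold_s: float = 5.0, rest_s: float = 3.0) -> Tuple[List[Cue], float]:
    """Return the cue list for one session and its total duration in seconds.

    Assumption: a rest phase follows every gesture, including the last one of a repetition.
    """
    cues: List[Cue] = []
    t = 0.0
    for rep in range(1, repetitions + 1):
        for g in gestures:
            cues.append(Cue(rep, g, t, t + hold_s))
            t += hold_s + rest_s
    return cues, t


if __name__ == "__main__":
    cues, total_s = session_schedule()
    print(f"{len(cues)} cues, {total_s:.0f} s per session")
    print(cues[0])
```

Under these assumptions, one session of three repetitions contains 27 cues and lasts 216 s (3 × 9 × (5 s + 3 s)).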

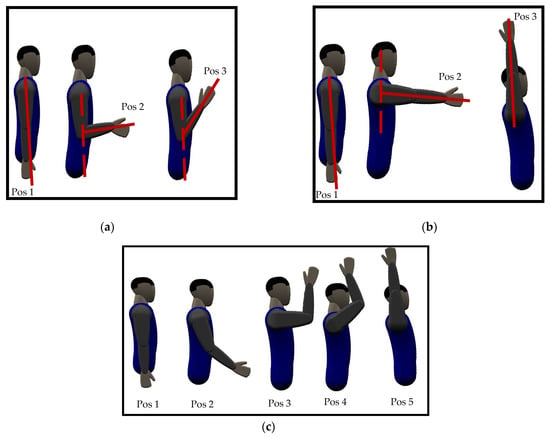

Figure 5.

Dynamic motions of upper limb (a) elbow, (b) shoulder, and (c) ES movement.

Table 2.

Data collection sequence.

Effect of the Number of Sensors

Three sensor configurations were evaluated for recognizing all nine gestures: 3, 5, and 7 sensors on the FMG band. The 3-sensor armband is termed ‘3S’, and the 5- and 7-sensor armbands are termed ‘5S’ and ‘7S’, respectively; these terms are used throughout the paper to describe LD-FMG armbands with the associated number of sensors. Because the armband is based on low-density pressure mapping, the number of sensors was limited to between three and seven. This range is consistent with the three to eight sensors reported to be sufficient for perceiving user intent in pattern recognition-based studies [20,25]. A further reason for limiting the sensor count is that each sensor adds to the stiffness of the armband; increasing the number of sensors may therefore make the band too stiff to mount on thinner forearms.

Effect of Sampling Rate

Data for all nine gestures were recorded at sampling frequencies of 5 Hz, 10 Hz, and 20 Hz, because the frequency of human arm motion is below 4.5 Hz [49]. According to the Nyquist criterion [50], the sampling frequency must be at least twice the highest frequency of the motion being sampled, and a 10 Hz sampling rate has mainly been adopted in previous studies [11,20,26] for recording static gestures. In this study, data were therefore recorded at 5 Hz (approximately equal to the frequency of human arm motion), 10 Hz (satisfying the Nyquist criterion), and 20 Hz to determine the effect of sampling rate on static hand gesture recognition.
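The sampling-rate reasoning can be checked numerically. The sketch below assumes a single sinusoidal component at the 4.5 Hz upper bound cited above and computes the Nyquist minimum and the apparent (aliased) frequency of that component at each of the three rates. Real FMG signals are not pure sinusoids, so this only illustrates the criterion; the function names are illustrative.

```python
F_MAX_HZ = 4.5                # upper bound of arm-motion frequency cited in the text [49]
RATES_HZ = (5.0, 10.0, 20.0)  # sampling rates evaluated in the study


def nyquist_min_rate(f_max_hz: float) -> float:
    """Minimum sampling rate (Hz) that avoids aliasing for content up to f_max_hz."""
    return 2.0 * f_max_hz


def aliased_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency of a pure sinusoid at f_hz after sampling at fs_hz.

    The true frequency is folded into the band [0, fs_hz / 2].
    """
    return abs(f_hz - fs_hz * round(f_hz / fs_hz))


if __name__ == "__main__":
    fs_min = nyquist_min_rate(F_MAX_HZ)
    for fs in RATES_HZ:
        status = "meets" if fs >= fs_min else "is below"
        print(f"fs = {fs:4.1f} Hz {status} the Nyquist minimum of {fs_min} Hz; "
              f"a {F_MAX_HZ} Hz component appears at {aliased_frequency(F_MAX_HZ, fs):.1f} Hz")
```

At 5 Hz, a 4.5 Hz component would appear at 0.5 Hz, whereas at 10 Hz and 20 Hz it is preserved at 4.5 Hz, since both rates exceed the 9 Hz Nyquist minimum.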

2.5.2. Dynamic Limb Position Protocol

To investigate the effect of limb position, data for the gestures above were recorded during elbow, shoulder, and combined elbow–shoulder movements. The movements were performed in the humeral plane, since most ADLs are performed in this plane [34,51]. For elbow movements, subjects were instructed to maintain the gesture while continuously moving the elbow from position 1 to position 3 (from the extended to the flexed position), as shown in Figure 5a, without moving the shoulder. Similarly, for shoulder movements, data were collected while the shoulder moved from position 1 to position 3 with the elbow held fixed. For combined elbow and shoulder movements (termed ‘ES’), subjects moved the elbow and shoulder simultaneously through positions 1-2-3-4-5, as shown in Figure 5c. For each elbow, shoulder, and ES movement, subjects maintained the gesture while gradually moving the upper limb from the starting to the final position (Figure 5) over 10 s. If a subject reached the final position before 10 s had elapsed, they continued the movement in reverse, from the final to the starting position. Data were recorded three times for each elbow, shoulder, and ES movement, with a 10 min break between movements.

2.6. Data Collection

A single trial of the static protocol at a 20 Hz sampling frequency consists of 100 samples for each of the nine gestures (5 s at 20 Hz). Similarly, a single trial of the dynamic protocol (elbow, shoulder, or ES movement) consists of 200 samples per gesture (10 s at 20 Hz). The data were normalized to the range 0 to 1 and analyzed using the Statistics and Machine Learning Toolbox of MATLAB on a Dell Core i3 laptop (2.4 GHz processor, 8 GB DDR3 RAM, Windows 10). The classification performance of the datasets acquired from both protocols was evaluated using a support vector machine (SVM) classifier, chosen because it is one of the most widely tested and adopted classifiers for bio-signal classification [16,52].
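The sample counts and the 0 to 1 scaling can be sketched as follows. The text does not state whether normalization was applied per sensor channel or over the whole recording, nor whether the minimum and maximum were taken from training data only; this sketch assumes per-channel min–max scaling over the array it is given. The function names are illustrative and do not reproduce the authors' MATLAB code.

```python
from typing import List, Sequence


def samples_per_trial(duration_s: float, fs_hz: float) -> int:
    """Number of samples recorded in one trial of the given duration."""
    return round(duration_s * fs_hz)


def minmax_per_channel(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scale each column (sensor channel) of a samples x channels array to [0, 1].

    Assumption: per-channel scaling; a constant channel is mapped to 0.0 to avoid division by zero.
    """
    n_ch = len(rows[0])
    lo = [min(r[c] for r in rows) for c in range(n_ch)]
    hi = [max(r[c] for r in rows) for c in range(n_ch)]
    return [
        [(r[c] - lo[c]) / (hi[c] - lo[c]) if hi[c] > lo[c] else 0.0 for c in range(n_ch)]
        for r in rows
    ]


if __name__ == "__main__":
    print(samples_per_trial(5, 20), samples_per_trial(10, 20))
    print(minmax_per_channel([[0, 10], [5, 20], [10, 30]]))
```

With the 7S band, one static trial of nine gestures at 20 Hz therefore yields 9 × 100 = 900 samples, each with seven sensor values.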

In addition, a k-fold cross-validation scheme (K = 5) was utilized for gesture classification. The mean classification accuracy from cross-validation is presented in this paper.
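A minimal sketch of 5-fold cross-validation follows. It assumes a stratified split, in which each fold keeps the gesture classes in the same proportions; this is a common choice for classification but is not stated in the text, and the code does not reproduce MATLAB's own partitioning. `fit_predict` is a hypothetical placeholder for any classifier, such as the SVM used here.

```python
import random
from collections import defaultdict
from typing import Callable, List, Sequence, Tuple


def stratified_kfold(labels: Sequence, k: int = 5, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs with each class spread evenly over the folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds: List[List[int]] = [[] for _ in range(k)]
    for idx in by_class.values():
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)  # round-robin assignment within each class
    splits = []
    for f in range(k):
        test = sorted(folds[f])
        test_set = set(test)
        train = [i for i in range(len(labels)) if i not in test_set]
        splits.append((train, test))
    return splits


def cv_mean_accuracy(X: Sequence, y: Sequence,
                     fit_predict: Callable[[list, list, list], list],
                     k: int = 5, seed: int = 0) -> float:
    """Mean test accuracy over k folds; fit_predict(X_train, y_train, X_test) returns predictions."""
    accs = []
    for train, test in stratified_kfold(y, k, seed):
        preds = fit_predict([X[i] for i in train], [y[i] for i in train], [X[i] for i in test])
        correct = sum(p == y[i] for p, i in zip(preds, test))
        accs.append(correct / len(test))
    return sum(accs) / len(accs)
```

For example, 100 samples for each of nine gestures give five test folds of 180 samples each, with 20 samples per gesture in every fold; each sample is tested exactly once, and the reported figure is the mean of the five fold accuracies.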

3. Results

3.1. Classification Performance of the Static Protocol

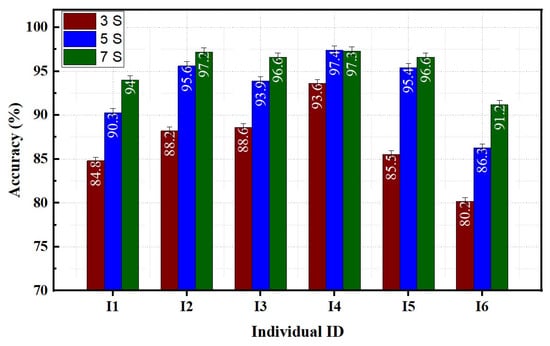

3.1.1. Effect of the Number of Sensors on Classification Accuracy

Overall, the classification accuracy across all subjects was highest on the 7S FMG band, followed by the 5S and 3S bands. The average accuracies predicted for the 3S, 5S, and 7S FMG bands were 62.1 ± 6.5%, 81 ± 4.2%, and 91.6 ± 3.7%, respectively.

The effect of the number of sensors on individual participants’ performances across three trials is illustrated in Figure 6. All three sets of sensors demonstrate average classification accuracies greater than 80%. The average accuracy increases as the number of sensors increases. All individuals achieved the maximum average accuracy on the 7S FMG band. Among all participants, participant I4 showed the highest classification accuracy across all sensor sets.

Figure 6.

Effect of sensor’s quantity on individual performance.

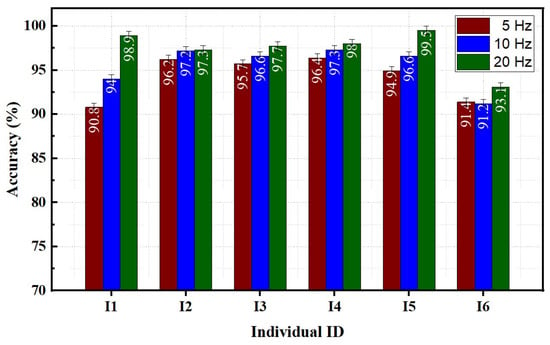

3.1.2. Effect of Sampling Rates on Classification Accuracy

The classification accuracy tends to increase as the sampling rate increases. The highest average classification accuracy of 95.6 ± 1.6% was achieved at a sampling rate of 20 Hz. In contrast, the lowest accuracy of 86.1 ± 2.9% was obtained at a rate of 5 Hz. Figure 7 illustrates the effect of the data sampling rate on the classification performance of individual participants using the 7S FMG band. The effect of the sampling rate was more pronounced in individuals I1 and I5 compared to others. An average accuracy difference of around 8% was found between the 5 Hz and 20 Hz sampling rate data for individual I1. For individual I5, an increase of 4.5% in accuracy was observed between data acquired at 5 Hz and 20 Hz sampling rates. The sampling frequency did not significantly impact the prediction of gestures for individuals I2, I3, I4, and I6.

Figure 7.

Effect of sampling rates on individual performance.

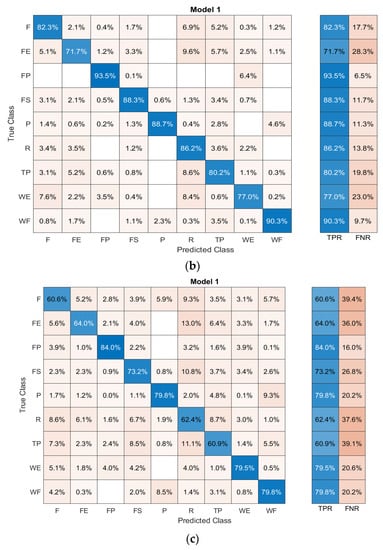

3.2. Classification Performance of the Dynamic Protocol

Figure 8a–c displays the confusion matrices for the dynamic datasets recorded during elbow, shoulder, and ES movements. In each matrix, rows represent the true gesture classes and columns the predicted classes. The diagonal entries give the average rate at which each class was correctly classified (true positive rate, TPR), and the off-diagonal entries give the average rate at which that class was misclassified as each other class; together, the off-diagonal entries of a row make up that class’s false negative rate (FNR). The results indicate that variations in limb position affect the average classification accuracy of gestures. Dynamic elbow movement yields an average accuracy of 80.6 ± 5.2% for classifying nine gestures, whereas shoulder and ES movements yield average accuracies of 84.3 ± 3.7% and 71.3 ± 6.4%, respectively.
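The relationship between the diagonal and off-diagonal entries can be made explicit with a short sketch. It assumes the matrices are row-normalized (each row sums to 1), so the diagonal entry of a row is that class's TPR and the off-diagonal entries of the row add up to its FNR. The function names and the toy labels are illustrative.

```python
from typing import Dict, List, Sequence


def row_normalized_confusion(y_true: Sequence, y_pred: Sequence, classes: Sequence) -> List[List[float]]:
    """Rows = true class, columns = predicted class; each row is divided by its class count."""
    pos = {c: i for i, c in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        counts[pos[t]][pos[p]] += 1
    return [[v / sum(row) if sum(row) else 0.0 for v in row] for row in counts]


def per_class_rates(cm: List[List[float]], classes: Sequence) -> Dict[str, Dict[str, float]]:
    """TPR is the diagonal entry; FNR is the sum of the off-diagonal entries in the same row."""
    return {
        str(c): {
            "TPR": cm[i][i],
            "FNR": sum(v for j, v in enumerate(cm[i]) if j != i),
        }
        for i, c in enumerate(classes)
    }


if __name__ == "__main__":
    classes = ["R", "P"]  # toy example: relax and power
    cm = row_normalized_confusion(["R", "R", "P", "P"], ["R", "P", "P", "P"], classes)
    print(cm)
    print(per_class_rates(cm, classes))
```

When every gesture has the same number of samples, as in this protocol, the mean of the diagonal equals the overall classification accuracy.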

Figure 8.

Confusion matrices of (a) elbow, (b) shoulder, and (c) ES movements; F: finger point, FE: finger extension, FP: forearm pronation, FS: forearm supination, P: power gesture, R: relax, TP: tripod, WE: wrist extension, and WF: wrist flexion.

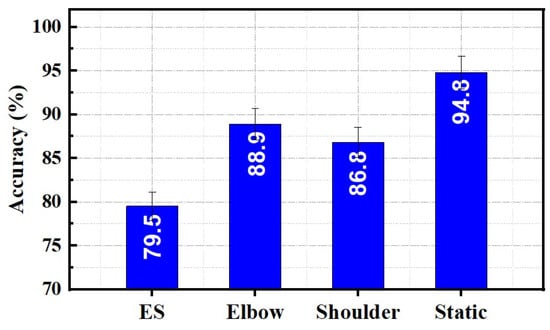

3.3. Effect of Limb Position Variation on Hand Gestures

For the five hand gestures, the static limb position achieves a maximum average classification accuracy of 94.8%, whereas variations in limb position increase the classification error. Figure 9 compares the classification accuracies obtained under the different limb movement conditions. The classification error was highest for ES movement (around 21%) and lowest for shoulder movement (around 12%). Elbow movement yields an average classification accuracy of 86.8% for hand gestures.

Figure 9.

Comparison of static and dynamic protocol on hand gestures classification performance.

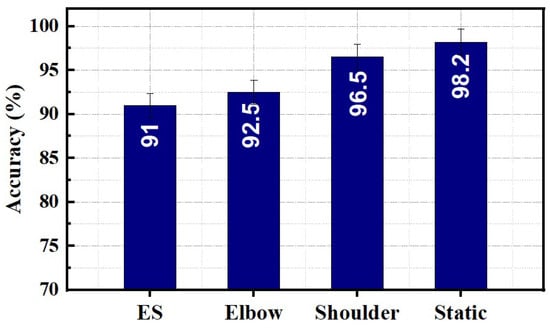

3.4. Effect of Limb Position Variations on Wrist and Forearm Gestures

Figure 10 compares the average classification accuracies of wrist and forearm gestures for static and dynamic limb movements. An average accuracy above 90% was observed for both the static and dynamic protocols. The static limb position yields the highest average accuracy, 98.2%, while elbow, shoulder, and ES movements yield average accuracies of 91.2%, 92.9%, and 96.9%, respectively.

Figure 10.

Comparison of static and dynamic protocol on wrist and forearm gestures classification performance.

4. Discussion

An effective gesture detection technique is essential for an efficient control system of bio-robotic assistive devices. This study presented an LD-FMG band with seven newly developed FSR sensors. Static and dynamic protocols were designed to investigate upper limb gestures.

The effect of sensor quantity and sampling rate on the performance of the newly developed FMG band was investigated. As presented in Section 3.1, the number of sensors used to perceive user intent significantly affects gesture recognition, and the performance of the armband improves as the number of sensors increases. Increasing the number of sensors from 3S to 5S raises the average accuracy by about 19 percentage points (from 62.1% to 81%, roughly a 30% relative increase), and moving from 5S to 7S adds about 10 percentage points (to 91.6%). This relationship between the number of sensors and gesture recognition is also evident in previous studies. Lei et al. [38] demonstrated that hand gesture recognition accuracy increases from 77.92% to 99.18% as the number of channels increases from 2 to 8. Ahmadizadeh et al. [26] reported accuracies ranging from 74% to 95% for predicting 3 to 10 classes of upper limb motions with the same set of sensors. Similarly, studies [11,27] showed that accuracy improves as the number of data collection channels increases.
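Because accuracy gains can be quoted either in percentage points or relative to the starting accuracy, a small sketch computing both from the mean static-protocol accuracies reported in Section 3.1.1 may help when comparing the 3S to 5S and 5S to 7S steps. Only the three accuracies come from the text; the function name is illustrative.

```python
from typing import Tuple

# Mean accuracies (%) reported for the static protocol in Section 3.1.1.
ACCURACY = {"3S": 62.1, "5S": 81.0, "7S": 91.6}


def improvement(old_pct: float, new_pct: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain as a percentage of old_pct)."""
    gain_pp = new_pct - old_pct
    return gain_pp, 100.0 * gain_pp / old_pct


if __name__ == "__main__":
    for a, b in (("3S", "5S"), ("5S", "7S")):
        pp, rel = improvement(ACCURACY[a], ACCURACY[b])
        print(f"{a} -> {b}: +{pp:.1f} percentage points ({rel:.1f}% relative)")
```

This gives +18.9 percentage points (30.4% relative) for 3S to 5S and +10.6 percentage points (13.1% relative) for 5S to 7S, which shows why the two steps can look more or less similar depending on how the gain is expressed.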

The sampling rate at which data are recorded also affects gesture prediction accuracy, although its influence is smaller than that of the number of sensors. Increasing the sampling rate from 5 Hz to 10 Hz improves prediction accuracy by around 5%, and increasing it beyond 10 Hz yields an additional improvement of around 4%. The improvement from 5 Hz to 10 Hz may be due to the wrist and forearm movements included in the selected gestures.

The results indicate that the static protocol performed significantly better than the dynamic protocol in predicting upper limb gesture classes. This outcome was expected because the FMG band monitors the forearm muscles, and changes in elbow position produce volumetric changes in these muscles. The human forearm consists of intrinsic, extrinsic, and brachioradialis muscles: the intrinsic muscles mainly cause forearm pronation and supination, the extrinsic muscles move the finger joints, and the brachioradialis is responsible for elbow flexion and extension [53].

The brachioradialis is located on the lateral side of the forearm and extends from the upper arm to the wrist. Volumetric changes in the forearm muscles caused by elbow flexion and extension therefore produce distinct FMG signals for the same gesture at different limb positions. This finding is consistent with previous studies [28,34], which likewise found, using sensor arrays, that upper limb position affects gesture classification accuracy. Furthermore, because FMG detects changes in forearm muscle volume, it has been found to be more effective than other methods, such as sEMG, at detecting variations in the elbow joint: Xiao et al. [27] reported that FMG predicted elbow movements with 18% higher accuracy than sEMG. This has been attributed to the minimal change in the electrical activity of the forearm muscles when the elbow is moved.

In comparison, shoulder movements have less impact on upper limb gesture prediction accuracy. Shoulder flexion and extension are driven by the deltoid muscle, which has minimal effect on the forearm muscles [54]. Accordingly, dynamic shoulder movement yielded approximately 5% higher classification accuracy than elbow movement in predicting nine gestures, and the difference relative to ES movement was even larger, up to 18%. Fine finger movements (hand gestures) and hand movements (wrist and forearm gestures) were also found to be less affected by shoulder movements, indicating that shoulder movements have less influence on gesture classification regardless of the type of gesture.

Another key finding of this study is that gesture prediction accuracy also depends on the ratio of the number of sensors to the number of gestures: the higher the ratio, the higher the prediction accuracy, and the lower the ratio, the lower the accuracy. For example, the four wrist and forearm gestures were predicted more accurately than the full set of nine gestures, with an improvement of approximately 6% in accuracy. This trend of increasing accuracy with an increasing ratio of sensors to gestures is consistent with previous literature [11,26,38,55].

A limitation of the current study is that the dynamic protocol was examined only in the humeral plane. Further study of limb movements in the sagittal and transverse planes is therefore needed; these planes are also important in performing activities of daily living and may affect gesture classification accuracy. Investigating them will help in developing a more realistic protocol for training algorithms for practical use. Integrating the developed FMG sensors into the upper limb prosthetic socket is also essential to make the band effective for the end user; new integration techniques have been developed that reduce the size of embedded sensors and make them more comfortable for direct contact with the skin [56,57]. Additionally, it would be beneficial to investigate other AI algorithms, including deep learning, and various signal feature extraction techniques, such as time-domain, frequency-domain, and time–frequency-domain features, for the armband.

5. Conclusions

There is a substantial body of research on techniques for FMG-based prosthetic control; however, most studies focus on ideal conditions with limited variation in limb position. This study developed a novel LD-FMG band for recognizing upper limb gestures; its main contributions are as follows:

- The study results indicate that the number of sensors used in the FMG band significantly impacts its performance, with the 7S FMG band showing higher classification accuracy than the 5S and 3S FMG bands.

- Compared to the number of sensors used, the sampling rate has less of an impact on the performance of the FMG band. The study also determined that a sampling rate of 10 Hz or above is sufficient for recognizing upper limb movements.

- The developed LD-FMG band discriminates between gestures better under the static protocol than under the dynamic protocols.

- Among variations in limb positions, the shoulder joint had the least effect on the prediction accuracy of the gestures. However, it was also observed that limb positions affected fine finger movements (hand gestures) and hand movements (wrist and forearm movements).

Author Contributions

Conceptualization, M.U.R. and K.S.; methodology, M.U.R.; software, M.U.R.; validation, M.U.R. and K.S.; formal analysis, K.S. and I.U.H.; investigation, S.I.; resources, K.S. and I.U.H.; data curation, M.U.R.; writing—original draft preparation, M.U.R. and K.S.; writing—review and editing, M.A.I., F.S. and I.U.H.; visualization, K.S., M.A.I. and F.S.; supervision, K.S.; project administration, K.S.; funding acquisition, K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [Grant No. 2980].

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the University of Engineering and Technology Peshawar, Pakistan (protocol code: 371/Mte/UET and date of approval: 4 March 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data available on request due to restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Biryukova, E.V.; Yourovskaya, V. A model of human hand dynamics. In Advances in the Biomechanics of the Hand and Wrist; Springer: Berlin/Heidelberg, Germany, 1994; pp. 107–122. [Google Scholar]

- Carey, S.L.; Lura, D.J.; Highsmith, M.J. Differences in myoelectric and body-powered upper-limb prostheses: Systematic literature review. J. Rehabil. Res. Dev. 2015, 52, 247–262. [Google Scholar] [CrossRef] [PubMed]

- Puchhammer, G.J.M. Michelangelo 03-A versatile hand prosthesis, featuring superb controllability and sophisticated bio mimicry. J. Rehabil. Res. Dev. 2008, 8, 162–163. [Google Scholar]

- Medynski, C.; Rattray, B. Bebionic prosthetic design. In Proceedings of the 2011 MyoElectric Controls/Powered Prosthetics Symposium, Fredericton, NB, Canada, 14–19 August 2011. [Google Scholar]

- Connolly, C. Prosthetic hands from Touch Bionics. Ind. Robot. 2008, 35, 290–293.

- Geethanjali, P.; Ray, K.; Shanmuganathan, P. Actuation of prosthetic drive using EMG signal. In Proceedings of the TENCON 2009–2009 IEEE Region 10 Conference, Singapore, 23–26 November 2009.

- Sikdar, S.; Rangwala, H.; Eastlake, E.B.; Hunt, I.A.; Nelson, A.J.; Devanathan, J.; Shin, A.; Pancrazio, J.J. Novel method for predicting dexterous individual finger movements by imaging muscle activity using a wearable ultrasonic system. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 22, 69–76.

- Castellini, C.; Artemiadis, P.; Wininger, M.; Ajoudani, A.; Alimusaj, M.; Bicchi, A.; Caputo, B.; Craelius, W.; Dosen, S.; Englehart, K.; et al. Proceedings of the first workshop on peripheral machine interfaces: Going beyond traditional surface electromyography. Front. Neurorobot. 2014, 8, 22.

- Phillips, S.L.; Craelius, W. Residual kinetic imaging: A versatile interface for prosthetic control. Robotica 2005, 23, 277–282.

- Yungher, D.A.; Wininger, M.T.; Barr, J.; Craelius, W.; Threlkeld, A.J. Surface muscle pressure as a measure of active and passive behavior of muscles during gait. Med. Eng. Phys. 2011, 33, 464–471.

- Jiang, X.; Merhi, L.-K.; Xiao, Z.G.; Menon, C. Exploration of Force Myography and surface Electromyography in hand gesture classification. Med. Eng. Phys. 2017, 41, 63–73.

- Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control. 2007, 2, 275–294.

- Criswell, E. Cram’s Introduction to Surface Electromyography; Jones & Bartlett Publishers: Burlington, MA, USA, 2010.

- Esposito, D.; Andreozzi, E.; Fratini, A.; Gargiulo, G.D.; Savino, S.; Niola, V.; Bifulco, P. A piezoresistive sensor to measure muscle contraction and mechanomyography. Sensors 2018, 18, 2553.

- Booth, R.; Goldsmith, P.B. A wrist-worn piezoelectric sensor array for gesture input. J. Med. Biol. Eng. 2018, 38, 284–295.

- Xiao, Z.G.; Menon, C. A review of force myography research and development. Sensors 2019, 19, 4557.

- Dementyev, A.; Paradiso, J. WristFlex: Low-power gesture input with wrist-worn pressure sensors. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5–8 October 2014.

- Ha, N.; Withanachchi, G.P.; Yihun, Y. Performance of forearm FMG for estimating hand gestures and prosthetic hand control. J. Bionic Eng. 2019, 16, 88–98.

- Connan, M.; Ramírez, E.R.; Vodermayer, B.; Castellini, C. Assessment of a wearable force- and electromyography device and comparison of the related signals for myocontrol. Front. Neurorobot. 2016, 10, 17.

- Xiao, Z.G.; Menon, C. Towards the development of a wearable feedback system for monitoring the activities of the upper-extremities. J. Neuroeng. Rehabil. 2014, 11, 2.

- Radmand, A.; Scheme, E.; Englehart, K. High-resolution muscle pressure mapping for upper-limb prosthetic control. In Proceedings of the MEC–Myoelectric Control Symposium, Fredericton, NB, Canada, 18–22 August 2014.

- Castellini, C.; Kõiva, R.; Pasluosta, C.; Viegas, C.; Eskofier, B.M. Tactile myography: An off-line assessment of able-bodied subjects and one upper-limb amputee. Technologies 2018, 6, 38.

- Ravindra, V.; Castellini, C. A comparative analysis of three non-invasive human-machine interfaces for the disabled. Front. Neurorobot. 2014, 8, 24.

- Li, N.; Yang, D.; Jiang, L.; Liu, H.; Cai, H. Combined use of FSR sensor array and SVM classifier for finger motion recognition based on pressure distribution map. J. Bionic Eng. 2012, 9, 39–47.

- Wininger, M. Pressure signature of forearm as predictor of grip force. J. Rehabil. Res. Dev. 2008, 45, 883–892.

- Ahmadizadeh, C.; Merhi, L.-K.; Pousett, B.; Sangha, S.; Menon, C. Toward intuitive prosthetic control: Solving common issues using force myography, surface electromyography, and pattern recognition in a pilot case study. IEEE Robot. Autom. Mag. 2017, 24, 102–111.

- Xiao, Z.G.; Menon, C. Performance of forearm FMG and sEMG for estimating elbow, forearm and wrist positions. J. Bionic Eng. 2017, 14, 284–295.

- Radmand, A.; Scheme, E.; Englehart, K. High-density force myography: A possible alternative for upper-limb prosthetic control. J. Rehabil. Res. Dev. 2016, 53, 443–456.

- Cho, E.; Chen, R.; Merhi, L.-K.; Xiao, Z.; Pousett, B.; Menon, C. Force myography to control robotic upper extremity prostheses: A feasibility study. Front. Bioeng. Biotechnol. 2016, 4, 18.

- Kadkhodayan, A.; Jiang, X.; Menon, C. Continuous prediction of finger movements using force myography. J. Med. Biol. Eng. 2016, 36, 594–604.

- Sadarangani, G.P.; Jiang, X.; Simpson, L.A.; Eng, J.J.; Menon, C. Force myography for monitoring grasping in individuals with stroke with mild to moderate upper-extremity impairments: A preliminary investigation in a controlled environment. Front. Bioeng. Biotechnol. 2017, 5, 42.

- Nowak, M.; Eiband, T.; Castellini, C. Multi-modal myocontrol: Testing combined force- and electromyography. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017.

- Delva, M.L.; Menon, C. FSR based force myography (FMG) stability throughout non-stationary upper extremity tasks. In Proceedings of the Future Technologies Conference, Vancouver, BC, Canada, 29–30 November 2017.

- Ferigo, D.; Merhi, L.-K.; Pousett, B.; Xiao, Z.G.; Menon, C. A case study of a force-myography controlled bionic hand mitigating limb position effect. J. Bionic Eng. 2017, 14, 692–705.

- Anvaripour, M.; Saif, M. Hand gesture recognition using force myography of the forearm activities and optimized features. In Proceedings of the 2018 IEEE International Conference on Industrial Technology (ICIT), Lyon, France, 19–22 February 2018.

- Xiao, Z.G.; Menon, C. An investigation on the sampling frequency of the upper-limb force myographic signals. Sensors 2019, 19, 2432.

- Godiyal, A.K.; Singh, U.; Anand, S.; Joshi, D. Analysis of force myography based locomotion patterns. Measurement 2019, 140, 497–503.

- Lei, G.; Zhang, S.; Fang, Y.; Wang, Y.; Zhang, X. Investigation on the Sampling Frequency and Channel Number for Force Myography Based Hand Gesture Recognition. Sensors 2021, 21, 3872.

- Zakia, U.; Menon, C. Dataset on Force Myography for Human–Robot Interactions. Data 2022, 7, 154.

- Prakash, A.; Sharma, N.; Sharma, S. An affordable transradial prosthesis based on force myography sensor. Sens. Actuators A Phys. 2021, 325, 112699.

- Interlink FSR 400 Series Data Sheet. Available online: https://www.interlinkelectronics.com/fsr-400-series (accessed on 10 January 2022).

- Lukowicz, P.; Hanser, F.; Szubski, C.; Schobersberger, W. Detecting and interpreting muscle activity with wearable force sensors. In Proceedings of the International Conference on Pervasive Computing, Dublin, Ireland, 7–10 May 2006.

- Junker, H.; Amft, O.; Lukowicz, P.; Tröster, G. Gesture spotting with body-worn inertial sensors to detect user activities. Pattern Recognit. 2008, 41, 2010–2024.

- Ramot, Y.; Haim-Zada, M.; Domb, A.J.; Nyska, A. Biocompatibility and safety of PLA and its copolymers. Adv. Drug Deliv. Rev. 2016, 107, 153–162.

- Qadir, M.U.; Haq, I.U.; Khan, M.A.; Ahmad, M.N.; Shah, K.; Akhtar, N. Design, Development and Evaluation of Novel Force Myography Based 2-Degree of Freedom Transradial Prosthesis. IEEE Access 2021, 9, 130020–130031.

- Peerdeman, B.; Boere, D.; Witteveen, H.; Huis in ’t Veld, R.; Hermens, H.; Stramigioli, S.; Rietman, H.; Veltink, P.; Misra, S. Myoelectric forearm prostheses: State of the art from a user-centered perspective. J. Rehabil. Res. Dev. 2011, 48, 719.

- Mizuno, H.; Tsujiuchi, N.; Koizumi, T. Forearm motion discrimination technique using real-time EMG signals. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011.

- Sarrafian, S.K.; Melamed, J.L.; Goshgarian, G.M. Study of wrist motion in flexion and extension. Clin. Orthop. Relat. Res. 1977, 126, 153–159.

- Xiong, Y.; Quek, F. Hand motion gesture frequency properties and multimodal discourse analysis. Int. J. Comput. Vis. 2006, 69, 353–371.

- Shoemaker, R. Data acquisition for the twenty-first century with a brief look at the Nyquist sampling theorem. In Proceedings of the American Power Conference, Chicago, IL, USA, 13–15 April 1992.

- Aizawa, J.; Masuda, T.; Koyama, T.; Nakamaru, K.; Isozaki, K.; Okawa, A.; Morita, S. Three-dimensional motion of the upper extremity joints during various activities of daily living. J. Biomech. 2010, 43, 2915–2922.

- Ha, N.; Withanachchi, G.P.; Yihun, Y. Force myography signal-based hand gesture classification for the implementation of real-time control system to a prosthetic hand. In Proceedings of the 2018 Design of Medical Devices Conference; ASME International, Minneapolis, MN, USA, 10–12 December 2018.

- Mitchell, B.; Whited, L. Anatomy, Shoulder and Upper Limb, Forearm Muscles; StatPearls: Treasure Island, FL, USA, 2019.

- Donatelli, R.A. Physical Therapy of the Shoulder-E-Book; Elsevier Health Sciences: Amsterdam, The Netherlands, 2011.

- Farrell, T.R.; Weir, R.F. The optimal controller delay for myoelectric prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2007, 15, 111–118.

- Paternò, L.; Dhokia, V.; Menciassi, A.; Bilzon, J.; Seminati, E. A personalised prosthetic liner with embedded sensor technology: A case study. Biomed. Eng. Online 2020, 19, 71.

- Dwivedi, A.; Gerez, L.; Hasan, W.; Yang, C.-H.; Liarokapis, M. A soft exoglove equipped with a wearable muscle-machine interface based on forcemyography and electromyography. IEEE Robot. Autom. Lett. 2019, 4, 3240–3246.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).