Computer Vision Applications in Intelligent Transportation Systems: A Survey

Abstract

1. Introduction

- CV applications in the field of ITS, along with the methods used, datasets, performance evaluation criteria, and success rates, are examined in a holistic and comprehensive way.

- The problems and application areas addressed by CV applications in ITS are investigated.

- The potential effects of CV studies on the transportation sector are evaluated.

- The applicability, contributions, shortcomings, challenges, future research areas, and trends of CV applications in ITS are summarized.

- Suggestions are made that will aid in improving the efficiency and effectiveness of transportation systems, increasing their safety levels, and making them smarter through CV studies in the future.

- This research surveys over 300 studies that shed light on the development of CV techniques in the field of ITS. These studies have been published in journals listed in top electronic libraries and presented at leading conferences. The survey further presents recent academic papers and review articles that can be consulted by researchers aiming to conduct a detailed analysis of the categories of CV applications.

- It is believed that this survey can provide useful insights for researchers working on the potential effects of CV techniques, the automation of transportation systems, and the improvement of the efficiency and safety of ITS.

2. Computer Vision Studies in the Field of ITS

2.1. Evolution of Computer Vision Studies

2.1.1. Handcrafted Techniques

2.1.2. Machine Learning and Deep Learning Methods

2.1.3. Deep Neural Networks (DNNs)

2.1.4. Convolutional Neural Networks (CNNs)

2.1.5. Recurrent Neural Networks (RNNs)

2.1.6. Generative Adversarial Networks (GANs)

2.1.7. Other Methods

2.2. Computer Vision Functions

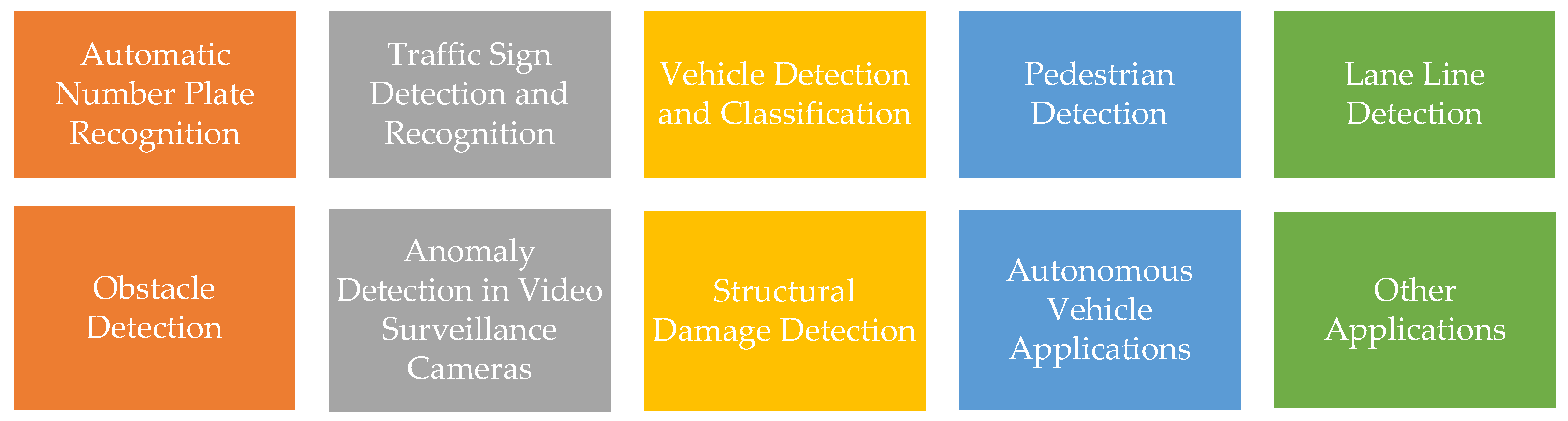

3. Computer Vision Applications in Intelligent Transportation Systems

3.1. Automatic Number Plate Recognition (ANPR)

3.2. Traffic Sign Detection and Recognition

3.3. Vehicle Detection and Classification

3.4. Pedestrian Detection

3.5. Lane Line Detection

3.6. Obstacle Detection

3.7. Anomaly Detection in Video Surveillance Cameras

3.8. Structural Damage Detection

3.9. Autonomous Vehicle Applications

3.10. Other Applications

4. Discussions and Perspectives

4.1. Applicability

4.2. Contributions of Computer Vision Studies

4.3. Open Challenges in Computer Vision Studies

4.4. Future Research Directions and Trends

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


- Molina-Moreno, M.; González-Díaz, I.; Díaz-de-María, F. Efficient Scale-Adaptive License Plate Detection System. IEEE Trans. Intell. Transp. Syst. 2018, 20, 2109–2121. [Google Scholar] [CrossRef]

- Sasi, A.; Sharma, S.; Cheeran, A.N. Automatic Car Number Plate Recognition. In Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; IEEE: New York, NY, USA, 2017; pp. 1–6. [Google Scholar]

- Ahmad, I.S.; Boufama, B.; Habashi, P.; Anderson, W.; Elamsy, T. Automatic License Plate Recognition: A Comparative Study. In Proceedings of the 2015 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT); IEEE: New York, NY, USA, 2015; pp. 635–640. [Google Scholar]

- Omran, S.S.; Jarallah, J.A. Iraqi License Plate Localization and Recognition System Using Neural Network. In Proceedings of the 2017 Second Al-Sadiq International Conference on Multidisciplinary in IT and Communication Science and Applications (AIC-MITCSA), Baghdad, Iraq, 30–31 December 2017; IEEE: New York, NY, USA, 2017; pp. 73–78. [Google Scholar]

- Weihong, W.; Jiaoyang, T. Research on License Plate Recognition Algorithms Based on Deep Learning in Complex Environment. IEEE Access 2020, 8, 91661–91675. [Google Scholar] [CrossRef]

- Silva, S.M.; Jung, C.R. Real-Time License Plate Detection and Recognition Using Deep Convolutional Neural Networks. J. Vis. Commun. Image Represent. 2020, 71, 102773. [Google Scholar] [CrossRef]

- Akhtar, Z.; Ali, R. Automatic Number Plate Recognition Using Random Forest Classifier. SN Comput. Sci. 2020, 1, 1–9. [Google Scholar] [CrossRef] [Green Version]

- Calitz, A.; Hill, M. Automated License Plate Recognition Using Existing University Infrastructure and Different Camera Angles. Afr. J. Inf. Syst. 2020, 12, 4. [Google Scholar]

- Desai, G.G.; Bartakke, P.P. Real-Time Implementation of Indian License Plate Recognition System. In Proceedings of the 2018 IEEE Punecon; IEEE: New York, NY, USA, 2018; pp. 1–5. [Google Scholar]

- Joshi, G.; Kaul, S.; Singh, A. Automated Vehicle Numberplate Detection and Recognition. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; IEEE: New York, NY, USA, 2021; pp. 465–469. [Google Scholar]

- Shashirangana, J.; Padmasiri, H.; Meedeniya, D.; Perera, C. Automated License Plate Recognition: A Survey on Methods and Techniques. IEEE Access 2020, 9, 11203–11225. [Google Scholar] [CrossRef]

- Singh, V.; Srivastava, A.; Kumar, S.; Ghosh, R. A Structural Feature Based Automatic Vehicle Classification System at Toll Plaza. In Proceedings of the 4th International Conference on Internet of Things and Connected Technologies (ICIoTCT), 2019: Internet of Things and Connected Technologies; Springer: Berlin/Heidelberg, Germany, 2020; pp. 1–10. [Google Scholar]

- Sferle, R.M.; Moisi, E.V. Automatic Number Plate Recognition for a Smart Service Auto. In Proceedings of the 2019 15th International Conference on Engineering of Modern Electric Systems (EMES), Oradea, Romania, 13–14 June 2019; IEEE: New York, NY, USA, 2019; pp. 57–60. [Google Scholar]

- Slimani, I.; Zaarane, A.; Hamdoun, A.; Atouf, I. Vehicle License Plate Localization and Recognition System for Intelligent Transportation Applications. In Proceedings of the 2019 6th International Conference on Control, Decision and Information Technologies (CoDIT), Paris, France, 23–26 April 2019; IEEE: New York, NY, USA, 2019; pp. 1592–1597. [Google Scholar]

- Ruta, A.; Li, Y.; Liu, X. Robust Class Similarity Measure for Traffic Sign Recognition. IEEE Trans. Intell. Transp. Syst. 2010, 11, 846–855. [Google Scholar] [CrossRef]

- Zaklouta, F.; Stanciulescu, B.; Hamdoun, O. Traffic Sign Classification Using Kd Trees and Random Forests. In Proceedings of the 2011 International Joint Conference on Neural Networks; IEEE: New York, NY, USA, 2011; pp. 2151–2155. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE computer society conference on computer vision and pattern recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 886–893. [Google Scholar]

- Li, Y.; Li, Z.; Li, L. Missing Traffic Data: Comparison of Imputation Methods. IET Intell. Transp. Syst. 2014, 8, 51–57. [Google Scholar] [CrossRef]

- Zaklouta, F.; Stanciulescu, B. Real-Time Traffic-Sign Recognition Using Tree Classifiers. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1507–1514. [Google Scholar] [CrossRef]

- Rajesh, R.; Rajeev, K.; Suchithra, K.; Lekhesh, V.P.; Gopakumar, V.; Ragesh, N.K. Coherence Vector of Oriented Gradients for Traffic Sign Recognition Using Neural Networks. In Proceedings of the 2011 International Joint Conference on Neural Networks; IEEE: New York, NY, USA, 2011; pp. 907–910. [Google Scholar]

- Boi, F.; Gagliardini, L. A Support Vector Machines Network for Traffic Sign Recognition. In Proceedings of the 2011 International Joint Conference on Neural Networks; IEEE: New York, NY, USA, 2011; pp. 2210–2216. [Google Scholar]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef] [Green Version]

- Liu, C.-L.; Yin, F.; Wang, D.-H.; Wang, Q.-F. Chinese Handwriting Recognition Contest 2010. In Proceedings of the 2010 Chinese Conference on Pattern Recognition (CCPR); IEEE: New York, NY, USA, 2010; pp. 1–5. [Google Scholar]

- Salakhutdinov, R.; Hinton, G. Learning and Evaluaing Deep Bolztmann Machines. 2008. [Google Scholar]

- Zhang, Y.; Wang, Z.; Song, R.; Yan, C.; Qi, Y. Detection-by-Tracking of Traffic Signs in Videos. Appl. Intell. 2021, 52, 8226–8242. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-Cnn: Towards Real-Time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28, 2969239–2969250. [Google Scholar] [CrossRef] [Green Version]

- Sindhu, O.; Victer Paul, P. Computer Vision Model for Traffic Sign Recognition and Detection—A Survey. In Proceedings of the ICCCE 2018: Proceedings of the International Conference on Communications and Cyber Physical Engineering 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 679–689. [Google Scholar]

- Marques, R.; Ribeiro, T.; Lopes, G.; Ribeiro, A.F. YOLOv3: Traffic Signs & Lights Detection and Recognition for Autonomous Driving. In Proceedings of the ICAART (3), Online, 3–5 February 2022; pp. 818–826. [Google Scholar]

- Arif, M.U.; Farooq, M.U.; Raza, R.H.; Lodhi, Z.; Hashmi, M.A.R. A Comprehensive Review of Vehicle Detection Techniques Under Varying Moving Cast Shadow Conditions Using Computer Vision and Deep Learning. IEEE Access 2022, 10, 1. [Google Scholar]

- Zhu, J.; Li, X.; Jin, P.; Xu, Q.; Sun, Z.; Song, X. Mme-Yolo: Multi-Sensor Multi-Level Enhanced Yolo for Robust Vehicle Detection in Traffic Surveillance. Sensors 2020, 21, 27. [Google Scholar] [CrossRef]

- Huang, S.; He, Y.; Chen, X. M-YOLO: A Nighttime Vehicle Detection Method Combining Mobilenet v2 and YOLO V3. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; Volume 1883, p. 012094. [Google Scholar]

- Li, J.; Xu, Z.; Fu, L.; Zhou, X.; Yu, H. Domain Adaptation from Daytime to Nighttime: A Situation-Sensitive Vehicle Detection and Traffic Flow Parameter Estimation Framework. Transp. Res. Part C Emerg. Technol. 2021, 124, 102946. [Google Scholar] [CrossRef]

- Neto, J.; Santos, D.; Rossetti, R.J.F. Computer-Vision-Based Surveillance of Intelligent Transportation Systems. In Proceedings of the 2018 13th Iberian Conference on Information Systems and Technologies (CISTI), Caceres, Spain, 13–16 June 2018; IEEE: New York, NY, USA, 2018; pp. 1–5. [Google Scholar]

- Yang, Z.; Pun-Cheng, L.S.C. Vehicle Detection in Intelligent Transportation Systems and Its Applications under Varying Environments: A Review. Image Vis. Comput. 2018, 69, 143–154. [Google Scholar] [CrossRef]

- Gholamhosseinian, A.; Seitz, J. Vehicle Classification in Intelligent Transport Systems: An Overview, Methods and Software Perspective. IEEE Open J. Intell. Transp. Syst. 2021, 2, 173–194. [Google Scholar] [CrossRef]

- Niroomand, N.; Bach, C.; Elser, M. Robust Vehicle Classification Based on Deep Features Learning. IEEE Access 2021, 9, 95675–95685. [Google Scholar] [CrossRef]

- Wong, Z.J.; Goh, V.T.; Yap, T.T.V.; Ng, H. Vehicle Classification Using Convolutional Neural Network for Electronic Toll Collection. In Proceedings of the Computational Science and Technology: 6th ICCST 2019, Kota Kinabalu, Malaysia, 29–30 August 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 169–177. [Google Scholar]

- Jiao, J.; Wang, H. Traffic Behavior Recognition from Traffic Videos under Occlusion Condition: A Kalman Filter Approach. Transp. Res. Rec. 2022, 2676, 55–65. [Google Scholar] [CrossRef]

- Bernal, E.A.; Li, Q.; Loce, R.P. U.S. Patent No. 10,262,328. Washington, DC: U.S. Patent and Trademark Office. 2019. Available online: https://patentimages.storage.googleapis.com/83/14/27/1d8b55b4bfe61a/US10262328.pdf (accessed on 12 January 2023).

- Tian, D.; Han, Y.; Wang, B.; Guan, T.; Wei, W. A Review of Intelligent Driving Pedestrian Detection Based on Deep Learning. Comput. Intell. Neurosci. 2021, 2021, 1–16. [Google Scholar] [CrossRef]

- Ali, A.T.; Dagless, E.L. Vehicle and Pedestrian Detection and Tracking. In Proceedings of the IEE Colloquium on Image Analysis for Transport Applications; IET: Stevenage, UK, 1990; pp. 1–5. [Google Scholar]

- Zhao, L.; Thorpe, C.E. Stereo-and Neural Network-Based Pedestrian Detection. IEEE Trans. Intell. Transp. Syst. 2000, 1, 148–154. [Google Scholar] [CrossRef] [Green Version]

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: An Evaluation of the State of the Art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761. [Google Scholar] [CrossRef]

- Leibe, B.; Seemann, E.; Schiele, B. Pedestrian Detection in Crowded Scenes. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 878–885. [Google Scholar]

- Tuzel, O.; Porikli, F.; Meer, P. Pedestrian Detection via Classification on Riemannian Manifolds. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1713–1727. [Google Scholar] [CrossRef]

- Enzweiler, M.; Gavrila, D.M. Monocular Pedestrian Detection: Survey and Experiments. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 2179–2195. [Google Scholar] [CrossRef] [Green Version]

- Viola, P.; Jones, M.J. Robust Real-Time Face Detection. Int. J. Comput. Vis. 2004, 57, 137–154. [Google Scholar] [CrossRef]

- Pham, Q. Autonomous Vehicles and Their Impact on Road Transportations. Bachelor’s Thesis, JAMK University of Applied Sciences, Jyväskylä, Finland, May 2018. [Google Scholar]

- Sabzmeydani, P.; Mori, G. Detecting Pedestrians by Learning Shapelet Features. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2007; pp. 1–8. [Google Scholar]

- Viola, P.; Jones, M.J.; Snow, D. Detecting Pedestrians Using Patterns of Motion and Appearance. Int. J. Comput. Vis. 2005, 63, 153–161. [Google Scholar] [CrossRef]

- Gall, J.; Yao, A.; Razavi, N.; van Gool, L.; Lempitsky, V. Hough Forests for Object Detection, Tracking, and Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2188–2202. [Google Scholar] [CrossRef]

- Wu, B.; Nevatia, R. Detection and Tracking of Multiple, Partially Occluded Humans by Bayesian Combination of Edgelet Based Part Detectors. Int. J. Comput. Vis. 2007, 75, 247. [Google Scholar] [CrossRef]

- Tian, Y.; Luo, P.; Wang, X.; Tang, X. Deep Learning Strong Parts for Pedestrian Detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1904–1912. [Google Scholar]

- Zhang, S.; Yang, J.; Schiele, B. Occluded Pedestrian Detection through Guided Attention in Cnns. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6995–7003. [Google Scholar]

- Garcia-Bunster, G.; Torres-Torriti, M.; Oberli, C. Crowded Pedestrian Counting at Bus Stops from Perspective Transformations of Foreground Areas. IET Comput. Vis. 2012, 6, 296–305. [Google Scholar] [CrossRef]

- Chen, D.-Y.; Huang, P.-C. Visual-Based Human Crowds Behavior Analysis Based on Graph Modeling and Matching. IEEE Sens. J. 2013, 13, 2129–2138. [Google Scholar] [CrossRef]

- Stauffer, C.; Grimson, W.E.L. Adaptive Background Mixture Models for Real-Time Tracking. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and PATTERN recognition (Cat. No PR00149); IEEE: New York, NY, USA, 1999; Volume 2, pp. 246–252. [Google Scholar]

- Li, T.; Chang, H.; Wang, M.; Ni, B.; Hong, R.; Yan, S. Crowded Scene Analysis: A Survey. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 367–386. [Google Scholar] [CrossRef] [Green Version]

- Ge, W.; Collins, R.T.; Ruback, R.B. Vision-Based Analysis of Small Groups in Pedestrian Crowds. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1003–1016. [Google Scholar]

- Luo, P.; Tian, Y.; Wang, X.; Tang, X. Switchable Deep Network for Pedestrian Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 899–906. [Google Scholar]

- Li, J.; Liang, X.; Shen, S.; Xu, T.; Feng, J.; Yan, S. Scale-Aware Fast R-CNN for Pedestrian Detection. IEEE Trans. Multimed. 2017, 20, 985–996. [Google Scholar] [CrossRef] [Green Version]

- Sindagi, V.A.; Patel, V.M. A Survey of Recent Advances in CNN-Based Single Image Crowd Counting and Density Estimation. Pattern Recognit. Lett. 2018, 107, 3–16. [Google Scholar] [CrossRef] [Green Version]

- Tripathi, G.; Singh, K.; Vishwakarma, D.K. Convolutional Neural Networks for Crowd Behaviour Analysis: A Survey. Vis. Comput. 2019, 35, 753–776. [Google Scholar] [CrossRef]

- Afsar, P.; Cortez, P.; Santos, H. Automatic Visual Detection of Human Behavior: A Review from 2000 to 2014. Expert. Syst. Appl. 2015, 42, 6935–6956. [Google Scholar] [CrossRef] [Green Version]

- Yun, S.; Yun, K.; Choi, J.; Choi, J.Y. Density-Aware Pedestrian Proposal Networks for Robust People Detection in Crowded Scenes. In Proceedings of the Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8-10 and 15-16, 2016, Proceedings, Part II 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 643–654. [Google Scholar]

- Brunetti, A.; Buongiorno, D.; Trotta, G.F.; Bevilacqua, V. Computer Vision and Deep Learning Techniques for Pedestrian Detection and Tracking: A Survey. Neurocomputing 2018, 300, 17–33. [Google Scholar] [CrossRef]

- Du, X.; El-Khamy, M.; Lee, J.; Davis, L. Fused DNN: A Deep Neural Network Fusion Approach to Fast and Robust Pedestrian Detection. In Proceedings of the 2017 IEEE winter conference on applications of computer vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; IEEE: New York, NY, USA, 2017; pp. 953–961. [Google Scholar]

- Chen, W.; Wang, W.; Wang, K.; Li, Z.; Li, H.; Liu, S. Lane Departure Warning Systems and Lane Line Detection Methods Based on Image Processing and Semantic Segmentation: A Review. J. Traffic Transp. Eng. 2020, 7, 748–774. [Google Scholar] [CrossRef]

- Gopalan, R.; Hong, T.; Shneier, M.; Chellappa, R. A Learning Approach towards Detection and Tracking of Lane Markings. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1088–1098. [Google Scholar] [CrossRef]

- Lee, S.; Kim, J.; Shin Yoon, J.; Shin, S.; Bailo, O.; Kim, N.; Lee, T.-H.; Seok Hong, H.; Han, S.-H.; So Kweon, I. Vpgnet: Vanishing Point Guided Network for Lane and Road Marking Detection and Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1947–1955. [Google Scholar]

- Tang, J.; Li, S.; Liu, P. A Review of Lane Detection Methods Based on Deep Learning. Pattern Recognit 2021, 111, 107623. [Google Scholar] [CrossRef]

- Waykole, S.; Shiwakoti, N.; Stasinopoulos, P. Review on Lane Detection and Tracking Algorithms of Advanced Driver Assistance System. Sustainability 2021, 13, 11417. [Google Scholar] [CrossRef]

- Mamun, A.; Ping, E.P.; Hossen, J.; Tahabilder, A.; Jahan, B. A Comprehensive Review on Lane Marking Detection Using Deep Neural Networks. Sensors 2022, 22, 7682. [Google Scholar] [CrossRef]

- Wang, Z.; Ren, W.; Qiu, Q. Lanenet: Real-Time Lane Detection Networks for Autonomous Driving. arXiv 2018, arXiv:1807.01726. [Google Scholar]

- Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning Lightweight Lane Detection Cnns by Self Attention Distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1013–1021. [Google Scholar]

- van Gansbeke, W.; de Brabandere, B.; Neven, D.; Proesmans, M.; van Gool, L. End-to-End Lane Detection through Differentiable Least-Squares Fitting. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019. [Google Scholar]

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698. [Google Scholar] [CrossRef]

- Wedel, A.; Schoenemann, T.; Brox, T.; Cremers, D. Warpcut–Fast Obstacle Segmentation in Monocular Video. In Proceedings of the Pattern Recognition: 29th DAGM Symposium, Heidelberg, Germany, September 12-14, 2007. Proceedings 29; Springer: Berlin/Heidelberg, Germany, 2007; pp. 264–273. [Google Scholar]

- Gonzales, R.C.; Woods, R.E. Digital Image Processing, 2nd ed.; Prentice Hall: Hoboken, NJ, USA, 2002. [Google Scholar]

- Zebbara, K.; el Ansari, M.; Mazoul, A.; Oudani, H. A Fast Road Obstacle Detection Using Association and Symmetry Recognition. In Proceedings of the 2019 International Conference on Wireless Technologies, Embedded and Intelligent Systems (WITS); IEEE: New York, NY, USA, 2019; pp. 1–5. [Google Scholar]

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679. [Google Scholar]

- Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Proceedings of the Image Analysis: 13th Scandinavian Conference, SCIA 2003 Halmstad, Sweden, June 29–July 2, 2003 Proceedings 13; Springer: Berlin/Heidelberg, Germany, 2003; pp. 363–370. [Google Scholar]

- Shen, Y.; Du, X.; Liu, J. Monocular Vision Based Obstacle Detection for Robot Navigation in Unstructured Environment. In Proceedings of the Advances in Neural Networks–ISNN 2007: 4th International Symposium on Neural Networks, ISNN 2007, Nanjing, China, June 3-7, 2007, Proceedings, Part I 4; Springer: Berlin/Heidelberg, Germany, 2007; pp. 714–722. [Google Scholar]

- Bouchafa, S.; Zavidovique, B. Obstacle Detection” for Free” in the c-Velocity Space. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC); IEEE: New York, NY, USA, 2011; pp. 308–313. [Google Scholar]

- Pȩszor, D.; Paszkuta, M.; Wojciechowska, M.; Wojciechowski, K. Optical Flow for Collision Avoidance in Autonomous Cars. In Proceedings of the Intelligent Information and Database Systems: 10th Asian Conference, ACIIDS 2018, Dong Hoi City, Vietnam, March 19-21, 2018, Proceedings, Part II 10; Springer: Berlin/Heidelberg, Germany, 2018; pp. 482–491. [Google Scholar]

- Herghelegiu, P.; Burlacu, A.; Caraiman, S. Negative Obstacle Detection for Wearable Assistive Devices for Visually Impaired. In Proceedings of the 2017 21st International Conference on System Theory, Control and Computing (ICSTCC); IEEE: New York, NY, USA, 2017; pp. 564–570. [Google Scholar]

- Kim, D.; Choi, J.; Yoo, H.; Yang, U.; Sohn, K. Rear Obstacle Detection System with Fisheye Stereo Camera Using HCT. Expert. Syst. Appl. 2015, 42, 6295–6305. [Google Scholar] [CrossRef]

- Gao, Y.; Ai, X.; Wang, Y.; Rarity, J.; Dahnoun, N. UV-Disparity Based Obstacle Detection with 3D Camera and Steerable Filter. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV); IEEE: New York, NY, USA, 2011; pp. 957–962. [Google Scholar]

- Benenson, R.; Mathias, M.; Timofte, R.; van Gool, L. Fast Stixel Computation for Fast Pedestrian Detection. In Proceedings of the ECCV Workshops (3), Florence, Italy, 7–13 October 2012; Volume 7585, pp. 11–20. [Google Scholar]

- Kang, M.-S.; Lim, Y.-C. Fast Stereo-Based Pedestrian Detection Using Hypotheses. In Proceedings of the 2015 Conference on Research in Adaptive and Convergent Systems, Prague, Czech Republic, 9–12 October 2015; pp. 131–135. [Google Scholar]

- Mhiri, R.; Maiza, H.; Mousset, S.; Taouil, K.; Vasseur, P.; Bensrhair, A. Obstacle Detection Using Unsynchronized Multi-Camera Network. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI); IEEE: New York, NY, USA, 2015; pp. 7–12. [Google Scholar]

- Benacer, I.; Hamissi, A.; Khouas, A. A Novel Stereovision Algorithm for Obstacles Detection Based on UV-Disparity Approach. In Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS); IEEE: New York, NY, USA, 2015; pp. 369–372. [Google Scholar]

- Burlacu, A.; Bostaca, S.; Hector, I.; Herghelegiu, P.; Ivanica, G.; Moldoveanul, A.; Caraiman, S. Obstacle Detection in Stereo Sequences Using Multiple Representations of the Disparity Map. In Proceedings of the 2016 20th International Conference on System Theory, Control and Computing (ICSTCC); IEEE: NEW York, NY, USA, 2016; pp. 854–859. [Google Scholar]

- Sun, Y.; Zhang, L.; Leng, J.; Luo, T.; Wu, Y. An Obstacle Detection Method Based on Binocular Stereovision. In Proceedings of the Advances in Multimedia Information Processing–PCM 2017: 18th Pacific-Rim Conference on Multimedia, Harbin, China, September 28-29, 2017, Revised Selected Papers, Part II 18; Springer: Berlin/Heidelberg, Germany, 2018; pp. 571–580. [Google Scholar]

- Kubota, S.; Nakano, T.; Okamoto, Y. A Global Optimization Algorithm for Real-Time on-Board Stereo Obstacle Detection Systems. In Proceedings of the 2007 IEEE Intelligent Vehicles Symposium; IEEE: New York, NY, USA, 2007; pp. 7–12. [Google Scholar]

- Liu, L.; Cui, J.; Li, J. Obstacle Detection and Classification in Dynamical Background. AASRI Procedia 2012, 1, 435–440. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Mukhopadhyay, A.; Mukherjee, I.; Biswas, P. Comparing CNNs for Non-Conventional Traffic Participants. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands, 21–25 September 2019; pp. 171–175. [Google Scholar]

- Masmoudi, M.; Ghazzai, H.; Frikha, M.; Massoud, Y. Object Detection Learning Techniques for Autonomous Vehicle Applications. In Proceedings of the 2019 IEEE International Conference on Vehicular Electronics and Safety (ICVES); IEEE: New York, NY, USA, 2019; pp. 1–5. [Google Scholar]

- Huang, P.-Y.; Lin, H.-Y. Rear Obstacle Warning for Reverse Driving Using Stereo Vision Techniques. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC); IEEE: New York, NY, USA, 2019; pp. 921–926. [Google Scholar]

- Dairi, A.; Harrou, F.; Senouci, M.; Sun, Y. Unsupervised Obstacle Detection in Driving Environments Using Deep-Learning-Based Stereovision. Rob. Auton. Syst. 2018, 100, 287–301. [Google Scholar] [CrossRef] [Green Version]

- Lian, J.; Kong, L.; Li, L.; Zheng, W.; Zhou, Y.; Fang, S.; Qian, B. Study on Obstacle Detection and Recognition Method Based on Stereo Vision and Convolutional Neural Network. In Proceedings of the 2019 Chinese Control Conference (CCC); IEEE: New York, NY, USA, 2019; pp. 8766–8771. [Google Scholar]

- Hsu, Y.-W.; Zhong, K.-Q.; Perng, J.-W.; Yin, T.-K.; Chen, C.-Y. Developing an On-Road Obstacle Detection System Using Monovision. In Proceedings of the 2018 International Conference on Image and Vision Computing New Zealand (IVCNZ); IEEE: New York, NY, USA, 2018; pp. 1–9. [Google Scholar]

- Hota, R.N.; Jonna, K.; Krishna, P.R. On-Road Vehicle Detection by Cascaded Classifiers. In Proceedings of the Third Annual ACM Bangalore Conference, Bangalore, India, 22–23 January 2010; pp. 1–5. [Google Scholar]

- Woo, J.-W.; Lim, Y.-C.; Lee, M. Dynamic Obstacle Identification Based on Global and Local Features for a Driver Assistance System. Neural Comput. Appl. 2011, 20, 925–933. [Google Scholar] [CrossRef]

- Chanawangsa, P.; Chen, C.W. A Novel Video Analysis Approach for Overtaking Vehicle Detection. In Proceedings of the 2013 International Conference on Connected Vehicles and Expo (ICCVE); IEEE: New York, NY, USA, 2013; pp. 802–807. [Google Scholar]

- Badrloo, S.; Varshosaz, M.; Pirasteh, S.; Li, J. Image-Based Obstacle Detection Methods for the Safe Navigation of Unmanned Vehicles: A Review. Remote Sens (Basel) 2022, 14, 3824. [Google Scholar] [CrossRef]

- Gavrila, D.M.; Munder, S. Multi-Cue Pedestrian Detection and Tracking from a Moving Vehicle. Int. J. Comput. Vis. 2007, 73, 41–59. [Google Scholar] [CrossRef] [Green Version]

- Franke, U.; Gehrig, S.; Badino, H.; Rabe, C. Towards Optimal Stereo Analysis of Image Sequences. Lect. Notes Comput. Sci. 2008, 4931, 43–58. [Google Scholar]

- Ma, G.; Park, S.-B.; Muller-Schneiders, S.; Ioffe, A.; Kummert, A. Vision-Based Pedestrian Detection-Reliable Pedestrian Candidate Detection by Combining Ipm and a 1d Profile. In Proceedings of the 2007 IEEE Intelligent Transportation Systems Conference; IEEE: New York, NY, USA, 2007; pp. 137–142. [Google Scholar]

- Cabani, I.; Toulminet, G.; Bensrhair, A. Contrast-Invariant Obstacle Detection System Using Color Stereo Vision. In Proceedings of the 2008 11th International IEEE Conference on Intelligent Transportation Systems; IEEE: New York, NY, USA, 2008; pp. 1032–1037. [Google Scholar]

- Suganuma, N.; Shimoyama, M.; Fujiwara, N. Obstacle Detection Using Virtual Disparity Image for Non-Flat Road. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium; IEEE: New York, NY, USA, 2008; pp. 596–601. [Google Scholar]

- Keller, C.G.; Llorca, D.F.; Gavrila, D.M. Dense Stereo-Based Roi Generation for Pedestrian Detection. In Proceedings of the Pattern Recognition: 31st DAGM Symposium, Jena, Germany, September 9-11, 2009. Proceedings 31; Springer: Berlin/Heidelberg, Germany, 2009; pp. 81–90. [Google Scholar]

- Chiu, C.-C.; Chen, W.-C.; Ku, M.-Y.; Liu, Y.-J. Asynchronous Stereo Vision System for Front-Vehicle Detection. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing; IEEE: New York, NY, USA, 2009; pp. 965–968. [Google Scholar]

- Ess, A.; Leibe, B.; Schindler, K.; van Gool, L. Moving Obstacle Detection in Highly Dynamic Scenes. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation; IEEE: New York, NY, USA, 2009; pp. 56–63. [Google Scholar]

- Ma, G.; Müller, D.; Park, S.-B.; Müller-Schneiders, S.; Kummert, A. Pedestrian Detection Using a Single-Monochrome Camera. IET Intell. Transp. Syst. 2009, 3, 42–56. [Google Scholar] [CrossRef]

- Xu, Z.; Zhang, J. Parallel Computation for Stereovision Obstacle Detection of Autonomous Vehicles Using GPU. In Proceedings of the Life System Modeling and Intelligent Computing: International Conference on Life System Modeling and Simulation, LSMS 2010, and International Conference on Intelligent Computing for Sustainable Energy and Environment, ICSEE 2010, Wuxi, China, 17 September 2010; Springer: Berlin/Heidelberg, Germany; pp. 176–184. [Google Scholar]

- Baig, M.W.; Pirzada, S.J.H.; Haq, E.; Shin, H. New Single Camera Vehicle Detection Based on Gabor Features for Real Time Operation. In Proceedings of the Convergence and Hybrid Information Technology: 5th International Conference, ICHIT 2011, Daejeon, Korea, September 22-24, 2011. Proceedings 5; Springer: Berlin/Heidelberg, Germany, 2011; pp. 567–574. [Google Scholar]

- Nieto, M.; Arróspide Laborda, J.; Salgado, L. Road Environment Modeling Using Robust Perspective Analysis and Recursive Bayesian Segmentation. Mach. Vis. Appl. 2011, 22, 927–945. [Google Scholar] [CrossRef] [Green Version]

- Na, I.; Han, S.H.; Jeong, H. Stereo-Based Road Obstacle Detection and Tracking. In Proceedings of the 13th International Conference on Advanced Communication Technology (ICACT2011), Gangwon-Do, Republic of Korea, 13–16 February 2011; IEEE: New York, NY, USA, 2011; pp. 1181–1184. [Google Scholar]

- Iwata, H.; Saneyoshi, K. Forward Obstacle Detection System by Stereo Vision. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO); IEEE: New York, NY, USA, 2012; pp. 1842–1847. [Google Scholar]

- Boroujeni, N.S.; Etemad, S.A.; Whitehead, A. Fast Obstacle Detection Using Targeted Optical Flow. In Proceedings of the 2012 19th IEEE International Conference on Image Processing; IEEE: New York, NY, USA, 2012; pp. 65–68. [Google Scholar]

- Lefebvre, S.; Ambellouis, S. Vehicle Detection and Tracking Using Mean Shift Segmentation on Semi-Dense Disparity Maps. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium; IEEE: New York, NY, USA, 2012; pp. 855–860. [Google Scholar]

- Trif, A.; Oniga, F.; Nedevschi, S. Stereovision on Mobile Devices for Obstacle Detection in Low Speed Traffic Scenarios. In Proceedings of the 2013 IEEE 9th International Conference on Intelligent Computer Communication and Processing (ICCP); IEEE: New York, NY, USA, 2013; pp. 169–174. [Google Scholar]

- Khalid, Z.; Abdenbi, M. Stereo Vision-Based Road Obstacles Detection. In Proceedings of the 2013 8th International Conference on Intelligent Systems: Theories and Applications (SITA); IEEE: New York, NY, USA, 2013; pp. 1–6. [Google Scholar]

- Petrovai, A.; Costea, A.; Oniga, F.; Nedevschi, S. Obstacle Detection Using Stereovision for Android-Based Mobile Devices. In Proceedings of the 2014 IEEE 10th International Conference on Intelligent Computer Communication and Processing (ICCP); IEEE: New York, NY, USA, 2014; pp. 141–147. [Google Scholar]

- Iloie, A.; Giosan, I.; Nedevschi, S. UV Disparity Based Obstacle Detection and Pedestrian Classification in Urban Traffic Scenarios. In Proceedings of the 2014 IEEE 10th International Conference on Intelligent Computer Communication and Processing (ICCP); IEEE: New York, NY, USA, 2014; pp. 119–125. [Google Scholar]

- Poddar, A.; Ahmed, S.T.; Puhan, N.B. Adaptive Saliency-Weighted Obstacle Detection for the Visually Challenged. In Proceedings of the 2015 2nd International Conference on Signal Processing and Integrated Networks (SPIN); IEEE: New York, NY, USA, 2015; pp. 477–482. [Google Scholar]

- Jia, B.; Liu, R.; Zhu, M. Real-Time Obstacle Detection with Motion Features Using Monocular Vision. Vis. Comput. 2015, 31, 281–293. [Google Scholar] [CrossRef]

- Wu, M.; Zhou, C.; Srikanthan, T. Robust and Low Complexity Obstacle Detection and Tracking. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC); IEEE: New York, NY, USA, 2016; pp. 1249–1254. [Google Scholar]

- Carrillo, D.A.P.; Sutherland, A. Fast Obstacle Detection Using Sparse Edge-Based Disparity Maps. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV); IEEE: New York, NY, USA, 2016; pp. 66–72. [Google Scholar]

- Häne, C.; Heng, L.; Lee, G.H.; Fraundorfer, F.; Furgale, P.; Sattler, T.; Pollefeys, M. 3D Visual Perception for Self-Driving Cars Using a Multi-Camera System: Calibration, Mapping, Localization, and Obstacle Detection. Image Vis. Comput. 2017, 68, 14–27. [Google Scholar] [CrossRef] [Green Version]

- Prabhakar, G.; Kailath, B.; Natarajan, S.; Kumar, R. Obstacle Detection and Classification Using Deep Learning for Tracking in High-Speed Autonomous Driving. In Proceedings of the 2017 IEEE Region 10 Symposium (TENSYMP); IEEE: New York, NY, USA, 2017; pp. 1–6. [Google Scholar]

- Li, P.; Mi, Y.; He, C.; Li, Y. Detection and Discrimination of Obstacles to Vehicle Environment under Convolutional Neural Networks. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC); IEEE: New York, NY, USA, 2018; pp. 337–341. [Google Scholar]

- Fan, Y.; Zhou, L.; Fan, L.; Yang, J. Multiple Obstacle Detection for Assistance Driver System Using Deep Neural Networks. In Proceedings of the Artificial Intelligence and Security: 5th International Conference, ICAIS 2019, New York, NY, USA, 26–28 July 2019; Proceedings, Part III 5; Springer: Berlin/Heidelberg, Germany, 2019; pp. 501–513. [Google Scholar]

- Hsieh, Y.-Y.; Lin, W.-Y.; Li, D.-L.; Chuang, J.-H. Deep Learning-Based Obstacle Detection and Depth Estimation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP); IEEE: New York, NY, USA, 2019; pp. 1635–1639. [Google Scholar]

- Ohgushi, T.; Horiguchi, K.; Yamanaka, M. Road Obstacle Detection Method Based on an Autoencoder with Semantic Segmentation. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020. [Google Scholar]

- He, D.; Zou, Z.; Chen, Y.; Liu, B.; Miao, J. Rail Transit Obstacle Detection Based on Improved CNN. IEEE Trans. Instrum. Meas. 2021, 70, 1–14. [Google Scholar] [CrossRef]

- Luo, G.; Chen, X.; Lin, W.; Dai, J.; Liang, P.; Zhang, C. An Obstacle Detection Algorithm Suitable for Complex Traffic Environment. World Electr. Veh. J. 2022, 13, 69. [Google Scholar] [CrossRef]

- Du, L.; Chen, X.; Pei, Z.; Zhang, D.; Liu, B.; Chen, W. Improved Real-Time Traffic Obstacle Detection and Classification Method Applied in Intelligent and Connected Vehicles in Mixed Traffic Environment. J. Adv. Transp. 2022, 2022, 1–12. [Google Scholar] [CrossRef]

- Zaheer, M.Z.; Lee, J.H.; Lee, S.-I.; Seo, B.-S. A Brief Survey on Contemporary Methods for Anomaly Detection in Videos. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC); IEEE: New York, NY, USA, 2019; pp. 472–473. [Google Scholar]

- UCSD Anomaly Detection Dataset. Available online: http://www.svcl.ucsd.edu/projects/anomaly/dataset.html (accessed on 12 January 2023).

- Monitoring Human Activity. Available online: http://mha.cs.umn.edu/Movies/Crowd-Activity-All.avi (accessed on 20 January 2023).

- Charlotte Vision Laboratory. Available online: https://webpages.charlotte.edu/cchen62/dataset.html (accessed on 20 January 2023).

- Chong, Y.S.; Tay, Y.H. Abnormal Event Detection in Videos Using Spatiotemporal Autoencoder. In Proceedings of the Advances in Neural Networks-ISNN 2017: 14th International Symposium, ISNN 2017, Sapporo, Hakodate, and Muroran, Hokkaido, Japan, 21–26 June 2017; Proceedings, Part II 14; Springer: Berlin/Heidelberg, Germany, 2017; pp. 189–196. [Google Scholar]

- Liu, W.; Luo, W.; Lian, D.; Gao, S. Future Frame Prediction for Anomaly Detection – A New Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6536–6545. [Google Scholar]

- Luo, W.; Liu, W.; Gao, S. A Revisit of Sparse Coding Based Anomaly Detection in Stacked RNN Framework. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 341–349. [Google Scholar]

- Samuel, D.J.; Cuzzolin, F. Unsupervised Anomaly Detection for a Smart Autonomous Robotic Assistant Surgeon (SARAS) Using a Deep Residual Autoencoder. IEEE Robot. Autom. Lett. 2021, 6, 7256–7261. [Google Scholar] [CrossRef]

- Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6479–6488. [Google Scholar]

- Sabokrou, M.; Fayyaz, M.; Fathy, M.; Klette, R. Deep-Cascade: Cascading 3D Deep Neural Networks for Fast Anomaly Detection and Localization in Crowded Scenes. IEEE Trans. Image Process. 2017, 26, 1992–2004. [Google Scholar] [CrossRef] [PubMed]

- Adam, A.; Rivlin, E.; Shimshoni, I.; Reinitz, D. Robust Real-Time Unusual Event Detection Using Multiple Fixed-Location Monitors. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 555–560. [Google Scholar] [CrossRef] [PubMed]

- Yang, H.; Wang, B.; Lin, S.; Wipf, D.; Guo, M.; Guo, B. Unsupervised Extraction of Video Highlights via Robust Recurrent Auto-Encoders. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4633–4641. [Google Scholar]

- Tran, H.T.M.; Hogg, D. Anomaly Detection Using a Convolutional Winner-Take-All Autoencoder. In Proceedings of the British Machine Vision Conference 2017; British Machine Vision Association: Durham, UK, 2017. [Google Scholar]

- Lu, C.; Shi, J.; Jia, J. Abnormal Event Detection at 150 FPS in MATLAB. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2720–2727. [Google Scholar]

- Ravanbakhsh, M.; Sangineto, E.; Nabi, M.; Sebe, N. Training Adversarial Discriminators for Cross-Channel Abnormal Event Detection in Crowds. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV); IEEE: New York, NY, USA, 2019; pp. 1896–1904. [Google Scholar]

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810. [Google Scholar]

- Xu, D.; Ricci, E.; Yan, Y.; Song, J.; Sebe, N. Learning Deep Representations of Appearance and Motion for Anomalous Event Detection. arXiv 2015, arXiv:1510.01553. [Google Scholar]

- Fan, Y.; Wen, G.; Li, D.; Qiu, S.; Levine, M.D.; Xiao, F. Video Anomaly Detection and Localization via Gaussian Mixture Fully Convolutional Variational Autoencoder. Comput. Vis. Image Underst. 2020, 195, 102920. [Google Scholar] [CrossRef] [Green Version]

- Sun, J.; Wang, X.; Xiong, N.; Shao, J. Learning Sparse Representation with Variational Auto-Encoder for Anomaly Detection. IEEE Access 2018, 6, 33353–33361. [Google Scholar] [CrossRef]

- Nayak, R.; Pati, U.C.; Das, S.K. A Comprehensive Review on Deep Learning-Based Methods for Video Anomaly Detection. Image Vis. Comput. 2021, 106, 104078. [Google Scholar] [CrossRef]

- Yan, S.; Smith, J.S.; Lu, W.; Zhang, B. Abnormal Event Detection from Videos Using a Two-Stream Recurrent Variational Autoencoder. IEEE Trans. Cogn. Dev. Syst. 2018, 12, 30–42. [Google Scholar] [CrossRef]

- Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning Temporal Regularity in Video Sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 733–742. [Google Scholar]

- Colque, R.V.H.M.; Caetano, C.; de Andrade, M.T.L.; Schwartz, W.R. Histograms of Optical Flow Orientation and Magnitude and Entropy to Detect Anomalous Events in Videos. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 673–682. [Google Scholar] [CrossRef]

- Zhao, Y.; Deng, B.; Shen, C.; Liu, Y.; Lu, H.; Hua, X.-S. Spatio-Temporal Autoencoder for Video Anomaly Detection. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1933–1941. [Google Scholar]

- Lee, S.; Kim, H.G.; Ro, Y.M. STAN: Spatio-Temporal Adversarial Networks for Abnormal Event Detection. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); IEEE: New York, NY, USA, 2018; pp. 1323–1327. [Google Scholar]

- Kiran, B.R.; Thomas, D.M.; Parakkal, R. An Overview of Deep Learning Based Methods for Unsupervised and Semi-Supervised Anomaly Detection in Videos. J. Imaging 2018, 4, 36. [Google Scholar] [CrossRef] [Green Version]

- Zhou, J.T.; Du, J.; Zhu, H.; Peng, X.; Liu, Y.; Goh, R.S.M. AnomalyNet: An Anomaly Detection Network for Video Surveillance. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2537–2550. [Google Scholar] [CrossRef]

- Vu, H.; Nguyen, T.D.; Le, T.; Luo, W.; Phung, D. Robust Anomaly Detection in Videos Using Multilevel Representations. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5216–5223. [Google Scholar]

- Chen, D.; Wang, P.; Yue, L.; Zhang, Y.; Jia, T. Anomaly Detection in Surveillance Video Based on Bidirectional Prediction. Image Vis. Comput. 2020, 98, 103915. [Google Scholar] [CrossRef]

- Nawaratne, R.; Alahakoon, D.; de Silva, D.; Yu, X. Spatiotemporal Anomaly Detection Using Deep Learning for Real-Time Video Surveillance. IEEE Trans. Ind. Inf. 2019, 16, 393–402. [Google Scholar] [CrossRef]

- Sun, C.; Jia, Y.; Song, H.; Wu, Y. Adversarial 3D Convolutional Auto-Encoder for Abnormal Event Detection in Videos. IEEE Trans. Multimed. 2020, 23, 3292–3305. [Google Scholar] [CrossRef]

- Bansod, S.D.; Nandedkar, A.V. Crowd Anomaly Detection and Localization Using Histogram of Magnitude and Momentum. Vis. Comput. 2020, 36, 609–620. [Google Scholar] [CrossRef]

- Wang, S.; Zeng, Y.; Liu, Q.; Zhu, C.; Zhu, E.; Yin, J. Detecting Abnormality without Knowing Normality: A Two-Stage Approach for Unsupervised Video Abnormal Event Detection. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 26 October 2018; pp. 636–644. [Google Scholar]

- Li, N.; Chang, F.; Liu, C. Spatial-Temporal Cascade Autoencoder for Video Anomaly Detection in Crowded Scenes. IEEE Trans. Multimed. 2020, 23, 203–215. [Google Scholar] [CrossRef]

- Le, V.-T.; Kim, Y.-G. Attention-Based Residual Autoencoder for Video Anomaly Detection. Appl. Intell. 2023, 53, 3240–3254. [Google Scholar] [CrossRef]

- Munawar, H.S.; Hammad, A.W.A.; Haddad, A.; Soares, C.A.P.; Waller, S.T. Image-Based Crack Detection Methods: A Review. Infrastructures 2021, 6, 115. [Google Scholar] [CrossRef]

- Abdel-Qader, I.; Abudayyeh, O.; Kelly, M.E. Analysis of Edge-Detection Techniques for Crack Identification in Bridges. J. Comput. Civ. Eng. 2003, 17, 255–263. [Google Scholar] [CrossRef] [Green Version]

- Yamaguchi, T.; Nakamura, S.; Saegusa, R.; Hashimoto, S. Image-Based Crack Detection for Real Concrete Surfaces. IEEJ Trans. Electr. Electron. Eng. 2008, 3, 128–135. [Google Scholar] [CrossRef]

- Gehri, N.; Mata-Falcón, J.; Kaufmann, W. Automated Crack Detection and Measurement Based on Digital Image Correlation. Constr. Build. Mater. 2020, 256, 119383. [Google Scholar] [CrossRef]

- Adhikari, R.S.; Moselhi, O.; Bagchi, A. Image-Based Retrieval of Concrete Crack Properties for Bridge Inspection. Autom. Constr. 2014, 39, 180–194. [Google Scholar] [CrossRef]

- Xu, B.; Huang, Y. Automatic Inspection of Pavement Cracking Distress; Tescher, A.G., Ed.; SPIE: Bellingham, WA, USA, 18 August 2005; p. 590901. [Google Scholar] [CrossRef]

- Tsai, Y.-C.; Kaul, V.; Mersereau, R.M. Critical Assessment of Pavement Distress Segmentation Methods. J. Transp. Eng. 2010, 136, 11–19. [Google Scholar] [CrossRef]

- Zou, Q.; Cao, Y.; Li, Q.; Mao, Q.; Wang, S. CrackTree: Automatic Crack Detection from Pavement Images. Pattern Recognit. Lett. 2012, 33, 227–238. [Google Scholar] [CrossRef]