Dynamic Service Function Chain Deployment and Readjustment Method Based on Deep Reinforcement Learning

Abstract

1. Introduction

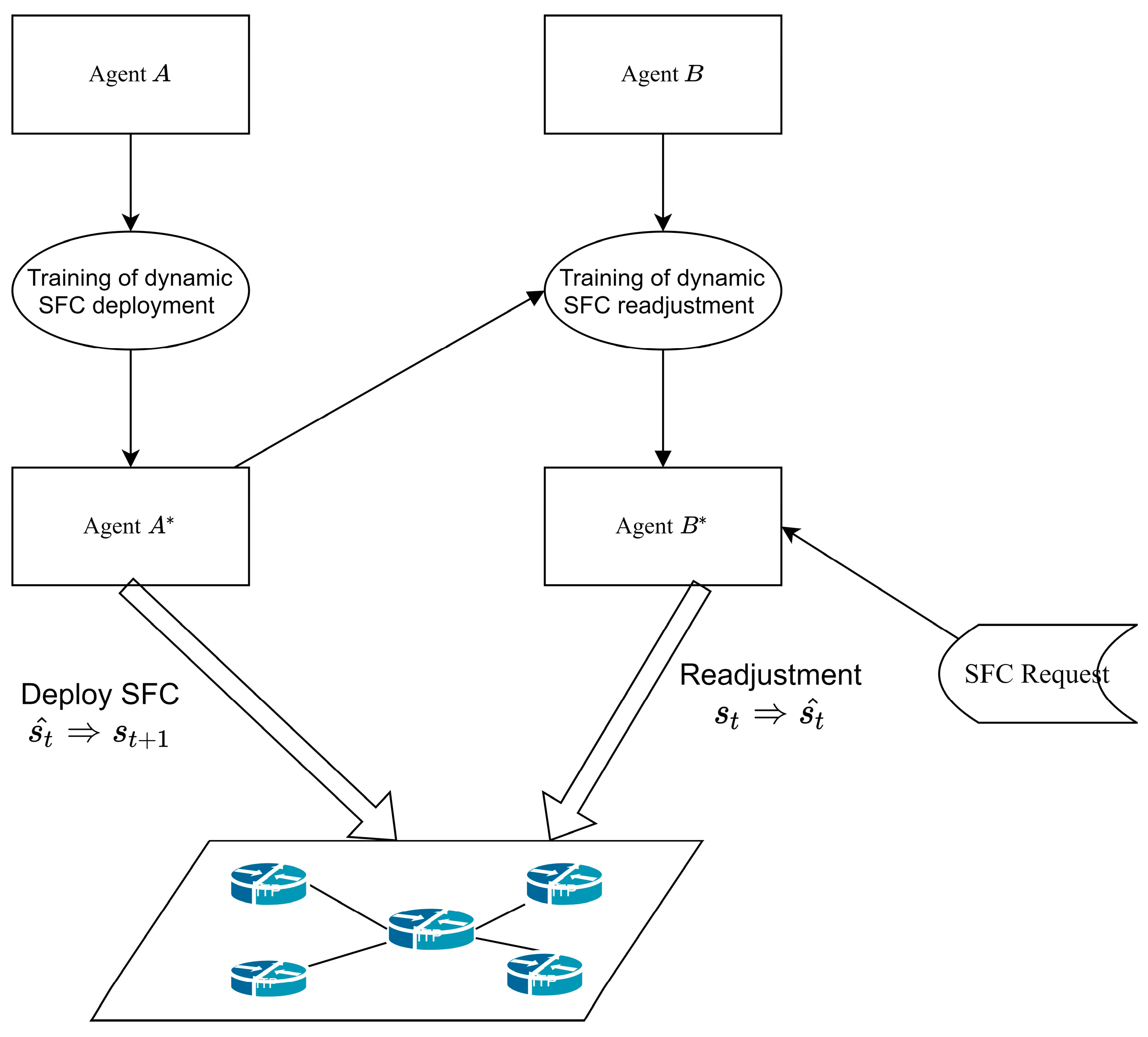

- We propose MQDR, a DRL-based framework for dynamic deployment and readjustment of SFCs. The framework employs two trained agents: one selects VNF deployment locations, and the other adjusts the underlying network, so that dynamically arriving SFC requests can be accepted successfully. We are the first to present the joint use of these two agents to address the dynamic SFC deployment problem.

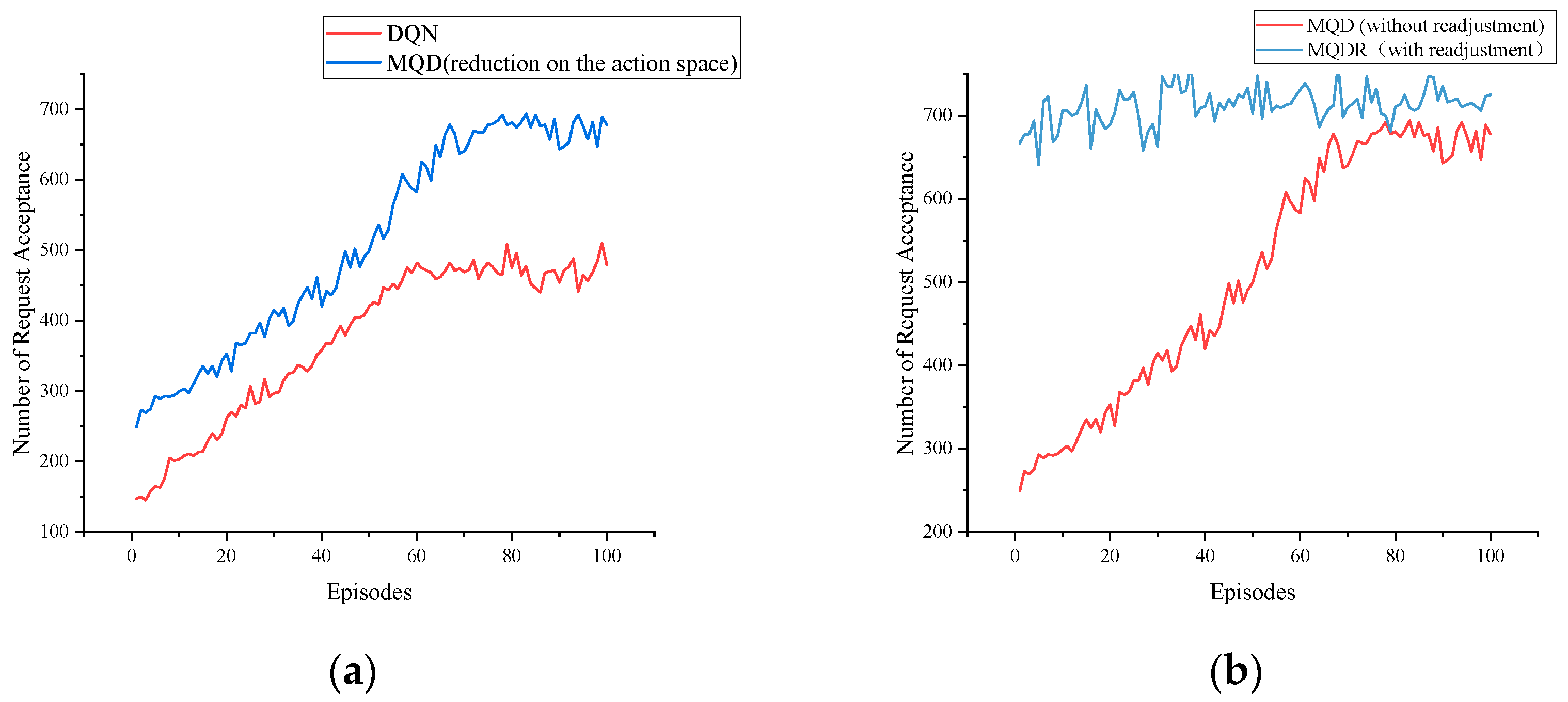

- We impose restrictions on the action space to simplify the training of the agents and enhance the deployment performance. Rather than allowing the agents to choose deployment locations among all nodes, MQDR incorporates the M Shortest Path algorithm (MSPA) to narrow the set of actions from which the agents choose.

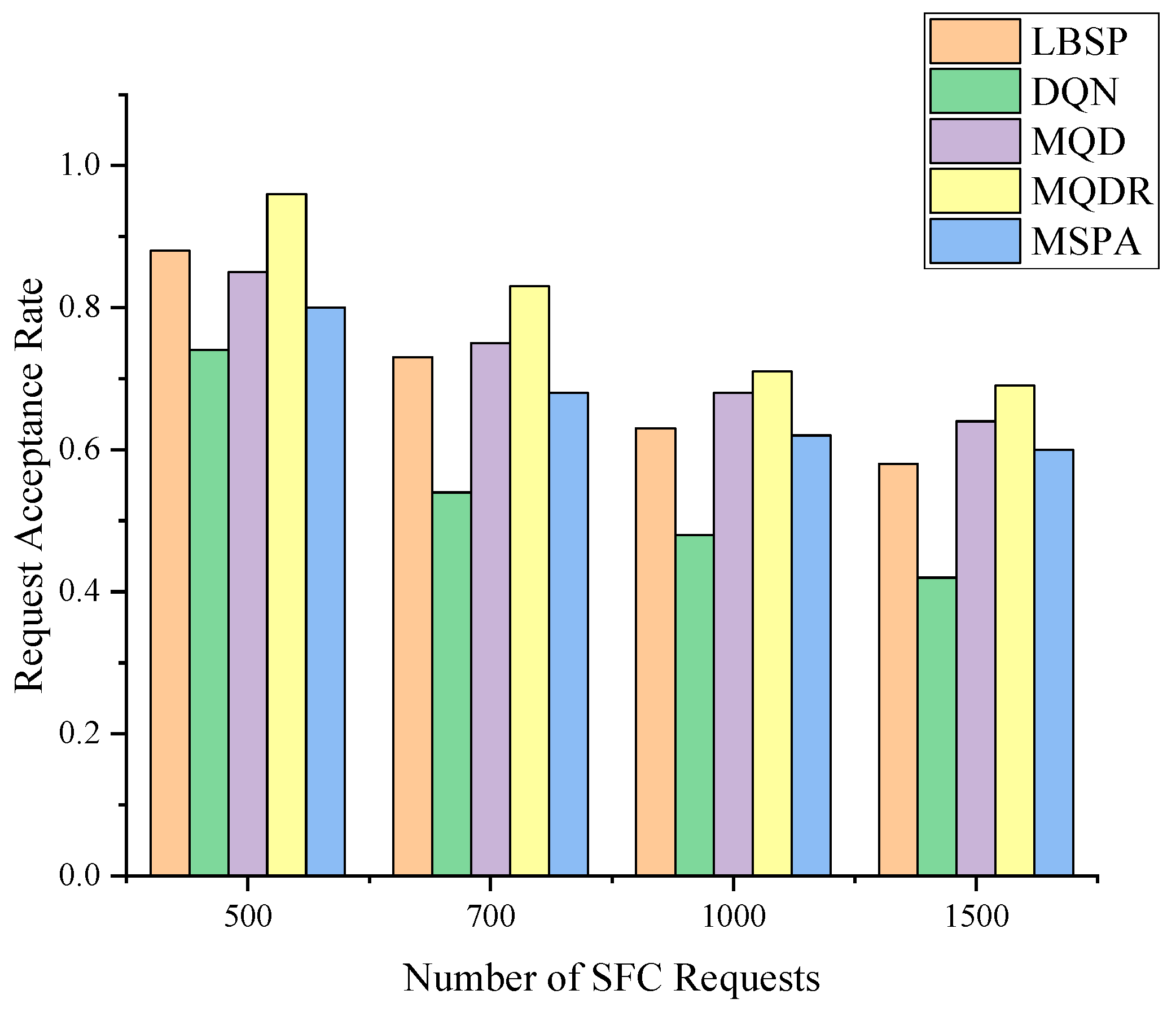

- Finally, we compare MQDR with other methods and find that MQDR improves the request acceptance rate by approximately 25% compared with the original DQN algorithm and by 9.3% compared with the Load Balancing Shortest Path (LBSP) algorithm. The proposed method is therefore a feasible solution to the dynamic SFC deployment problem.

2. Related Works

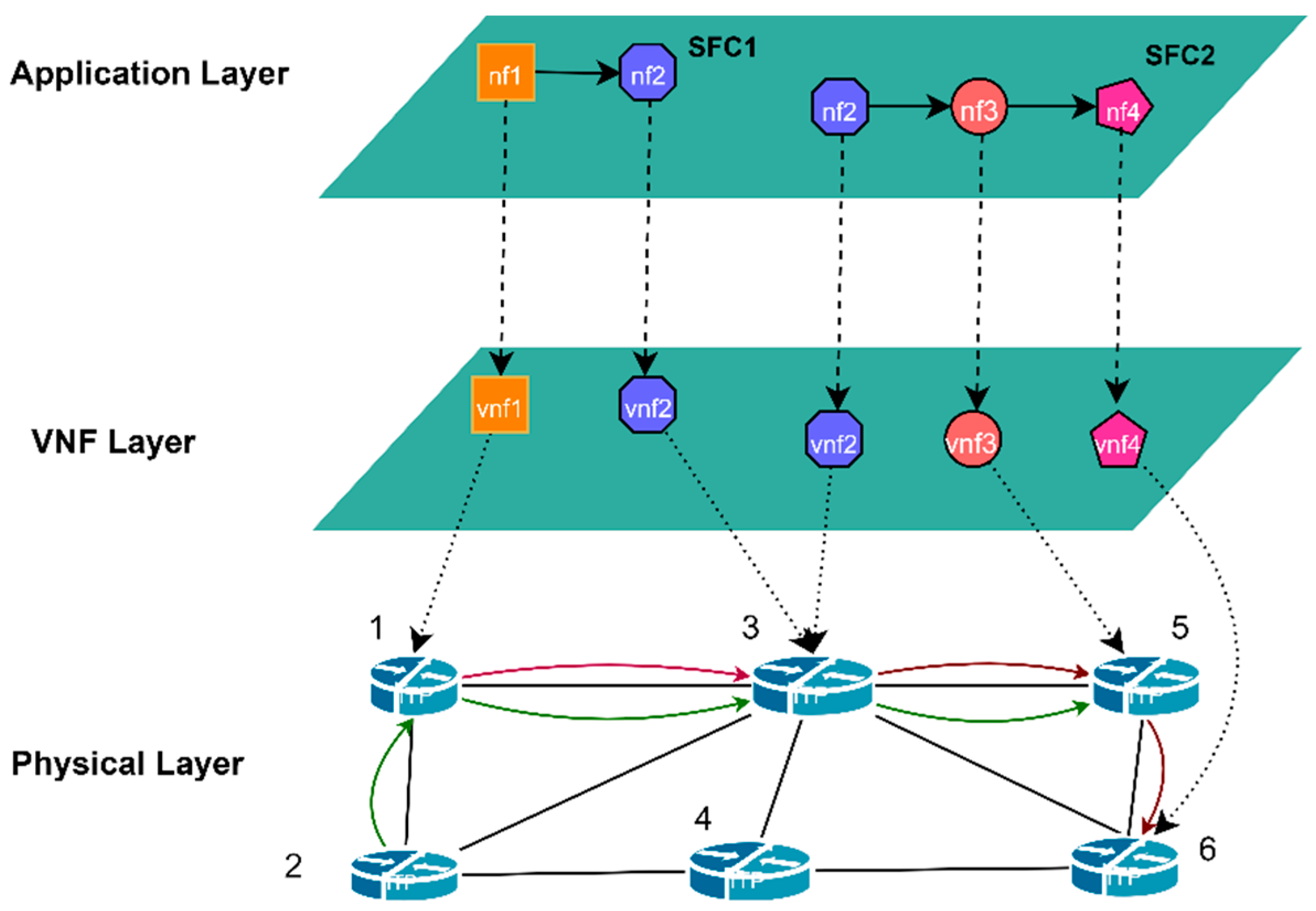

3. System Model and Problem Description

3.1. Network Model

3.2. SFC and VNF

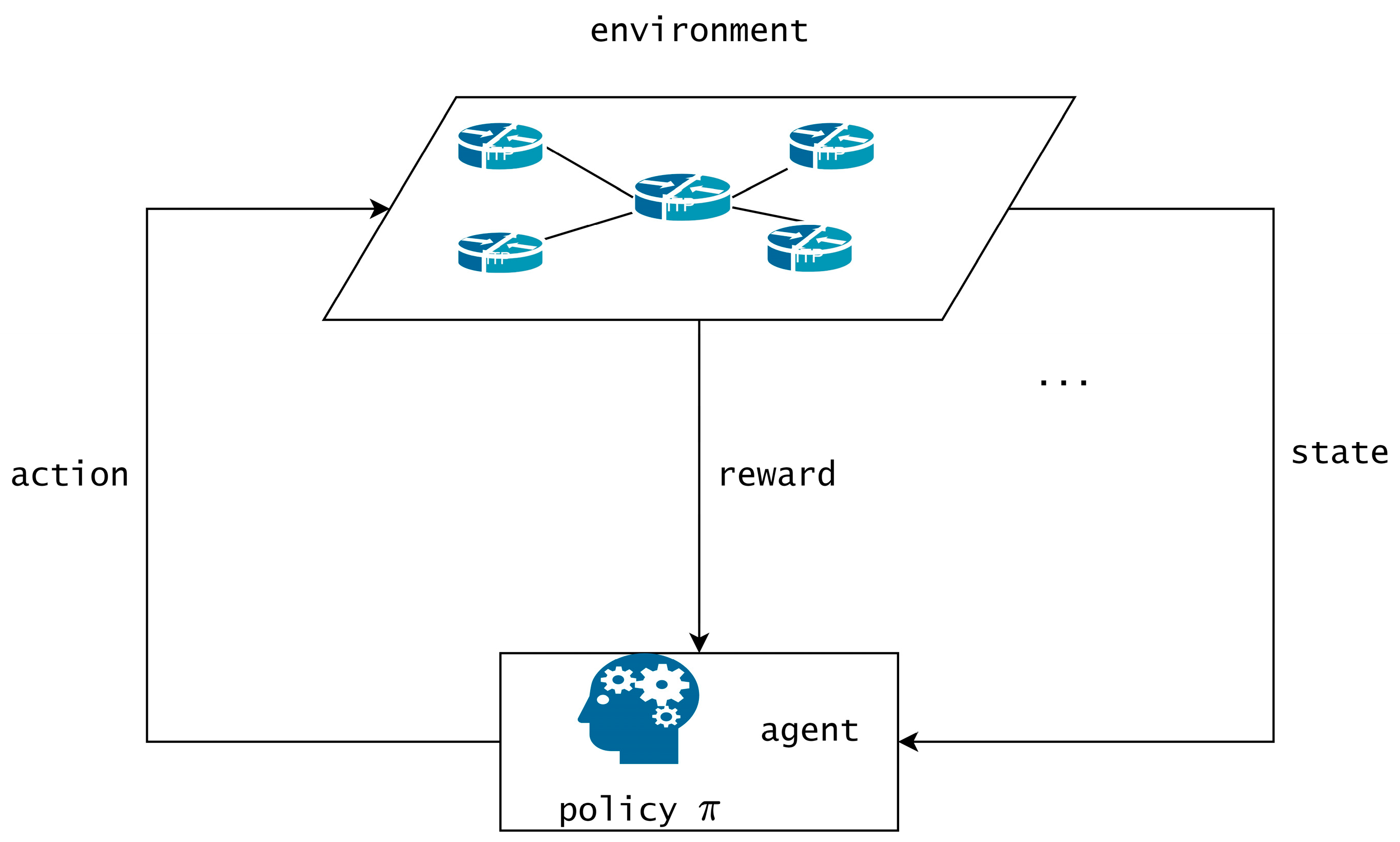

3.3. Markov Decision Process (MDP)

4. Algorithm Design

4.1. DQN-Based Dynamic SFC Deployment and Readjustment Framework (MQDR)

4.2. DQN-Based Dynamic SFC Deployment Algorithm

| Algorithm 1: DQN-based dynamic SFC deployment algorithm |
|---|
| Input: The underlying network state and the set of dynamically arriving SFC requests. |
| Output: Dynamic SFC deployment policy. |
| 1: Initialize the action-value function with randomly generated neural network weights. |
| 2: Initialize the target action-value function with weights copied from the action-value function. |
| 3: Initialize the experience pool D with its memory capacity. |
| 4: for episode in range(EPISODES): |
| 5: Generate a new collection of SFCs. |
| 6: Initialize the state. |
| 7: for step in range(STEPS): |
| 8: Select the nodes that satisfy the resource and delay requirements. |
| 9: Among the nodes that satisfy the deployment requirements, select the m nodes closest to the last deployed node and add them to the candidate action set. |
| 10: With the exploration probability, select an action at random. |
| 11: Otherwise, select the action with the highest action value. |
| 12: Execute the action and observe the reward. |
| 13: Store the transition in D. |
| 14: Sample a random minibatch of transitions from D. |
| 15: Set the target value for each sampled transition. |
| 16: Perform a gradient descent step on the loss with respect to the network parameters. |
| 17: At a fixed step interval, reset the target action-value function to the current action-value function. |
| 18: end for |
| 19: end for |
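The action-restriction steps of Algorithm 1 (filter feasible nodes, keep the m closest to the last deployed node, then choose epsilon-greedily) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the adjacency layout, the function names `shortest_latencies`, `candidate_actions`, and `epsilon_greedy`, the use of latency-weighted shortest-path distance as the notion of "closest", and the simplified CPU and delay checks are all assumptions made for the example.

```python
import heapq
import random


def shortest_latencies(adj, source):
    """Dijkstra: lowest total link latency from `source` to each reachable node.

    `adj` maps node -> {neighbor: link latency}; this layout is hypothetical.
    """
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry, a shorter path was already found
        for v, w in adj[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


def candidate_actions(adj, cpu_free, last_node, cpu_need, delay_budget, m):
    """Return up to m feasible nodes, ordered by distance from `last_node`.

    Feasibility here is an assumed simplification: enough free CPU and a
    path latency within the remaining delay budget.
    """
    dist = shortest_latencies(adj, last_node)
    feasible = [
        n for n, d in dist.items()
        if cpu_free[n] >= cpu_need and d <= delay_budget
    ]
    feasible.sort(key=lambda n: (dist[n], n))  # node name breaks ties
    return feasible[:m]


def epsilon_greedy(q_values, candidates, epsilon, rng):
    """Pick a node from the restricted candidate set only."""
    if not candidates:
        return None  # no feasible node: the request cannot be placed
    if rng.random() < epsilon:
        return rng.choice(candidates)
    return max(candidates, key=lambda n: q_values[n])
```

In use, `q_values` would come from the trained network's output for the current state. Because the greedy choice is taken over `candidates` rather than over all nodes, a node with a high Q-value but insufficient resources is never selected.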

4.3. DQN-Based Algorithm for Dynamic SFC Readjustment

| Algorithm 2: DQN-based dynamic SFC readjustment algorithm |
|---|
| Input: The network state, the set of SFCs, and the dynamic SFC deployment policy. |
| Output: Dynamic SFC readjustment policy. |
| 1: Initialize the action-value function with randomly generated neural network weights. |
| 2: Initialize the target action-value function with weights copied from the action-value function. |
| 3: Initialize the experience pool D with its memory capacity. |
| 4: for episode in range(EPISODES): |
| 5: Generate a new collection of SFCs. |
| 6: Initialize the state. |
| 7: for step in range(STEPS): |
| 8: Generate the set of nodes that need to be readjusted based on the state of the underlying network. |
| 9: With the exploration probability, select an action at random. |
| 10: Otherwise, select the action with the highest action value. |
| 11: Execute the readjustment action. |
| 12: Perform deployment with the dynamic SFC deployment policy. |
| 13: Observe the reward. |
| 14: Store the transition in D. |
| 15: Sample a random minibatch of transitions from D. |
| 16: Set the target value for each sampled transition. |
| 17: Perform a gradient descent step on the loss with respect to the network parameters. |
| 18: At a fixed step interval, reset the target action-value function to the current action-value function. |
| 19: end for |
| 20: end for |
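Both algorithms share the same experience-replay and target-setting steps. The sketch below shows one common way to realize them, using the standard DQN target (reward plus discounted maximum target-network value). The exact target used by the authors is not recoverable from the listing, so the formula, the `done` flag, the `gamma` discount parameter, and the names `ReplayBuffer` and `dqn_targets` are assumptions for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity experience pool; the oldest transitions are evicted first."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def store(self, transition):
        # transition = (state, action, reward, next_state, done)
        self.memory.append(transition)

    def sample(self, batch_size, rng):
        # Sample without replacement; shrink the batch if the pool is small.
        k = min(batch_size, len(self.memory))
        return rng.sample(list(self.memory), k)


def dqn_targets(batch, target_q, gamma):
    """Compute one training target per sampled transition.

    `target_q` is a callable mapping a state to a list of action values from
    the target network (a hypothetical interface). Assumed standard rule:
    y = r for terminal transitions, else y = r + gamma * max_a Q_target(s', a).
    """
    targets = []
    for _state, _action, reward, next_state, done in batch:
        if done:
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(target_q(next_state)))
    return targets
```

The online network is then regressed toward these targets by gradient descent, and the target network is refreshed from the online weights at a fixed step interval, which keeps the targets stable between refreshes.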

4.4. Conclusions

5. Performance Evaluation

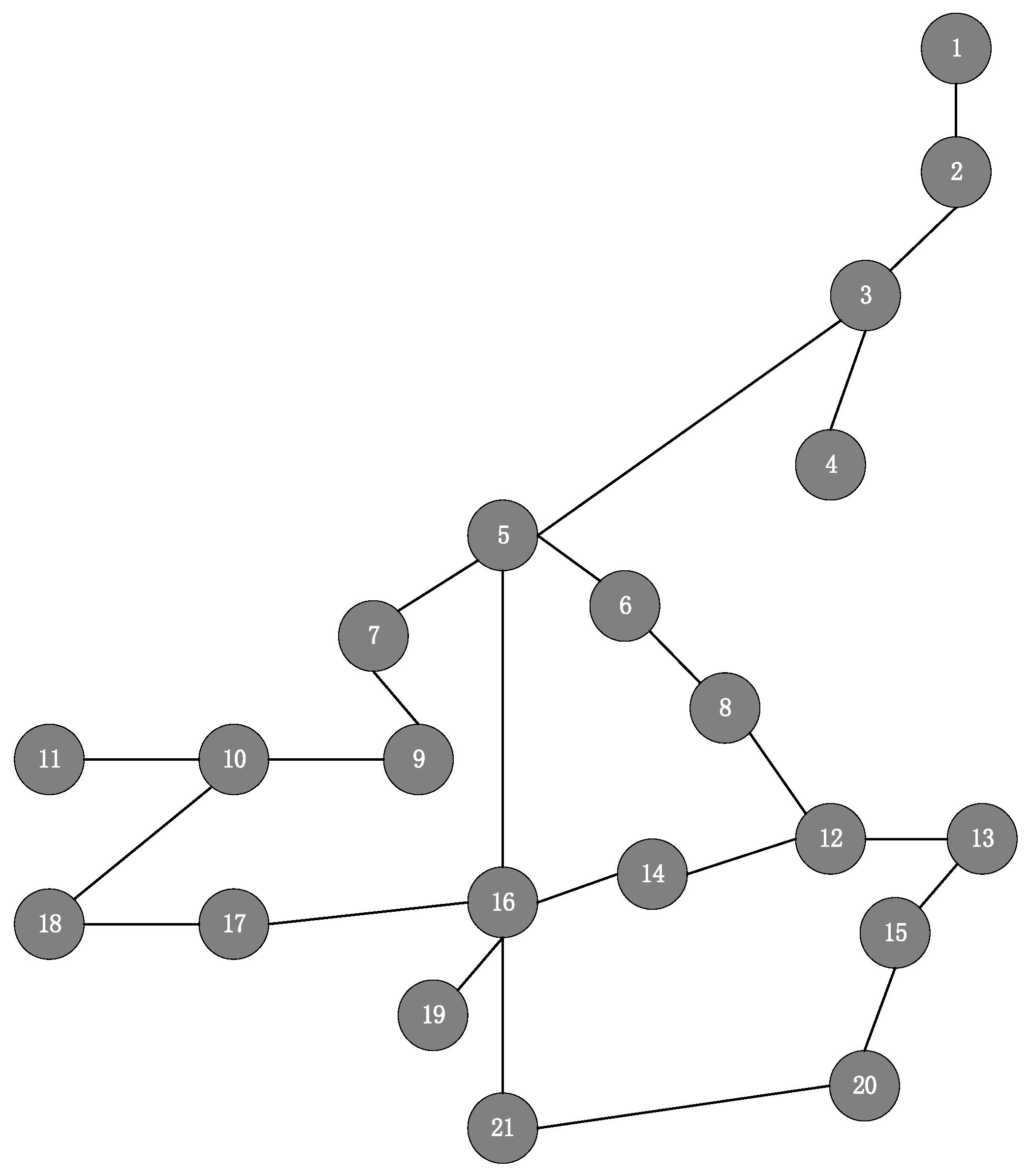

5.1. Simulation Setup

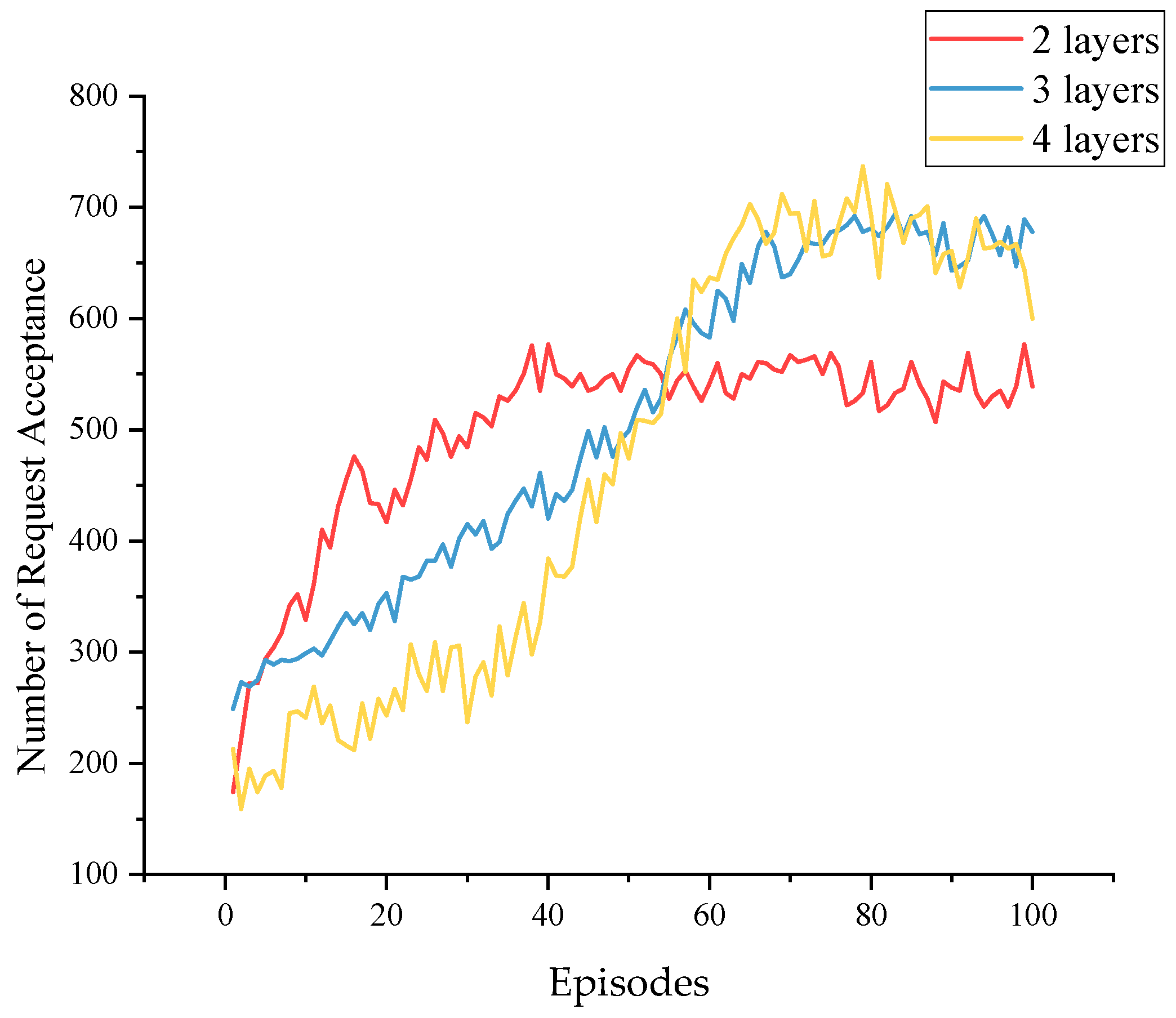

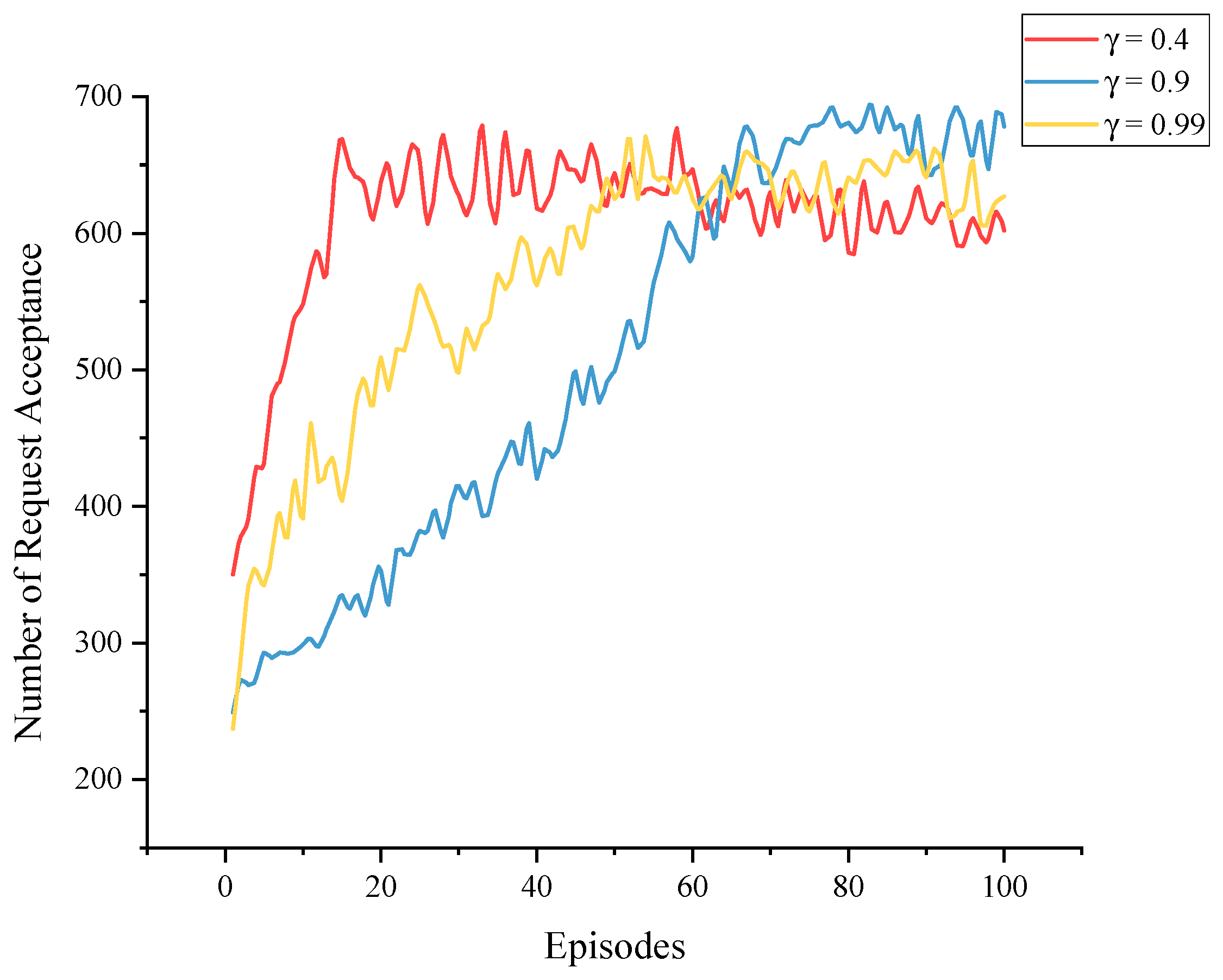

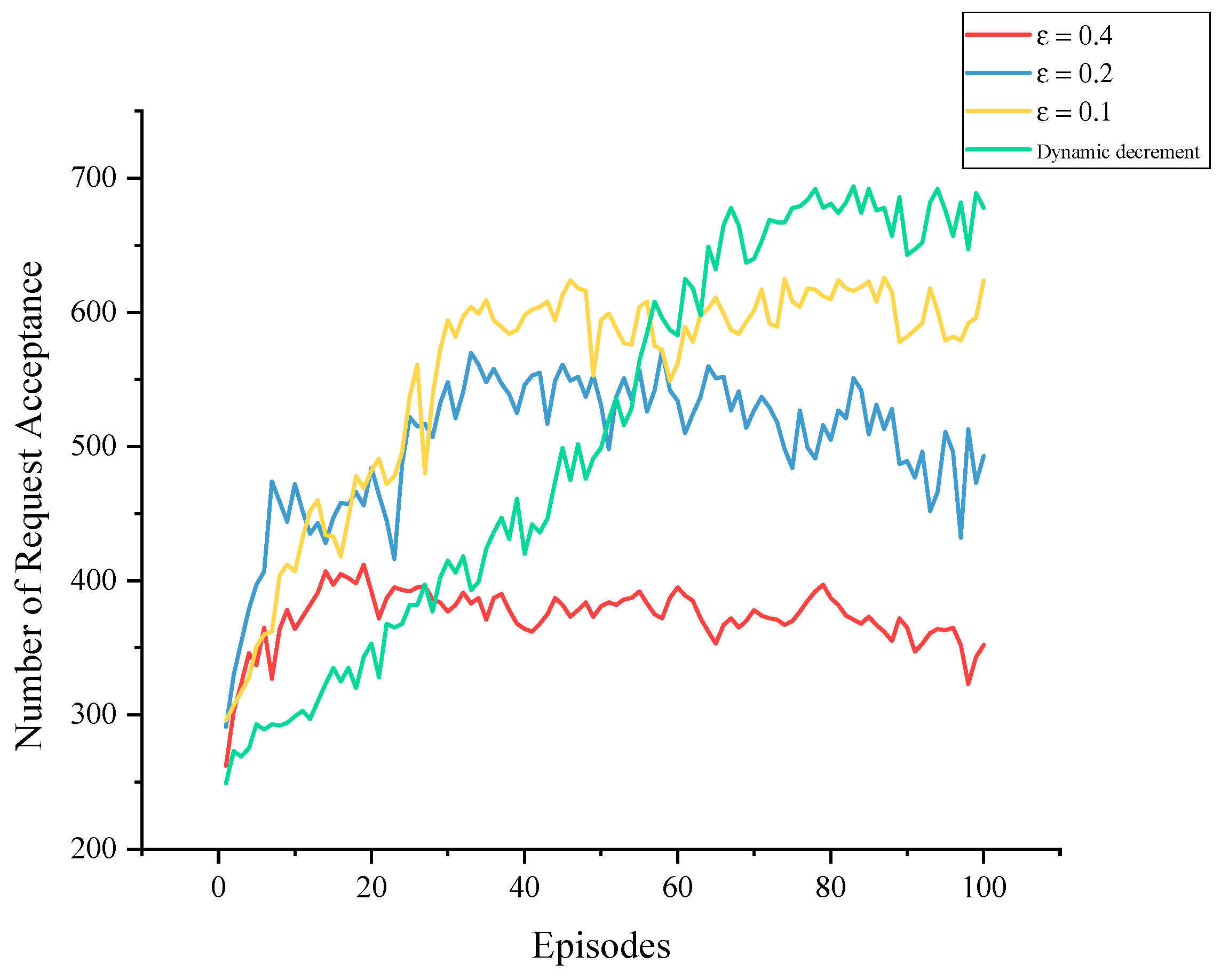

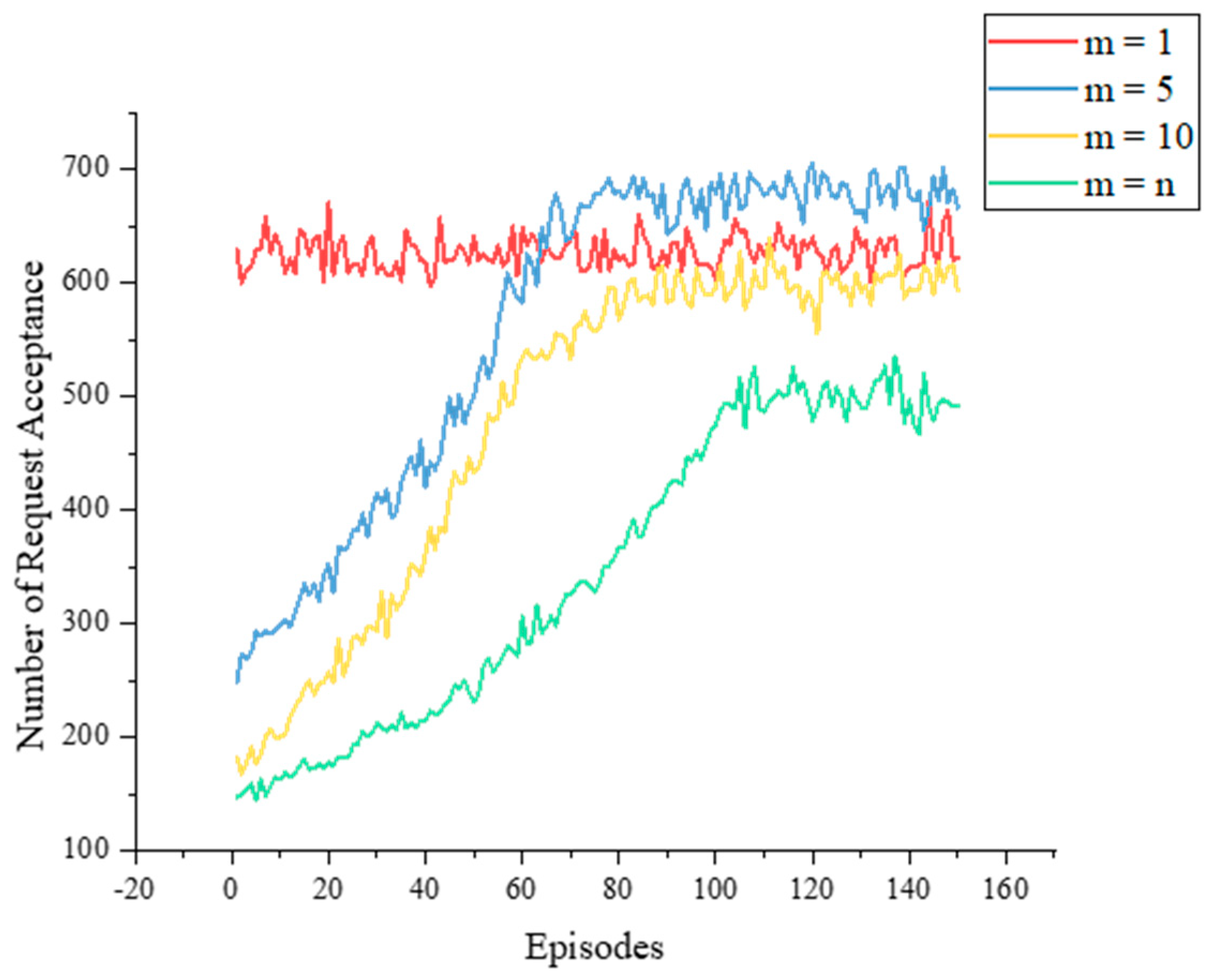

5.2. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Notation | Description |
|---|---|
| | The underlying network |
| | Set of underlying nodes |
| | Set of underlying links |
| | Underlying link between two nodes |
| | Bandwidth of an underlying link |
| | Link latency of an underlying link |
| | Set of VNF types |
| | CPU resource factor of a VNF type |
| | Traffic scaling factor of a VNF type |
| | Set of SFC requests |
| | Set of ordered NF requests of an SFC request, indexed by u (the u-th NF) |
| | Set of logical links of an SFC request |
| | CPU resource requirement of an NF request |
| | Bandwidth requirement of a logical link |
| | End-to-end delay allowed for the request |

| Notation | Description |
|---|---|
| | Whether an SFC request has been successfully deployed |
| | Whether an NF request is of a given VNF type |
| | Whether the u-th virtual node is deployed on a given physical node |
| | Whether a traffic segment flows through a given physical link |
| | Whether a VNF of a given type exists on a given physical node |

| Number of Hidden Layers | Request Acceptance Rate |
|---|---|
| 2 | 0.56 |
| 3 | 0.71 |
| 4 | 0.68 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ran, J.; Wang, W.; Hu, H. Dynamic Service Function Chain Deployment and Readjustment Method Based on Deep Reinforcement Learning. Sensors 2023, 23, 3054. https://doi.org/10.3390/s23063054