Multimodal Image Fusion for X-ray Grating Interferometry

Abstract

1. Introduction

- (1) drawbacks of image fusion methods in the XGI were analyzed;

- (2) an image fusion scheme based on NSCT-SCM for the XGI was proposed;

- (3) a tunable sub-band coefficient selection strategy was proposed to serve special requirements for the XGI fusion;

- (4) the proposed NSCT-SCM image fusion scheme was applied to XGI data of frog toes and compared with current fusion methods in the XGI fusion field, exhibiting state-of-the-art performance.

2. Materials and Methods

2.1. Image Fusion for X-ray Grating Interferometry

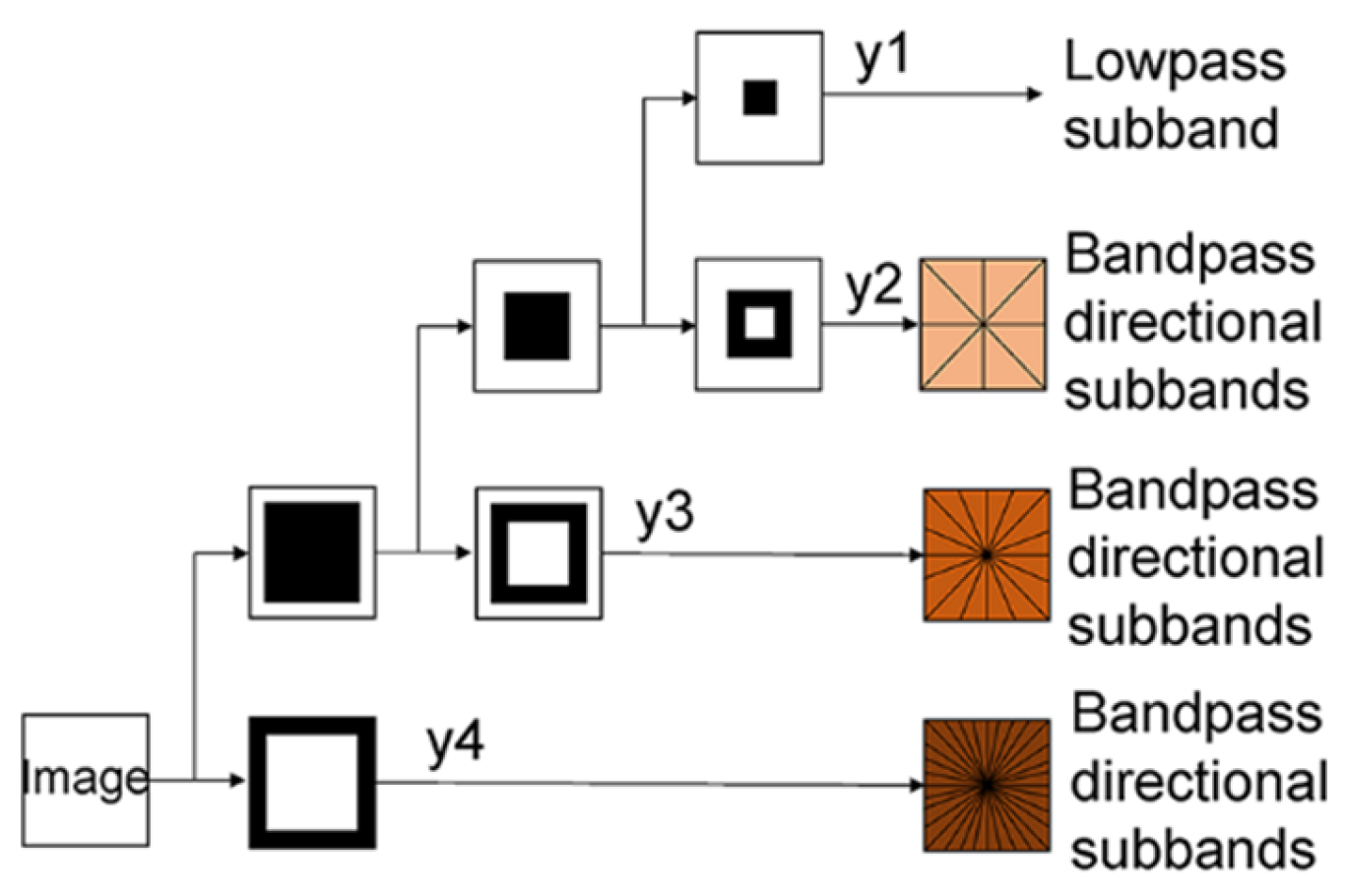

2.2. Non-Subsampled Contourlet Transform

2.3. Spiking Cortical Model

3. NSCT-SCM Fusion Scheme

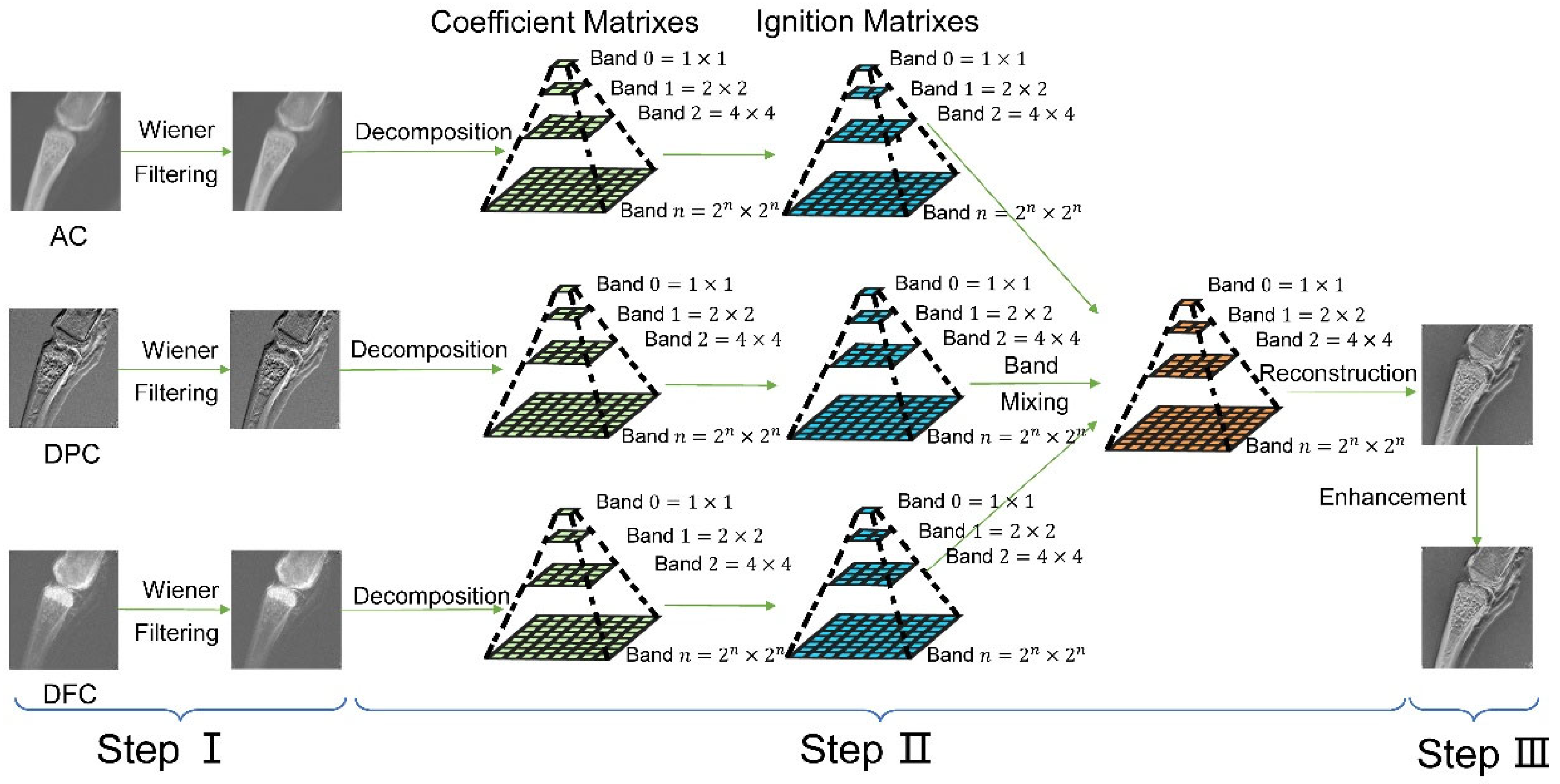

3.1. Step 1. Image Denoising Based on Wiener Filtering

3.2. Step 2. NSCT-SCM XGI Fusion Algorithm

- First, the NSCT was applied to the three input images (AC, DPC, and DFC), yielding the high-frequency coefficients and the low-frequency coefficient of each image. The high-frequency coefficients carry an index because several of them are decomposed from a single image, whereas the NSCT yields only one low-frequency coefficient per image; the number of high-frequency coefficients depends on the decomposition levels of the NSDFB and NSPFB. Note that each coefficient obtained from the NSCT had the same size as the input images.

- Second, the high-frequency and low-frequency coefficients of the three channels were fed into the SCM, which generated the firing state of each coefficient, i.e., the ignition matrix. Each ignition matrix has the same size as its input coefficient.

- Third, two separate fusion rules were applied to the high-frequency and low-frequency coefficients, because the high-frequency sub-bands must preserve details and features, whereas the low-frequency part of the fused image should stay close to the AC image. A fused tri-contrast image is easier for doctors or radiologists to analyze when its low-frequency sub-band is close to that of the AC channel: the final fusion result then generally resembles traditional absorption-based tomography while containing the complementary information of the DPC and DFC channels.

  For the low-frequency coefficients, the fused coefficient is governed by a tunable parameter that determines the similarity between the fused image and the AC image; the larger the value of this parameter, the closer the fused image will be to the AC image.

  For the high-frequency coefficients, the fused coefficient could take one of seven possible forms: the coefficient of a single channel (three cases), the average of the coefficients of two channels (three cases), or the weighted average of the coefficients of all three channels (one case). The rule sets a threshold for comparing the ignition results of the three channels. This comparison measures whether the information of a pixel coming from a single channel is significant enough to replace the others, or whether an average over two or three channels is required. Specifically, when the ignition result of one channel was significantly larger than the others, the coefficient from this channel was used directly as the fused value. When two were significantly larger than the third, the average of their coefficients was used. When no channel was significantly larger than the others, the fused value was the weighted average of all three channels, using three weight factors. A detailed fusion scheme of high-frequency coefficients is presented in the Supplemental Information, Section S1.

- Finally, the inverse NSCT was applied to the fused low-frequency and high-frequency coefficients, yielding the fused image.
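
The high-frequency selection logic described above can be sketched per pixel as follows. This is a minimal illustration, not the authors' implementation (the detailed rule is given in Supplemental Section S1). The function names, the significance test (a channel counts as significant when its ignition value lies within `threshold` of the largest one), and the normalization of the weights are all assumptions made for this example.

```python
def fuse_high_pixel(coeffs, fires, threshold, weights):
    """Fuse one high-frequency pixel from three channels (AC, DPC, DFC).

    coeffs    -- the three high-frequency coefficients at this pixel
    fires     -- the three SCM ignition values at this pixel
    threshold -- assumed margin: a channel is "significant" if its ignition
                 value is within this margin of the largest one
    weights   -- weight factors used when no channel dominates
    """
    top = max(fires)
    significant = [c for c, f in zip(coeffs, fires) if top - f <= threshold]

    if len(significant) == 1:
        # Cases 1-3: one channel dominates, so take its coefficient directly.
        return significant[0]
    if len(significant) == 2:
        # Cases 4-6: two channels dominate, so take their plain average.
        return sum(significant) / 2
    # Case 7: no channel dominates, so take the weighted average of all three.
    return sum(w * c for w, c in zip(weights, coeffs)) / sum(weights)


def fuse_high(coeff_maps, fire_maps, threshold, weights):
    """Apply fuse_high_pixel to every pixel of three equally sized 2D lists."""
    rows, cols = len(coeff_maps[0]), len(coeff_maps[0][0])
    return [
        [
            fuse_high_pixel(
                [m[i][j] for m in coeff_maps],
                [m[i][j] for m in fire_maps],
                threshold,
                weights,
            )
            for j in range(cols)
        ]
        for i in range(rows)
    ]
```

Counting the branches gives three single-channel outcomes, three two-channel averages, and one three-channel weighted average, which matches the seven possible values mentioned in the text.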

3.3. Step 3. Image Enhancement Using CLAHE, AS, and GC

- First, the image was processed by CLAHE [35], which divides it into small tiles and adjusts the histogram of each tile to enhance its contrast. A clipping limit was applied during this processing to prevent excessive noise in the image, and bilinear interpolation was implemented on the tiles to avoid image discontinuities. This step produced the CLAHE-processed image.

- Second, the CLAHE-processed image was sharpened by the AS method. Its weighting factor was determined adaptively: the image entropy was calculated for many candidate values of the factor, and the value at which the maximum entropy was obtained was selected. The final weighting factor was then derived from this value and a constant that prevents the image from becoming over-sharpened, with a fixed value of 3, empirically given by Felix Scholkmann et al. in their work [17]. This step produced the sharpened image.

- Finally, in the GC step, the sharpened image was enhanced by a sigmoid function with two manually tunable parameters.
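
As an illustration of the last two enhancement steps, the sketch below picks a sharpening weight by maximizing image entropy and then applies a sigmoid mapping. It is a simplified stand-in under stated assumptions, not the paper's formulas: the sharpening operator (a 1D unsharp mask on a row of pixels), the candidate weights, the histogram bin count, and the sigmoid parameter names `gain` and `cutoff` are hypothetical, and intensities are assumed to lie in [0, 1]. The over-sharpening constant mentioned above is not modeled.

```python
import math
from collections import Counter


def entropy(pixels, bins=16):
    """Shannon entropy (bits) of intensities in [0, 1], quantized into bins."""
    counts = Counter(min(int(p * bins), bins - 1) for p in pixels)
    total = len(pixels)
    return -sum(n / total * math.log2(n / total) for n in counts.values())


def unsharp(pixels, weight):
    """Stand-in sharpening: add weight * (pixel - 3-point mean), clipped to [0, 1]."""
    out = []
    for i, p in enumerate(pixels):
        left = pixels[max(i - 1, 0)]
        right = pixels[min(i + 1, len(pixels) - 1)]
        blurred = (left + p + right) / 3
        out.append(min(max(p + weight * (p - blurred), 0.0), 1.0))
    return out


def best_weight(pixels, candidates):
    """Return the candidate weight whose sharpened output has maximum entropy."""
    return max(candidates, key=lambda w: entropy(unsharp(pixels, w)))


def sigmoid_map(pixels, gain, cutoff):
    """Sigmoid intensity mapping with two manually tuned parameters."""
    return [1 / (1 + math.exp(-gain * (p - cutoff))) for p in pixels]
```

A caller would typically run `best_weight` over a coarse grid of candidates, sharpen with the selected weight, and then pass the result through `sigmoid_map`.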

4. Measures of the Fusion Performance

- Edge strength [36] stands for the relative amount of edge information transferred from the three input images into the fused result. It is a weighted combination of the edge preservation values of the three input images with respect to the fused image. Each edge preservation value was calculated through a Sobel edge operator, detailed information of which can be found in [36]. The larger the edge strength, the better the image fusion performance.

- Spatial frequency (SF) measures the number of details presented in a stimulus per degree of visual angle. It is computed from the row frequency and the column frequency, which are in turn derived from the gray-value intensities of the pixels in the image. A higher SF value means that an image contains more details and hence indicates a better fusion result.

- Standard deviation (SD) is the square root of the variance and reflects the image contrast: the higher the contrast, the greater the SD. It is calculated from the deviations of the pixel intensities from the mean intensity of the image.

- Entropy (EN) [37] measures how much information is contained in an image. It is calculated from the probability of each gray level in the image. A larger EN value signifies a better image fusion performance.

- Feature mutual information (FMI) [38,39] refers to how much feature information is successfully transferred from the three original images to the fused image. It combines the amounts of feature information transferred from each input image to the fused image; each amount is calculated from the joint distribution function between the two images, evaluated over their pixel coordinates. A larger FMI value indicates that the fusion scheme fused the three images successfully, preserving more feature information from each image.

- The feature similarity index measure (FSIM) [40,41] relates to the similarity between two images based on low-level features, specifically the phase congruency (PC) and the image gradient magnitude (GM). The FSIM of two images is calculated from a local similarity that combines separate similarity measurements for PC and GM, using two parameters and two constants, all of which are defined in [40]. To measure the performance of the XGI fusion, the overall FSIM was calculated by averaging the FSIM values between the fusion result and each of the three input images. The higher the FSIM value, the better the fusion performance.

- The fusion factor (FF) is based on mutual information (MI), an information-theoretic concept that measures the statistical dependence between two random variables. FF measures how much information was transferred from the input images to the fused image. It is defined from the MI between the fused image and each of the three input images, where each MI is computed from the joint probability density function of the two images and the probability density function of each image. A larger FF value means a better image fusion performance.

- The structural similarity index measure (SSIM) [42] measures how much structural information was transferred from one image into another, based on the human eye’s sensitivity to structural information. The SSIM of two images is obtained by dividing the images into windows, computing the structural similarity within each window from the local means, the local variances, and the cross-covariance of the two images in that window, and combining the results over all windows. An overall SSIM value for the XGI fusion was defined from the SSIM values between the fused image and the three input images. Larger SSIM values correspond to better fusion performance.

- Power spectral density (PSD) [43,44] measures the power at each signal frequency. The PSD at a given frequency is estimated from the Fourier terms and the number of samples. The total area enclosed by the PSD curve and the coordinate axis denotes the information contained in an image. If the PSD curve of one image is higher than that of another image within a frequency band, the former image contains more information in that band. A generally higher PSD curve indicates a better image fusion performance [42].
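
For reference, the simpler measures listed above (spatial frequency, standard deviation, entropy, and the mutual information underlying the fusion factor) can be computed as in the following sketch. It uses the textbook definitions of these quantities rather than the paper's exact formulas, which may differ in normalization. The function names and the representation of an image as a 2D list of integer gray levels are illustrative assumptions.

```python
import math
from collections import Counter


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    rows, cols = len(img), len(img[0])
    rf2 = sum((img[i][j] - img[i][j - 1]) ** 2
              for i in range(rows) for j in range(1, cols)) / (rows * cols)
    cf2 = sum((img[i][j] - img[i - 1][j]) ** 2
              for i in range(1, rows) for j in range(cols)) / (rows * cols)
    return math.sqrt(rf2 + cf2)


def standard_deviation(img):
    """Square root of the (population) variance of the pixel intensities."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def image_entropy(img):
    """Shannon entropy in bits over the gray levels present in the image."""
    pixels = [p for row in img for p in row]
    total = len(pixels)
    return -sum(n / total * math.log2(n / total)
                for n in Counter(pixels).values())


def mutual_information(a, b):
    """MI in bits between the gray levels of two equally sized images."""
    pa = [p for row in a for p in row]
    pb = [p for row in b for p in row]
    total = len(pa)
    joint = Counter(zip(pa, pb))
    ca, cb = Counter(pa), Counter(pb)
    # p(x, y) / (p(x) p(y)) simplifies to n * total / (count_x * count_y).
    return sum(n / total * math.log2(n * total / (ca[x] * cb[y]))
               for (x, y), n in joint.items())
```

Under this definition, the mutual information of an image with itself equals its entropy, and the mutual information with a constant image is zero, which gives two quick sanity checks for any implementation.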

5. Experiment

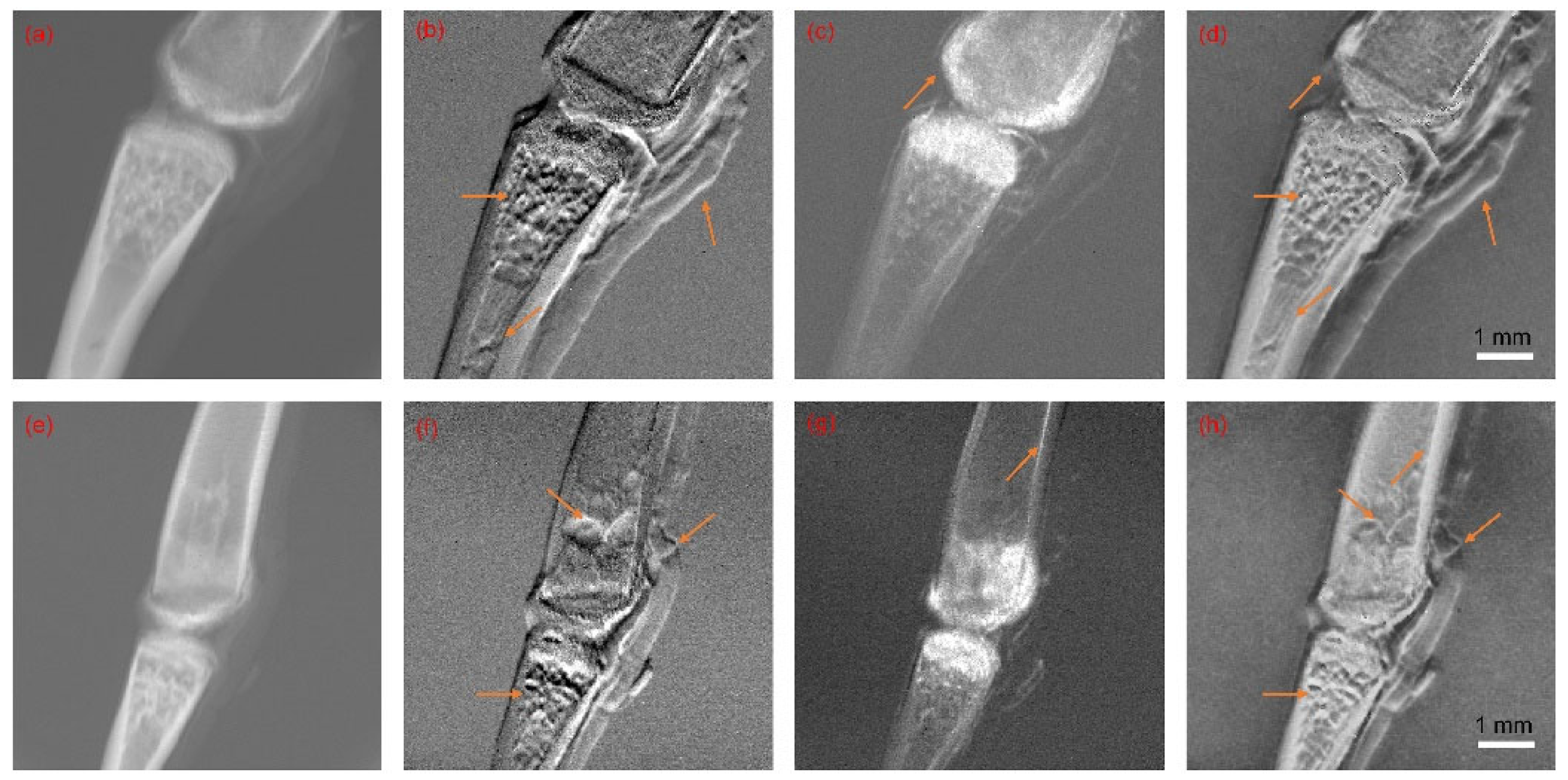

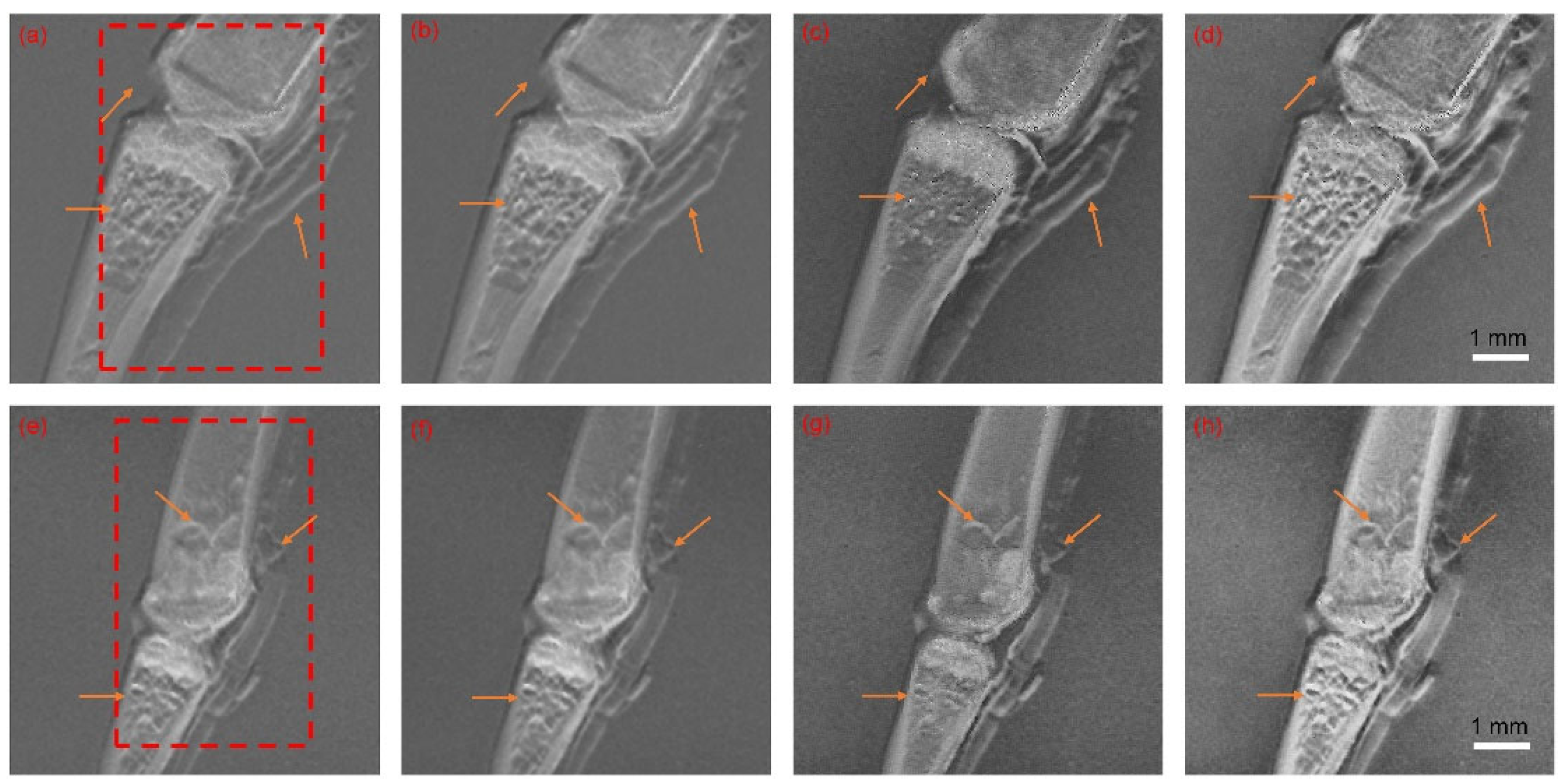

5.1. Image Fusion Parameters and Results

5.2. Objective Evaluation and Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cozzi, A.; Magni, V.; Zanardo, M.; Schiaffino, S.; Sardanelli, F. Contrast-enhanced Mammography: A Systematic Review and Meta-Analysis of Diagnostic Performance. Radiology 2022, 302, 568–581.

2. Nguyen, T.N.; Abdalkader, M.; Nagel, S.; Qureshi, M.M.; Ribo, M.; Caparros, F.; Haussen, D.C.; Mohammaden, M.H.; Sheth, S.A.; Ortega-Gutierrez, S.; et al. Noncontrast Computed Tomography vs Computed Tomography Perfusion or Magnetic Resonance Imaging Selection in Late Presentation of Stroke With Large-Vessel Occlusion. JAMA Neurol. 2022, 79, 22–31.

3. Martz, H.E.; Logan, C.M.; Schneberk, D.J.; Shull, P.J. X-ray Imaging: Fundamentals, industrial techniques and applications; CRC Press: Boca Raton, FL, USA, 2016.

4. Pfeiffer, F.; Weitkamp, T.; Bunk, O.; David, C. Phase retrieval and differential phase-contrast imaging with low-brilliance X-ray sources. Nat. Phys. 2006, 2, 258–261.

5. Zan, G.; Vine, D.J.; Yun, W.; Lewis, S.J.Y.; Wang, Q.; Wang, G. Quantitative analysis of a micro array anode structured target for hard x-ray grating interferometry. Phys. Med. Biol. 2020, 65, 035008.

6. Wang, Z.; Hauser, N.; Singer, G.; Trippel, M.; Kubik-Huch, R.A.; Schneider, C.W.; Stampanoni, M. Non-invasive classification of microcalcifications with phase-contrast X-ray mammography. Nat. Commun. 2014, 5, 3797.

7. Arboleda, C.; Wang, Z.; Jefimovs, K.; Koehler, T.; Van Stevendaal, U.; Kuhn, N.; David, B.; Prevrhal, S.; Lång, K.; Forte, S.; et al. Towards clinical grating-interferometry mammography. Eur. Radiol. 2020, 30, 1419–1425.

8. Meinel, F.G.; Schwab, F.; Yaroshenko, A.; Velroyen, A.; Bech, M.; Hellbach, K.; Fuchs, J.; Stiewe, T.; Yildirim, A.Ö.; Bamberg, F.; et al. Lung tumors on multimodal radiographs derived from grating-based X-ray imaging—A feasibility study. Phys. Med. 2014, 30, 352–357.

9. Gradl, R.; Morgan, K.S.; Dierolf, M.; Jud, C.; Hehn, L.; Günther, B.; Möller, W.; Kutschke, D.; Yang, L.; Stoeger, T.; et al. Dynamic In Vivo Chest X-ray Dark-Field Imaging in Mice. IEEE Trans. Med. Imaging 2019, 38, 649–656.

10. Glinz, J.; Thor, M.; Schulz, J.; Zabler, S.; Kastner, J.; Senck, S. Non-destructive characterisation of out-of-plane fibre waviness in carbon fibre reinforced polymers by X-ray dark-field radiography. Nondestruct. Test. Eval. 2022, 37, 497–507.

11. Sarapata, A.; Ruiz-Yaniz, M.; Zanette, I.; Rack, A.; Pfeiffer, F.; Herzen, J. Multi-contrast 3D X-ray imaging of porous and composite materials. Appl. Phys. Lett. 2015, 106, 154102.

12. Yashiro, W.; Terui, Y.; Kawabata, K.; Momose, A. On the origin of visibility contrast in x-ray Talbot interferometry. Opt. Express 2010, 18, 16890–16901.

13. Bech, M.; Bunk, O.; Donath, T.; Feidenhans’l, R.; David, C.; Pfeiffer, F. Quantitative x-ray dark-field computed tomography. Phys. Med. Biol. 2010, 55, 5529.

14. Michel, T.; Rieger, J.; Anton, G.; Bayer, F.; Beckmann, M.W.; Durst, J.; Fasching, P.A.; Haas, W.; Hartmann, A.; Pelzer, G.; et al. On a dark-field signal generated by micrometer-sized calcifications in phase-contrast mammography. Phys. Med. Biol. 2013, 58, 2713.

15. Ewald, R.; Thomas, K.; van Udo, S.; Gerhard, M.; Nik, H.; Zhentian, W.; Marco, S. Image fusion algorithm for differential phase contrast imaging. In Proceedings of the SPIE Medical Imaging 2012, San Diego, CA, USA, 4–9 February 2012.

16. Wang, Z.; Clavijo, C.A.; Roessl, E.; van Stevendaal, U.; Koehler, T.; Hauser, N.; Stampanoni, M. Image fusion scheme for differential phase contrast mammography. J. Instrum. 2013, 8, C07011.

17. Scholkmann, F.; Revol, V.; Kaufmann, R.; Baronowski, H.; Kottler, C. A new method for fusion, denoising and enhancement of x-ray images retrieved from Talbot–Lau grating interferometry. Phys. Med. Biol. 2014, 59, 1425–1440.

18. Coello, E.; Sperl, J.I.; Bequé, D.; Benz, T.; Scherer, K.; Herzen, J.; Sztrókay-Gaul, A.; Hellerhoff, K.; Pfeiffer, F.; Cozzini, C.; et al. Fourier domain image fusion for differential X-ray phase-contrast breast imaging. Eur. J. Radiol. 2017, 89, 27–32.

19. Do, M.N.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

20. Skodras, A.; Christopoulos, C.; Ebrahimi, T. The JPEG 2000 still image compression standard. IEEE Signal Process. Mag. 2001, 18, 36–58.

21. Stéphane, M. Chapter 6—Wavelet Zoom. In A Wavelet Tour of Signal Processing, 3rd ed.; Stéphane, M., Ed.; Academic Press: Cambridge, MA, USA, 2009; pp. 205–261.

22. Donoho, D.L.; Vetterli, M.; DeVore, R.A.; Daubechies, I. Data compression and harmonic analysis. IEEE Trans. Inf. Theory 1998, 44, 2435–2476.

23. Yan, C.-M.; Guo, B.-L.; Yi, M. Fast Algorithm for Nonsubsampled Contourlet Transform. Acta Autom. Sin. 2014, 40, 757–762.

24. Cunha, A.L.D.; Zhou, J.; Do, M.N. The Nonsubsampled Contourlet Transform: Theory, Design, and Applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

25. Zhan, K.; Zhang, H.; Ma, Y. New Spiking Cortical Model for Invariant Texture Retrieval and Image Processing. IEEE Trans. Neural Netw. 2009, 20, 1980–1986.

26. Liu, H.; Liu, M.; Li, D.; Zheng, W.; Yin, L.; Wang, R. Recent Advances in Pulse-Coupled Neural Networks with Applications in Image Processing. Electronics 2022, 11, 3264.

27. Zhou, G.; Tian, X.; Zhou, A. Image copy-move forgery passive detection based on improved PCNN and self-selected sub-images. Front. Comput. Sci. 2021, 16, 164705.

28. Liu, H.; Cheng, Y.; Zuo, Z.; Sun, T.; Wang, K. Discrimination of neutrons and gamma rays in plastic scintillator based on pulse-coupled neural network. Nucl. Sci. Tech. 2021, 32, 82.

29. Liu, H.; Zuo, Z.; Li, P.; Liu, B.; Chang, L.; Yan, Y. Anti-noise performance of the pulse coupled neural network applied in discrimination of neutron and gamma-ray. Nucl. Sci. Tech. 2022, 33, 75.

30. Liu, H.; Liu, M.; Xiao, Y.; Li, P.; Zuo, Z.; Zhan, Y. Discrimination of neutron and gamma ray using the ladder gradient method and analysis of filter adaptability. Nucl. Sci. Tech. 2022, 33, 159.

31. Liu, M.; Zhao, F.; Jiang, X.; Zhang, H.; Zhou, H. Parallel binary image cryptosystem via spiking neural networks variants. Int. J. Neural Syst. 2021, 32, 2150014.

32. Lian, J.; Yang, Z.; Liu, J.; Sun, W.; Zheng, L.; Du, X.; Yi, Z.; Shi, B.; Ma, Y. An Overview of Image Segmentation Based on Pulse-Coupled Neural Network. Arch. Comput. Methods Eng. 2021, 28, 387–403.

33. Tan, W.; Thitøn, W.; Xiang, P.; Zhou, H. Multi-modal brain image fusion based on multi-level edge-preserving filtering. Biomed. Signal Process. Control 2021, 64, 102280.

34. Lim, J.S. Two-Dimensional Signal and Image Processing; Englewood Cliffs: Englewood Cliffs, NJ, USA, 1990.

35. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

36. Xydeas, C.S.; Petrović, V. Objective image fusion performance measure. Electron. Lett. 2000, 36, 308–309.

37. Hamza, A.B.; Krim, H. Jensen-renyi divergence measure: Theoretical and computational perspectives. In Proceedings of the IEEE International Symposium on Information Theory, Yokohama, Japan, 29 June–4 July 2003; p. 257.

38. Haghighat, M.B.A.; Aghagolzadeh, A.; Seyedarabi, H. A non-reference image fusion metric based on mutual information of image features. Comput. Electr. Eng. 2011, 37, 744–756.

39. Haghighat, M.; Razian, M.A. Fast-FMI: Non-reference image fusion metric. In Proceedings of the 2014 IEEE 8th International Conference on Application of Information and Communication Technologies (AICT), Astana, Kazakhstan, 15–17 October 2014; pp. 1–3.

40. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

41. Liu, Z.; Laganière, R. Phase congruence measurement for image similarity assessment. Pattern Recognit. Lett. 2007, 28, 166–172.

42. Zhou, W.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

43. Sheehy, C.D.; McCrady, N.; Graham, J.R. Constraining the Adaptive Optics Point-Spread Function in Crowded Fields: Measuring Photometric Aperture Corrections. Astrophys. J. 2006, 647, 1517–1530.

44. Gircys, M.; Ross, B.J. Image Evolution Using 2D Power Spectra. Complexity 2019, 2019, 7293193.

45. Zan, G.; Gul, S.; Zhang, J.; Zhao, W.; Lewis, S.; Vine, D.J.; Liu, Y.; Pianetta, P.; Yun, W. High-resolution multicontrast tomography with an X-ray microarray anode–structured target source. Proc. Natl. Acad. Sci. USA 2021, 118, e2103126118.

46. Xiang, T.; Yan, L.; Gao, R. A fusion algorithm for infrared and visible images based on adaptive dual-channel unit-linking PCNN in NSCT domain. Infrared Phys. Technol. 2015, 69, 53–61.

| Measures | NSCT | NSCT-PCNN | SIDWT | Proposed Method (NSCT-SCM) |
|---|---|---|---|---|
|  | 2.6297 | 2.2885 | 0.6527 | 1.8847 |
|  | 5.8758 | 5.6990 | 6.5755 | 7.0350 |
|  | 0.0962 | 0.0830 | 0.1229 | 0.1615 |
|  | 12.1136 | 14.0702 | 40.3987 | 40.6443 |
|  | 0.9524 | 0.9524 | 0.9181 | 0.9321 |
|  | 13.1018 | 13.0406 | 12.9649 | 13.4200 |
|  | 0.9973 | 0.9970 | 0.9974 | 0.9961 |
|  | 0.9390 | 0.9381 | 0.9304 | 0.9234 |

| Measures | NSCT | NSCT-PCNN | SIDWT | Proposed Method (NSCT-SCM) |
|---|---|---|---|---|
|  | 1.2587 | 1.1371 | 0.3937 | 1.1191 |
|  | 6.0928 | 6.2928 | 6.9253 | 7.2230 |
|  | 0.1077 | 0.1077 | 0.1471 | 0.1821 |
|  | 8.3268 | 8.3268 | 30.0311 | 24.2106 |
|  | 0.9336 | 0.9936 | 0.8545 | 0.8943 |
|  | 13.7133 | 13.7133 | 13.5084 | 14.2617 |
|  | 0.9974 | 0.9974 | 0.9964 | 0.9968 |
|  | 0.9368 | 0.9368 | 0.9214 | 0.9318 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.; Liu, M.; Jiang, X.; Luo, J.; Song, Y.; Chu, X.; Zan, G. Multimodal Image Fusion for X-ray Grating Interferometry. Sensors 2023, 23, 3115. https://doi.org/10.3390/s23063115
