A Hyperspectral Image Classification Method Based on the Nonlocal Attention Mechanism of a Multiscale Convolutional Neural Network

Abstract

1. Introduction

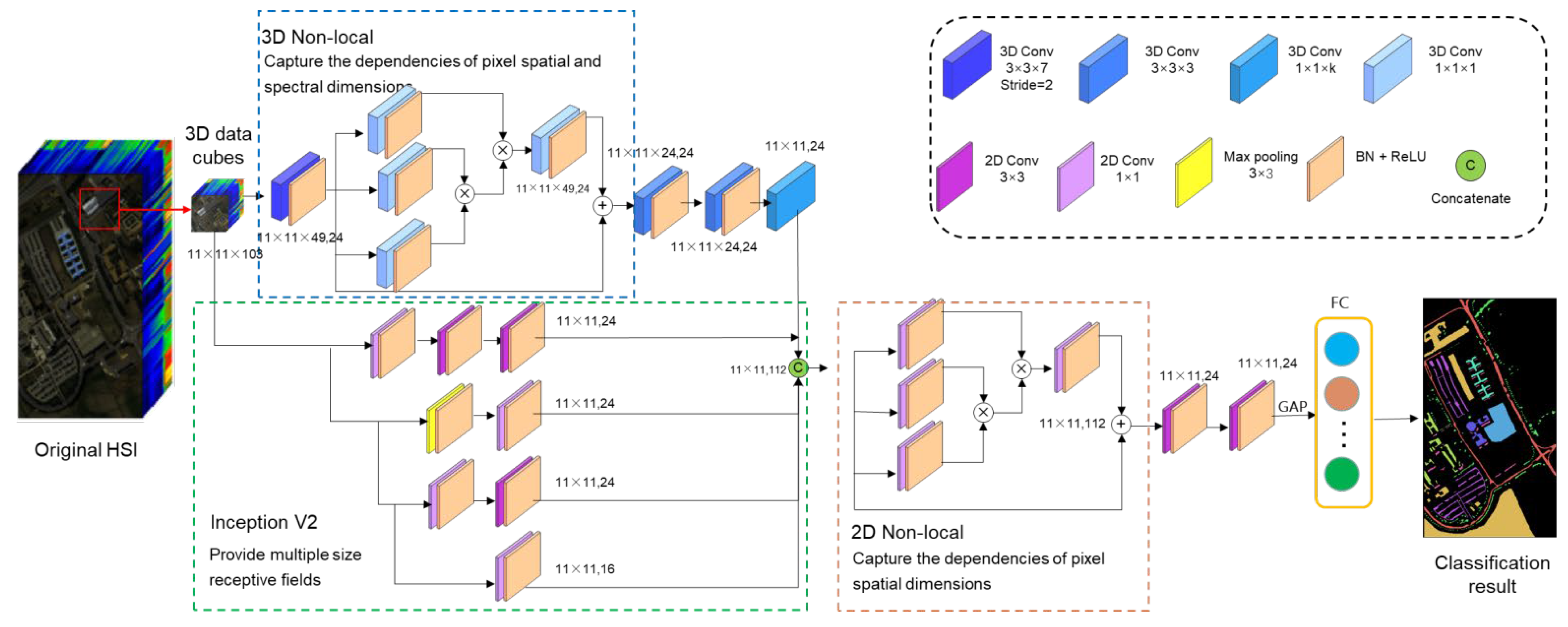

- We combine an inception block with a nonlocal attention mechanism to address two problems in hyperspectral images, insufficient spatial–spectral feature extraction and highly redundant spectral information, and thereby achieve higher classification accuracy;

- We compare the nonlocal attention block with two other attention mechanisms to verify its effectiveness for hyperspectral image classification;

- We run further experiments on other parameters that affect the accuracy of deep learning models for hyperspectral image classification. The results provide a reference for further improving classification accuracy.

2. Related Works

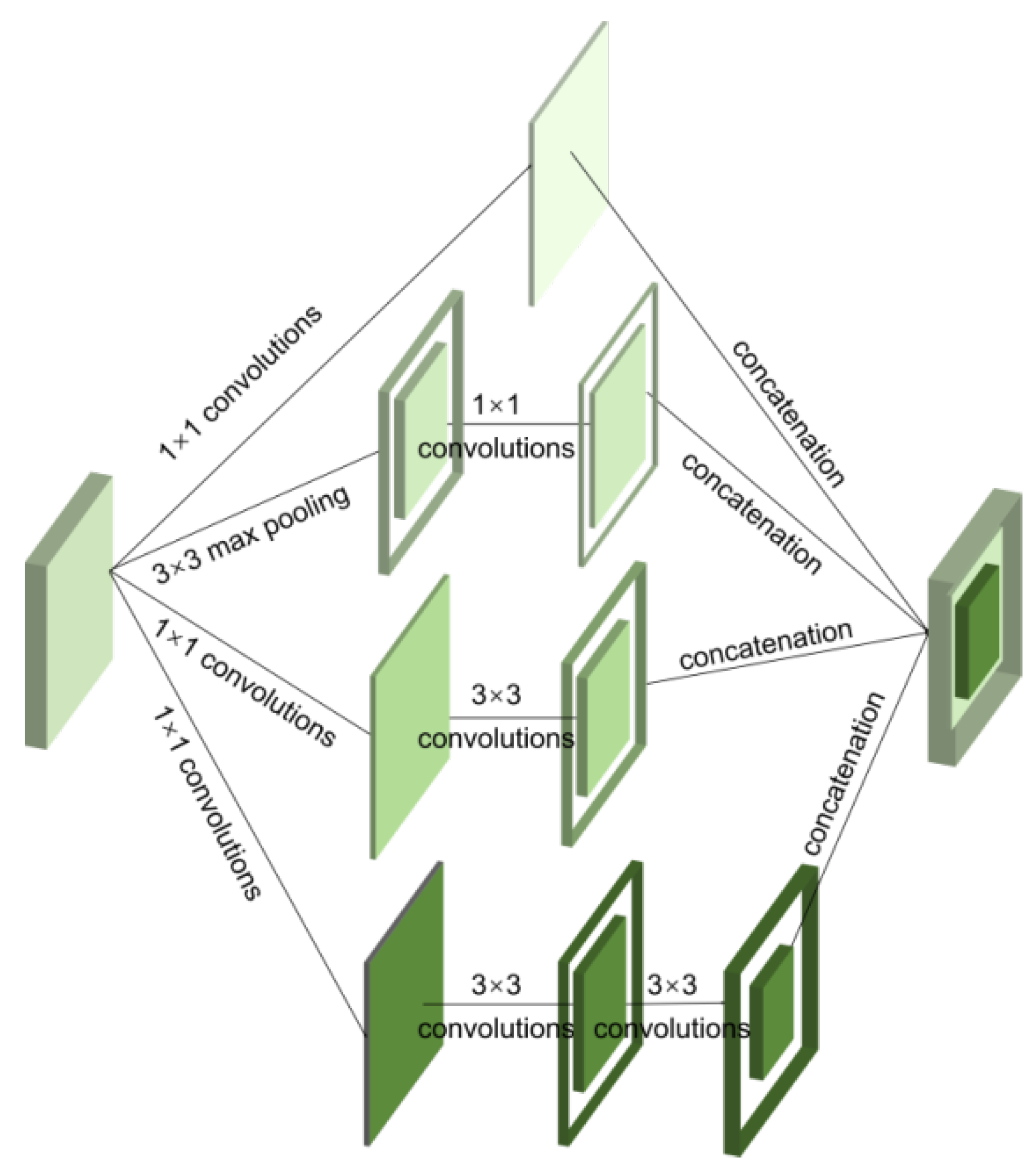

2.1. Inception Block

2.2. Nonlocal Block

3. The Proposed Method

4. Ablation Study

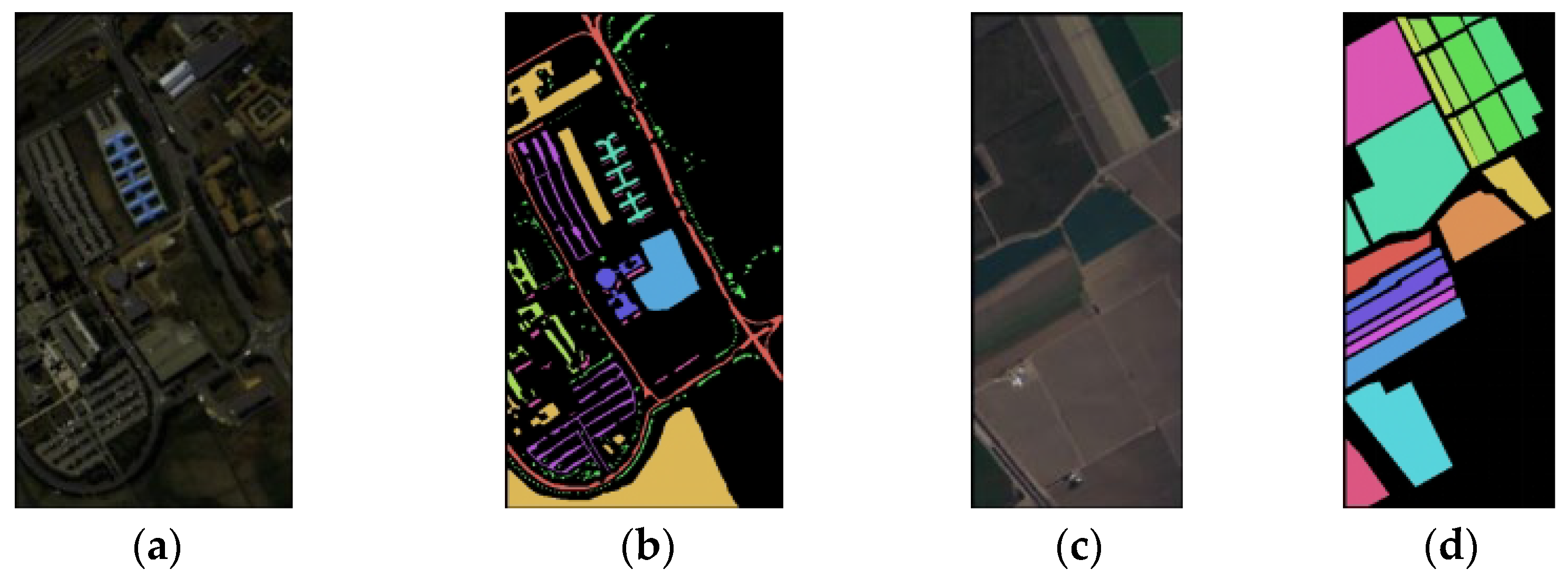

4.1. Experimental Data and Evaluation Metrics

4.2. Experimental Environment and Parameter Settings

4.3. Experiment

4.3.1. Selection of the Network Backbone

4.3.2. Comparing the Effectiveness of Multiscale Attention Modules

4.3.3. Searching for the Optimal Parameters

- The number of convolutional kernels

- Neighboring pixel block size and proportion of training samples
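The two parameters in the last item can be illustrated with a minimal NumPy sketch. It is not the authors' preprocessing code: the function names `extract_patch` and `stratified_split`, the reflect padding at image borders, and the per-class rounding rule are all assumptions made for illustration.

```python
import numpy as np

def extract_patch(cube, row, col, block_size):
    """Return the block_size x block_size neighborhood centered on (row, col).

    cube has shape (height, width, bands). Border pixels are handled with
    reflect padding (an assumption; zero padding is another common choice).
    """
    if block_size % 2 == 0:
        raise ValueError("block_size must be odd so the pixel is centered")
    half = block_size // 2
    padded = np.pad(cube, ((half, half), (half, half), (0, 0)), mode="reflect")
    # Pixel (row, col) sits at (row + half, col + half) after padding, so the
    # window starting at (row, col) in padded coordinates is centered on it.
    return padded[row:row + block_size, col:col + block_size, :]

def stratified_split(labels, train_fraction, seed=0):
    """Select train_fraction of the samples of each class for training.

    labels is a 1-D array holding the class of every labeled pixel.
    Returns (train_indices, test_indices) into that array.
    """
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # Keep at least one training sample per class (illustrative choice).
        n_train = max(1, int(round(train_fraction * idx.size)))
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return np.array(train_idx), np.array(test_idx)
```

A larger block gives the network more spatial context per sample but also more neighbors that may belong to other classes, which is one plausible reason accuracy does not keep rising with block size.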

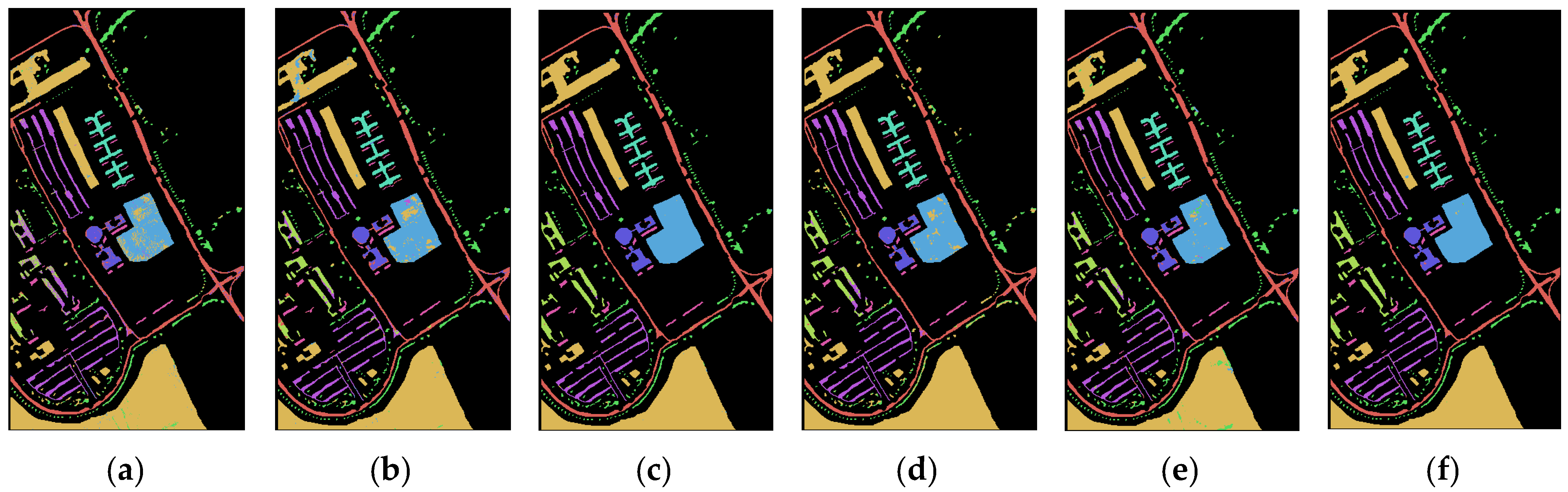

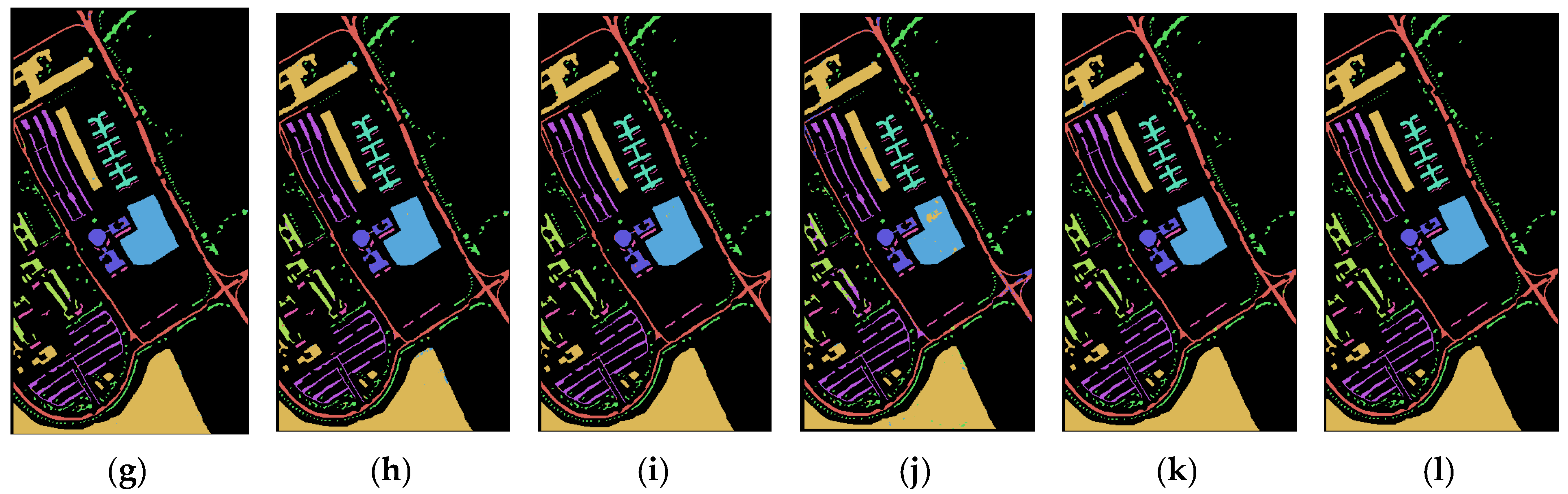

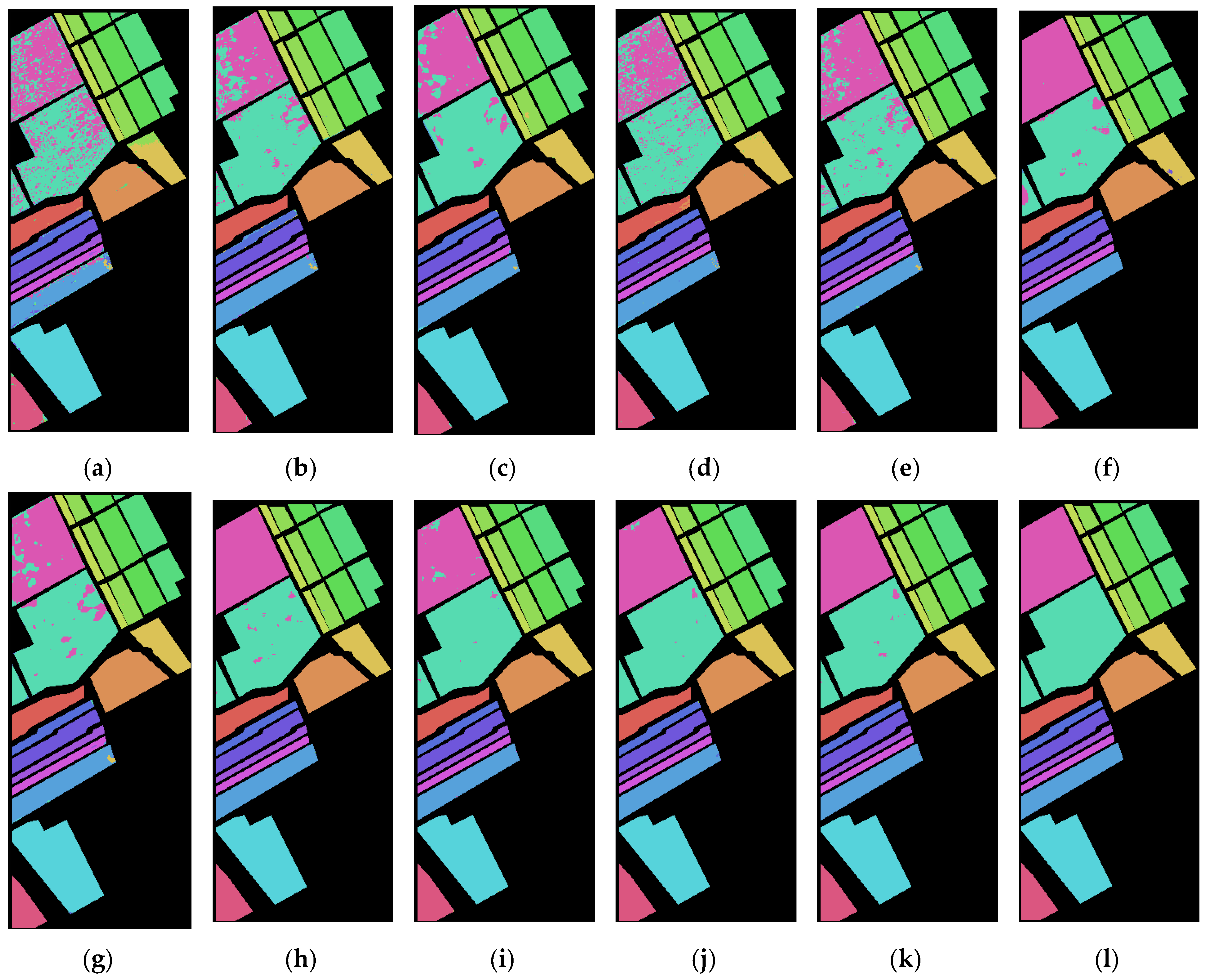

4.3.4. Algorithm Comparison Experiments

5. Conclusions

- The 2-3D NL CNN effectively improves the classification accuracy of hyperspectral images. The inception block uses convolution kernels of different sizes to give the network receptive fields of different sizes, which makes feature extraction more comprehensive. The nonlocal attention mechanism strengthens the network's spectral feature extraction and suppresses information redundancy in the spectral dimension.

- The nonlocal attention mechanism is better suited to hyperspectral image classification than the other two attention mechanisms tested. Our experiments compared SENet, CBAM, and the nonlocal attention mechanism, and the nonlocal mechanism gave the largest improvement on both datasets: an OA of 99.592% on PU and 98.567% on SA, against 99.349% and 97.922% with SE and 99.332% and 97.893% with CBAM. This is mainly because the nonlocal attention mechanism takes into account the correlation between pixels that are far apart, as well as the pixel to be classified.
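To make the long-range correlation in the last point concrete, here is a minimal NumPy sketch of a nonlocal operation in the embedded-Gaussian form described by Wang et al. in "Non-local Neural Networks". It is an illustration under assumptions, not the 2-3D NL CNN itself: the function name `nonlocal_block`, the flattening of a patch into `N` positions, and the plain weight matrices standing in for 1×1 convolutions are all hypothetical choices.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def nonlocal_block(x, w_theta, w_phi, w_g, w_out):
    """Embedded-Gaussian nonlocal operation with a residual connection.

    x:        (N, C) features, one row per position (e.g. a flattened patch).
    w_theta,
    w_phi,
    w_g:      (C, C_inner) projections, standing in for 1x1 convolutions.
    w_out:    (C_inner, C) projection back to the input channel count.
    Returns (output of shape (N, C), attention map of shape (N, N)).
    """
    theta = x @ w_theta          # queries
    phi = x @ w_phi              # keys
    g = x @ w_g                  # values
    # Every position is compared with every other position, regardless of
    # how far apart they are; each row of attn sums to 1.
    attn = softmax(theta @ phi.T, axis=-1)
    y = attn @ g                 # weighted sum over all positions
    return x + y @ w_out, attn   # residual connection keeps the input signal
```

With `w_out` set to zeros the block reduces to the identity, which is why it can be dropped into an existing network without disturbing its initial behavior. By contrast, squeeze-and-excitation style attention reweights whole channels and does not compare individual positions with each other.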

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | OA (%), PU | OA (%), SA | Training Time (s), PU | Training Time (s), SA | Number of Parameters, PU | Number of Parameters, SA |
|---|---|---|---|---|---|---|
| 2D | 98.663 | 97.038 | 152.141 | 210.043 | 48,850 | 70,841 |
| 3D | 99.149 | 97.270 | 815.031 | 1784.013 | 90,322 | 90,497 |
| 3D–2D | 99.132 | 98.156 | 556.057 | 1093.856 | 57,490 | 57,665 |

| Method | MS | 2D Nonlocal | 3D Nonlocal | SE | CBAM | OA (%), PU | OA (%), SA |
|---|---|---|---|---|---|---|---|
| Baseline | | | | | | 99.132 | 98.156 |
| MS | √ | | | | | 99.295 | 98.254 |
| Nonlocal | | √ | √ | | | 99.426 | 98.294 |
| MS + 2D nonlocal | √ | √ | | | | 99.439 | 98.449 |
| MS + 3D nonlocal | √ | | √ | | | 99.518 | 98.525 |
| MS + nonlocal | √ | √ | √ | | | 99.592 | 98.567 |
| MS + SE | √ | | | √ | | 99.349 | 97.922 |
| MS + CBAM | √ | | | | √ | 99.332 | 97.893 |

| Dataset | Performance | 12 Kernels | 18 Kernels | 24 Kernels | 30 Kernels |
|---|---|---|---|---|---|
| PU | OA (%) | 98.962 | 99.275 | 99.533 | 99.617 |
| PU | Parameters | 67,694 | 93,158 | 125,832 | 165,686 |
| SA | OA (%) | 96.640 | 97.764 | 98.290 | 98.344 |
| SA | Parameters | 71,825 | 97,331 | 130,037 | 169,943 |

| Dataset | Proportion | Block Size 5 | Block Size 7 | Block Size 9 | Block Size 11 | Block Size 13 |
|---|---|---|---|---|---|---|
| PU | 5 | 98.736 | 99.553 | 99.718 | 99.731 | 98.729 |
| PU | 10 | 99.579 | 99.893 | 99.924 | 99.927 | 99.915 |
| PU | 15 | 99.731 | 99.935 | 99.948 | 99.989 | 99.972 |
| PU | 20 | 99.718 | 99.922 | 99.934 | 99.961 | 99.952 |
| SA | 5 | 93.892 | 98.264 | 98.578 | 98.582 | 98.554 |
| SA | 10 | 97.257 | 99.218 | 99.589 | 99.809 | 98.756 |
| SA | 15 | 98.598 | 99.465 | 99.624 | 99.682 | 99.635 |
| SA | 20 | 98.454 | 99.463 | 99.587 | 99.645 | 99.627 |

| Method | Conv Nb | Spatial Size | FC Nb | Parameter Nb |
|---|---|---|---|---|
| 2D CNN | 6 | 5 | 1 | 1152 |
| 3D CNN | 6 | 5 | 1 | 4176 |
| HybridSN | 4 | 5 | 2 | 3520 |
| Two-CNN | 5 | 5 | 2 | 6454 |
| Hamida | 4 | 5 | 2 | 40,740 |
| PResNet | 10 | 5 | 1 | 2468 |
| M3D-DCNN | 4 | 5 | 1 | 2528 |
| FDSSC | 9 | 5 | 1 | 46,308 |
| SSRN | 11 | 5 | 1 | 45,688 |
| SSAN | 5 | 5 | 1 | 26,880 |
| 2-3D-NL CNN | 6 | 5 | 1 | 4786 |

| Method | PU OA (%) | PU AA (%) | SA OA (%) | SA AA (%) |
|---|---|---|---|---|
| 2D CNN | 90.85 ± 0.37 | 87.68 ± 0.32 | 91.01 ± 0.28 | 92.37 ± 0.25 |
| 3D CNN | 93.80 ± 0.24 | 89.85 ± 0.27 | 93.86 ± 0.25 | 92.75 ± 0.18 |
| HybridSN | 97.33 ± 0.19 | 97.16 ± 0.15 | 97.44 ± 0.22 | 97.32 ± 0.19 |
| Two-CNN | 94.63 ± 0.27 | 93.31 ± 0.22 | 91.38 ± 0.36 | 89.74 ± 0.43 |
| Hamida | 94.51 ± 0.42 | 93.68 ± 0.35 | 93.15 ± 0.34 | 92.86 ± 0.28 |
| PResNet | 99.76 ± 0.21 | 99.68 ± 0.23 | 99.59 ± 0.25 | 99.45 ± 0.28 |
| M3D-DCNN | 98.98 ± 0.34 | 98.34 ± 0.28 | 98.78 ± 0.35 | 98.55 ± 0.32 |
| FDSSC | 99.56 ± 0.18 | 99.35 ± 0.25 | 99.45 ± 0.28 | 99.34 ± 0.34 |
| SSRN | 99.42 ± 0.26 | 99.35 ± 0.32 | 99.22 ± 0.26 | 99.31 ± 0.33 |
| SSAN | 99.64 ± 0.34 | 99.54 ± 0.28 | 99.35 ± 0.25 | 99.27 ± 0.29 |
| 2-3D-NL CNN | 99.81 ± 0.25 | 99.76 ± 0.24 | 99.65 ± 0.28 | 99.42 ± 0.25 |
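The OA and AA columns above are overall accuracy and average (per-class) accuracy. The sketch below shows how these two metrics are conventionally computed from a confusion matrix. It is a generic illustration rather than the authors' evaluation script: the function name `overall_and_average_accuracy` is hypothetical, and the code assumes integer class labels starting at 0 with every class present in the ground truth.

```python
import numpy as np

def overall_and_average_accuracy(y_true, y_pred, num_classes):
    """Compute overall accuracy (OA) and average accuracy (AA).

    y_true, y_pred: 1-D integer arrays with labels in [0, num_classes).
    Assumes every class occurs at least once in y_true; otherwise the
    per-class accuracy of the missing class would divide by zero.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # Rows are true classes, columns are predicted classes.
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    oa = np.trace(cm) / cm.sum()                 # correct samples / all samples
    per_class = np.diag(cm) / cm.sum(axis=1)     # recall of each class
    aa = per_class.mean()                        # unweighted mean over classes
    return oa, aa
```

OA is dominated by the large classes, while AA weights every class equally, so a gap between the two usually points to weaker performance on small classes.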

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, M.; Lu, Y.; Cao, S.; Wang, X.; Xie, S. A Hyperspectral Image Classification Method Based on the Nonlocal Attention Mechanism of a Multiscale Convolutional Neural Network. Sensors 2023, 23, 3190. https://doi.org/10.3390/s23063190
