RIOT: Recursive Inertial Odometry Transformer for Localisation from Low-Cost IMU Measurements

Abstract

1. Introduction

2. Related Work

3. Contributions

4. Problem Formulation

4.1. Sensor Models

4.2. Attitude and Position Estimation

5. Proposed Solution

5.1. Network Components

5.1.1. Positional Encoding

5.1.2. Self-Attention

5.1.3. Encoder

5.1.4. Decoder

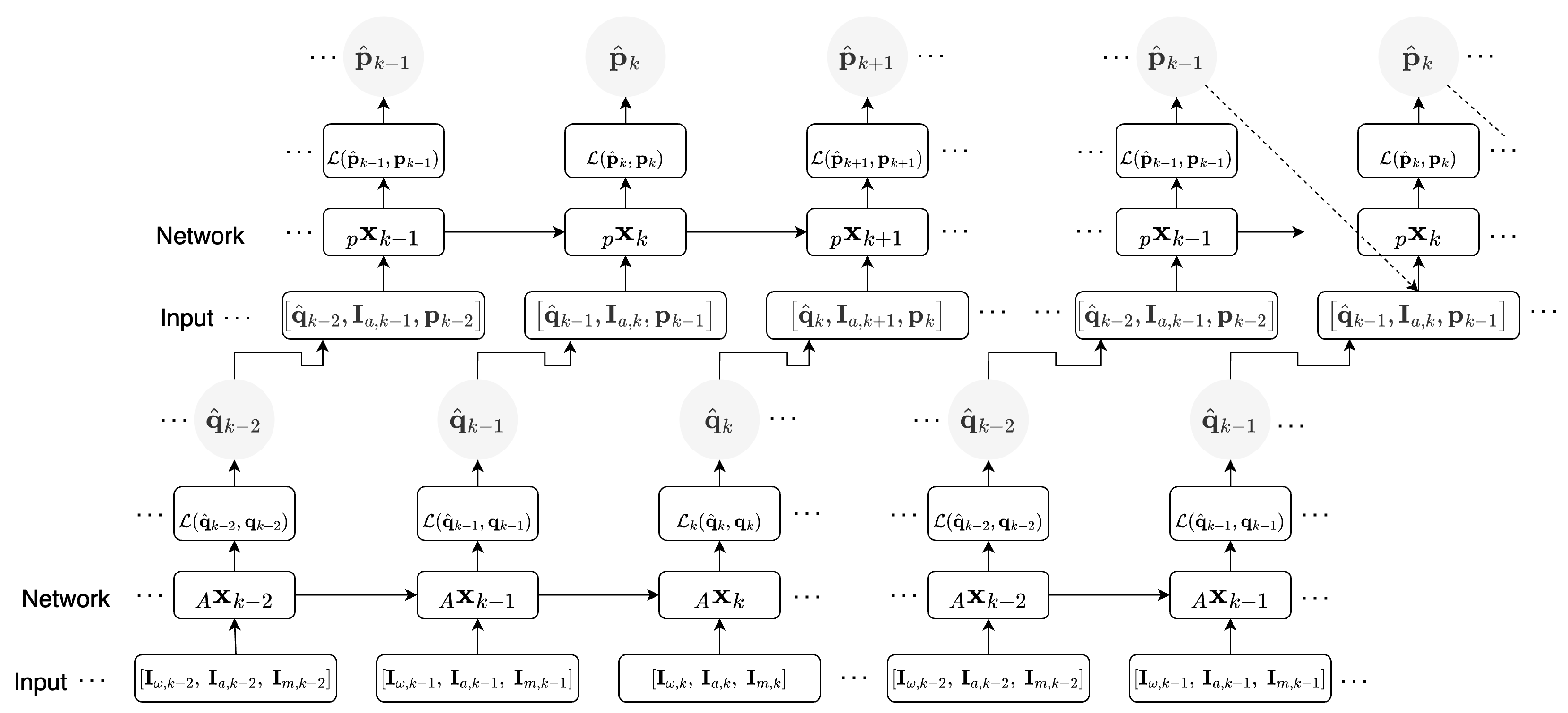

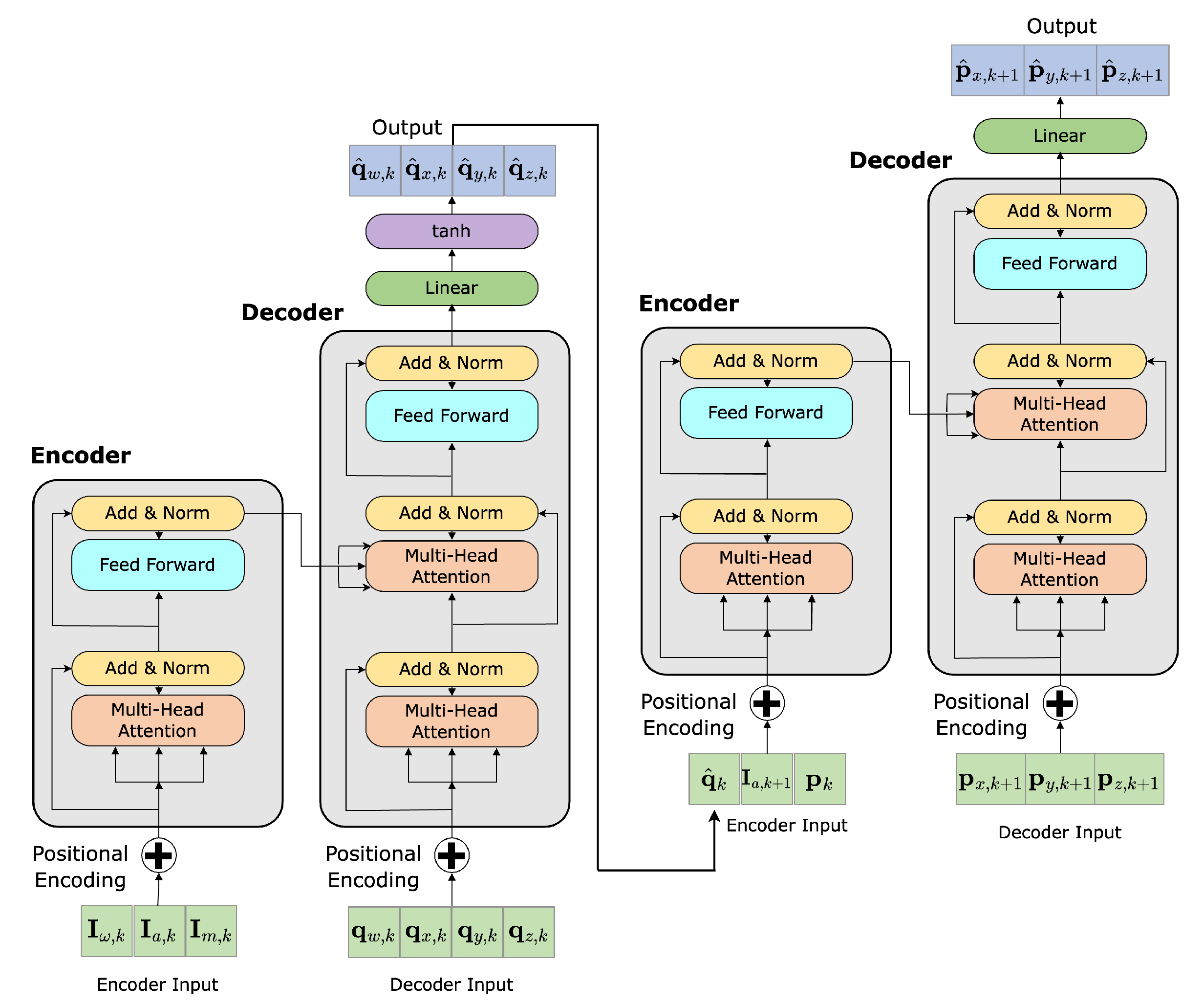

5.2. Attitude Recursive Inertial Odometry Transformer

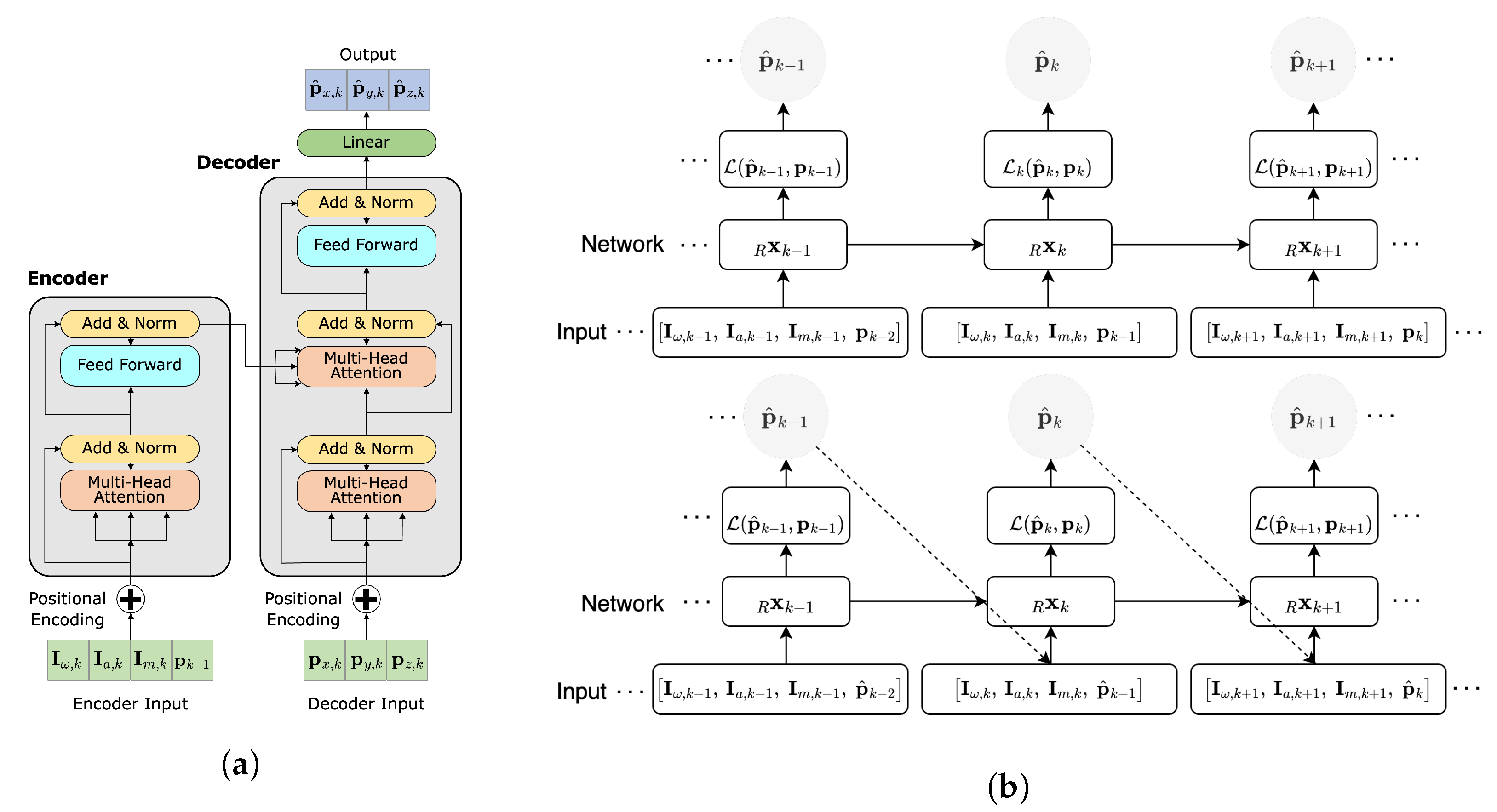

5.3. Recursive Inertial Odometry Transformer

6. Evaluation

6.1. Gated Recurrent Unit

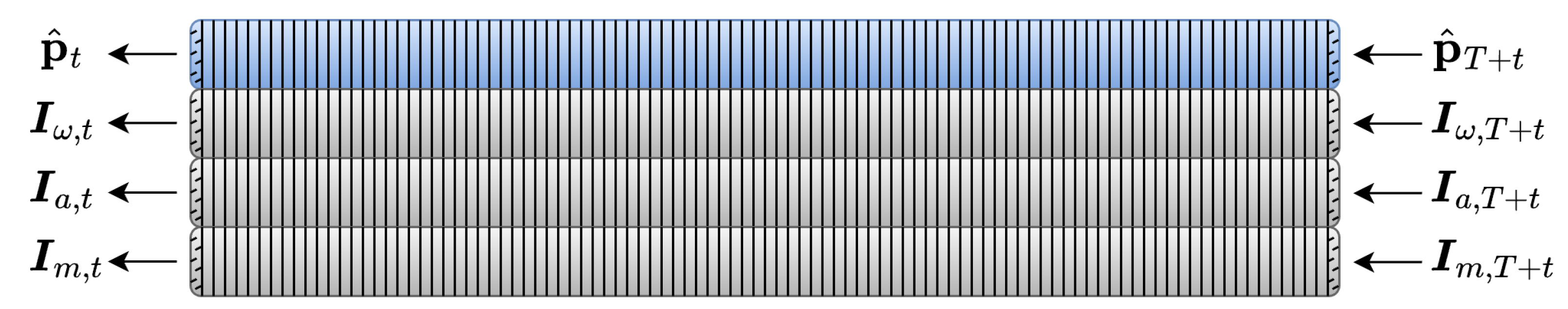

6.2. Training and Dataset

6.3. Inference

6.4. Evaluation Metrics

- Absolute Trajectory Error (ATE): The ATE is commonly used to assess the performance of a guidance or navigation system and represents the global accuracy of the estimated position.

- Relative Trajectory Error (RTE): The RTE measures the difference between the estimated and true position at a given time, relative to the distance between the two positions. It is often used to quantify the consistency of the position estimate over a predefined duration, specified in seconds in this work.

- Cumulative Distribution Function (CDF): The CDF is the distribution function used to characterise the distribution of a random variable. In this context, it describes the probability that the error in the estimated position is less than or equal to a given value; it is the integral of the probability density function of the localisation error.
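The three metrics above can be illustrated with a minimal sketch. The paper's exact equations are not reproduced here, so the code assumes the root-mean-square forms that are common in the inertial odometry literature: ATE as the RMSE of position over the whole trajectory, and RTE as the RMSE of the displacement error over a fixed window. The function names `ate`, `rte` and `empirical_cdf`, and the `window` parameter (expressed in samples rather than seconds), are hypothetical and chosen only for illustration.

```python
import numpy as np


def ate(est, gt):
    """Assumed ATE: RMSE between estimated and true positions (N x D arrays)."""
    est, gt = np.asarray(est, float), np.asarray(gt, float)
    return float(np.sqrt(np.mean(np.sum((est - gt) ** 2, axis=1))))


def rte(est, gt, window):
    """Assumed RTE: RMSE of the displacement error over `window` samples."""
    est, gt = np.asarray(est, float), np.asarray(gt, float)
    # Displacement over each window, so a constant offset does not count as error.
    d_est = est[window:] - est[:-window]
    d_gt = gt[window:] - gt[:-window]
    return float(np.sqrt(np.mean(np.sum((d_est - d_gt) ** 2, axis=1))))


def empirical_cdf(errors):
    """Return sorted errors x and the fraction of samples with error <= x."""
    x = np.sort(np.asarray(errors, float))
    p = np.arange(1, x.size + 1) / x.size
    return x, p


if __name__ == "__main__":
    # Toy 2D trajectory: the estimate drifts linearly away from the truth.
    t = np.linspace(0.0, 1.0, 101)
    gt = np.stack([t, np.zeros_like(t)], axis=1)
    est = gt + np.stack([0.05 * t, 0.02 * t], axis=1)

    per_sample_error = np.linalg.norm(est - gt, axis=1)
    x, p = empirical_cdf(per_sample_error)

    print("ATE (m):", ate(est, gt))
    print("RTE (m):", rte(est, gt, window=10))
    print("Median error (m):", x[np.searchsorted(p, 0.5)])
```

Under these assumed definitions, the ATE penalises accumulated drift over the full sequence, whereas the RTE only sees the error accrued inside each window, which is why the two can rank models differently.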

6.5. Discussion

7. RIOT Ablations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Position Estimate Visualisations from the First and Last Minute of Each Network

| User 2 | User 3 | User 4 | User 5 | Running | ||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) |
| GRU | 0.0796 | 0.0110 | 0.0692 | 0.0100 | 0.0757 | 0.0121 | 0.0856 | 0.0114 | 0.1013 | 0.125 | 0.1589 | 0.0171 |
| ARIOT | 0.0994 | 0.0093 | 0.0934 | 0.0088 | 0.0960 | 0.0094 | 0.1027 | 0.0100 | 0.1059 | 0.0088 | 0.1279 | 0.0144 |
| RIOT | 0.0681 | 0.0090 | 0.0655 | 0.0085 | 0.0654 | 0.0091 | 0.0721 | 0.0096 | 0.0676 | 0.0085 | 0.0990 | 0.0140 |

| | Slow Walking | | Trolley | | Handbag | | Handheld | | iPhone 5 | | iPhone 6 | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) | ATE (m) | RTE (m) |
| GRU | 0.2634 | 0.0077 | 0.0881 | 0.0116 | 0.2021 | 0.0112 | 7.352 | 0.0357 | 0.1172 | 0.0138 | 0.1133 | 0.0110 |
| ARIOT | 0.1082 | 0.0060 | 0.1033 | 0.0099 | 0.1096 | 0.0091 | 0.3196 | 0.0129 | 0.1046 | 0.0090 | 0.1036 | 0.0089 |
| RIOT | 0.0660 | 0.0058 | 0.0690 | 0.0096 | 0.0694 | 0.0089 | 0.4438 | 0.0109 | 0.0690 | 0.0086 | 0.0667 | 0.0085 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brotchie, J.; Li, W.; Greentree, A.D.; Kealy, A. RIOT: Recursive Inertial Odometry Transformer for Localisation from Low-Cost IMU Measurements. Sensors 2023, 23, 3217. https://doi.org/10.3390/s23063217