VV-YOLO: A Vehicle View Object Detection Model Based on Improved YOLOv4

Abstract

1. Introduction

2. Related Works

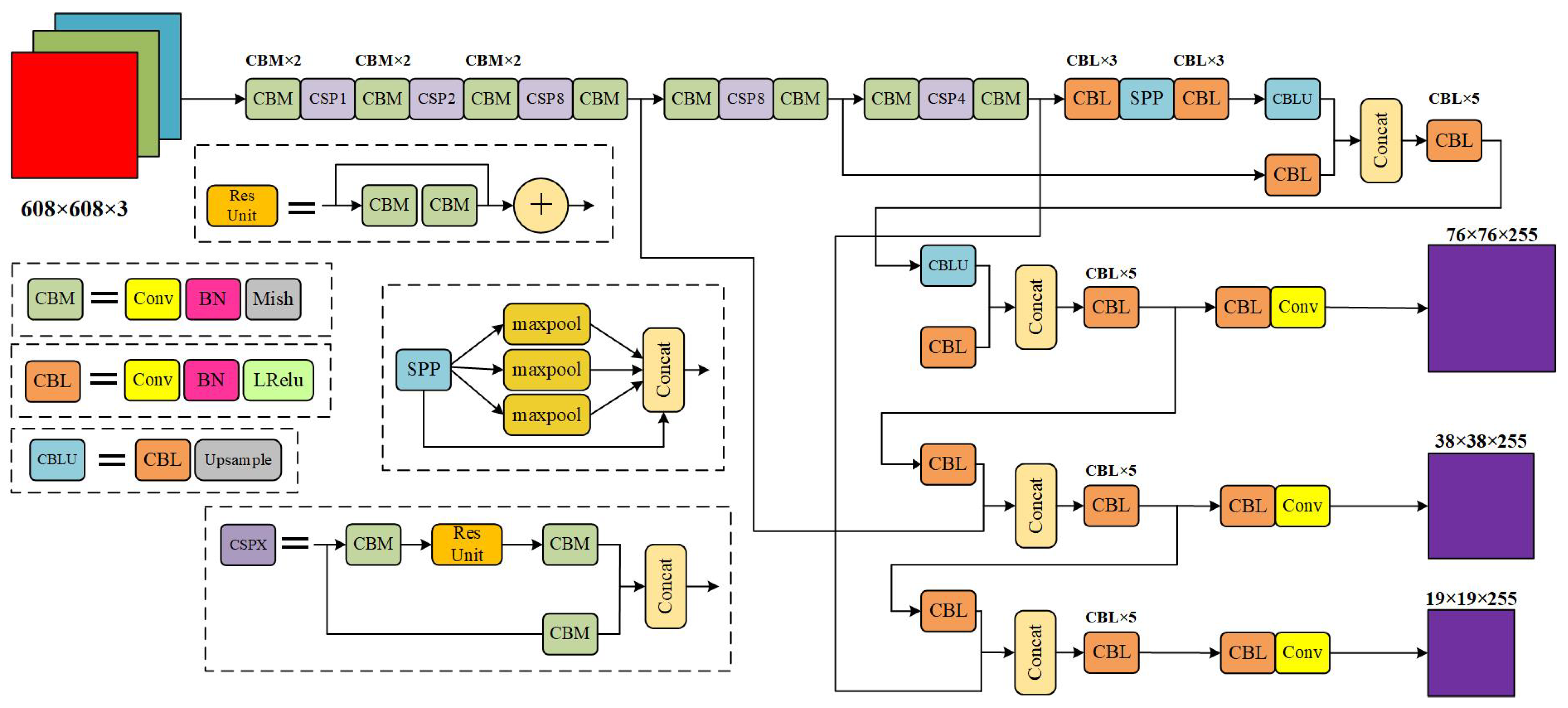

2.1. Structure of the YOLOv4 Model

2.2. Loss Function of the YOLOv4 Model

2.3. Discussion on YOLOv4 Model Detection Performance

3. Materials and Methods

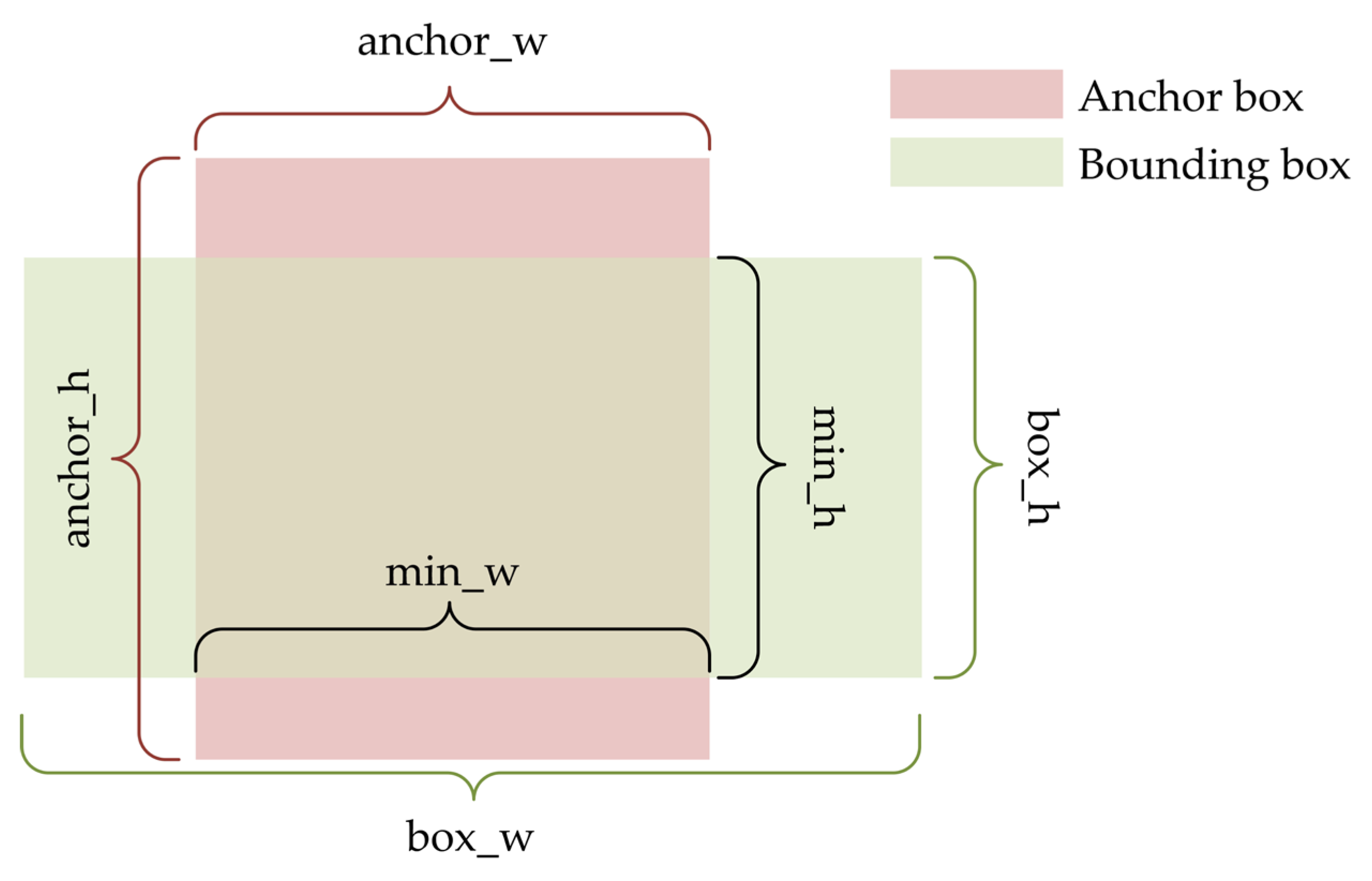

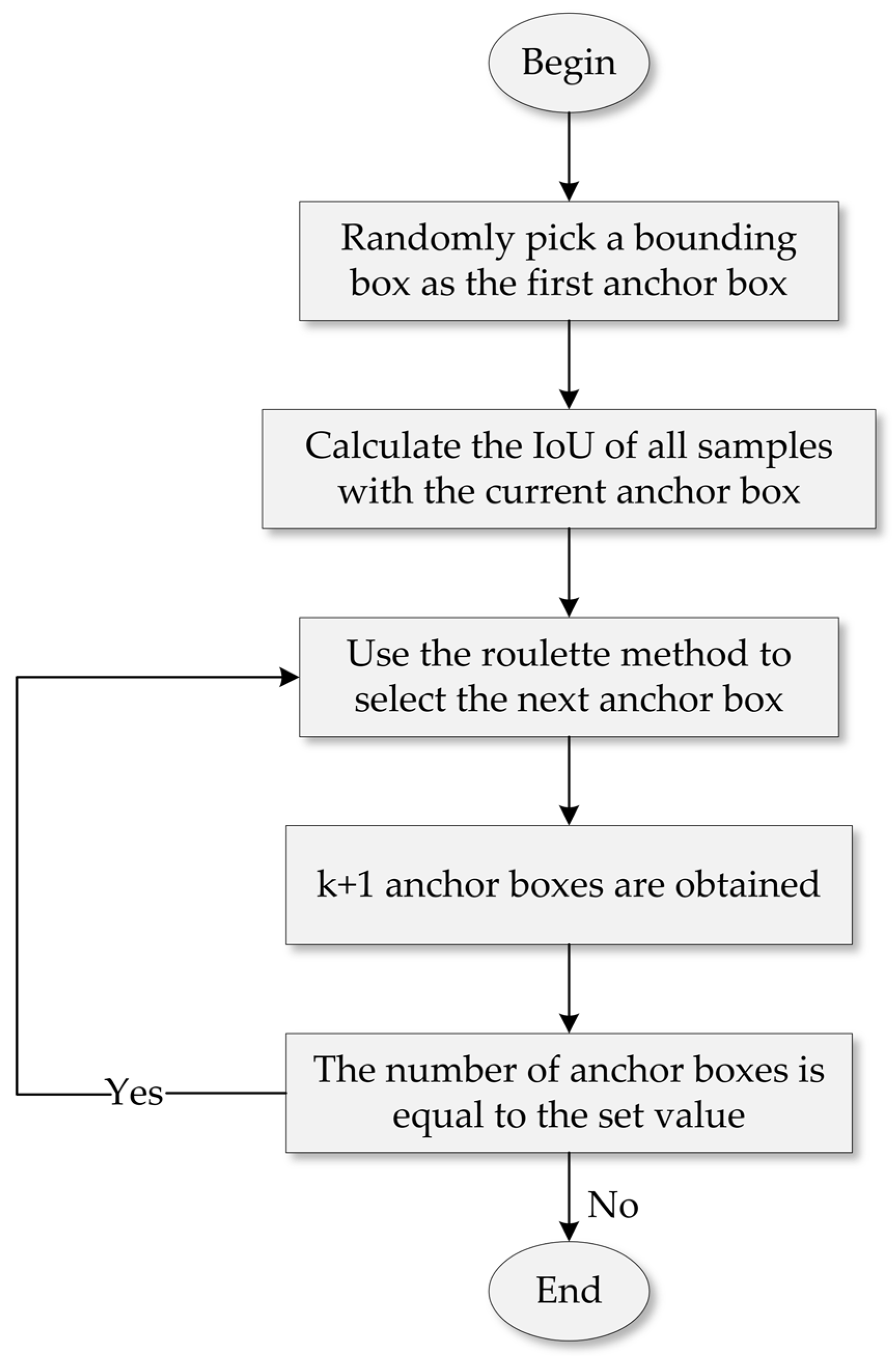

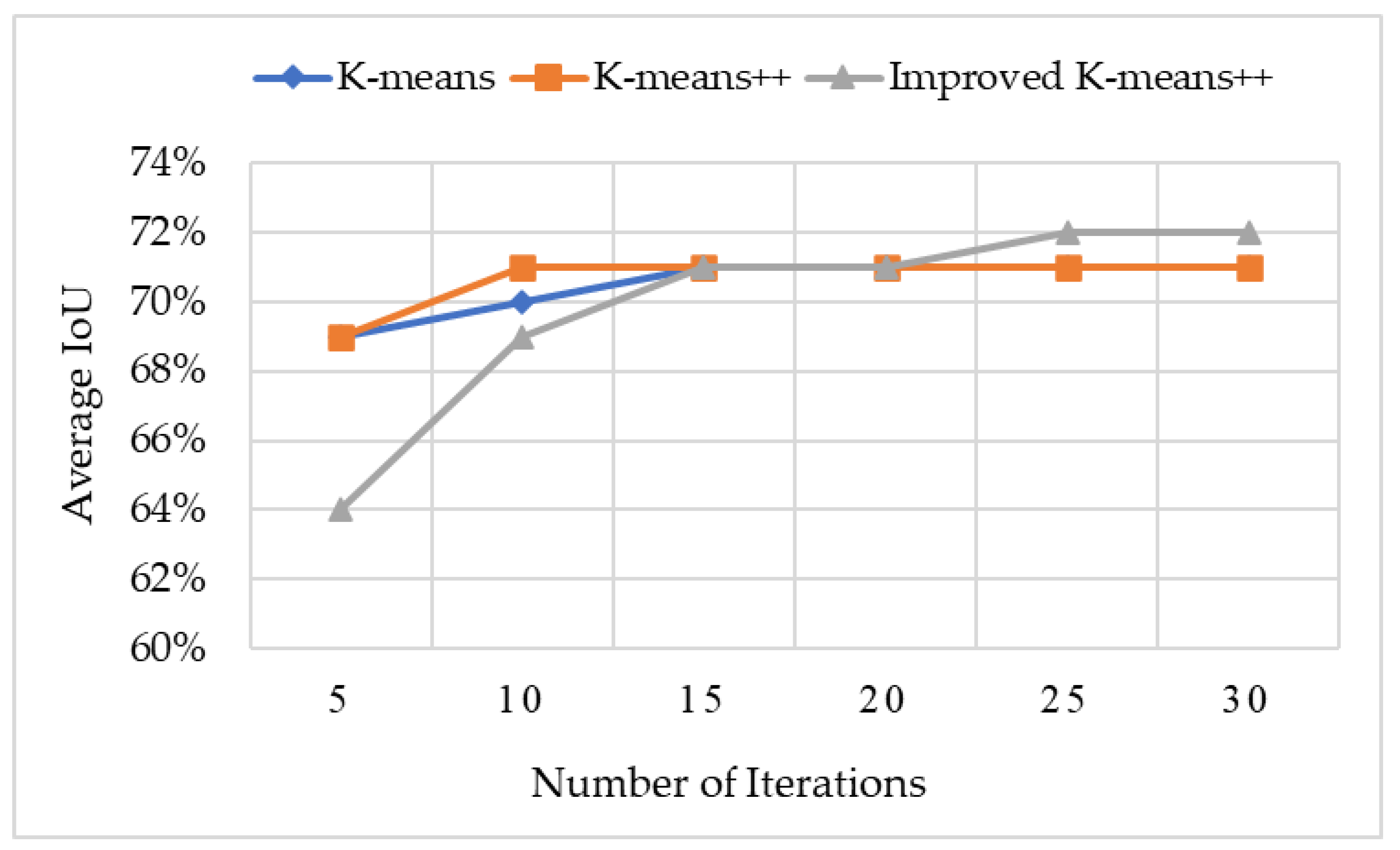

3.1. Improvements to the Anchor Box Clustering Algorithm
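As a point of reference for this section, the following is a minimal sketch of anchor box clustering with standard k-means++ seeding and a 1 − IoU distance. It is not the improved algorithm proposed in the paper; the function names, the sample box sizes, and the choice of distance are illustrative assumptions.

```python
import random

def iou_wh(box, anchor):
    """IoU of two (width, height) boxes aligned at the same corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union

def kmeanspp_seed(boxes, k, rng):
    """k-means++ seeding: sample each new centre with probability
    proportional to d^2, where d = 1 - IoU to the nearest chosen centre."""
    centres = [rng.choice(boxes)]
    while len(centres) < k:
        d2 = [min(1.0 - iou_wh(b, c) for c in centres) ** 2 for b in boxes]
        if sum(d2) == 0:  # every box coincides with a centre already
            centres.append(rng.choice(boxes))
        else:
            centres.append(rng.choices(boxes, weights=d2, k=1)[0])
    return centres

def cluster_anchors(boxes, k, iters=50, seed=0):
    """Lloyd iterations: assign by highest IoU, update centres by the mean."""
    rng = random.Random(seed)
    centres = kmeanspp_seed(boxes, k, rng)
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for b in boxes:
            best = max(range(k), key=lambda i: iou_wh(b, centres[i]))
            groups[best].append(b)
        new = [
            (sum(w for w, _ in g) / len(g), sum(h for _, h in g) / len(g))
            if g else centres[i]  # keep the old centre if a cluster is empty
            for i, g in enumerate(groups)
        ]
        if new == centres:
            break
        centres = new
    return sorted(centres, key=lambda c: c[0] * c[1])

# Hypothetical (width, height) pairs in pixels, not taken from the paper.
boxes = [(12, 20), (14, 22), (40, 60), (44, 58), (120, 90), (130, 100)]
anchors = cluster_anchors(boxes, k=3)
```

The returned anchors are sorted by area, which is the order in which they would usually be distributed across detection scales.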

3.2. Optimization of the Model Loss Function Based on Sample Balance

- YOLOv4 detects objects by dense sampling: it generates a large number of prior boxes in an image and matches each ground-truth box with some of them. A prior box that is matched successfully is a positive sample, and one that cannot be matched is a negative sample.

- Consider a binary classification problem in which Sample 1 and Sample 2 both belong to Category 1. Suppose the model predicts that Sample 1 belongs to Category 1 with probability 0.9 and that Sample 2 belongs to Category 1 with probability 0.6. The first prediction is more accurate, so Sample 1 is an easy sample to classify; the second is less accurate, so Sample 2 is a hard sample to classify.
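The easy/hard distinction above can be made concrete with focal loss (Lin et al., cited in the references). The sketch below compares plain cross-entropy with focal loss for the two probabilities in the example. The focusing parameter `gamma = 2` is an assumed value, the class-balancing factor is omitted, and the function names are illustrative.

```python
import math

def cross_entropy(p_true):
    """Cross-entropy for a sample whose true class has predicted probability p_true."""
    return -math.log(p_true)

def focal_loss(p_true, gamma=2.0):
    """Focal loss without the alpha weight: (1 - p)^gamma * CE(p).
    gamma = 2.0 is an assumed value, not taken from this paper."""
    return (1.0 - p_true) ** gamma * cross_entropy(p_true)

easy, hard = 0.9, 0.6  # Sample 1 and Sample 2 from the example above

# How much more the hard sample contributes than the easy one.
ce_ratio = cross_entropy(hard) / cross_entropy(easy)
fl_ratio = focal_loss(hard) / focal_loss(easy)

print(f"cross-entropy ratio (hard/easy): {ce_ratio:.2f}")
print(f"focal loss ratio (hard/easy):    {fl_ratio:.2f}")
```

With these assumptions the modulating factor scales the hard-to-easy ratio by ((1 − 0.6)/(1 − 0.9))² = 16, so the hard sample dominates the loss far more strongly than under cross-entropy alone.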

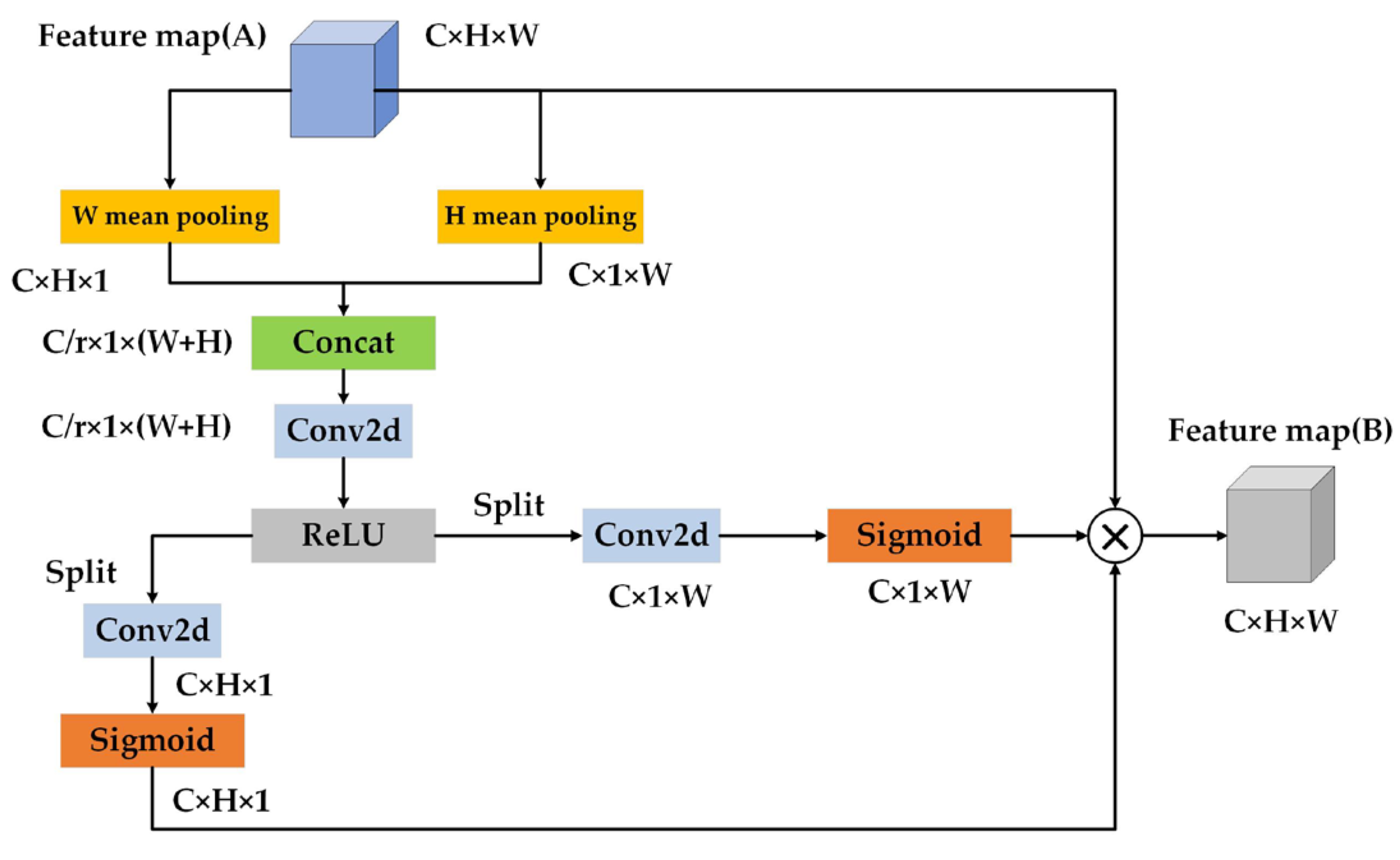

3.3. Neck Network Design Based on Attention Mechanism

4. Results and Discussion

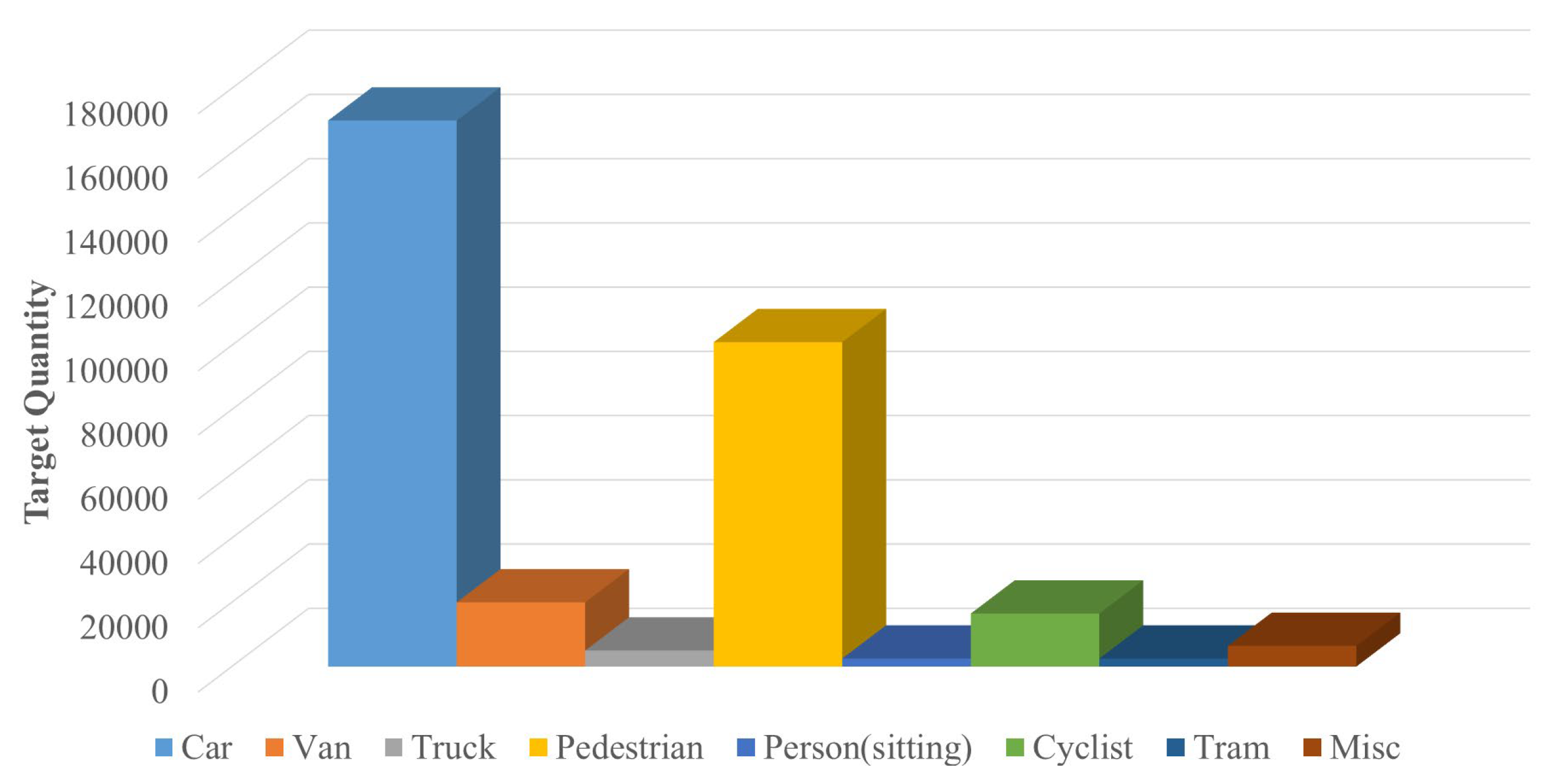

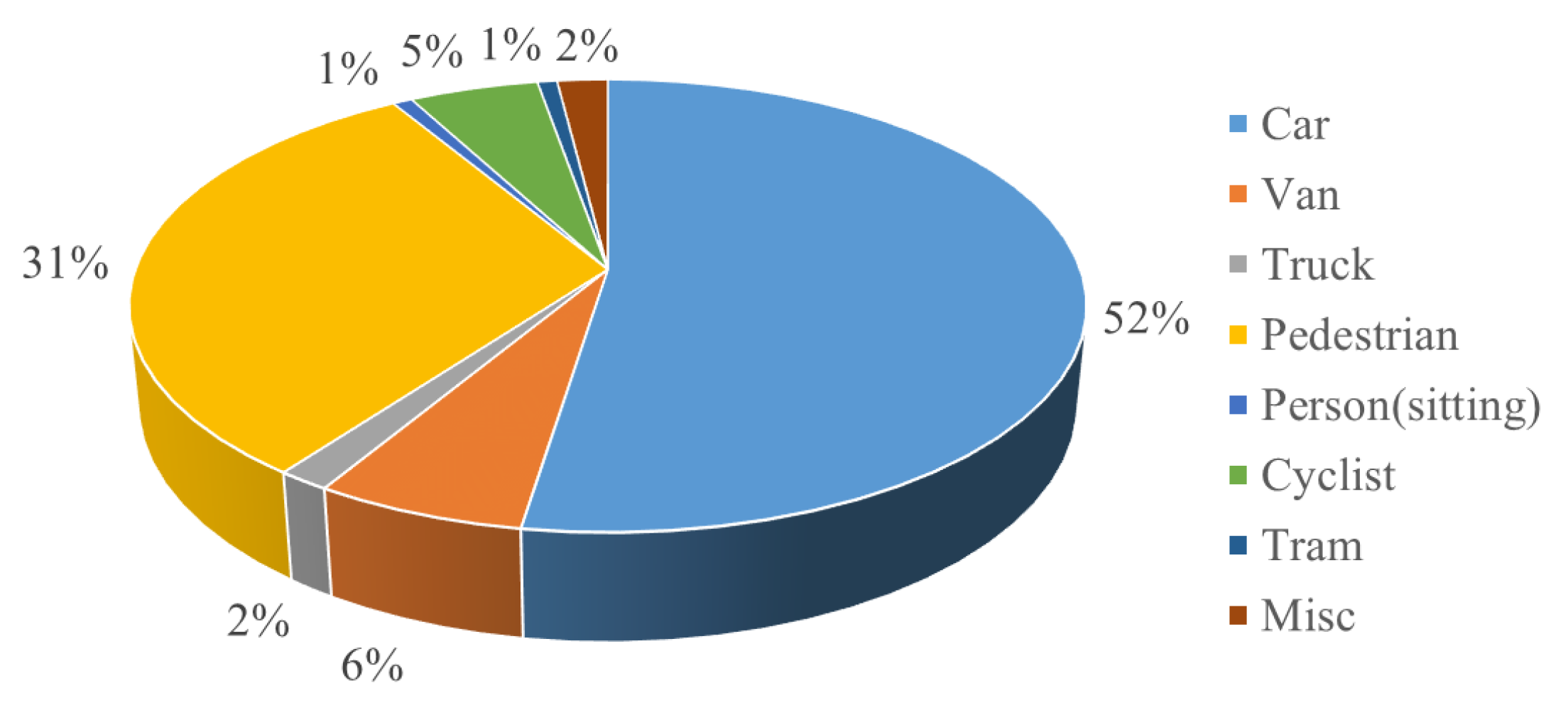

4.1. Test Dataset

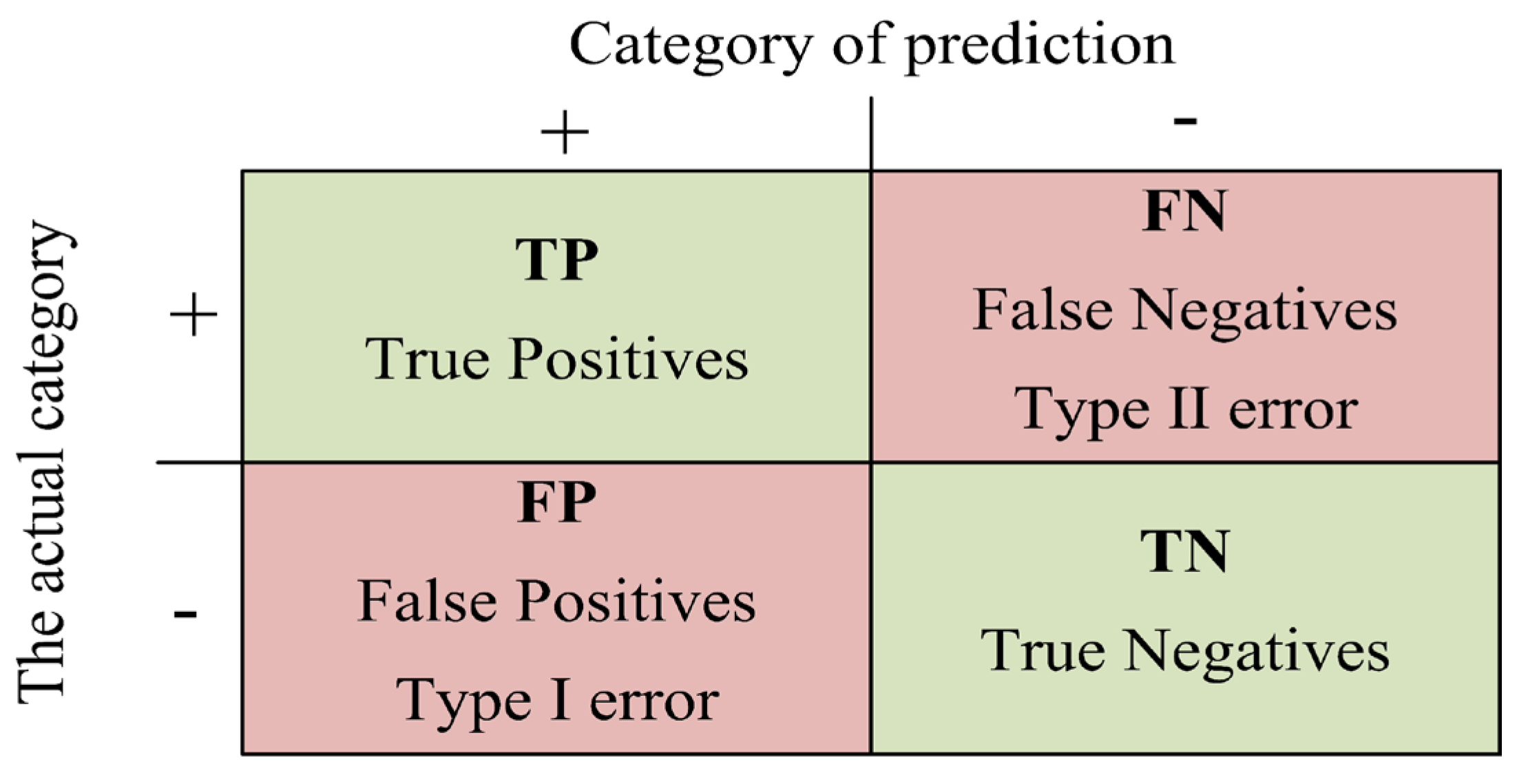

4.2. Index of Evaluation

4.2.1. Precision and Recall
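For reference, precision and recall follow the standard definitions: precision = TP / (TP + FP) and recall = TP / (TP + FN), where TP, FP, and FN are the numbers of true positives, false positives, and false negatives. A minimal sketch, with hypothetical counts that are not taken from the paper's experiments:

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.
    A zero denominator yields 0.0 rather than raising."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical counts for one class: 90 correct detections,
# 10 spurious detections, 30 missed objects.
p, r = precision_recall(tp=90, fp=10, fn=30)
print(f"precision = {p:.2%}, recall = {r:.2%}")
```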

4.2.2. Average Precision

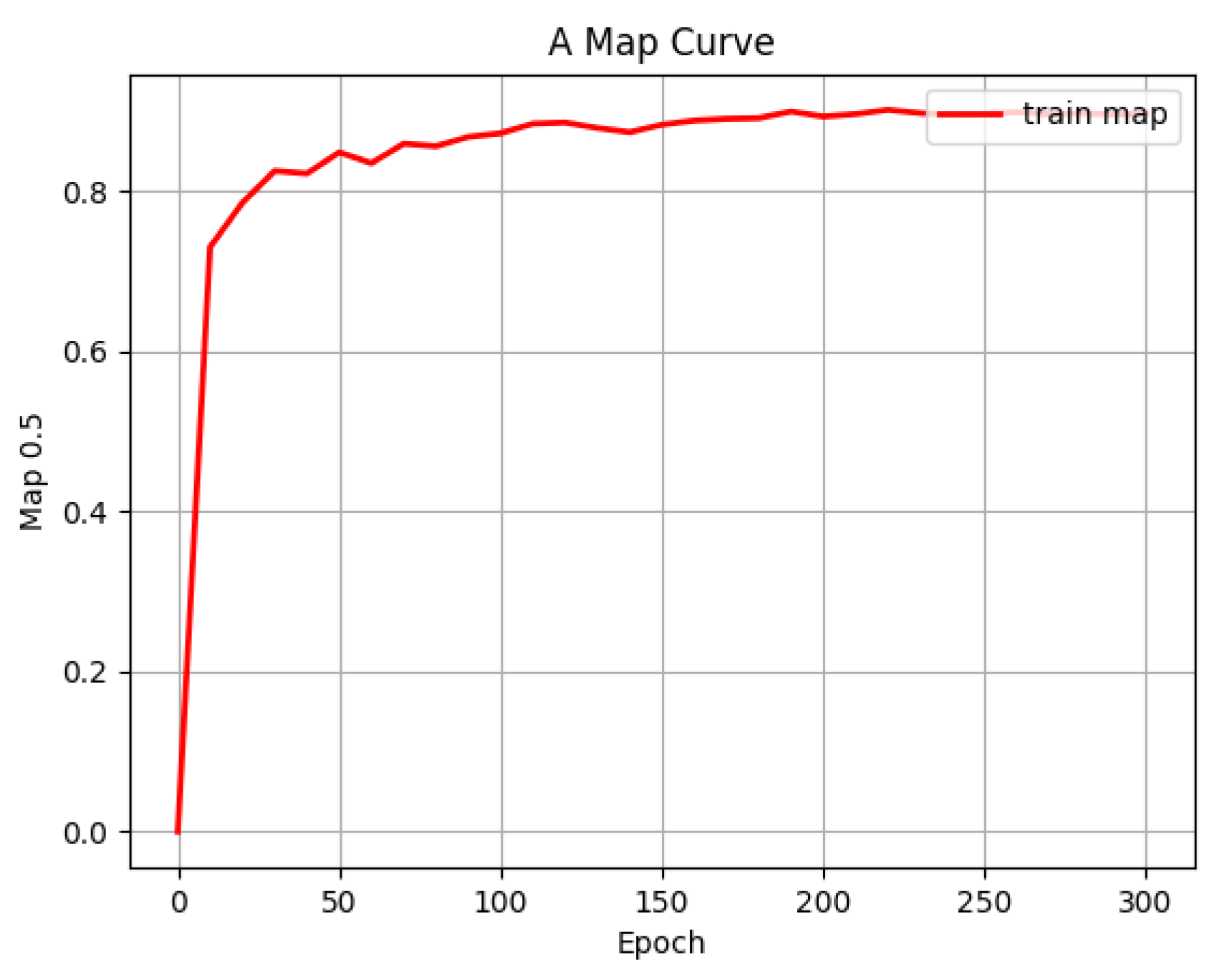

4.3. VV-YOLO Model Training

- Input image size: 608 × 608;

- Number of iterations: 300;

- Initial learning rate: 0.001;

- Optimizer: Adam.
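The settings listed above could be collected in a small configuration object so that a training script reads them from one place. This is only a sketch: the class and field names are hypothetical, and no training framework is assumed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Training settings as listed above; field names are illustrative."""
    input_size: tuple = (608, 608)  # input image size in pixels
    iterations: int = 300           # number of iterations as stated
    initial_lr: float = 1e-3        # initial learning rate
    optimizer: str = "Adam"         # optimizer name

cfg = TrainConfig()
print(cfg)
```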

4.4. Discussion

4.4.1. Discussion on Average Precision of VV-YOLO Model

4.4.2. Discussion on the Real-Time Performance of VV-YOLO Model

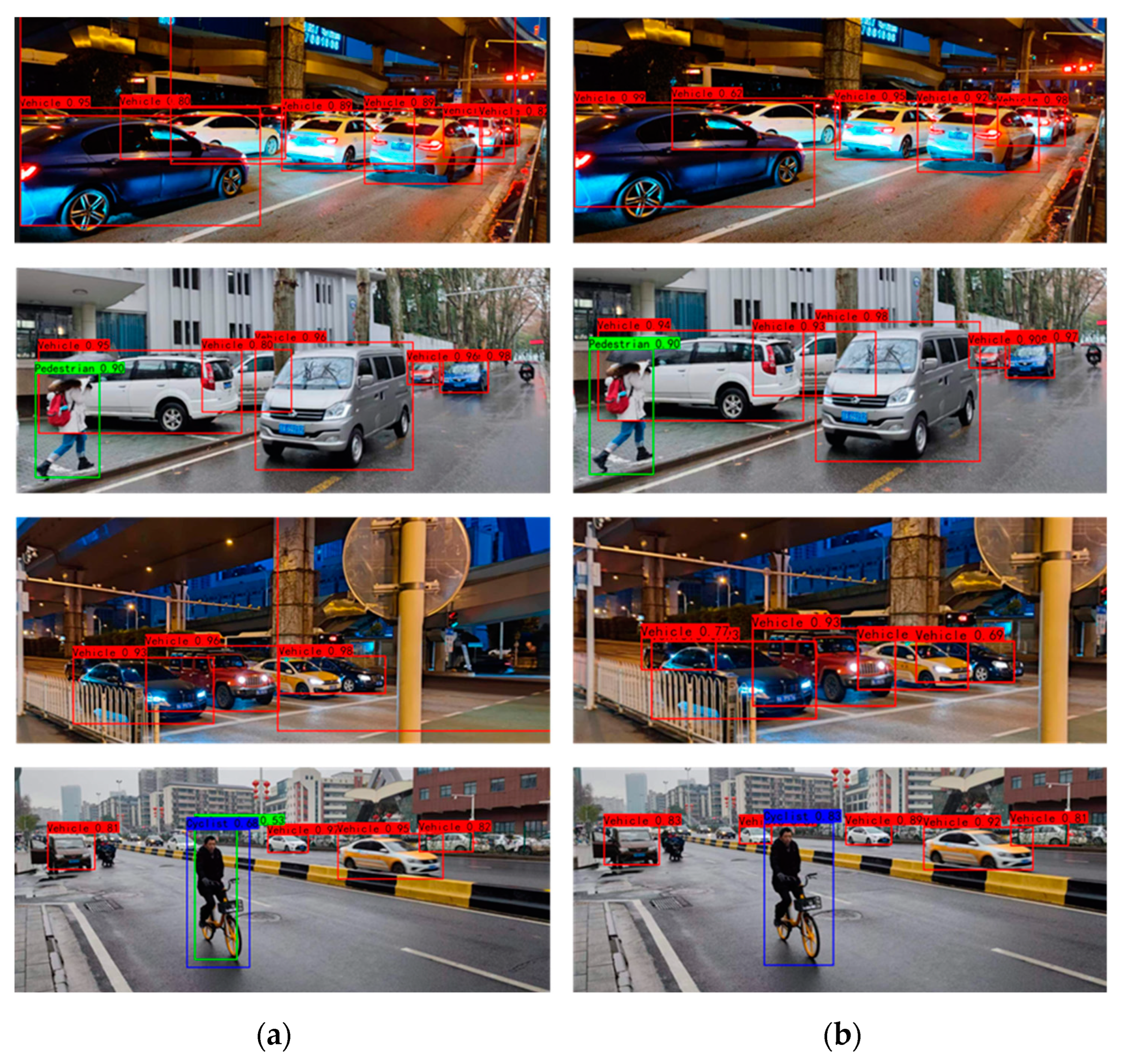

4.4.3. Visual Analysis of VV-YOLO Model Detection Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Saleemi, H.; Rehman, Z.U.; Khan, A.; Aziz, A. Effectiveness of Intelligent Transportation System: Case study of Lahore safe city. Transp. Lett. 2022, 14, 898–908.

- Kenesei, Z.; Ásványi, K.; Kökény, L.; Jászberényi, M.; Miskolczi, M.; Gyulavári, T.; Syahrivar, J. Trust and perceived risk: How different manifestations affect the adoption of autonomous vehicles. Transp. Res. Part A Policy Pract. 2022, 164, 379–393.

- Hosseini, P.; Jalayer, M.; Zhou, H.; Atiquzzaman, M. Overview of Intelligent Transportation System Safety Countermeasures for Wrong-Way Driving. Transp. Res. Rec. 2022, 2676, 243–257.

- Zhang, H.; Bai, X.; Zhou, J.; Cheng, J.; Zhao, H. Object Detection via Structural Feature Selection and Shape Model. IEEE Trans. Image Process. 2013, 22, 4984–4995.

- Rabah, M.; Rohan, A.; Talha, M.; Nam, K.-H.; Kim, S.H. Autonomous Vision-based Object Detection and Safe Landing for UAV. Int. J. Control. Autom. Syst. 2018, 16, 3013–3025.

- Tian, Y.; Wang, K.; Wang, Y.; Tian, Y.; Wang, Z.; Wang, F.-Y. Adaptive and azimuth-aware fusion network of multimodal local features for 3D object detection. Neurocomputing 2020, 411, 32–44.

- Shirmohammadi, S.; Ferrero, A. Camera as the Instrument: The Rising Trend of Vision Based Measurement. IEEE Instrum. Meas. Mag. 2014, 17, 41–47.

- Noh, S.; Shim, D.; Jeon, M. Adaptive Sliding-Window Strategy for Vehicle Detection in Highway Environments. IEEE Trans. Intell. Transp. Syst. 2016, 17, 323–335.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-based Fully Convolutional Networks. arXiv 2016, arXiv:1605.06409.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Bochkovskiy, A.; Wang, C.-Y.; Mark Liao, H.-Y. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 734–750.

- Wang, C.-Y.; Bochkovskiy, A.; Mark Liao, H.-Y. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696v1.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Hassaballah, M.; Kenk, M.; Muhammad, K.; Minaee, S. Vehicle Detection and Tracking in Adverse Weather Using a Deep Learning Framework. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4230–4242.

- Lin, C.-T.; Huang, S.-W.; Wu, Y.-Y.; Lai, S.-H. GAN-Based Day-to-Night Image Style Transfer for Nighttime Vehicle Detection. IEEE Trans. Intell. Transp. Syst. 2021, 22, 951–963.

- Tian, D.; Lin, C.; Zhou, J.; Duan, X.; Cao, D. SA-YOLOv3: An Efficient and Accurate Object Detector Using Self-Attention Mechanism for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4099–4110.

- Zhang, T.; Ye, Q.; Zhang, B.; Liu, J.; Zhang, X.; Tian, Q. Feature Calibration Network for Occluded Pedestrian Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4151–4163.

- Wang, L.; Qin, H.; Zhou, X.; Lu, X.; Zhang, F. R-YOLO: A Robust Object Detector in Adverse Weather. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

- Arthur, D.; Vassilvitskii, S. k-means++: The Advantages of Careful Seeding. In Proceedings of the ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13708–13717.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 6517–6525.

- Misra, D. Mish: A Self Regularized Non-Monotonic Activation Function. arXiv 2019, arXiv:1908.08681.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Ghiasi, G.; Lin, T.-Y.; Le, Q.V. DropBlock: A regularization method for convolutional networks. In Proceedings of the Conference on Neural Information Processing Systems, Montreal, Canada, 2–8 December 2018; pp. 10727–10737.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Franti, P.; Sieranoja, S. K-means properties on six clustering benchmark datasets. Appl. Intell. 2018, 48, 4743–4759.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Cai, Y.; Wang, H.; Sotelo, M.A.; Li, Z. YOLOv4-5D: An Effective and Efficient Object Detector for Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 4503613.

| Year | Title | Method | Limitation | Reference |
|---|---|---|---|---|
| 2021 | Vehicle Detection and Tracking in Adverse Weather Using a Deep Learning Framework | A visual enhancement mechanism was proposed and introduced into the YOLOv3 model to realize vehicle detection in snowy, foggy, and other scenarios. | Larger modules are introduced, and only vehicle objects are considered. | [23] |
| 2021 | GAN-Based Day-to-Night Image Style Transfer for Nighttime Vehicle Detection | The AugGAN network was proposed to enhance vehicle targets in low-light images, and the data generated by this strategy were used to train Faster R-CNN and YOLO, which improved the performance of the object detection models under low-light conditions. | A GAN is introduced, multiple models need to be trained, and only vehicle objects are considered. | [24] |
| 2022 | SA-YOLOv3: An Efficient and Accurate Object Detector Using Self-Attention Mechanism for Autonomous Driving | The SA-YOLOv3 model was proposed, in which dilated convolution and a self-attention module (SAM) are introduced into YOLOv3, and the GIoU loss function is used during training. | Few test scenarios are used to validate the model. | [25] |
| 2022 | Feature Calibration Network for Occluded Pedestrian Detection | A fusion module for SA and FC features was designed, and FC-Net was further proposed to realize pedestrian detection in occlusion scenes. | Only pedestrian targets are considered, and there are few verification scenarios. | [26] |
| 2023 | R-YOLO: A Robust Object Detector in Adverse Weather | The QTNet and FCNet adaptive networks were proposed to learn image features without labels and were applied to YOLOv3, YOLOv5, and YOLOX, which improved the precision of object detection in foggy scenarios. | Additional large networks are introduced, and multiple models need to be trained. | [27] |

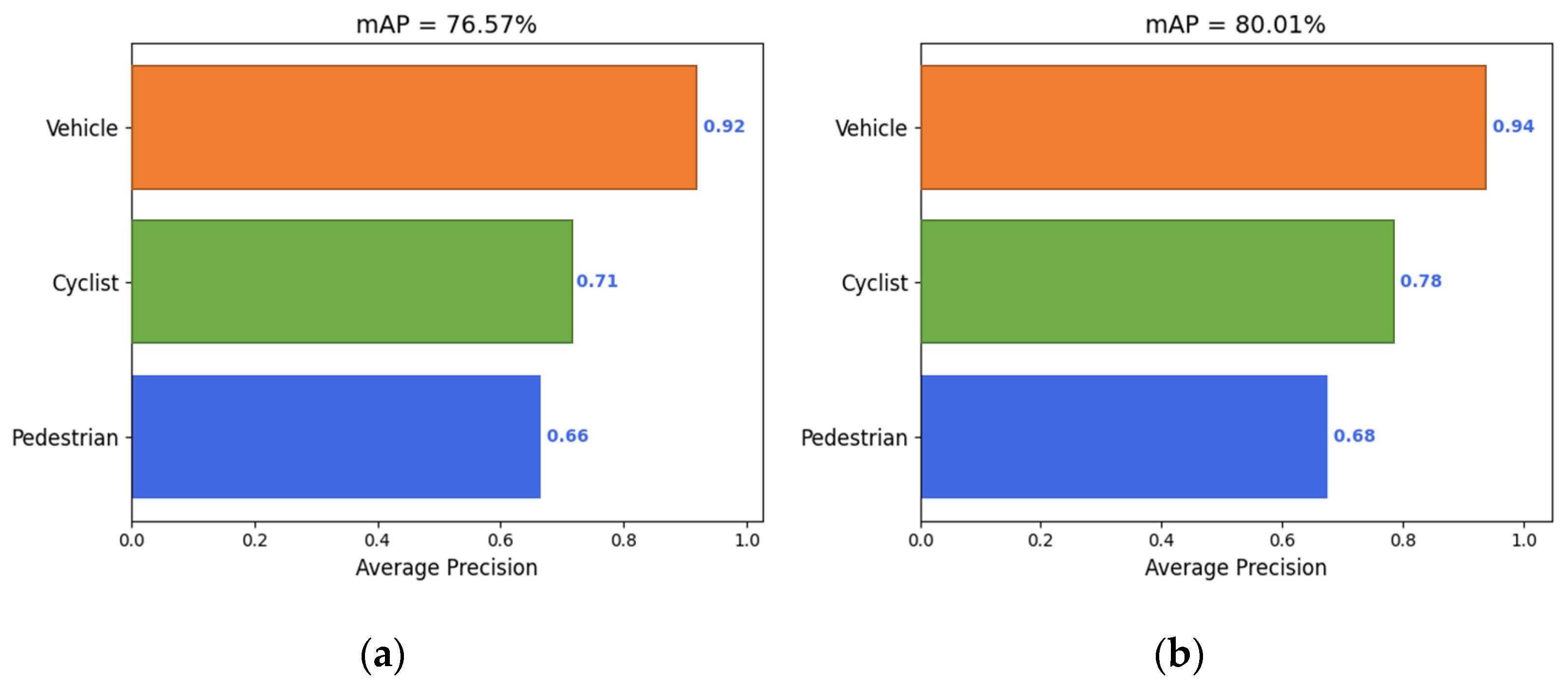

| Evaluation Indicators | Class | YOLOv4 | VV-YOLO |
|---|---|---|---|
| Precision | Vehicle | 95.01% | 96.87% |
| Precision | Cyclist | 81.97% | 93.41% |
| Precision | Pedestrian | 74.43% | 81.75% |
| Recall | Vehicle | 80.79% | 82.21% |
| Recall | Cyclist | 55.87% | 55.75% |
| Recall | Pedestrian | 56.58% | 52.24% |
| Average precision | All | 76.57% | 80.01% |

| Test Model | Precision | Recall | Average Precision |
|---|---|---|---|
| Baseline | 83.80% | 64.41% | 76.57% |
| +Improved K-means++ | 89.83% | 60.70% | 77.49% |
| +Focal Loss | 90.24% | 61.79% | 78.79% |
| +SENet (attention mechanism) | 89.47% | 62.99% | 78.61% |
| +CBAM (attention mechanism) | 89.83% | 60.69% | 78.49% |
| +ECA (attention mechanism) | 89.66% | 61.96% | 78.48% |
| VV-YOLO | 90.68% | 63.40% | 80.01% |

| Test Model | Precision | Recall | Average Precision |
|---|---|---|---|
| RetinaNet | 90.43% | 37.52% | 66.38% |
| CenterNet | 87.79% | 34.01% | 60.60% |
| YOLOv5 | 89.71% | 61.08% | 78.73% |
| Faster-RCNN | 59.04% | 76.54% | 75.09% |
| SSD | 77.59% | 26.13% | 37.99% |
| YOLOv3 | 77.75% | 32.07% | 47.26% |
| VV-YOLO | 90.68% | 63.40% | 80.01% |

| Test Model | Inference Time | Inference Frames (FPS) |
|---|---|---|
| RetinaNet | 31.57 ms | 31.67 |
| YOLOv4 | 36.53 ms | 27.37 |
| CenterNet | 16.49 ms | 60.64 |
| YOLOv5 | 26.65 ms | 37.52 |
| Faster-RCNN | 62.47 ms | 16.01 |
| SSD | 52.13 ms | 19.18 |
| YOLOv3 | 27.32 ms | 36.60 |
| VV-YOLO | 37.19 ms | 26.89 |
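The two columns of the table above appear to be related by frames per second = 1000 / inference time in milliseconds. The sketch below checks that reading against the reported values; the relation is an inference from the numbers, not a formula stated in the text, and the variable names are illustrative.

```python
# Values copied from the table above.
inference_ms = {
    "RetinaNet": 31.57, "YOLOv4": 36.53, "CenterNet": 16.49, "YOLOv5": 26.65,
    "Faster-RCNN": 62.47, "SSD": 52.13, "YOLOv3": 27.32, "VV-YOLO": 37.19,
}
reported_fps = {
    "RetinaNet": 31.67, "YOLOv4": 27.37, "CenterNet": 60.64, "YOLOv5": 37.52,
    "Faster-RCNN": 16.01, "SSD": 19.18, "YOLOv3": 36.60, "VV-YOLO": 26.89,
}

def fps_from_latency(ms):
    """Frames per second implied by a per-image latency in milliseconds."""
    return 1000.0 / ms

# Largest absolute difference between the computed and the reported rate.
max_gap = max(
    abs(fps_from_latency(inference_ms[name]) - reported_fps[name])
    for name in inference_ms
)

for name, ms in inference_ms.items():
    print(f"{name:12s} {ms:6.2f} ms  computed {fps_from_latency(ms):6.2f}  "
          f"reported {reported_fps[name]:6.2f}")
```

Under this reading, VV-YOLO's 37.19 ms per image corresponds to roughly 26.9 frames per second, slightly below the 27.4 implied by YOLOv4's 36.53 ms.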


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Guan, Y.; Liu, H.; Jin, L.; Li, X.; Guo, B.; Zhang, Z. VV-YOLO: A Vehicle View Object Detection Model Based on Improved YOLOv4. Sensors 2023, 23, 3385. https://doi.org/10.3390/s23073385
