Absolute and Relative Depth-Induced Network for RGB-D Salient Object Detection

Abstract

1. Introduction

- We propose an Absolute Depth-Induced Module, which adopts a GRU-based method to adaptively integrate absolute depth values with RGB features, combining the geometric and semantic information of the two modalities.

- We propose a Relative Depth-Induced Module, which employs a spatial GCN to explore semantic affinities and contrastive saliency cues by leveraging relative depth relationships.

- The proposed DIN for RGB-D salient object detection is a lightweight network and outperforms most state-of-the-art algorithms on six challenging datasets.
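The two depth-related ideas in the contributions above can be illustrated with a toy sketch: a GRU-style gate that blends an absolute depth value into an RGB feature, and one graph propagation step whose edge weights come from relative depth differences. This is not the authors' implementation. The scalar weights `w_z`, `w_r`, `w_h`, the bandwidth `sigma`, the exponential affinity, and the four-region toy data are all assumptions made for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_fuse(rgb_feat, depth, w_z=1.0, w_r=1.0, w_h=1.0):
    """GRU-style update of one RGB feature value using one absolute depth value.

    rgb_feat plays the role of the hidden state and depth the role of the input.
    The scalar weights are hypothetical stand-ins for learned parameters.
    """
    z = sigmoid(w_z * (rgb_feat + depth))                # update gate
    r = sigmoid(w_r * (rgb_feat + depth))                # reset gate
    candidate = math.tanh(w_h * (r * rgb_feat + depth))  # candidate state
    # Convex blend of the old feature and the depth-aware candidate.
    return (1.0 - z) * rgb_feat + z * candidate


def depth_affinity(depths, sigma=0.1):
    """Row-normalized adjacency: nodes at similar depth get larger weights."""
    rows = []
    for d_i in depths:
        row = [math.exp(-abs(d_i - d_j) / sigma) for d_j in depths]
        total = sum(row)
        rows.append([w / total for w in row])
    return rows


def graph_propagate(features, adjacency):
    """One graph step: each node becomes an affinity-weighted mean of all nodes."""
    return [sum(a * f for a, f in zip(row, features)) for row in adjacency]


# Toy data (assumed): four regions, two near the camera and two far away.
depths = [0.10, 0.12, 0.90, 0.88]
rgb_feats = [0.8, 0.2, 0.1, 0.7]

fused = [gru_fuse(f, d) for f, d in zip(rgb_feats, depths)]
adj = depth_affinity(depths)
refined = graph_propagate(fused, adj)
```

In this sketch, regions at similar depth exchange far more information than regions at different depths, which is one simple way to let relative depth shape the graph. A real network would apply learned convolutions to feature maps rather than scalar weights to single values.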

2. Related Works

2.1. Salient Object Detection

2.2. RGB-D Salient Object Detection

2.3. Graph Convolutional Network

3. Algorithm

3.1. Overall Architecture

3.2. Absolute Depth-Induced Module

3.3. Relative Depth-Induced Module

3.4. Training and Inference

4. Results and Analysis

4.1. Experiment Setup

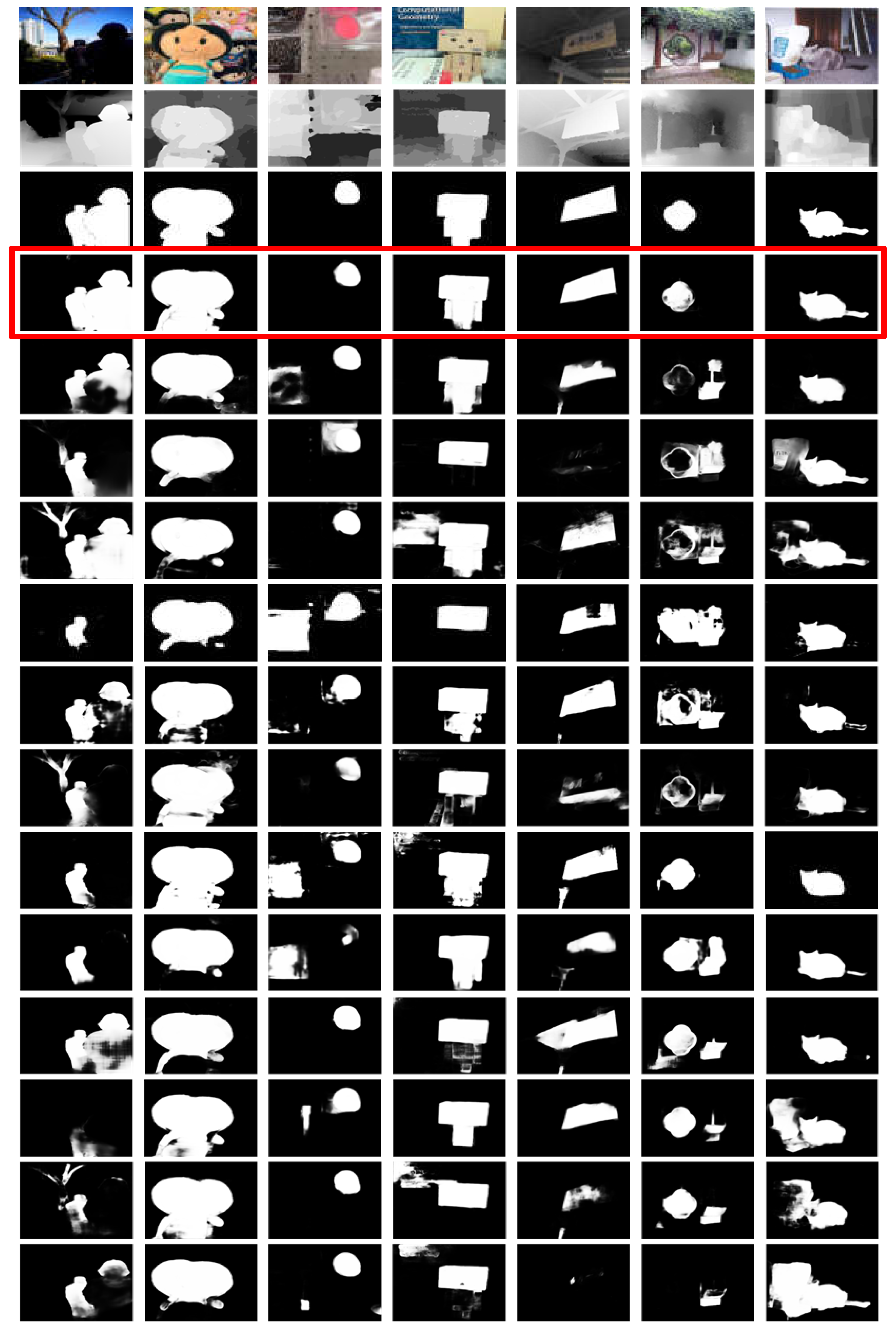

4.2. Evaluation with State-of-the-Art Models

4.3. Ablation Studies

4.3.1. Effectiveness of the Absolute Depth-Induced Module

4.3.2. Effectiveness of the Relative Depth-Induced Module

4.3.3. Effectiveness of the Combination of the Absolute and Relative Depth-Induced Modules

4.4. Failure Cases

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ren, Z.; Gao, S.; Chia, L.; Tsang, W. Region-Based Saliency Detection and Its Application in Object Recognition. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 769–779.

2. Siagian, C.; Itti, L. Rapid Biologically-Inspired Scene Classification Using Features Shared with Visual Attention. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 300–312.

3. Mahadevan, V.; Vasconcelos, N. Biologically Inspired Object Tracking Using Center-surround Saliency Mechanisms. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 541–554.

4. Borji, A.; Frintrop, S.; Sihite, D.N.; Itti, L. Adaptive object tracking by learning background context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 23–30.

5. Zhang, K.; Wang, W.; Lv, Z.; Fan, Y.; Song, Y. Computer vision detection of foreign objects in coal processing using attention CNN. Eng. Appl. Artif. Intell. 2021, 102, 104242.

6. Li, Z.; Zhang, Z.; Qin, J.; Zhang, Z.; Shao, L. Discriminative Fisher Embedding Dictionary Learning Algorithm for Object Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 786–800.

7. Zhang, D.; Huang, G.; Zhang, Q.; Han, J.; Han, J.; Yu, Y. Cross-modality deep feature learning for brain tumor segmentation. Pattern Recognit. 2021, 110, 107562.

8. Atik, M.E.; Duran, Z. An Efficient Ensemble Deep Learning Approach for Semantic Point Cloud Segmentation Based on 3D Geometric Features and Range Images. Sensors 2022, 22, 6210.

9. Ji, Y.; Zhang, H.; Zhang, Z.; Liu, M. CNN-based encoder-decoder networks for salient object detection: A comprehensive review and recent advances. Inf. Sci. 2021, 546, 835–857.

10. Uddin, M.K.; Bhuiyan, A.; Bappee, F.K.; Islam, M.M.; Hasan, M. Person Re-Identification with RGB–D and RGB–IR Sensors: A Comprehensive Survey. Sensors 2023, 23, 1504.

11. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3D object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.

12. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D proposal generation and object detection from view aggregation. In Proceedings of the International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1–8.

13. Luo, Q.; Ma, H.; Tang, L.; Wang, Y.; Xiong, R. 3D-SSD: Learning hierarchical features from RGB-D images for amodal 3D object detection. Neurocomputing 2020, 378, 364–374.

14. Chen, H.; Li, Y.; Su, D. Multi-modal fusion network with multi-scale multi-path and cross-modal interactions for RGB-D salient object detection. Pattern Recognit. 2019, 86, 376–385.

15. Han, J.; Chen, H.; Liu, N.; Yan, C.; Li, X. CNNs-based RGB-D saliency detection via cross-view transfer and multiview fusion. IEEE Trans. Cybern. 2018, 48, 3171–3183.

16. Qu, L.; He, S.; Zhang, J.; Tian, J.; Tang, Y.; Yang, Q. RGBD salient object detection via deep fusion. IEEE Trans. Image Process. 2017, 26, 2274–2285.

17. Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

18. Piao, Y.; Rong, Z.; Zhang, M.; Ren, W.; Lu, H. A2dele: Adaptive and attentive depth distiller for efficient RGB-D salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9060–9069.

19. Zhang, M.; Sun, X.; Liu, J.; Xu, S.; Piao, Y.; Lu, H. Asymmetric two-stream architecture for accurate RGB-D saliency detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 374–390.

20. Sun, P.; Zhang, W.; Wang, H.; Li, S.; Li, X. Deep RGB-D saliency detection with depth-sensitive attention and automatic multi-modal fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 15–20 June 2021; pp. 1407–1417.

21. Zhou, T.; Fu, H.; Chen, G.; Zhou, Y.; Fan, D.; Shao, L. Specificity-preserving RGB-D saliency detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4661–4671.

22. Scholkopf, B.; Platt, J.; Hofmann, T. Graph-based visual saliency. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; pp. 545–552.

23. Krahenbuhl, P. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 20–26 July 2012; pp. 733–740.

24. Itti, L.; Koch, C.; Niebur, E. A Model of Saliency-Based Visual Attention for Rapid Scene Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

25. Wu, J.; Han, G.; Liu, P.; Yang, H.; Luo, H.; Li, Q. Saliency Detection with Bilateral Absorbing Markov Chain Guided by Depth Information. Sensors 2021, 21, 838.

26. Zhang, P.; Wang, D.; Lu, H.; Wang, H.; Xiang, R. Amulet: Aggregating multi-level convolutional features for salient object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 202–211.

27. Feng, M.; Lu, H.; Ding, E. Attentive feedback network for boundary-aware salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1623–1632.

28. Kong, Y.; Feng, M.; Li, X.; Lu, H.; Liu, X.; Yin, B. Spatial context-aware network for salient object detection. Pattern Recognit. 2021, 114, 107867.

29. Zhuge, M.; Fan, D.P.; Liu, N.; Zhang, D.; Xu, D.; Shao, L. Salient Object Detection via Integrity Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3738–3752.

30. Liu, N.; Zhao, W.; Zhang, D.; Han, J.; Shao, L. Light field saliency detection with dual local graph learning and reciprocative guidance. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4712–4721.

31. Zhang, D.; Han, J.; Zhang, Y.; Xu, D. Synthesizing Supervision for Learning Deep Saliency Network without Human Annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1755–1769.

32. Feng, D.; Barnes, N.; You, S.; McCarthy, C. Local background enclosure for RGB-D salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2343–2350.

33. Peng, H.; Li, B.; Xiong, W.; Hu, W.; Ji, R. Rgbd salient object detection: A benchmark and algorithms. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 5–12 September 2014; pp. 92–109.

34. Ju, R.; Ge, L.; Geng, W.; Ren, T.; Wu, G. Depth saliency based on anisotropic center-surround difference. In Proceedings of the IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2014; pp. 1115–1119.

35. Piao, Y.; Ji, W.; Li, J.; Zhang, M.; Lu, H. Depth-induced multi-scale recurrent attention network for saliency detection. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7254–7263.

36. Zhao, X.; Zhang, L.; Pang, Y.; Lu, H.; Zhang, L. A single stream network for robust and real-time RGB-D salient object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2020; pp. 646–662.

37. Liu, N.; Zhang, N.; Shao, L.; Han, J. Learning Selective Mutual Attention and Contrast for RGB-D Saliency Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 9026–9042.

38. Ji, W.; Li, J.; Zhang, M.; Piao, Y.; Lu, H. Accurate RGB-D salient object detection via collaborative learning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2020; pp. 52–69.

39. Zhao, X.; Pang, Y.; Zhang, L.; Lu, H.; Ruan, X. Self-supervised pretraining for RGB-D salient object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2022; pp. 3463–3471.

40. Liu, N.; Zhang, N.; Han, J. Learning selective self-mutual attention for RGB-D saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13753–13762.

41. Zhang, J.; Fan, D.; Dai, Y.; Yu, X.; Zhong, Y.; Barnes, N.; Shao, L. RGB-D saliency detection via cascaded mutual information minimization. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4318–4327.

42. Liu, N.; Zhang, N.; Wan, K.; Shao, L.; Han, J. Visual saliency transformer. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4722–4732.

43. Zhang, N.; Han, J.; Liu, N. Learning Implicit Class Knowledge for RGB-D Co-Salient Object Detection With Transformers. IEEE Trans. Image Process. 2022, 31, 4556–4570.

44. Hussain, T.; Anwar, A.; Anwar, S.; Petersson, L.; Baik, S.W. Pyramidal attention for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 20–25 June 2022; pp. 2878–2888.

45. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

46. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

47. Battaglia, P.W.; Hamrick, J.B.; Bapst, V.; Sanchez-Gonzalez, A.; Zambaldi, V.F.; Malinowski, M.; Tacchetti, A.; Raposo, D.; Santoro, A.; Faulkner, R.; et al. Relational inductive biases, deep learning, and graph networks. arXiv 2018, arXiv:1806.01261.

48. Yao, T.; Pan, Y.; Li, Y.; Mei, T. Exploring visual relationship for image captioning. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 711–727.

49. Qi, X.; Liao, R.; Jia, J.; Fidler, S.; Urtasun, R. 3D graph neural networks for RGBD semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5209–5218.

50. Liu, S.; Hui, T.; Shaofei, H.; Yunchao, W.; Li, B.; Li, G. Cross-Modal Progressive Comprehension for Referring Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4761–4775.

51. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

52. Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F.F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2009; pp. 248–255.

53. Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

54. Niu, Y.; Geng, Y.; Li, X.; Liu, F. Leveraging stereopsis for saliency analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2012; pp. 454–461.

55. Fan, D.; Lin, Z.; Zhang, Z.; Zhu, M.; Cheng, M. Rethinking RGB-D salient object detection: Models, datasets, and large-scale benchmarks. IEEE Trans. Neural Networks Learn. Syst. 2020, 32, 2075–2089.

56. Li, N.; Ye, J.; Ji, Y.; Ling, H.; Yu, J. Saliency detection on light field. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2806–2813.

57. Zhu, C.; Li, G. A three-pathway psychobiological framework of salient object detection using stereoscopic technology. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 3008–3014.

58. Borji, A.; Sihite, D.N.; Itti, L. Salient object detection: A benchmark. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 414–429.

59. Fan, D.; Cheng, M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4548–4557.

60. Fan, D.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 698–704.

61. Chen, S.; Fu, Y. Progressively guided alternate refinement network for RGB-D salient object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2020; pp. 520–538.

62. Li, G.; Liu, Z.; Ye, L.; Wang, Y.; Ling, H. Cross-modal weighting network for rgb-d salient object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2020; pp. 665–681.

63. Ji, W.; Li, J.; Yu, S.; Zhang, M.; Piao, Y.; Yao, S.; Bi, Q.; Ma, K.; Zheng, Y.; Lu, H.; et al. Calibrated RGB-D salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2021; pp. 9471–9481.

64. Li, G.; Liu, Z.; Chen, M.; Bai, Z.; Lin, W.; Ling, H. Hierarchical Alternate Interaction Network for RGB-D Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 3528–3542.

65. Jin, W.; Xu, J.; Han, Q.; Zhang, Y.; Cheng, M. CDNet: Complementary Depth Network for RGB-D Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 3376–3390.

66. Zhang, C.; Cong, R.; Lin, Q.; Ma, L.; Li, F.; Zhao, Y.; Kwong, S. Cross-modality discrepant interaction network for RGB-D salient object detection. In Proceedings of the ACM Multimedia, Virtual, 24 October 2021; pp. 2094–2102.

67. Liu, Z.y.; Liu, J.W.; Zuo, X.; Hu, M.F. Multi-scale iterative refinement network for RGB-D salient object detection. Eng. Appl. Artif. Intell. 2021, 106, 104473.

68. Jin, X.; Guo, C.; He, Z.; Xu, J.; Wang, Y.; Su, Y. FCMNet: Frequency-aware cross-modality attention networks for RGB-D salient object detection. Neurocomputing 2022, 491, 414–425.

69. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representation, San Diego, CA, USA, 7–9 May 2015.

| Model | Pub. | NLPR [33] | | | | NJUD [34] | | | | STERE [54] | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DANet [36] | 20ECCV | 0.913 | 0.915 | 0.948 | 0.029 | 0.898 | 0.892 | 0.912 | 0.049 | 0.897 | 0.892 | 0.915 | 0.048 |
| PGAR [61] | 20ECCV | 0.912 | 0.916 | 0.950 | 0.027 | 0.918 | 0.909 | 0.934 | 0.042 | 0.902 | 0.894 | 0.932 | 0.045 |
| CMWNet [62] | 20ECCV | 0.913 | 0.917 | 0.940 | 0.029 | 0.913 | 0.903 | 0.923 | 0.046 | 0.911 | 0.905 | 0.930 | 0.043 |
| ATSA [19] | 20ECCV | 0.905 | 0.911 | 0.947 | 0.027 | 0.904 | 0.887 | 0.927 | 0.047 | 0.910 | 0.898 | 0.942 | 0.038 |
| D3Net [55] | 21TNNLS | 0.907 | 0.911 | 0.943 | 0.030 | 0.910 | 0.900 | 0.914 | 0.047 | 0.904 | 0.899 | 0.921 | 0.046 |
| DSA2F [20] | 21CVPR | 0.917 | 0.917 | 0.953 | 0.024 | 0.919 | 0.903 | 0.937 | 0.039 | 0.910 | 0.898 | 0.942 | 0.039 |
| DCF [63] | 21CVPR | 0.919 | 0.923 | 0.959 | 0.021 | 0.923 | 0.911 | 0.944 | 0.035 | 0.911 | 0.901 | 0.942 | 0.039 |
| HAINet [64] | 21TIP | 0.916 | 0.921 | 0.950 | 0.026 | 0.919 | 0.909 | 0.917 | 0.039 | 0.918 | 0.909 | 0.929 | 0.039 |
| CDNet [65] | 21TIP | 0.928 | 0.927 | 0.955 | 0.025 | 0.925 | 0.915 | 0.943 | 0.037 | 0.917 | 0.909 | 0.942 | 0.038 |
| CDINet [66] | 21ACMMM | 0.923 | 0.927 | 0.954 | 0.024 | 0.928 | 0.918 | 0.944 | 0.036 | 0.904 | 0.899 | 0.939 | 0.043 |
| MSIRN [67] | 21EAAI | 0.918 | 0.931 | 0.962 | 0.025 | 0.913 | 0.912 | 0.942 | 0.038 | 0.780 | 0.904 | 0.936 | 0.045 |
| SSP [39] | 22AAAI | 0.911 | 0.915 | 0.945 | 0.027 | 0.912 | 0.903 | 0.908 | 0.043 | 0.897 | 0.886 | 0.919 | 0.048 |
| FCMNet [68] | 22NeuCom | 0.908 | 0.916 | 0.949 | 0.024 | 0.907 | 0.901 | 0.929 | 0.044 | 0.904 | 0.899 | 0.939 | 0.043 |
| PASNet [44] | 22CVPRW | 0.921 | 0.913 | 0.966 | 0.021 | 0.892 | 0.867 | 0.938 | 0.051 | - | - | - | - |
| DIN | Ours | 0.925 | 0.931 | 0.956 | 0.024 | 0.925 | 0.920 | 0.945 | 0.035 | 0.915 | 0.909 | 0.932 | 0.038 |

| Model | Pub. | SIP [55] | | | | LFSD [56] | | | | SSD [57] | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DANet [36] | 20ECCV | 0.900 | 0.878 | 0.916 | 0.055 | 0.871 | 0.845 | 0.878 | 0.082 | 0.887 | 0.869 | 0.907 | 0.051 |
| PGAR [61] | 20ECCV | 0.852 | 0.838 | 0.889 | 0.073 | 0.835 | 0.816 | 0.870 | 0.091 | 0.821 | 0.832 | 0.883 | 0.068 |
| CMWNet [62] | 20ECCV | 0.889 | 0.867 | 0.908 | 0.062 | 0.899 | 0.876 | 0.908 | 0.067 | 0.883 | 0.875 | 0.902 | 0.051 |
| ATSA [19] | 20ECCV | 0.884 | 0.851 | 0.899 | 0.064 | 0.883 | 0.854 | 0.897 | 0.071 | 0.867 | 0.852 | 0.916 | 0.052 |
| D3Net [55] | 21TNNLS | 0.881 | 0.860 | 0.902 | 0.063 | 0.840 | 0.825 | 0.853 | 0.095 | 0.861 | 0.857 | 0.897 | 0.059 |
| DSA2F [20] | 21CVPR | 0.891 | 0.861 | 0.911 | 0.057 | 0.903 | 0.882 | 0.923 | 0.054 | 0.878 | 0.876 | 0.913 | 0.047 |
| DCF [63] | 21CVPR | 0.899 | 0.875 | 0.921 | 0.052 | 0.867 | 0.841 | 0.883 | 0.075 | 0.868 | 0.864 | 0.905 | 0.049 |
| HAINet [64] | 21TIP | 0.915 | 0.886 | 0.924 | 0.049 | 0.880 | 0.859 | 0.895 | 0.072 | 0.864 | 0.861 | 0.904 | 0.053 |
| CDNet [65] | 21TIP | 0.907 | 0.879 | 0.919 | 0.052 | 0.898 | 0.878 | 0.912 | 0.063 | 0.871 | 0.875 | 0.922 | 0.046 |
| CDINet [66] | 21ACMMM | 0.903 | 0.875 | 0.912 | 0.055 | 0.890 | 0.870 | 0.915 | 0.063 | 0.867 | 0.853 | 0.906 | 0.056 |
| MSIRN [67] | 21EAAI | 0.884 | 0.879 | 0.920 | 0.056 | 0.863 | 0.862 | 0.898 | 0.077 | 0.868 | 0.880 | 0.918 | 0.050 |
| SSP [39] | 22AAAI | 0.895 | 0.869 | 0.909 | 0.059 | 0.870 | 0.853 | 0.886 | 0.076 | 0.887 | 0.882 | 0.921 | 0.042 |
| FCMNet [68] | 22NeuCom | 0.883 | 0.862 | 0.903 | 0.068 | 0.860 | 0.855 | 0.903 | 0.055 | 0.881 | 0.858 | 0.912 | 0.062 |
| PASNet [44] | 22CVPRW | 0.956 | 0.936 | 0.987 | 0.016 | - | - | - | - | - | - | - | - |
| DIN | Ours | 0.910 | 0.880 | 0.925 | 0.048 | 0.900 | 0.880 | 0.913 | 0.066 | 0.887 | 0.877 | 0.920 | 0.044 |

| Model | DANet | PGAR | ATSA | D3Net | DSA2F | DCF | HAINet | CDNet | CDINet | SSP | FCMNet | DIN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model Size (MB) | 101.77 | 66.53 | 122.79 | 172.53 | 139.48 | 370.47 | 228.32 | 125.65 | 207.40 | 283.11 | 196.65 | 95.55 |
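Model sizes like those in the table above are often close to the on-disk size of the stored weights. The source does not state how its sizes were measured, so the following is only a rough sketch of one common estimate, assuming 32-bit floating-point parameters (4 bytes each) and no compression. The function name and the parameter count are hypothetical and do not describe any model in the table.

```python
def model_size_mb(num_params, bytes_per_param=4):
    """Approximate weight storage in megabytes (1 MB taken as 1024**2 bytes)."""
    return num_params * bytes_per_param / (1024 ** 2)


# Hypothetical network with 10 million float32 parameters.
example_params = 10_000_000
example_size = model_size_mb(example_params)
print(f"{example_size:.2f} MB")
```

Under these assumptions, halving the parameter count or storing weights in 16-bit precision would each roughly halve the reported size.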

| Model | NLPR | | | | NJUD | | | | STERE | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 0.888 | 0.893 | 0.909 | 0.041 | 0.887 | 0.879 | 0.898 | 0.061 | 0.880 | 0.877 | 0.900 | 0.063 |
| + | 0.906 | 0.917 | 0.943 | 0.028 | 0.910 | 0.905 | 0.929 | 0.043 | 0.902 | 0.890 | 0.922 | 0.050 |
| + | 0.915 | 0.920 | 0.949 | 0.027 | 0.917 | 0.909 | 0.935 | 0.041 | 0.910 | 0.900 | 0.927 | 0.048 |
| +SDIM | 0.910 | 0.913 | 0.944 | 0.031 | 0.912 | 0.902 | 0.927 | 0.044 | 0.904 | 0.893 | 0.922 | 0.051 |
| DIN | 0.925 | 0.931 | 0.956 | 0.024 | 0.925 | 0.920 | 0.945 | 0.035 | 0.915 | 0.909 | 0.932 | 0.038 |

| Model | | | | |
|---|---|---|---|---|
| (a) Baseline | 0.888 | 0.893 | 0.909 | 0.041 |
| (b) + | 0.897 | 0.910 | 0.923 | 0.035 |
| (c) + | 0.903 | 0.912 | 0.931 | 0.033 |
| (d) + | 0.903 | 0.910 | 0.934 | 0.030 |
| (e) + | 0.906 | 0.917 | 0.943 | 0.028 |
| (f) + | 0.909 | 0.912 | 0.933 | 0.032 |
| (g) + | 0.910 | 0.914 | 0.937 | 0.031 |
| (h) + | 0.915 | 0.920 | 0.949 | 0.027 |
| (i) DIN | 0.925 | 0.931 | 0.956 | 0.024 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kong, Y.; Wang, H.; Kong, L.; Liu, Y.; Yao, C.; Yin, B. Absolute and Relative Depth-Induced Network for RGB-D Salient Object Detection. Sensors 2023, 23, 3611. https://doi.org/10.3390/s23073611
