A Meta-Learning Approach for Few-Shot Face Forgery Segmentation and Classification

Abstract

:1. Introduction

- Identifying the limitations of existing methods for detecting forged images, particularly in encountering unseen forging techniques;

- Suggesting a new approach that employs meta-learning techniques to develop a highly adaptive detector for identifying new forging techniques;

- Proposing a method that fine-tunes the detector, allowing it to adjust its weights based on the statistical features of the input forged images with only a few new forged samples;

- Showing that the proposed method outperforms current state-of-the-art methods in scenarios where only a few training samples are available.

2. Related Work

3. The Proposed Scheme

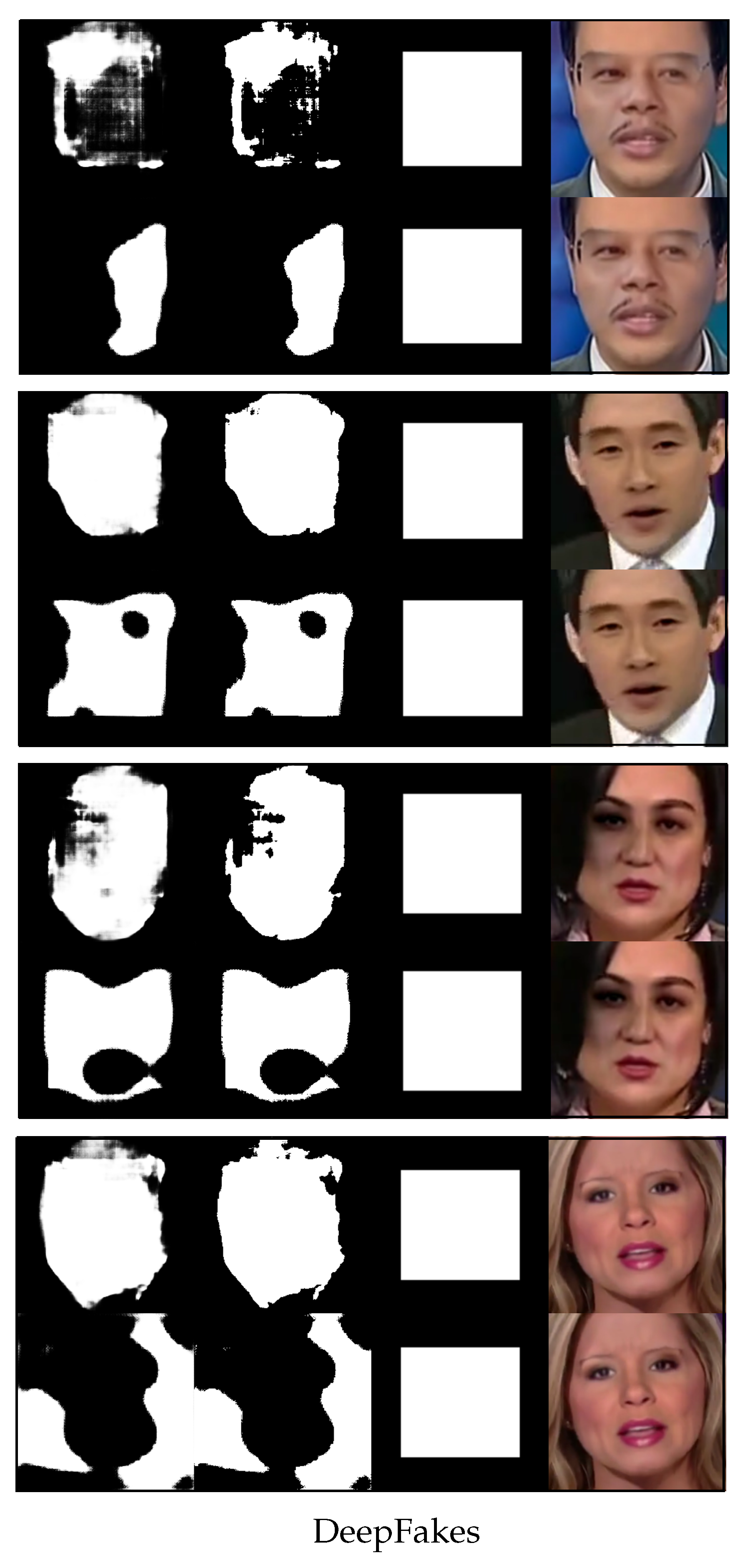

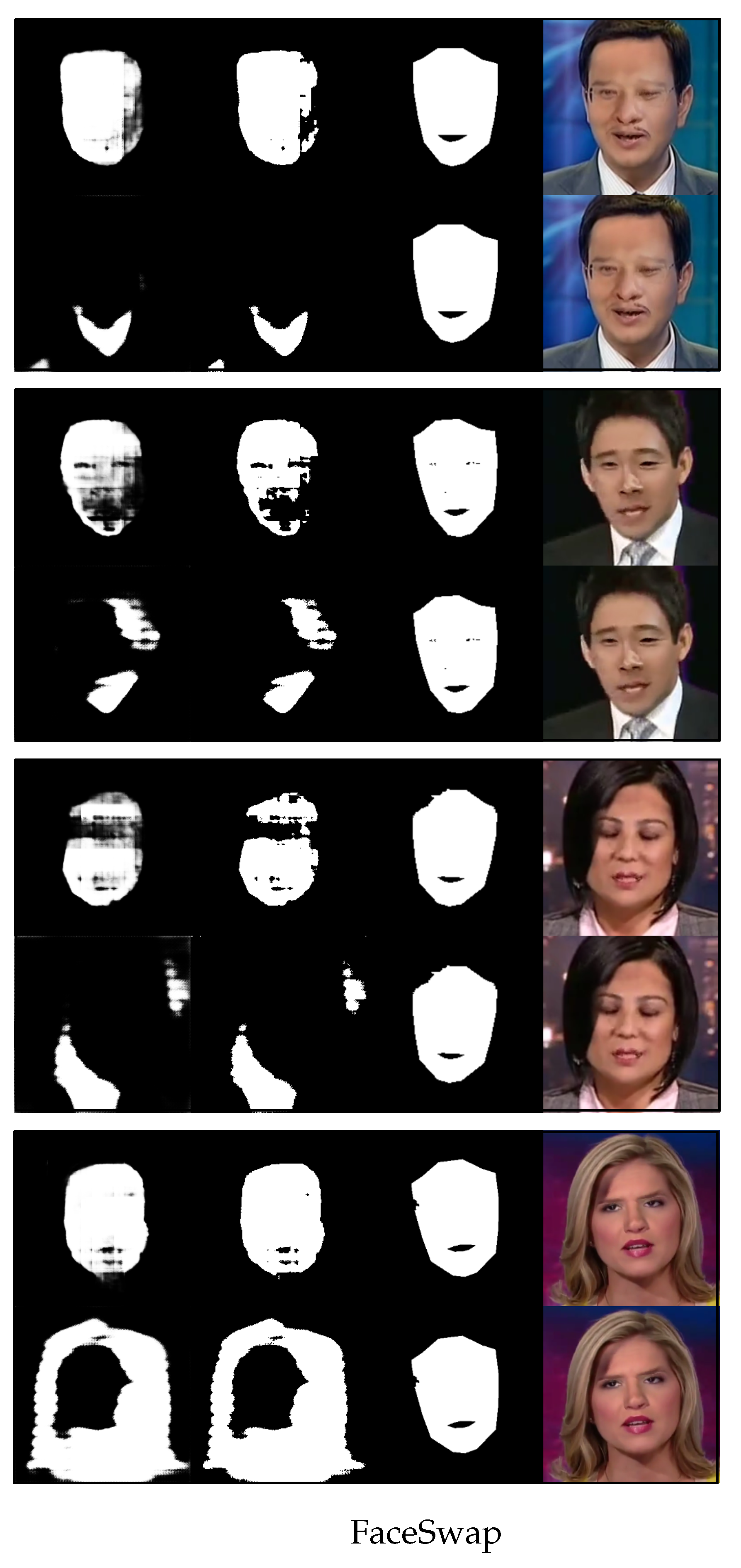

3.1. Segmentation-Based Forgery Detection

3.2. Architecture of the Model

| Algorithm 1: FakeFaceMetaLearning () |

input: A set of N fake fake segmentation task input: Learning hyperparameters (learning rates) , output: An optimized initial model 1 randomly initialize 2 while not done do ▹ gradient descent for optimizing 3 for to N do 4 Sample k images and their ground truth from support set 5 Sample q images and their ground truth from query set 6 ▹ set initialization weight for each task 7 while not done do ▹ gradient descent for optimizing 8 Evaluate for 9 Update for 10 for ▹ count loss using query set 11 Update end |

3.3. The Meta-Learning Approach

4. Experiment and Comparison

4.1. Experimental Design and Data Collection

4.2. Performance Metrics

4.3. Data Analysis and Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Shiohara, K.; Yamasaki, T. Detecting Deepfakes with Self-Blended Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 18720–18729. [Google Scholar]

- Thies, J.; Zollhöfer, M.; Nießner, M. Deferred neural rendering: Image synthesis using neural textures. ACM Trans. Graph. (TOG) 2019, 38, 1–12. [Google Scholar] [CrossRef]

- Thies, J.; Zollhofer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 2387–2395. [Google Scholar]

- Thies, J. Face2Face: Real-time facial reenactment. IT-Inf. Technol. 2019, 61, 143–146. [Google Scholar] [CrossRef]

- Faceswap. 2018. Available online: https://github.com/MarekKowalski/FaceSwap (accessed on 3 February 2023).

- Deepfakes. 2018. Available online: https://github.com/deepfakes/faceswap (accessed on 3 February 2023).

- Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Nießner, M. Faceforensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–11. [Google Scholar]

- Bayar, B.; Stamm, M.C. A deep learning approach to universal image manipulation detection using a new convolutional layer. In Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security, Vigo, Spain, 20–22 June 2016; pp. 5–10. [Google Scholar]

- Cozzolino, D.; Poggi, G.; Verdoliva, L. Recasting residual-based local descriptors as convolutional neural networks: An application to image forgery detection. In Proceedings of the 5th ACM Workshop on Information Hiding and Multimedia Security, Philadelphia, PA, USA, 20–22 June 2017; pp. 159–164. [Google Scholar]

- Nguyen, H.H.; Fang, F.; Yamagishi, J.; Echizen, I. Multi-task learning for detecting and segmenting manipulated facial images and videos. arXiv 2019, arXiv:1906.06876. [Google Scholar]

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 20, 2018, Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11. [Google Scholar]

- Feng, S.; Fan, Y.; Tang, Y.; Cheng, H.; Zhao, C.; Zhu, Y.; Cheng, C. A Change Detection Method Based on Multi-Scale Adaptive Convolution Kernel Network and Multimodal Conditional Random Field for Multi-Temporal Multispectral Images. Remote Sens. 2022, 14, 5368. [Google Scholar] [CrossRef]

- D’Amiano, L.; Cozzolino, D.; Poggi, G.; Verdoliva, L. A patchmatch-based dense-field algorithm for video copy–move detection and localization. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 669–682. [Google Scholar] [CrossRef]

- Bestagini, P.; Milani, S.; Tagliasacchi, M.; Tubaro, S. Local tampering detection in video sequences. In Proceedings of the 2013 IEEE 15th International Workshop on Multimedia Signal Processing (MMSP), Pula, Italy, 30 September–2 October 2013; pp. 488–493. [Google Scholar]

- Gironi, A.; Fontani, M.; Bianchi, T.; Piva, A.; Barni, M. A video forensic technique for detecting frame deletion and insertion. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 6226–6230. [Google Scholar]

- Li, Y.; Yang, X.; Sun, P.; Qi, H.; Lyu, S. Celeb-df: A large-scale challenging dataset for deepfake forensics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3207–3216. [Google Scholar]

- Nick, D.; Andrew, J. Contributing Data to Deepfake Detection Research. Google AI Blog, 24 September 2019. [Google Scholar]

- Dolhansky, B.; Howes, R.; Pflaum, B.; Baram, N.; Ferrer, C.C. The deepfake detection challenge (dfdc) preview dataset. arXiv 2019, arXiv:1910.08854. [Google Scholar]

- Zhou, T.; Wang, W.; Liang, Z.; Shen, J. Face forensics in the wild. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5778–5788. [Google Scholar]

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017. [Google Scholar]

- Korshunov, P.; Marcel, S. Improving Generalization of Deepfake Detection With Data Farming and Few-Shot Learning. IEEE Trans. Biom. Behav. Identity Sci. 2022, 4, 386–397. [Google Scholar] [CrossRef]

- Thrun, S.; Pratt, L. Learning to learn: Introduction and overview. In Learning to Learn; Springer: Berlin/Heidelberg, Germany, 1998; pp. 3–17. [Google Scholar]

- Zakharov, E.; Shysheya, A.; Burkov, E.; Lempitsky, V. Few-shot adversarial learning of realistic neural talking head models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9459–9468. [Google Scholar]

- Zhang, H.; Zhang, Y.; Zhan, L.M.; Chen, J.; Shi, G.; Wu, X.M.; Lam, A. Effectiveness of Pre-training for Few-shot Intent Classification. arXiv 2021, arXiv:2109.05782. [Google Scholar]

- Flennerhag, S.; Schroecker, Y.; Zahavy, T.; van Hasselt, H.; Silver, D.; Singh, S. Bootstrapped meta-learning. arXiv 2021, arXiv:2109.04504. [Google Scholar]

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 1126–1135. [Google Scholar]

- Alet, F.; Schneider, M.F.; Lozano-Perez, T.; Kaelbling, L.P. Meta-learning curiosity algorithms. arXiv 2020, arXiv:2003.05325. [Google Scholar]

- Flennerhag, S.; Rusu, A.A.; Pascanu, R.; Visin, F.; Yin, H.; Hadsell, R. Meta-learning with warped gradient descent. arXiv 2019, arXiv:1909.00025. [Google Scholar]

- Zhong, Q.; Chen, L.; Qian, Y. Few-shot learning for remote sensing image retrieval with maml. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 2446–2450. [Google Scholar]

- Jamal, M.A.; Qi, G.J. Task agnostic meta-learning for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 11719–11727. [Google Scholar]

- Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-sgd: Learning to learn quickly for few-shot learning. arXiv 2017, arXiv:1707.09835. [Google Scholar]

- Antoniou, A.; Edwards, H.; Storkey, A. How to train your MAML. arXiv 2018, arXiv:1810.09502. [Google Scholar]

- Raghu, A.; Raghu, M.; Bengio, S.; Vinyals, O. Rapid learning or feature reuse? towards understanding the effectiveness of maml. arXiv 2019, arXiv:1909.09157. [Google Scholar]

- Zhang, Y.; Li, W.; Zhang, M.; Wang, S.; Tao, R.; Du, Q. Graph information aggregation cross-domain few-shot learning for hyperspectral image classification. IEEE Trans. Neural Netw. Learn. Syst. 2022. [Google Scholar] [CrossRef] [PubMed]

- Gao, N.; Ziesche, H.; Vien, N.A.; Volpp, M.; Neumann, G. What Matters For Meta-Learning Vision Regression Tasks? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 14776–14786. [Google Scholar]

- Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 12–14 December 2016; pp. 234–244. [Google Scholar]

- Taha, A.A.; Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 2015, 15, 1–28. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cozzolino, D.; Thies, J.; Rössler, A.; Riess, C.; Nießner, M.; Verdoliva, L. Forensictransfer: Weakly-supervised domain adaptation for forgery detection. arXiv 2018, arXiv:1812.02510. [Google Scholar]

| Testing | Segmentation | Classification | |||

|---|---|---|---|---|---|

| Task | IoU | acc-Pixel | AUC | acc (t = 0.2) | acc (t = 0.3) |

| meta K = 0 | 0.565 | 0.729 | 0.737 | 0.666 | 0.677 |

| meta K = 1 | 0.600 | 0.747 | 0.750 | 0.685 | 0.683 |

| no meta K = 1 | 0.264 | 0.704 | 0.539 | 0.533 | 0.532 |

| meta K = 0 | 0.576 | 0.899 | 0.729 | 0.647 | 0.507 |

| meta K = 1 | 0.795 | 0.890 | 0.742 | 0.704 | 0.525 |

| no meta K = 1 | 0.375 | 0.388 | 0.498 | 0.499 | 0.500 |

| meta K = 0 | 0.410 | 0.851 | 0.495 | 0.482 | 0.491 |

| meta K = 1 | 0.516 | 0.839 | 0.503 | 0.500 | 0.492 |

| no meta K = 1 | 0.254 | 0.584 | 0.493 | 0.494 | 0.494 |

| meta K = 0 | 0.458 | 0.862 | 0.623 | 0.557 | 0.516 |

| meta K = 1 | 0.485 | 0.870 | 0.650 | 0.582 | 0.517 |

| no meta K = 1 | 0.358 | 0.587 | 0.530 | 0.518 | 0.519 |

| Manipulation | Classification (Accuracy) | Segmentation (acc-Pixel) | ||||||

|---|---|---|---|---|---|---|---|---|

| Method | ||||||||

| FT_Res [38] | 0.647 | 0.535 | - | - | - | - | - | - |

| FT [38] | 0.621 | 0.523 | - | - | - | - | - | - |

| Deeper_FT [10] | 0.512 | 0.534 | - | - | - | - | - | - |

| MT_Old [10] | 0.537 | 0.568 | - | - | 0.701 | 0.842 | - | - |

| No_Recon [10] | 0.519 | 0.549 | - | - | 0.704 | 0.848 | - | - |

| MT_New [10] | 0.523 | 0.540 | - | - | 0.703 | 0.847 | - | - |

| Proposed method | 0.666 | 0.482 | 0.647 | 0.557 | 0.729 | 0.851 | 0.899 | 0.862 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, Y.-K.; Yen, T.-Y. A Meta-Learning Approach for Few-Shot Face Forgery Segmentation and Classification. Sensors 2023, 23, 3647. https://doi.org/10.3390/s23073647

Lin Y-K, Yen T-Y. A Meta-Learning Approach for Few-Shot Face Forgery Segmentation and Classification. Sensors. 2023; 23(7):3647. https://doi.org/10.3390/s23073647

Chicago/Turabian StyleLin, Yih-Kai, and Ting-Yu Yen. 2023. "A Meta-Learning Approach for Few-Shot Face Forgery Segmentation and Classification" Sensors 23, no. 7: 3647. https://doi.org/10.3390/s23073647