An Automotive LiDAR Performance Test Method in Dynamic Driving Conditions

Abstract

1. Introduction

- We present a vehicle-level performance evaluation pipeline consisting of a schematic diagram of the test vehicle, the test metrics and procedure, and the environmental factors.

- We present performance test results for an automotive-grade LiDAR sensor, both in a real road driving scenario and in an environmental simulation based on time-series measurements collected by real road fleets in harsh weather conditions.

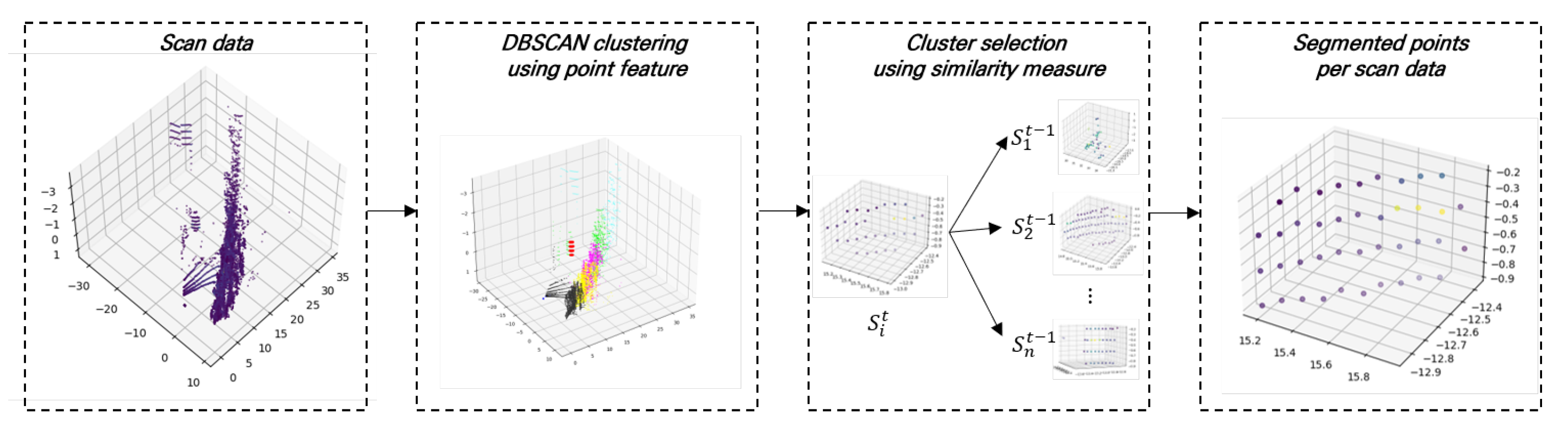

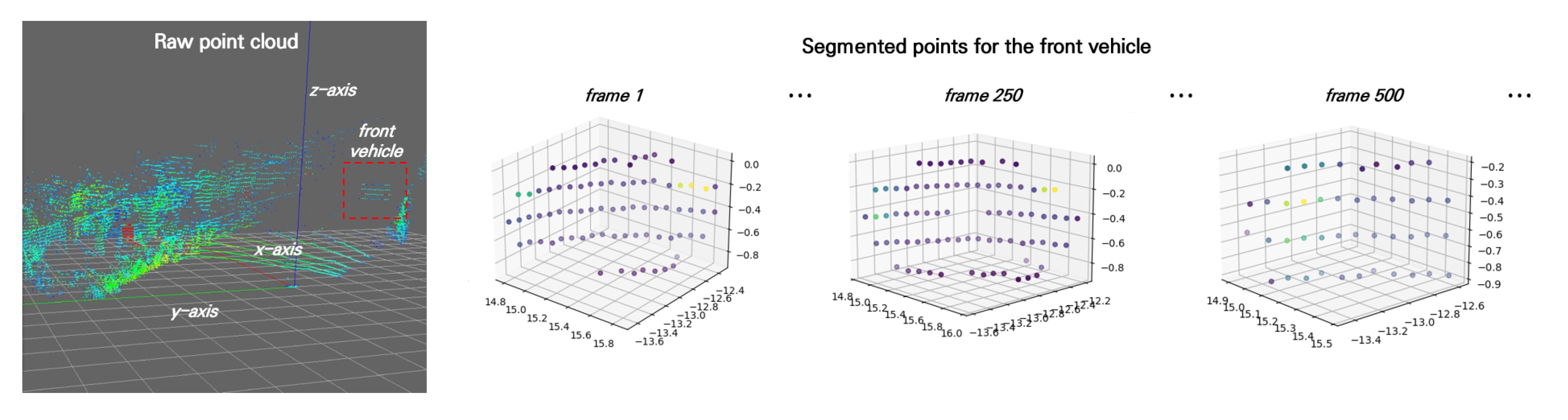

- We propose a spatio-temporal point segmentation algorithm, built on a density-based unsupervised clustering algorithm, that can be used in dynamic test scenarios (e.g., feature extraction from moving objects such as a car).
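The idea behind the last contribution can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that points from consecutive scans are embedded as (x, y, z, scaled time) and clustered with a plain DBSCAN, so that a moving target forms one dense cluster across frames. The function names `dbscan` and `segment_spatiotemporal`, the parameters `eps`, `min_pts`, `time_scale` and `frame_dt`, and the synthetic data are all hypothetical.

```python
import math


def dbscan(points, eps, min_pts):
    """Textbook DBSCAN: return a cluster id per point, or -1 for noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Brute-force range query; includes the point itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border point
            if labels[j] is not None:
                continue  # already assigned, do not expand again
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:  # j is a core point, keep growing
                queue.extend(nbrs)
    return labels


def segment_spatiotemporal(frames, frame_dt, eps=0.5, min_pts=4, time_scale=1.0):
    """Cluster points from several scans in (x, y, z, time_scale * t) space.

    frames: list of scans, each a list of (x, y, z) tuples (assumed layout).
    Returns a list of (frame_index, point, label) triples.
    """
    embedded, index = [], []
    for k, frame in enumerate(frames):
        for p in frame:
            embedded.append((p[0], p[1], p[2], time_scale * k * frame_dt))
            index.append((k, p))
    labels = dbscan(embedded, eps, min_pts)
    return [(k, p, lab) for (k, p), lab in zip(index, labels)]


# Synthetic example (made-up values): a small target moving 0.1 m per scan,
# plus one isolated stray return in the first scan.
car = [(0.0, 0.0), (0.2, 0.0), (0.0, 0.2), (0.2, 0.2), (0.1, 0.1)]
frames = []
for k in range(3):
    frame = [(10.0 + 0.1 * k + dx, dy, 0.0) for dx, dy in car]
    if k == 0:
        frame.append((50.0, 20.0, 0.0))
    frames.append(frame)

result = segment_spatiotemporal(frames, frame_dt=0.1)
```

In this sketch `time_scale` trades spatial distance against temporal distance; a larger value keeps clusters from merging across distant frames. The brute-force neighbor search is quadratic, so a real point cloud would need a spatial index.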

2. Related Work

2.1. Lidar Performance Evaluation in Adverse Weather Conditions

2.2. Lidar Performance Test in Dynamic Scene

2.3. Lidar Quality Assessment Based on Simulation

3. Methodology

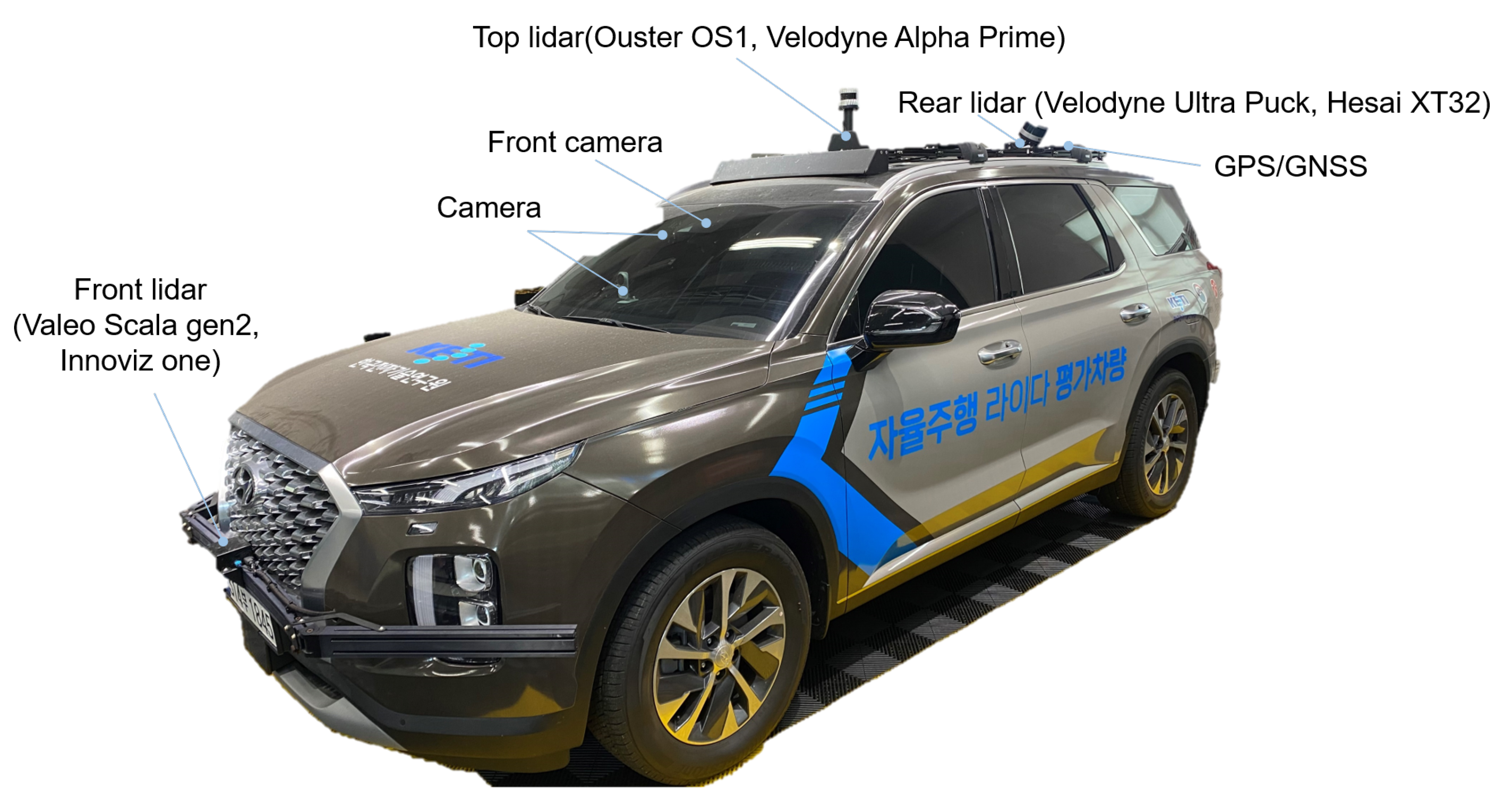

3.1. Test Vehicle

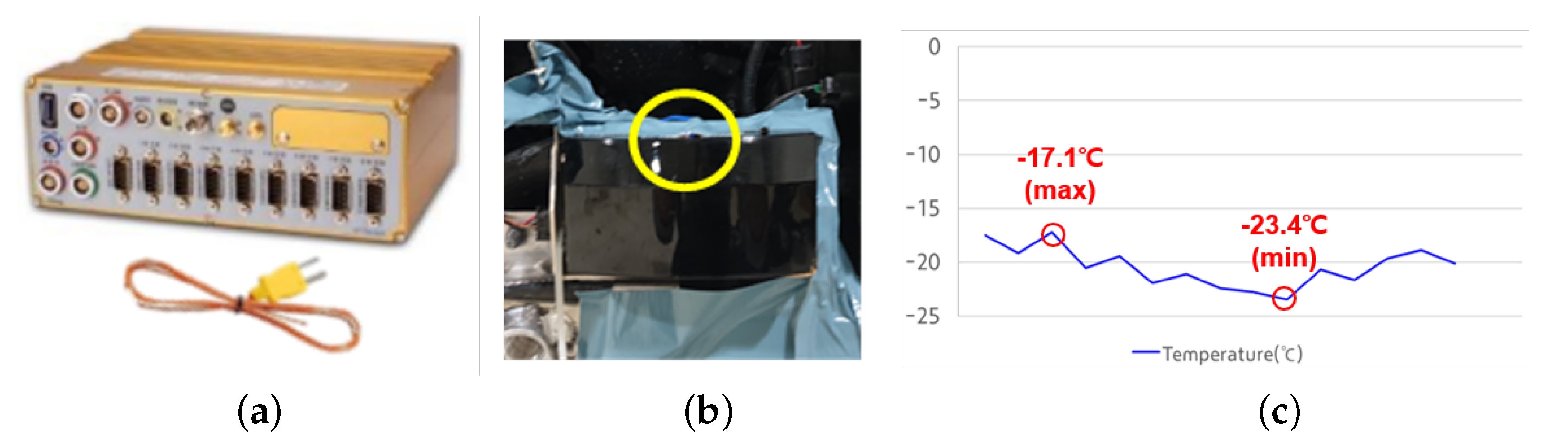

3.2. Environmental Data Acquisition

3.3. Dynamic Target Feature Extraction Algorithm

3.4. Test Metrics

3.4.1. Number of Points

3.4.2. Intensity

3.4.3. Scan Frequency

3.4.4. Field of View and Angular Resolution

3.4.5. Number of Noise Points

3.4.6. Range Accuracy/Precision

4. Results

4.1. Vibration Test

4.2. Strong Sunlight Test

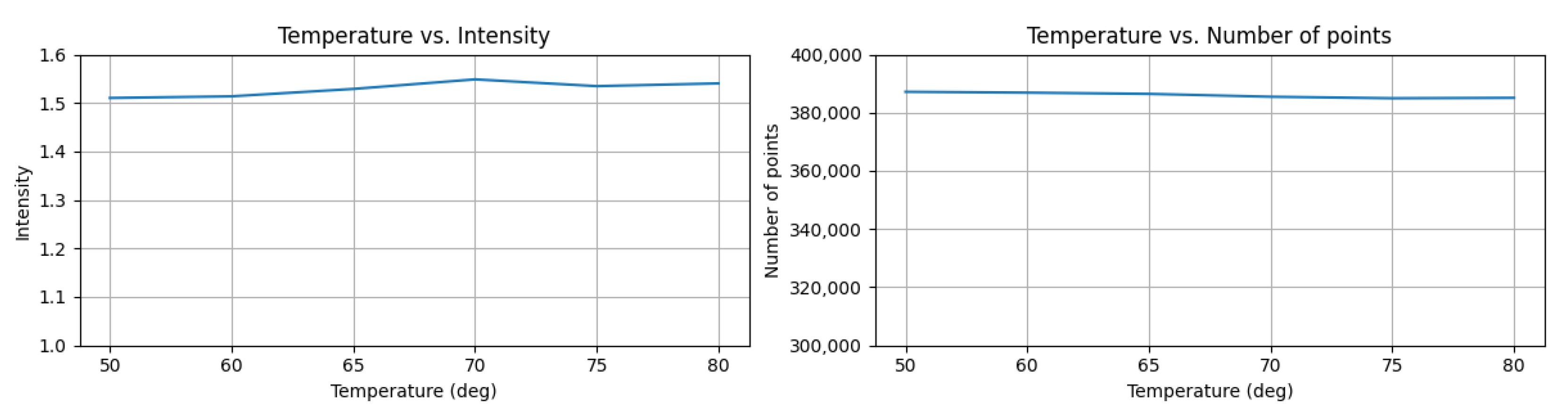

4.3. High/Low Ambient Temperature Test

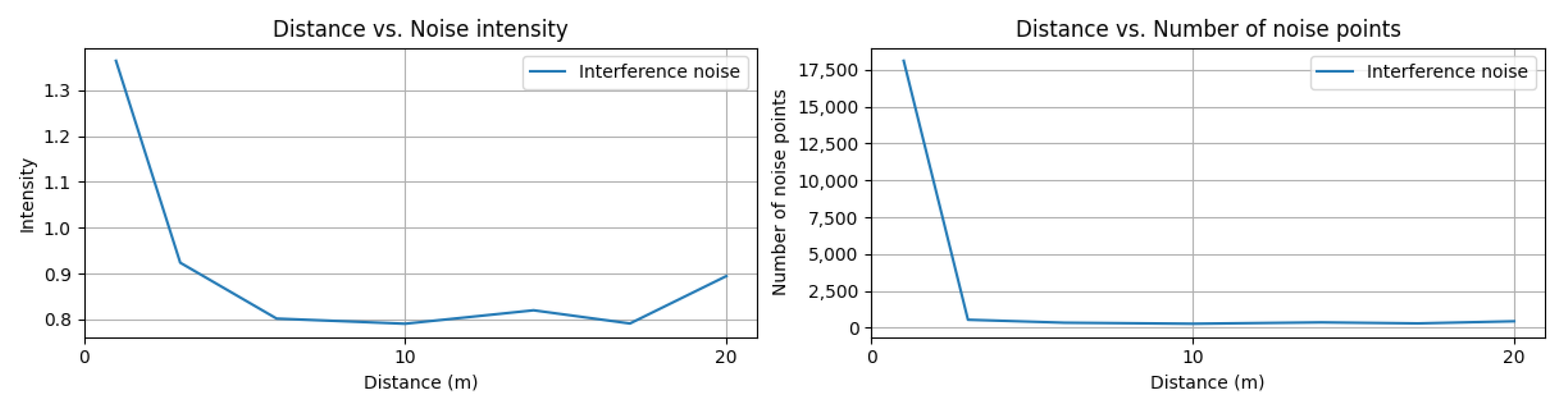

4.4. Interference by External Laser Source

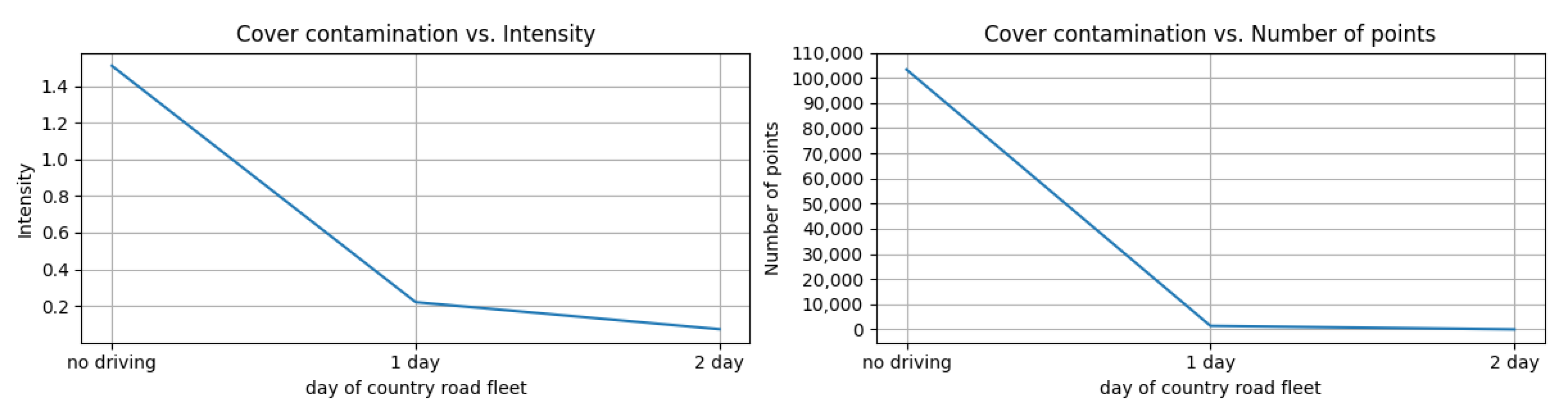

4.5. Cover Contamination Test

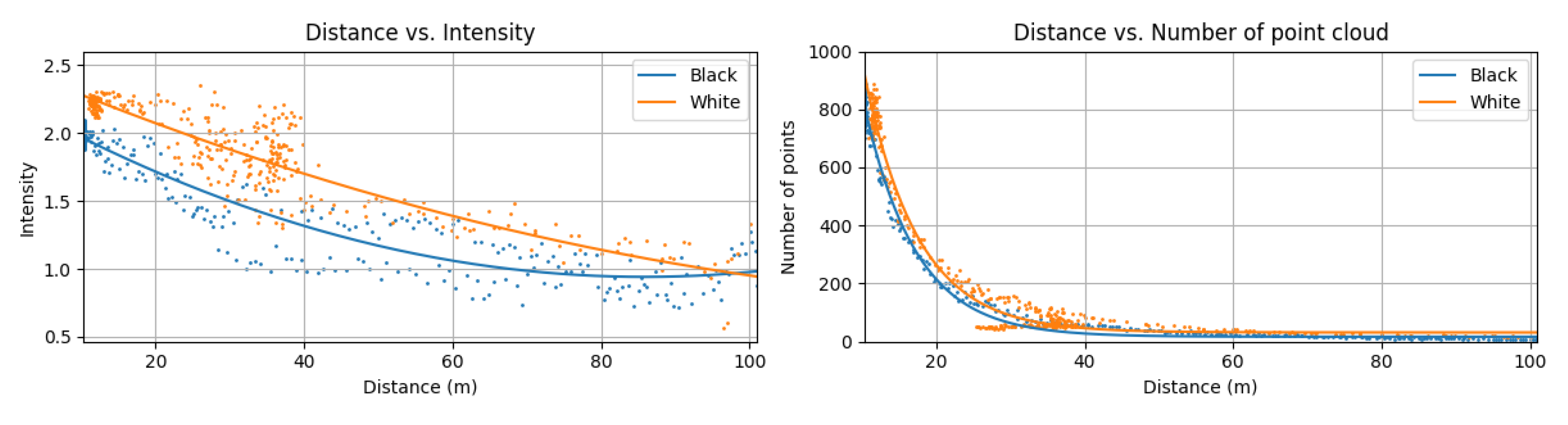

4.6. Color Change Test

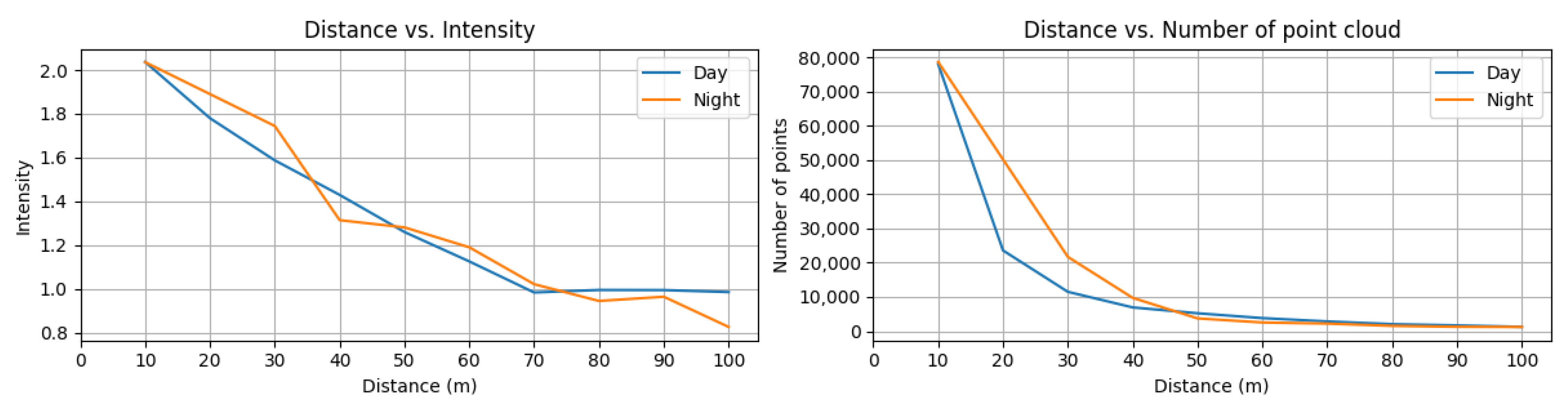

4.7. Day and Night Transition Test

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type | Environmental Condition | Test Procedure | Test Results |
|---|---|---|---|
| Simulation with road fleet data (Section 3.2) | Cover contamination | Mud was spread on the cover of the DUT based on the visibility of a front LiDAR sensor from an off-road fleet. A reference target was placed 5 m in front of the DUT. | The signal intensity and the number of points decreased when the cover of the LiDAR was obscured by mud contamination. |
| Simulation with road fleet data (Section 3.2) | Strong sunlight | Direct sunlight was applied to the DUT while it captured points from a reference target. The illumination flux was set according to the maximum sunlight measured on a desert surface in Nevada. | The mean intensity decreased due to sunlight, but the number of points from the reference target remained the same. The intensity drop rate was 47% at the highest illumination flux compared to the zero-sunlight case. |
| Simulation with road fleet data (Section 3.2) | High temperature | Ambient temperature was simulated in a large temperature chamber capable of containing a test car, using temperature profiles captured in an extremely hot area in Nevada, USA. | As the temperature increased, the signal strength increased by 19.8% compared to the initial stage. |
| Simulation with road fleet data (Section 3.2) | Low temperature | Ambient temperature was simulated in a large temperature chamber capable of containing a test car, using temperature profiles captured in an extremely cold area in Alaska, USA. | At low temperatures, the signal intensity did not change significantly. |
| Performance test in dynamic conditions | Vibration | The force applied to the DUT and the signal variations of the DUT were recorded using the data acquisition toolbox and the driving feature extraction algorithm (Section 3.3). | The z-axis position and the intensity of a front vehicle drifted strongly in response to road vibrations; a high deviation was measured in both. |
| Performance test in dynamic conditions | Interference | Interference noise was captured from the front LiDAR of an oncoming vehicle while that vehicle was moved from 0 to 20 m away from the ego-vehicle. | Interference noise might occur at a very short distance (e.g., 1 m). It decreased drastically as the distance to the interference source increased. |
| Performance test in dynamic conditions | Color change of front car (reflectivity of a target) | The front car was driven on a straight road from 0 to 100 m away from the ego-vehicle. A black car and a white car, which have different reflectivities at the 905 nm wavelength, were used. | A 33.2% drop rate was measured in the intensity curve. There was no significant change in the number of points. |
| Performance test in dynamic conditions | Day and night transition | The front car was driven on a straight road from 0 to 100 m away from the ego-vehicle in day and night conditions. | The signal intensity decreased under day conditions compared to night conditions. |
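The table reports results as a number of points and as a relative change in intensity. The sketch below shows one plausible way to compute such figures. It assumes the drop rate is the relative decrease of the mean intensity with respect to a reference condition, which the table does not define explicitly. The helper names `summarize` and `drop_rate_percent`, the `(x, y, z, intensity)` layout, and the sample values are hypothetical and are not measurements from the study.

```python
from statistics import mean


def summarize(target_points):
    """Return the point count and mean intensity of one target.

    target_points: list of (x, y, z, intensity) tuples (assumed layout).
    """
    return {
        "num_points": len(target_points),
        "mean_intensity": mean(p[3] for p in target_points),
    }


def drop_rate_percent(reference, measured):
    """Relative decrease of `measured` with respect to `reference`, in percent."""
    if reference == 0:
        raise ValueError("reference intensity must be non-zero")
    return 100.0 * (reference - measured) / reference


# Made-up returns from the same target under two conditions.
baseline = [(5.0, 0.0, 0.0, 80.0), (5.0, 0.1, 0.0, 80.0),
            (5.0, 0.0, 0.1, 80.0), (5.0, 0.1, 0.1, 80.0)]
exposed = [(5.0, 0.0, 0.0, 60.0), (5.0, 0.1, 0.0, 60.0),
           (5.0, 0.0, 0.1, 60.0), (5.0, 0.1, 0.1, 60.0)]

ref = summarize(baseline)
cur = summarize(exposed)
rate = drop_rate_percent(ref["mean_intensity"], cur["mean_intensity"])
same_count = ref["num_points"] == cur["num_points"]
```

Keeping the point count and the intensity change as separate figures mirrors how the table reports them: a target can keep the same number of points while its mean intensity drops.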

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Park, J.; Cho, J.; Lee, S.; Bak, S.; Kim, Y. An Automotive LiDAR Performance Test Method in Dynamic Driving Conditions. Sensors 2023, 23, 3892. https://doi.org/10.3390/s23083892
