A Review of Successes and Impeding Challenges of IoT-Based Insect Pest Detection Systems for Estimating Agroecosystem Health and Productivity of Cotton

Abstract

1. Introduction

2. Scope of the Review

3. Pest Recognition Features

4. Detected Cotton Pest Species, Approach, and Performance Indicator

4.1. AI Performance Indicators

4.2. Detected Cotton Pest Species

5. Tested AI Models for Pest Detection

6. Intelligent Sensor Systems for Monitoring and Counting Cotton Pests

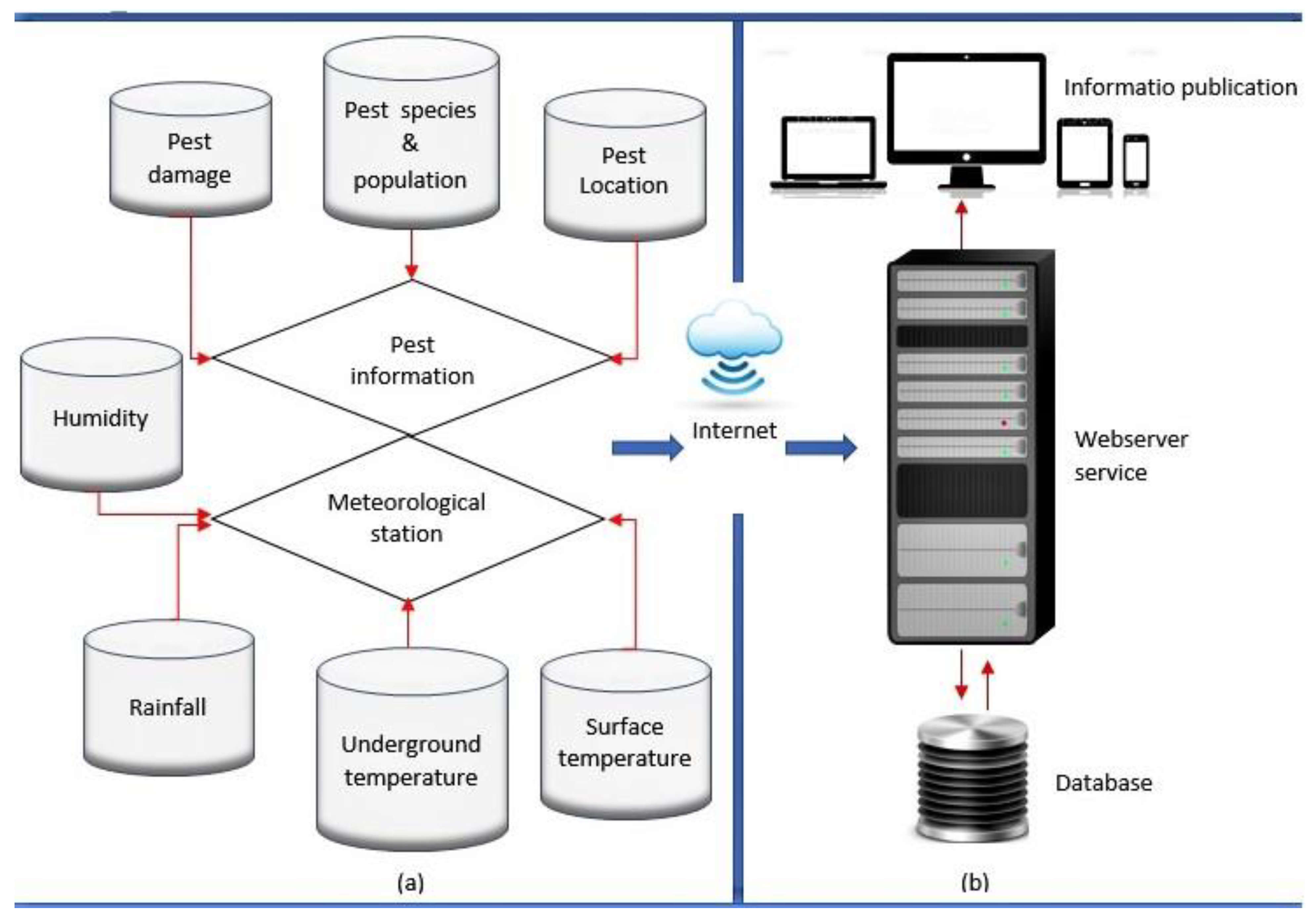

6.1. System Components of Remote Monitoring Devices

6.2. Counting of Pests on Leaves

6.3. Counting Pests on Sticky Traps

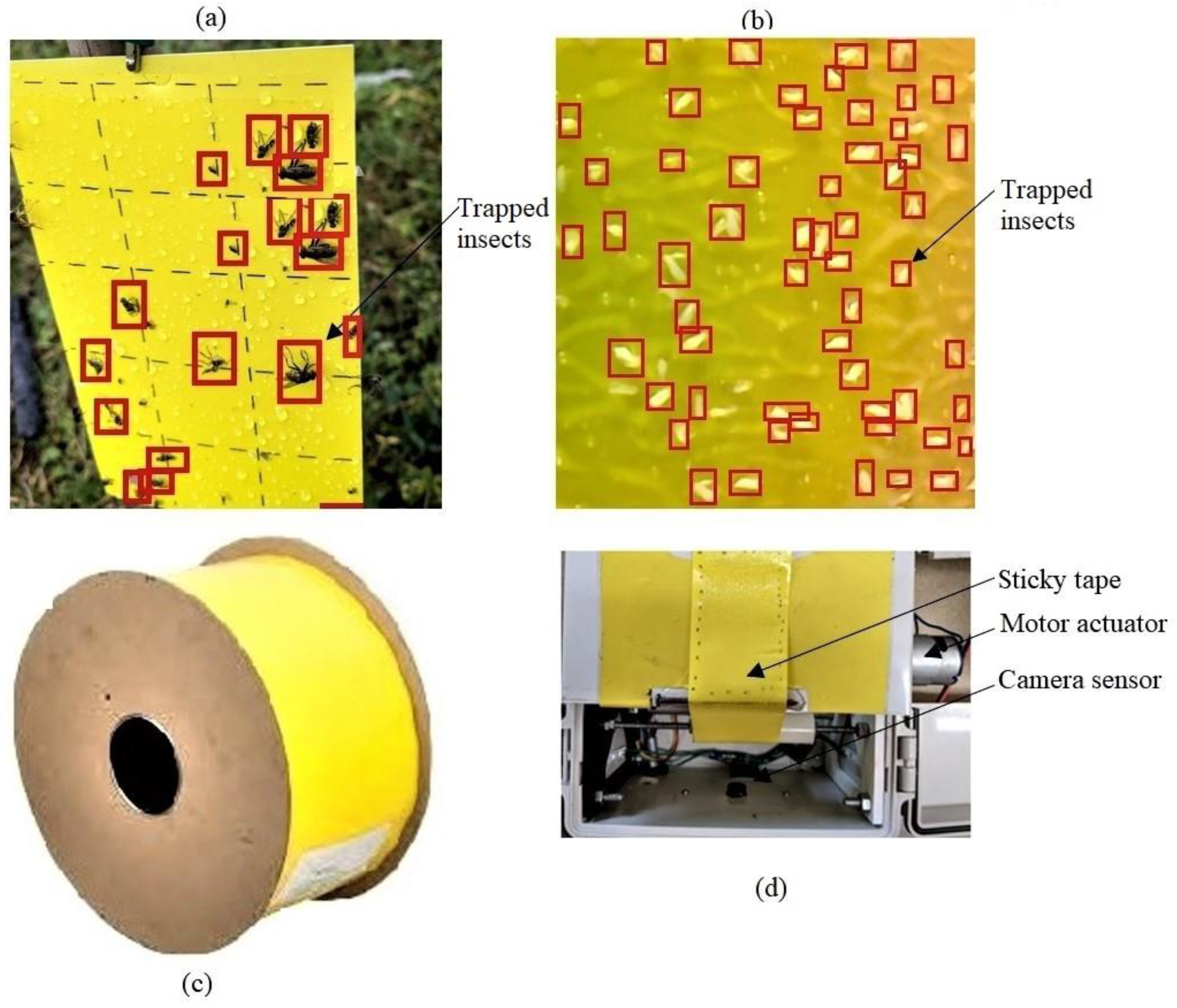

6.4. Counting Pests on Paper

7. Artificial Intelligence in Beneficial Insects

8. Challenges to the Implementation of IoT-Filled Devices

9. Urgent Need for IoT towards Worldwide Major Cotton Pests

10. Recommendations for Future Research

11. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Networks |
| SVM | Support Vector Machine |
| KNN | K-Nearest Neighbors |
| ANN | Artificial Neural Networks |
| RNN | Recurrent Neural Networks |
| DBN | Deep Belief Network |
| DBM | Deep Boltzmann Machine |
| LBP-SVM | Local Binary Patterns with Support Vector Machine |
| Faster R-CNN | Faster Region-based Convolutional Neural Network |
| ResNet | Deep Residual Network |
| DCNN | Deep Convolutional Neural Network |
| HD-CNN | Hierarchical Deep Convolutional Neural Network |
| SegNet | Semantic Segmentation Network |
| SSD MobileNet | Single Shot Multi-Box Detector MobileNet |
| BP Neural Network | Back Propagation Neural Networks |
| Bi-Directional RNN | Bi-directional Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| GNSS | Global Navigation Satellite System |

References

- Alves, A.N.; Souza, W.S.; Borges, D.L. Cotton pests classification in field-based images using deep residual networks. Comput. Electron. Agric. 2020, 174, 105488.

- Gholve, V.M.; Jogdand, S.M.; Jagtap, G.P.; Dey, U. In-vitro evaluation of fungicides, bioagents and aqueous leaf extracts against Alternaria leaf blight of cotton. Sci. J. Vet. Adv. 2012, 1, 12–21.

- Ahmad, M.; Muhammad, W.; Sajjad, A. Ecological Management of Cotton Insect Pests. In Cotton Production and Uses; Springer: Singapore, 2020; pp. 213–238.

- Thenmozhi, K.; Reddy, U.S. Crop pest classification based on deep convolutional neural network and transfer learning. Comput. Electron. Agric. 2019, 164, 104906.

- Luttrell, R.G.; Fitt, G.P.; Ramalho, F.S.; Sugonyaev, E.S. Cotton pest management: Part 1. A worldwide perspective. Annu. Rev. Entomol. 1994, 39, 517–526.

- Dhananjayan, V.; Ravichandran, B. Occupational health risk of farmers exposed to pesticides in agricultural activities. Curr. Opin. Environ. Sci. Health 2018, 4, 31–37.

- Shah, N.; Jain, S. Detection of disease in cotton leaf using artificial neural network. In Proceedings of the 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 4–6 February 2019; pp. 473–476.

- Machado, A.V.; Potin, D.M.; Torres, J.B.; Torres, C.S.S. Selective insecticides secure natural enemies action in cotton pest management. Ecotoxicol. Environ. Saf. 2019, 184, 109669.

- Van Goethem, S.; Verwulgen, S.; Goethijn, F.; Steckel, J. An IoT solution for measuring bee pollination efficacy. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 837–841.

- Calvo-Agudo, M.; Tooker, J.F.; Dicke, M.; Tena, A. Insecticide-contaminated honeydew: Risks for beneficial insects. Biol. Rev. 2022, 97, 664–678.

- Karar, M.E.; Alsunaydi, F.; Albusaymi, S.; Alotaibi, S. A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alex. Eng. J. 2021, 60, 4423–4432.

- Geetharamani, G.; Pandian, A. Identification of plant leaf diseases using a nine-layer deep convolutional neural network. Comput. Electr. Eng. 2019, 76, 323–338.

- Martin, V.; Thonnat, M. A Cognitive Vision Approach to Image Segmentation. Available online: https://hal.inria.fr/inria-00499604 (accessed on 3 October 2022).

- Tenório, G.L.; Martins, F.F.; Carvalho, T.M.; Leite, A.C.; Figueiredo, K.; Vellasco, M.; Caarls, W. Comparative Study of Computer Vision Models for Insect Pest Identification in Complex Backgrounds. In Proceedings of the 2019 12th International Conference on Developments in eSystems Engineering (DeSE), Kazan, Russia, 7–10 October 2019; pp. 551–556.

- Cho, J.; Choi, J.; Qiao, M.; Ji, C.W.; Kim, H.Y.; Uhm, K.B.; Chon, T.S. Automatic identification of whiteflies, aphids and thrips in greenhouse based on image analysis. Red 2007, 346, 244.

- Boissard, P.; Martin, V.; Moisan, S. A cognitive vision approach to early pest detection in greenhouse crops. Comput. Electron. Agric. 2008, 62, 81–93.

- Khosla, G.; Rajpal, N.; Singh, J. Evaluation of Euclidean and Manhanttan metrics in content based image retrieval system. In Proceedings of the 2015 2nd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 11–13 March 2015; pp. 12–18.

- Zhang, J.; Qi, L.; Ji, R.; Yuan, X.; Li, H. Classification of cotton blind stinkbug based on Gabor wavelet and color moments. Trans. Chin. Soc. Agric. Eng. 2012, 28, 133–138.

- Vinayak, V.; Jindal, S. CBIR system using color moment and color auto-Correlogram with block truncation coding. Int. J. Comput. Appl. 2017, 161, 1–7.

- Rasli, R.M.; Muda TZ, T.; Yusof, Y.; Bakar, J.A. Comparative analysis of content based image retrieval techniques using color histogram: A case study of glcm and k-means clustering. In Proceedings of the 2012 Third International Conference on Intelligent Systems Modelling and Simulation, Kota Kinabalu, Malaysia, 8–10 February 2012; pp. 283–286.

- Gassoumi, H.; Prasad, N.R.; Ellington, J.J. Neural network-based approach for insect classification in cotton ecosystems. In International Conference on Intelligent Technologies; InTech: Bangkok, Thailand, 2000; Volume 7.

- Kandalkar, G.; Deorankar, A.V.; Chatur, P.N. Classification of agricultural pests using dwt and back propagation neural networks. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 4034–4037.

- Jige, M.N.; Ratnaparkhe, V.R. Population estimation of whitefly for cotton plant using image processing approach. In Proceedings of the 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 19–20 May 2017; pp. 487–491.

- Lin, Y.B.; Lin, Y.W.; Lin, J.Y.; Hung, H.N. SensorTalk: An IoT device failure detection and calibration mechanism for smart farming. Sensors 2019, 19, 4788.

- Lin, Y.W.; Lin, Y.B.; Hung, H.N. CalibrationTalk: A farming sensor failure detection and calibration technique. IEEE Internet Things J. 2020, 8, 6893–6903.

- Shi, W.; Li, N. Research on Farmland Pest Image Recognition Based on Target Detection Algorithm. In CS & IT 2020: Computer Science & Information Technology Conference Proceedings; CS & IT-CSCP; 2020; Volume 10, pp. 111–117. Available online: https://aircconline.com/csit/papers/vol10/csit100210.pdf (accessed on 8 November 2022).

- Zekiwos, M.; Bruck, A. Deep learning-based image processing for cotton leaf disease and pest diagnosis. J. Electr. Comput. Eng. 2021, 2021, 9981437.

- Dalmia, A.; White, J.; Chaurasia, A.; Agarwal, V.; Jain, R.; Vora, D.; Dhame, B.; Dharmaraju, R.; Panicker, R. Pest Management in Cotton Farms: An AI-System Case Study from the Global South. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 3119–3127.

- Li, Y.; Yang, J. Few-shot cotton pest recognition and terminal realization. Comput. Electron. Agric. 2020, 169, 105240.

- Long, D.; Grundy, P.; McCarthy, A. Machine Vision App for Automated Cotton Insect Counting: Initial Development and First Results. Available online: https://core.ac.uk/download/pdf/335012855.pdf (accessed on 8 November 2022).

- McCarthy, A.; Long, D.; Grundy, P. Smartphone Apps under Development to Aid Pest Monitoring; GRDC Update; Australian Government, Grains Research and Development Corporation: Kingston, Australia, 2020; pp. 180–184. Available online: https://grdc.com.au/__data/assets/pdf_file/0028/430858/GRDC-Update-Paper-Long-Derek-July-2020.pdf (accessed on 8 November 2022).

- Parab, C.U.; Mwitta, C.; Hayes, M.; Schmidt, J.M.; Riley, D.; Fue, K.; Bhandarkar, S.; Rains, G.C. Comparison of Single-Shot and Two-Shot Deep Neural Network Models for Whitefly Detection in IoT Web Application. AgriEngineering 2022, 4, 507–522.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Wason, R. Deep learning: Evolution and expansion. Cogn. Syst. Res. 2018, 52, 701–708.

- Kumbhar, S.; Nilawar, A.; Patil, S.; Mahalakshmi, B.; Nipane, M. Farmer buddy-web based cotton leaf disease detection using CNN. Int. J. Appl. Eng. Res. 2019, 14, 2662–2666.

- Zhang, Y.D.; Dong, Z.; Chen, X.; Jia, W.; Du, S.; Muhammad, K.; Wang, S.H. Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation. Multimed. Tools Appl. 2019, 78, 3613–3632.

- Wang, X.; Wang, X.; Huang, W.; Zhang, S. Fine-grained recognition of crop pests based on capsule network with attention mechanism. In International Conference on Intelligent Computing; Springer: Cham, Switzerland, 2021; pp. 465–474.

- Xie, C.; Zhang, J.; Li, R.; Li, J.; Hong, P.; Xia, J.; Chen, P. Automatic classification for field crop insects via multiple-task sparse representation and multiple-kernel learning. Comput. Electron. Agric. 2015, 119, 123–132.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the 2017 Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Kasinathan, T.; Singaraju, D.; Uyyala, S.R. Insect classification and detection in field crops using modern machine learning techniques. Inf. Process. Agric. 2021, 8, 446–457.

- Jian, Y.; Peng, S.; Zhenpeng, L.; Yu, Z.; Chenggui, Z.; Zizhong, Y. Automatic Classification of Spider Images in Natural Background. In Proceedings of the 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 19–21 July 2019; pp. 158–164.

- Zhong, Y.; Gao, J.; Lei, Q.; Zhou, Y. A vision-based counting and recognition system for flying insects in intelligent agriculture. Sensors 2018, 18, 1489.

- Buschbacher, K.; Ahrens, D.; Espeland, M.; Steinhage, V. Image-based species identification of wild bees using convolutional neural networks. Ecol. Inform. 2020, 55, 101017.

- Ascolese, R.; Gargiulo, S.; Pace, R.; Nappa, P.; Griffo, R.; Nugnes, F.; Bernardo, U. E-traps: A valuable monitoring tool to be improved. EPPO Bull. 2022, 52, 175–184.

- Sun, Y.; Cheng, H.; Cheng, Q.; Zhou, H.; Li, M.; Fan, Y.; Shan, G.; Damerow, L.; Lammers, P.S.; Jones, S.B. A smart-vision algorithm for counting whiteflies and thrips on sticky traps using two-dimensional Fourier transform spectrum. Biosyst. Eng. 2017, 153, 82–88.

- Tian, M.; Lu, P.; Liu, X.; Lu, X. The Cotton Aphids Counting System with Super Resolution and Compressive Sensing. Sens. Imaging 2021, 22, 27.

- Wang, Z.; Wang, K.; Liu, Z.; Wang, X.; Pan, S. A cognitive vision method for insect pest image segmentation. IFAC-Pap. 2018, 51, 85–89.

- Liu, T.; Chen, W.; Wu, W.; Sun, C.; Guo, W.; Zhu, X. Detection of aphids in wheat fields using a computer vision technique. Biosyst. Eng. 2016, 141, 82–93.

- Qiao, M.; Lim, J.; Ji, C.W.; Chung, B.-K.; Kim, H.-Y.; Uhm, K.-B.; Myung, C.S.; Cho, J.; Chon, T.-S. Density estimation of Bemisia tabaci (Hemiptera: Aleyrodidae) in a greenhouse using sticky traps in conjunction with an image processing system. J. Asia-Pac. Entomol. 2008, 11, 25–29.

- Barros, E.M.; da Silva-Torres CS, A.; Torres, J.B.; Rolim, G.G. Short-term toxicity of insecticides residues to key predators and parasitoids for pest management in cotton. Phytoparasitica 2018, 46, 391–404.

- da Silva, F.L.; Sella ML, G.; Francoy, T.M.; Costa, A.H.R. Evaluating classification and feature selection techniques for honeybee subspecies identification using wing images. Comput. Electron. Agric. 2015, 114, 68–77.

- Zgank, A. IoT-based bee swarm activity acoustic classification using deep neural networks. Sensors 2021, 21, 676.

- Terenzi, A.; Cecchi, S.; Spinsante, S. On the importance of the sound emitted by honey bee hives. Vet. Sci. 2020, 7, 168.

- Zgank, A. Bee swarm activity acoustic classification for an IoT-based farm service. Sensors 2019, 20, 21.

- Júnior TD, C.; Rieder, R.; Di Domênico, J.R.; Lau, D. InsectCV: A system for insect detection in the lab from trap images. Ecol. Inform. 2022, 67, 101516.

- Bayar, B.; Stamm, M.C. Constrained convolutional neural networks: A new approach towards general purpose image manipulation detection. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2691–2706.

- Sze, V.; Chen, Y.H.; Emer, J.; Suleiman, A.; Zhang, Z. Hardware for machine learning: Challenges and opportunities. In Proceedings of the 2017 IEEE Custom Integrated Circuits Conference (CICC), Austin, TX, USA, 30 April–3 May 2017; pp. 1–8.

- Saad MH, M.; Hamdan, N.M.; Sarker, M.R. State of the art of urban smart vertical farming automation system: Advanced topologies, issues and recommendations. Electronics 2021, 10, 1422.

- Ramli, R.M.; Jabbar, W.A. Design and implementation of solar-powered with IoT-Enabled portable irrigation system. Internet Things Cyber-Phys. Syst. 2022, 2, 212–225.

- Kumari, P.; Kaur, P. A survey of fault tolerance in cloud computing. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 1159–1176.

- Ravindranath, L.; Nath, S.; Padhye, J.; Balakrishnan, H. Automatic and scalable fault detection for mobile applications. In Proceedings of the 12th Annual International Conference on Mobile Systems, Applications, and Services, Bretton Woods, NH, USA, 16–19 June 2014; pp. 190–203.

- Tang, Y.; Dananjayan, S.; Hou, C.; Guo, Q.; Luo, S.; He, Y. A survey on the 5G network and its impact on agriculture: Challenges and opportunities. Comput. Electron. Agric. 2021, 180, 105895.

- Darwish, S.M.; El-Dirini, M.N.; Abd El-Moghith, I.A. An adaptive cellular automata scheme for diagnosis of fault tolerance and connectivity preserving in wireless sensor networks. Alex. Eng. J. 2018, 57, 4267–4275.

- Prathiba, S.; Sowvarnica, S. Survey of failures and fault tolerance in cloud. In Proceedings of the 2017 2nd International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 23–24 February 2017; pp. 169–172.

- Sicari, S.; Rizzardi, A.; Grieco, L.A.; Coen-Porisini, A. Security, privacy and trust in Internet of Things: The road ahead. Comput. Netw. 2015, 76, 146–164.

- Del Pozo, S.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Felipe-García, B. Vicarious radiometric calibration of a multispectral camera on board an unmanned aerial system. Remote Sens. 2014, 6, 1918–1937.

- Brewster, C.; Roussaki, I.; Kalatzis, N.; Doolin, K.; Ellis, K. IoT in agriculture: Designing a Europe-wide large-scale pilot. IEEE Commun. Mag. 2017, 55, 26–33.

- Grolinger, K.; L’Heureux, A.; Capretz, M.A.; Seewald, L. Energy forecasting for event venues: Big data and prediction accuracy. Energy Build. 2016, 112, 222–233.

- Aslam, M.Q.; Hussain, A.; Akram, A.; Hussain, S.; Naqvi, R.Z.; Amin, I.; Saeed, M.; Mansoor, S. Cotton Mi-1.2-like Gene: A potential source of whitefly resistance. Gene 2023, 851, 146983.

- Afzal, M.; Saeed, S.; Riaz, H.; Ishtiaq, M.; Rahman, M.H. A critical review of whitefly (Bemisia tabaci gennadius) cryptic species associated with the cotton leaf curl disease. J. Innov. Sci. 2023, 9, 24–43.

- Abbas, G.; Arif, M.J.; Ashfaq, M.; Aslam, M.; Saeed, S. The impact of some environmental factors on the fecundity of Phenacoccus solenopsis Tinsley (Hemiptera: Pseudococcidae): A serious pest of cotton and other crops. Pak. J. Agric. Sci. 2010, 47, 321–325.

- Burbano-Figueroa, O.; Sierra-Monroy, A.; Grandett Martinez, L.; Borgemeister, C.; Luedeling, E. Management of the Boll Weevil (Coleoptera: Curculionidae) in the Colombian Caribbean: A Conceptual Model. J. Integr. Pest Manag. 2021, 12, 15.

- Hamons, K.; Raszick, T.; Perkin, L.; Sword, G.; Suh, C. Cotton Fleahopper Biology and Ecology Relevant to Development of Insect Resistance Management Strategies. Southwest. Entomol. 2021, 46, 1–16.

- Dhir, B.C.; Mohapatra, H.K.; Senapati, B. Assessment of crop loss in groundnut due to tobacco caterpillar, Spodoptera litura (F.). Indian J. Plant Prot. 1992, 20, 215–217.

- Jaleel, W.; Saeed, S.; Naqqash, M.N.; Zaka, S.M. Survey of Bt cotton in Punjab Pakistan related to the knowledge, perception and practices of farmers regarding insect pests. Int. J. Agric. Crop Sci. 2014, 7, 10.

- Crow, W.D.; Catchot, A.L.; Bao, D. Efficacy of Counter on Thrips in Cotton, 2014. Arthropod Manag. Tests 2021, 46, tsaa115.

- Ramalho, F.S.; Fernandes, F.S.; Nascimento AR, B.; Júnior, J.N.; Malaquias, J.B.; Silva, C.A.D. Feeding damage from cotton aphids, Aphis gossypii Glover (Hemiptera: Heteroptera: Aphididae), in cotton with colored fiber intercropped with fennel. Ann. Entomol. Soc. Am. 2012, 105, 20–27.

- Furtado, R.F.; da Silva, F.P.; de Carvalho Lavôr MT, F.; Bleicher, E. Susceptibilidade de cultivares de Gossypium hirsutum L. r. latifolium Hutch a Aphis gossypii Glover. Rev. Ciênc. Agron. 2009, 40, 461–464.

- Bamel, K.; Gulati, R. Biology, population built up and damage potential of red spider mite, Tetranychus urticae Koch (Acari: Tetranychidae) on marigold: A review. J. Entomol. Zool. Stud 2021, 9, 547–552.

- Gupta, S.K. Mite pests of agricultural crops in India, their management and identification. In Mites, Their Identification and Management; CCS HAU: Hisar, India, 2003; pp. 48–61.

- MahaLakshmi, M.S.; Prasad, N.V.V.S.D. Insecticide resistance in field population of cotton leaf hopper, Amrasca devastans (Dist.) in Guntur, Andhra Pradesh, India. Int. J. Curr. Microbiol. App. Sci 2020, 9, 3006–3011.

- Bhosle, B.B.; More, D.G.; Patange, N.R.; Sharma, O.P.; Bambawale, O.M. Efficacy of different seed dressers against early season sucking pest of cotton. Pestic. Res. J. 2009, 21, 75–79.

- Waghmare, V.N.; Venugopalan, M.V.; Nagrare, V.S.; Gawande, S.P.; Nagrale, D.T. Cotton growing in India. In Pest Management in Cotton: A Global Perspective; CABI: Wallingford, UK, 2022; pp. 30–52.

- Nadeem, A.; Tahir, H.M.; Khan, A.A.; Hassan, Z.; Khan, A.M. Species composition and population dynamics of some arthropod pests in cotton fields of irrigated and semi-arid regions of Punjab, Pakistan. Saudi J. Biol. Sci. 2023, 30, 103521.

- Sain, S.K.; Monga, D.; Hiremani, N.S.; Nagrale, D.T.; Kranthi, S.; Kumar, R.; Kranthi, K.R.; Tuteja, O.; Waghmare, V.N. Evaluation of bioefficacy potential of entomopathogenic fungi against the whitefly (Bemisia tabaci Genn.) on cotton under polyhouse and field conditions. J. Invertebr. Pathol. 2021, 183, 107618.

- Krupke, C.H.; Long, E.Y. Intersections between neonicotinoid seed treatments and honey bees. Curr. Opin. Insect Sci. 2015, 10, 8–13.

- Baron, G.L.; Raine, N.E.; Brown, M.J. General and species-specific impacts of a neonicotinoid insecticide on the ovary development and feeding of wild bumblebee queens. Proc. R. Soc. B Biol. Sci. 2017, 284, 20170123.

- Mamoon-ur-Rashid, M.; Abdullah, M.K.K.K.; Hussain, S. Toxic and residual activities of selected insecticides and neem oil against cotton mealybug, Phenacoccus solenopsis Tinsley (Sternorrhyncha: Pseudococcidae) under laboratory and field conditions. Mortality 2011, 10, 100.

- Fand, B.B.; Nagrare, V.S.; Gawande, S.P.; Nagrale, D.T.; Naikwadi, B.V.; Deshmukh, V.; Gokte-Narkhedkar, N.; Waghmare, V.N. Widespread infestation of pink bollworm, Pectinophora gossypiella (Saunders) (Lepidoptera: Gelechidae) on Bt cotton in Central India: A new threat and concerns for cotton production. Phytoparasitica 2019, 47, 313–325.

- Gaikwad, A.B.; Patil, S.D.; Deshmukh, B.A. Mitigation practices followed by cotton growers to control pink bollworm. Young 2019, 15, 12–50.

- Sain, S.K.; Monga, D.; Mohan, M.; Sharma, A.; Beniwal, J. Reduction in seed cotton yield corresponding with symptom severity grades of Cotton Leaf Curl Disease (CLCuD) in Upland Cotton (Gossypium hirsutum L.). Int. J. Curr. Microbiol. Appl. Sci. 2020, 9, 3063–3076.

- Fand, B.B.; Suroshe, S.S. The invasive mealybug Phenacoccus solenopsis Tinsley, a threat to tropical and subtropical agricultural and horticultural production systems–a review. Crop Prot. 2015, 69, 34–43.

- Younas, H.; Razaq, M.; Farooq, M.O.; Saeed, R. Host plants of Phenacoccus solenopsis (Tinsley) affect parasitism of Aenasius bambawalei (Hayat). Phytoparasitica 2022, 50, 669–681.

- Allen, K.C.; Luttrell, R.G.; Sappington, T.W.; Hesler, L.S.; Papiernik, S.K. Frequency and abundance of selected early-season insect pests of cotton. J. Integr. Pest Manag. 2018, 9, 20.

- Stewart, S.D.; Lorenz, G.M.; Catchot, A.L.; Gore, J.; Cook, D.; Skinner, J.; Mueller, T.C.; Johnson, D.R.; Zawislak, J.; Barber, J. Potential exposure of pollinators to neonicotinoid insecticides from the use of insecticide seed treatments in the mid-southern United States. Environ. Sci. Technol. 2014, 48, 9762–9769.

| S/No. | Morphological Insect Feature | Formula |
|---|---|---|
| 1 | Form factor | $\dfrac{4\pi \times \text{Area}}{\text{Perimeter}^{2}}$ |
| 2 | Roundness | $\dfrac{4 \times \text{Area}}{\pi \times \text{Max Diameter}^{2}}$ |
| 3 | Aspect ratio | $\dfrac{\text{Max Diameter}}{\text{Mean Diameter}}$ |
| 4 | Compactness | $\dfrac{\sqrt{(4/\pi) \times \text{Area}}}{\text{Max Diameter}}$ |
| 5 | Extent | $\dfrac{\text{Net Area}}{\text{Bounding Rectangle}}$ |
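The five shape descriptors in the table above can be computed directly from a handful of region measurements. The following is a minimal sketch, not the method of any reviewed study: the `RegionMeasurements` container and the `shape_features` function are hypothetical names introduced here, and "Bounding rectangle" is assumed to mean the area of the bounding rectangle.

```python
import math
from dataclasses import dataclass


@dataclass
class RegionMeasurements:
    """Hypothetical container for measurements of one segmented insect region."""
    area: float                # net area of the region
    perimeter: float           # length of the region boundary
    max_diameter: float        # longest chord across the region
    mean_diameter: float       # average chord length across the region
    bounding_rect_area: float  # assumed: area of the bounding rectangle


def shape_features(m: RegionMeasurements) -> dict:
    """Compute the five morphological features listed in the table."""
    return {
        "form_factor": (4 * math.pi * m.area) / (m.perimeter ** 2),
        "roundness": (4 * m.area) / (math.pi * m.max_diameter ** 2),
        "aspect_ratio": m.max_diameter / m.mean_diameter,
        "compactness": math.sqrt((4 / math.pi) * m.area) / m.max_diameter,
        "extent": m.area / m.bounding_rect_area,
    }


# Sanity check with a unit circle (radius 1) inside a 2 x 2 bounding square.
unit_circle = RegionMeasurements(
    area=math.pi,
    perimeter=2 * math.pi,
    max_diameter=2.0,
    mean_diameter=2.0,
    bounding_rect_area=4.0,
)
features = shape_features(unit_circle)
print(features)
```

For a perfect circle, form factor, roundness, aspect ratio, and compactness all equal 1, and extent equals π/4, which makes a circle a convenient check that the formulas are wired up correctly. Elongated or irregular insect outlines give lower form factor, roundness, and compactness, and a higher aspect ratio.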

| No. | Analysis Term | Formula | Description |
|---|---|---|---|
| 1 | Accuracy (%) | $\dfrac{TP + TN}{TP + FP + FN + TN} \times 100$ | Percentage of all predictions that the model gets right |
| 2 | Precision | $\dfrac{TP}{TP + FP}$ | Fraction of the model's positive predictions that are correct |
| 3 | Recall (sensitivity) | $\dfrac{TP}{TP + FN}$ | Fraction of the actual positives that the model identifies |
| 4 | F1-score | $\dfrac{2}{\text{Recall}^{-1} + \text{Precision}^{-1}}$ | Harmonic mean of precision and recall, combining both metrics in a single score |
| 5 | Mean Average Precision (%) | $\dfrac{\sum_{q=1}^{Q} \text{AP}(q)}{Q} \times 100\%$ | Mean of the Average Precision (AP) values, each obtained from precision and recall, over $Q$ queries or classes |
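The indicators in the table above follow directly from the four confusion-matrix counts. The sketch below is illustrative only: the function names are hypothetical, zero denominators are mapped to 0.0 as a convention chosen here, and Mean Average Precision is assumed to be the plain mean of per-class (or per-query) Average Precision values expressed as a percentage.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy (%), precision, recall and F1-score from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = 100.0 * (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall; defined as 0 if either is 0.
    if precision > 0 and recall > 0:
        f1 = 2.0 / (1.0 / recall + 1.0 / precision)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_average_precision(average_precisions: list) -> float:
    """Mean of the per-class (or per-query) AP values, as a percentage.

    Assumes each AP value is already computed and lies in [0, 1].
    """
    if not average_precisions:
        return 0.0
    return 100.0 * sum(average_precisions) / len(average_precisions)


# Example: 8 true positives, 2 false positives, 2 false negatives, 8 true negatives.
metrics = classification_metrics(tp=8, fp=2, fn=2, tn=8)
print(metrics)
print(mean_average_precision([0.5, 1.0]))
```

Reporting precision and recall alongside accuracy matters for pest detection because pest images are often a small minority of field images, so a model can reach high accuracy while still missing most pests.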

| Detected Insect Pests | Reference |
|---|---|
| Boll weevil, Cotton aphid, Cotton bollworm (larva), Cotton bollworm (adult), Tobacco budworm (larva), Tobacco budworm (adult), Soybean looper, Fall armyworm (larva), Fall armyworm (adult), Cotton leafworm, Cotton whitefly, Cotton bug, Pink bollworm, Southern armyworm, and Red spider mite | [1] |
| Cotton aphids, Flea beetles, Flax budworms, and Red spider mites | [11] |
| Mexican cotton boll weevil, Fall armyworm, Cotton bollworm, Cotton aphid, Cotton whitefly, Green stink bug, Neotropical brown stink bug, and Soybean looper | [14] |
| Assassin bug, Three-cornered alfalfa hopper, and Convergent lady beetle | [21] |
| Red spider mites and Leaf miner | [27] |
| Pink and American bollworms | [28,29] |
| American bollworm, Ash weevil, Blossom thrips, Brown cotton moth, Brown soft scale, Brown-spotted locust, Cotton aphid, Cotton leaf roller, Cotton leafhopper, Cotton looper, Cotton stem weevil, Cotton whitefly, Cream drab, Cutworm, Darth maul moth imago, Darth maul moth, Desert locust, Dusky cotton bug, Giant red bug, Golden twin spot tomato looper, Green stink bug, Grey mealybug, Hermolaus, Latania scale, Madeira mealybug, Mango mealybug, Megapulvinaria, Cotton stainers, Menida, Menida-versicolor, White-spotted flea beetle, Myllocerus-subfasciatus, Sri Lankan weevil, Painted bug, Pink bollworm, Brown-winged green bug, Poppiocapsidea, Red-banded shield bug, Red cotton bug, Red hairy caterpillar, Solenopsis mealybug, Spherical mealybug, Spotted bollworm imago, Spotted bollworm, Tobacco caterpillar Tomentosa, Transverse moth, Tussock caterpillar, and Yellow cotton scale | [29] |
| Cotton whitefly | [1,14,23,29,30,31,32] |

| Challenge | Source | Solution |
|---|---|---|
| Power consumption | | |
| Failure to execute software | | |
| Service expiry fault | | |
| Network faults | | |
| Security | | |
| Physical faults of hardware | | |
| Data cost | | |
| Changes in environmental conditions | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kiobia, D.O.; Mwitta, C.J.; Fue, K.G.; Schmidt, J.M.; Riley, D.G.; Rains, G.C. A Review of Successes and Impeding Challenges of IoT-Based Insect Pest Detection Systems for Estimating Agroecosystem Health and Productivity of Cotton. Sensors 2023, 23, 4127. https://doi.org/10.3390/s23084127
