Effects of AR-Based Home Appliance Agents on User’s Perception and Maintenance Behavior

Abstract

1. Introduction

- The novel concept of home appliance agents assisting users in maintenance actions through their behavior;

- The development of an AR system that superimposes home appliance agents to make real home appliances appear as living creatures;

- Evidence of a tendency for users to feel intimacy and pleasure when taking care of their home appliances through home appliance agents.

2. Related Work

2.1. AR-Based User Assistance for IoT Devices

2.2. Information Visualization in AR

2.3. Human-Dependent Robots and Intentional Stance

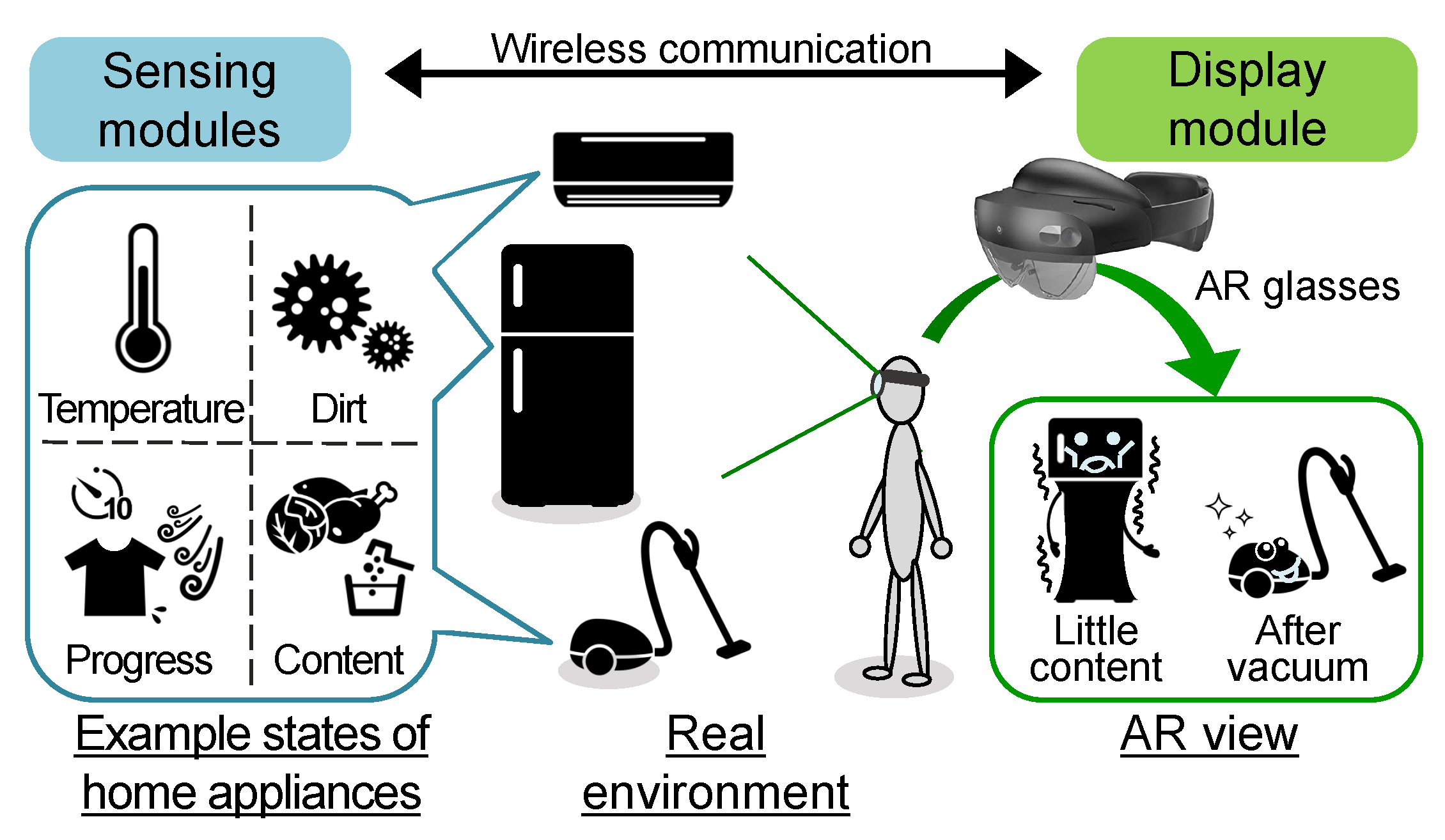

3. Proposed System

3.1. Usage Scenarios

3.2. System Requirements and Approaches

- I. It can be used with any existing home appliances;

- II. It induces the user’s intimacy for the home appliance and motivates them to act on it;

- III. It enables the user to easily guess the condition to be dealt with;

- IV. It does not give the user a sense of coercion so that the timing of the act can be adjusted comfortably.

3.3. System Architecture

4. Design of the Refrigerator Agent

4.1. Overview of the Focus Group

4.2. Results of Final Focus Group

5. Experiment

5.1. Overview

- RQ1: Does the proposed system induce animacy perception and a sense of intelligence toward the agent?

- RQ2: Can the user easily guess the state of the home appliance?

- RQ3: How do home appliances’ appearance and behavior influence users’ discomfort?

- RQ4: Does intelligent behavior reduce the sense of coercion?

- RQ5: Which condition increases the frequency of users’ maintenance?

5.2. Task Design

- I. AR visualizations should be in the participants’ view while they are working on a different job.

- II. Each state and the corresponding AR visualization should be presented at least once at a time when the participants can take a short break.

- III. The maintenance task of the refrigerator should be easy to guess from the AR visualizations and easy to accomplish.

5.3. Experimental Settings

5.3.1. Refrigerator States

5.3.2. Intelligent Behavior

5.3.3. Maintenance Task

5.3.4. Transcription Task

5.3.5. Layout

5.3.6. Procedure

5.3.7. Metrics

5.3.8. Participants

5.4. Results

5.4.1. Objective Evaluation

5.4.2. Subjective Evaluation

5.5. Discussion

5.5.1. RQ1: Does the Proposed System Induce Animacy Perception and Sense of Intelligence toward the Agent?

5.5.2. RQ2: Can the User Easily Guess the State of the Home Appliance?

5.5.3. RQ3: How Do Home Appliance’s Appearance and Behavior Influence User’s Discomfort?

5.5.4. RQ4: Does Intelligent Behavior Reduce the Sense of Coercion?

5.5.5. RQ5: Which Condition Increases the Frequency of Users’ Maintenance?

6. Discussion

6.1. Summary of Findings and Potential Users

6.2. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Baba, T.; Isoyama, N.; Uchiyama, H.; Sakata, N.; Kiyokawa, K. Effects of AR-Based Home Appliance Agents on User’s Perception and Maintenance Behavior. Sensors 2023, 23, 4135. https://doi.org/10.3390/s23084135