Powder Bed Monitoring Using Semantic Image Segmentation to Detect Failures during 3D Metal Printing

Abstract

1. Introduction

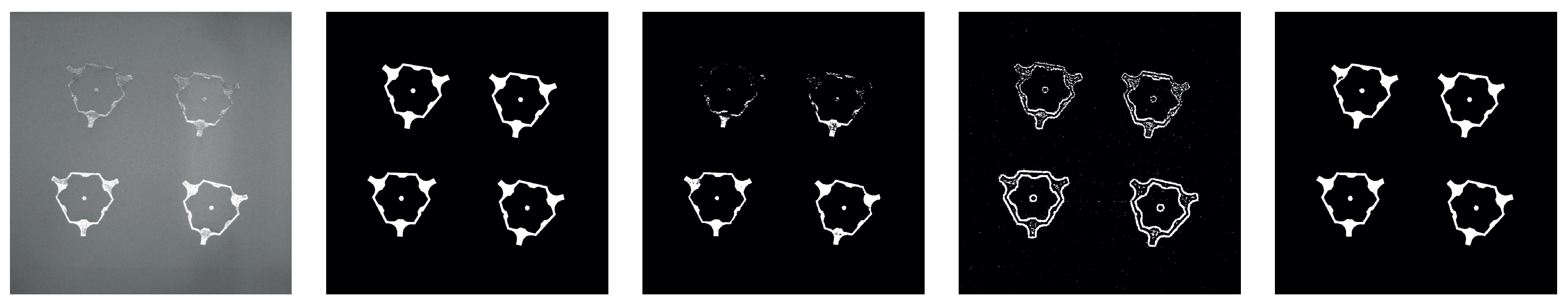

Related Works

2. Research Target

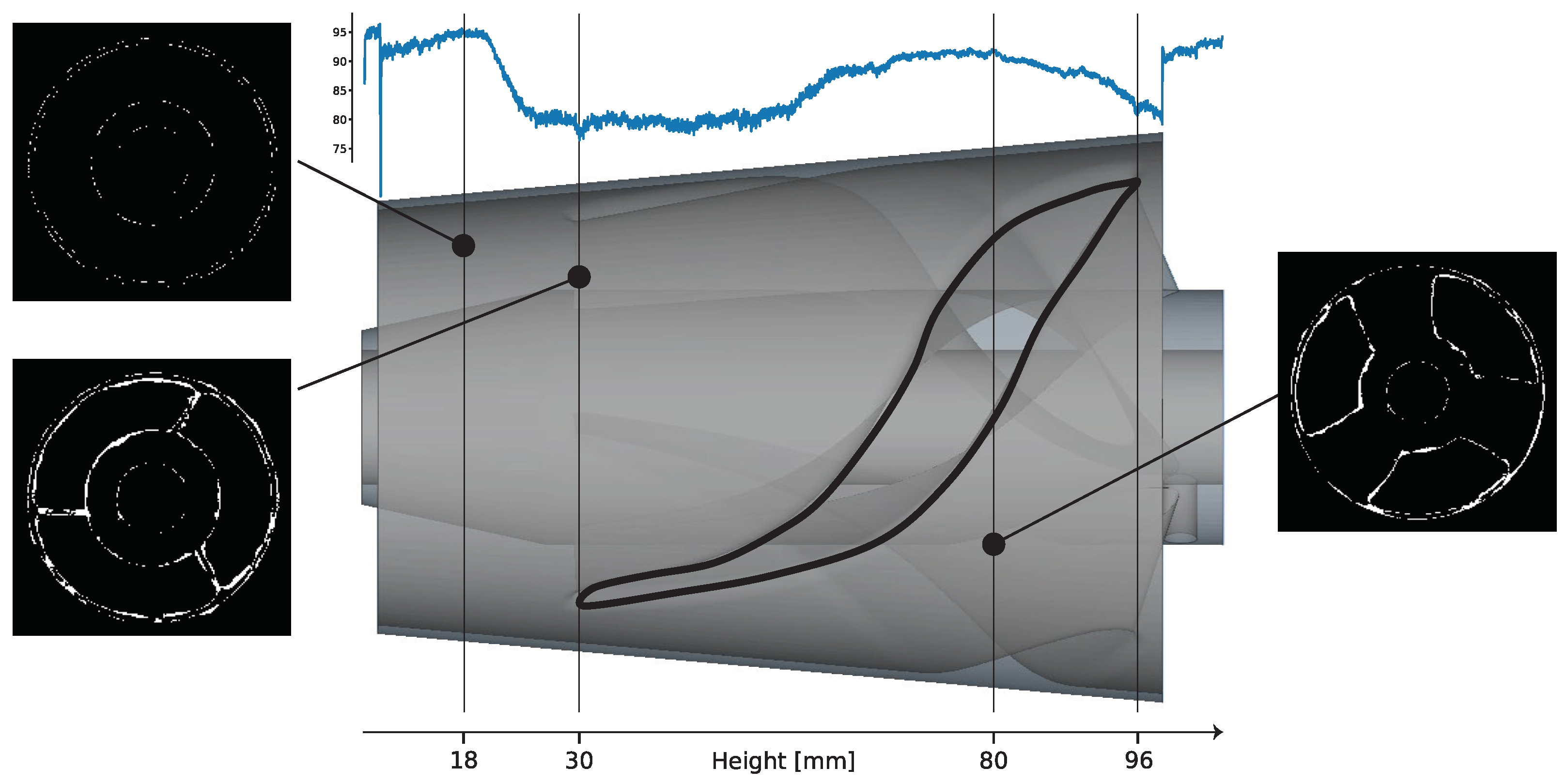

3. Materials and Methods

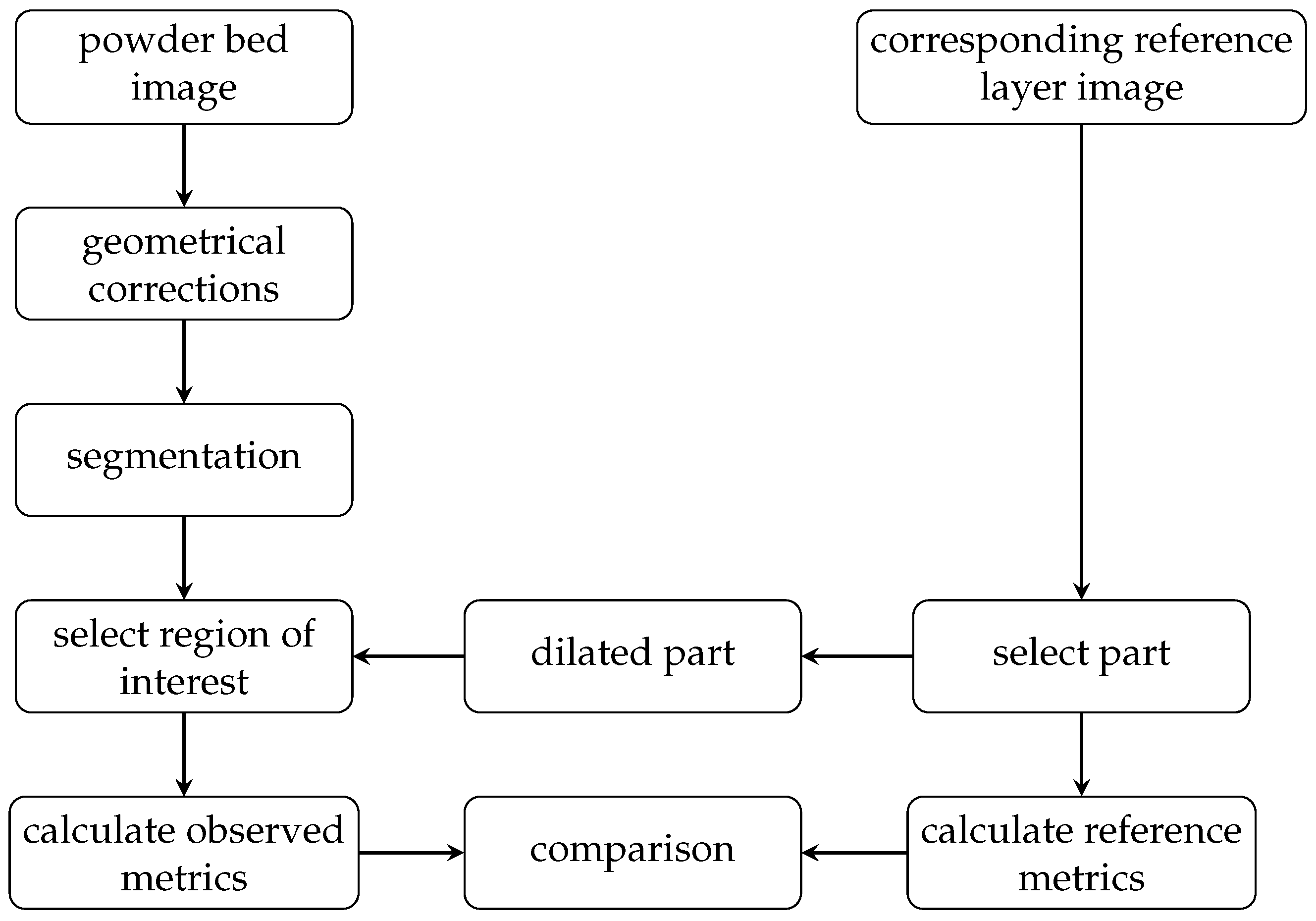

3.1. Monitoring Approach

3.2. Segmentation Approach

- The reflected light intensity is not homogeneous over the powder bed.

- Due to the surface structure—stripes—of the melted metal, the light reflections have different orientations.

- The heat distribution on the surface is not homogeneous. Although the camera has a low-pass filter, the surface temperature still biases the measured brightness values.

- Obtaining the powder bed and reference layer images from the database.

- Extracting the corresponding mask for training.

- Training the neural network with actual images as input and the reference masks as targets.
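The three data preparation steps above can be sketched roughly as follows. This is a minimal illustration and not the authors' pipeline: it assumes the reference layer is available as a grayscale rendering in which part pixels are non-zero, and the names `extract_mask` and `build_training_pairs` are hypothetical. The toy arrays stand in for records fetched from the database.

```python
import numpy as np

def extract_mask(reference_layer, threshold=0):
    """Binary mask: 1 where the reference layer marks part area, else 0.

    Assumption: part pixels in the reference layer are brighter than `threshold`.
    """
    return (np.asarray(reference_layer) > threshold).astype(np.uint8)

def build_training_pairs(powder_bed_images, reference_layers):
    """Pair each powder bed image (network input) with its reference mask (target)."""
    if len(powder_bed_images) != len(reference_layers):
        raise ValueError("each powder bed image needs exactly one reference layer")
    # Scale 8-bit intensities to [0, 1] so the network sees normalized inputs.
    inputs = np.stack(
        [np.asarray(img, dtype=np.float32) / 255.0 for img in powder_bed_images]
    )
    targets = np.stack([extract_mask(ref) for ref in reference_layers])
    return inputs, targets

# Toy data standing in for database records (hypothetical 4x4 grayscale layers).
images = [np.full((4, 4), 128, dtype=np.uint8), np.full((4, 4), 64, dtype=np.uint8)]
refs = [np.zeros((4, 4), dtype=np.uint8), np.zeros((4, 4), dtype=np.uint8)]
refs[0][1:3, 1:3] = 255  # a 2x2 part cross-section in the first layer

inputs, targets = build_training_pairs(images, refs)
```

The resulting `inputs` and `targets` arrays could then be passed to whichever training routine the segmentation framework provides.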

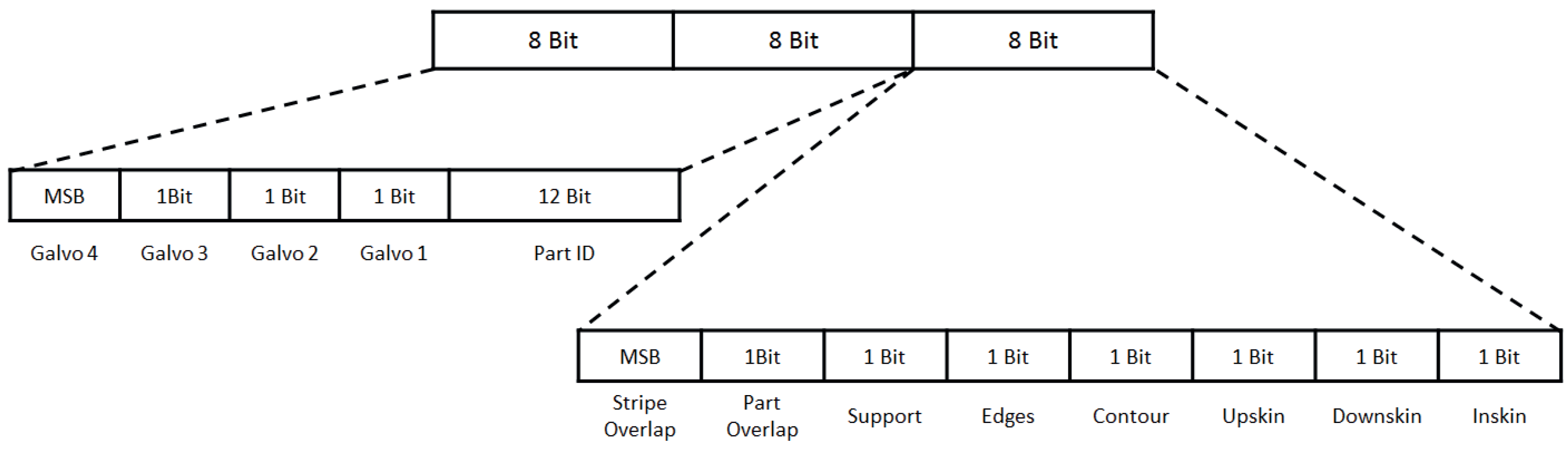

3.3. Annotating the Predicted Segmentation with the Part Identification

3.4. Metrics

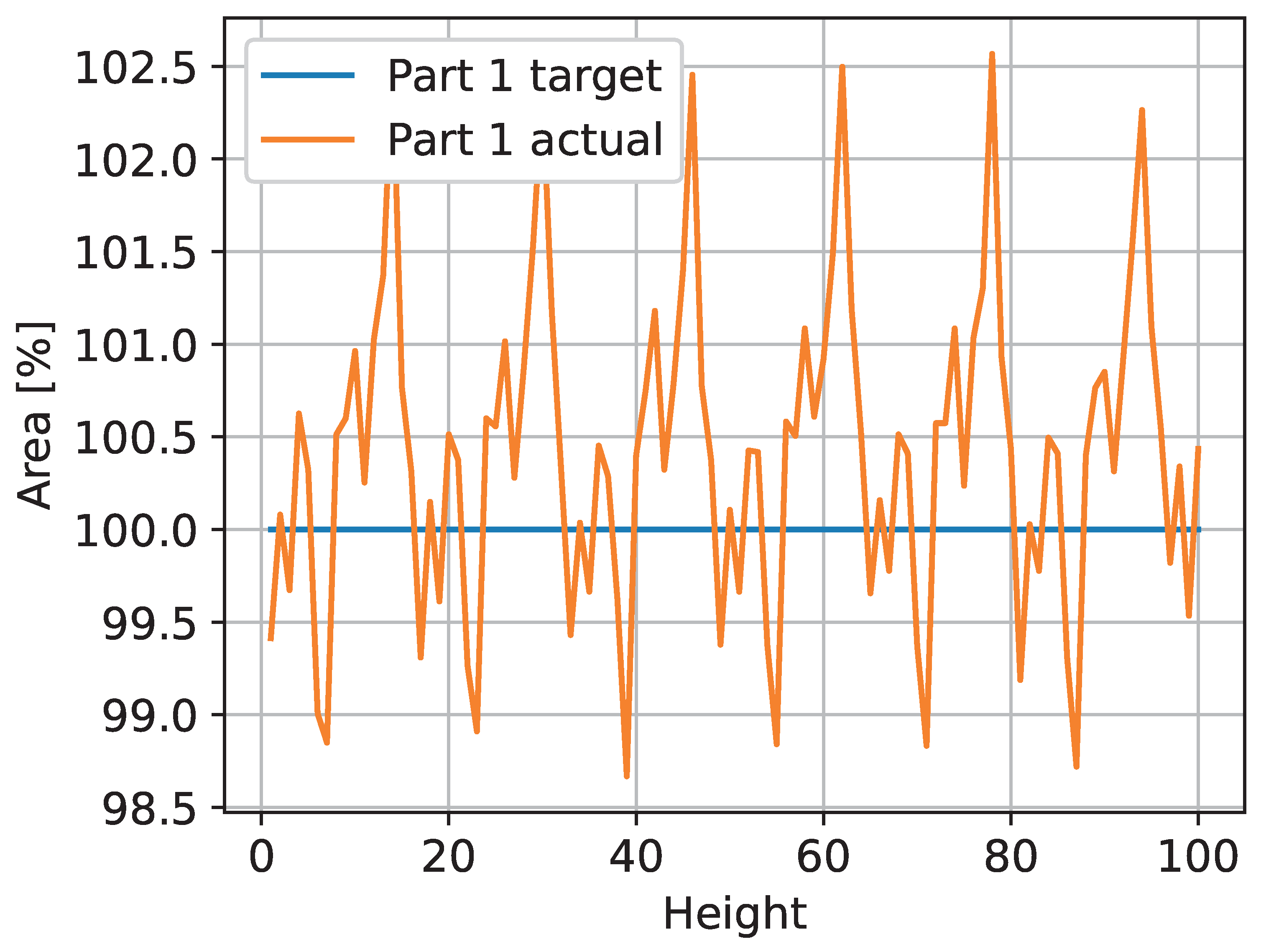

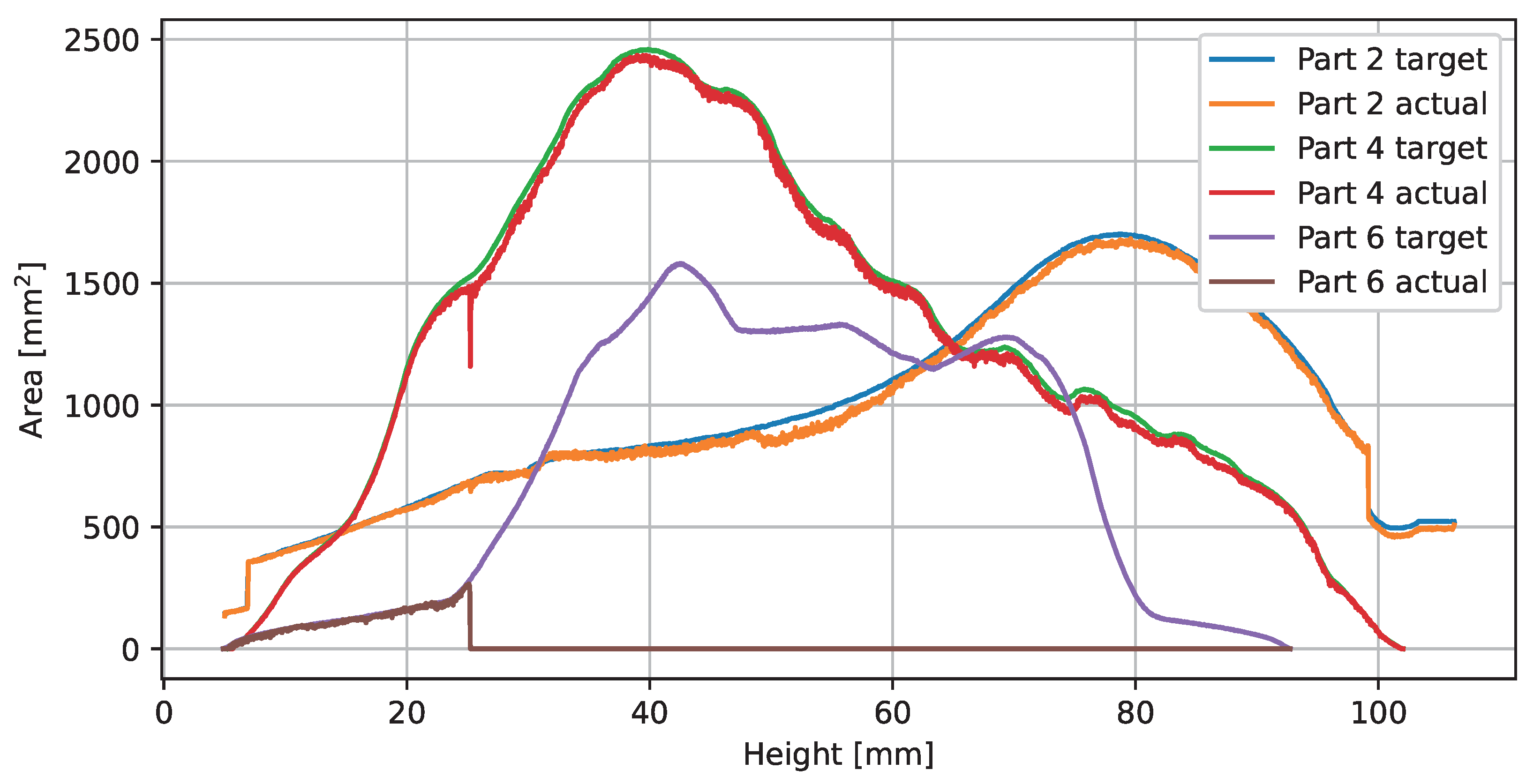

- Area deviation.

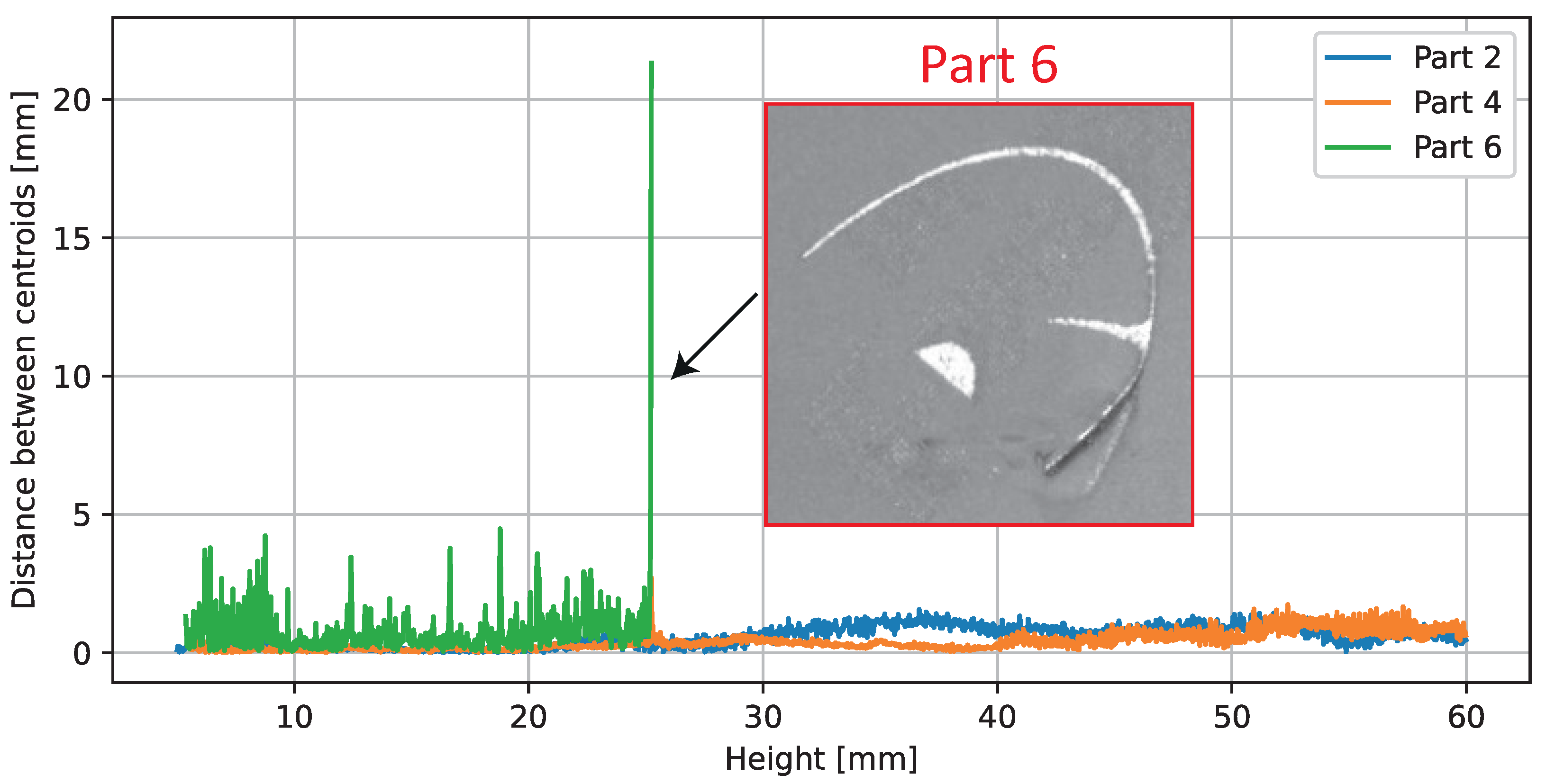

- Distance between the area centroids.

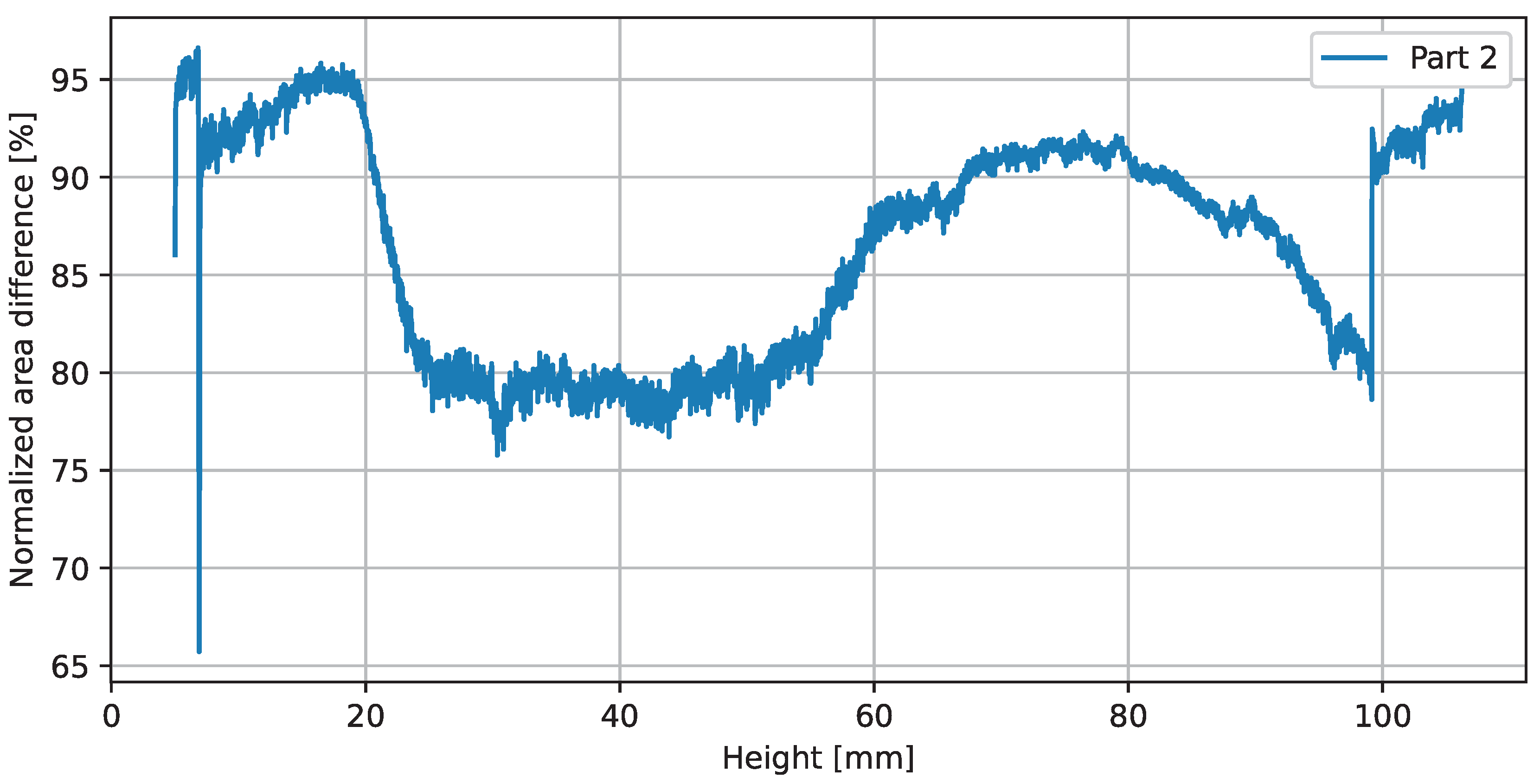

- Normalized area difference of the image obtained by applying the logical XOR operation to both images.
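The three metrics listed above can be illustrated on a pair of binary masks. The following is a sketch under stated assumptions rather than the authors' implementation: the area deviation is taken relative to the reference area, the centroid distance is Euclidean in pixel units, and the XOR area is normalized by the reference area. The source does not specify these normalizations, and all function names are hypothetical.

```python
import numpy as np

def area(mask):
    """Number of foreground (non-zero) pixels."""
    return int(np.count_nonzero(mask))

def area_deviation(pred, ref):
    """Signed area deviation relative to the reference area (assumed normalization)."""
    ref_area = area(ref)
    if ref_area == 0:
        raise ValueError("reference mask is empty")
    return (area(pred) - ref_area) / ref_area

def centroid(mask):
    """Centroid of the foreground pixels as (row, column)."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask is empty")
    return np.array([rows.mean(), cols.mean()])

def centroid_distance(pred, ref):
    """Euclidean distance between the two area centroids, in pixels."""
    return float(np.linalg.norm(centroid(pred) - centroid(ref)))

def normalized_xor_area(pred, ref):
    """Area of the XOR image divided by the reference area (assumed normalization)."""
    ref_area = area(ref)
    if ref_area == 0:
        raise ValueError("reference mask is empty")
    return area(np.logical_xor(pred, ref)) / ref_area

# Toy example: a 4x4 square, predicted one row lower than the reference.
ref = np.zeros((6, 6), dtype=np.uint8)
ref[1:5, 1:5] = 1
pred = np.zeros((6, 6), dtype=np.uint8)
pred[2:6, 1:5] = 1

print(area_deviation(pred, ref))       # 0.0: both areas are 16 pixels
print(centroid_distance(pred, ref))    # 1.0: shifted by one row
print(normalized_xor_area(pred, ref))  # 0.5: 8 mismatched pixels / 16
```

The toy example shows why the metrics complement each other: a pure shift leaves the area deviation at zero, but it is visible in both the centroid distance and the XOR area.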

3.5. Proof of Concept

4. Results

5. Discussion

6. Conclusions and Further Research

6.1. Conclusion and Outlook

6.2. Further Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AM | Additive Manufacturing |
| CAD | Computer-Aided Design |
| CNN | Convolutional Neural Network |
| IoU | Intersection Over Union |
| PBF-LM | Powder Bed Fusion Laser Melting |
| SVMs | Support Vector Machines |

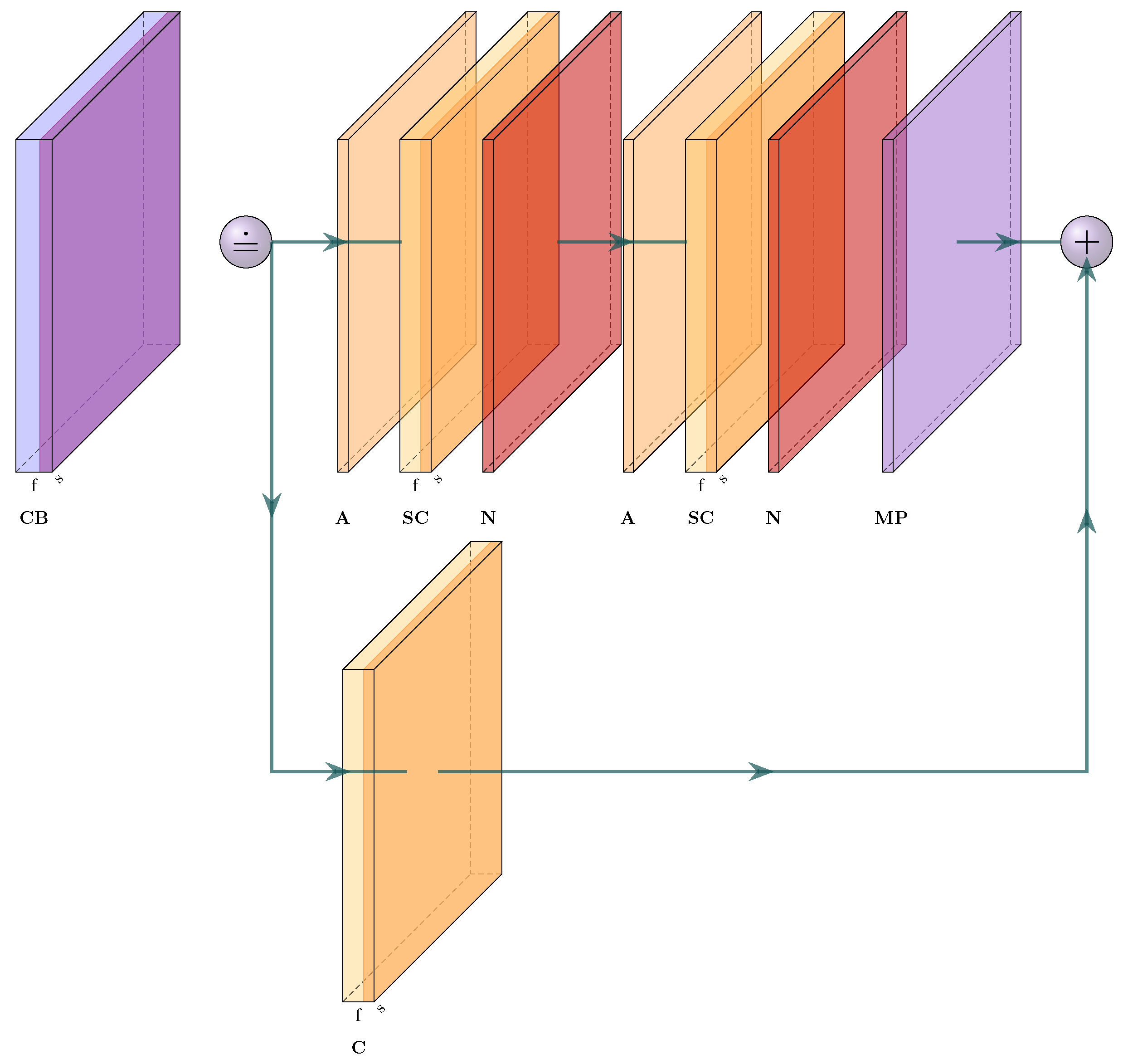

Appendix A. Xception Architecture

| Abbr. | Layer | Description |
|---|---|---|
| A | Activation layer | The activation function used is ReLU. |
| C | Convolutional layer | The number of filters used is shown at the bottom middle. The input image size is in the lower right corner. |
| CB | Convolutional block | The block consists of several layers. |
| Crop | Cropping (2D) | Deletes the edge pixels to match the desired output resolution. |
| CT | Transposed convolutional layer | With f as the number of filters and s as the size. |
| MP | MaxPooling | Decreases image resolution. |
| N | Batch normalization | Standardizes inputs. |
| SC | Separable convolutional layer | With f as the number of filters and s as the size. |
| UpCB | Upsampling convolutional block | The block consists of several layers. |
| UpS | Upsampling layer | Increases image dimensions. |
| ZP | Zero padding (2D) | Adds 1 pixel at the edges. The result is an image with size I (lower right corner). |



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schmitt, A.-M.; Sauer, C.; Höfflin, D.; Schiffler, A. Powder Bed Monitoring Using Semantic Image Segmentation to Detect Failures during 3D Metal Printing. Sensors 2023, 23, 4183. https://doi.org/10.3390/s23094183
