RF-Enabled Deep-Learning-Assisted Drone Detection and Identification: An End-to-End Approach

Abstract

1. Introduction

- An end-to-end DL-based system is proposed to detect and identify UAS, Bluetooth, and WIFI signals across a range of noise levels.

- The model does not require any manual feature extraction steps, which reduces the computational overhead. The model exploits the RF signature of different devices for the detection and identification tasks.

- The model uses stacked convolutional layers in a multiscale architecture, which helps it extract crucial features from noisy data without a separate feature-extraction technique.

- The performance of the model has been evaluated using several performance metrics (e.g., accuracy, precision, sensitivity, and F1-score) on the CardRF dataset.

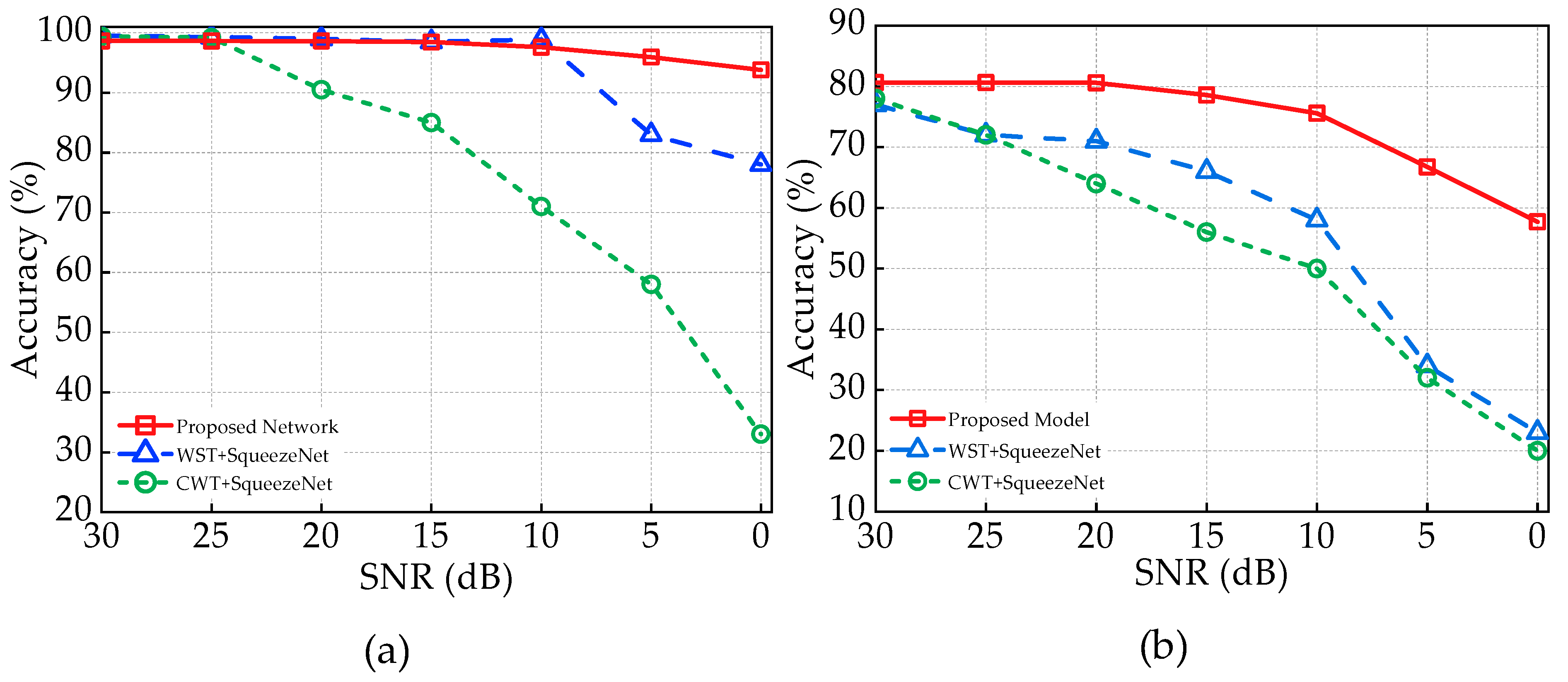

- After conducting comparative experiments, we have established that our proposed network outperforms the existing works in terms of performance and time complexity.
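The multiscale idea named in the contributions can be illustrated with a toy, single-channel sketch in plain Python: two residual branches with different kernel sizes process the same input, and their outputs are added. This is a minimal sketch under stated assumptions, not the authors' implementation: the kernel sizes (5 and 7), the random weights, and all function names here are hypothetical, and the real model uses many channels and trained weights.

```python
import random

def conv1d_same(x, kernel):
    """1D cross-correlation with zero padding so the output length equals len(x)."""
    k = len(kernel)
    pad = k // 2  # assumes an odd kernel length
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(padded[i + j] * kernel[j] for j in range(k)) for i in range(len(x))]

def relu(x):
    return [max(0.0, v) for v in x]

def residual_branch(x, k1, k2):
    """conv -> ReLU -> conv, then add the input back (skip connection) and apply ReLU."""
    h = relu(conv1d_same(x, k1))
    h = conv1d_same(h, k2)
    return relu([a + b for a, b in zip(x, h)])

def multiscale_block(x, kernels_a, kernels_b):
    """Run two branches with different kernel sizes on the same input and add them."""
    a = residual_branch(x, *kernels_a)
    b = residual_branch(x, *kernels_b)
    return [u + v for u, v in zip(a, b)]

rng = random.Random(0)

def rand_kernel(size):
    # Hypothetical untrained weights, for illustration only.
    return [rng.uniform(-0.1, 0.1) for _ in range(size)]

signal = [rng.gauss(0.0, 1.0) for _ in range(1024)]  # stand-in for one RF segment
out = multiscale_block(
    signal,
    (rand_kernel(5), rand_kernel(5)),  # branch with the smaller receptive field
    (rand_kernel(7), rand_kernel(7)),  # branch with the larger receptive field
)
print(len(out))
```

Because both branches use "same" padding, their outputs have equal length and can be summed element-wise, which is what lets features seen at two scales be fused without any hand-crafted feature extraction step.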

2. Methodology

2.1. RF Dataset Description

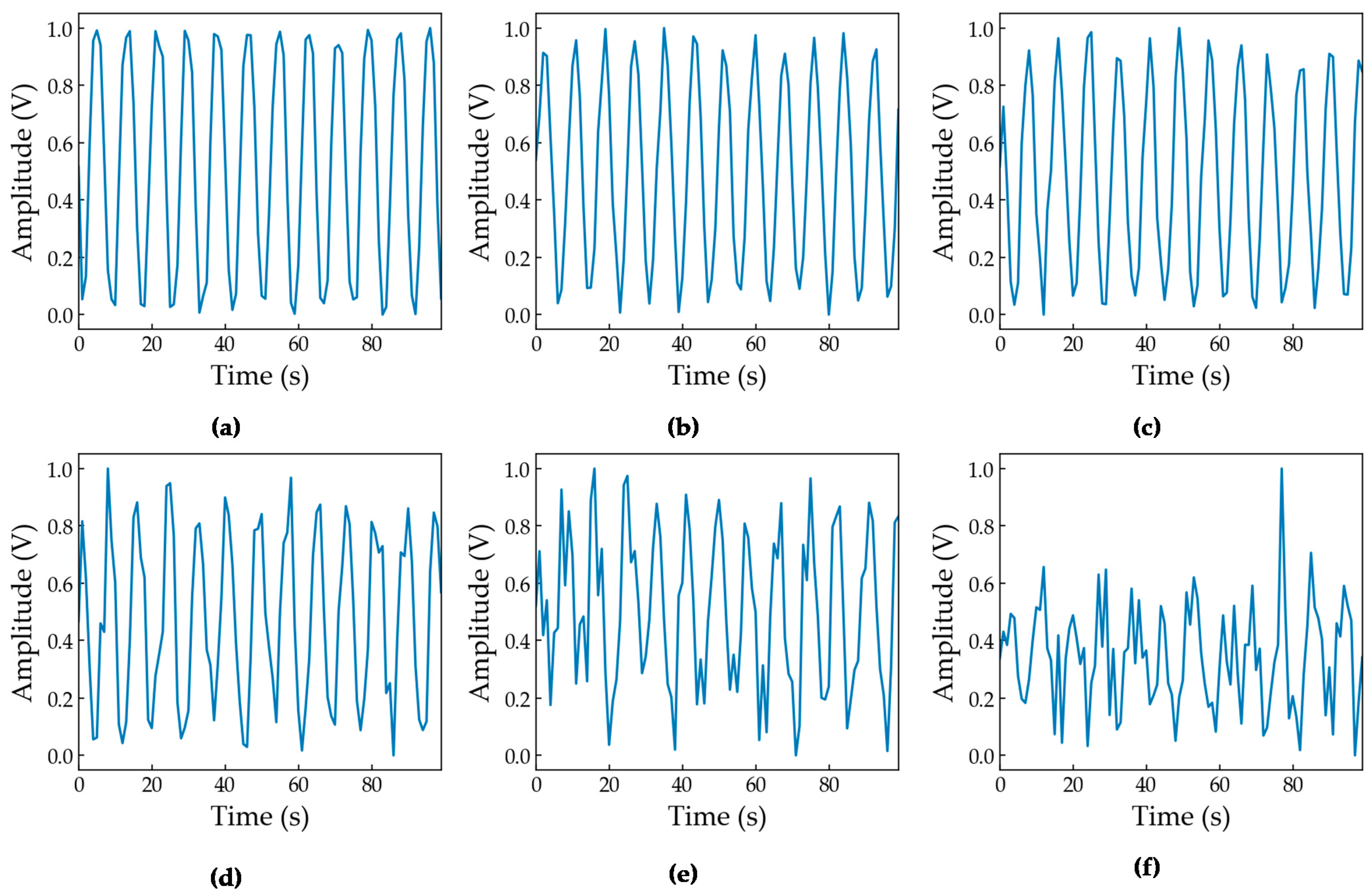

2.2. RF Signal Preprocessing

2.3. Noise Incorporation

2.4. Model Description

3. Experimental Results

3.1. Implementation Details and Performance Metrics

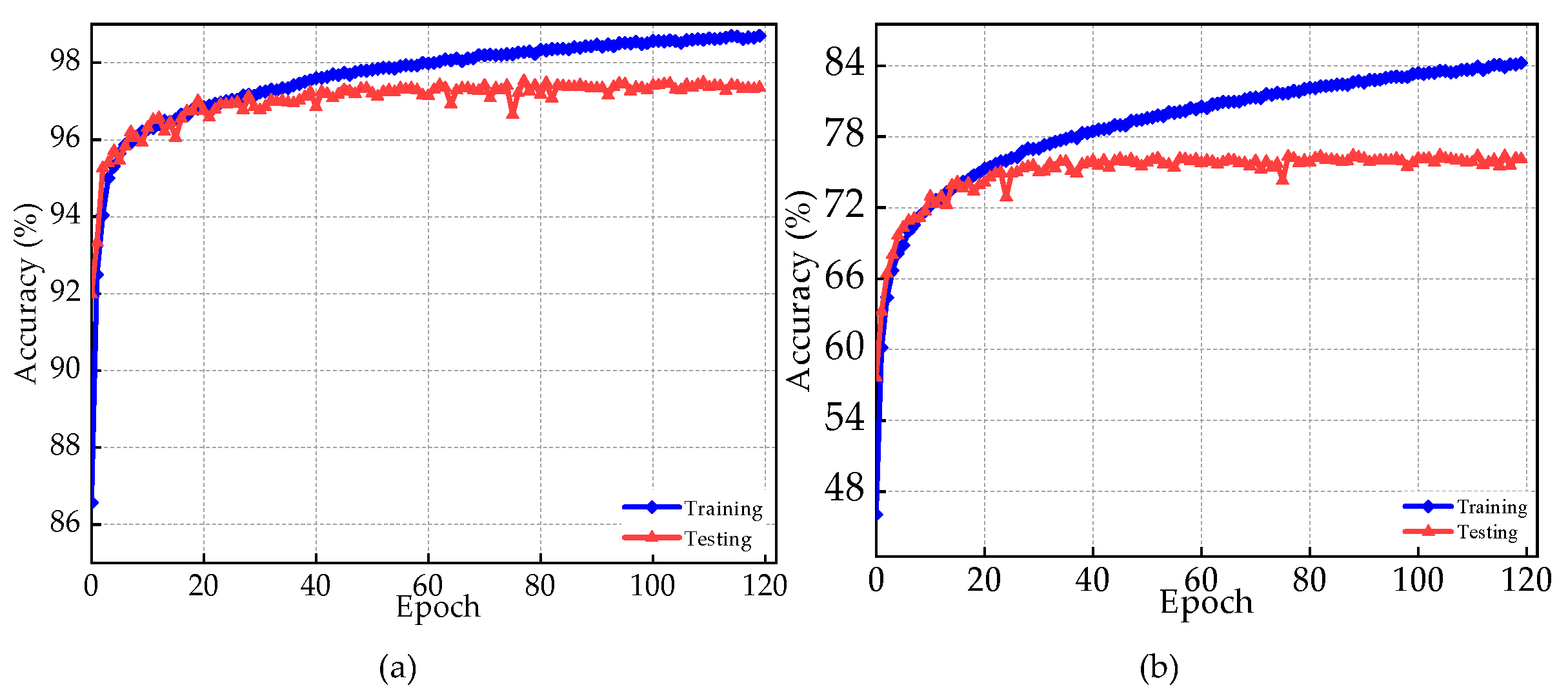

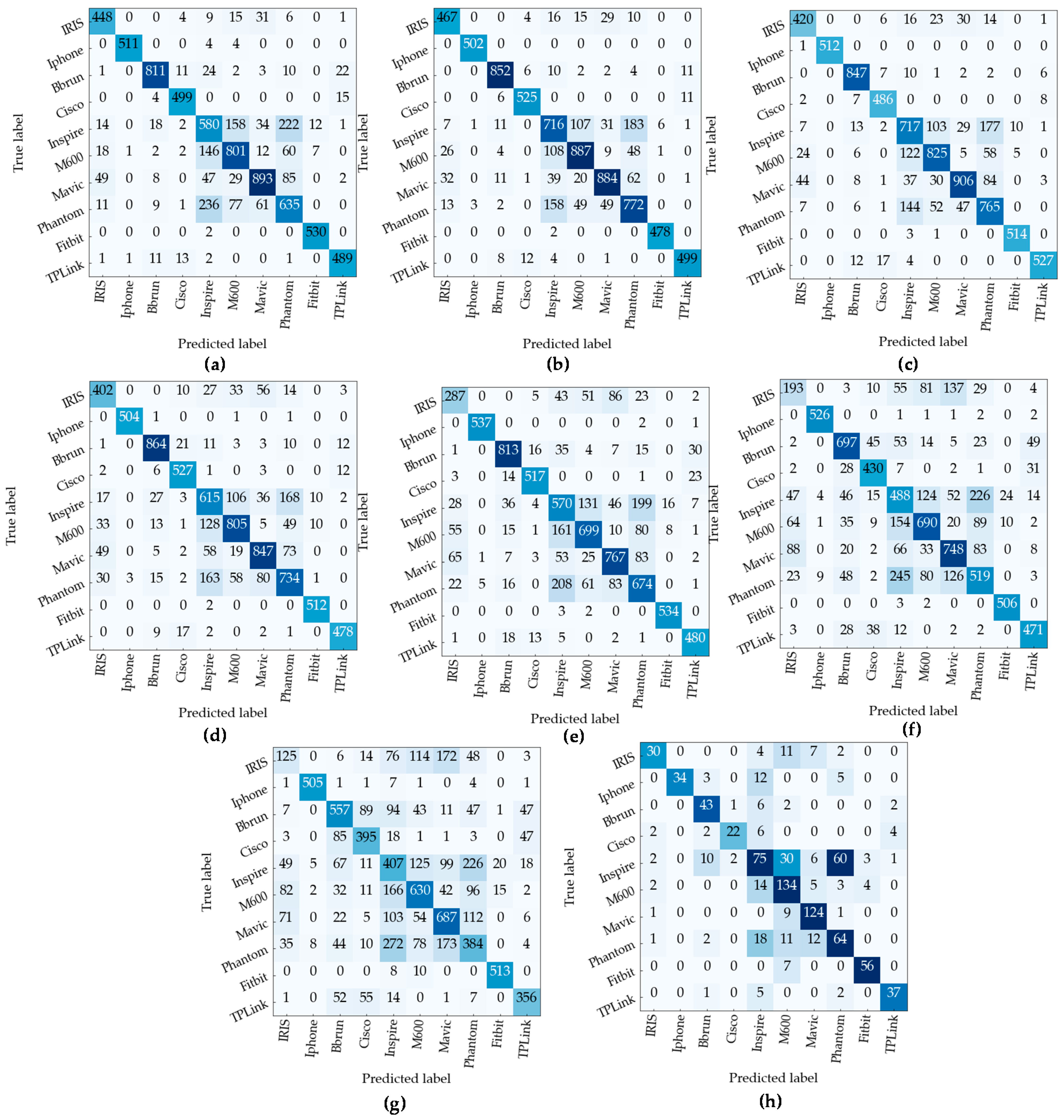

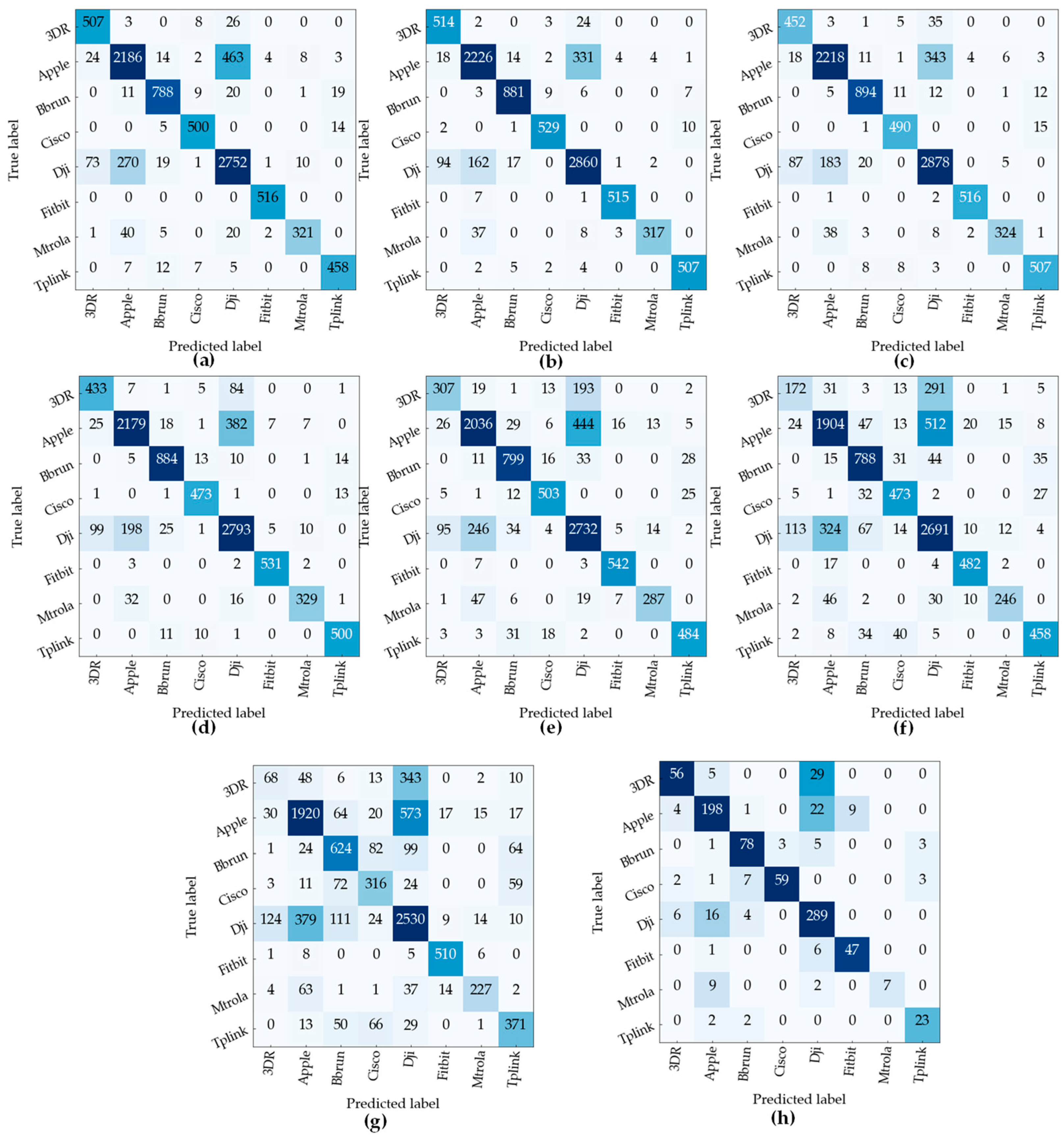

3.2. Performance Analysis

3.3. Computational Performance of the Proposed Model

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Device Type | Make | Model Name | Number of Signals |
|---|---|---|---|
| UAV and/or UAV controller | Beebeerun | FPV RC drone mini quadcopter | 245 |
| UAV and/or UAV controller | DJI | Inspire | 700 |
| UAV and/or UAV controller | DJI | Matrice 600 | 700 |
| UAV and/or UAV controller | DJI | Mavic Pro 1 | 700 |
| UAV and/or UAV controller | DJI | Phantom 4 | 700 |
| UAV and/or UAV controller | 3DR | Iris FS-TH9x | 350 |
| WIFI | Cisco | Linksys E3200 | 350 |
| WIFI | TP-link | TL-WR940N | 350 |
| Bluetooth | Apple | iPhone 6S | 350 |
| Bluetooth | Apple | iPhone 7 | 350 |
| Bluetooth | Apple | iPad 3 | 350 |
| Bluetooth | FitBit | Charge3 smartwatch | 350 |
| Bluetooth | Motorola | E5 Cruise | 350 |

Initial Feature Extraction Block

| Layer | Output Volume |
|---|---|
| Input | (1024) |
| Reshape | (1024, 1) |
| Convolution 1D 1 | (512, 64) |
| ReLU 1 | (512, 64) |
| MaxPooling | (255, 64) |

Multiscale Feature Extraction Block

| Branch 1 Layer | Output Volume | Branch 2 Layer | Output Volume |
|---|---|---|---|
| Convolution 1D 2 | (255, 64) | Convolution 1D 10 | (255, 64) |
| ReLU 2 | (255, 64) | ReLU 10 | (255, 64) |
| Convolution 1D 3 | (255, 64) | Convolution 1D 11 | (255, 64) |
| Add 1 | (255, 64) | Add 5 | (255, 64) |
| ReLU 3 | (255, 64) | ReLU 11 | (255, 64) |
| Convolution 1D 4 | (255, 128) | Convolution 1D 12 | (255, 128) |
| ReLU 4 | (255, 128) | ReLU 12 | (255, 128) |
| Convolution 1D 5 | (255, 128) | Convolution 1D 13 | (255, 64) |
| Dense 1 | (255, 64) | Dense 4 | (255, 64) |
| Add 2 | (255, 64) | Add 6 | (255, 64) |
| ReLU 5 | (255, 64) | ReLU 13 | (255, 64) |
| Convolution 1D 6 | (255, 256) | Convolution 1D 14 | (255, 256) |
| ReLU 6 | (255, 256) | ReLU 16 | (255, 256) |
| Convolution 1D 7 | (255, 256) | Convolution 1D 15 | (255, 256) |
| Dense 2 | (255, 64) | Dense 5 | (255, 64) |
| Add 3 | (255, 64) | Add 7 | (255, 64) |
| ReLU 7 | (255, 64) | ReLU 14 | (255, 64) |
| Convolution 1D 8 | (255, 256) | Convolution 1D 16 | (255, 256) |
| ReLU 8 | (255, 256) | ReLU 18 | (255, 256) |
| Convolution 1D 9 | (255, 256) | Convolution 1D 17 | (255, 256) |
| Dense 3 | (255, 64) | Dense 6 | (255, 64) |
| Add 4 | (255, 64) | Add 8 | (255, 64) |
| ReLU 9 | (255, 64) | ReLU 17 | (255, 64) |
| Averagepooling 1 | (127, 64) | Averagepooling 2 | (127, 64) |
| Dropout 1 | (127, 64) | Dropout 2 | (127, 64) |

Terminal Block

| Layer | Output Volume |
|---|---|
| Add 9 | (127, 64) |
| Flatten | (8128) |
| Dense 7 | (3,)/(10,)/(8,) |
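The sequence lengths in the layer listing above can be checked with standard output-length arithmetic for 1D convolution and pooling layers. The listing does not state kernel sizes, strides, or padding modes, so the values below are assumptions chosen only because they reproduce the listed lengths (1024 → 512 → 255 → 127); other settings could give the same shapes.

```python
def out_len(n, kernel, stride, padding):
    """Output length of a 1D conv/pool layer with 'same' or 'valid' padding."""
    if padding == "same":
        return -(-n // stride)          # ceil(n / stride)
    return (n - kernel) // stride + 1   # 'valid': no padding

CHANNELS = 64  # channel count after the final Add layer, as listed

n_input = 1024
# Assumed: stride-2 convolution with 'same' padding (kernel size does not affect length).
n_conv1 = out_len(n_input, kernel=7, stride=2, padding="same")
# Assumed: max pooling with window 3, stride 2, 'valid' padding.
n_maxpool = out_len(n_conv1, kernel=3, stride=2, padding="valid")
# Assumed: average pooling with window 2, stride 2, 'valid' padding.
n_avgpool = out_len(n_maxpool, kernel=2, stride=2, padding="valid")
# Flatten turns (length, channels) into a single vector.
n_flat = n_avgpool * CHANNELS

print(n_conv1, n_maxpool, n_avgpool, n_flat)
```

Under these assumptions the flattened feature vector has 127 × 64 = 8128 entries, which then feeds the final dense layer with 3, 10, or 8 outputs depending on the task.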

| Hyperparameters | Values |
|---|---|
| Train data shape | (51,765, 1024), (51,765, 3) (Detection stage); (43,732, 1024), (43,732, 10) (Specific identification stage); (43,732, 1024), (43,732, 8) (Manufacturer identification stage) |
| Test data shape | (9135, 1024), (9135, 3) (Detection stage); (7718, 1024), (7718, 10) (Specific identification stage); (43,732, 1024), (43,732, 8) (Manufacturer identification stage) |
| Learning rate | 0.001 |
| Number of epochs | 120 |
| Cost function | Categorical cross-entropy |
| Activation function | ReLU, softmax |
| Optimizer | Adam |
| Batch size | 512 |
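As a quick worked example of what these settings imply, the sketch below derives the number of weight updates per epoch and the test fraction for the detection and specific identification stages from the listed data shapes. It assumes the last partial batch of each epoch is kept rather than dropped; the variable names are illustrative only.

```python
import math

BATCH_SIZE = 512
EPOCHS = 120

# Sample counts taken from the train/test data shapes listed above.
train_sizes = {"detection": 51765, "specific identification": 43732}
test_sizes = {"detection": 9135, "specific identification": 7718}

summary = {}
for stage, n_train in train_sizes.items():
    n_test = test_sizes[stage]
    steps_per_epoch = math.ceil(n_train / BATCH_SIZE)  # last batch may be partial
    summary[stage] = {
        "steps_per_epoch": steps_per_epoch,
        "total_updates": steps_per_epoch * EPOCHS,
        "test_fraction": n_test / (n_train + n_test),
    }

for stage, info in summary.items():
    print(stage, info)
```

Both stages come out at a test fraction of roughly 0.15, which is consistent with an 85/15 train/test split.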

| Noise Level | Signal Detection: Kernel 3 and 5 (%) | Signal Detection: Kernel 3 and 7 (%) | Signal Detection: Kernel 5 and 7 (%) | Device Identification: Kernel 3 and 5 (%) | Device Identification: Kernel 3 and 7 (%) | Device Identification: Kernel 5 and 7 (%) |
|---|---|---|---|---|---|---|
| 30 dB | 98.63 | 98.64 | 98.64 | 80.50 | 80.51 | 80.62 |
| 25 dB | 98.60 | 98.61 | 98.63 | 80.50 | 80.60 | 80.61 |
| 20 dB | 98.20 | 98.27 | 98.62 | 79.49 | 79.96 | 80.60 |
| 15 dB | 98.04 | 98.36 | 98.46 | 78.26 | 78.39 | 78.58 |
| 10 dB | 96.10 | 96.12 | 97.59 | 73.72 | 74.13 | 75.58 |
| 5 dB | 94.65 | 94.85 | 96.00 | 66.29 | 66.35 | 66.73 |
| 0 dB | 92.86 | 92.88 | 93.81 | 55.29 | 56.50 | 57.70 |
| Unseen | 91.33 | 91.40 | 95.88 | 66.20 | 67.45 | 68.78 |
| Overall | 97.00 | 96.60 | 97.53 | 74.00 | 75.54 | 76.42 |
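One common way to produce test signals at the noise levels tabulated above is to add white Gaussian noise scaled to a target signal-to-noise ratio. The sketch below assumes that the dB values are SNRs and that the noise is additive white Gaussian; neither is confirmed by the text here, and the sine tone is only a stand-in for a recorded RF segment. The function names are hypothetical.

```python
import math
import random

def add_awgn(signal, snr_db, rng):
    """Add zero-mean Gaussian noise so that signal power / noise power matches snr_db."""
    signal_power = sum(v * v for v in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in signal]

def measured_snr_db(clean, noisy):
    """Empirical SNR in dB, computed from the clean signal and the added noise."""
    signal_energy = sum(c * c for c in clean)
    noise_energy = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10 * math.log10(signal_energy / noise_energy)

rng = random.Random(0)
# Stand-in clean signal: a sine tone, 4096 samples (not real RF data).
clean = [math.sin(2 * math.pi * 0.05 * i) for i in range(4096)]

results = {}
for snr_db in [30, 25, 20, 15, 10, 5, 0]:
    noisy = add_awgn(clean, snr_db, rng)
    results[snr_db] = measured_snr_db(clean, noisy)

for target, measured in results.items():
    print(f"target {target:>2} dB, measured {measured:.2f} dB")
```

The measured value fluctuates slightly around the target because the noise is a finite random sample; longer segments give a tighter match.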

| Signal | | | | |
|---|---|---|---|---|
| Bluetooth | 98.95 | 98.16 | 98.02 | 98.5 |
| UAS | 97.53 | 98.06 | 98.0 | 98.0 |
| WIFI | 98.53 | 93.23 | 94.23 | 93.72 |
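Per-class figures of the kind named in the introduction (accuracy, precision, sensitivity, and F1-score) are conventionally derived from a confusion matrix in a one-vs-rest fashion. The sketch below shows that computation; the confusion counts are made up for illustration and are not the paper's results, and the function name is hypothetical.

```python
def per_class_metrics(confusion, labels):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                 # class k samples predicted as something else
        fp = sum(row[k] for row in confusion) - tp  # other classes predicted as class k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        sensitivity = tp / (tp + fn) if tp + fn else 0.0  # also called recall
        denom = precision + sensitivity
        f1 = 2 * precision * sensitivity / denom if denom else 0.0
        metrics[label] = {
            "accuracy": (tp + tn) / total,
            "precision": precision,
            "sensitivity": sensitivity,
            "f1": f1,
        }
    return metrics

labels = ["Bluetooth", "UAS", "WIFI"]
# Made-up counts for illustration only; NOT taken from the paper.
confusion = [
    [90, 5, 5],
    [4, 92, 4],
    [6, 3, 91],
]

metrics = per_class_metrics(confusion, labels)
for label in labels:
    print(label, {name: round(value, 4) for name, value in metrics[label].items()})
```

Since the F1-score is the harmonic mean of precision and sensitivity, it always lies between the two, which gives a simple sanity check on any reported row.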

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alam, S.S.; Chakma, A.; Rahman, M.H.; Bin Mofidul, R.; Alam, M.M.; Utama, I.B.K.Y.; Jang, Y.M. RF-Enabled Deep-Learning-Assisted Drone Detection and Identification: An End-to-End Approach. Sensors 2023, 23, 4202. https://doi.org/10.3390/s23094202