A Multiscale Instance Segmentation Method Based on Cleaning Rubber Ball Images

Abstract

:1. Introduction

2. Related Work

- (1)

- Using FCN [29] to generate larger-resolution prototype masks which are not specific to any instance.

- (2)

- The object detection branch adds an extra head to predict the mask factor vector for instance-specific weighted encoding of the prototype mask.

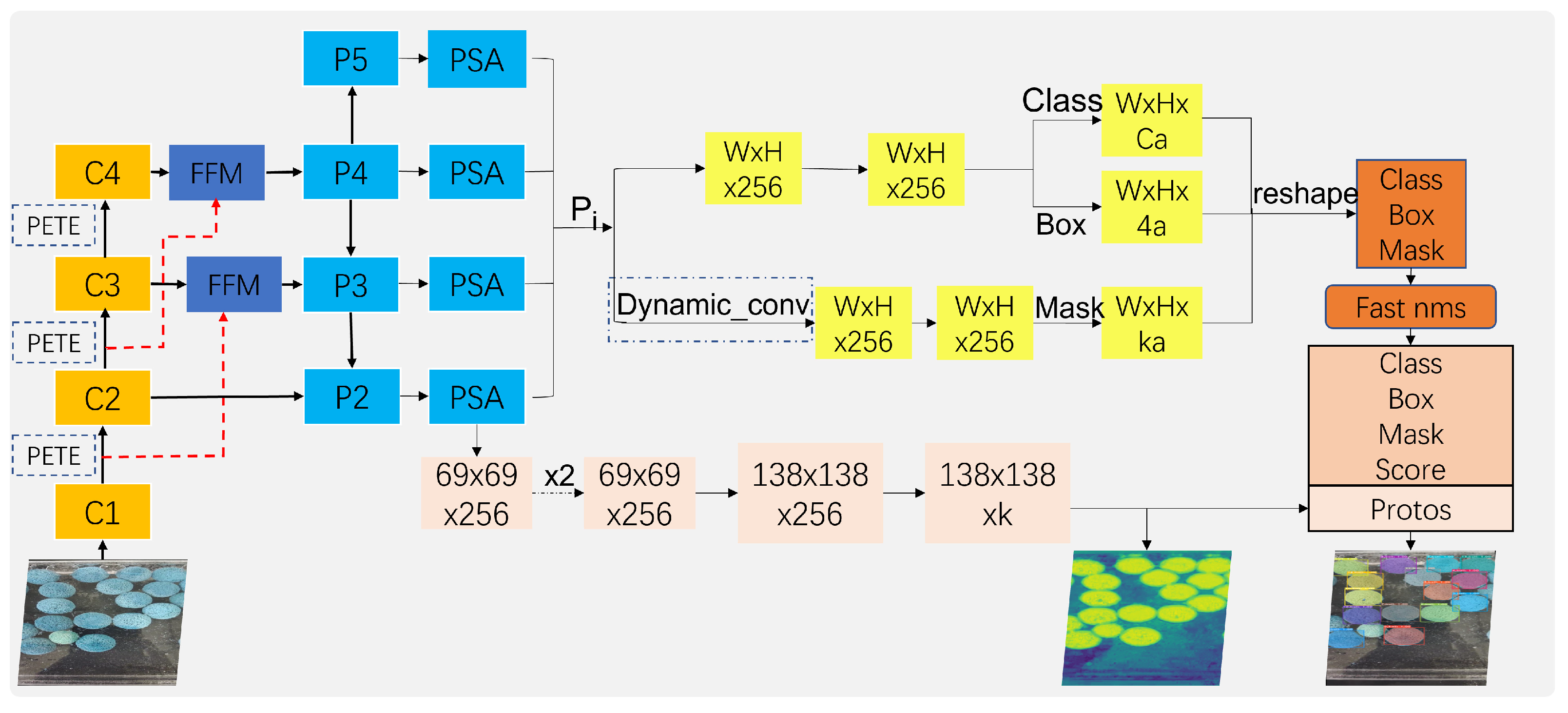

3. A Multi-Scale Feature Fusion Real-Time Instance Segmentation Model Based on Attention Mechanism

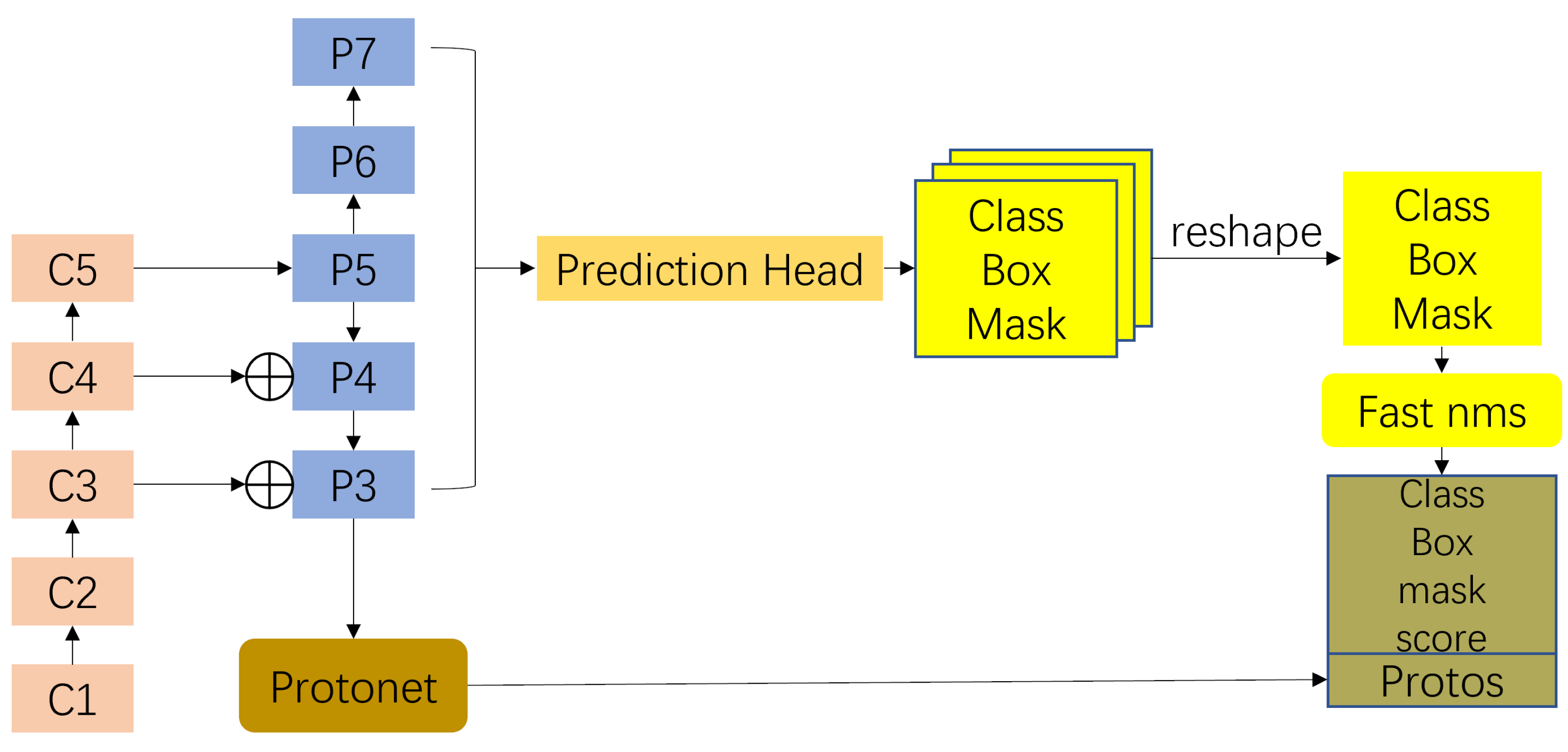

3.1. Yolact Network

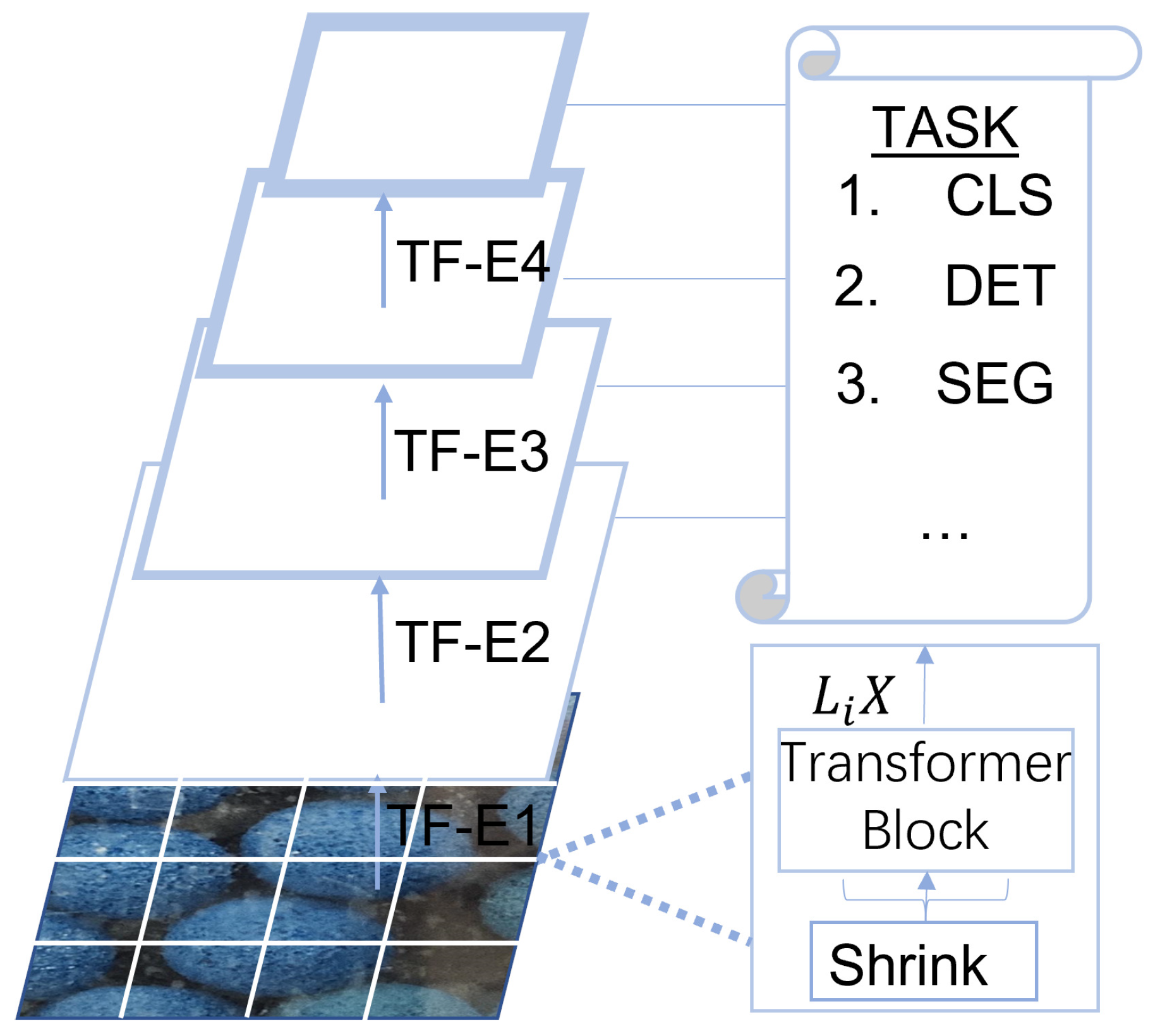

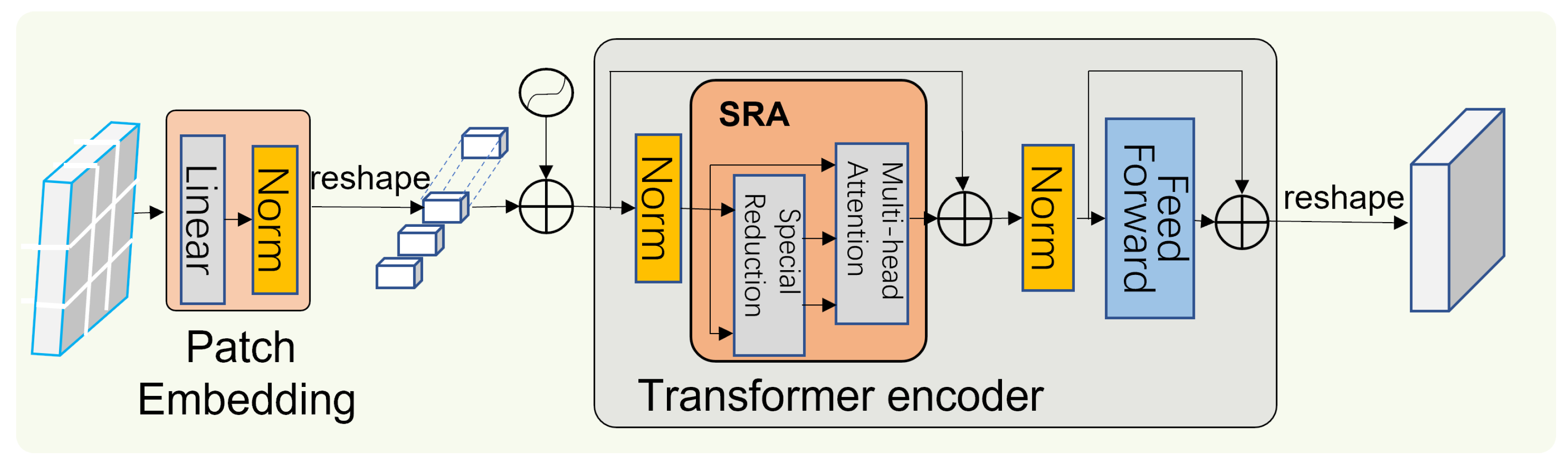

3.2. Pyramid Vision Transformer

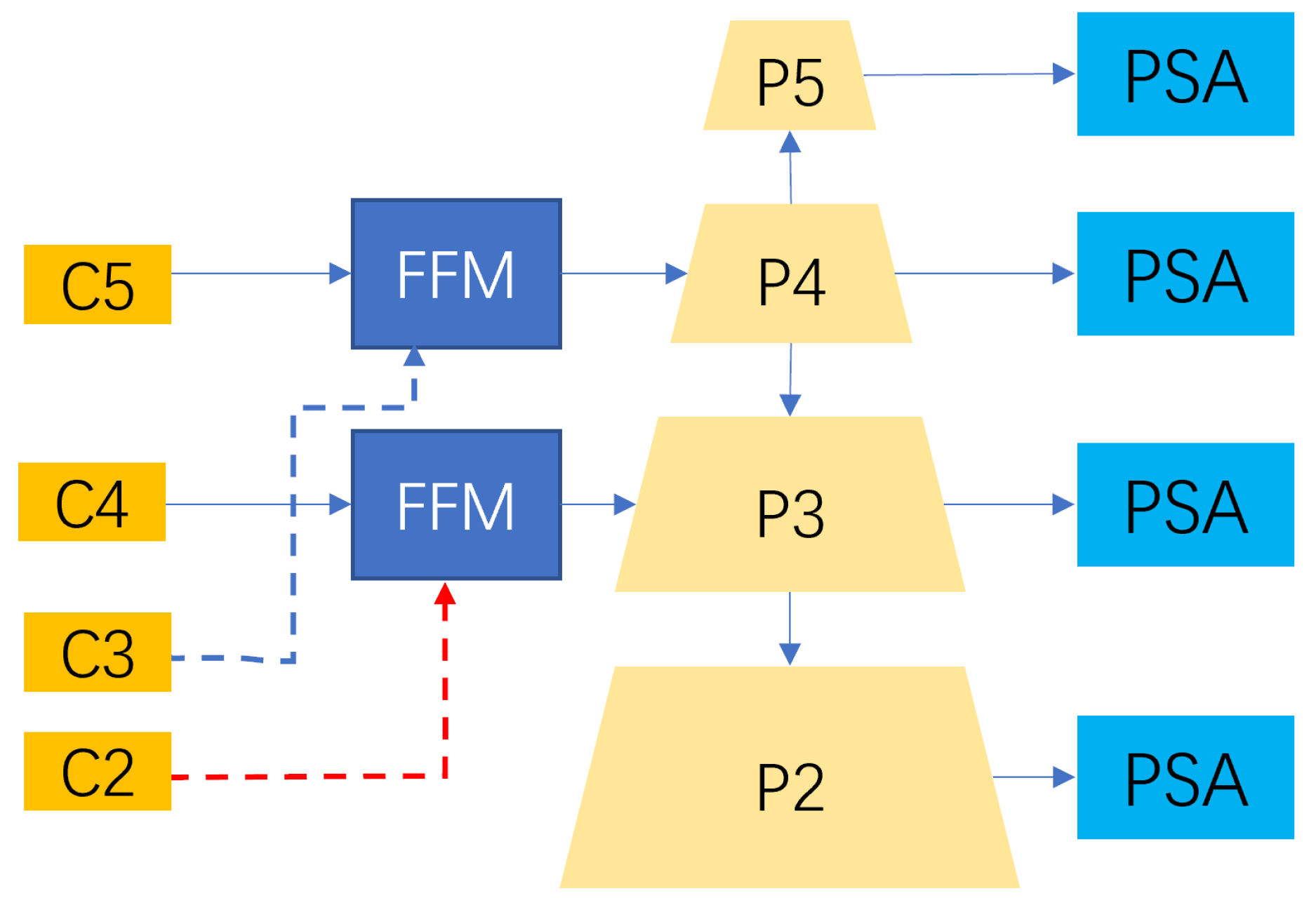

3.3. Improved FPN in the Model

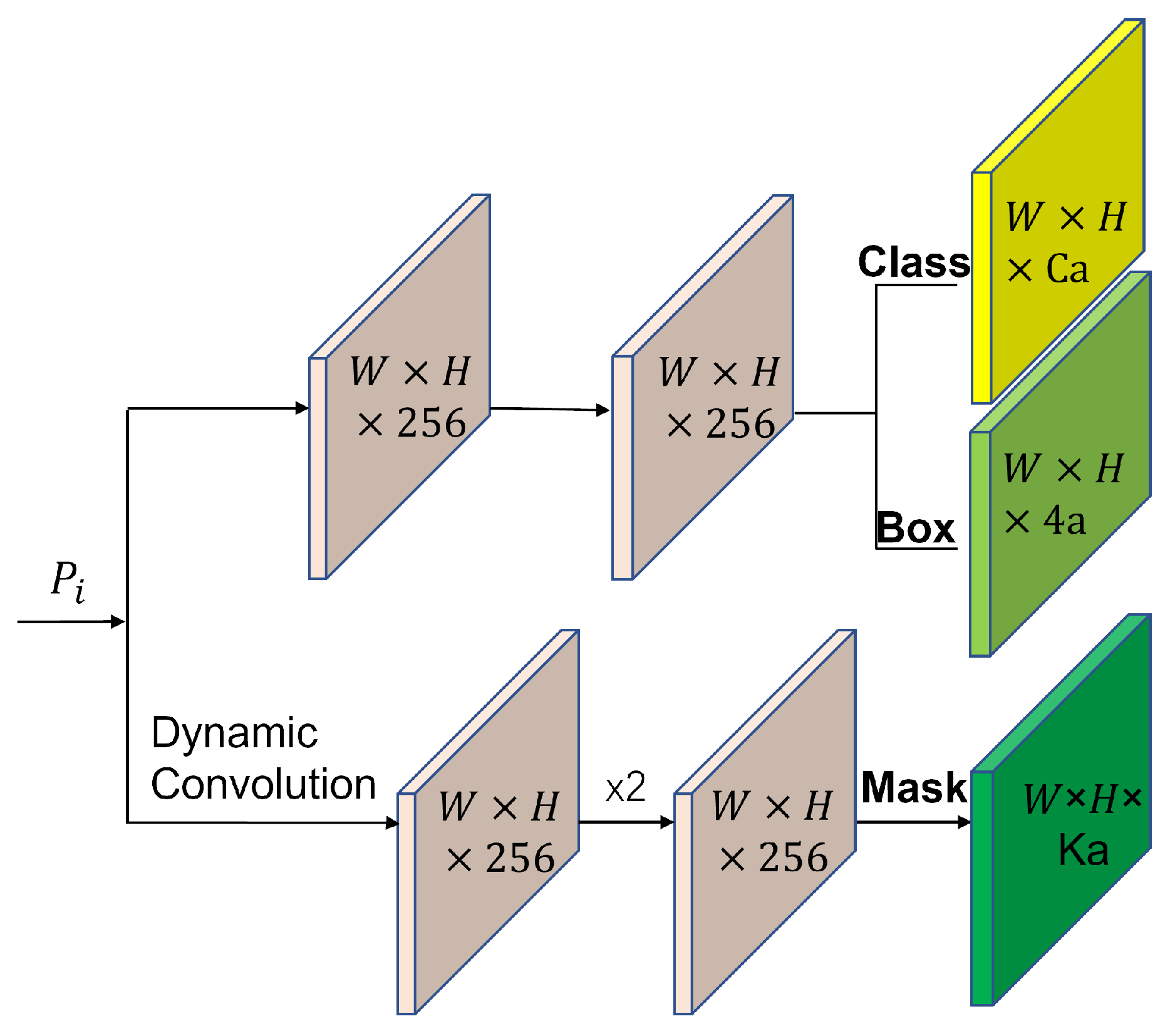

3.4. Prediction Head in Model

3.5. SIoU Loss Function in Model

4. Experiments and Results

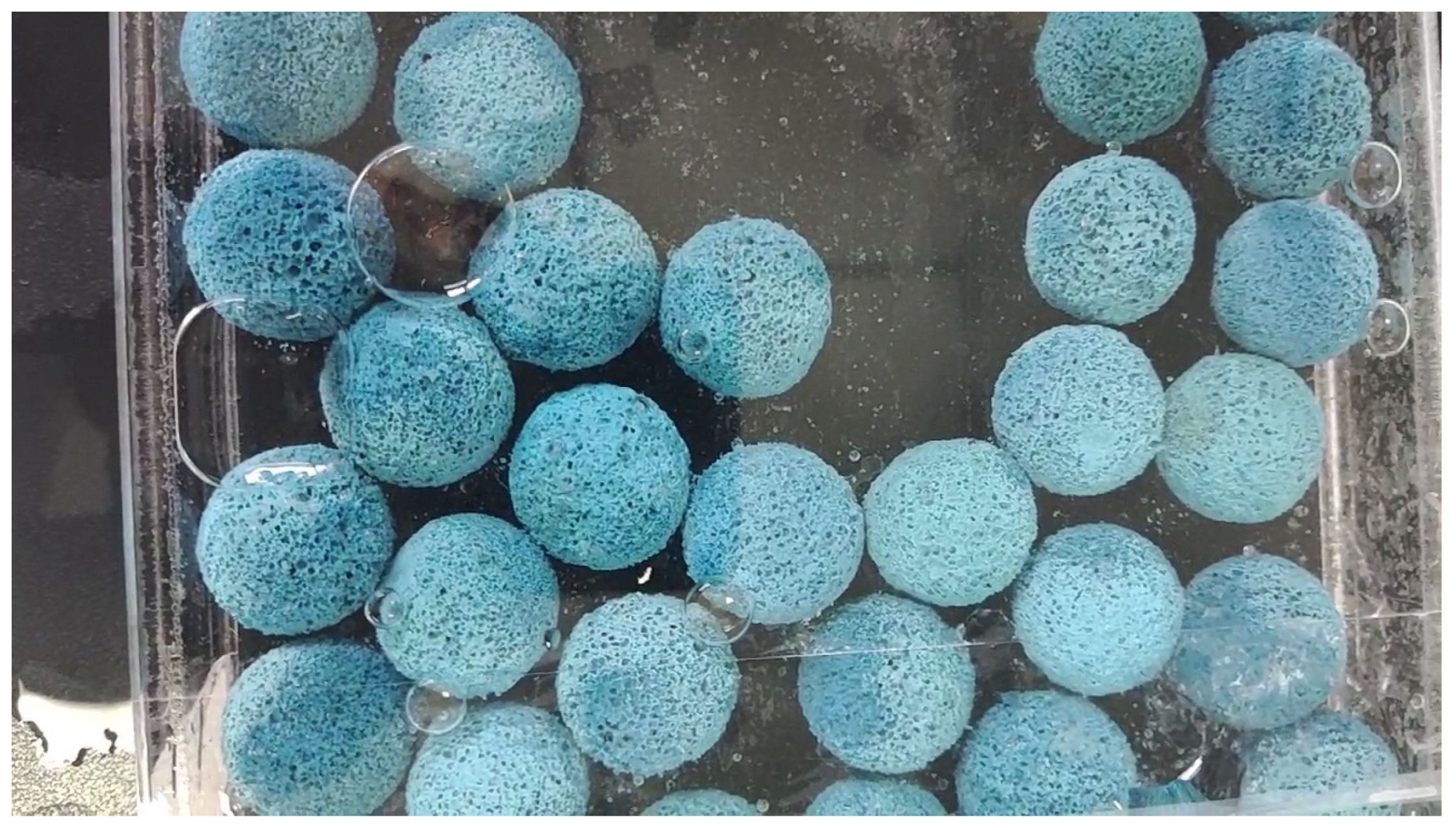

4.1. Dataset and Experimental Environment

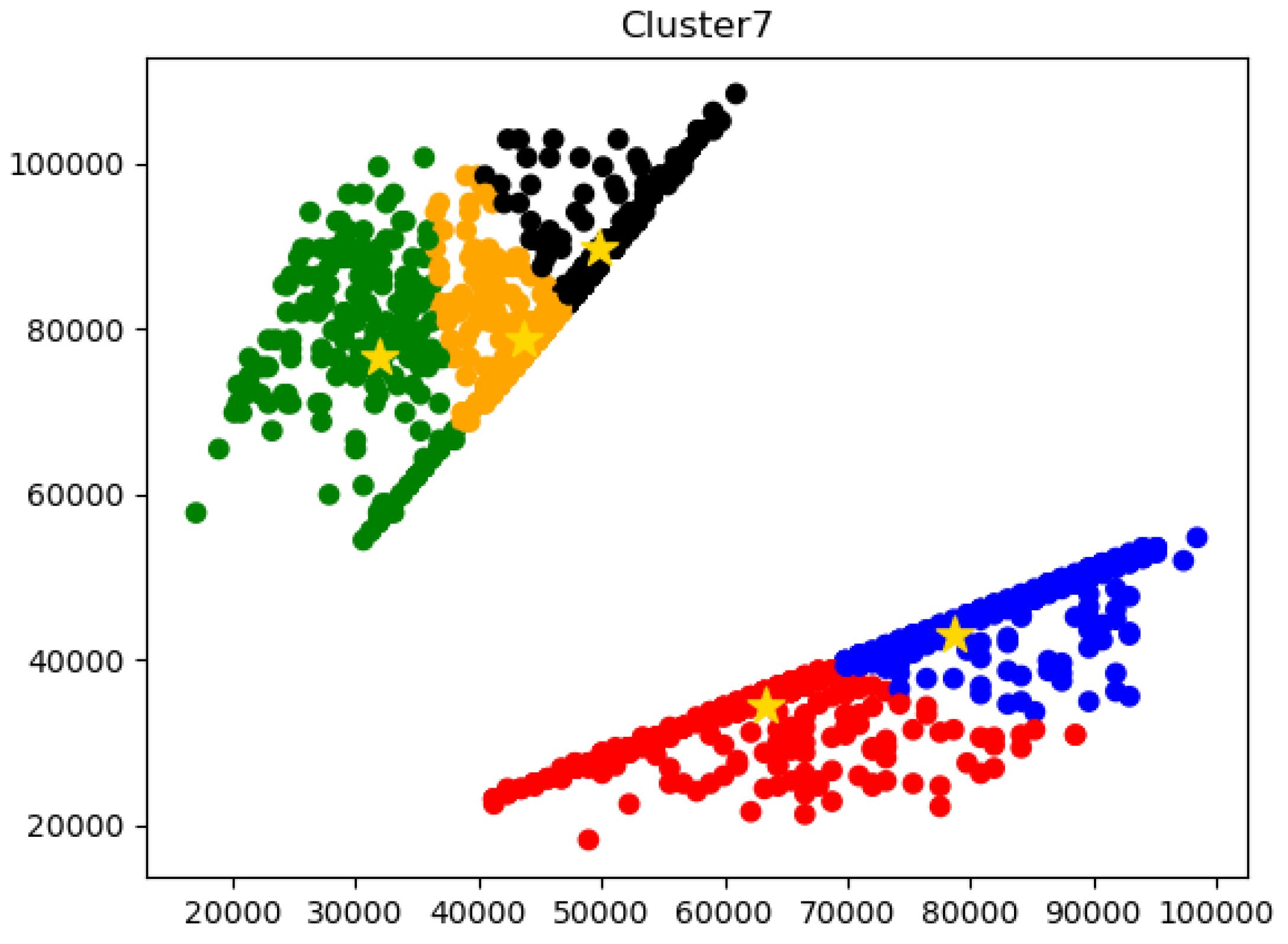

4.2. Generate Anchor Box

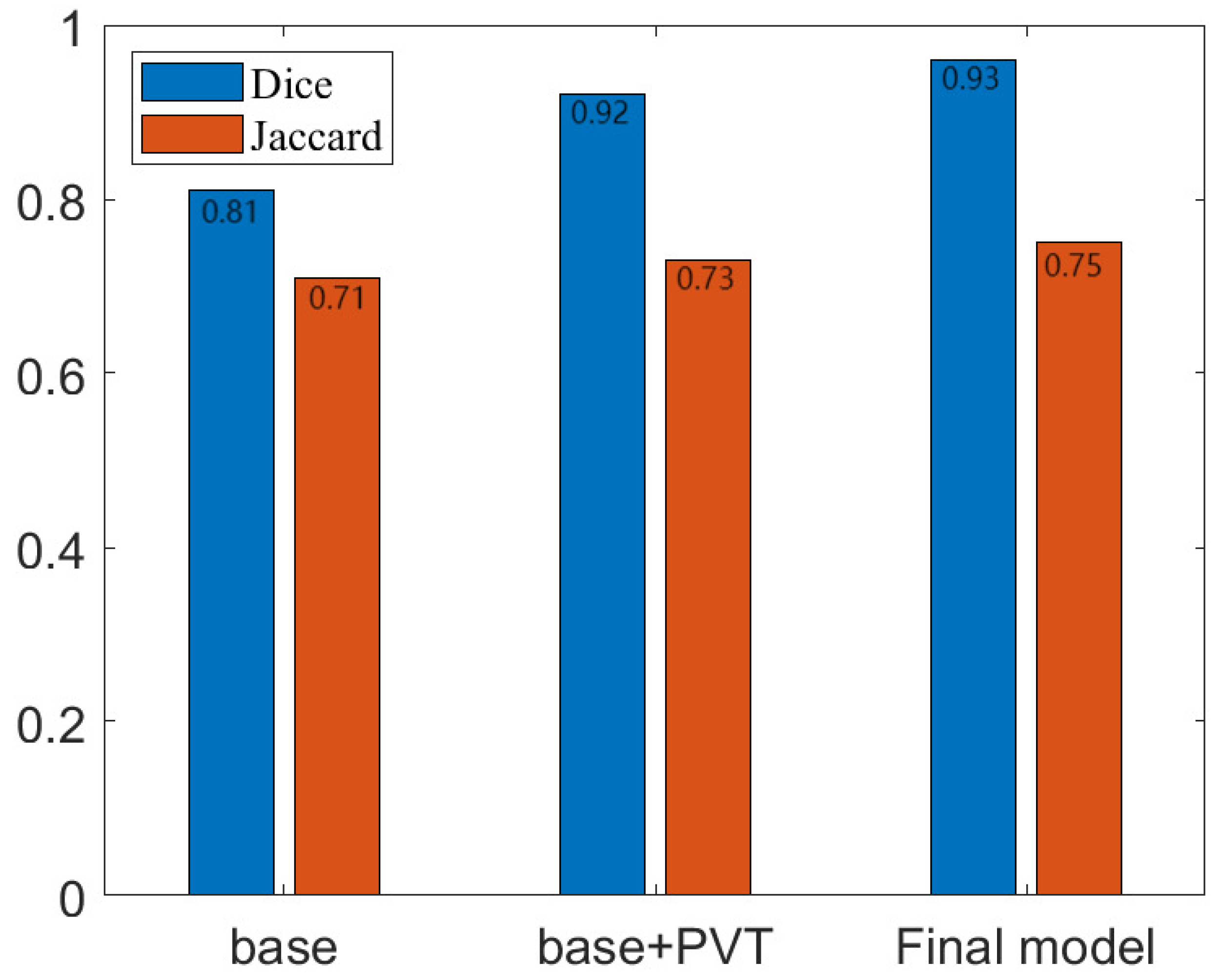

4.3. Ablation Experiment

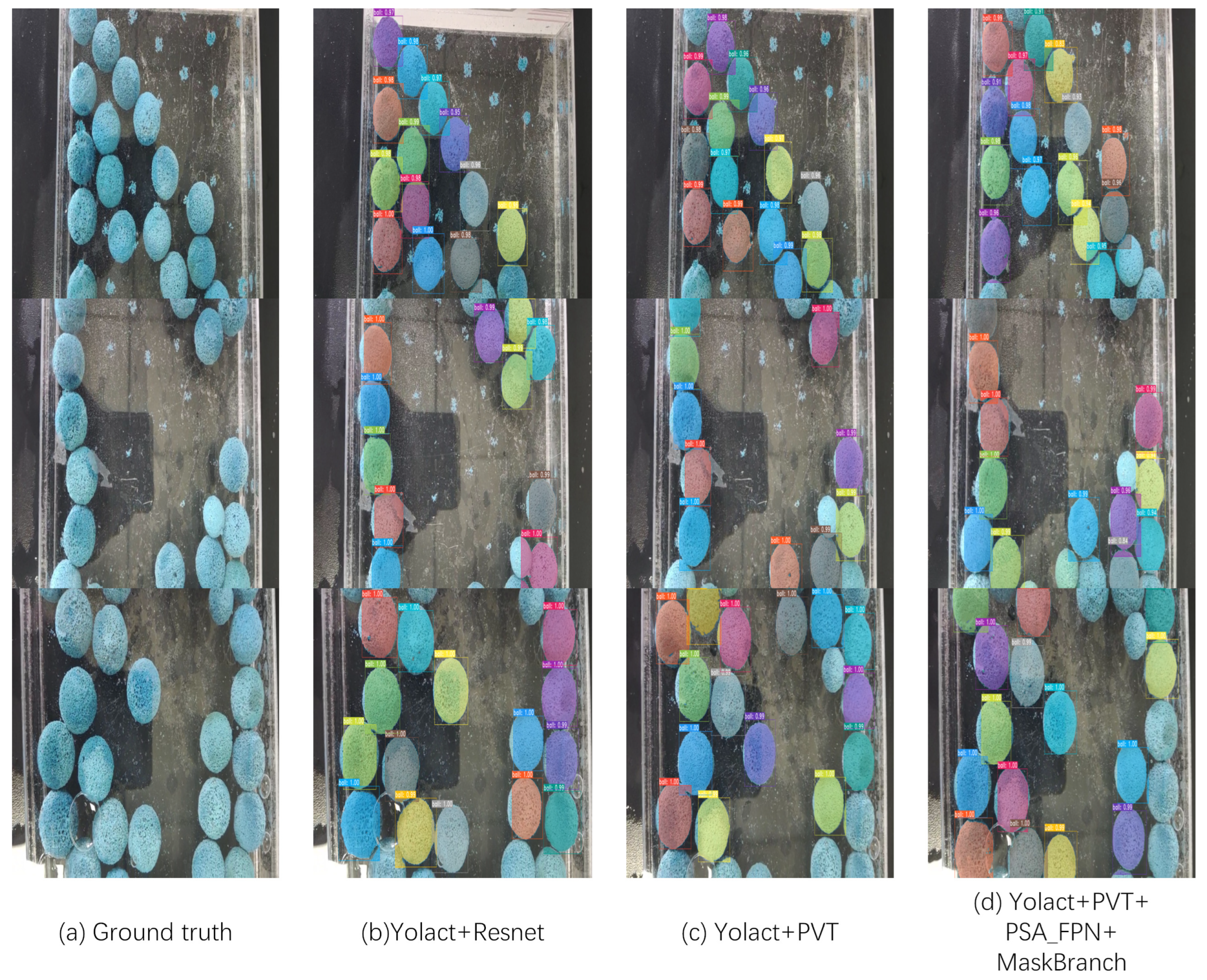

4.4. Analysis of Network Model Test Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Sample Availability

References

- Yan, X.; Zhou, L.; Zhang, W. On-line Cleaning Technology of rubber ball in Circulating Water Cooler. Petrochem. Equip. 2004, 05, 60–61. [Google Scholar]

- Yang, Y.; Luo, W.; Yu, B.; Zhu, H. Technical Analysis and Research on the Improvement of Condenser Rubber Ball Cleaning System. East China Electr. Power 2007, 04, 56–58. [Google Scholar]

- Bezdek, J.C.; Ehrlich, R.; Full, W. FCM: The fuzzy c-means clustering algorithm. Comput. Geosci. 1984, 10, 191–203. [Google Scholar] [CrossRef]

- Wang, Y.; Fu, L.; Liu, L.; Nian, R.; Yan, T.; Lendasse, A. Stable underwater image segmentation in high quality via MRF model. In Proceedings of the OCEANS 2015-MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Liu, Y.; Li, H. Design of Refined Segmentation Model for Underwater Images. In Proceedings of the 2020 5th International Conference on Communication, Image and Signal Processing (CCISP), Chengdu, China, 13–15 November 2020; pp. 282–287. [Google Scholar] [CrossRef]

- Wei, W.; Shen, X.; Qian, Q. A Local Threshold Segmentation Method Based on Multi-direction Grayscale Wave. In Proceedings of the 2010 Fifth International Conference on Frontier of Computer Science and Technology, Changchun, China, 18–22 August 2010; pp. 166–170. [Google Scholar] [CrossRef]

- He, K.; Georgia, G.; Piotr, D.; Ross, G. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 154–196. [Google Scholar]

- Raducu, G.; Cristian, Z.; Foșalău, C.; Marcin, S.; David, C. Faster R-CNN: An Approach to Real-Time Object Detection, 2018. In Proceedings of the 2018 International Conference and Exposition on Electrical and Power Engineering (EPE), Iasi, Romania, 18–19 October 2018; pp. 0165–0168. [Google Scholar]

- Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6402–6411. [Google Scholar] [CrossRef]

- Li, B.; Wang, T.; Yang, K.; Wang, Y.; Zhao, Z. Infrared image instance segmentation algorithm of power substation equipment based on improved Mask R-CNN. J. North China Electr. Power Univ. (Nat. Sci. Ed.) 2023, 50, 91–99. [Google Scholar]

- Guo, Z.; Guo, D.; Gu, Z.; Zheng, H.; Zheng, B. Unsupervised Underwater Image Clearness via Transformer. In Proceedings of the OCEANS 2022-Chennai, Chennai, India, 21–24 February 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Xu, X.; Qin, Y.; Xi, D.; Ming, R.; Xia, J. MulTNet: A Multi-Scale Transformer Network for Marine Image Segmentation toward Fishing. Sensors 2022, 22, 7224. [Google Scholar] [CrossRef]

- Huang, A.; Zhong, G.; Li, H.; Daniel, P. Underwater Object Detection Using Restructured SSD. Artif. Intell. 2022, 13604, 526–537. [Google Scholar] [CrossRef]

- Bolya, D.; Zhou, C.; Xiao, F.; Yong, Y. YOLACT: Real-Time Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9156–9165. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Fan, D.; Song, K. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 10–17 October 2021; pp. 548–558. [Google Scholar]

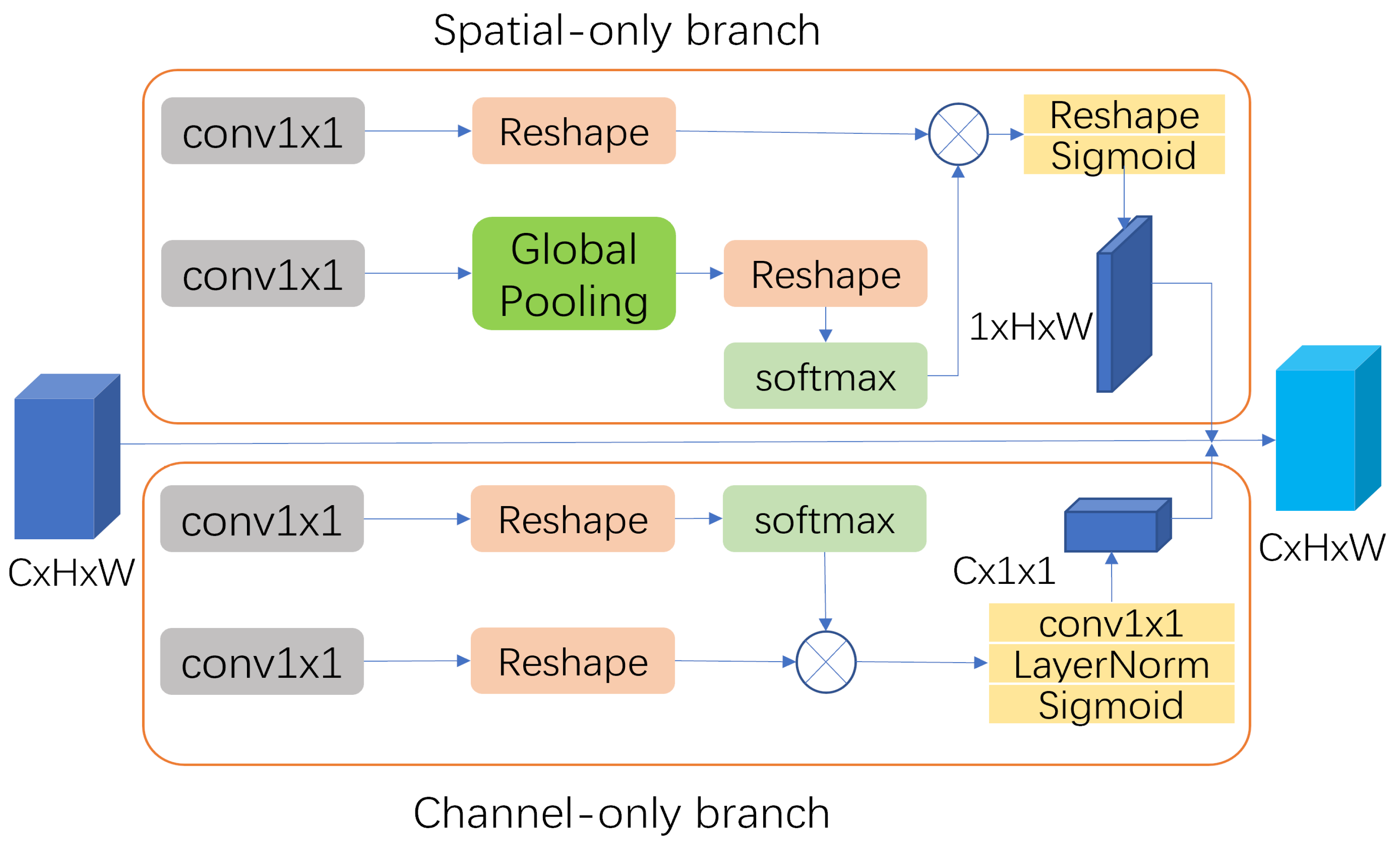

- Liu, H.; Liu, F.; Fan, X.; Huang, D. Polarized Self-Attention: Towards High-quality Pixel-wise Regression. arXiv 2021, arXiv:2107.00782. [Google Scholar]

- Lin, T.; Dollar, P.; Girshick, G.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740. [Google Scholar]

- Zhou, D.; Liu, X.; Liu, J. Application of PLC in automatic on-line cleaning device of condenser rubber ball. Electromech. Eng. Technol. 2011, 6, 109–111. [Google Scholar]

- Han, J. Study on Numerical Simulation and Control System of New Type Condenser Rubber Ball Cleaning System; North China Electric Power University: Beijing, China, 2019. [Google Scholar]

- Li, Z.; Li, X.; Zheng, W.; Li, B. Research on automatic monitoring device for rubber ball cleaning. Steam Turbine Technol. 2008, 5, 65–367. [Google Scholar]

- Wang, H.; Dong, L.; Song, W.; Zhao, X.; Xia, J.; Liu, T. Improved U-Net-Based Novel Segmentation Algorithm for Underwater Mineral Image. Intelligent Automation. Soft Comput. 2022, 32, 1573–1586. [Google Scholar] [CrossRef]

- Liang, Y.; Zhu, X.; Zhang, J. MiTU-Net: An Efficient Mix Transformer U-like Network for Forward-looking Sonar Image Segmentation. In Proceedings of the 2022 IEEE 2nd International Conference on Computer Communication and Artificial Intelligence (CCAI), Beijing, China, 6–8 May 2022; pp. 149–154. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, J.; Gomez, Z.; Kaiser, L.; Polosukhin, I. Attention is All you Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Rajamani, K.; Siebert, H.; Heinrich, M. Dynamic deformable attention network (DDANet) for COVID-19 lesions semantic segmentation. J. Biomed. Inform. 2021, 115, 103816. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Wang, J.; Guo, P. Eye-UNet: A UNet-based network with attention mechanism for low-quality human eye image segmentation. Signal Image Video Process. 2022. [Google Scholar] [CrossRef]

- Lu, F.; Tang, C.; Liu, T.; Zhang, Z.; Li, L. Multi-Attention Segmentation Networks Combined with the Sobel Operator for Medical Images. Sensors 2023, 23, 2546. [Google Scholar] [CrossRef]

- Liu, K.; Peng, L.; Tang, S. Underwater Object Detection Using TC-YOLO with Attention Mechanisms. Sensors 2023, 23, 2567. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-shot Learning. arXiv 2017, arXiv:1703.05175. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Huang, G.; Liu, Z.; Weinberger, K. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar]

- Fu, C.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A. DSSD: Deconvolutional Single Shot Detector. arXiv 2017, arXiv:1701.06659. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Wu, Y.; Li, J. YOLOv4 with Deformable-Embedding-Transformer Feature Extractor for Exact Object Detection in Aerial Imagery. Sensors 2023, 23, 2522. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Gao, Z.; Yang, G.; Li, E.; Liang, Z. Novel Feature Fusion Module-Based Detector for Small Insulator Defect Detection. IEEE Sens. J. 2021, 21, 16807–16814. [Google Scholar] [CrossRef]

- Rezatofighi, S.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666. [Google Scholar]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

| Model | Box (%) | ||

|---|---|---|---|

| Yolact + VGG | 61.97 | 28.2 | 33.1 |

| Yolact + Darknet | 62.53 | 28.7 | 34.4 |

| Yolact + Resnet | 68.02 | 29.3 | 35.7 |

| Yolact + PVT | 71.99 | 32.5 | 35.3 |

| Yolact + PVT + PSA_FPN | 73.14 | 33.2 | 34.3 |

| Final model | 79.30 | 36.7 | 33.6 |

| Model | Box | Mask |

|---|---|---|

| Mask R-CNN | 35.7 | 4.8 |

| BlendMask | 41.3 | 22.3 |

| SOLOv1 | 30.8 | 32.5 |

| SOLOv2 | 33.1 | 35.7 |

| Yolact | 28.2 | 37.1 |

| final model | 36.7 | 35.2 |

| AP | Box | Mask | |

|---|---|---|---|

| IoU | |||

| all | 78.11 | 72.74 | |

| 0.50 | 92.10 | 92.10 | |

| 0.60 | 90.11 | 90.11 | |

| 0.65 | 90.11 | 90.11 | |

| 0.70 | 90.11 | 90.11 | |

| 0.75 | 89.10 | 89.10 | |

| 0.85 | 86.07 | 81.71 | |

| 0.90 | 68.61 | 51.44 | |

| 0.95 | 29.24 | 9.65 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, E.; Tian, Y.; Liang, E.; Wang, J.; Zhang, Y. A Multiscale Instance Segmentation Method Based on Cleaning Rubber Ball Images. Sensors 2023, 23, 4261. https://doi.org/10.3390/s23094261

Su E, Tian Y, Liang E, Wang J, Zhang Y. A Multiscale Instance Segmentation Method Based on Cleaning Rubber Ball Images. Sensors. 2023; 23(9):4261. https://doi.org/10.3390/s23094261

Chicago/Turabian StyleSu, Erjie, Yongzhi Tian, Erjun Liang, Jiayu Wang, and Yibo Zhang. 2023. "A Multiscale Instance Segmentation Method Based on Cleaning Rubber Ball Images" Sensors 23, no. 9: 4261. https://doi.org/10.3390/s23094261

APA StyleSu, E., Tian, Y., Liang, E., Wang, J., & Zhang, Y. (2023). A Multiscale Instance Segmentation Method Based on Cleaning Rubber Ball Images. Sensors, 23(9), 4261. https://doi.org/10.3390/s23094261