Using Object Detection Technology to Identify Defects in Clothing for Blind People

Abstract

1. Introduction

2. Related Work

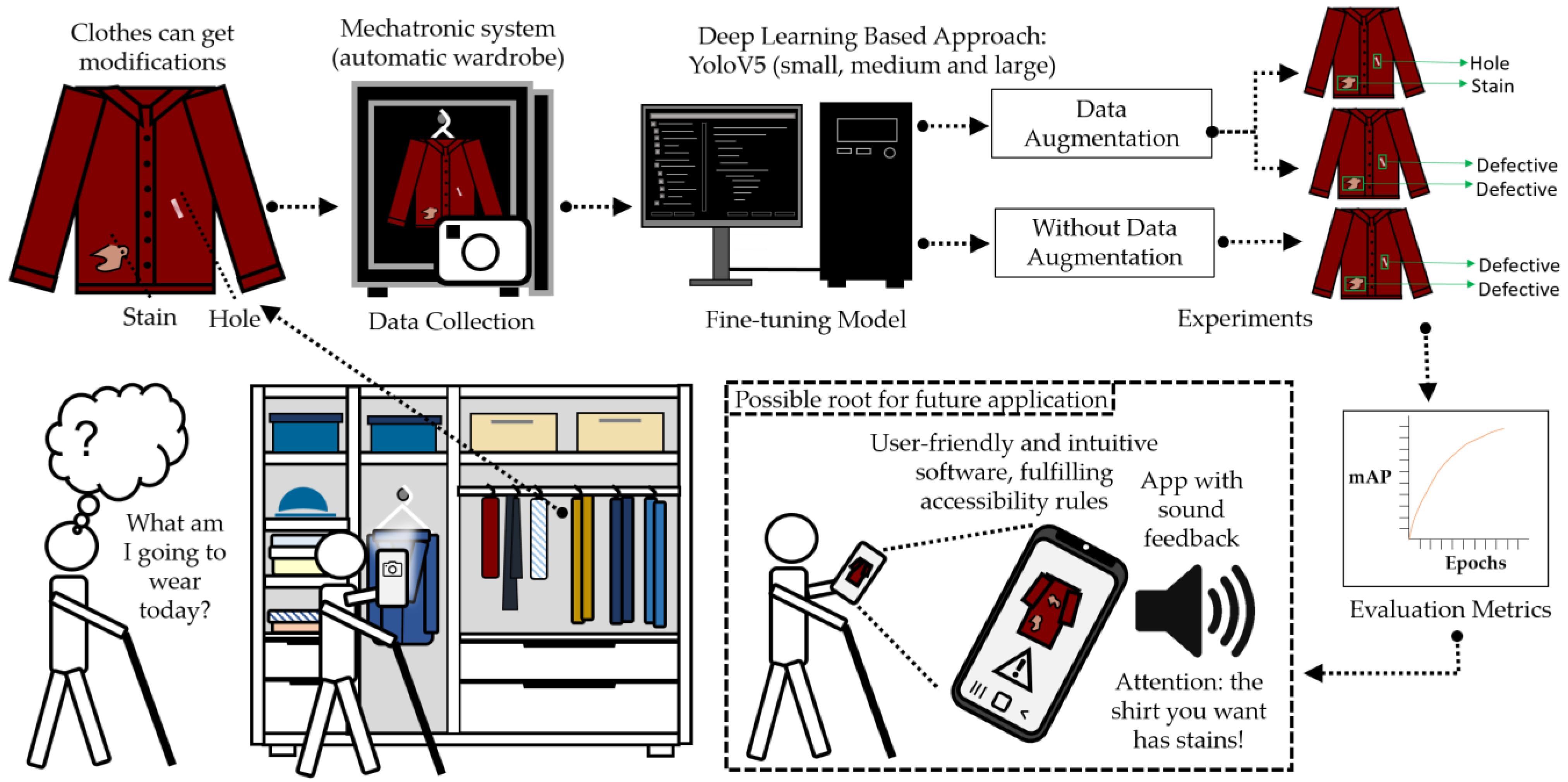

3. Methodology

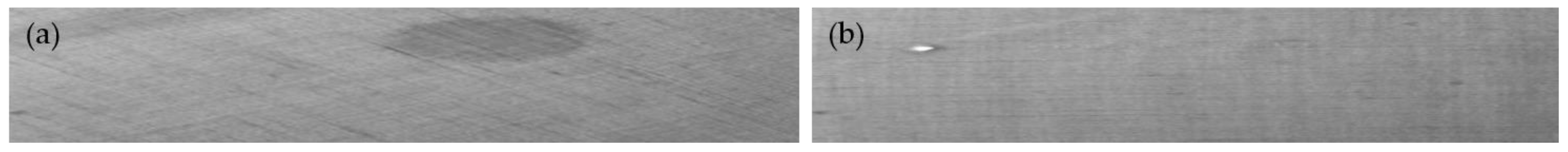

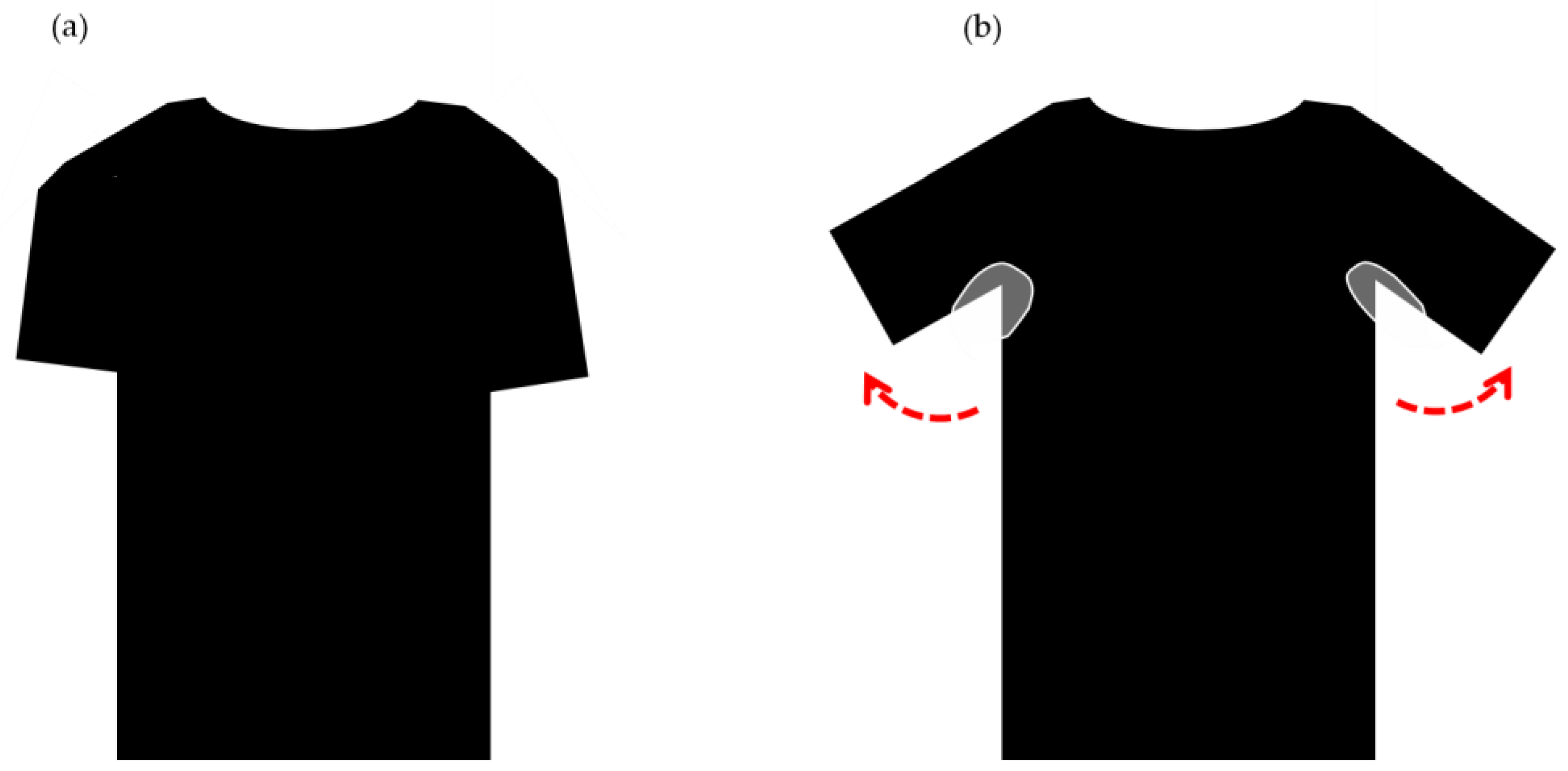

3.1. Data Collection

3.2. Data Augmentation

3.3. Deep Learning-Based Approach

3.4. Evaluation Metrics

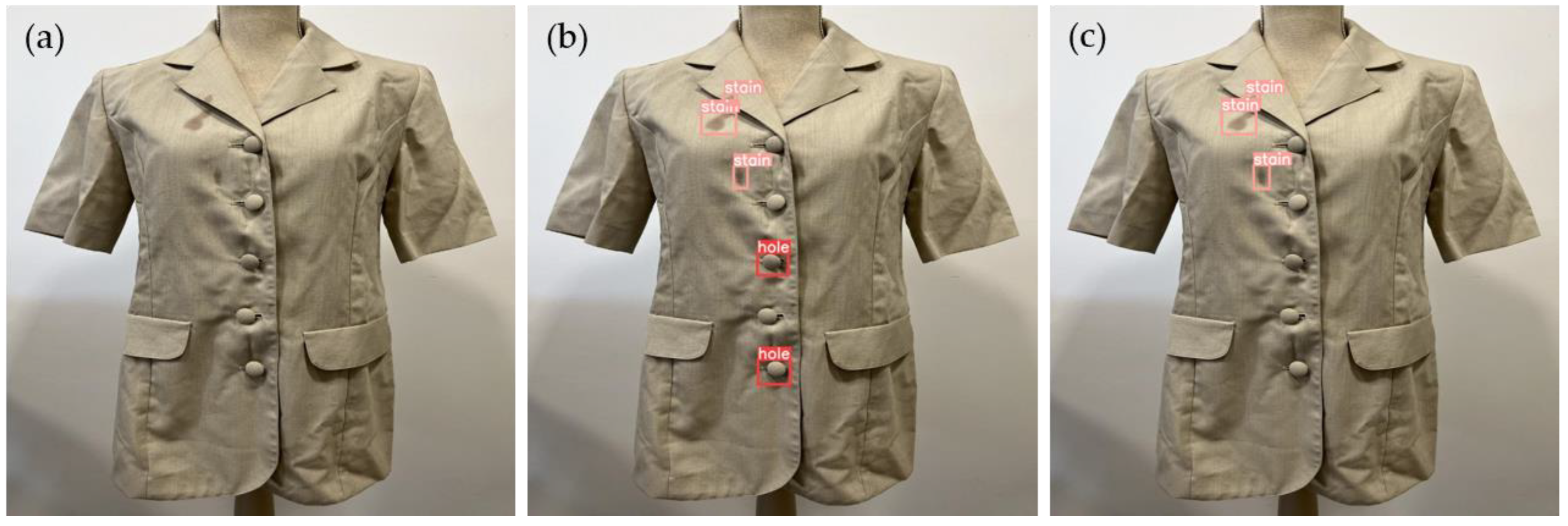

4. Results and Discussion

4.1. Clothing Defect Detection

4.2. Clothing Defect Detection with Data Augmentation

4.3. Clothing Defect Detection and Classification with Data Augmentation

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Chia, E.-M.; Mitchell, P.; Ojaimi, E.; Rochtchina, E.; Wang, J.J. Assessment of vision-related quality of life in an older population subsample: The Blue Mountains Eye Study. Ophthalmic Epidemiol. 2006, 13, 371–377.

2. Langelaan, M.; de Boer, M.R.; van Nispen, R.M.A.; Wouters, B.; Moll, A.C.; van Rens, G.H.M.B. Impact of visual impairment on quality of life: A comparison with quality of life in the general population and with other chronic conditions. Ophthalmic Epidemiol. 2007, 14, 119–126.

3. Steinmetz, J.D.; Bourne, R.A.A.; Briant, P.S.; Flaxman, S.R.; Taylor, H.R.B.; Jonas, J.B.; Abdoli, A.A.; Abrha, W.A.; Abualhasan, A.; Abu-Gharbieh, E.G.; et al. Causes of blindness and vision impairment in 2020 and trends over 30 years, and prevalence of avoidable blindness in relation to VISION 2020: The Right to Sight: An analysis for the Global Burden of Disease Study. Lancet Glob. Health 2021, 9, e144–e160.

4. Bhowmick, A.; Hazarika, S.M. An insight into assistive technology for the visually impaired and blind people: State-of-the-art and future trends. J. Multimodal User Interfaces 2017, 11, 149–172.

5. Messaoudi, M.D.; Menelas, B.-A.J.; Mcheick, H. Review of Navigation Assistive Tools and Technologies for the Visually Impaired. Sensors 2022, 22, 7888.

6. Elmannai, W.; Elleithy, K. Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors 2017, 17, 565.

7. Johnson, K.; Lennon, S.J.; Rudd, N. Dress, body and self: Research in the social psychology of dress. Fash. Text. 2014, 1, 20.

8. Adam, H.; Galinsky, A.D. Enclothed cognition. J. Exp. Soc. Psychol. 2012, 48, 918–925.

9. Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep Learning for Generic Object Detection: A Survey. Int. J. Comput. Vis. 2020, 128, 261–318.

10. Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

11. Rocha, D.; Carvalho, V.; Oliveira, E.; Goncalves, J.; Azevedo, F. MyEyes-automatic combination system of clothing parts to blind people: First insights. In Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH), Perth, WA, Australia, 2–4 April 2017.

12. Rocha, D.; Carvalho, V.; Oliveira, E. MyEyes—Automatic Combination System of Clothing Parts to Blind People: Prototype Validation. In Proceedings of the SENSORDEVICES’ 2017 Conference, Rome, Italy, 10–14 September 2017.

13. Rocha, D.; Carvalho, V.; Gonçalves, J.; Azevedo, F.; Oliveira, E. Development of an Automatic Combination System of Clothing Parts for Blind People: MyEyes. Sens. Transducers 2018, 219, 26–33.

14. Rocha, D.; Carvalho, V.; Soares, F.; Oliveira, E. Extracting Clothing Features for Blind People Using Image Processing and Machine Learning Techniques: First Insights. In VipIMAGE 2019; Tavares, J.M.R.S., Natal Jorge, R.M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 411–418.

15. Rocha, D.; Carvalho, V.; Soares, F.; Oliveira, E. A Model Approach for an Automatic Clothing Combination System for Blind People. In Proceedings of the Design, Learning, and Innovation; Brooks, E.I., Brooks, A., Sylla, C., Møller, A.K., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 74–85.

16. Rocha, D.; Soares, F.; Oliveira, E.; Carvalho, V. Blind People: Clothing Category Classification and Stain Detection Using Transfer Learning. Appl. Sci. 2023, 13, 1925.

17. Ngan, H.Y.T.; Pang, G.K.H.; Yung, N.H.C. Automated fabric defect detection—A review. Image Vis. Comput. 2011, 29, 442–458.

18. Li, C.; Li, J.; Li, Y.; He, L.; Fu, X.; Chen, J. Fabric Defect Detection in Textile Manufacturing: A Survey of the State of the Art. Secur. Commun. Netw. 2021, 2021, 9948808.

19. Kahraman, Y.; Durmuşoğlu, A. Deep learning-based fabric defect detection: A review. Text. Res. J. 2022, 93, 1485–1503.

20. Lu, Y. Artificial intelligence: A survey on evolution, models, applications and future trends. J. Manag. Anal. 2019, 6, 1–29.

21. Roslan, M.I.B.; Ibrahim, Z.; Abd Aziz, Z. Real-Time Plastic Surface Defect Detection Using Deep Learning. In Proceedings of the 2022 IEEE 12th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 21–22 May 2022; pp. 111–116.

22. Lv, B.; Zhang, N.; Lin, X.; Zhang, Y.; Liang, T.; Gao, X. Surface Defects Detection of Car Door Seals Based on Improved YOLO V3. J. Phys. Conf. Ser. 2021, 1986, 12127.

23. Ding, F.; Zhuang, Z.; Liu, Y.; Jiang, D.; Yan, X.; Wang, Z. Detecting Defects on Solid Wood Panels Based on an Improved SSD Algorithm. Sensors 2020, 20, 5315.

24. Tabernik, D.; Šela, S.; Skvarč, J.; Skočaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2020, 31, 759–776.

25. Zhang, H.; Zhang, L.; Li, P.; Gu, D. Yarn-dyed Fabric Defect Detection with YOLOV2 Based on Deep Convolution Neural Networks. In Proceedings of the 2018 IEEE 7th Data Driven Control and Learning Systems Conference (DDCLS), Enshi, China, 25–27 May 2018; pp. 170–174.

26. Mei, S.; Wang, Y.; Wen, G. Automatic Fabric Defect Detection with a Multi-Scale Convolutional Denoising Autoencoder Network Model. Sensors 2018, 18, 1604.

27. He, X.; Wu, L.; Song, F.; Jiang, D.; Zheng, G. Research on Fabric Defect Detection Based on Deep Fusion DenseNet-SSD Network. In Proceedings of the International Conference on Wireless Communication and Sensor Networks, Association for Computing Machinery, New York, NY, USA, 13–15 May 2020; pp. 60–64.

28. Jing, J.; Wang, Z.; Rätsch, M.; Zhang, H. Mobile-Unet: An efficient convolutional neural network for fabric defect detection. Text. Res. J. 2022, 92, 30–42.

29. Han, Y.-J.; Yu, H.-J. Fabric Defect Detection System Using Stacked Convolutional Denoising Auto-Encoders Trained with Synthetic Defect Data. Appl. Sci. 2020, 10, 2511.

30. Mohammed, K.M.C.; Srinivas Kumar, S.; Prasad, G. Defective texture classification using optimized neural network structure. Pattern Recognit. Lett. 2020, 135, 228–236.

31. Xie, H.; Wu, Z. A Robust Fabric Defect Detection Method Based on Improved RefineDet. Sensors 2020, 20, 4260.

32. Huang, Y.; Jing, J.; Wang, Z. Fabric Defect Segmentation Method Based on Deep Learning. IEEE Trans. Instrum. Meas. 2021, 70, 5005715.

33. Kahraman, Y.; Durmuşoğlu, A. Classification of Defective Fabrics Using Capsule Networks. Appl. Sci. 2022, 12, 5285.

34. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

35. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016.

36. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

37. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

38. GitHub—Ultralytics/yolov5: YOLOv5 in PyTorch > ONNX > CoreML > TFLite. Available online: https://github.com/ultralytics/yolov5 (accessed on 3 March 2023).

39. Nakaguchi, V.M.; Ahamed, T. Development of an Early Embryo Detection Methodology for Quail Eggs Using a Thermal Micro Camera and the YOLO Deep Learning Algorithm. Sensors 2022, 22, 5820.

40. Idrissi, M.; Hussain, A.; Barua, B.; Osman, A.; Abozariba, R.; Aneiba, A.; Asyhari, T. Evaluating the Forest Ecosystem through a Semi-Autonomous Quadruped Robot and a Hexacopter UAV. Sensors 2022, 22, 5497.

41. Rocha, D.; Carvalho, V.; Soares, F.; Oliveira, E. Design of a Smart Mechatronic System to Combine Garments for Blind People: First Insights. In Proceedings of the IoT Technologies for HealthCare; Garcia, N.M., Pires, I.M., Goleva, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 52–63.

42. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

43. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

44. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

45. Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

46. Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

47. Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

| Author | Year | Method | Dataset | Defect Classes | Metrics |
|---|---|---|---|---|---|
| Zhang et al. [25] | 2018 | DL object detection (YOLOv2) | Collected dataset: 276 manually labeled defect images | 3 | IoU: 0.667 |
| Mei et al. [26] | 2018 | Multiscale convolutional denoising autoencoder network model | Fabrics dataset: ca. 2000 samples of garments and fabrics | - | Accuracy: 83.8% |
| | | | KTH-TIPS | - | Accuracy: 85.2% |
| | | | Kylberg Texture: database of 28 texture classes | - | Accuracy: 80.3% |
| | | | Collected dataset: ms-Texture | - | Accuracy: 84.0% |
| He et al. [27] | 2020 | DenseNet-SSD | Collected dataset: 2072 images | 6 | mAP: 78.6% |
| Jing et al. [28] | 2020 | DL segmentation (Mobile-Unet) | Yarn-dyed Fabric Images (YFI): 1340 images from a PRC textile factory | 4 | IoU: 0.92; F1: 0.95 |
| | | | Fabric Images (FI): 106 images provided by the Industrial Automation Research Laboratory of the Department of Electrical and Electronic Engineering at Hong Kong University | 6 | IoU: 0.70; F1: 0.82 |
| Han et al. [29] | 2020 | Stacked convolutional autoencoders | Synthetic and collected dataset | - | F1: 0.763 |
| Mohammed et al. [30] | 2020 | Multilayer perceptron with an LM algorithm | Collected dataset: 217 images | 11 | Accuracy: 97.85% |
| Xie et al. [31] | 2020 | Improved RefineDet | TILDA dataset: 3200 images; only 4 of the 8 classes were used, resulting in 1597 defect images | 4 of 8 | mAP: 80.2%; F1: 82.1% |
| | | | Hong Kong patterned textures database: 82 defective images | 6 | mAP: 87.0%; F1: 81.8% |
| | | | DAGM2007 dataset: 2100 images | 10 | mAP: 96.9%; F1: 97.8% |
| Huang et al. [32] | 2021 | Segmentation network | Dark red fabric (DRF) | 4 | IoU: 0.784 |
| | | | Patterned texture fabric (PTF) | 6 | IoU: 0.695 |
| | | | Light blue fabric (LBF) | 4 | IoU: 0.616 |
| | | | Fiberglass fabric (FF) | 5 | IoU: 0.592 |
| Kahraman et al. [33] | 2022 | Capsule Networks | TILDA dataset | 7 | Accuracy: 98.7% |

| Class | Number of Defects |
|---|---|
| Stain | 323 |
| Hole | 324 |

| Parameter | Value |
|---|---|
| Image Size | 1024 × 1024 pixels |
| Optimizer | Stochastic gradient descent (SGD) |
| Learning Rate | 0.01 |
| Batch Size | 16 |
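
The settings in the table above could be passed to the YOLOv5 training script [38] roughly as follows. This is a minimal sketch, not the authors' actual command: the dataset file name `clothing_defects.yaml` is hypothetical, the number of epochs is not listed in the table and is therefore omitted, and the learning rate of 0.01 is assumed to correspond to the `lr0` entry of the YOLOv5 hyperparameter file rather than to a command-line flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings copied from the parameter table."""
    image_size: int = 1024       # pixels, square input
    optimizer: str = "SGD"       # stochastic gradient descent
    learning_rate: float = 0.01  # assumption: maps to `lr0` in the hyp file
    batch_size: int = 16


def build_train_command(cfg, weights, data_yaml):
    """Build an argument list for YOLOv5's train.py (nothing is executed)."""
    return [
        "python", "train.py",
        "--img", str(cfg.image_size),
        "--batch-size", str(cfg.batch_size),
        "--optimizer", cfg.optimizer,
        "--weights", weights,
        "--data", data_yaml,
    ]


cfg = TrainConfig()
# `clothing_defects.yaml` is a hypothetical dataset description file.
command = build_train_command(cfg, "yolov5s6.pt", "clothing_defects.yaml")
print(" ".join(command))
```

Swapping `yolov5s6.pt` for `yolov5m6.pt` or `yolov5l6.pt` would select the other two model sizes compared in the result tables.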

| Model | Precision | Recall | AP at IoU = 0.50 |
|---|---|---|---|
| YOLOv5s6 | 0.85 | 0.41 | 0.62 |
| YOLOv5m6 | 0.83 | 0.53 | 0.66 |
| YOLOv5l6 | 0.86 | 0.60 | 0.73 |
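
The precision and recall columns depend on deciding which predicted boxes count as correct at an IoU threshold of 0.50. The sketch below illustrates one common convention, assumed here: predictions are matched greedily to ground-truth boxes in order of confidence, and each ground-truth box can be matched once. The boxes are made-up values for illustration, the function names are hypothetical, and the matching in the YOLOv5 evaluation code may differ in detail. AP, which summarizes the whole precision-recall curve, is not computed here.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predictions to ground-truth boxes.

    `predictions` is a list of (confidence, box) pairs; the most confident
    prediction is matched first.
    """
    matched = set()
    true_positives = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_idx, best_iou = None, iou_threshold
        for idx, gt_box in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Illustrative boxes only: two labeled defects, two predictions.
ground_truth = [(10, 10, 50, 50), (100, 100, 140, 140)]
predictions = [(0.9, (12, 12, 50, 50)), (0.6, (200, 200, 240, 240))]
precision, recall = precision_recall(predictions, ground_truth)
print(precision, recall)  # 0.5 0.5
```

In this toy case one prediction overlaps a labeled defect well enough to count, the other is a false alarm, and one defect is missed, so both precision and recall are 0.5. A pattern of high precision with lower recall, as in the table, corresponds to few false alarms but a larger share of missed defects.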

| Model | Precision | Recall | AP at IoU = 0.50 |
|---|---|---|---|
| YOLOv5s6 | 0.78 | 0.54 | 0.69 |
| YOLOv5m6 | 0.80 | 0.63 | 0.74 |
| YOLOv5l6 | 0.94 | 0.58 | 0.76 |

| Model | Class | Precision | Recall | AP at IoU = 0.50 |
|---|---|---|---|---|
| YOLOv5s6 | all | 0.849 | 0.538 | 0.688 |
| | hole | 0.836 | 0.448 | 0.610 |
| | stain | 0.863 | 0.628 | 0.765 |
| YOLOv5m6 | all | 0.823 | 0.593 | 0.726 |
| | hole | 0.696 | 0.552 | 0.656 |
| | stain | 0.950 | 0.633 | 0.796 |
| YOLOv5l6 | all | 0.915 | 0.543 | 0.747 |
| | hole | 0.889 | 0.552 | 0.741 |
| | stain | 0.941 | 0.533 | 0.753 |
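
The "all" rows in the table above appear to be the unweighted mean of the hole and stain rows. This is an observation from the numbers rather than something the table states, so the short check below is only a sketch of that reading: it averages the two class rows for each model and compares the result with the reported "all" row.

```python
# Values copied from the table: (precision, recall, AP at IoU = 0.50).
per_class = {
    "YOLOv5s6": {"hole": (0.836, 0.448, 0.610), "stain": (0.863, 0.628, 0.765)},
    "YOLOv5m6": {"hole": (0.696, 0.552, 0.656), "stain": (0.950, 0.633, 0.796)},
    "YOLOv5l6": {"hole": (0.889, 0.552, 0.741), "stain": (0.941, 0.533, 0.753)},
}
reported_all = {
    "YOLOv5s6": (0.849, 0.538, 0.688),
    "YOLOv5m6": (0.823, 0.593, 0.726),
    "YOLOv5l6": (0.915, 0.543, 0.747),
}


def macro_average(class_rows):
    """Unweighted mean of each metric over the classes."""
    n = len(class_rows)
    return tuple(sum(row[i] for row in class_rows.values()) / n for i in range(3))


def max_deviation(averaged, reported):
    """Largest absolute gap between the averaged and the reported metrics."""
    return max(abs(a - r) for a, r in zip(averaged, reported))


for model, rows in per_class.items():
    averaged = macro_average(rows)
    gap = max_deviation(averaged, reported_all[model])
    print(model, [round(v, 4) for v in averaged], "max gap:", round(gap, 4))
```

Every gap stays within 0.001, which is consistent with rounding to three decimals. Under this reading, the AP in the "all" row is the mean AP over the two defect classes.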

| Model | Inference Time (s) |
|---|---|
| YOLOv5s6 | 0.0092 |
| YOLOv5m6 | 0.0112 |
| YOLOv5l6 | 0.0157 |
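
To put the inference times in more familiar units, the snippet below converts them to milliseconds and to an approximate image rate. It assumes the listed times are per image and cover only the forward pass; pre-processing, post-processing, and the hardware used are not given in the table, so the rates are rough upper bounds rather than measured throughput.

```python
# Inference times in seconds, copied from the table.
inference_time_s = {"YOLOv5s6": 0.0092, "YOLOv5m6": 0.0112, "YOLOv5l6": 0.0157}


def images_per_second(seconds_per_image):
    """Reciprocal of the per-image time (assumes one image at a time)."""
    return 1.0 / seconds_per_image


for model, seconds in inference_time_s.items():
    rate = images_per_second(seconds)
    print(f"{model}: {seconds * 1000:.1f} ms per image, about {rate:.0f} images/s")
```

Under these assumptions the rates come out at roughly 109, 89, and 64 images per second for the small, medium, and large models respectively.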

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rocha, D.; Pinto, L.; Machado, J.; Soares, F.; Carvalho, V. Using Object Detection Technology to Identify Defects in Clothing for Blind People. Sensors 2023, 23, 4381. https://doi.org/10.3390/s23094381
