Abstract

The current technological world is growing rapidly and each aspect of life is being transformed toward automation for human comfort and reliability. With autonomous vehicle technology, the communication gap between the driver and the traditional vehicle is being reduced through multiple technologies and methods. In this regard, state-of-the-art methods have proposed several approaches for advanced driver assistance systems (ADAS) to meet the requirement of a level-5 autonomous vehicle. Consequently, this work explores the role of textual cues present in the outer environment for finding the desired locations and assisting the driver where to stop. Firstly, the driver inputs the keywords of the desired location to assist the proposed system. Secondly, the system will start sensing the textual cues present in the outer environment through natural language processing techniques. Thirdly, the system keeps matching the similar keywords input by the driver and the outer environment using similarity learning. Whenever the system finds a location having any similar keyword in the outer environment, the system informs the driver, slows down, and applies the brake to stop. The experimental results on four benchmark datasets show the efficiency and accuracy of the proposed system for finding the desired locations by sensing textual cues in autonomous vehicles.

1. Introduction

Autonomous vehicles (AV) have gained significant popularity in recent years due to the vast revolution in modern transportation systems. An autonomous vehicle is a self-driving vehicle that is efficient at perceiving its outer environment and moving without or with very limited human involvement. The various renowned reports and surveys predict that by 2030, autonomous vehicles will be capable and reliable enough to replace maximum human driving [1,2]. In this scenario, many new methods are being proposed to facilitate autonomous vehicles’ vision perception, sensing the outer environment, safety aspects, traffic laws and regulations, accident liability, and maintaining the surrounding map [3,4,5].

An autonomous vehicle can rely on multiple sensors, complex algorithms, actuators, machine learning tools, computer vision techniques, and reliable processors to take effect [6,7]. The autonomous vehicle perceives the outer environment with the help of numerous sensors and makes the decision by perceiving with the assistance of computer vision [8,9]. Each sensor’s configuration and mechanism varies, as an example in [10], the sideslip angle estimation algorithm for autonomous vehicles is proposed. The algorithm is based on a consensus Kalman filter that fuses measurements from a reduced inertial navigation system (R-INS), a global navigation satellite system (GNSS), and a linear vehicle-dynamic-based sideslip estimator.

Since the last few decades, advanced driver assistance systems (ADAS) are equally appreciated to avoid traffic accidents and to improve driving comfort in autonomous vehicles [11]. The ADAS systems are safe and secure systems designed to decrease the human error rate. These systems assist the driver through the advanced technologies to drive safely and thus, improve the driving performance. Several state-of-the-art methods have employed the inertial measurement unit (IMU) and a global navigation satellite system (GNSS) for vehicle localization. In [12], the authors have proposed a method for estimating the sideslip angle and attitude of an automated vehicle using IMU and GNSS. The method is designed to be robust against the effects of vehicle parameters, road friction, and low-sample-rate GNSS measurements. In [13], the method is proposed for estimating the yaw misalignment of an IMU mounted on a vehicle.

The ADAS systems utilize a combination of multiple sensors to perceive the outer environment and then either offers useful information to the driver or take some necessary actions such as applying the brake, changing the lane, turning left/right, etc. These systems are very helpful to decrease traffic congestion and smoothing traffic movement [14,15]. In the last three decades, multiple features of ADAS systems have been proposed, including cruise control, antilock braking system, auto-parking, power steering, lane centering, collision warnings, and others [16,17,18]. In autonomous vehicles, cameras are generally used as vision sensors. The vision-based ADAS utilizes multiple cameras to capture the images, analyze them, and take the appropriate actions whenever needed.

In state-of-the-art methods, multiple advanced features of the ADAS system are proposed. In [19], Liu et al. proposed a framework using SVM-based trail detection to achieve trail directions and tracking in a real-time environment. The vision-based framework is capable to detect and track the trails as well as scene understanding using a quadrotor UAV operator. Yang et al. [20] proposed two frameworks that show how the CNNs perceive and process the driving scenes with distinguishing visual regions. Gao et al. [21] proposed a method for a 3D surround view for ADAS that covers automobiles around the vehicle. The method helps the driver to be aware of the outer environment.

Liu et al. [22] presented a novel algorithm for detecting tassels in maize using UAV-based RGB imagery. The algorithm named YOLOv5-tassel, is based on the YOLOv5 object detection framework and incorporates several modifications to improve its performance on tassel detection. The authors included the modifications of a bidirectional feature pyramid network to effectively fuse cross-scale features, introduced a robust attention module to extract the features of interest before each detection head, and added an additional detection head to improve small-size tassel detection. Xia et al. [23] proposed a novel data acquisition and analytics platform for vehicle trajectory extraction, reconstruction, and evaluation based on connected automated vehicle (CAV) cooperative perception. The platform is designed to be holistic and capable of processing sensor data from multiple CAVs.

Wang et al. [24] proposed a lane-changing model for making decisions to either change the lane or produce trajectories. The model analyzes the vehicle kinematics of different states, their distances, and comfort level requirements. Chen et al. [25] proposed an instructor-like assistance system in order to avoid collision risk. The driver and the assistance system both assure the recommendation to control the vehicle. Gilbert et al. [26] proposed an efficient decision-making model which selects the least possible collision for AV. The model combines vehicle dynamics and maneuver trajectory paths to produce simulation results and multi-attribute decision-making techniques. Gao et al. [27] proposed a new vehicle localization system that is based on vehicle chassis sensors and considers vehicle lateral velocity. The system is designed to improve the accuracy of vehicle stand-alone localization in highly dynamic driving conditions during GNSS outages.

Xia et al. [10] presented a new algorithm for estimating the sideslip angle of an autonomous vehicle. The algorithm uses consensus and vehicle kinematics/dynamics synthesis to enhance the accuracy of the estimation under normal driving conditions. The proposed algorithm uses a velocity-based Kalman filter to estimate the errors of the reduced inertial navigation system (R-INS) and a consensus Kalman information filter to estimate the heading error. The consensus framework combines a novel heading error measurement from a linear vehicle-dynamic-based sideslip estimator with the heading error from the global navigation satellite system (GNSS) course. Liu et al. [28] proposed a novel kinematic-model-based vehicle slip angle (VSA) estimation method that fuses information from a GNSS and an IMU. The method is designed to be robust against the effects of vehicle roll and pitch, a low sampling rate of GNSS, and GNSS signal delay.

Since the early days of mechanical vehicles, safety has been one of the key concerns in automotive systems. Several attempts have been made to address safety concerns by developing safe and secure systems to protect the driver as well as prevent injuring pedestrians [29,30]. It is one of the safety aspects of an autonomous vehicle when the driver is preoccupied with searching for the desired location to stop. With our proposed system, the safety of AV can be increased drastically since the AV will automatically realize the desired locations in its surrounding. Rather than continually searching for the desired locations, our proposed system will automatically realize the textual cues present in the outer environment and suggest the driver to stop.

While driving on the road, AV performs multiple operations such as lane change, lane keeping, overtaking, and following the traffic rules. Several studies proposed and developed numerous methods for ADAS systems [31,32]. It is equally important for an autonomous vehicle to be aware of the textual cues appearing in its outer environment to take some decision or assist the driver, either to stop or drive. Thus, the key concern of this paper is to reduce human intervention in an autonomous vehicles.

In this paper, we propose a novel intelligent system based on the driver’s instruction for finding the desired locations using textual cues present in the outer environment for advanced driver assistance systems. For this, we combine computer vision and natural language processing (NLP) techniques to perceive textual cues. Computer vision methods train the system to interpret and perceive the visual world around the autonomous vehicle and NLP techniques emphasize the system with the ability to read, recognize, and derive the meaning from textual cues appearing in front of an autonomous vehicle. The key contributions of this paper are as follows:

- A novel intelligent system is proposed for AVs to find unsupervised locations.

- The proposed system is capable of sensing the textual cues that appear in the outer environment for determining desired locations.

- The proposed system is a novel development in the list of ADAS features of an autonomous vehicle.

- With the proposed system, the driver’s efforts for finding the desired locations will drastically be decreased.

The remainder of the paper is organized as follows. In Section 2, we describe the proposed system for finding the desired locations with textual cues and their formation as keywords. In Section 3, the experimental results are defined to show the efficiency and accuracy of the proposed system. Section 4 concludes the proposed work and presents the future directions.

2. Proposed System

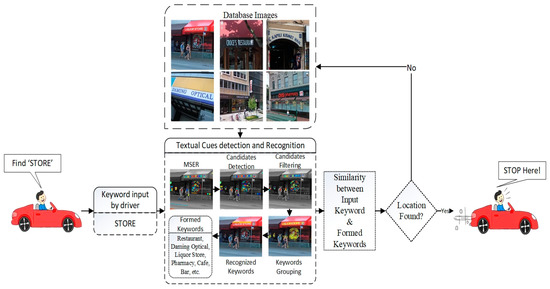

In this section, we propose a novel intelligent system to find the desired locations using textual cues for an autonomous vehicle. Firstly, the driver inputs one or more keywords to the proposed system to find the desired locations. Secondly, the proposed system detects and localizes the textual cues appearing in the outer environment. The system will generate the keywords localized from the outer environment with detection and recognition methods. Finally, the system will execute similarity learning to find the similarity between the input keywords and the localized keywords from outer environment images. The schematic diagram of the proposed intelligent system is shown in Figure 1.

Figure 1.

Schematic diagram of the proposed system.

2.1. Textual Cues Detection

In order to detect, localize, and form the keywords from the outer environment, we employ text detection and localization technique. Firstly, we use affine transformation to deal with global distortion appearing within an input image and to improve the accuracy of the text to a more horizontal text. It takes an input image with channel , height , and width to produce an output image . The affine transformation based on the arguments between the input image and output image is given as:

where are the source coordinates of the input image and are the required coordinates for the output image . The output image is further rectified from the input image using bilinear interpolation, given as:

where is the pixel value of the rectified image at the location and is the pixel value of the input image at the location .

2.1.1. Textual Candidates Detection

The textual candidates detection aims to extract the position of textual regions in the outer environment. Since the text appearing in the outer environment generally has diverse contrast to its relative background and uniform color intensity, the maximally stable extremal region (MSER) technique is the best approach as it is widely used and considered the best region detector [33]. In order to detect the textual candidates appearing in the outer environment, we adopt the MSER approach for finding the corresponding candidates within the input image . For finding the extremal regions in the input image, the intensity difference is given as:

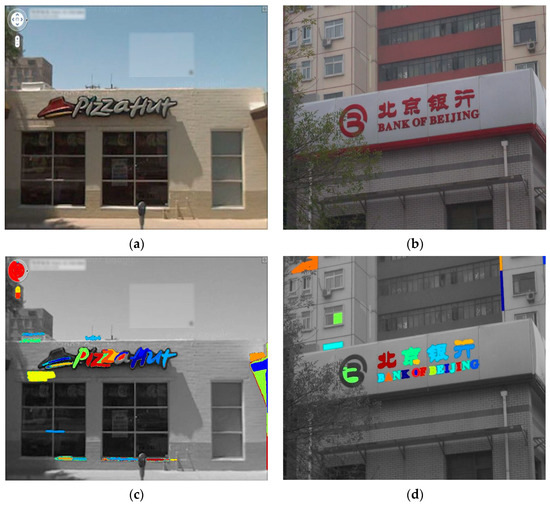

where represents the extracted extremal regions area, represents the extremal regions, specifies the increment of each extremal region , and shows the area difference between the two regions’ area. After applying the region detector, the obtained extremal regions are shown in Figure 2.

Figure 2.

Textual candidates detection. (a,b) Original images. (c,d) Detected extremal regions.

2.1.2. Textual Candidates Filtering

The textual regions detected in the previous step using the MSER technique are further refined and rectified. First, we validate the size and the aspect ratio using geometric properties for textual candidates filtering, which is given as:

where h and w are the height and width of the aligned bounding box of segmented axes, respectively, and , , and are components to finetune.

The input image having the size and the predicted categorized result and with uncertain probability sequence and is given as:

where

where D and K represent the character sequence length.

The input vector is combined using the following properties:

The above four properties are probability characteristics in which mean represents the overall confidence score and min represents the least likely character.

Furthermore,

where is the constant parameter. The above two properties are used to normalize the number of characters between 0 and 1, and the following two properties are used for character width calculated as per geometric properties:

where represents the character width.

The localized regions which satisfy the above properties are then further processed and the remaining regions are discarded, as shown in Figure 3. The obtained localized regions consist of non-textual regions and may produce a false result for recognition. We further segment textual regions with the stroke responses of each image pixel. The corner points are used as the edges of two strokes. The corner points and stroke points establish the distortion of strokes. For this, we follow the corner detection approach [34], which applies the following selection criteria. Firstly, the matrix M for each pixel is calculated as follows:

where represents the weight at position for window center, and denotes the gradient value of pixel at position . The eigenvalues and of matrix are calculated as:

Figure 3.

Textual candidates filtering. (a,b) non-textual objects filtered.

To compute the turning point of outer stroke endpoints, we use the following equation [35]:

where , , , and are the coordinates of the endpoints of the strokes; and are coordinates of the outermost points; and denotes coordinates of every single point at the curve. The following equation is given to determine outer stroke points:

where denotes a single point at the x-axis and y-axis in word image.

Given the corner point along with its adjacent corner , the height and width of a moving window is determined as:

where is a coefficient to normalize the area of the moving region among the corner points and is set between 0 and 1. Moreover, the moving area of outer strokes for the side length area is given as:

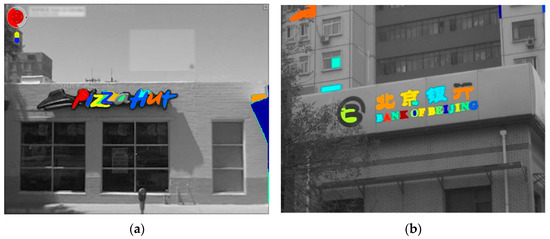

where is a coefficient to normalize the moving regions among the outer strokes and is set between 0 and 1. The final filtered localized textual regions are shown in Figure 4.

Figure 4.

Optimal textual candidates. (a,b) Filtered textual candidates.

2.1.3. Keywords Grouping and Recognition

The localized textual regions in the previous steps consist of individual text characters. In order to recognize and understand the meaning of these textual regions, these individual characters must be combined into text lines. This way, the localized textual regions may represent more meaningful information about the outer environment as compared to the individual characters. For example, the localized textual region consists of the “SCHOOL” versus the individual character set {C,O,L,O,S,H} where its meaning is lost due to the unordered sequence of the word [36,37,38].

In order to form the ordered keywords, we employ the grouping approach [39]. The key idea is to apply a rectangle for each connected region having the center and orientation . Each associated region is considered to be a keyword candidate. The initial candidate regions having are refined to be the keyword with the following properties:

- (1)

- The two adjacent textual candidates are associated with a new value.

- (2)

- The achieved keyword candidate which is the combination of two candidates is obtained with curvilinear.

If the centers of connected regions in are estimated normally with a kth order polynomial, then the candidate keyword is determined as curvilinear:

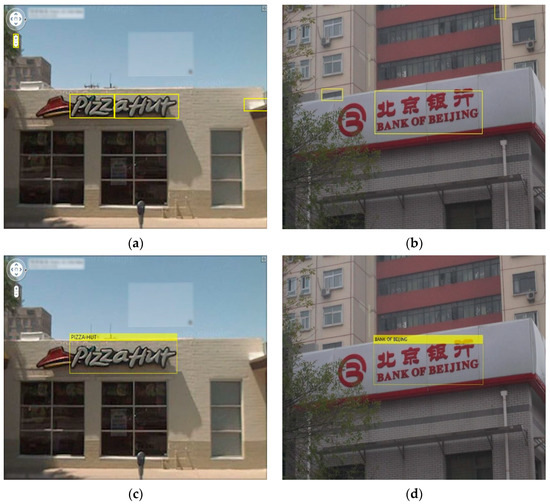

where is the rotated point of and is the average score. The bounding boxes are applied to the character set of textual regions, as shown in Figure 5.

Figure 5.

Keywords grouping using yellow bounding boxes.

The grouped keywords from localized textual regions are further processed for recognition purposes. The cropped word images having width and height consisting of the textual cues are recognized individually. The inputs are the 2D maps resulting in a map for the individual character supposition.

Given the metrics and the confidence score for individual word supposition , let represent the breakpoints amid individual characters, where initializes the first character and ends the last character. The breakpoint hypothesis for the word confidence score is given as:

Each individual hypothesis word is optimized for breakpoints, and a word having an optimal score is recognized as:

The unary fraction scores given in Equation (22) are determined with the following properties: the distance from outside the image boundaries, the distance from the estimated breakpoint location, the binary fraction score, the non-text class score, and the distance of the first and last breakpoints from the edge of the image. The pairwise score given in Equation (23) is determined with the following properties: non-text scores at character centers, character scores at midpoints amid breakpoints, eccentricity from the normalized character width, and active contributions of the left and right binary responses comparative to character scores.

The bounding boxes are applied to recognized words in order to match the evaluated breakpoints, and the recognized bounding boxes are added to the queue of recognized words. The recognized keywords are shown in Figure 6.

Figure 6.

Recognized keywords in the outer environment. (a,b) Optimum bounding boxes. (c,d) Recognized keywords.

2.2. Textual Cues Keywords

The localized textual regions are optimized with the OCR and the formal words are recognized, thus providing a sensible meaning. In this step, we utilize the recognized formal words to establish a words model that will be responsible for sequences and boundaries. Since the recognized textual cues may still be missing some characters and may affect finding the desired locations, we employ an n-gram probabilistic language model that will provide evidence for the presence of the actual cues [40].

An n-gram model is generally used to predict the probability of a given n-gram in any contiguous sequence of words. A better n-gram model predicts the next word in a sentence. For example, given the word ‘park’, the first recognized trigram is ‘par’ and the second recognized trigram is ‘ark’, and then its overlapping characters ‘ar’ suggests that the correctly recognized word is likely to be ‘park’.

Given the word of length as a sequence of characters where each denotes a character at position in word from 26 letters and 10 digits, each recognized word has a varying length that can be determined at the run time. Therefore, the number of characters in a single word is fixed to 22 with a null character and a maximum length class, which is given as:

For two strings and , the represents as a substring of the word . An -gram of is assumed as substring having the length . The dictionary of all grams of word of length is given as:

As an example, the dictionary for the word ‘cafe’ is . Given the recognized ith n-gram and its consistent confidence score , in order to determine the sequence of n-grams with the most confident prediction for the entire sequence of recognized words, the objective function can be given as:

where

Here, is used to achieve the optimal n-gram separation of the given word, and each n-gram word image is recursively recognized.

2.3. Similarity Learning

Similarity learning finds and matches similar images as the user-input keywords [41,42,43]. The proposed intelligent system matches the user-input keywords with the outer environment textual cues. For this, we create a feature vector of user input keywords and the recognized textual cues from the outer environment images.

Given the input keywords , the word is treated as a sequence of characters , where denotes the total number of characters in word , and is considered as the optimal representation of the character of word . Each sequence is interpolated and concatenated with a fixed-length feature and all the features are signified as output features .

The recognized textual cue proposals and the input keywords are formed, and the similarity is computed as a similarity matrix between the input keywords and recognized textual cues. The score between both the feature vectors and is given as:

where represents the operator that converts the 2D matrix into a 1D vector. The required similarity matrix is maintained by the target similarity matrix . The target similarity is computed as the Levenshtein distance between corresponding textual pairs and is given as:

Meanwhile, during implementation for the ranking, the similarity between the input keywords and recognized textual words equals to the maximum value of .

3. Experimental Results and Discussion

This section presents evaluation results to show the efficiency and accuracy of the proposed intelligent system. The evaluation protocols and benchmark datasets used for experimentation are given as follows.

3.1. Datasets

As the proposed intelligent system is capable to find and locate the desired locations using textual cues, we evaluated our system on four different benchmark datasets comprising the outer environment images. In these datasets, different textual cues can be found and located for the autonomous vehicle’s driving assistance system.

Street View Text (SVT): The dataset [44] consists of outer environment images and certain textual cues appear on the different objects such as walls, shops, billboards, buildings, etc. This dataset contains 250 trained images and 100 test images, and each image has a varying dimension from 1024 × 768 to 1920 × 906.

ICDAR 2013: The dataset [45] comprising the outer environment images and textual cues can be found on multiple different objects such as shops, cafes, signboards, banners, posters, etc. This dataset contains 229 trained images and 233 test images. The dimension of each image varies from 3888 × 2592 to 350 × 200.

Total-Text: The dataset [46] contains curved, orientated, and horizontal textual cues that are very challenging to detect and recognize. The text appears on multiple objects present in the outer environment. The dataset contains 300 test images and 1255 trained images and each image’s dimension varies from 180 × 240 to 5184 × 3456.

MSRA-TD500: The dataset [47] contains challenging outer and inner environment images in which the textual cues appear on doorplates, caution plates, signs, boards, etc. This dataset contains 300 trained images and 200 test images. The dimension of each image varies from 1920 × 1280 to 1296 × 864.

3.2. Evaluation Measures

The evaluation measures for the proposed intelligent system are given as follows.

3.2.1. Textual Cues Evaluation

The detection and localization of the textual cues is one of the main entities for a robust intelligent system. We evaluate the textual cues’ detection and localization with standard evaluation protocols [48]—precision p, recall r, and frequency f measures defined as:

3.2.2. Location Retrieval Evaluation

The user-input keywords are matched with the recognized textual cues and similar location images are retrieved for the necessary actions such as applying the brake. For location retrieval, the mean average precision mAP is a commonly used evaluation measure which is the average of all queries. The mean average precision can be given as follows:

where A denotes the number of relevant locations, B is the number of retrieved locations, denotes the top similar images consisting of the same textual cues as the user-specified keywords. Given the set of user keywords as , where Q denotes the set of all the keywords specified by the user, the mAP for the proposed system is formulated as:

where k is the number of retrieved location images having the most similar textual cues.

3.3. Implementation Results

In this section, we briefly describe the implementation details and discuss the output results. Firstly, the proposed intelligent system asks the driver to input the keywords of the desired location. For this purpose, we randomly select the different keywords as input from the test images of all the datasets defined in Section 3.1. Secondly, the proposed system detects and localizes the textual cues from the trained images of each dataset and applies OCR recognition. Thirdly, similarity learning is applied to compute the similarity between the input keywords and recognized textual cues. Lastly, whenever any similar textual cue is found, the intelligent system informs the driver, slows down, and applies the brake to stop. The detailed experiments are given as follows.

3.3.1. Textual Cues Detection

Since textual cues detection is one of the key phases for a robust intelligent system, we first evaluate the textual cues detection on the benchmark datasets.

In this experiment, we evaluate the efficiency and accuracy of the proposed intelligent system for detecting and localizing the textual cues on the different datasets. We use the textual evaluation protocols: precision p, recall r, and frequency f. The obtained results of the proposed system are given and compared with the state-of-the-art methods in Table 1, Table 2, Table 3 and Table 4 for SVT, ICDAR’13, Total-Text, and MSRA-TD500 datasets, respectively.

Table 1.

Textual cues detection and localization result on SVT dataset.

Table 2.

Textual cues detection and localization result on ICDAR’13.

Table 3.

Textual cues detection and localization result on Total-Text.

Table 4.

Textual cues detection and localization result on MSRA-TD500.

The proposed method outperformed state-of-the-art methods for the SVT and ICDAR datasets in textual cues detection and localization. For the Total-Text dataset, our proposed method achieved better precision as compared to state-of-the-art methods. For the MSRA-TD500 dataset, the proposed method achieved remarkable results with a better f score. Our main target is to detect the textual cues from the low-quality road and street images such as the SVT dataset that are really challenging to detect.

3.3.2. Locations Retrieval

This section describes the experimentations on the datasets for finding the desired locations. In order to show the better performance and accuracy of the proposed intelligent system, we conducted the experimentation on one-to-one and one-to-many location frames. The details of the experiments are given as follows:

Experiment 1.

One-to-one: In this experiment, the proposed intelligent system first asks the driver to input the keywords of desired locations to trace and proceed. Once the driver inputs one or more keywords, the proposed system creates a feature vector of those keywords and finds a similar location image with the same textual cues. In this experiment, the proposed system keeps finding similar textual cues and asks the driver to confirm. The feature vector for input keywords continually matches with each image and produces the score. The retrieval time for this experiment is much faster than Experiment 2 as it rapidly compares the similarity and presents the outcomes to the driver. The obtained results of Experiment 1 are given in Table 5 for SVT, ICDAR’13, Total-Text, and MSRA-TD500 datasets.

Table 5.

One-to-one location retrieval evaluation on different datasets.

Experiment 2.

One-to-many: In order to show the efficiency and accuracy of the proposed intelligent system, we perform the one-to-many experiment. In this experiment, the proposed intelligent system takes input keywords from the driver for the desired location and traces the top-rank location images possessing similar textual cues. The actual purpose of this experiment is to show the robustness of the proposed system by retrieving the top ten similar images with similar textual cues. The obtained results of Experiment 2 are compared with the state-of-the-art methods in Table 6 for SVT, ICDAR’13, Total-Text, and MSRA-TD500 datasets.

Table 6.

One-to-many location retrieval evaluation on different datasets.

It is worth mentioning that the proposed method outperforms the majority of the previous methods in the one-to-many experiment. However, for the ICDAR’13 dataset, our proposed method could not compete for the ICDAR’13 dataset with the method [51] but still presented a remarkable performance with other datasets.

3.3.3. Retrieval Time Comparison

The time consumed during the computation of textual cues and finding the similarity is truly a critical parameter to be considered. The proposed intelligent system finds the textual features and discards the non-textual features during the localization step. The system maintains a good balance between textual and non-textual features. The retrieval time of the proposed system is compared for both experiments in Table 7. For the one-to-one experiment, the retrieval time is better and more robust since the similarity is compared between the two entities, i.e., driver-input keywords and targeted outer environment location image. For the one-to-many experiment, the retrieval time is higher since the similarity is computed to index top-rank location images.

Table 7.

Locations’ retrieval time (s) comparison.

3.4. Results Impact and Discussion

In this section, we discuss and compare the results of the proposed approach with state-of-the-art methods. The details are given as follows.

Textual cues: Since the textual cue detection plays a vital role in order to find the semantic locations, we first compared the textual cue detection rate for four different datasets. The output results are given on the SVT dataset for textual cues detection and localization in Table 1. The proposed approach outperformed the other methods with respect to p, r, and f values. The method achieved 61.52 precision, 58.31 recall, and 59.87 f values. Here, the precision and recall values of the method [52] are the least inferior, having 27.0 precision, 35.0 recall, and 30.0 f values. For the ICDAR’13 dataset, the proposed method achieved 92.4 precision, 87.63 recall, and 89.95 f values. The proposed method also outperformed state-of-the-art methods for the ICDAR’13 dataset. The close competent method [54] has 92.0 precision, 84.4 recall, and 88.0 f values.

For the Total-Text dataset, our proposed method achieved 84.2 precision, 86.9 recall, and 85.5 f values. The proposed method outperformed most of the existing methods for precision and f values. However, the method in [60] has a slightly higher recall value, i.e., 88.6 and the method in [59] has an 87.1 recall value. For the MSRA-TD500 dataset, the proposed method achieved 86.2 precision, 86.71 recall, and 86.45 f values. Here, the method in [54] has the highest precision value of 87.6. However, the proposed method outperformed state-of-the-art methods for the highest recall and f score.

Locations retrieval: For retrieving the semantic locations, we have evaluated the proposed method for two different experiments. For the one-to-one mode, the proposed method achieved 69.2 mAP on SVT, 74.8 mAP on ICDAR’13, 59.1 mAP on Total-text, and 63.4 mAP on MSRA-TD500 datasets. The mAP is inferior due to the textual cue localization step and can be improved if the textual cues are further improved. For the one-to-many mode, the proposed method has achieved 66.8 mAP on SVT, 75.6 mAP on ICDAR, 57.4 mAP on Total-Text, and 61.7 mAP on MSRA datasets. Here, the close competent method [49] has 63.0 mAP on SVT and 71.0 mAP on ICDAR datasets. The method in [63] has the most inferior mAP 23.0 on SVT and the method in [62] has 65.0 mAP on ICDAR datasets. The proposed method has achieved an overall better mAP for all the datasets and outperformed state-of-the-art methods.

The proposed method has certainly a few limitations. Due to its simple feature description, it is robust and able to handle only a small number of data rather than millions of images. Another disadvantage is that our method might not work well with more complicated and complex images since it might not be able to generalize to the new data type. The proposed approach, however, is exempt from both extensive training and any expensive hardware needs. The proposed approach is often easier to adopt and can be used more quickly. As a result, the proposed method performed better than the majority of the current methods and achieved an overall greater mean average precision score. The datasets included images from outer environment scenarios; however, the system’s performance can be further improved by more complicated conditions such as low light, occlusion, or a partial visibility of textual cues.

4. Conclusions

In this work, a novel intelligent system is proposed to sense the textual cues available in the outer environment for finding the locations in autonomous vehicles. The proposed system first asks the driver to input the keywords of the desired locations. Next, the system proceeds with the detection and recognition of certain textual cues appearing on different objects such as billboards, shops, signboards, walls, buildings, banner, etc. Whenever the system finds a location composed of similar keywords to the driver’s input keywords, the system notifies the driver, slows down, and applies the brake to stop. The experimental results on four datasets show the robustness of the proposed intelligent system for autonomous vehicles to sense the textual cues appearing in the outer environment scenario. The proposed system has lesser computation complexity and does not require any specific hardware. The system is free from a tremendous amount of training due to its simple feature description. In the future, we intend to improve the retrieval accuracy of the proposed system. We will further improve the methodology for live tracking and perform the experimentations on real-time scenario with video frames.

Author Contributions

Conceptualization, S.U.; Methodology, S.U.; Software, P.L. and Y.W.; Validation, Y.W.; Formal analysis, S.U. and L.T.; Investigation, Y.S.; Resources, S.U., Y.S., P.L., Y.W. and X.F.; Data curation, L.T. and X.F.; Writing—original draft, S.U.; Writing—review & editing, P.L. and X.F.; Visualization, Y.S.; Supervision, X.F.; Funding acquisition, X.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China Grant 62176037, by the Research Project of China Disabled Persons’ Federation on Assistive Technology Grant 2021CDPFAT-09, by the Liaoning Revitalization Talents Program Grant XLYC1908007, by the Dalian Science and Technology Innovation Fund Grant 2019J11CY001, and Grant 2021JJ12GX028.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, Y.; Chen, H.; Waslander, S.L.; Yang, T.; Zhang, S.; Xiong, G.; Liu, K. Toward a More Complete, Flexible, and Safer Speed Planning for Autonomous Driving via Convex Optimization. Sensors 2018, 18, 2185. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Ruan, R.; Zhou, Z.; Sun, A.; Luo, X. Positioning of Unmanned Underwater Vehicle Based on Autonomous Tracking Buoy. Sensors 2023, 23, 4398. [Google Scholar] [CrossRef]

- Bayoudh, K.; Hamdaoui, F.; Mtibaa, A. Transfer Learning Based Hybrid 2D-3D CNN for Traffic Sign Recognition and Semantic Road Detection Applied in Advanced Driver Assistance Systems. Appl. Intell. 2021, 51, 124–142. [Google Scholar] [CrossRef]

- Cheng, H.Y.; Yu, C.C.; Lin, C.L.; Shih, H.C.; Kuo, C.W. Ego-Lane Position Identification with Event Warning Applications. IEEE Access 2019, 7, 14378–14386. [Google Scholar] [CrossRef]

- Li, Z.; Yuan, S.; Yin, X.; Li, X.; Tang, S. Research into Autonomous Vehicles Following and Obstacle Avoidance Based on Deep Reinforcement Learning Method under Map Constraints. Sensors 2023, 23, 844. [Google Scholar] [CrossRef]

- Gragnaniello, D.; Greco, A.; Saggese, A.; Vento, M.; Vicinanza, A. Benchmarking 2D Multi-Object Detection and Tracking Algorithms in Autonomous Vehicle Driving Scenarios. Sensors 2023, 23, 4024. [Google Scholar] [CrossRef]

- Park, J.; Cho, J.; Lee, S.; Bak, S.; Kim, Y. An Automotive LiDAR Performance Test Method in Dynamic Driving Conditions. Sensors 2023, 23, 3892. [Google Scholar] [CrossRef]

- Giulietti, F.; Dahia, K.; Statheros, T.; Innocente, M.; Li, S.; Frey, M.; Gauterin, F. Model-Based Condition Monitoring of the Sensors and Actuators of an Electric and Automated Vehicle. Sensors 2023, 23, 887. [Google Scholar] [CrossRef]

- Kukkala, V.K.; Tunnell, J.; Pasricha, S.; Bradley, T. Advanced Driver-Assistance Systems: A Path Toward Autonomous Vehicles. IEEE Consum. Electron. Mag. 2018, 7, 18–25. [Google Scholar] [CrossRef]

- Xia, X.; Hashemi, E.; Xiong, L.; Khajepour, A. Autonomous Vehicle Kinematics and Dynamics Synthesis for Sideslip Angle Estimation Based on Consensus Kalman Filter. IEEE Trans. Control Syst. Technol. 2023, 31, 179–192. [Google Scholar] [CrossRef]

- Tsai, J.; Chang, C.-C.; Li, T. Autonomous Driving Control Based on the Technique of Semantic Segmentation. Sensors 2023, 23, 895. [Google Scholar] [CrossRef] [PubMed]

- Xiong, L.; Xia, X.; Lu, Y.; Liu, W.; Gao, L.; Song, S.; Yu, Z. IMU-Based Automated Vehicle Body Sideslip Angle and Attitude Estimation Aided by GNSS Using Parallel Adaptive Kalman Filters. IEEE Trans. Veh. Technol. 2020, 69, 10668–10680. [Google Scholar] [CrossRef]

- Xia, X.; Xiong, L.; Huang, Y.; Lu, Y.; Gao, L.; Xu, N.; Yu, Z. Estimation on IMU Yaw Misalignment by Fusing Information of Automotive Onboard Sensors. Mech. Syst. Signal. Process 2022, 162, 107993. [Google Scholar] [CrossRef]

- Alghamdi, A.S.; Saeed, A.; Kamran, M.; Mursi, K.T.; Almukadi, W.S. Vehicle Classification Using Deep Feature Fusion and Genetic Algorithms. Electronics 2023, 12, 280. [Google Scholar] [CrossRef]

- Dauptain, X.; Koné, A.; Grolleau, D.; Cerezo, V.; Gennesseaux, M.; Do, M.T. Conception of a High-Level Perception and Localization System for Autonomous Driving. Sensors 2022, 22, 9661. [Google Scholar] [CrossRef] [PubMed]

- Zhao, L.; Wei, Z.; Li, Y.; Jin, J.; Li, X. SEDG-Yolov5: A Lightweight Traffic Sign Detection Model Based on Knowledge Distillation. Electronics 2023, 12, 305. [Google Scholar] [CrossRef]

- Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. MmWave Radar and Vision Fusion for Object Detection in Autonomous Driving: A Review. Sensors 2022, 22, 2542. [Google Scholar] [CrossRef]

- Miao, L.; Chen, S.F.; Hsu, Y.L.; Hua, K.L. How Does C-V2X Help Autonomous Driving to Avoid Accidents? Sensors 2022, 22, 686. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, Q.; Zhuang, Y.; Hu, H. A Novel Trail Detection and Scene Understanding Framework for a Quadrotor UAV with Monocular Vision. IEEE Sens. J. 2017, 17, 6778–6787. [Google Scholar] [CrossRef]

- Yang, S.; Wang, W.; Liu, C.; Deng, W. Scene Understanding in Deep Learning-Based End-to-End Controllers for Autonomous Vehicles. IEEE Trans. Syst. Man. Cybern. Syst. 2019, 49, 53–63. [Google Scholar] [CrossRef]

- Gao, Y.; Lin, C.; Zhao, Y.; Wang, X.; Wei, S.; Huang, Q. 3-D Surround View for Advanced Driver Assistance Systems. IEEE Trans. Intell. Transp. Syst. 2018, 19, 320–328. [Google Scholar] [CrossRef]

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting Tassels in RGB UAV Imagery With Improved YOLOv5 Based on Transfer Learning. IEEE J. Sel. Top. Appl. Earth. Obs. Remote Sens. 2022, 15, 8085–8094. [Google Scholar] [CrossRef]

- Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An Automated Driving Systems Data Acquisition and Analytics Platform. Transp. Res. Part C Emerg. Technol. 2023, 151, 104120. [Google Scholar] [CrossRef]

- Wang, Z.; Zhao, X.; Chen, Z.; Li, X. A Dynamic Cooperative Lane-Changing Model for Connected and Autonomous Vehicles with Possible Accelerations of a Preceding Vehicle. Expert Syst. Appl. 2021, 173, 114675. [Google Scholar] [CrossRef]

- Chen, K.; Yamaguchi, T.; Okuda, H.; Suzuki, T.; Guo, X. Realization and Evaluation of an Instructor-Like Assistance System for Collision Avoidance. IEEE Trans. Intell. Transp. Syst. 2021, 22, 2751–2760. [Google Scholar] [CrossRef]

- Gilbert, A.; Petrovic, D.; Pickering, J.E.; Warwick, K. Multi-Attribute Decision Making on Mitigating a Collision of an Autonomous Vehicle on Motorways. Expert Syst. Appl. 2021, 171, 114581. [Google Scholar] [CrossRef]

- Gao, L.; Xiong, L.; Xia, X.; Lu, Y.; Yu, Z.; Khajepour, A. Improved Vehicle Localization Using On-Board Sensors and Vehicle Lateral Velocity. IEEE Sens. J. 2022, 22, 6818–6831. [Google Scholar] [CrossRef]

- Liu, W.; Xia, X.; Xiong, L.; Lu, Y.; Gao, L.; Yu, Z. Automated Vehicle Sideslip Angle Estimation Considering Signal Measurement Characteristic. IEEE Sens. J. 2021, 21, 21675–21687. [Google Scholar] [CrossRef]

- Wang, B.; Shi, H.; Chen, L.; Wang, X.; Wang, G.; Zhong, F.A.; Wang, B.; Shi, H.; Chen, L.; Wang, X.; et al. A Recognition Method for Road Hypnosis Based on Physiological Characteristics. Sensors 2023, 23, 3404. [Google Scholar] [CrossRef]

- Liu, B.; Lai, H.; Kan, S.; Chan, C. Camera-Based Smart Parking System Using Perspective Transformation. Smart Cities 2023, 6, 1167–1184. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, L.; Lou, R.; Li, X. Recognition of Lane Changing Maneuvers for Vehicle Driving Safety. Electronics 2023, 12, 1456. [Google Scholar] [CrossRef]

- Yan, Z.; Yang, B.; Wang, Z.; Nakano, K.A.; Valero, F.; Yan, Z.; Yang, B.; Wang, Z.; Nakano, K. A Predictive Model of a Driver’s Target Trajectory Based on Estimated Driving Behaviors. Sensors 2023, 23, 1405. [Google Scholar] [CrossRef]

- Matas, J.; Chum, O.; Urban, M.; Pajdla, T. Robust Wide-Baseline Stereo from Maximally Stable Extremal Regions. Image Vis. Comput. 2004, 22, 761–767. [Google Scholar] [CrossRef]

- Escalante, H.J.; Ponce-López, V.; Escalera, S.; Baró, X.; Morales-Reyes, A.; Martínez-Carranza, J. Evolving Weighting Schemes for the Bag of Visual Words. Neural. Comput. Appl. 2017, 28, 925–939. [Google Scholar] [CrossRef]

- Farin, G.E.; Hansford, D. The Essentials of CAGD; A.K. Peters: Natick, MA, USA, 2000; ISBN 9781568811239. [Google Scholar]

- Neumann, L.; Matas, J. Real-Time Lexicon-Free Scene Text Localization and Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1872–1885. [Google Scholar] [CrossRef] [PubMed]

- Unar, S.; Wang, X.; Zhang, C.; Wang, C. Detected Text-Based Image Retrieval Approach for Textual Images. IET Image Process 2019, 13, 515–521. [Google Scholar] [CrossRef]

- Pan, Y.F.; Hou, X.; Liu, C.L. A Hybrid Approach to Detect and Localize Texts in Natural Scene Images. IEEE Trans. Image Process. 2011, 20, 800–813. [Google Scholar] [CrossRef] [PubMed]

- Delaye, A.; Lee, K. A Flexible Framework for Online Document Segmentation by Pairwise Stroke Distance Learning. Pattern Recognit. 2015, 48, 1197–1210. [Google Scholar] [CrossRef]

- Niesler, T.R.; Woodland, P.C. A Variable-Length Category-Based n-Gram Language Model. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing Conference Proceedings, Atlanta, GA, USA, 9 May 1996; Volume 1, pp. 164–167. [Google Scholar] [CrossRef]

- Tang, Z.; Huang, Z.; Yao, H.; Zhang, X.; Chen, L.; Yu, C. Perceptual Image Hashing with Weighted DWT Features for Reduced-Reference Image Quality Assessment. Comput. J. 2018, 61, 1695–1709. [Google Scholar] [CrossRef]

- Wang, H.; Bai, X.; Yang, M.; Zhu, S.; Wang, J.; Liu, W. Scene Text Retrieval via Joint Text Detection and Similarity Learning. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4556–4565. [Google Scholar]

- Park, C.; Park, S. Performance Evaluation of Zone-Based In-Vehicle Network Architecture for Autonomous Vehicles. Sensors 2023, 23, 669. [Google Scholar] [CrossRef]

- Kai, W.; Babenko, B.; Belongie, S. End-to-End Scene Text Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464. [Google Scholar]

- Karatzas, D.; Shafait, F.; Uchida, S.; Iwamura, M.; Bigorda, L.G.I.; Mestre, S.R.; Mas, J.; Mota, D.F.; Almazan, J.A.; De Las Heras, L.P. ICDAR 2013 Robust Reading Competition. In Proceedings of the 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1484–1493. [Google Scholar]

- Ch’Ng, C.K.; Chan, C.S. Total-Text: A Comprehensive Dataset for Scene Text Detection and Recognition. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 935–942. [Google Scholar]

- Yao, C.; Bai, X.; Liu, W.; Ma, Y.; Tu, Z. Detecting Texts of Arbitrary Orientations in Natural Images. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; Volume 8, pp. 1083–1090. [Google Scholar]

- Lucas, S.M.; Panaretos, A.; Sosa, L.; Tang, A.; Wong, S.; Young, R. ICDAR 2003 Robust Reading Competitions. In Proceedings of the 7th International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003; pp. 682–687. [Google Scholar]

- Unar, S.; Wang, X.; Zhang, C. Visual and Textual Information Fusion Using Kernel Method for Content Based Image Retrieval. Inf. Fusion 2018, 44, 176–187. [Google Scholar] [CrossRef]

- Wei, Y.; Zhang, Z.; Shen, W.; Zeng, D.; Fang, M.; Zhou, S. Text Detection in Scene Images Based on Exhaustive Segmentation. Signal Process Image Commun. 2017, 50, 1–8. [Google Scholar] [CrossRef]

- Unar, S.; Wang, X.; Wang, C.; Wang, Y. A Decisive Content Based Image Retrieval Approach for Feature Fusion in Visual and Textual Images. Knowl. Based Syst. 2019, 179, 8–20. [Google Scholar] [CrossRef]

- Yu, C.; Song, Y.; Zhang, Y. Scene Text Localization Using Edge Analysis and Feature Pool. Neurocomputing 2016, 175, 652–661. [Google Scholar] [CrossRef]

- Zhong, Y.; Cheng, X.; Chen, T.; Zhang, J.; Zhou, Z.; Huang, G. PRPN: Progressive Region Prediction Network for Natural Scene Text Detection. Knowl. Based Syst. 2022, 236, 107767. [Google Scholar] [CrossRef]

- Lyu, P.; Yao, C.; Wu, W.; Yan, S.; Bai, X. Multi-Oriented Scene Text Detection via Corner Localization and Region Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7553–7563. [Google Scholar]

- Hou, J.B.; Zhu, X.; Liu, C.; Sheng, K.; Wu, L.H.; Wang, H.; Yin, X.C. HAM: Hidden Anchor Mechanism for Scene Text Detection. IEEE Trans. Image Process. 2020, 29, 7904–7916. [Google Scholar] [CrossRef]

- Wang, X.; Jiang, Y.; Luo, Z.; Liu, C.L.; Choi, H.; Kim, S. Arbitrary Shape Scene Text Detection with Adaptive Text Region Representation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6442–6451. [Google Scholar] [CrossRef]

- Wang, Y.; Xie, H.; Zha, Z.; Xing, M.; Fu, Z.; Zhang, Y. Contournet: Taking a Further Step toward Accurate Arbitrary-Shaped Scene Text Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 11750–11759. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Hou, W.; Lu, T.; Yu, G.; Shao, S. Shape Robust Text Detection with Progressive Scale Expansion Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9336–9345. [Google Scholar]

- Liao, M.; Wan, Z.; Yao, C.; Chen, K.; Bai, X. Real-Time Scene Text Detection with Differentiable Binarization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Washington, DC, USA, 2020; Volume 34, pp. 11474–11481. [Google Scholar]

- Zhang, C.; Liang, B.; Huang, Z.; En, M.; Han, J.; Ding, E.; Ding, X. Look More than Once: An Accurate Detector for Text of Arbitrary Shapes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10544–10553. [Google Scholar]

- Shi, B.; Bai, X.; Belongie, S. Detecting Oriented Text in Natural Images by Linking Segments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2550–2558. [Google Scholar]

- Mishra, A.; Alahari, K.; Jawahar, C.V. Image Retrieval Using Textual Cues. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3040–3047. [Google Scholar]

- Neumann, L.; Matas, J. Real-Time Scene Text Localization and Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3538–3545. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).