Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine

Abstract

1. Introduction

- HAR System: We present an approach for human activity recognition (HAR) that uses the TI mmWave radar sensor together with a PointNet neural network running on the NVIDIA Jetson Nano graphics processing unit (GPU) platform. The system offers a non-intrusive, privacy-preserving way to monitor human activities without camera imagery, and it operates directly on point cloud data without additional pre-processing.

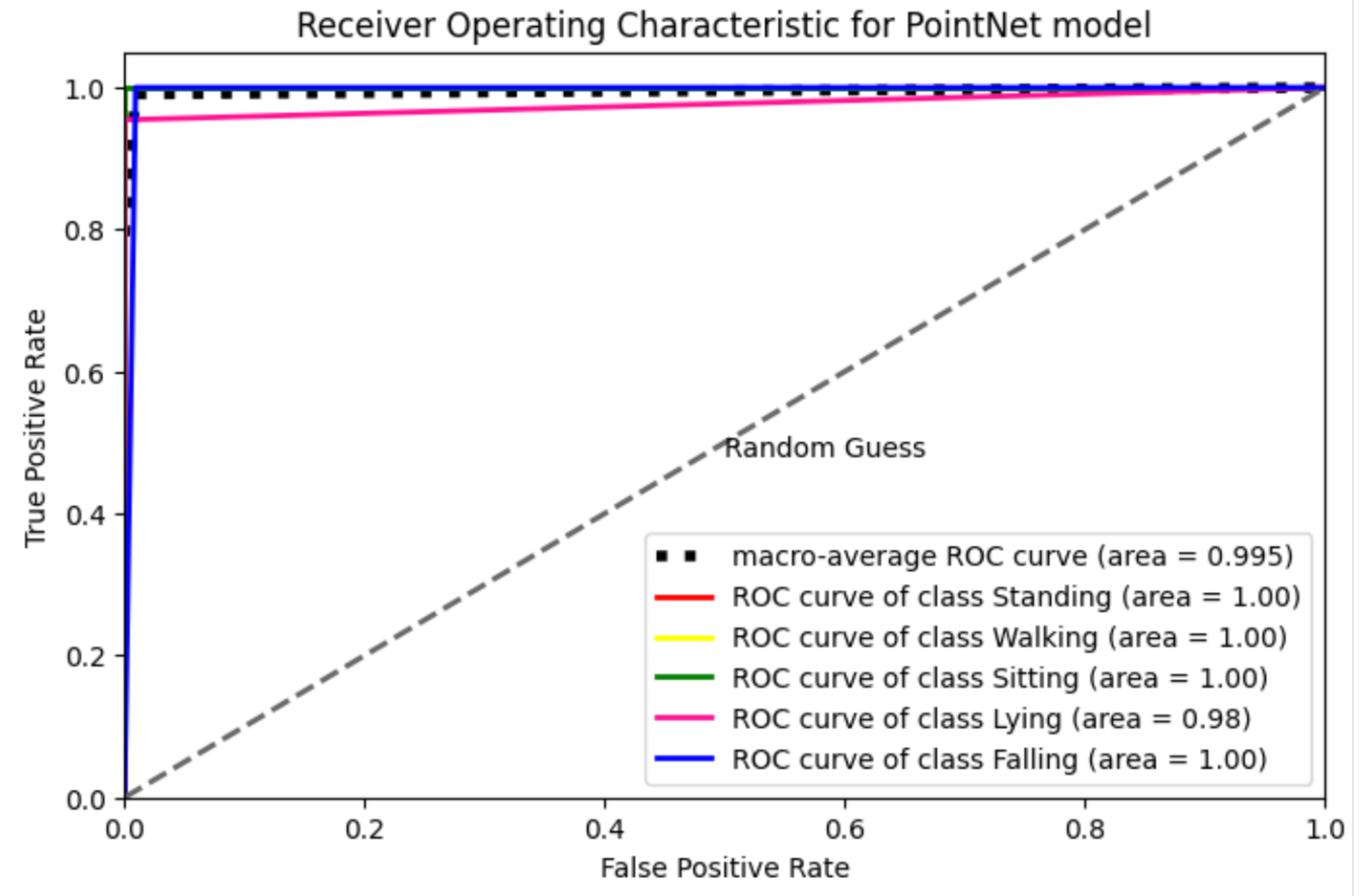

- Real-Time Classification of Common Activities: Our system monitors and classifies five common activities (standing, walking, sitting, lying, and falling) in real time with an accuracy of 99.5%.

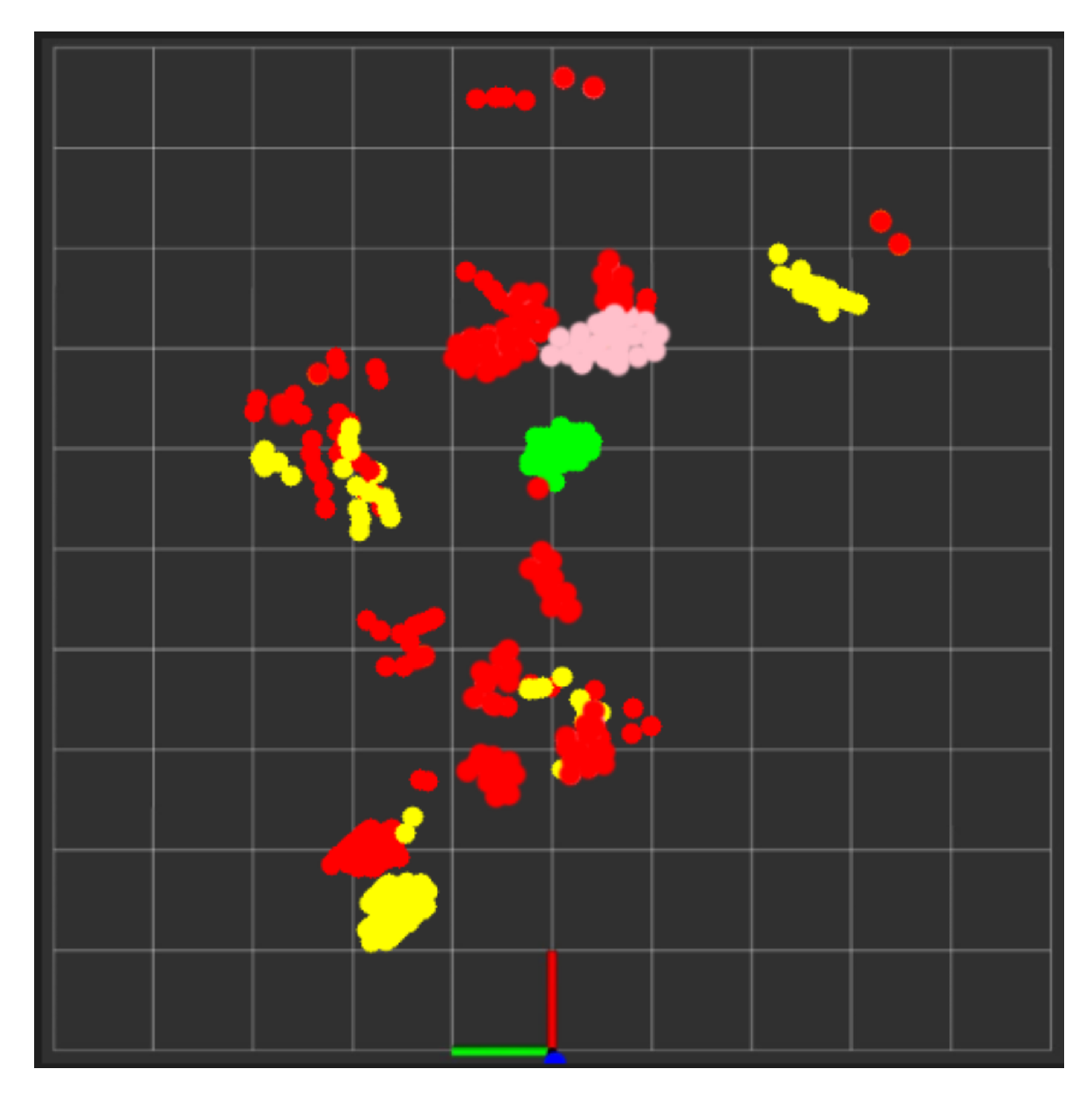

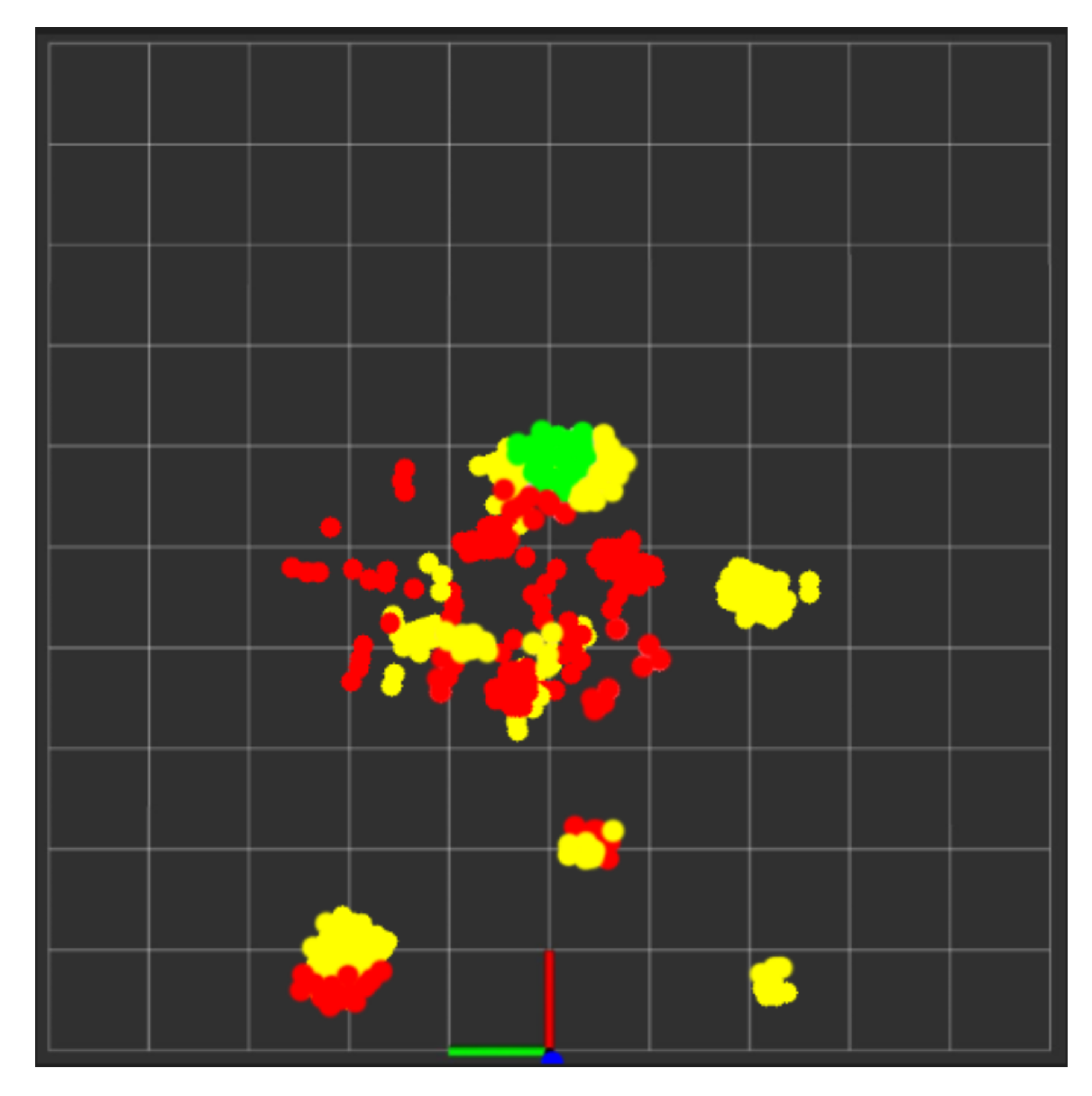

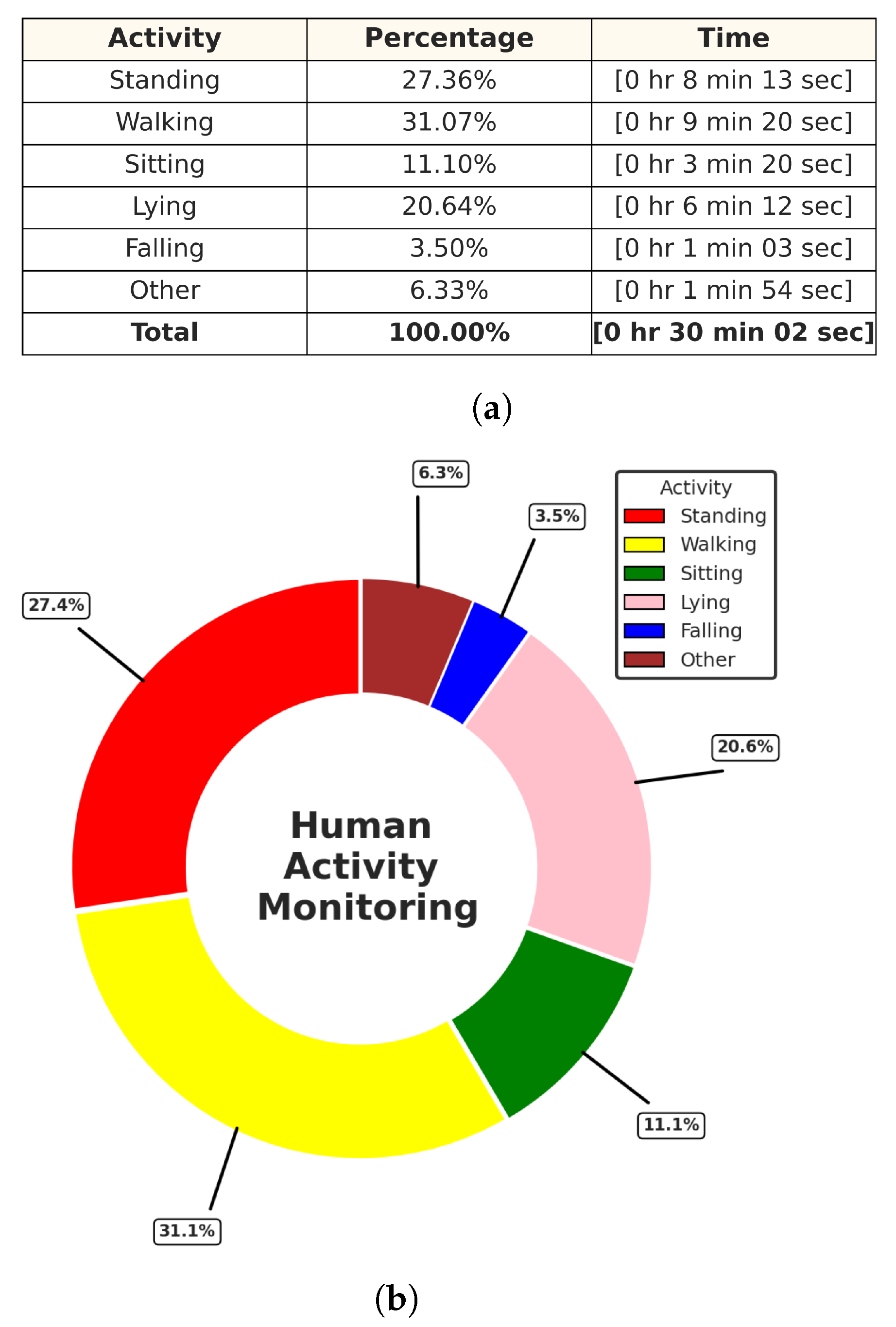

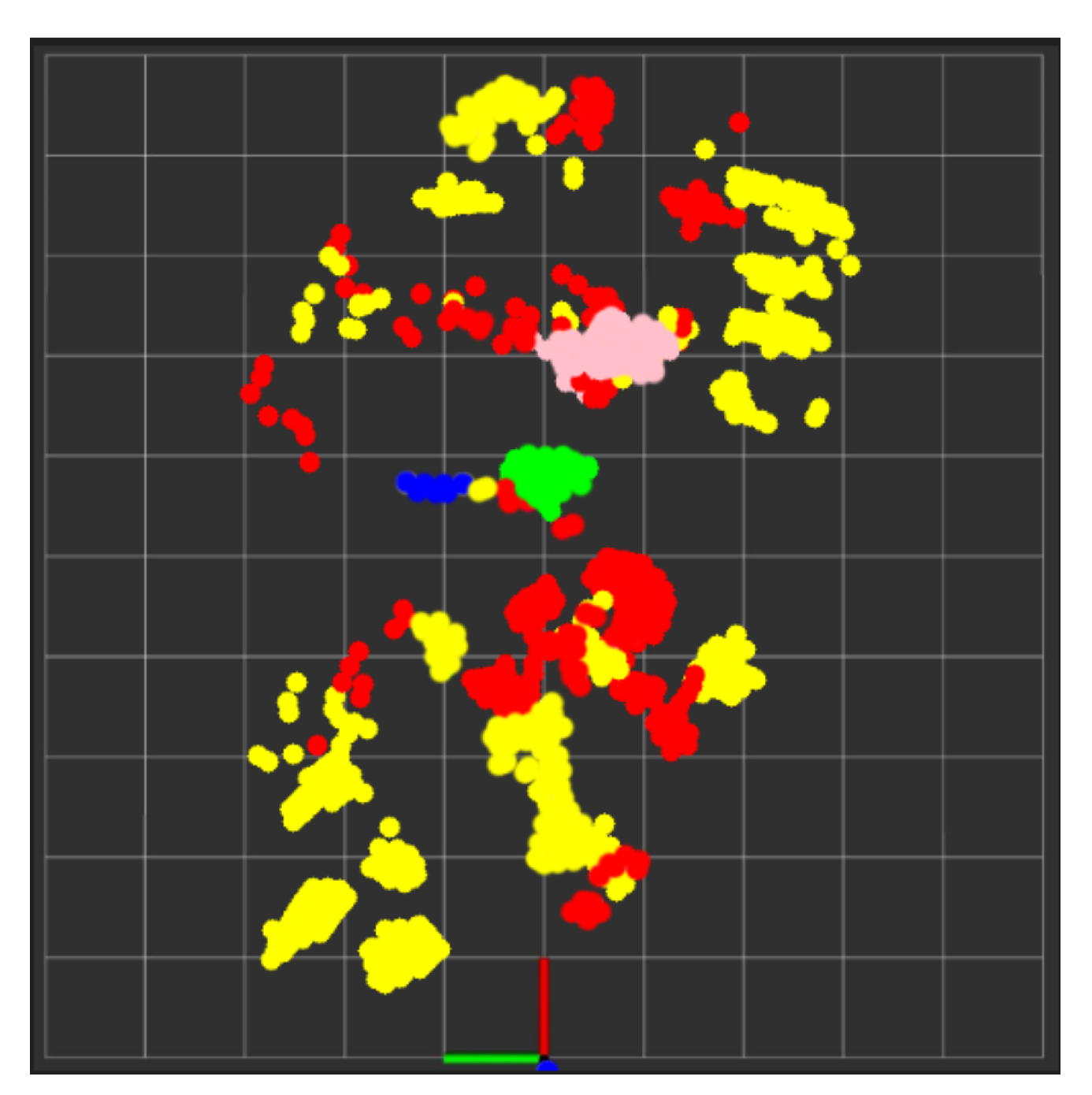

- Comprehensive Activity Analysis: We provide a novel, comprehensive analysis of activities over time and across spatial positions, offering insight into human behavior. The system generates detailed reports that depict the temporal distribution of each activity and, through tracking maps, where the person moved, giving a detailed picture of human movement patterns.

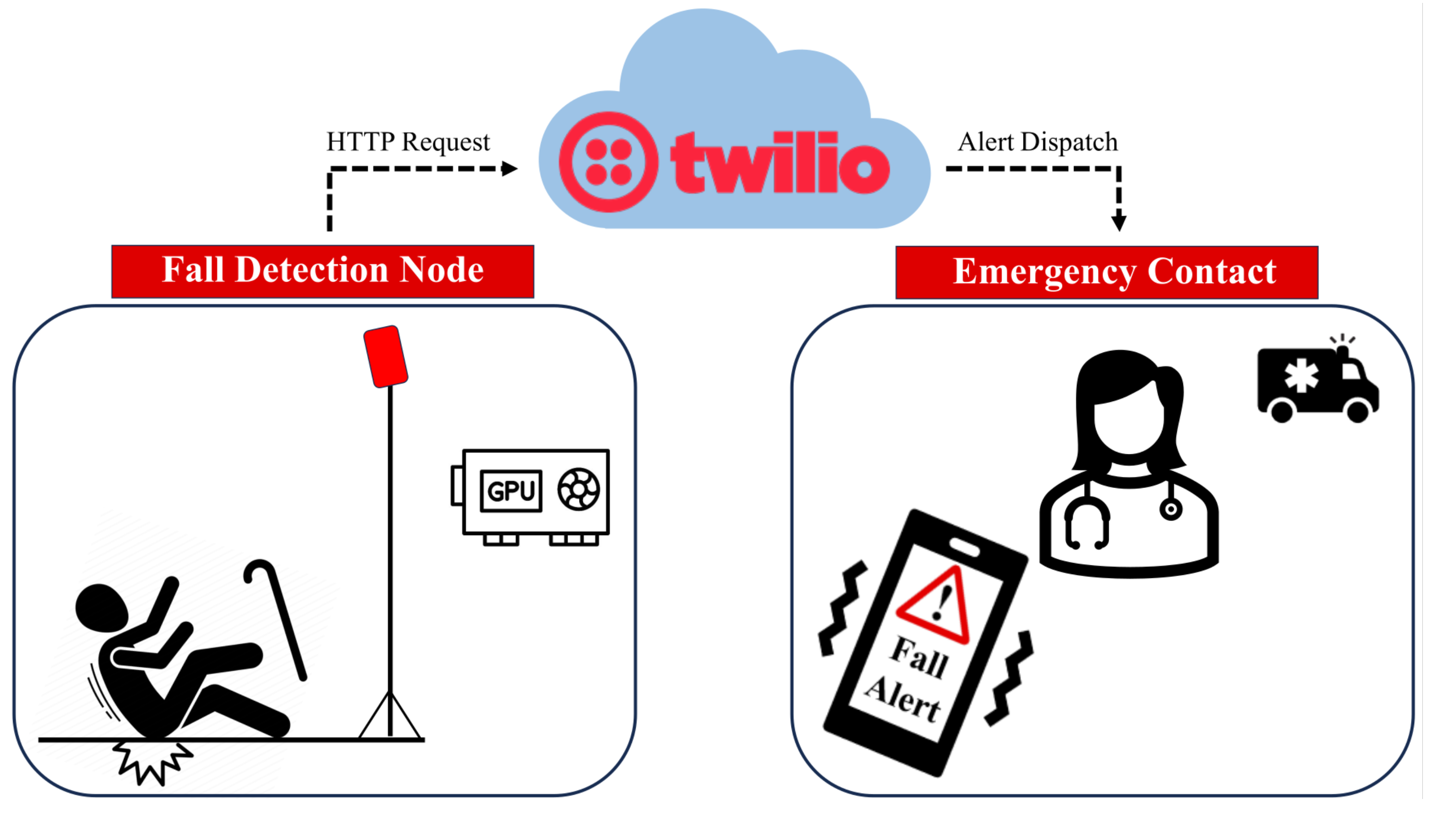

- Fall Detection and Alert Mechanism: Our system includes an alert mechanism built on the Twilio Application Programming Interface (API). When a fall is detected, it sends a prompt notification, enabling rapid intervention that could potentially save lives.
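The activity-analysis contribution describes reports of how long each activity lasts and where the person spends time. A minimal sketch of that bookkeeping is shown below, assuming the classifier emits one activity label per radar frame and the tracker emits one (x, y) position per frame. The frame period `FRAME_PERIOD_S`, the grid cell size, and all function names are hypothetical illustrations, not the authors' implementation.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple

FRAME_PERIOD_S = 0.1  # hypothetical: seconds per radar frame (use the configured frame time)


def time_per_activity(labels: Iterable[str],
                      frame_period_s: float = FRAME_PERIOD_S) -> Dict[str, float]:
    """Total seconds spent in each activity, given one predicted label per frame."""
    counts = Counter(labels)
    return {activity: n * frame_period_s for activity, n in counts.items()}


def activity_share(labels: Iterable[str]) -> Dict[str, float]:
    """Fraction of frames assigned to each activity (empty input gives an empty dict)."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {activity: n / total for activity, n in counts.items()}


def occupancy_map(positions: Iterable[Tuple[float, float]],
                  cell_m: float = 0.5) -> Counter:
    """Count tracked (x, y) positions per square grid cell of side cell_m metres."""
    return Counter((math.floor(x / cell_m), math.floor(y / cell_m)) for x, y in positions)


# Example: 30 frames of walking followed by 50 frames of sitting.
labels = ["walking"] * 30 + ["sitting"] * 50
print(time_per_activity(labels))
print(activity_share(labels))  # {'walking': 0.375, 'sitting': 0.625}
```

A per-cell count like this is one simple way to render a tracking heat map; the temporal report is the per-activity totals or shares computed over a chosen time window.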

2. Human Activity Recognition Approaches and Related Work

3. System Overview

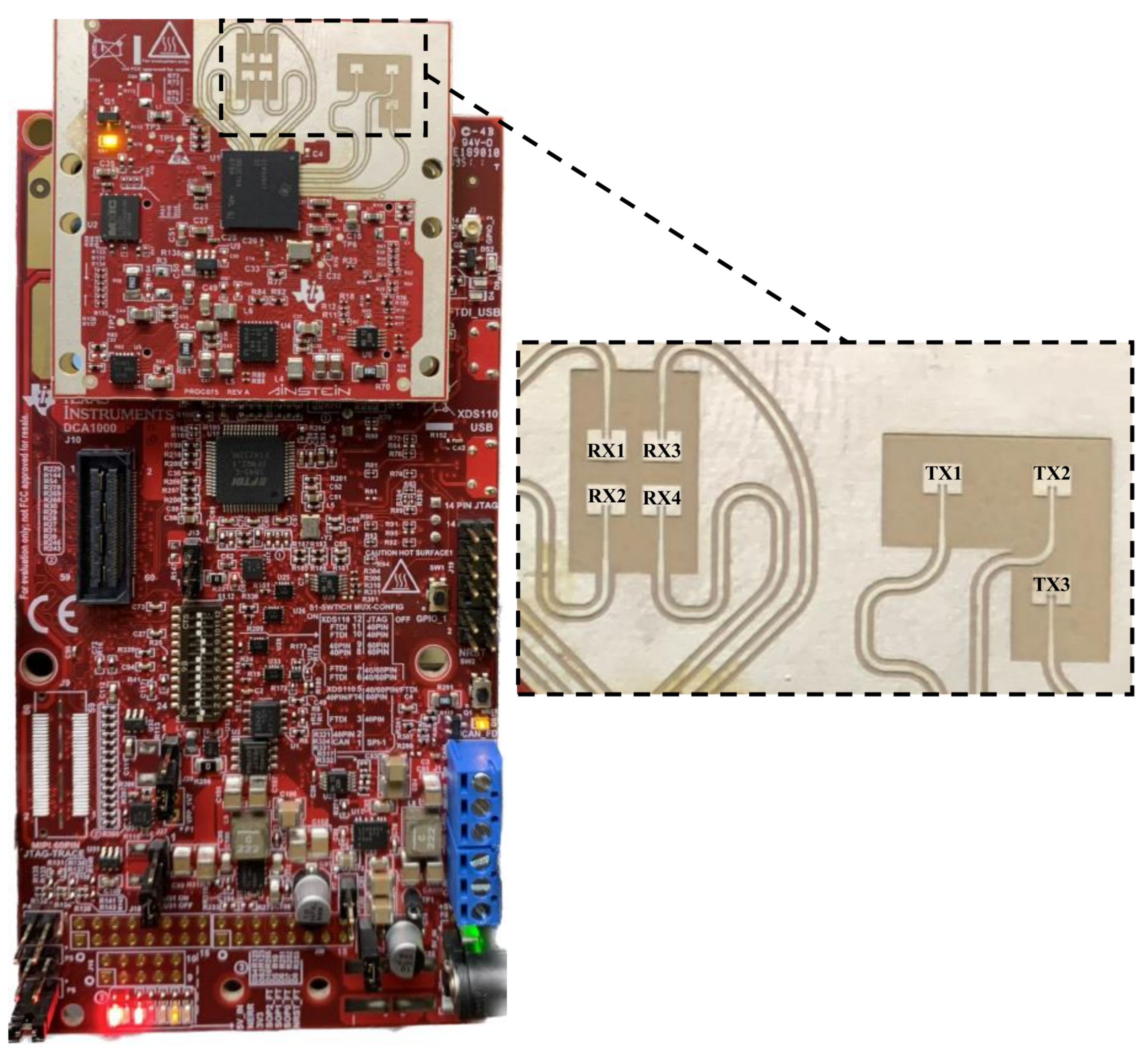

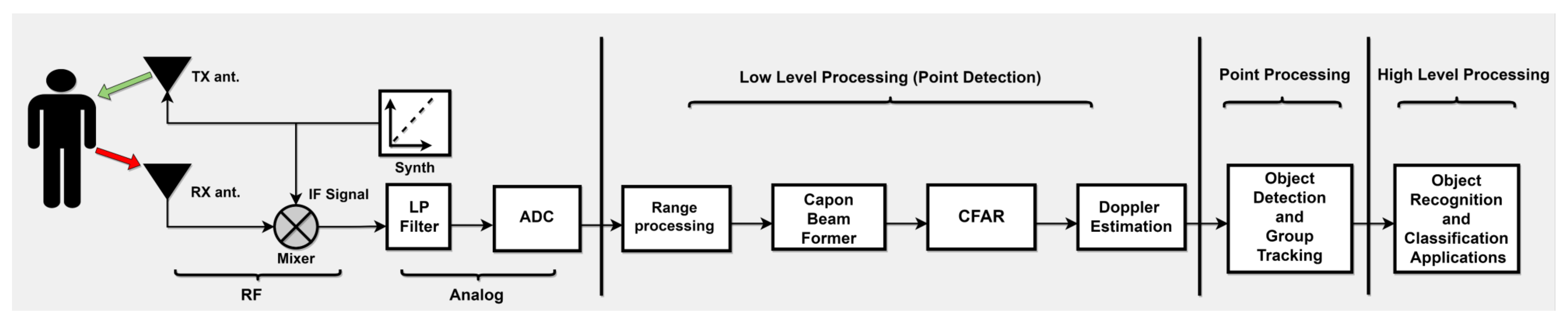

3.1. Millimeter-Wave Radar Sensor (IWR6843ISK-ODS)

3.2. NVIDIA Jetson Nano GPU

3.3. PointNet Neural Network
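PointNet [20] consumes an unordered set of points by applying the same small network (a shared multilayer perceptron) to every point and then combining the per-point features with a symmetric function, max pooling, so the result does not depend on point order. This is what allows raw radar point clouds to be classified without voxelization or conversion to images. The pure-Python sketch below illustrates only that core idea with random, untrained weights; it omits the input and feature transform networks (T-Nets), batch normalization, and training, and the five-feature point layout (for example x, y, z, Doppler, SNR) is an assumption.

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)
CLASSES = ["standing", "walking", "sitting", "lying", "falling"]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def affine(x: Sequence[float], layer: Layer) -> List[float]:
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


class TinyPointNet:
    """Shared per-point MLP + max pooling + linear classifier (untrained, illustrative)."""

    def __init__(self, n_features: int = 5, hidden: int = 16, global_dim: int = 32,
                 n_classes: int = len(CLASSES), seed: int = 0):
        rng = random.Random(seed)
        self.mlp1 = init_layer(n_features, hidden, rng)
        self.mlp2 = init_layer(hidden, global_dim, rng)
        self.head = init_layer(global_dim, n_classes, rng)

    def global_feature(self, cloud: Sequence[Sequence[float]]) -> List[float]:
        # The same two layers are applied to every point independently ("shared MLP").
        per_point = [relu(affine(relu(affine(p, self.mlp1)), self.mlp2)) for p in cloud]
        # Symmetric aggregation: element-wise max over points, so point order is irrelevant.
        return [max(column) for column in zip(*per_point)]

    def logits(self, cloud: Sequence[Sequence[float]]) -> List[float]:
        return affine(self.global_feature(cloud), self.head)

    def predict(self, cloud: Sequence[Sequence[float]]) -> str:
        scores = self.logits(cloud)
        return CLASSES[scores.index(max(scores))]


rng = random.Random(1)
frame = [[rng.uniform(-2.0, 2.0) for _ in range(5)] for _ in range(64)]  # one synthetic frame
print(TinyPointNet().predict(frame))
```

Because the max over points is order-independent, shuffling the points of a frame leaves the logits unchanged. A frame must contain at least one point, and a real deployment would load trained weights instead of the random ones used here.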

3.4. Twilio Programmable Messaging API
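The alert mechanism notifies a caregiver through Twilio [67] when a fall is detected. The sketch below builds, but does not send, a request to Twilio's documented Messages endpoint (`POST /2010-04-01/Accounts/{AccountSid}/Messages.json` with `To`, `From`, and `Body` form fields and HTTP Basic authentication using the Account SID and Auth Token). Requiring several consecutive "falling" frames before alerting (`min_consecutive`) is a hypothetical debouncing choice, not something stated in the paper, and the phone numbers and credentials are placeholders.

```python
import base64
import urllib.parse
import urllib.request
from typing import Iterable

# Twilio's REST endpoint for creating (sending) a message.
MESSAGES_URL = "https://api.twilio.com/2010-04-01/Accounts/{sid}/Messages.json"


def should_alert(labels: Iterable[str], min_consecutive: int = 3) -> bool:
    """Hypothetical debounce: alert only after min_consecutive 'falling' frames in a row."""
    run = 0
    for label in labels:
        run = run + 1 if label == "falling" else 0
        if run >= min_consecutive:
            return True
    return False


def build_fall_alert(account_sid: str, auth_token: str,
                     from_number: str, to_number: str, body: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to Twilio's Messages resource."""
    url = MESSAGES_URL.format(sid=account_sid)
    form = urllib.parse.urlencode({"To": to_number, "From": from_number, "Body": body})
    request = urllib.request.Request(url, data=form.encode("utf-8"), method="POST")
    credentials = base64.b64encode(f"{account_sid}:{auth_token}".encode("utf-8")).decode("ascii")
    request.add_header("Authorization", f"Basic {credentials}")
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request


if __name__ == "__main__":
    frames = ["walking", "falling", "falling", "falling"]
    if should_alert(frames):
        req = build_fall_alert("ACxxxxxxxx", "your_auth_token",  # placeholder credentials
                               "+15550000001", "+15550000002",
                               "Fall detected by the mmWave monitor.")
        # urllib.request.urlopen(req) would submit it; not called in this sketch.
        print(req.get_method(), req.full_url)
```

Twilio's official `twilio` Python helper library wraps the same endpoint as `Client(account_sid, auth_token).messages.create(to=..., from_=..., body=...)`, which is usually the more convenient choice in practice.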

4. Experiment Setup and Data Collection

4.1. Experimental Setup

4.2. Data Collection

4.3. Data Analysis

4.4. Receiver Operating Characteristic (ROC) Curve

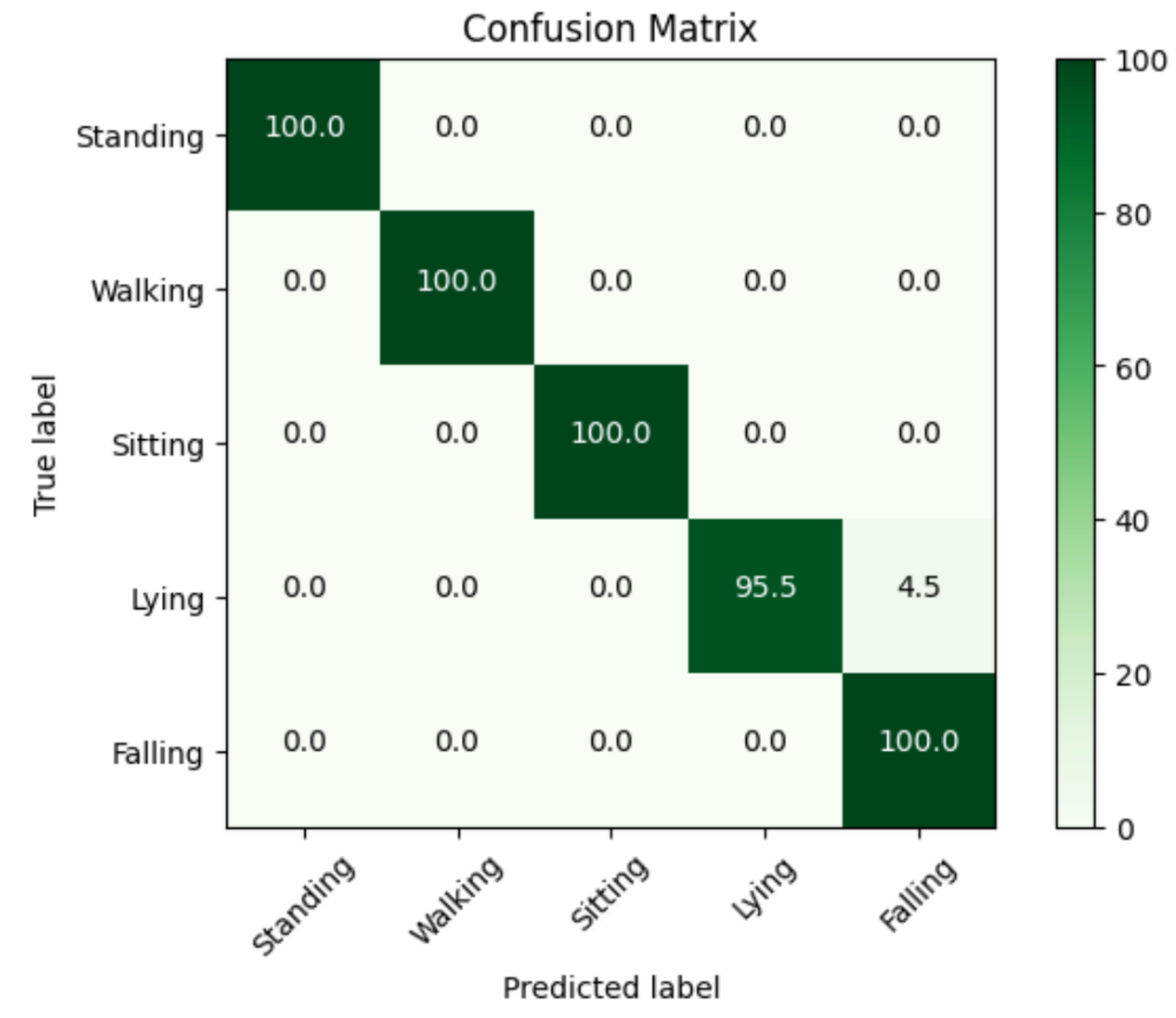

4.5. Confusion Matrices

4.6. F1-Score, Precision, Recall

5. Results and Discussion

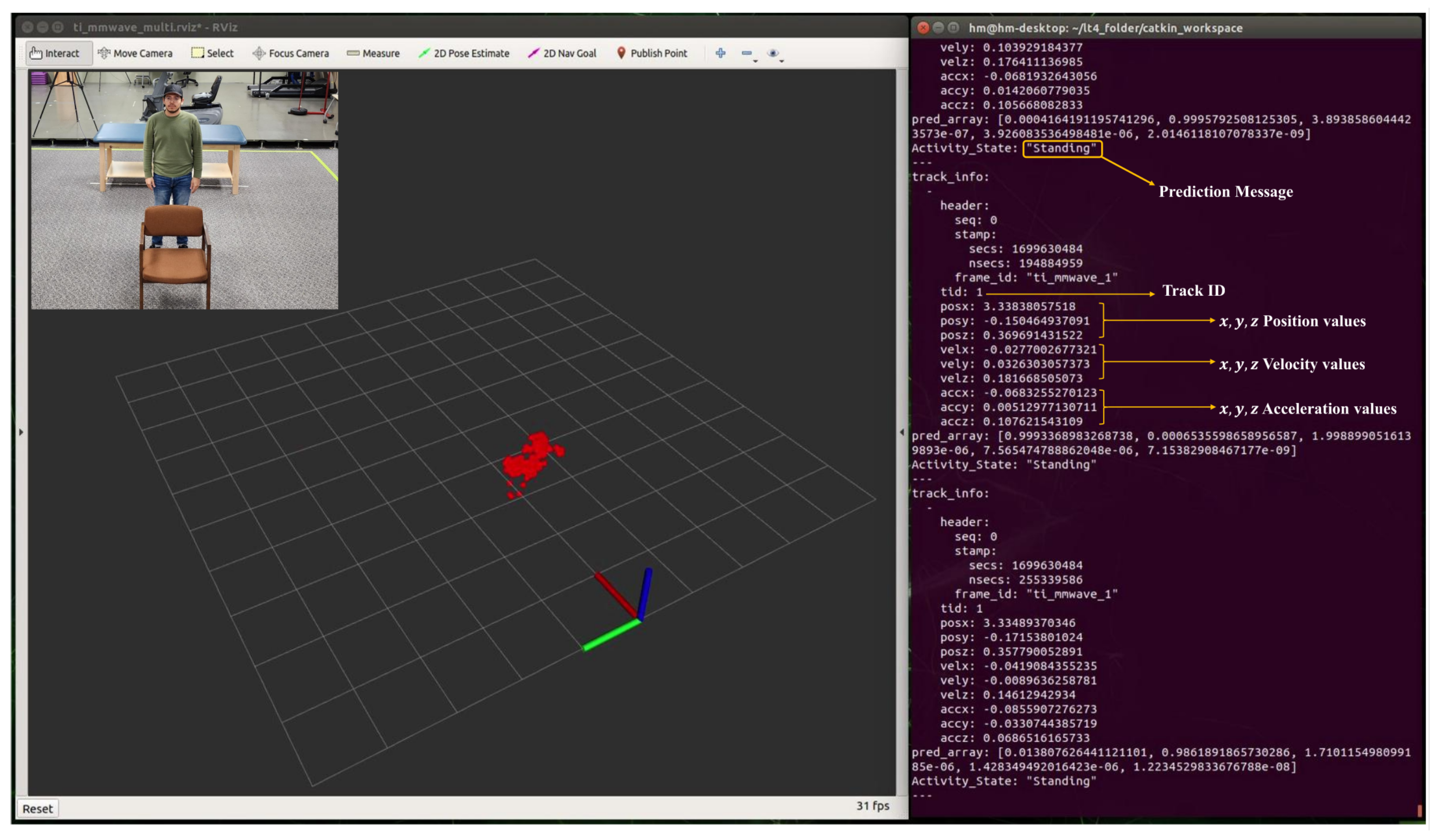

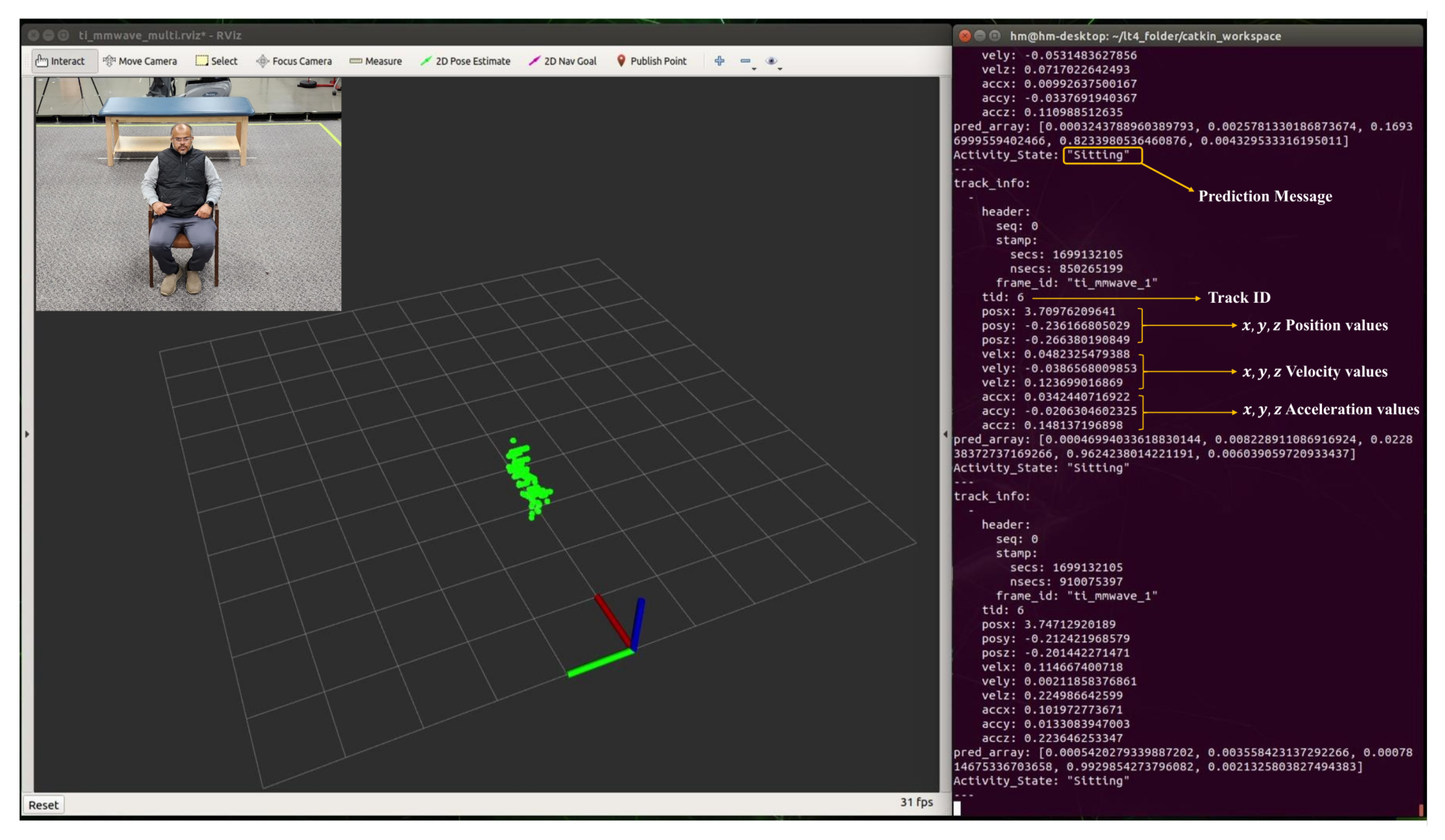

5.1. Real-Time Detection of Human Activity

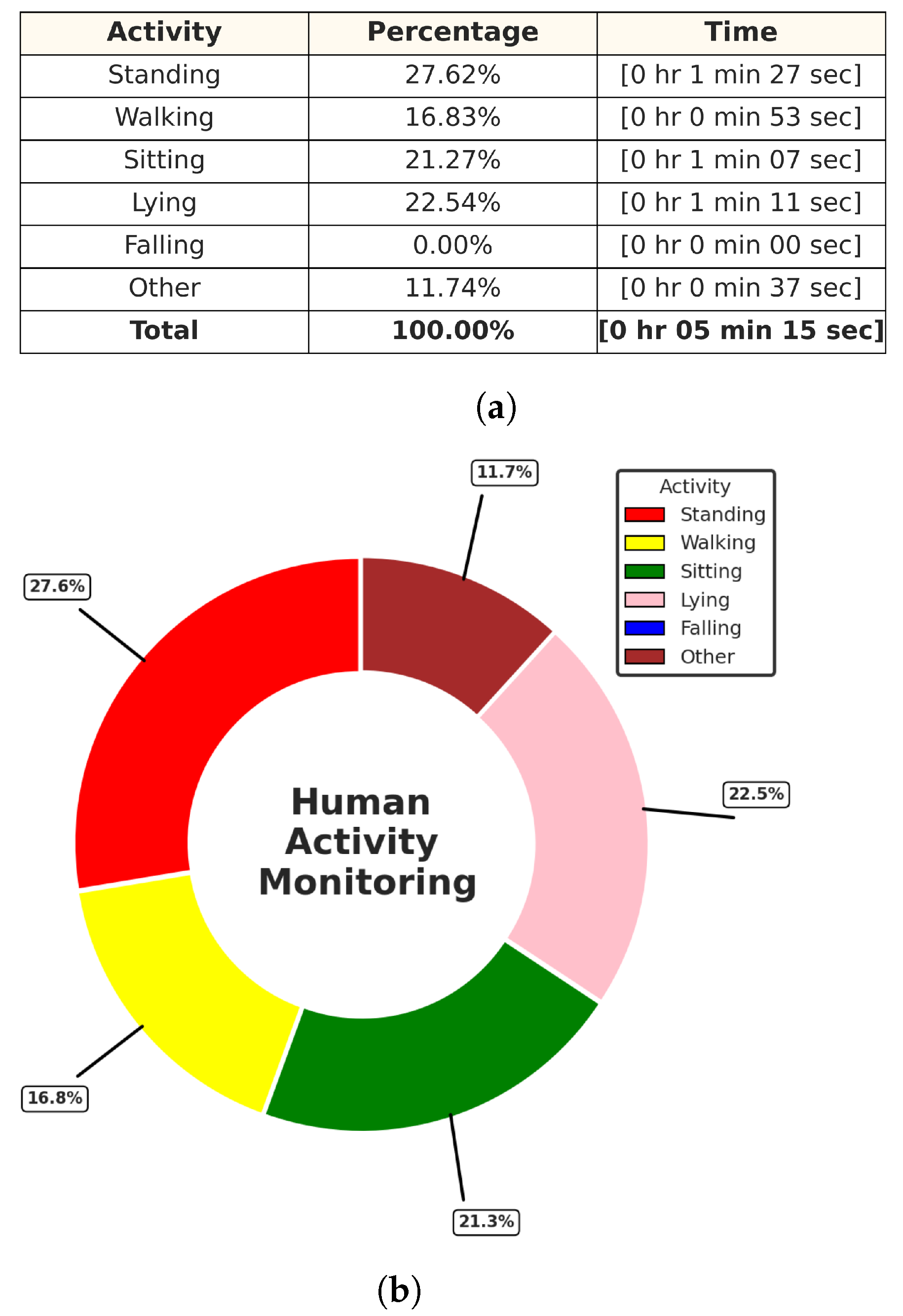

5.2. Short-Term Monitoring of Human Activity

5.3. Long-Term Monitoring of Human Activity

6. Limitations and Future Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. World Health Organization (WHO). National Programmes for Age-Friendly Cities and Communities: A Guide. 2023. Available online: https://www.who.int/teams/social-determinants-of-health/demographic-change-and-healthy-ageing/age-friendly-environments/national-programmes-afcc (accessed on 1 June 2023).

2. Administration for Community Living (ACL). 2021 Profile of Older Americans; The Administration for Community Living: Washington, DC, USA, 2022; Available online: https://acl.gov/sites/default/files/Profile%20of%20OA/2021%20Profile%20of%20OA/2021ProfileOlderAmericans_508.pdf (accessed on 5 May 2023).

3. Debauche, O.; Mahmoudi, S.; Manneback, P.; Assila, A. Fog IoT for Health: A new Architecture for Patients and Elderly Monitoring. Procedia Comput. Sci. 2019, 160, 289–297.

4. Burns, E.; Kakara, R.; Moreland, B. A CDC Compendium of Effective Fall Interventions: What Works for Community-Dwelling Older Adults, 4th ed.; Centers for Disease Control and Prevention, National Center for Injury Prevention and Control: Atlanta, GA, USA, 2023; Available online: https://www.cdc.gov/falls/pdf/Steadi_Compendium_2023_508.pdf (accessed on 10 July 2023).

5. Bargiotas, I.; Wang, D.; Mantilla, J.; Quijoux, F.; Moreau, A.; Vidal, C.; Barrois, R.; Nicolai, A.; Audiffren, J.; Labourdette, C.; et al. Preventing falls: The use of machine learning for the prediction of future falls in individuals without history of fall. J. Neurol. 2023, 270, 618–631.

6. Chakraborty, C.; Ghosh, U.; Ravi, V.; Shelke, Y. Efficient Data Handling for Massive Internet of Medical Things: Healthcare Data Analytics; Springer: Berlin/Heidelberg, Germany, 2021.

7. Sakamaki, T.; Furusawa, Y.; Hayashi, A.; Otsuka, M.; Fernandez, J. Remote patient monitoring for neuropsychiatric disorders: A scoping review of current trends and future perspectives from recent publications and upcoming clinical trials. Telemed. e-Health 2022, 28, 1235–1250.

8. Alanazi, M.A.; Alhazmi, A.K.; Alsattam, O.; Gnau, K.; Brown, M.; Thiel, S.; Jackson, K.; Chodavarapu, V.P. Towards a low-cost solution for gait analysis using millimeter wave sensor and machine learning. Sensors 2022, 22, 5470.

9. Palanisamy, P.; Padmanabhan, A.; Ramasamy, A.; Subramaniam, S. Remote Patient Activity Monitoring System by Integrating IoT Sensors and Artificial Intelligence Techniques. Sensors 2023, 23, 5869.

10. World Health Organization. Telemedicine: Opportunities and Developments in Member States. Report on the Second Global Survey on eHealth; World Health Organization: Geneva, Switzerland, 2010.

11. Zhang, X.; Lin, D.; Pforsich, H.; Lin, V.W. Physician workforce in the United States of America: Forecasting nationwide shortages. Hum. Resour. Health 2020, 18, 8.

12. Lucas, J.W.; Villarroel, M.A. Telemedicine Use among Adults: United States, 2021; US Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Health Statistics: Hyattsville, MD, USA, 2022.

13. Alanazi, M.A.; Alhazmi, A.K.; Yakopcic, C.; Chodavarapu, V.P. Machine learning models for human fall detection using millimeter wave sensor. In Proceedings of the 2021 55th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 24–26 March 2021; pp. 1–5.

14. Seron, P.; Oliveros, M.J.; Gutierrez-Arias, R.; Fuentes-Aspe, R.; Torres-Castro, R.C.; Merino-Osorio, C.; Nahuelhual, P.; Inostroza, J.; Jalil, Y.; Solano, R.; et al. Effectiveness of telerehabilitation in physical therapy: A rapid overview. Phys. Ther. 2021, 101, pzab053.

15. Usmani, S.; Saboor, A.; Haris, M.; Khan, M.A.; Park, H. Latest research trends in fall detection and prevention using machine learning: A systematic review. Sensors 2021, 21, 5134.

16. Li, X.; He, Y.; Jing, X. A survey of deep learning-based human activity recognition in radar. Remote Sens. 2019, 11, 1068.

17. Texas Instruments. IWR6843, IWR6443 Single-Chip 60- to 64-GHz mmWave Sensor. 2021. Available online: https://www.ti.com/lit/ds/symlink/iwr6843.pdf?ts=1669861629404&ref_url=https%253A%252F%252Fwww.google.com.hk%252F (accessed on 25 June 2023).

18. Alhazmi, A.K.; Alanazi, M.A.; Liu, C.; Chodavarapu, V.P. Machine Learning Enabled Fall Detection with Compact Millimeter Wave System. In Proceedings of the NAECON 2021-IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 16–19 August 2021; pp. 217–222.

19. Singh, A.D.; Sandha, S.S.; Garcia, L.; Srivastava, M. RadHAR: Human activity recognition from point clouds generated through a millimeter-wave radar. In Proceedings of the 3rd ACM Workshop on Millimeter-Wave Networks and Sensing Systems, Los Cabos, Mexico, 25 October 2019; pp. 51–56.

20. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

21. Huang, T.; Liu, G.; Li, S.; Liu, J. RPCRS: Human Activity Recognition Using Millimeter Wave Radar. In Proceedings of the 2022 IEEE 28th International Conference on Parallel and Distributed Systems (ICPADS), Nanjing, China, 10–12 January 2023; pp. 122–129.

22. Beddiar, D.R.; Nini, B.; Sabokrou, M.; Hadid, A. Vision-based human activity recognition: A survey. Multimed. Tools Appl. 2020, 79, 30509–30555.

23. Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 1–33.

24. Bouchabou, D.; Nguyen, S.M.; Lohr, C.; LeDuc, B.; Kanellos, I. A survey of human activity recognition in smart homes based on IoT sensors algorithms: Taxonomies, challenges, and opportunities with deep learning. Sensors 2021, 21, 6037.

25. Kim, K.; Jalal, A.; Mahmood, M. Vision-based human activity recognition system using depth silhouettes: A smart home system for monitoring the residents. J. Electr. Eng. Technol. 2019, 14, 2567–2573.

26. Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep learning in human activity recognition with wearable sensors: A review on advances. Sensors 2022, 22, 1476.

27. Bibbò, L.; Carotenuto, R.; Della Corte, F. An overview of indoor localization system for human activity recognition (HAR) in healthcare. Sensors 2022, 22, 8119.

28. Tarafdar, P.; Bose, I. Recognition of human activities for wellness management using a smartphone and a smartwatch: A boosting approach. Decis. Support Syst. 2021, 140, 113426.

29. Tan, T.H.; Shih, J.Y.; Liu, S.H.; Alkhaleefah, M.; Chang, Y.L.; Gochoo, M. Using a Hybrid Neural Network and a Regularized Extreme Learning Machine for Human Activity Recognition with Smartphone and Smartwatch. Sensors 2023, 23, 3354.

30. Ramezani, R.; Cao, M.; Earthperson, A.; Naeim, A. Developing a Smartwatch-Based Healthcare Application: Notes to Consider. Sensors 2023, 23, 6652.

31. Kheirkhahan, M.; Nair, S.; Davoudi, A.; Rashidi, P.; Wanigatunga, A.A.; Corbett, D.B.; Mendoza, T.; Manini, T.M.; Ranka, S. A smartwatch-based framework for real-time and online assessment and mobility monitoring. J. Biomed. Inform. 2019, 89, 29–40.

32. Montes, J.; Young, J.C.; Tandy, R.; Navalta, J.W. Reliability and validation of the Hexoskin wearable bio-collection device during walking conditions. Int. J. Exerc. Sci. 2018, 11, 806.

33. Ravichandran, V.; Sadhu, S.; Convey, D.; Guerrier, S.; Chomal, S.; Dupre, A.M.; Akbar, U.; Solanki, D.; Mankodiya, K. iTex Gloves: Design and In-Home Evaluation of an E-Textile Glove System for Tele-Assessment of Parkinson’s Disease. Sensors 2023, 23, 2877.

34. di Biase, L.; Pecoraro, P.M.; Pecoraro, G.; Caminiti, M.L.; Di Lazzaro, V. Markerless radio frequency indoor monitoring for telemedicine: Gait analysis, indoor positioning, fall detection, tremor analysis, vital signs and sleep monitoring. Sensors 2022, 22, 8486.

35. Rezaei, A.; Mascheroni, A.; Stevens, M.C.; Argha, R.; Papandrea, M.; Puiatti, A.; Lovell, N.H. Unobtrusive Human Fall Detection System Using mmWave Radar and Data Driven Methods. IEEE Sens. J. 2023, 23, 7968–7976.

36. Pareek, P.; Thakkar, A. A survey on video-based human action recognition: Recent updates, datasets, challenges, and applications. Artif. Intell. Rev. 2021, 54, 2259–2322.

37. Xu, D.; Qi, X.; Li, C.; Sheng, Z.; Huang, H. Wise information technology of med: Human pose recognition in elderly care. Sensors 2021, 21, 7130.

38. Lan, G.; Liang, J.; Liu, G.; Hao, Q. Development of a smart floor for target localization with Bayesian binary sensing. In Proceedings of the 2017 IEEE 31st International Conference on Advanced Information Networking and Applications (AINA), Taipei, Taiwan, 27–29 March 2017; pp. 447–453.

39. Luo, Y.; Li, Y.; Foshey, M.; Shou, W.; Sharma, P.; Palacios, T.; Torralba, A.; Matusik, W. Intelligent carpet: Inferring 3D human pose from tactile signals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11255–11265.

40. Zhao, Y.; Zhou, H.; Lu, S.; Liu, Y.; An, X.; Liu, Q. Human activity recognition based on non-contact radar data and improved PCA method. Appl. Sci. 2022, 12, 7124.

41. Iovescu, C.; Rao, S. The Fundamentals of Millimeter Wave Sensors; Texas Instruments: Dallas, TX, USA, 2017; pp. 1–8.

42. Jin, F.; Sengupta, A.; Cao, S. mmFall: Fall detection using 4-D mmWave radar and a hybrid variational RNN autoencoder. IEEE Trans. Autom. Sci. Eng. 2020, 19, 1245–1257.

43. Broeder, G. Human Activity Recognition Using a mmWave Radar. Bachelor’s Thesis, University of Twente, Enschede, The Netherlands, 2022.

44. An, S.; Ogras, U.Y. MARS: mmWave-based assistive rehabilitation system for smart healthcare. ACM Trans. Embed. Comput. Syst. (TECS) 2021, 20, 1–22.

45. Zhang, R.; Cao, S. Real-time human motion behavior detection via CNN using mmWave radar. IEEE Sens. Lett. 2018, 3, 3500104.

46. Jin, F.; Zhang, R.; Sengupta, A.; Cao, S.; Hariri, S.; Agarwal, N.K.; Agarwal, S.K. Multiple patients behavior detection in real-time using mmWave radar and deep CNNs. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019; pp. 1–6.

47. Cui, H.; Dahnoun, N. Real-time short-range human posture estimation using mmWave radars and neural networks. IEEE Sens. J. 2021, 22, 535–543.

48. Liu, K.; Zhang, Y.; Tan, A.; Sun, Z.; Ding, C.; Chen, J.; Wang, B.; Liu, J. Micro-Doppler feature and image based human activity classification with FMCW radar. In Proceedings of the IET International Radar Conference (IET IRC 2020), Online, 4–6 November 2020; Volume 2020, pp. 1689–1694.

49. Tiwari, G.; Gupta, S. An mmWave radar based real-time contactless fitness tracker using deep CNNs. IEEE Sens. J. 2021, 21, 17262–17270.

50. Wu, J.; Cui, H.; Dahnoun, N. A voxelization algorithm for reconstructing mmWave radar point cloud and an application on posture classification for low energy consumption platform. Sustainability 2023, 15, 3342.

51. Li, Z.; Ni, H.; He, Y.; Li, J.; Huang, B.; Tian, Z.; Tan, W. mmBehavior: Human Activity Recognition System of millimeter-wave Radar Point Clouds Based on Deep Recurrent Neural Network. 2023; preprint.

52. Li, Z.; Li, W.; Liu, H.; Wang, Y.; Gui, G. Optimized PointNet for 3D object classification. In Proceedings of the Advanced Hybrid Information Processing: Third EAI International Conference, ADHIP 2019, Nanjing, China, 21–22 September 2019; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2019; pp. 271–278.

53. Rajab, K.Z.; Wu, B.; Alizadeh, P.; Alomainy, A. Multi-target tracking and activity classification with millimeter-wave radar. Appl. Phys. Lett. 2021, 119, 034101.

54. Ahmed, S.; Park, J.; Cho, S.H. FMCW radar sensor based human activity recognition using deep learning. In Proceedings of the 2022 International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 6–9 February 2022; pp. 1–5.

55. Werthen-Brabants, L.; Bhavanasi, G.; Couckuyt, I.; Dhaene, T.; Deschrijver, D. Split BiRNN for real-time activity recognition using radar and deep learning. Sci. Rep. 2022, 12, 7436.

56. Hassan, S.; Wang, X.; Ishtiaq, S.; Ullah, N.; Mohammad, A.; Noorwali, A. Human Activity Classification Based on Dual Micro-Motion Signatures Using Interferometric Radar. Remote Sens. 2023, 15, 1752.

57. Sun, Y.; Hang, R.; Li, Z.; Jin, M.; Xu, K. Privacy-preserving fall detection with deep learning on mmWave radar signal. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, NSW, Australia, 1–4 December 2019; pp. 1–4.

58. Senigagliesi, L.; Ciattaglia, G.; Gambi, E. Contactless walking recognition based on mmWave radar. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; pp. 1–4.

59. Xie, Y.; Jiang, R.; Guo, X.; Wang, Y.; Cheng, J.; Chen, Y. mmFit: Low-Effort Personalized Fitness Monitoring Using Millimeter Wave. In Proceedings of the 2022 International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 25–28 July 2022; pp. 1–10.

60. Texas Instruments. IWR6843ISK-ODS Product Details. Available online: https://www.ti.com/product/IWR6843ISK-ODS/part-details/IWR6843ISK-ODS (accessed on 9 April 2023).

61. Texas Instruments. Detection Layer Parameter Tuning Guide for the 3D People Counting Demo; Revision 3.0; Texas Instruments Incorporated: Dallas, TX, USA, 2023.

62. Texas Instruments. Group Tracker Parameter Tuning Guide for the 3D People Counting Demo; Revision 1.1; Texas Instruments Incorporated: Dallas, TX, USA, 2023.

63. NVIDIA Corporation. Jetson Nano Module. Available online: https://developer.nvidia.com/embedded/jetson-nano (accessed on 2 January 2023).

64. NVIDIA Corporation. Jetson Nano System-on-Module Data Sheet; Version 1; NVIDIA Corporation: Santa Clara, CA, USA, 2019.

65. Jeong, E.; Kim, J.; Ha, S. TensorRT-based framework and optimization methodology for deep learning inference on Jetson boards. ACM Trans. Embed. Comput. Syst. (TECS) 2022, 21, 51.

66. NVIDIA Corporation. NVIDIA TensorRT Developer Guide, NVIDIA Docs; Release 8.6.1; NVIDIA Corporation: Santa Clara, CA, USA, 2023.

67. Twilio Inc. Twilio’s REST APIs. Available online: https://www.twilio.com/docs/usage/api (accessed on 23 August 2023).

| Ref. | Preprocessing Method | Sensor Type | Model | Detected Activities | Overall Accuracy |
|---|---|---|---|---|---|
| [45] | Micro-Doppler Signatures | TI AWR1642 | CNN | Walking, swinging hands, sitting, and shifting. | 95.19% |
| [46] | Micro-Doppler Signatures | TI AWR1642 | CNN | Standing, walking, falling, swing, seizure, restless. | 98.7% |
| [53] | Micro-Doppler Signatures | TI IWR6843 | DNN | Standing, running, jumping jacks, jumping, jogging, squats. | 95% |
| [54] | Micro-Doppler Signatures | TI IWR6843ISK | CNN | Stand, sit, move toward, move away, pick up something from the ground, left, right, and stay still. | 91% |
| [55] | Micro-Doppler Signatures | TI xWR14xx, TI xWR68xx | RNN | Stand up, sit down, walk, fall, get in, lie down, roll in, sit in, and get out of bed. | NA |
| [56] | Dual Micro-Motion Signatures | TI AWR1642 | CNN | Standing, sitting, walking, running, jumping, punching, bending, and climbing. | 98% |
| [57] | Reflection Heatmap | Two TI IWR1642 | LSTM | Falling, walking, pickup, stand up, boxing, sitting, and jogging. | 80% |
| [58] | Doppler Maps | TI AWR1642 | PCA | Fast walking, slow walking (with or without swinging hands), and limping. | 96.1% |
| [59] | Spatial-Temporal Heatmaps | TI AWR1642 | CNN | 14 common in-home full-body workouts. | 97% |
| [47] | Heatmap Images | TI IWR1443 | CNN | Standing, walking, and sitting. | 71% |
| [48] | Doppler Images | TI AWR1642 | SVM | Stand up, pick up, drink while standing, walk, sit down. | 95% |
| [49] | Doppler Images | TI AWR1642 | SVM | Shoulder press, lateral raise, dumbbell, squat, boxing, right and left triceps. | NA |
| [19] | Voxelization | TI IWR1443 | T-D CNN + B-D LSTM | Walking, jumping, jumping jacks, squats, and boxing. | 90.47% |
| [50] | Voxelization | TI IWR1443 | CNN | Sitting posture with various directions. | 99% |
| [21] | Raw Point Cloud | TI IWR1843 | PointNet | Walking, rotating, waving, stooping, and falling. | 95.40% |
| This work | Raw Point Cloud | TI IWR6843 | PointNet | Standing, walking, sitting, lying, falling. | 99.5% |

CNN: convolutional neural network; DNN: deep neural network; RNN: recurrent neural network; NA: not available; LSTM: long short-term memory; PCA: principal component analysis; SVM: support vector machine; T-D: time-distributed; B-D: bi-directional.

| Parameter | Value |
|---|---|
| Type | FMCW |
| Frequency band | 60–64 GHz |
| Start frequency (f_0) | 60.75 GHz |
| Idle time (T_idle) | 30 µs |
| Bandwidth (B) | 1780.41 MHz |
| Number of transmitters (Tx) | 3 |
| Number of receivers (Rx) | 4 |
| Total virtual antennas (N_Tx × N_Rx) | 12 |
| Transmit power (P_Tx) | −10 dBm |
| Receiver noise figure | 16 dB |
| Combined Tx/Rx antenna gain (G_Tx, G_Rx) | 16 dB |
| Azimuth field of view (FoV) | 120° |
| Elevation field of view (FoV) | 120° |
| Chirp time (T_c) | 32.5 µs |
| Inter-chirp time (T_r) | 267.30 µs |
| Number of chirps per frame (N_c) | 96 |
| Maximum beat frequency (f_b) | 2.66 MHz |
| Center frequency (f_c) | 63.01 GHz |
| Required detection SNR | 12 dB |
| Maximum unambiguous range (r_max,u) | 7.28 m |
| Maximum detection range based on SNR (r_max,d) | 18.27 m |
| Maximum unambiguous velocity (v_max) | 4.45 m/s |
| Range resolution (Δr) | 0.0842 m |
| Velocity resolution (Δv) | 0.0928 m/s |
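The derived entries at the bottom of the radar table can be checked against the standard FMCW relations (see, e.g., [41]): range resolution Δr = c/(2B), maximum unambiguous range r_max,u = f_b·c/(2S) with chirp slope S = B/T_c, maximum unambiguous velocity v_max = λ/(4T_r), and velocity resolution Δv = λ/(2N_cT_r), where λ = c/f_c. The sketch below, with a hypothetical helper `fmcw_derived`, plugs the table's inputs into these formulas under the assumption that the paper used these textbook expressions. It agrees with the listed values to within rounding (Δv differs by about 0.0001 m/s). The SNR-based range r_max,d also depends on radar-equation terms such as target radar cross-section that are not listed, so it is not recomputed here.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def fmcw_derived(bandwidth_hz: float, center_freq_hz: float, chirp_time_s: float,
                 inter_chirp_s: float, n_chirps: int, max_beat_hz: float) -> dict:
    """Textbook FMCW relations for resolution and unambiguous limits."""
    wavelength = C / center_freq_hz          # lambda = c / f_c
    slope = bandwidth_hz / chirp_time_s      # chirp slope S = B / T_c (Hz/s)
    return {
        "range_resolution_m": C / (2 * bandwidth_hz),
        "max_unambiguous_range_m": max_beat_hz * C / (2 * slope),
        "max_velocity_mps": wavelength / (4 * inter_chirp_s),
        "velocity_resolution_mps": wavelength / (2 * n_chirps * inter_chirp_s),
    }


# Inputs copied from the radar configuration table.
derived = fmcw_derived(bandwidth_hz=1780.41e6, center_freq_hz=63.01e9,
                       chirp_time_s=32.5e-6, inter_chirp_s=267.30e-6,
                       n_chirps=96, max_beat_hz=2.66e6)
for name, value in derived.items():
    print(f"{name}: {value:.4f}")
```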

| Parameter | Specification |
|---|---|
| GPU | 128-core NVIDIA Maxwell architecture |
| CPU | Quad-core ARM Cortex-A57 MPCore processor |
| RAM | 64-bit LPDDR4 |
| Memory capacity | 4 GB |
| Max memory bus frequency | 1600 MHz |
| Peak bandwidth | 25.6 GB/s |
| Storage | 16 GB eMMC 5.1 |
| Power | 5 W |
| Mechanical | 69.6 mm × 45 mm, 260-pin edge connector |
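The peak-bandwidth entry follows from the memory interface listed in the same table: a 64-bit bus moves 8 bytes per transfer, and assuming the 1600 MHz figure is the memory clock of a double-data-rate interface (two transfers per clock, i.e., 3200 MT/s), the product is 25.6 GB/s. A one-function check, using a hypothetical helper `peak_bandwidth_gb_s`:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, memory_clock_mhz: float,
                        transfers_per_clock: int = 2) -> float:
    """Peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes); 2 transfers/clock assumes DDR."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


print(peak_bandwidth_gb_s(64, 1600))  # 25.6
```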

| Parameter | Mean ± SD (Range) |
|---|---|
| Age (years) | 24 ± 7.42 (21–53) |
| Height (cm) | 169 ± 5.32 (158–186) |
| Weight (kg) | 76 ± 11.53 (55–115) |
| BMI (kg/m²) | 25.44 ± 4.36 (19.56–40.55) |
| Gender (M/F) | 57/32 |

BMI: body mass index; M: male; F: female.
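BMI is body mass divided by the square of height in metres, which is why its unit is kg/m². The mean of the participants' individual BMIs (25.44) need not equal the BMI computed from the mean weight and mean height; the sketch below, using a hypothetical helper `bmi`, shows that the latter comes out near 26.6.

```python
def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index in kg/m^2 (height converted from cm to m)."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


# BMI of the "mean participant", which is not the same as the mean of individual BMIs.
print(round(bmi(76, 169), 2))  # 26.61
```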

| Metric | Standing | Walking | Sitting | Lying | Falling |
|---|---|---|---|---|---|
| F1-score | 1.00 | 1.00 | 1.00 | 0.9767 | 0.9756 |
| Precision | 1.00 | 1.00 | 1.00 | 1.00 | 0.9524 |
| Recall | 1.00 | 1.00 | 1.00 | 0.9545 | 1.00 |
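The F1-score is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the table's own precision and recall values for the two imperfect classes reproduces the reported F1-scores; the helper name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall taken from the per-class metrics table.
reported = {"Lying": (1.00, 0.9545), "Falling": (0.9524, 1.00)}
for activity, (p, r) in reported.items():
    print(activity, round(f1_score(p, r), 4))  # Lying 0.9767, Falling 0.9756
```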

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alhazmi, A.K.; Alanazi, M.A.; Alshehry, A.H.; Alshahry, S.M.; Jaszek, J.; Djukic, C.; Brown, A.; Jackson, K.; Chodavarapu, V.P. Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine. Sensors 2024, 24, 268. https://doi.org/10.3390/s24010268

Alhazmi AK, Alanazi MA, Alshehry AH, Alshahry SM, Jaszek J, Djukic C, Brown A, Jackson K, Chodavarapu VP. Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine. Sensors. 2024; 24(1):268. https://doi.org/10.3390/s24010268

Chicago/Turabian Style: Alhazmi, Abdullah K., Mubarak A. Alanazi, Awwad H. Alshehry, Saleh M. Alshahry, Jennifer Jaszek, Cameron Djukic, Anna Brown, Kurt Jackson, and Vamsy P. Chodavarapu. 2024. "Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine" Sensors 24, no. 1: 268. https://doi.org/10.3390/s24010268

APA Style: Alhazmi, A. K., Alanazi, M. A., Alshehry, A. H., Alshahry, S. M., Jaszek, J., Djukic, C., Brown, A., Jackson, K., & Chodavarapu, V. P. (2024). Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine. Sensors, 24(1), 268. https://doi.org/10.3390/s24010268