The Aerial Guide Dog: A Low-Cognitive-Load Indoor Electronic Travel Aid for Visually Impaired Individuals

Abstract

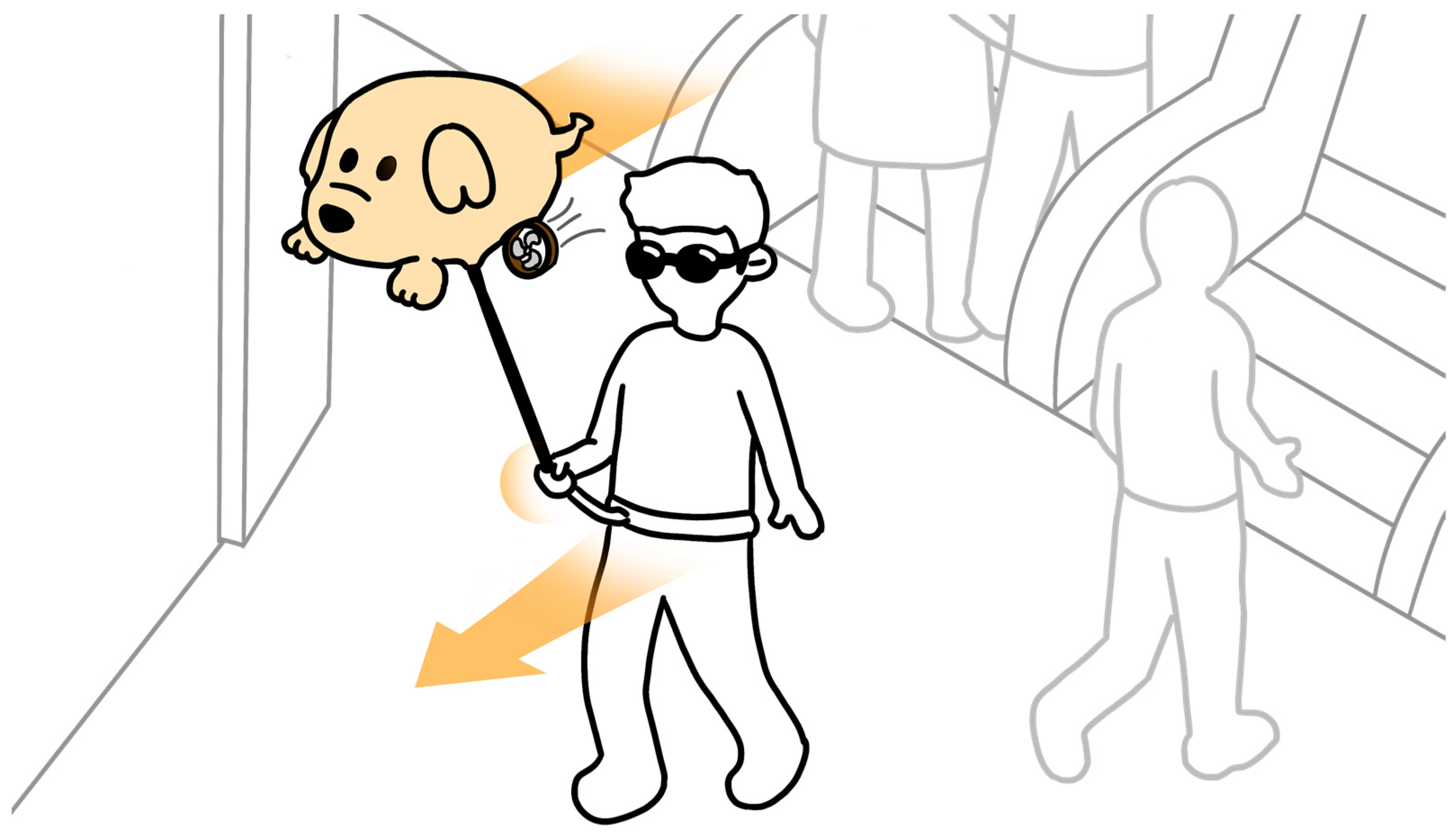

1. Introduction

- A new approach to indoor electronic travel aids (ETAs) based on tactile sensory substitution.

- A prototype system that is potentially easy to use, requires short training times, and is cost-effective.

- Preliminary results from pilot studies validating the prototype’s effectiveness through directional perception experiments and path-following tests.

2. Related Work

3. Design and Implementation

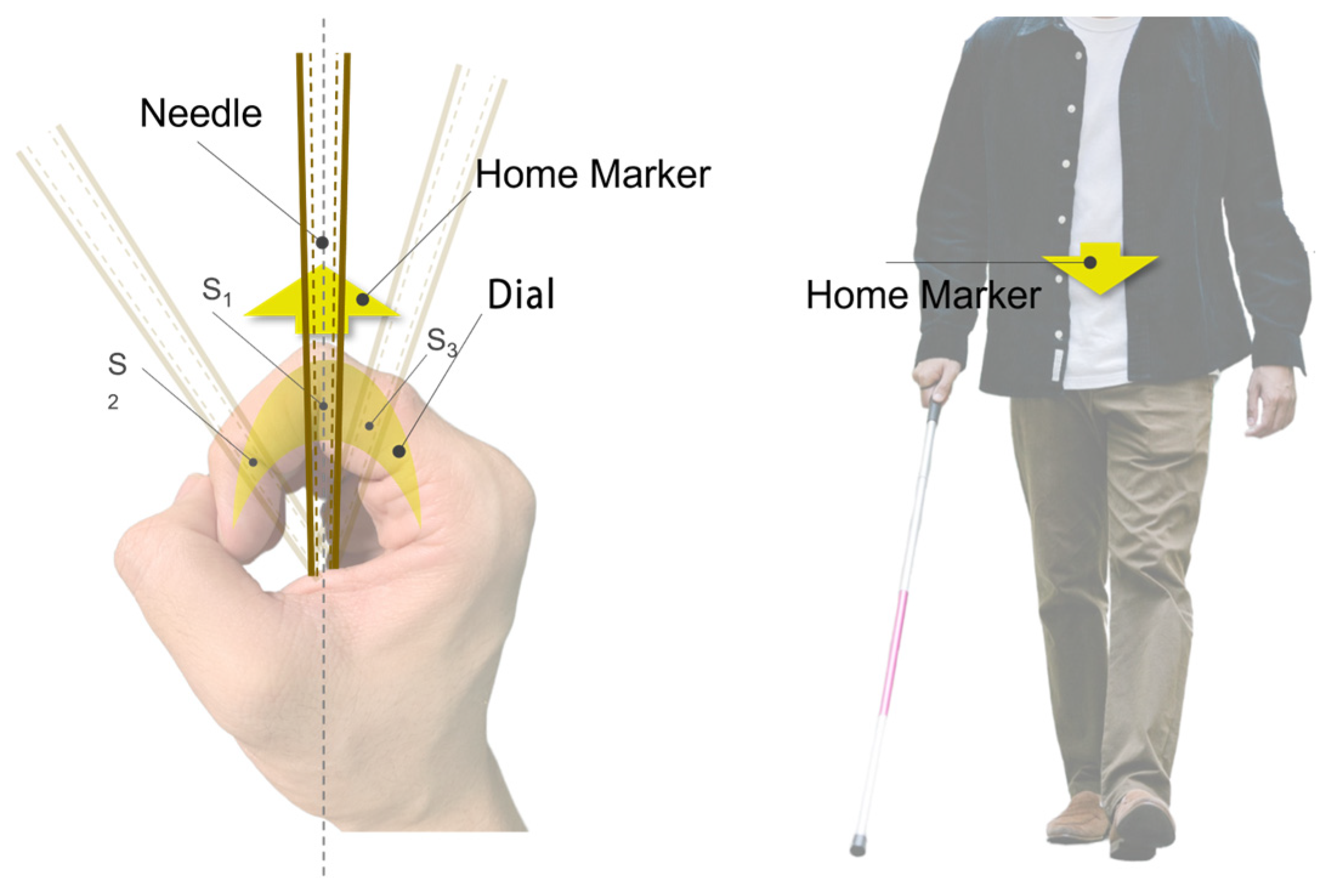

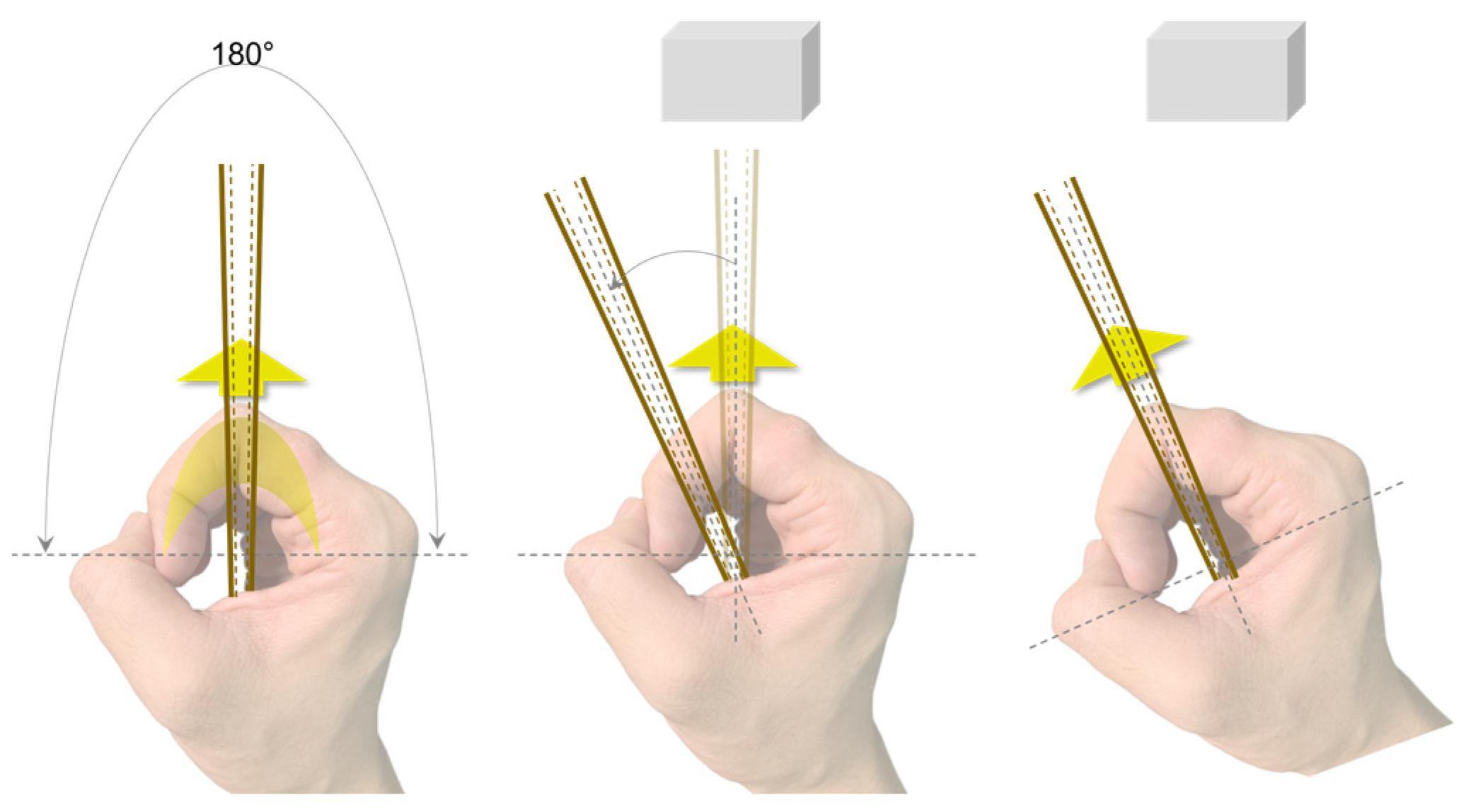

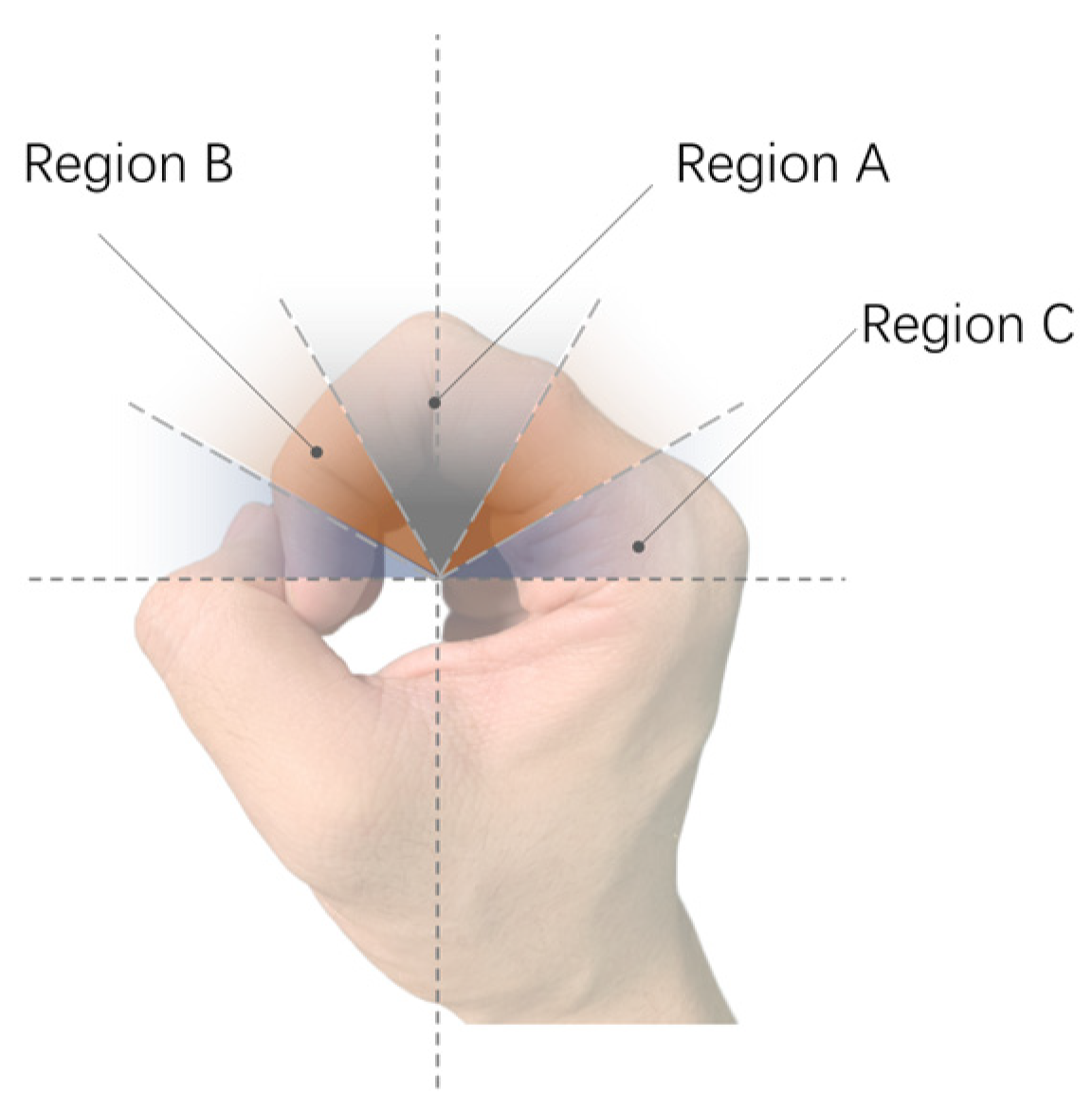

3.1. Spatial Mapping and Perceptual Characteristics of the Index Finger

3.2. Wearable Tactile Prototype and Its Interaction Methodology

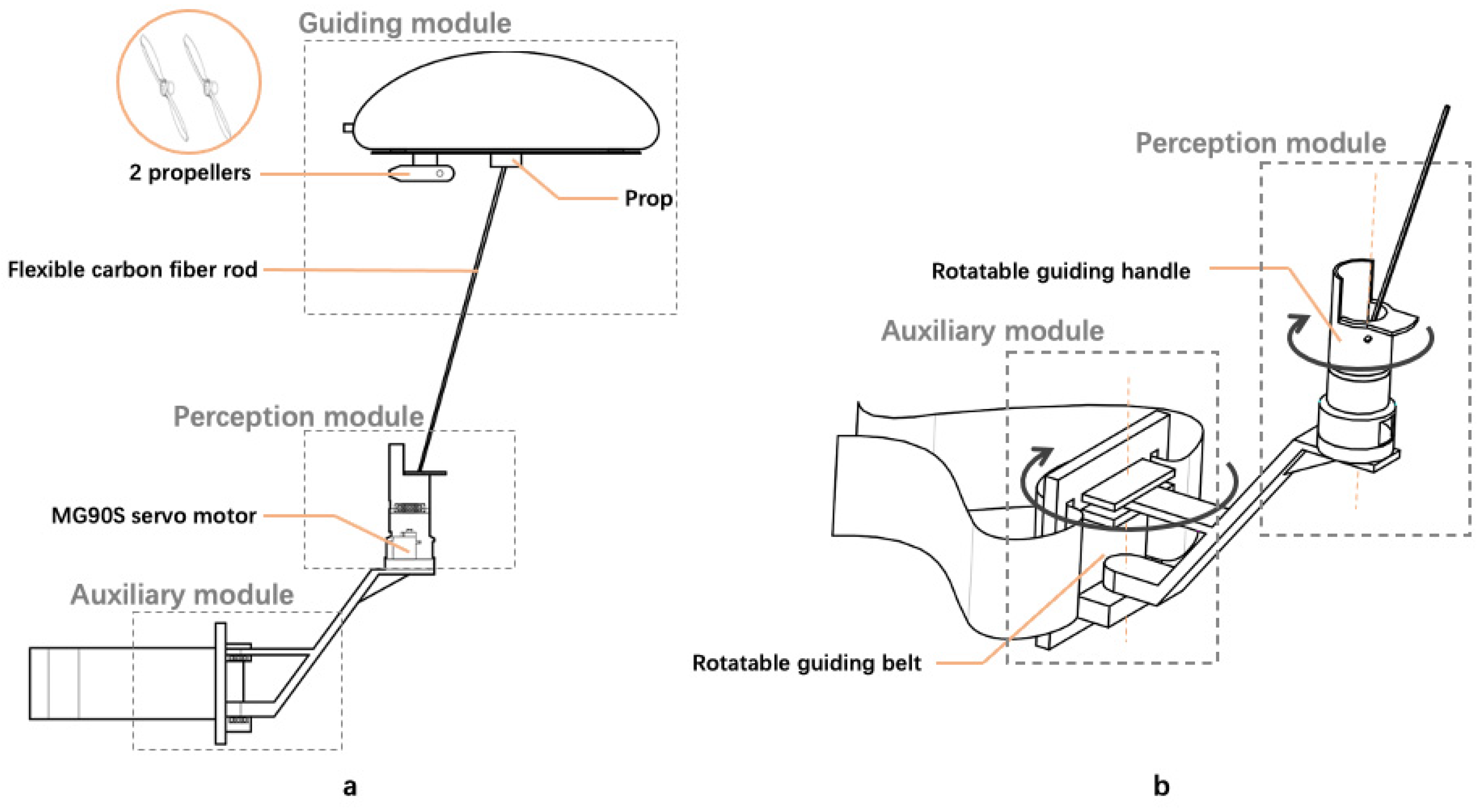

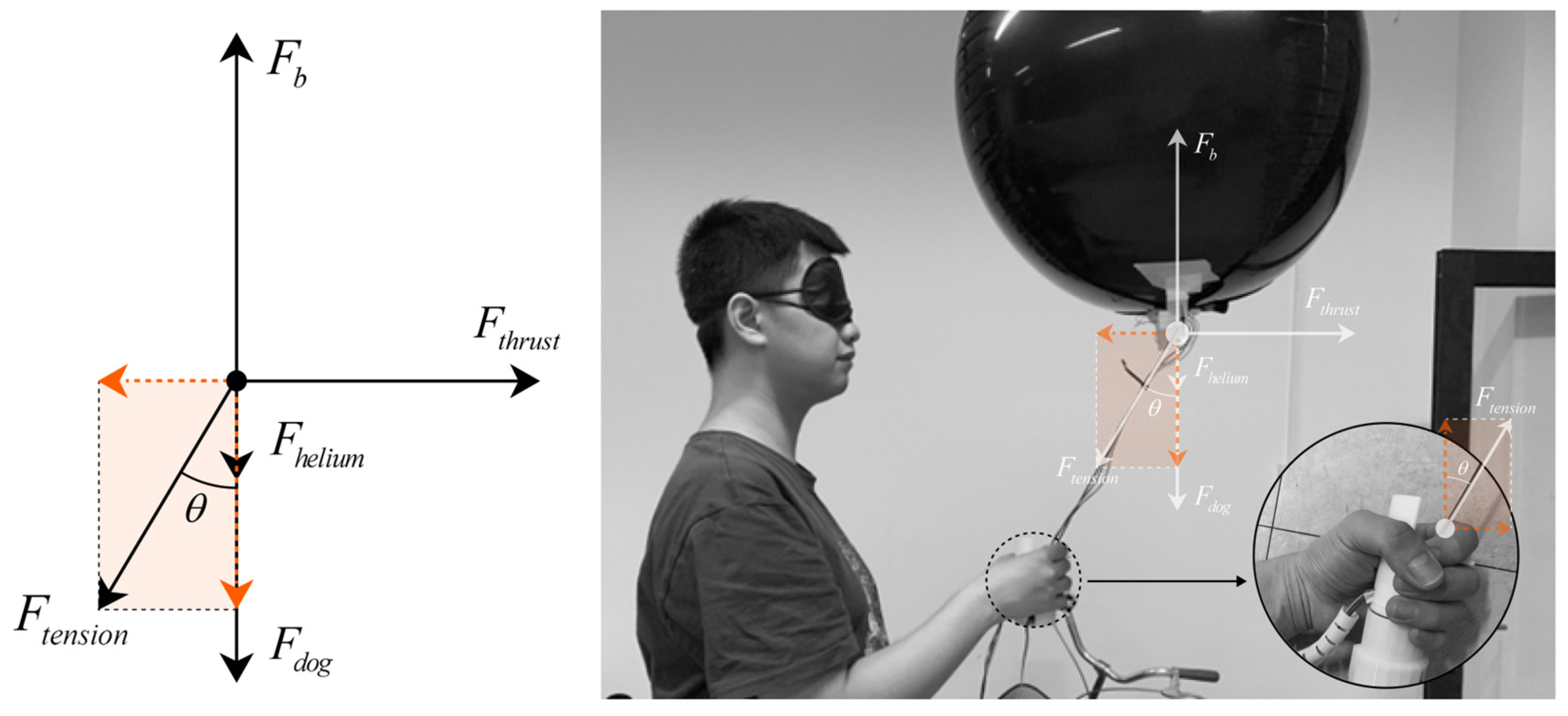

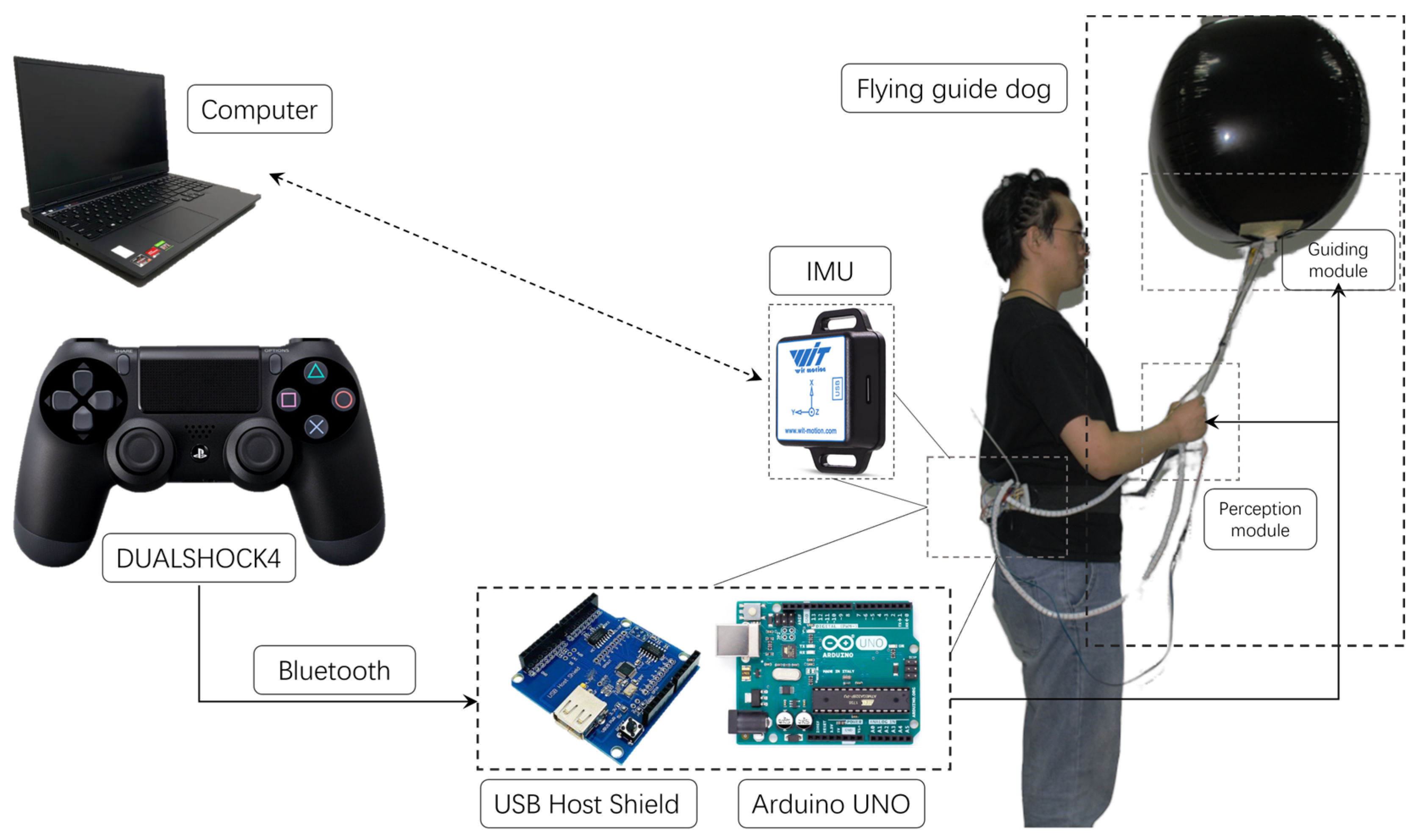

- The guidance module: as shown in Figure 3a, the main component is a 32-inch aluminum film balloon filled with helium (referred to here as the helium balloon aerostat drone). A 3D-printed connector secures the helium balloon aerostat drone to a flexible carbon fiber rod. Two propellers mounted on the bottom of the aerostat drone generate horizontal thrust when they rotate, propelling it forward.

- The perception module: as shown in Figure 3a, the MG90S servo motor inside the perception module is connected to the flexible carbon fiber rod and controls the rod's angular position, conveying the direction of movement to the user's fingers. As depicted in Figure 3b, the exterior of the perception module features a rotatable 3D-printed handle that the user grips and that helps the user adjust wrist rotation. A vibration motor embedded in the handle provides additional tactile feedback each time the rod moves.

- The auxiliary module: as shown in Figure 3b, the auxiliary module primarily consists of a servo motor, a 3D-printed support structure, and a waist belt. It is designed to assist users in adjusting their body orientation.
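The perception module encodes direction as the servo-controlled angle of the rod, but this excerpt does not say how a target direction is turned into a servo command. The following is a minimal Python sketch under loudly stated assumptions: the straight-ahead rod position at a servo angle of 90°, a usable range of 0° to 180°, a clockwise-positive heading convention, and a linear 500 to 2500 µs PWM pulse range (hobby servos such as the MG90S are commonly driven at 50 Hz, but the exact pulse limits must be calibrated on the real hardware). `ServoConfig`, `direction_to_servo_angle`, and `servo_angle_to_pulse_us` are hypothetical names, not part of the authors' system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServoConfig:
    """Hypothetical calibration values; none of these come from the paper."""
    center_deg: float = 90.0      # servo angle at which the rod points straight ahead
    min_deg: float = 0.0          # lower mechanical limit of the servo
    max_deg: float = 180.0        # upper mechanical limit of the servo
    min_pulse_us: float = 500.0   # PWM pulse width commanding min_deg (assumed)
    max_pulse_us: float = 2500.0  # PWM pulse width commanding max_deg (assumed)


def direction_to_servo_angle(relative_heading_deg: float,
                             cfg: ServoConfig = ServoConfig()) -> float:
    """Map a target heading relative to the user's facing direction
    (positive = clockwise, i.e. to the right; an assumed convention)
    to a servo angle."""
    # Wrap the heading into [-180, 180) so that, e.g., 270 is treated as -90.
    wrapped = (relative_heading_deg + 180.0) % 360.0 - 180.0
    # Offset from the straight-ahead position, then clamp to the servo range;
    # headings outside the range saturate at the nearest limit.
    angle = cfg.center_deg + wrapped
    return max(cfg.min_deg, min(cfg.max_deg, angle))


def servo_angle_to_pulse_us(angle_deg: float,
                            cfg: ServoConfig = ServoConfig()) -> float:
    """Linearly interpolate the PWM pulse width (microseconds) for a servo angle."""
    fraction = (angle_deg - cfg.min_deg) / (cfg.max_deg - cfg.min_deg)
    return cfg.min_pulse_us + fraction * (cfg.max_pulse_us - cfg.min_pulse_us)


if __name__ == "__main__":
    for heading in (0.0, 30.0, -45.0, 270.0):
        angle = direction_to_servo_angle(heading)
        pulse = servo_angle_to_pulse_us(angle)
        print(f"heading {heading:+.0f} deg -> servo {angle:.1f} deg, pulse {pulse:.0f} us")
```

Clamping rather than wrapping at the servo limits keeps the rod from swinging abruptly between extremes when the target lies behind the user; in the actual device, large reorientations may instead be handled by the auxiliary waist module.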

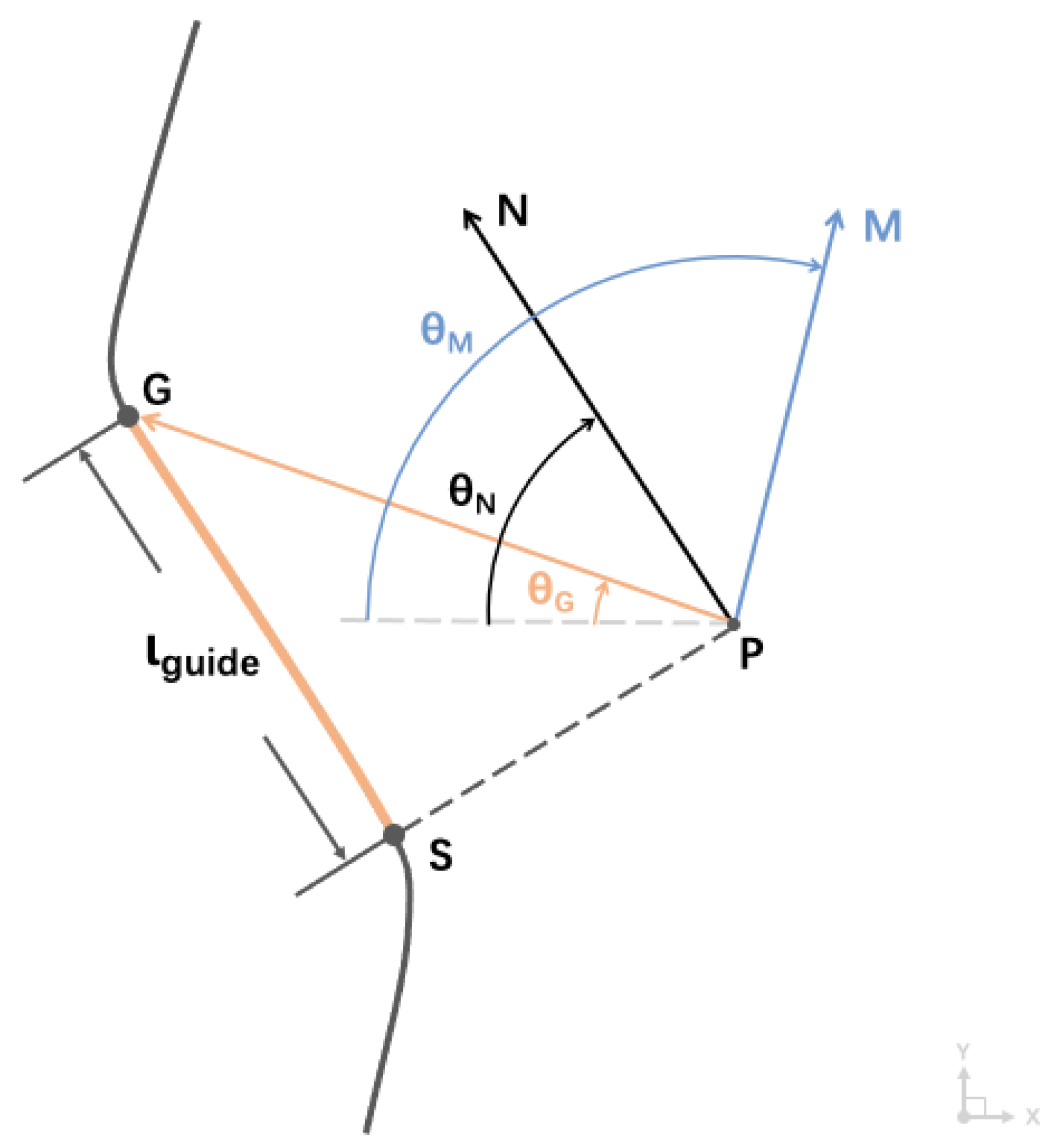

3.3. Guidance Strategy

3.3.1. Direction Indication Strategy

3.3.2. Path-Following Strategy

4. Pilot Study

4.1. Directional Perception Study

4.1.1. Participants and Apparatus

4.1.2. Procedure

4.1.3. Results

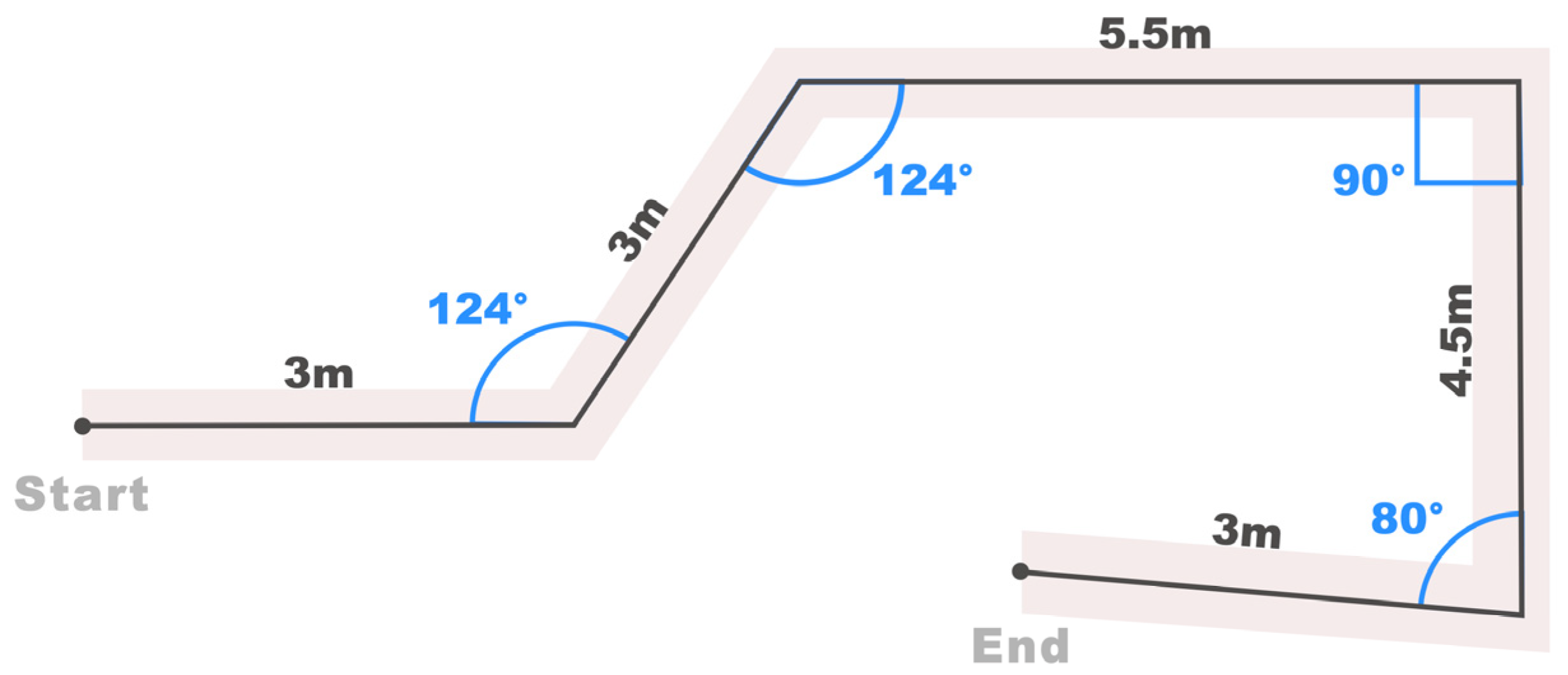

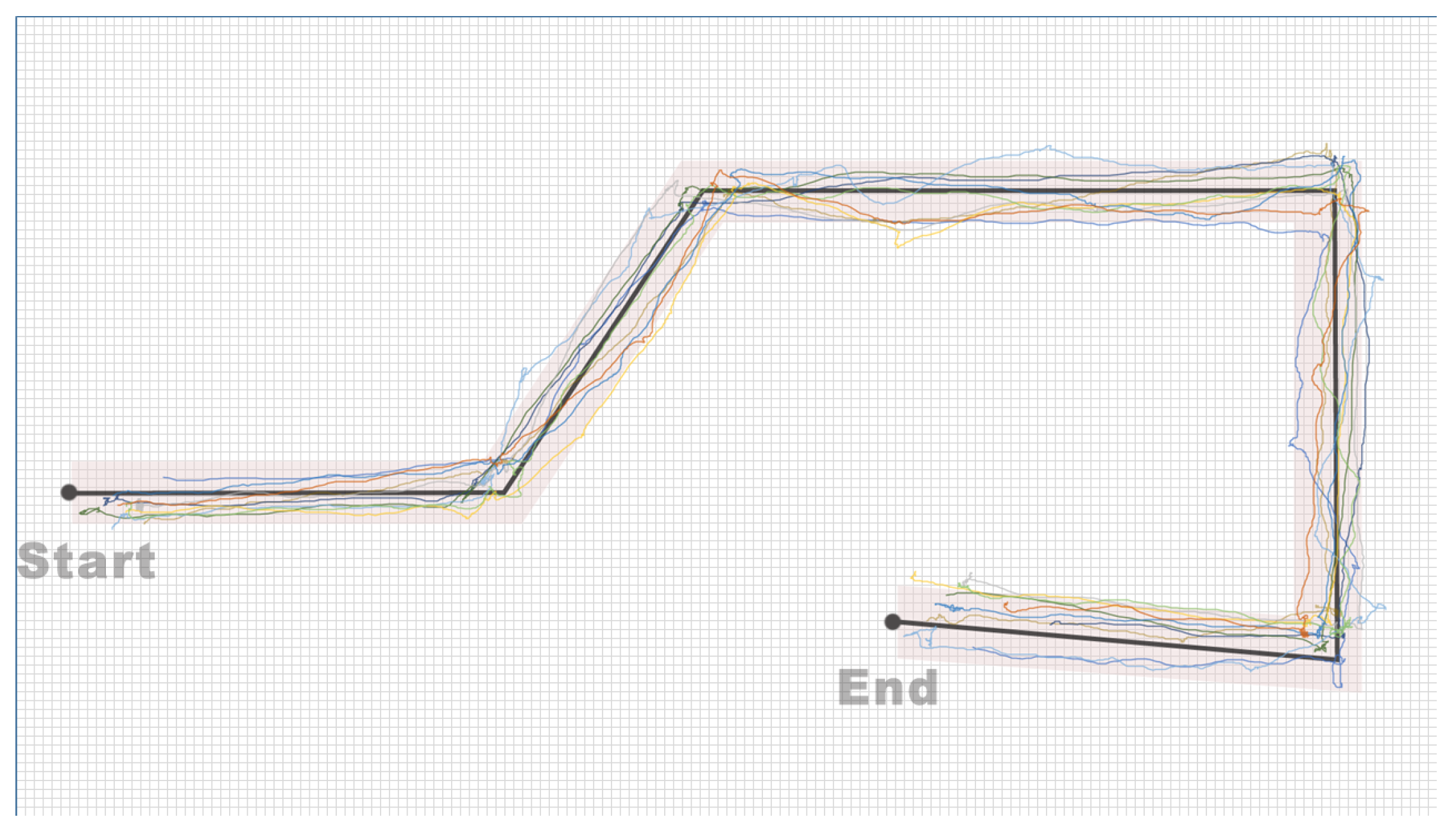

4.2. Evaluation of Path-Following Performance

4.2.1. Participants and Apparatus

4.2.2. Experimental Path

4.2.3. Procedure

4.2.4. Results

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| No. | Gender | Data Group | Proportion of Valid Data: SDS Situation | Proportion of Valid Data: Non-SDS Situation |
|---|---|---|---|---|
| 1 | M | Group 1 | 11/12 (91.67%) | 10/12 (83.33%) |
| 2 | M | Group 1 | 11/12 (91.67%) | 11/12 (91.67%) |
| 3 | M | Group 1 | 11/12 (91.67%) | 11/12 (91.67%) |
| 4 | F | Group 2 | 10/11 (90.91%) | 10/11 (90.91%) |
| 5 | F | Group 2 | 10/11 (90.91%) | 10/11 (90.91%) |
| 6 | M | Group 2 | 9/11 (81.82%) | 11/11 (100.00%) |
| 7 | M | Group 2 | 10/11 (90.91%) | 11/11 (100.00%) |
| 8 | F | Group 2 | 9/11 (81.82%) | 10/11 (90.91%) |
| 9 | F | Group 2 | 11/11 (100.00%) | 11/11 (100.00%) |
| 10 | M | Group 1 | 10/12 (83.33%) | 10/12 (83.33%) |
| 11 | M | Group 2 | 8/11 (72.73%) | 9/11 (81.82%) |
| 12 | F | Group 1 | 10/12 (83.33%) | 10/12 (83.33%) |
| 13 | M | Group 1 | 12/12 (100.00%) | 12/12 (100.00%) |
| 14 | M | Group 2 | 11/11 (100.00%) | 11/11 (100.00%) |
| 15 | M | Group 1 | 12/12 (100.00%) | 12/12 (100.00%) |
| 16 | F | Group 1 | 11/12 (91.67%) | 12/12 (100.00%) |
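The denominators show that each Group 1 participant contributed 12 data points per situation and each Group 2 participant 11. Pooling the per-participant counts summarizes the table at a glance. The Python sketch below transcribes the counts and sums them by group and situation; the pooled figures it prints (for example 166/184, about 90.22%, for the SDS situation) are an illustrative derivation from the table, not values reported in this excerpt. `ROWS`, `pooled`, and `as_percent` are hypothetical names.

```python
# Valid/total counts per participant, transcribed from the table above:
# (No., data group, SDS situation, non-SDS situation).
ROWS = [
    (1, "Group 1", (11, 12), (10, 12)),
    (2, "Group 1", (11, 12), (11, 12)),
    (3, "Group 1", (11, 12), (11, 12)),
    (4, "Group 2", (10, 11), (10, 11)),
    (5, "Group 2", (10, 11), (10, 11)),
    (6, "Group 2", (9, 11), (11, 11)),
    (7, "Group 2", (10, 11), (11, 11)),
    (8, "Group 2", (9, 11), (10, 11)),
    (9, "Group 2", (11, 11), (11, 11)),
    (10, "Group 1", (10, 12), (10, 12)),
    (11, "Group 2", (8, 11), (9, 11)),
    (12, "Group 1", (10, 12), (10, 12)),
    (13, "Group 1", (12, 12), (12, 12)),
    (14, "Group 2", (11, 11), (11, 11)),
    (15, "Group 1", (12, 12), (12, 12)),
    (16, "Group 1", (11, 12), (12, 12)),
]


def pooled(pairs: list[tuple[int, int]]) -> tuple[int, int]:
    """Sum valid and total counts over a list of (valid, total) pairs."""
    valid = sum(v for v, _ in pairs)
    total = sum(t for _, t in pairs)
    return valid, total


def as_percent(valid: int, total: int) -> float:
    """Percentage rounded to two decimals, the format used in the table."""
    return round(100.0 * valid / total, 2)


if __name__ == "__main__":
    for column, label in ((2, "SDS situation"), (3, "Non-SDS situation")):
        for group in ("Group 1", "Group 2"):
            v, t = pooled([row[column] for row in ROWS if row[1] == group])
            print(f"{label}, {group}: {v}/{t} ({as_percent(v, t):.2f}%)")
        v, t = pooled([row[column] for row in ROWS])
        print(f"{label}, all participants: {v}/{t} ({as_percent(v, t):.2f}%)")
```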

| | Median (P25~P75) | Z | p |
|---|---|---|---|
| Group 1 | 8.551 (4.932~15.575) | −0.763 | 0.445 |
| Group 2 | 8.686 (5.057~12.765) | | |

| | Median (P25~P75) | Z | p |
|---|---|---|---|
| Turn counterclockwise | 8.108 (4.296~12.162) | −0.352¹ | 0.725 |
| Turn clockwise | 8.004 (4.349~12.175) | | |

| | Median (P25~P75) | Z | p |
|---|---|---|---|
| Non-SDS Situation | 9.014 (4.749~12.785) | −0.591¹ | 0.445 |
| SDS Situation | 8.623 (5.654~15.170) | | |
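Each two-condition comparison above reports a Z statistic and a p-value. Under the standard normal approximation the two-sided p-value is p = 2(1 − Φ(|Z|)), which equals erfc(|Z|/√2). The excerpt does not name the test; a rank-based test such as the Mann-Whitney U or Wilcoxon signed-rank test is typical when medians and quartiles are reported, so treating the printed p-values as asymptotic two-sided values is an assumption of this sketch. The helper name `two_sided_p_from_z` is hypothetical.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Two-sided p-value for a statistic that is standard normal under H0.

    erfc(|z| / sqrt(2)) is algebraically equal to 2 * (1 - Phi(|z|)),
    where Phi is the standard normal CDF.
    """
    return math.erfc(abs(z) / math.sqrt(2.0))


# (Z, p) pairs as printed in the three tables above.
REPORTED = {
    "Group 1 vs Group 2": (-0.763, 0.445),
    "Turn counterclockwise vs clockwise": (-0.352, 0.725),
    "Non-SDS vs SDS situation": (-0.591, 0.445),
}

if __name__ == "__main__":
    for label, (z, p_reported) in REPORTED.items():
        p_approx = two_sided_p_from_z(z)
        print(f"{label}: Z = {z}, normal-approx p = {p_approx:.3f}, "
              f"reported p = {p_reported}")
```

Under this approximation, Z = −0.763 gives p ≈ 0.445 and Z = −0.352 gives p ≈ 0.725, matching the tables. For Z = −0.591 the same formula gives p ≈ 0.555 rather than the 0.445 listed beside it, so if the reported values are asymptotic, that pair is worth rechecking against the original analysis output.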

| | Median (P25~P75) | Chi-Square |
|---|---|---|
| Region A | 10.693 (7.947~17.224) | 14.533 |
| Region B | 5.427 (1.848~8.558) | |
| Region C | 7.614 (3.474~12.694) | |
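The Chi-Square column holds a single omnibus statistic for the three regions. Assuming it is a Kruskal-Wallis H statistic (the test is not named in this excerpt), it is referred to a chi-square distribution with k − 1 = 2 degrees of freedom. For an even number of degrees of freedom the upper tail has the closed form P(X ≥ x) = e^(−x/2) · Σ_{i=0}^{df/2−1} (x/2)^i / i!, so the p-value can be sketched without a statistics library. The function name `chi2_sf_even_df` is hypothetical.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with an
    even number of degrees of freedom:
    exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    term = 1.0   # i = 0 term of the series
    total = 1.0
    for i in range(1, df // 2):
        term *= half / i   # builds (x/2)**i / i! incrementally
        total += term
    return math.exp(-half) * total


H_STATISTIC = 14.533  # Chi-Square value reported for Regions A, B, and C
K_GROUPS = 3          # number of regions compared

if __name__ == "__main__":
    df = K_GROUPS - 1
    p = chi2_sf_even_df(H_STATISTIC, df)
    print(f"H = {H_STATISTIC}, df = {df}, p = {p:.6f}")
```

With two degrees of freedom the sum reduces to 1, so p = e^(−14.533/2) ≈ 0.0007, below 0.001; an omnibus result of this size is what motivates the pairwise follow-up comparisons in the next table.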

| Sig. (Adj. Sig. *) | Region B | Region C |
|---|---|---|
| Region A | <0.001 (<0.001) | 0.027 (0.081) |
| Region B | - | 0.114 (0.342) |
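In the pairwise table each adjusted value is exactly three times the raw value (0.027 becomes 0.081, 0.114 becomes 0.342), which matches a Bonferroni correction over the three region pairs. The footnote attached to "Adj. Sig. *" is not part of this excerpt, so naming the method Bonferroni is an inference from the numbers. A minimal sketch, with the hypothetical helper `bonferroni_adjust` and a conventional α = 0.05 that the excerpt does not state:

```python
def bonferroni_adjust(p_raw: float, n_comparisons: int) -> float:
    """Bonferroni-adjusted p-value: raw p times the number of comparisons, capped at 1."""
    if not 0.0 <= p_raw <= 1.0:
        raise ValueError("p_raw must lie in [0, 1]")
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return min(1.0, p_raw * n_comparisons)


N_PAIRS = 3    # Region A-B, A-C, and B-C
ALPHA = 0.05   # conventional significance level (assumed, not stated in the excerpt)

# Raw p-values from the pairwise table; A vs B is reported only as "<0.001".
RAW = {"Region A vs Region C": 0.027, "Region B vs Region C": 0.114}

if __name__ == "__main__":
    for pair, p in RAW.items():
        adj = bonferroni_adjust(p, N_PAIRS)
        verdict = "below" if adj < ALPHA else "not below"
        print(f"{pair}: raw p = {p}, adjusted p = {adj:.3f} ({verdict} alpha = {ALPHA})")
```

After adjustment, only the Region A vs Region B difference remains below 0.05; the Region A vs Region C difference (raw p = 0.027) does not survive the correction.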

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Pan, Z.; Song, Z.; Zhang, Y.; Li, W.; Ding, S. The Aerial Guide Dog: A Low-Cognitive-Load Indoor Electronic Travel Aid for Visually Impaired Individuals. Sensors 2024, 24, 297. https://doi.org/10.3390/s24010297