Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception

Abstract

1. Introduction

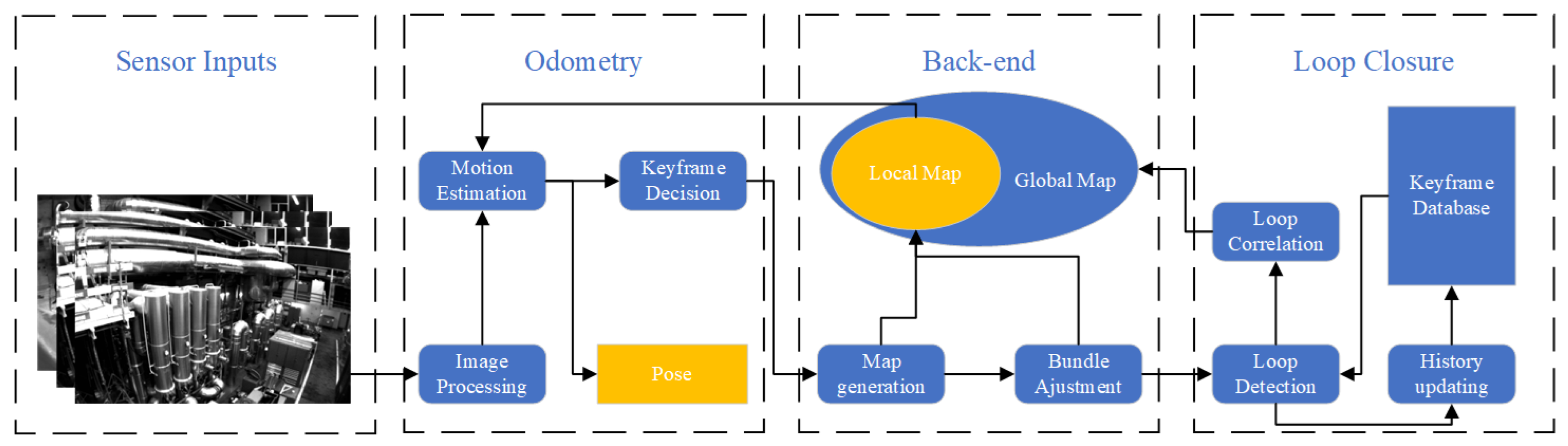

- Odometry: this is the basic module of the SLAM algorithm [13]. Its primary function is to process the latest image with feature-based or direct methods and find the correspondence between the current image and the reference image (or map). Once the correspondence is established, the camera pose can be estimated by epipolar geometry (2D–2D matches) [14], PnP (2D–3D matches) [15,16,17], or ICP (3D–3D matches) [18,19]. More recently, learning-based methods have been used for end-to-end camera pose estimation [20].
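As a minimal sketch of the 2D–2D case, the following Python snippet builds an essential matrix E = [t]×R from an assumed relative pose and checks that corresponding normalized image points satisfy the epipolar constraint x2ᵀ E x1 = 0. The pose, the 3D points, and all function names are illustrative assumptions and do not come from any of the cited systems; a real pipeline would estimate E from noisy matches instead of constructing it from a known pose.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def skew(t):
    """Return [t]_x, the skew-symmetric matrix with [t]_x v = t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]

def rot_y(angle):
    """Rotation by `angle` radians about the camera y axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def transform(R, t, p):
    """Map a point from camera-1 coordinates to camera-2 coordinates: R p + t."""
    return [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]

def normalize(p):
    """Project a 3D point onto the normalized image plane (z = 1)."""
    return [p[0] / p[2], p[1] / p[2], 1.0]

def epipolar_residual(E, x1, x2):
    """Evaluate x2^T E x1; zero for a geometrically consistent match."""
    Ex1 = [sum(E[i][k] * x1[k] for k in range(3)) for i in range(3)]
    return sum(x2[i] * Ex1[i] for i in range(3))

# Assumed relative pose between the two views (hypothetical values).
R = rot_y(0.1)
t = [0.5, 0.0, 0.1]
E = matmul(skew(t), R)

# Hypothetical 3D points expressed in the first camera frame.
points = [[0.3, -0.2, 4.0], [-1.0, 0.5, 6.0], [0.8, 0.9, 3.0]]
residuals = [
    epipolar_residual(E, normalize(P), normalize(transform(R, t, P)))
    for P in points
]

# Pairing the projection of one point with that of another breaks the constraint.
bad = epipolar_residual(
    E, normalize(points[0]), normalize(transform(R, t, points[1]))
)
```

The residuals of the correct matches vanish up to floating-point error, while the mismatched pair gives a clearly non-zero value, which is the property that outlier rejection schemes rely on.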

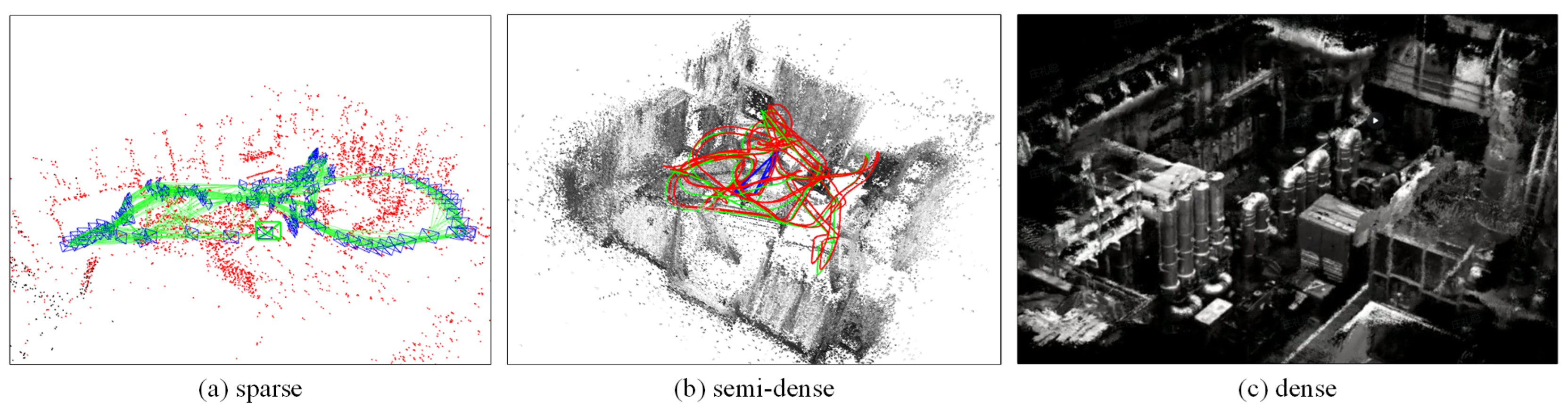

- Back end: in most state-of-the-art visual SLAM algorithms, this module maintains a globally consistent map by performing Bundle Adjustment (BA) [21]. On the one hand, a sliding-window strategy is adopted, which keeps a fixed number of keyframes by marginalizing old frames so that the BA cost stays bounded for real-time operation. On the other hand, a sparse map is constructed so that motion and structure can be optimized jointly for more accurate results, since joint optimization of motion and a dense map cannot run in real time.

- Loop closure: this module eliminates the error accumulated during large-scale, long-duration estimation. To this end, a loop detection procedure searches for potential loops. Once a loop is detected, a lightweight pose graph optimization corrects the trajectory, significantly improving the accuracy of the SLAM algorithm. Notably, the precision of loop detection is critical and must be ensured; otherwise, a false detection can directly cause the algorithm to fail.
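The effect of a loop-closure constraint on accumulated drift can be illustrated with a deliberately simplified, one-dimensional pose graph. The sketch below is an assumption-laden toy and not the formulation of any cited system: poses are scalars, the first pose is fixed at zero, all odometry edges have unit weight, and the least-squares problem is solved by Gauss-Seidel sweeps. The function name and parameters are hypothetical.

```python
def optimize_pose_graph(odometry, loop_offset, loop_weight=1.0, sweeps=500):
    """Minimize sum_i (x[i+1] - x[i] - odometry[i])^2
    + loop_weight * (x[n] - x[0] - loop_offset)^2 with x[0] fixed at 0."""
    n = len(odometry)

    # Initial guess: dead reckoning, which accumulates the odometry drift.
    poses = [0.0]
    for z in odometry:
        poses.append(poses[-1] + z)

    for _ in range(sweeps):
        # Interior poses: average of the predictions from both neighbours.
        for i in range(1, n):
            from_prev = poses[i - 1] + odometry[i - 1]
            from_next = poses[i + 1] - odometry[i]
            poses[i] = 0.5 * (from_prev + from_next)
        # Last pose: weighted average of odometry and loop-closure predictions.
        from_prev = poses[n - 1] + odometry[n - 1]
        from_loop = poses[0] + loop_offset
        poses[n] = (from_prev + loop_weight * from_loop) / (1.0 + loop_weight)
    return poses

# The true motion is +1, +1, -1, -1 (back to the start); each odometry
# reading carries an assumed bias of +0.1.
odometry = [1.1, 1.1, -0.9, -0.9]
dead_reckoned_end = sum(odometry)              # about 0.4 instead of 0
optimized = optimize_pose_graph(odometry, loop_offset=0.0)
# With unit weights the 0.4 discrepancy is shared equally by the five edges,
# so the optimized end pose is about 0.08.
```

Increasing `loop_weight` expresses more trust in the loop detection and pulls the final pose closer to the starting point, which also shows why a false loop detection is harmful: the optimizer would distribute a wrong constraint over the whole trajectory.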

- Low-cost sensor: Visual sensors are cheap and low-power. This is important for UAVs, which have relatively unstable control, limited payload capacity, and a limited power budget. Unstable control leads to a high rate of sensor damage and destruction; limited payload and power mean that sensors should be as light and power-efficient as possible. These factors make visual sensors popular in UAV applications.

- High frame rate: Visual sensors can capture images at a high frame rate, enabling algorithms to provide localization information more frequently. To exploit the agility of UAVs, high-frame-rate odometry is necessary for precise control.

- Capturing rich texture information: This is beneficial for UAV perception; rich texture information enables a high-level understanding of the environment, which in turn enables more intelligent UAV missions such as object tracking, semantic segmentation, and implicit reconstruction.

2. Camera Configuration and Data Processing

2.1. Camera Configuration

2.1.1. Regular Camera Type

2.1.2. Special Camera Type

2.2. Data Processing

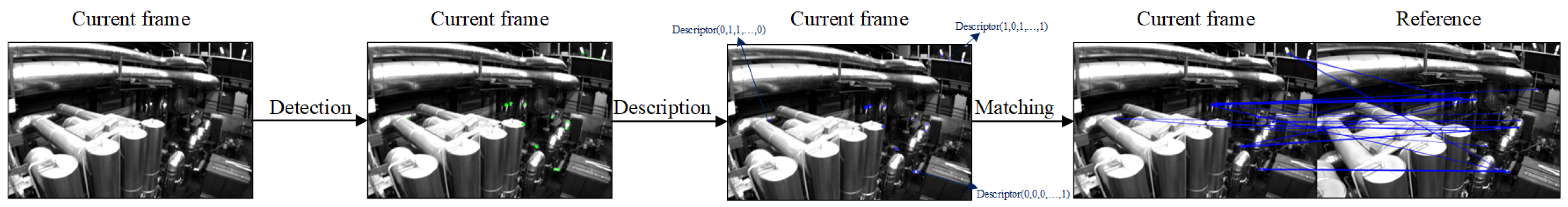

2.2.1. Feature Extraction Algorithms

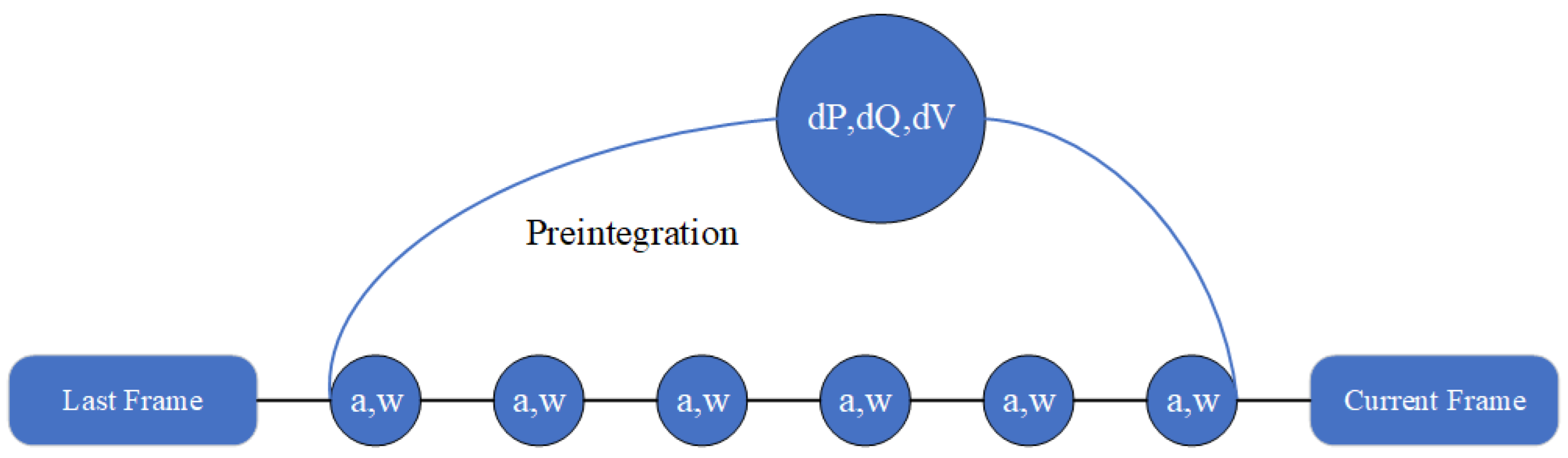

2.2.2. IMU Pre-Integration

3. Visual SLAM Algorithms

3.1. Real-Time Performance

- GPU boosting. A GPU is capable of highly parallel computing and is widely applied in learning-based methods. A common use of GPUs is to accelerate feature extraction, since GPUs are well suited to matrix computation; however, a visual SLAM system contains other procedures that can be parallelized, such as map generation and solving the BA equations. By exploiting the CPU and the GPU in parallel, the efficiency of visual SLAM can be further improved.

- Data management. Data query is one of the most frequent operations: for every new frame that enters the system, correspondences must be found with the reference frame, the local map, and the keyframe database. Therefore, choosing an appropriate data structure to manage the stored data and achieve efficient queries is crucial, especially in large-scale, long-term visual SLAM systems.
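One data structure commonly used for this kind of query is an inverted index over quantized feature descriptors ("visual words"), in the spirit of bag-of-words place recognition. The sketch below is only an illustration under that assumption: the class `KeyframeDatabase`, its methods, and the integer word ids are hypothetical and do not reproduce the API of any existing library.

```python
from collections import Counter, defaultdict

class KeyframeDatabase:
    """Toy keyframe database backed by an inverted index (word id -> keyframes)."""

    def __init__(self):
        self._inverted = defaultdict(set)   # visual word id -> set of keyframe ids
        self._words = {}                    # keyframe id -> set of visual word ids

    def add(self, keyframe_id, word_ids):
        """Register a keyframe together with the visual words observed in it."""
        words = set(word_ids)
        self._words[keyframe_id] = words
        for word in words:
            self._inverted[word].add(keyframe_id)

    def query(self, word_ids, min_shared=1):
        """Return (keyframe id, shared word count) pairs, best candidates first.

        Only keyframes sharing at least one word with the query are visited,
        so the cost does not grow with the number of unrelated keyframes.
        """
        votes = Counter()
        for word in set(word_ids):
            for keyframe_id in self._inverted.get(word, ()):
                votes[keyframe_id] += 1
        ranked = [(kf, n) for kf, n in votes.items() if n >= min_shared]
        ranked.sort(key=lambda item: (-item[1], item[0]))
        return ranked

db = KeyframeDatabase()
db.add(0, [1, 2, 3, 4])
db.add(1, [3, 4, 5, 6])
db.add(2, [7, 8, 9])

candidates = db.query([3, 4, 5])   # keyframe 1 shares three words, keyframe 0 two
no_match = db.query([10])          # no keyframe contains word 10
```

A production system would typically weight words (for example by TF-IDF) and verify candidates geometrically; the point here is only that the index restricts each query to keyframes that share content with the new frame.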

3.2. Texture-Less Environment

3.2.1. Dense Direct Formulation

3.2.2. Sparse Direct Formulation

3.2.3. Structure Feature and Multi-Camera

- Fully utilizing current information: in such a situation, how to extract useful information from images is crucial. Direct methods not only discard feature extraction but also exploit the intensity information of images, making it possible to build correspondences from the information currently available. That is why direct methods outperform feature-based methods in texture-less environments. Unfortunately, intensity information is less stable than features and can be severely affected by photometric noise. Structure features extract useful information from another perspective: lines and planes are common geometric primitives that, like points, help to build correspondences. However, introducing these relatively complex geometries reduces the system's efficiency and increases its complexity. Moreover, because of the limited FOV of the camera, the system may face a structure-degeneration problem. On the one hand, direct methods need to reduce the influence of photometric noise, for example through photometric calibration and exposure control, or be combined with a feature extractor that provides more stable information, thereby improving robustness. On the other hand, structure-feature-based methods need to simplify the geometric representation and reduce system complexity to improve efficiency.

- Gathering more information: a texture-less environment usually means partial texture deficiency, because it is hard to find a place with no texture at all. Therefore, simply enlarging the FOV of the sensor is a useful way to gather more information and support correspondence building. However, more data are then fed into the SLAM system, which reduces efficiency while bringing minimal improvement in texture-rich environments. Therefore, reducing data redundancy, improving system efficiency, and building data connections (for multi-camera systems) are essential.
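The sensitivity of direct methods to photometric changes can be made concrete with a small example. The sketch below assumes a simple affine brightness model, cur ≈ a · ref + b, between two patches of the same surface; this model, the synthetic intensity values, and the function names are illustrative assumptions and not the formulation of a specific cited method.

```python
def photometric_error(ref, cur, a=1.0, b=0.0):
    """Sum of squared intensity differences after mapping ref by a * ref + b."""
    return sum((c - (a * r + b)) ** 2 for r, c in zip(ref, cur))

def fit_affine_brightness(ref, cur):
    """Least-squares estimate of (a, b) in cur ~ a * ref + b."""
    n = len(ref)
    mean_ref = sum(ref) / n
    mean_cur = sum(cur) / n
    var_ref = sum((r - mean_ref) ** 2 for r in ref)
    if var_ref == 0.0:
        # A perfectly uniform patch carries no gradient to constrain the gain.
        raise ValueError("reference patch has no intensity variation")
    cov = sum((r - mean_ref) * (c - mean_cur) for r, c in zip(ref, cur))
    a = cov / var_ref
    b = mean_cur - a * mean_ref
    return a, b

# Synthetic patch intensities; the second view sees the same surface
# under an assumed exposure change (gain 1.5, offset 12).
ref_patch = [10.0, 20.0, 40.0, 80.0, 120.0]
cur_patch = [1.5 * value + 12.0 for value in ref_patch]

raw_error = photometric_error(ref_patch, cur_patch)
a, b = fit_affine_brightness(ref_patch, cur_patch)
compensated_error = photometric_error(ref_patch, cur_patch, a, b)
```

Although both patches show the same surface, the uncompensated error is large and would be misread as a bad correspondence; after estimating the gain and offset it drops to essentially zero. The guard for a uniform patch also hints at why texture deficiency is a problem in the first place.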

3.3. Dynamic Environment

3.3.1. Discarding Dynamic Information

3.3.2. Utilizing Dynamic Information

4. Visual–Inertial Fusion for UAV Localization

- Inertial measurements provide extra constraints for pure visual back-end optimization and improve its accuracy and robustness.

- The real-world scale can be recovered for monocular SLAM algorithms to solve the scale ambiguity problem.

- The high-frame-rate inertial measurements can rapidly propagate the odometry information for autonomous robot agents.

- When visual tracking fails to maintain the odometry, inertial measurements can provide a temporary prediction that keeps the system working.
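Two of the benefits listed above, high-rate propagation and metric scale recovery, can be sketched in a few lines. The example is a strongly simplified, one-dimensional illustration under stated assumptions: the accelerations are taken to be noise-free, bias-free, and already gravity-compensated, and the visual and inertial displacements are assumed to be time-aligned. The function names and numeric values are hypothetical.

```python
def propagate(position, velocity, accelerations, dt):
    """Dead-reckon position and velocity from a sequence of IMU accelerations."""
    for a in accelerations:
        position += velocity * dt + 0.5 * a * dt * dt
        velocity += a * dt
    return position, velocity

def recover_scale(visual_displacements, metric_displacements):
    """Least-squares scale s minimizing sum (s * visual - metric)^2."""
    numerator = sum(v * m for v, m in zip(visual_displacements, metric_displacements))
    denominator = sum(v * v for v in visual_displacements)
    return numerator / denominator

# One second of 200 Hz IMU samples with a constant 2 m/s^2 acceleration,
# e.g. bridging an interval in which visual tracking is unavailable.
imu_rate_hz = 200
samples = [2.0] * imu_rate_hz
position, velocity = propagate(0.0, 0.0, samples, 1.0 / imu_rate_hz)
# For constant acceleration this scheme reproduces p = a t^2 / 2 and v = a t.

# Up-to-scale displacements from monocular vision versus metric
# displacements obtained by integrating the IMU over the same intervals.
visual = [0.10, 0.25, 0.40]
metric = [0.20, 0.50, 0.80]
scale = recover_scale(visual, metric)
```

In practice the integration error grows quickly because of sensor noise and bias, which is why the inertial prediction is described as temporary and why real systems estimate the biases and the gravity direction jointly with the scale.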

4.1. EKF-Based Visual–Inertial SLAM

4.2. BA-Based Visual–Inertial SLAM

5. Learning-Based Enhancement for UAV Perception

- Learning-based methods are good at extracting texture information from images and usually perform better than traditional methods.

- Learning-based perception uses the same inputs as in localization, improving consistency and, at the same time, saving the cost of sensors.

- With the rapid development of GPUs and Artificial Intelligence, learning-based methods have become mainstream.

5.1. Monocular Depth Estimation

5.2. NeRF-Based SLAM

6. Conclusions

- Robust localization: UAV localization need not be extremely accurate, but it must be robust, especially for applications that require the UAV to cross various scenes, such as the exploration of unknown spaces. In military investigation, for example, dust, complex environments, and the requirement for fast movement severely interfere with the data inputs. In cave or tunnel exploration, partial darkness and reduced environmental texture also weaken the information available for localization. These factors pose challenges to UAV localization, and only robust localization can cope with such challenging environments and meet the needs of increasingly complex applications.

- Intelligent perception: A regular dense point cloud map or grid map is built for point-driven navigation; however, it is not adequate to support increasingly intelligent missions, such as object search in an unknown space. For instance, in disaster rescue, a regular navigation map only allows the UAV to search for victims mechanically, which is inefficient. Intelligent perception means a high-level understanding of the surrounding environment: with this understanding, the UAV can infer the presence of potential victims from environmental clues, such as blood or a piece of clothing. Moreover, manual intervention can be significantly reduced, and UAVs can take charge of strategy decisions, parameter adjustment, and risk prevention.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Al-Kaff, A.; Martin, D.; Garcia, F.; de la Escalera, A.; Armingol, J.M. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463.

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

- Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for UAVs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168.

- Aulinas, J.; Petillot, Y.; Salvi, J.; Lladó, X. The SLAM problem: A survey. Artif. Intell. Res. Dev. 2008, 184, 363–371.

- Takleh, T.T.O.; Bakar, N.A.; Rahman, S.A.; Hamzah, R.; Aziz, Z. A brief survey on SLAM methods in autonomous vehicle. Int. J. Eng. Technol. 2018, 7, 38–43.

- Song, J.B.; Hwang, S.Y. Past and state-of-the-art SLAM technologies. J. Inst. Control Robot. Syst. 2014, 20, 372–379.

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–11.

- Macario Barros, A.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A comprehensive survey of visual slam algorithms. Robotics 2022, 11, 24.

- Tourani, A.; Bavle, H.; Sanchez-Lopez, J.L.; Voos, H. Visual SLAM: What are the current trends and what to expect? Sensors 2022, 22, 9297.

- Kazerouni, I.A.; Fitzgerald, L.; Dooly, G.; Toal, D. A survey of state-of-the-art on visual SLAM. Expert Syst. Appl. 2022, 205, 117734.

- Servières, M.; Renaudin, V.; Dupuis, A.; Antigny, N. Visual and visual-inertial slam: State of the art, classification, and experimental benchmarking. J. Sens. 2021, 2021, 2054828.

- Harris, C.G.; Pike, J. 3D positional integration from image sequences. Image Vis. Comput. 1988, 6, 87–90.

- Nistér, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004. CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 1, pp. 1–652.

- Zhang, Z. Determining the epipolar geometry and its uncertainty: A review. Int. J. Comput. Vis. 1998, 27, 161–195.

- Wu, Y.; Hu, Z. PnP problem revisited. J. Math. Imaging Vis. 2006, 24, 131–141.

- Zheng, Y.; Kuang, Y.; Sugimoto, S.; Astrom, K.; Okutomi, M. Revisiting the pnp problem: A fast, general and optimal solution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2344–2351.

- Hesch, J.A.; Roumeliotis, S.I. A direct least-squares (DLS) method for PnP. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 383–390.

- Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

- Sharp, G.C.; Lee, S.W.; Wehe, D.K. ICP registration using invariant features. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 90–102.

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. Deepvo: Towards end-to-end visual odometry with deep recurrent convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 2043–2050.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Proceedings of the Vision Algorithms: Theory and Practice: International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999 Proceedings; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Yousif, K.; Bab-Hadiashar, A.; Hoseinnezhad, R. An overview to visual odometry and visual SLAM: Applications to mobile robotics. Intell. Ind. Syst. 2015, 1, 289–311.

- Aqel, M.O.; Marhaban, M.H.; Saripan, M.I.; Ismail, N.B. Review of visual odometry: Types, approaches, challenges, and applications. SpringerPlus 2016, 5, 1897.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Computer Vision—ECCV 2014, Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 834–849.

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- Wang, R.; Schworer, M.; Cremers, D. Stereo DSO: Large-scale direct sparse visual odometry with stereo cameras. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3903–3911.

- Engel, J.; Stückler, J.; Cremers, D. Large-scale direct SLAM with stereo cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1935–1942.

- Kerl, C.; Sturm, J.; Cremers, D. Dense visual SLAM for RGB-D cameras. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2100–2106.

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. Kinectfusion: Real-time 3d reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 559–568.

- Schops, T.; Sattler, T.; Pollefeys, M. Bad slam: Bundle adjusted direct rgb-d slam. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 134–144.

- Weikersdorfer, D.; Hoffmann, R.; Conradt, J. Simultaneous localization and mapping for event-based vision systems. In Computer Vision Systems, Proceedings of the 9th International Conference, ICVS 2013, St. Petersburg, Russia, 16–18 July 2013; Proceedings 9; Springer: Berlin/Heidelberg, Germany, 2013; pp. 133–142.

- Kim, H.; Leutenegger, S.; Davison, A.J. Real-time 3D reconstruction and 6-DoF tracking with an event camera. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VI 14; Springer: Cham, Switzerland, 2016; pp. 349–364.

- Rebecq, H.; Horstschäfer, T.; Gallego, G.; Scaramuzza, D. Evo: A geometric approach to event-based 6-dof parallel tracking and mapping in real time. IEEE Robot. Autom. Lett. 2016, 2, 593–600.

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265.

- Harmat, A.; Sharf, I.; Trentini, M. Parallel tracking and mapping with multiple cameras on an unmanned aerial vehicle. In Intelligent Robotics and Applications, Proceedings of the 5th International Conference, ICIRA 2012, Montreal, QC, Canada, 3–5 October 2012; Proceedings, Part I 5; Springer: Berlin/Heidelberg, Germany, 2012; pp. 421–432.

- Kuo, J.; Muglikar, M.; Zhang, Z.; Scaramuzza, D. Redesigning SLAM for arbitrary multi-camera systems. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2116–2122.

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference; Citeseer: Princeton, NJ, USA, 1988; Volume 15, pp. 10–5244.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Computer Vision–ECCV 2006, Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Computer Vision–ECCV 2006, Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. Brief: Binary robust independent elementary features. In Computer Vision–ECCV 2010, Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Proceedings, Part IV 11; Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

- Mair, E.; Hager, G.D.; Burschka, D.; Suppa, M.; Hirzinger, G. Adaptive and generic corner detection based on the accelerated segment test. In Computer Vision–ECCV 2010, Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Proceedings, Part II 11; Springer: Berlin/Heidelberg, Germany, 2010; pp. 183–196.

- Alcantarilla, P.F.; Bartoli, A.; Davison, A.J. KAZE features. In Computer Vision–ECCV 2012, Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part VI 12; Springer: Berlin/Heidelberg, Germany, 2012; pp. 214–227.

- Alcantarilla, P.F.; Solutions, T. Fast explicit diffusion for accelerated features in nonlinear scale spaces. IEEE Trans. Patt. Anal. Mach. Intell 2011, 34, 1281–1298.

- Tareen, S.A.K.; Raza, R.H. Potential of SIFT, SURF, KAZE, AKAZE, ORB, BRISK, AGAST, and 7 More Algorithms for Matching Extremely Variant Image Pairs. In Proceedings of the 2023 4th International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 17–18 March 2023; pp. 1–6.

- Tareen, S.A.K.; Saleem, Z. A comparative analysis of sift, surf, kaze, akaze, orb, and brisk. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. IMU Preintegration on Manifold for Efficient Visual-Inertial Maximum-a-Posteriori Estimation; Technical Report; In Robotics: Science and Systems XI; Sapienza University of Rome: Rome, Italy; 13–17 July 2015.

- Schonberger, J.L.; Frahm, J.M. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 1403–1410.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM: A factored solution to the simultaneous localization and mapping problem. AAAI/IAAI 2002, 593598, 593–598.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM 2.0: An improved particle filtering algorithm for simultaneous localization and mapping that provably converges. In Proceedings of the IJCAI 2003, Acapulco, Mexico, 9–15 August 2003; Volume 3, pp. 1151–1156.

- Demim, F.; Nemra, A.; Louadj, K. Robust SVSF-SLAM for unmanned vehicle in unknown environment. IFAC-PapersOnLine 2016, 49, 386–394.

- Ahmed, A.; Abdelkrim, N.; Mustapha, H. Smooth variable structure filter VSLAM. IFAC-PapersOnLine 2016, 49, 205–211.

- Demim, F.; Boucheloukh, A.; Nemra, A.; Louadj, K.; Hamerlain, M.; Bazoula, A.; Mehal, Z. A new adaptive smooth variable structure filter SLAM algorithm for unmanned vehicle. In Proceedings of the 2017 6th International Conference on Systems and Control (ICSC), Batna, Algeria, 7–9 May 2017; pp. 6–13.

- Demim, F.; Nemra, A.; Boucheloukh, A.; Louadj, K.; Hamerlain, M.; Bazoula, A. Robust SVSF-SLAM algorithm for unmanned vehicle in dynamic environment. In Proceedings of the 2018 International Conference on Signal, Image, Vision and Their Applications (SIVA), Guelma, Algeria, 26–27 November 2018; pp. 1–5.

- Elhaouari, K.; Allam, A.; Larbes, C. Robust IMU-Monocular-SLAM For Micro Aerial Vehicle Navigation Using Smooth Variable Structure Filter. Int. J. Comput. Digit. Syst. 2023, 14, 1063–1072.

- Strasdat, H.; Montiel, J.M.; Davison, A.J. Visual SLAM: Why filter? Image Vis. Comput. 2012, 30, 65–77.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Gálvez-López, D.; Tardos, J.D. Bags of binary words for fast place recognition in image sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327.

- Engel, J.; Sturm, J.; Cremers, D. Semi-dense visual odometry for a monocular camera. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1449–1456.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Pumarola, A.; Vakhitov, A.; Agudo, A.; Sanfeliu, A.; Moreno-Noguer, F. PL-SLAM: Real-time monocular visual SLAM with points and lines. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4503–4508.

- Gomez-Ojeda, R.; Moreno, F.A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746.

- Fu, Q.; Yu, H.; Lai, L.; Wang, J.; Peng, X.; Sun, W.; Sun, M. A robust RGB-D SLAM system with points and lines for low texture indoor environments. IEEE Sens. J. 2019, 19, 9908–9920.

- Yang, S.; Scherer, S. Direct monocular odometry using points and lines. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3871–3877.

- Gomez-Ojeda, R.; Briales, J.; Gonzalez-Jimenez, J. PL-SVO: Semi-direct monocular visual odometry by combining points and line segments. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4211–4216.

- Zuo, X.; Xie, X.; Liu, Y.; Huang, G. Robust visual SLAM with point and line features. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1775–1782.

- Shu, F.; Wang, J.; Pagani, A.; Stricker, D. Structure plp-slam: Efficient sparse mapping and localization using point, line and plane for monocular, rgb-d and stereo cameras. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2105–2112.

- Zhang, Z.; Rebecq, H.; Forster, C.; Scaramuzza, D. Benefit of large field-of-view cameras for visual odometry. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 801–808.

- Huang, H.; Yeung, S.K. 360vo: Visual odometry using a single 360 camera. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 5594–5600.

- Matsuki, H.; Von Stumberg, L.; Usenko, V.; Stückler, J.; Cremers, D. Omnidirectional DSO: Direct sparse odometry with fisheye cameras. IEEE Robot. Autom. Lett. 2018, 3, 3693–3700.

- Derpanis, K.G. Overview of the RANSAC Algorithm. Image Rochester NY 2010, 4, 2–3.

- Tan, W.; Liu, H.; Dong, Z.; Zhang, G.; Bao, H. Robust monocular SLAM in dynamic environments. In Proceedings of the 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Adelaide, SA, Australia, 1–4 October 2013; pp. 209–218.

- Li, S.; Lee, D. RGB-D SLAM in dynamic environments using static point weighting. IEEE Robot. Autom. Lett. 2017, 2, 2263–2270.

- Alcantarilla, P.F.; Yebes, J.J.; Almazán, J.; Bergasa, L.M. On combining visual SLAM and dense scene flow to increase the robustness of localization and mapping in dynamic environments. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 1290–1297.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- Wang, C.C.; Thorpe, C.; Thrun, S.; Hebert, M.; Durrant-Whyte, H. Simultaneous localization, mapping and moving object tracking. Int. J. Robot. Res. 2007, 26, 889–916.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. Slam++: Simultaneous localisation and mapping at the level of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

- Newcombe, R.A.; Fox, D.; Seitz, S.M. Dynamicfusion: Reconstruction and tracking of non-rigid scenes in real-time. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 343–352.

- Runz, M.; Buffier, M.; Agapito, L. Maskfusion: Real-time recognition, tracking and reconstruction of multiple moving objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 10–20.

- Huang, J.; Yang, S.; Zhao, Z.; Lai, Y.K.; Hu, S.M. Clusterslam: A slam backend for simultaneous rigid body clustering and motion estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5875–5884.

- Huang, J.; Yang, S.; Mu, T.J.; Hu, S.M. ClusterVO: Clustering moving instances and estimating visual odometry for self and surroundings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2168–2177.

- Bescos, B.; Campos, C.; Tardós, J.D.; Neira, J. DynaSLAM II: Tightly-coupled multi-object tracking and SLAM. IEEE Robot. Autom. Lett. 2021, 6, 5191–5198.

- Zhang, J.; Henein, M.; Mahony, R.; Ila, V. VDO-SLAM: A visual dynamic object-aware SLAM system. arXiv 2020, arXiv:2005.11052.

- Li, D.; Yang, W.; Shi, X.; Guo, D.; Long, Q.; Qiao, F.; Wei, Q. A visual-inertial localization method for unmanned aerial vehicle in underground tunnel dynamic environments. IEEE Access 2020, 8, 76809–76822.

- Kelly, J.; Saripalli, S.; Sukhatme, G.S. Combined visual and inertial navigation for an unmanned aerial vehicle. In Field and Service Robotics: Results of the 6th International Conference; Springer: Berlin/Heidelberg, Germany, 2008; pp. 255–264.

- Lin, Y.; Gao, F.; Qin, T.; Gao, W.; Liu, T.; Wu, W.; Yang, Z.; Shen, S. Autonomous aerial navigation using monocular visual-inertial fusion. J. Field Robot. 2018, 35, 23–51.

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3565–3572.

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

- Leutenegger, S.; Furgale, P.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R. Keyframe-based visual-inertial slam using nonlinear optimization. In Proceedings of the Robotics: Science and Systems (RSS) 2013, Berlin, Germany, 24–28 June 2013.

- Mur-Artal, R.; Tardós, J.D. Visual-inertial monocular SLAM with map reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Von Stumberg, L.; Usenko, V.; Cremers, D. Direct sparse visual-inertial odometry using dynamic marginalization. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2510–2517.

- Von Stumberg, L.; Cremers, D. DM-VIO: Delayed marginalization visual-inertial odometry. IEEE Robot. Autom. Lett. 2022, 7, 1408–1415.

- Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2012, 31, 647–663.

- Huang, A.S.; Bachrach, A.; Henry, P.; Krainin, M.; Maturana, D.; Fox, D.; Roy, N. Visual odometry and mapping for autonomous flight using an RGB-D camera. In Robotics Research: The 15th International Symposium ISRR; Springer: Cham, Switzerland, 2017; pp. 235–252.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. Adv. Neural Inf. Process. Syst. 2014, 27, 2366–2374.

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658.

- Liu, F.; Shen, C.; Lin, G. Deep convolutional neural fields for depth estimation from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5162–5170.

- Laina, I.; Rupprecht, C.; Belagiannis, V.; Tombari, F.; Navab, N. Deeper depth prediction with fully convolutional residual networks. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 239–248.

- Ma, F.; Karaman, S. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 4796–4803.

- Ma, F.; Cavalheiro, G.V.; Karaman, S. Self-supervised sparse-to-dense: Self-supervised depth completion from lidar and monocular camera. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3288–3295.

- Wofk, D.; Ma, F.; Yang, T.J.; Karaman, S.; Sze, V. FastDepth: Fast monocular depth estimation on embedded systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6101–6108.

- Dong, X.; Garratt, M.A.; Anavatti, S.G.; Abbass, H.A. MobileXNet: An efficient convolutional neural network for monocular depth estimation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 20134–20147.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Sucar, E.; Liu, S.; Ortiz, J.; Davison, A.J. iMAP: Implicit mapping and positioning in real-time. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 6229–6238.

- Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. NICE-SLAM: Neural implicit scalable encoding for SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12786–12796.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 1–14.

- Sturm, J.; Burgard, W.; Cremers, D. Evaluating egomotion and structure-from-motion approaches using the TUM RGB-D benchmark. In Proceedings of the Workshop on Color-Depth Camera Fusion in Robotics at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; Volume 13.

- Zhao, M.; Guo, X.; Song, L.; Qin, B.; Shi, X.; Lee, G.H.; Sun, G. A general framework for lifelong localization and mapping in changing environment. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3305–3312.

| Type | Advantage | Shortcoming | Algorithms |
|---|---|---|---|
| Monocular | simple and cheap | suffers from scale ambiguity | [22,28,29] |
| Stereo | indoor and outdoor depth sensing | relies on environment texture and requires additional computation | [23,30,31] |
| RGB-D | high-quality depth sensing | only suitable for indoor scenes and has high power consumption | [32,33,34] |
| Event | sensitive to dynamic information | unable to capture regular intensity images | [35,36,37] |
| Multi-camera | captures more information and supports arbitrary view combinations | more data must be processed | [38,39,40] |
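
The scale ambiguity listed for monocular cameras can be illustrated with a small numerical check. The sketch below is not taken from any of the cited algorithms; it assumes an ideal pinhole camera with made-up intrinsics (`focal`, `cx`, `cy`), an identity rotation, and hypothetical 3D points, and shows that scaling the scene and the camera translation by the same factor leaves every projected pixel unchanged, so image measurements alone cannot recover metric scale.

```python
def project(point, rotation, translation, focal=500.0, cx=320.0, cy=240.0):
    """Project a 3D world point into a pinhole camera with pose (R, t)."""
    # Camera-frame coordinates: X_c = R * X_w + t
    xc = [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
          for i in range(3)]
    # Perspective division followed by the (assumed) intrinsics
    return (focal * xc[0] / xc[2] + cx, focal * xc[1] / xc[2] + cy)


def scaled(vec, s):
    """Multiply every component of a 3-vector by s."""
    return tuple(s * v for v in vec)


# Illustrative values only: identity rotation, three points, small translation.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.5, -0.2, 4.0), (-1.0, 0.3, 6.0), (0.1, 0.8, 3.0)]
translation = (0.2, 0.0, 0.1)


def reproject_all(scale):
    """Project all points after scaling both the scene and the translation."""
    return [project(scaled(p, scale), IDENTITY, scaled(translation, scale))
            for p in points]


pixels_metric = reproject_all(1.0)  # original scene
pixels_scaled = reproject_all(3.0)  # scene and baseline three times larger

# Largest pixel difference between the two configurations
max_diff = max(abs(a - b)
               for pa, pb in zip(pixels_metric, pixels_scaled)
               for a, b in zip(pa, pb))
print("max pixel difference:", max_diff)
```

Both configurations produce the same pixels up to floating-point rounding. A stereo baseline, a depth sensor, or an IMU supplies the missing metric reference, which is one reason these sensor types appear alongside monocular cameras in the table.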

| Odometry Method | Algorithm | Sensor | Feature |
|---|---|---|---|
| Feature-based | Mono-SLAM [58] | Monocular | EKF-based |
| | PTAM [67] | Monocular | parallel tracking and mapping |
| | ORB-SLAM [22] | Monocular | multi-threads |
| | DynamicFusion [89] | RGB-D | non-rigid dynamic scene motion tracking |
| | ORB-SLAM2 [23] | Monocular, stereo, RGB-D | multi-configurations |
| | PL-SLAM [72,73] | Monocular, stereo | point, line feature extraction |
| | Dyna-SLAM [86] | Monocular, stereo, RGB-D | segment dynamic objects |
| | MaskFusion [90] | RGB-D | tracks multiple objects |
| | VDO-SLAM [94] | RGB-D | joint optimization including camera poses, static, dynamic points, and object motion |
| | PLP-SLAM [78] | Monocular, stereo, RGB-D | point, line, plane feature extraction |
| Direct | KinectFusion [33] | RGB-D | dense surface reconstruction |
| | DTAM [69] | Monocular | monocular dense tracking and mapping |
| | DVO-SLAM [32] | RGB-D | tracks motion by minimizing both photometric and geometric errors |
| | LSD-SLAM [28] | Monocular | large-scale estimation |
| | SVO [38,71] * | Monocular, multiple camera | hybrid odometry |
| | DSO [29] | Monocular | sparse direct formulation |
| | BAD-SLAM [34] | RGB-D | fast direct BA formulation |

| Algorithm | Odometry Method | Optimization | Loop Closure |
|---|---|---|---|
| MSCKF [98] | feature-based | EKF-based | - |
| OKVIS [100] | feature-based | Local BA | - |
| ROVIO [99] | direct | EKF-based | - |
| ORB-SLAM-VI [101] | feature-based | Local BA | PGBA |
| VINS-Mono [102] | feature-based | Local BA | PGBA |
| VI-DSO [103] | direct | Local BA | - |
| ORB-SLAM3 [24] | feature-based | Local BA, GBA | PGBA |
| DM-VIO [104] | direct | Local BA | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhuang, L.; Zhong, X.; Xu, L.; Tian, C.; Yu, W. Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception. Sensors 2024, 24, 2980. https://doi.org/10.3390/s24102980
