A Novel Lightweight Model for Underwater Image Enhancement

Abstract

1. Introduction

- (1)

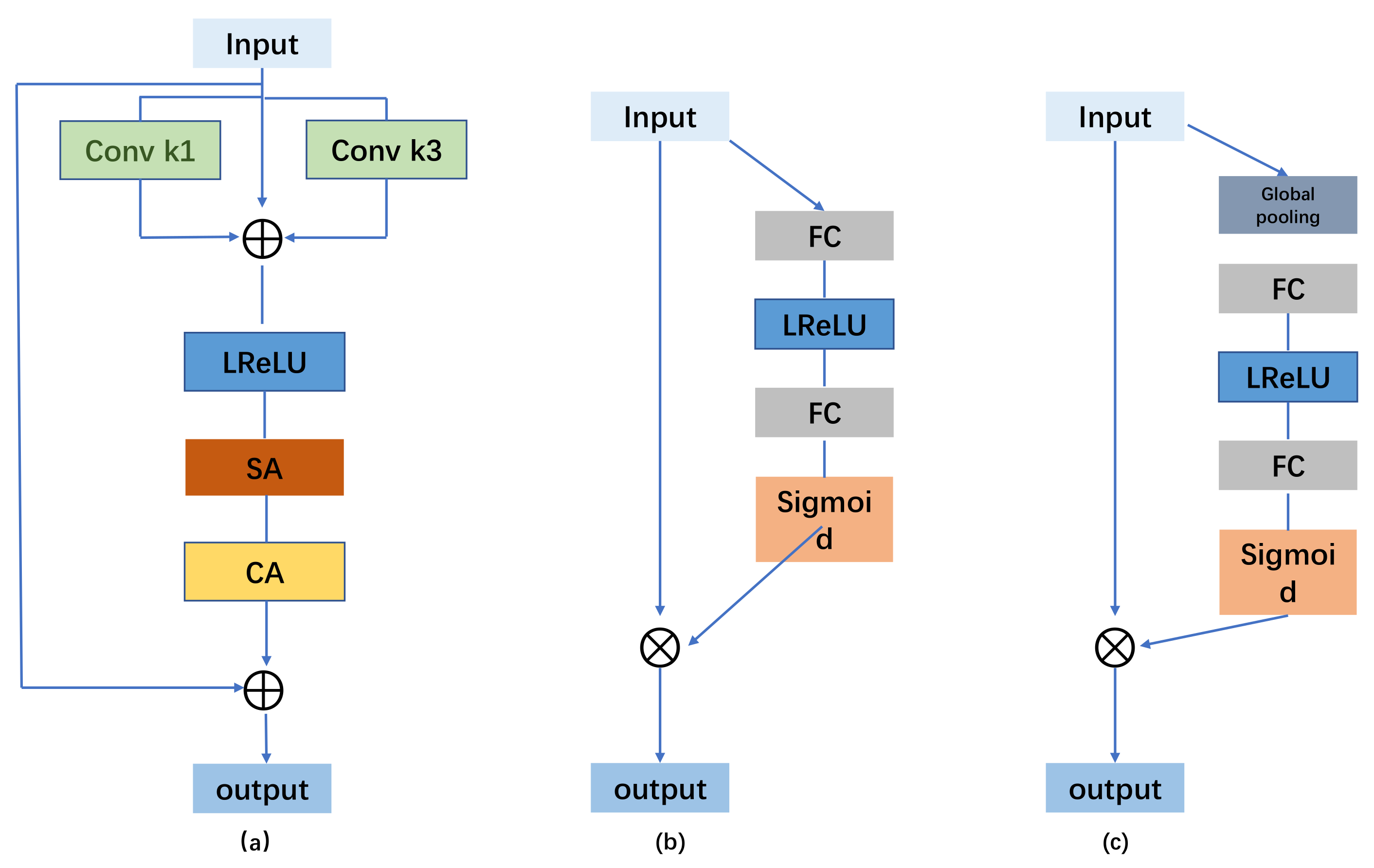

- A multi-scale hybrid convolutional attention module is designed. Considering the complex and diverse local features of underwater scenes, this paper uses a spatial attention mechanism and channel attention mechanism. The former is to improve the network’s ability to pay attention to complex regions such as light field distribution and color depth information in underwater images, while the latter focuses on the network’s representation of important channels in features, thus improving the overall representation performance of the model.

- (2)

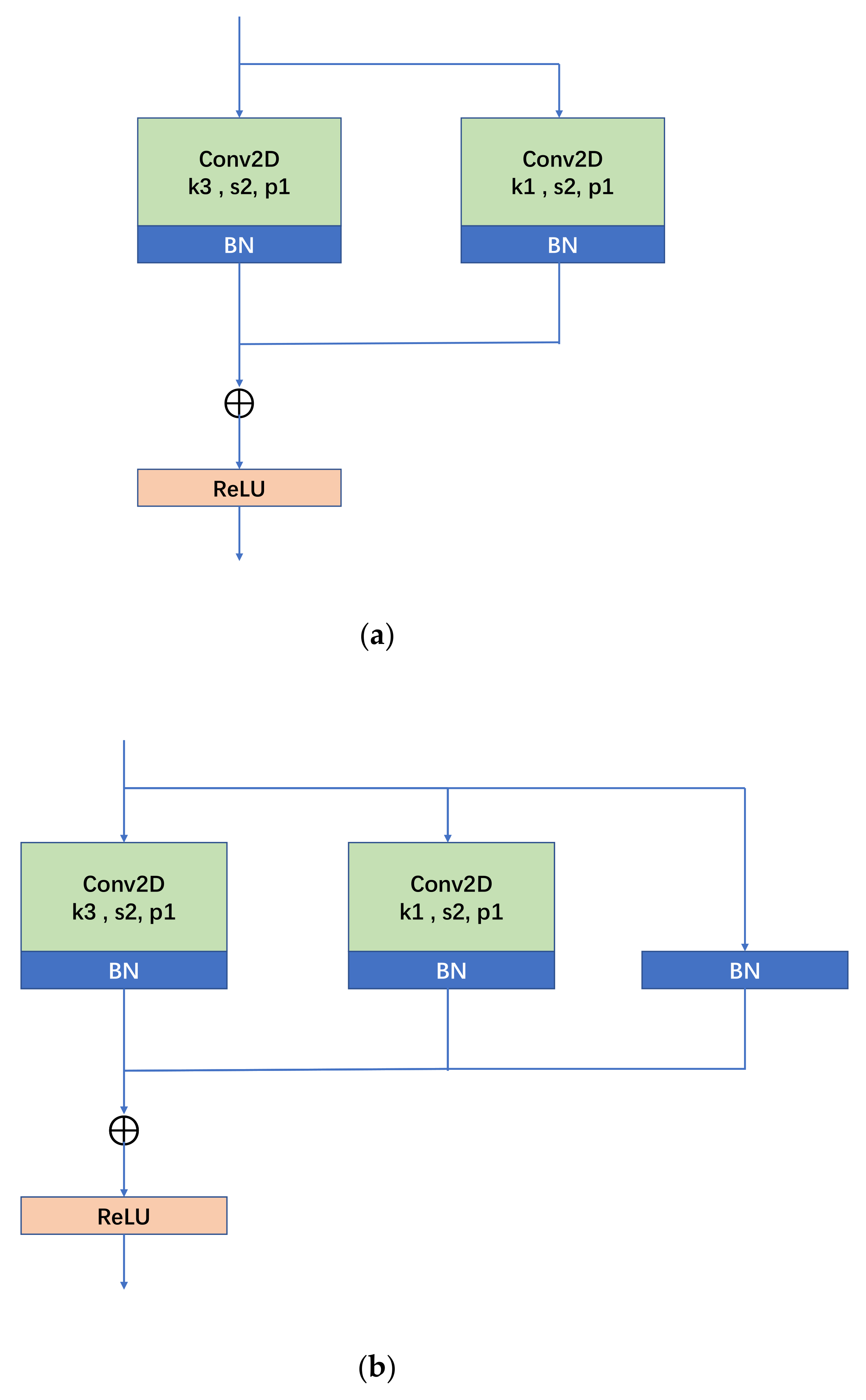

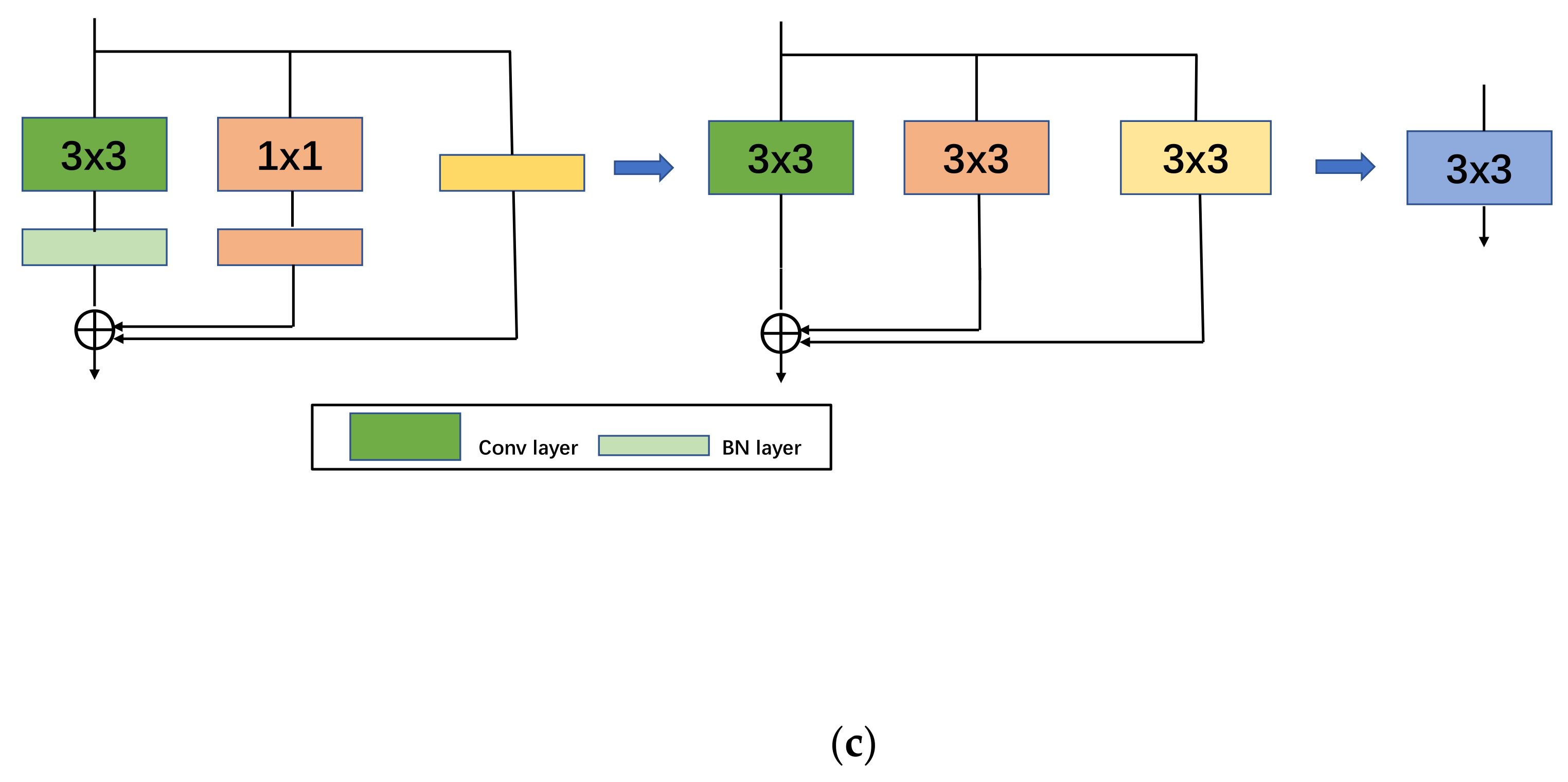

- RepVGG Block is used instead of ordinary convolution, and different network architectures are used in the network training and network inference phases. With more attention to accuracy in the training phase and more attention to speed in the inference phase, an average speedup of 0.11 s for a single image test is achieved.

- (3)

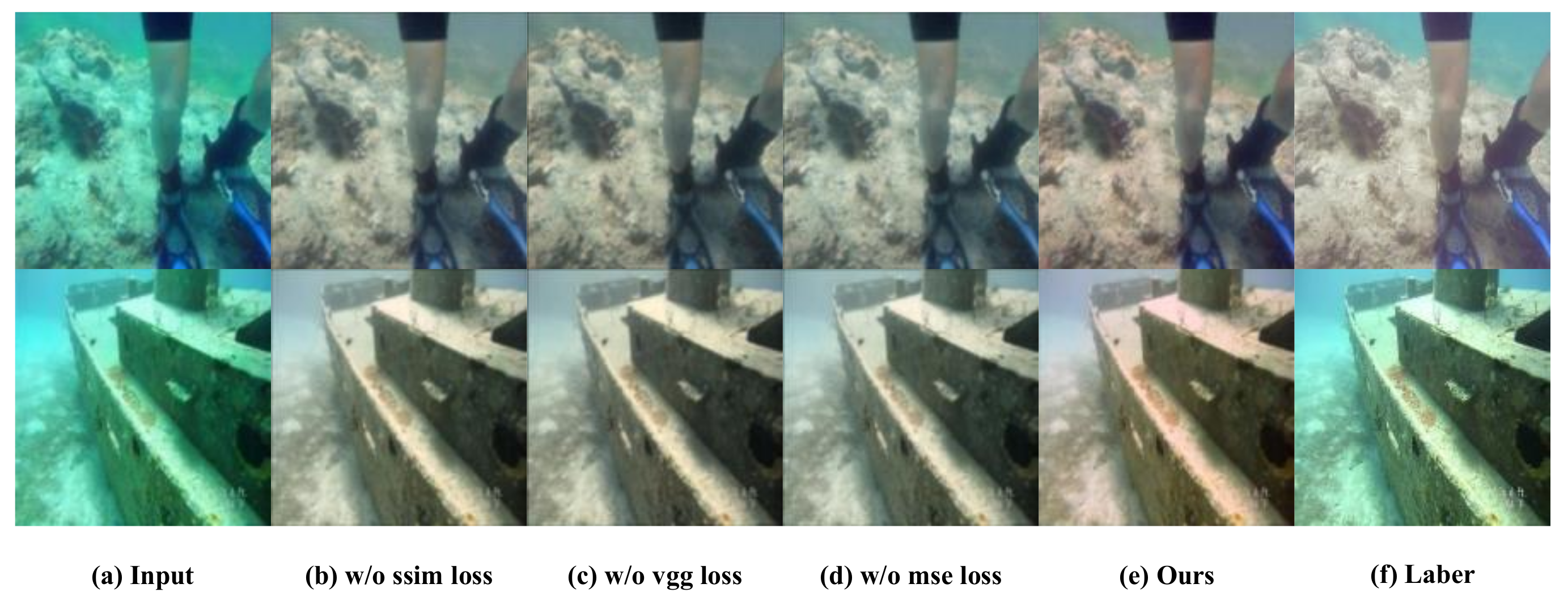

- A joint loss function combining content perception, mean square error, and structural similarity is designed, and weight coefficients are applied to reasonably assign each loss size. For the perceptual loss, layers 1, 3, 5, 9, and 13 of the VGG19 model are selected in this paper to extract hidden features and generate clearer underwater images while maintaining the original image texture and structure.

2. Related Work

2.1. Neural Net Feature Fusion

2.2. Underwater Datasets

- (1)

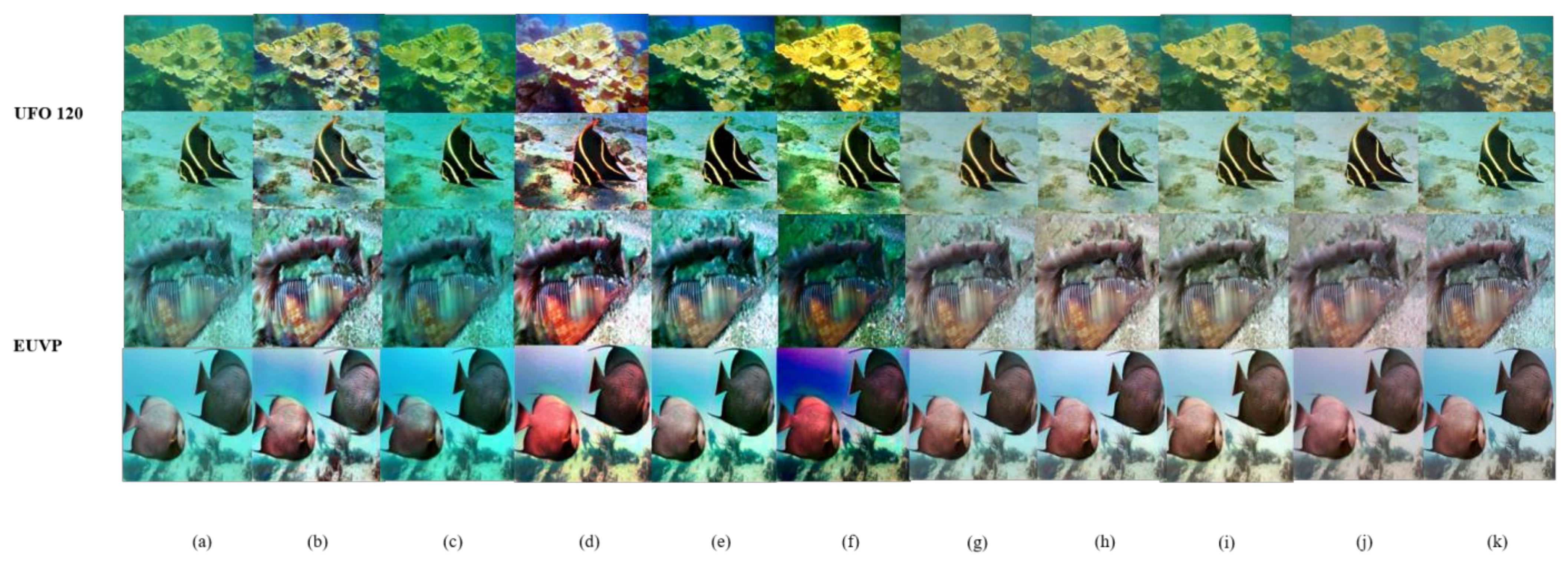

- The EUVP (Enhancing Underwater Visual Perception) dataset is a large dataset designed to facilitate supervised training of underwater image enhancement models. The dataset contains both paired and unpaired image samples, covering images with poor and good perceptual quality for model training. The EUVP dataset consists of three sub-datasets, namely, Underwater Dark, Underwater ImageNet, and Underwater Scene, which cover different types of waters and underwater landscapes, such as oceans, lakes, rivers, coral reefs, rocky terrains, and seagrass beds. These images exhibit rich diversity and representativeness, reflecting the typical characteristics of real-world underwater environments and covering waters in different geographic regions. In the process of acquiring the EUVP dataset, the researchers fully considered the effects of factors such as water quality, lighting conditions, and shooting equipment on image quality and model performance. The dataset utilized several different types of cameras, including GoPro cameras, Aqua AUV’s uEye cameras, low-light USB cameras, and Trident ROV’s HD cameras, to capture image samples under different conditions. These data were collected during ocean exploration and human–computer collaboration experiments in various locations and under different visibility conditions, including images extracted from a number of publicly available YouTube videos. The images in the EUVP dataset have therefore been carefully selected to accommodate the wide range of natural variability in the data, such as scene, water body type, lighting conditions, and so on. By controlling these factors, the quality and reliability of the data are ensured, providing an important database for model training.

- (2) The UFO 120 dataset, comprising 1500 training pairs of underwater images, is intended to support the training of underwater image processing algorithms and models. Despite its relatively modest size, it covers a diverse range of scenes, water body types, and lighting conditions and provides a representative cross-section of underwater environments. The images, sourced from the Flickr platform, capture the complexity of real-world underwater settings, and factors such as water quality, lighting variation, and equipment configuration were considered during collection. As a real-world benchmark, UFO 120 is demanding: a model must handle diverse lighting and water conditions as well as noise and blur. Evaluating a model on UFO 120 therefore gives a comprehensive picture of its real-world performance and shows where it can be refined, which makes the dataset a valuable benchmark for underwater image processing research.

| Dataset Name | Training Pairs | Validation Images | Total Images | Resolution (pixels) |
|---|---|---|---|---|
| Underwater Dark | 5550 | 570 | 11,670 | 256 × 256 |
| Underwater ImageNet | 3700 | 1270 | 8670 | 256 × 256 |
| Underwater Scenes | 2185 | 130 | 4500 | 256 × 256 |
| UFO 120 | 1500 | 120 | 3120 | 320 × 240 |

3. Proposed Method

3.1. Rep-UWnet Model

3.2. Multi-Scale Hybrid Convolutional Attention Module
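Contribution (1) in the Introduction combines channel attention with spatial attention. The exact layer configuration of the module is not reproduced in this section, so the NumPy sketch below is only an illustration under stated assumptions: it applies CBAM-style channel attention (average- and max-pooled descriptors passed through a shared MLP) followed by spatial attention, and it models the "multi-scale" part by averaging spatial-attention logits from hypothetical 3 × 3, 5 × 5, and 7 × 7 kernels. The class name `HybridAttentionSketch`, the reduction ratio, the kernel sizes, and the random, untrained weights are all assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv2d_same(x, w):
    """Zero-padded, stride-1 cross-correlation.

    x: input of shape (C_in, H, W); w: kernel of shape (C_out, C_in, k, k), k odd.
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Add the contribution of kernel tap (i, j) for all channels at once.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


class HybridAttentionSketch:
    """Hypothetical channel + multi-scale spatial attention with untrained weights."""

    def __init__(self, channels, reduction=4, kernel_sizes=(3, 5, 7), seed=0):
        rng = np.random.default_rng(seed)
        hidden = max(1, channels // reduction)
        # Shared two-layer MLP used by the channel attention branch.
        self.w1 = rng.normal(0.0, 0.1, (hidden, channels))
        self.w2 = rng.normal(0.0, 0.1, (channels, hidden))
        # One spatial kernel per scale; each maps the [avg, max] maps (2 channels) to 1.
        self.spatial_kernels = [rng.normal(0.0, 0.1, (1, 2, k, k)) for k in kernel_sizes]

    def _mlp(self, v):
        return self.w2 @ np.maximum(self.w1 @ v, 0.0)

    def channel_weights(self, x):
        # Squeeze the spatial dims with average and max pooling; one score in (0, 1) per channel.
        return sigmoid(self._mlp(x.mean(axis=(1, 2))) + self._mlp(x.max(axis=(1, 2))))

    def spatial_weights(self, x):
        # Squeeze the channels, then average the logits produced at several kernel sizes.
        pooled = np.stack([x.mean(axis=0), x.max(axis=0)])
        logits = np.mean([conv2d_same(pooled, k)[0] for k in self.spatial_kernels], axis=0)
        return sigmoid(logits)

    def __call__(self, x):
        x = x * self.channel_weights(x)[:, None, None]
        return x * self.spatial_weights(x)[None, :, :]


features = np.random.default_rng(1).normal(size=(8, 16, 16))
refined = HybridAttentionSketch(channels=8)(features)
print(refined.shape)  # (8, 16, 16)
```

Because both attention maps lie in (0, 1), the sketch can only re-weight features, never amplify them; in a trained network the MLP and kernel weights would be learned rather than random.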

3.3. RepVGG Block
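Contribution (2) relies on RepVGG's structural re-parameterization: during training each block sums a 3 × 3 convolution + batch norm branch, a 1 × 1 convolution + batch norm branch, and an identity batch norm branch, and for inference these are merged into a single 3 × 3 convolution. The NumPy sketch below illustrates that merge and checks numerically that both forms produce the same output. It assumes stride 1, equal input and output channels (so the identity branch exists), and batch norm in inference mode with running statistics; the helper names (`fold_bn`, `reparameterize`, and so on) are hypothetical and not taken from the authors' code.

```python
import numpy as np

EPS = 1e-5


def conv2d_same(x, w):
    """Zero-padded, stride-1 cross-correlation; x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def batch_norm(z, params):
    """Inference-mode batch norm with per-channel (gamma, beta, running mean, running var)."""
    gamma, beta, mean, var = (p[:, None, None] for p in params)
    return gamma * (z - mean) / np.sqrt(var + EPS) + beta


def fold_bn(kernel, params):
    """Fold a batch norm that follows a convolution into an equivalent kernel and bias."""
    gamma, beta, mean, var = params
    scale = gamma / np.sqrt(var + EPS)
    return kernel * scale[:, None, None, None], beta - mean * scale


def train_forward(x, k3, bn3, k1, bn1, bn_id):
    """Training-time block: 3x3 conv+BN, 1x1 conv+BN and identity BN branches, summed."""
    return (batch_norm(conv2d_same(x, k3), bn3)
            + batch_norm(conv2d_same(x, k1), bn1)
            + batch_norm(x, bn_id))


def reparameterize(k3, bn3, k1, bn1, bn_id):
    """Merge the three branches into a single 3x3 kernel and bias for inference."""
    c = k3.shape[0]
    w3, b3 = fold_bn(k3, bn3)
    # A 1x1 kernel equals a 3x3 kernel whose only non-zero tap is the center.
    w1, b1 = fold_bn(np.pad(k1, ((0, 0), (0, 0), (1, 1), (1, 1))), bn1)
    # The identity branch equals a 3x3 kernel with a 1 at the center for channel i -> i.
    identity = np.zeros((c, c, 3, 3))
    identity[np.arange(c), np.arange(c), 1, 1] = 1.0
    w_id, b_id = fold_bn(identity, bn_id)
    return w3 + w1 + w_id, b3 + b1 + b_id


def random_bn(rng, c):
    return (rng.uniform(0.5, 1.5, c), rng.normal(0.0, 0.1, c),
            rng.normal(0.0, 0.1, c), rng.uniform(0.5, 1.5, c))


rng = np.random.default_rng(0)
channels = 4
x = rng.normal(size=(channels, 8, 8))
k3 = rng.normal(0.0, 0.2, (channels, channels, 3, 3))
k1 = rng.normal(0.0, 0.2, (channels, channels, 1, 1))
bn3, bn1, bn_id = (random_bn(rng, channels) for _ in range(3))

y_train = train_forward(x, k3, bn3, k1, bn1, bn_id)
w_fused, b_fused = reparameterize(k3, bn3, k1, bn1, bn_id)
y_infer = conv2d_same(x, w_fused) + b_fused[:, None, None]
print(np.allclose(y_train, y_infer))  # True
```

The merged block computes the same function with one convolution instead of three parallel branches, which is why re-parameterized blocks are typically faster at inference time.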

3.4. Loss Function
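Contribution (3) describes a joint loss built from MSE, SSIM, and a VGG19-based perceptual term, each scaled by a weighting coefficient. The sketch below shows one way to assemble such a loss in NumPy. The weights `w_mse`, `w_ssim`, and `w_perc` are placeholders (the coefficients used in the paper are not given in this section), SSIM is simplified to a single global window instead of the usual local windows, and `toy_features` is a hypothetical stand-in for the VGG19 feature extractor; in practice it would return the activations of the selected VGG19 layers (1, 3, 5, 9, and 13).

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (simplified; standard SSIM averages local windows)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2))


def perceptual_loss(x, y, feature_fn):
    """Mean squared distance between the feature maps from feature_fn, averaged over layers."""
    return float(np.mean([np.mean((a - b) ** 2) for a, b in zip(feature_fn(x), feature_fn(y))]))


def joint_loss(pred, target, feature_fn, w_mse=1.0, w_ssim=1.0, w_perc=1.0):
    """Weighted sum of MSE, (1 - SSIM) and perceptual terms; the weights are placeholders."""
    mse = float(np.mean((pred - target) ** 2))
    ssim_term = 1.0 - float(global_ssim(pred, target))
    perc = perceptual_loss(pred, target, feature_fn)
    return w_mse * mse + w_ssim * ssim_term + w_perc * perc


def toy_features(img):
    """Hypothetical stand-in for VGG19 features: the image and a 2x2 average-pooled copy."""
    h, w, c = img.shape
    pooled = img[: h // 2 * 2, : w // 2 * 2].reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))
    return [img, pooled]


rng = np.random.default_rng(0)
target = rng.uniform(0.0, 1.0, (32, 32, 3))
degraded = np.clip(0.7 * target + 0.1, 0.0, 1.0)  # crude contrast loss plus haze offset
print(joint_loss(target, target, toy_features))    # expected to be about 0
print(joint_loss(degraded, target, toy_features))  # expected to be clearly positive
```

Using 1 − SSIM turns the similarity score into a quantity to minimize, so all three terms vanish when the prediction matches the reference image.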

4. Experiments

4.1. Dataset and Experimental Setup

4.2. Experimental Results

4.3. Ablation Experiments

4.3.1. Loss Function Ablation Experiment

4.3.2. Attention Ablation Experiment

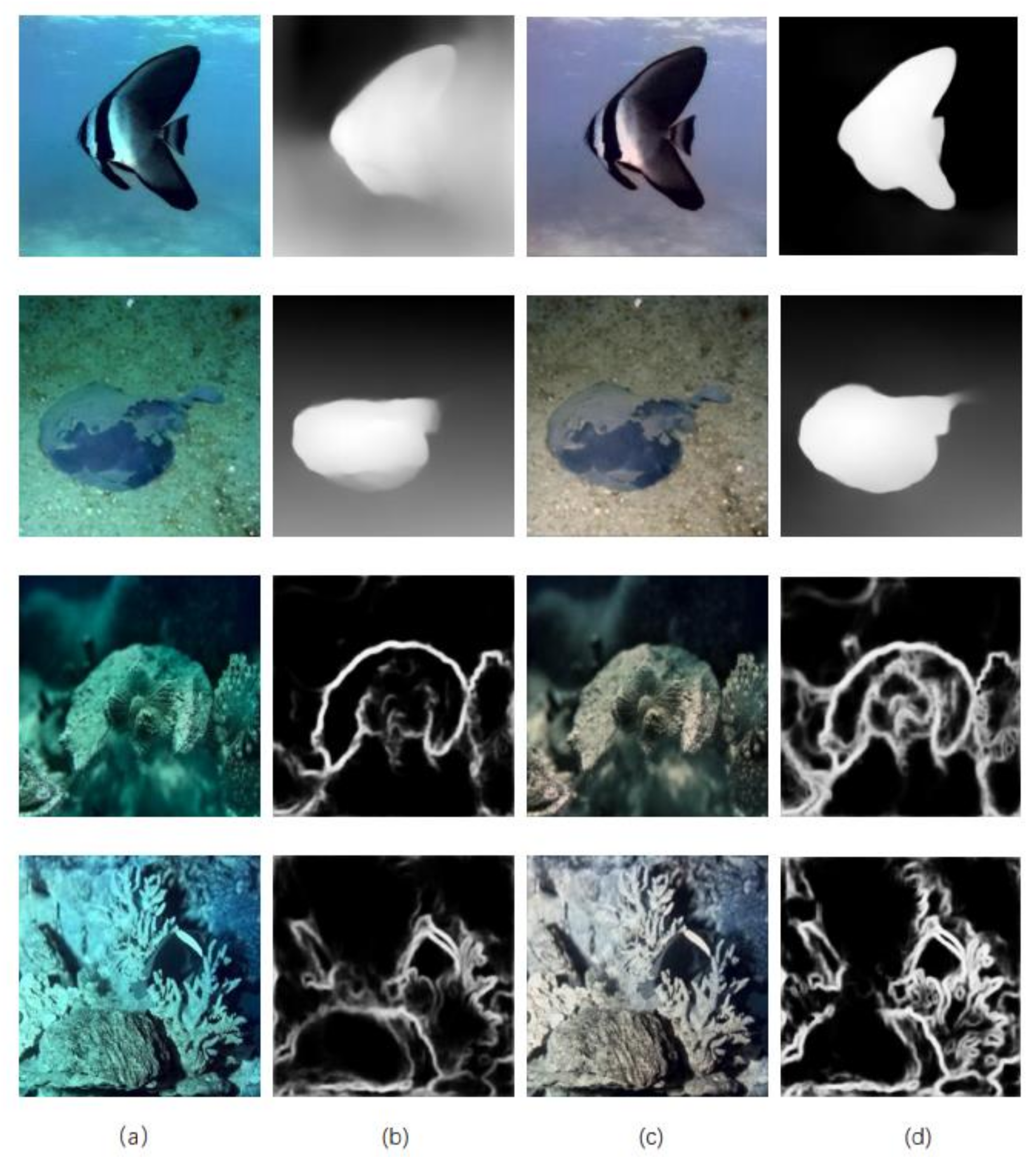

4.4. Application Testing Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | UFO 120 PSNR | UFO 120 SSIM | UFO 120 UIQM | EUVP PSNR | EUVP SSIM | EUVP UIQM |
|---|---|---|---|---|---|---|
| CLAHE | 18.54 ± 2.08 | 0.69 ± 0.04 | 2.65 ± 0.38 | 19.31 ± 2.11 | 0.70 ± 0.11 | 2.55 ± 0.13 |
| DCP | 13.35 ± 1.77 | 0.67 ± 0.09 | 1.93 ± 0.27 | 13.66 ± 2.18 | 0.66 ± 0.03 | 1.88 ± 0.15 |
| HE | 16.08 ± 2.22 | 0.63 ± 0.07 | 1.88 ± 0.31 | 17.13 ± 1.66 | 0.64 ± 0.02 | 1.91 ± 0.16 |
| IBLA | 16.63 ± 2.13 | 0.64 ± 0.06 | 1.83 ± 0.24 | 17.22 ± 2.28 | 0.68 ± 0.05 | 1.88 ± 0.12 |
| UDCP | 16.25 ± 2.47 | 0.61 ± 0.03 | 1.66 ± 0.33 | 15.33 ± 2.64 | 0.65 ± 0.04 | 1.63 ± 0.18 |
| Deep-SESR | 26.46 ± 2.63 | 0.72 ± 0.05 | 2.93 ± 0.18 | 25.20 ± 2.26 | 0.78 ± 0.06 | 2.88 ± 0.23 |
| FUnIE-GAN | 24.72 ± 2.54 | 0.74 ± 0.06 | 2.79 ± 0.27 | 26.12 ± 2.83 | 0.81 ± 0.13 | 2.85 ± 0.27 |
| UGAN | 24.33 ± 1.37 | 0.70 ± 0.12 | 2.55 ± 0.24 | 23.65 ± 2.17 | 0.71 ± 0.02 | 2.83 ± 0.33 |
| Ours | 25.25 ± 2.44 | 0.73 ± 0.08 | 2.85 ± 0.19 | 27.50 ± 2.78 | 0.83 ± 0.14 | 2.93 ± 0.17 |

| Algorithms | 1 | 2 | 3 | 4 | 5 | Average Score |
|---|---|---|---|---|---|---|
| CLAHE | 3 | 3 | 3 | 4 | 3 | 3.2 |
| DCP | 2 | 3 | 3 | 2 | 3 | 2.6 |
| HE | 2 | 2 | 2 | 1 | 2 | 1.8 |
| IBLA | 3 | 3 | 3 | 3 | 3 | 3 |
| UDCP | 3 | 3 | 2 | 2 | 3 | 2.6 |
| Deep-SESR | 4 | 3 | 4 | 4 | 4 | 3.8 |
| FUnIE-GAN | 4 | 4 | 4 | 3 | 4 | 3.8 |
| UGAN | 4 | 3 | 4 | 3 | 4 | 3.6 |
| Ours | 4 | 4 | 5 | 3 | 4 | 4 |

| Models | Model Parameters | Compression Rate | Test Time per Image (s) | Speed-Up |
|---|---|---|---|---|
| Deep-SESR | 2.45 M | 13.22 | 0.16 | 8 |
| FUnIE-GAN | 5.5 M | 17.67 | 0.18 | 7 |
| UGAN | 38.7 M | 55.34 | 1.13 | 24 |
| Ours w/o RepVGG Block | 1.23 M | 1.44 | 0.14 | 2 |
| Ours | 0.45 M | 1.22 | 0.03 | 1 |

| Methods | PSNR | SSIM | UIQM |
|---|---|---|---|
| w/o SSIM loss | 25.67 ± 2.52 | 0.75 ± 0.08 | 2.87 ± 0.36 |
| w/o VGG loss | 25.43 ± 2.49 | 0.77 ± 0.07 | 2.91 ± 0.25 |
| w/o MSE loss | 25.83 ± 2.86 | 0.78 ± 0.07 | 2.90 ± 0.31 |
| Ours | 27.50 ± 2.78 | 0.83 ± 0.14 | 2.93 ± 0.17 |

| Methods | UFO 120 PSNR | UFO 120 SSIM | UFO 120 UIQM | EUVP PSNR | EUVP SSIM | EUVP UIQM |
|---|---|---|---|---|---|---|
| Ours w/o SA | 24.57 ± 1.78 | 0.70 ± 0.06 | 2.80 ± 0.22 | 26.78 ± 2.53 | 0.80 ± 0.07 | 2.89 ± 0.23 |
| Ours w/o CA | 24.69 ± 2.32 | 0.72 ± 0.05 | 2.83 ± 0.17 | 26.83 ± 2.46 | 0.81 ± 0.05 | 2.91 ± 0.15 |
| Ours | 25.25 ± 2.44 | 0.73 ± 0.08 | 2.85 ± 0.19 | 27.50 ± 2.78 | 0.83 ± 0.04 | 2.93 ± 0.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, B.; Yang, Y.; Zhao, M.; Hu, M. A Novel Lightweight Model for Underwater Image Enhancement. Sensors 2024, 24, 3070. https://doi.org/10.3390/s24103070


Chicago/Turabian style: Liu, Botao, Yimin Yang, Ming Zhao, and Min Hu. 2024. "A Novel Lightweight Model for Underwater Image Enhancement." Sensors 24, no. 10: 3070. https://doi.org/10.3390/s24103070
