DMAF-NET: Deep Multi-Scale Attention Fusion Network for Hyperspectral Image Classification with Limited Samples

Abstract

1. Introduction

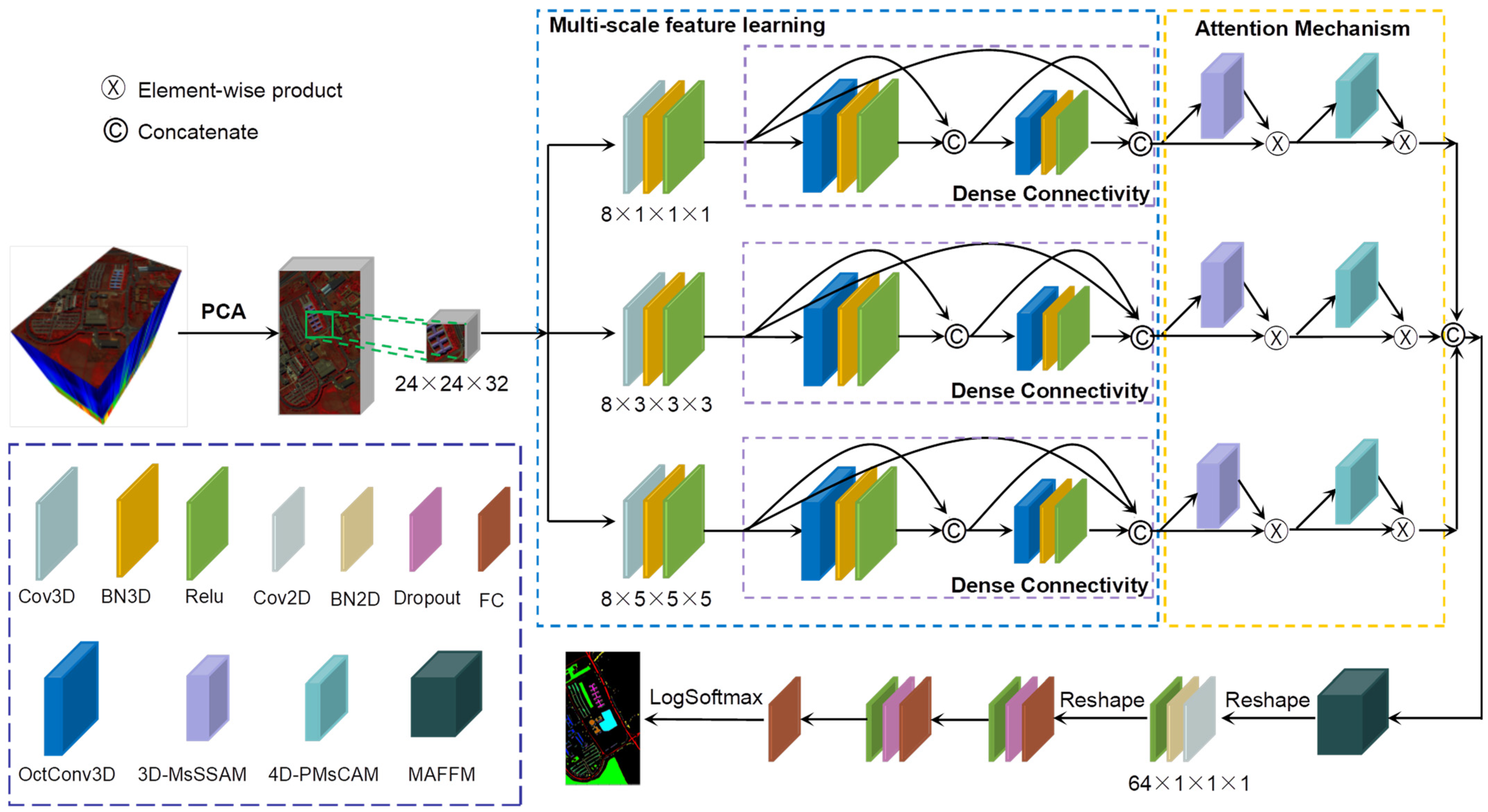

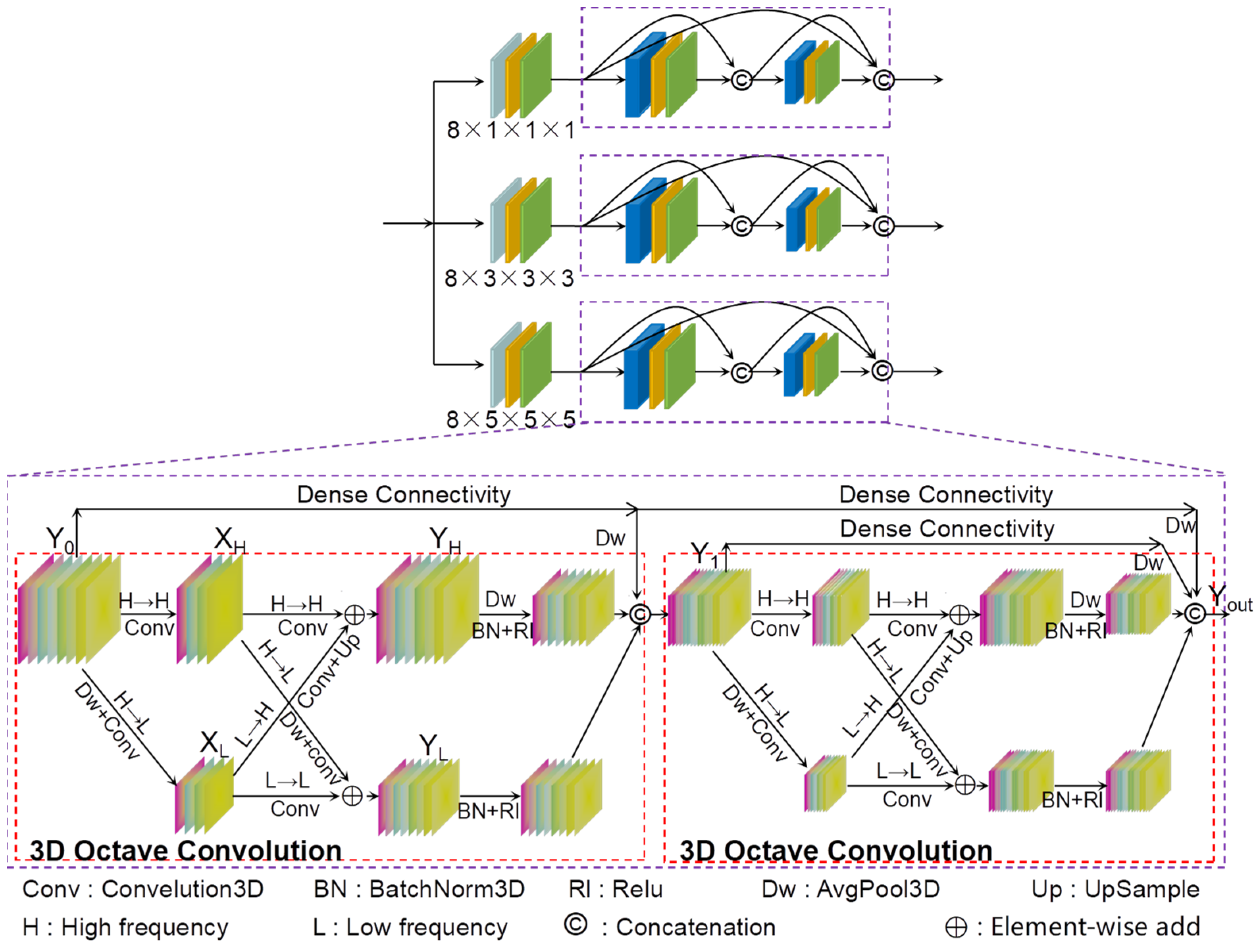

- A novel baseline network for multi-scale feature extraction is designed. The baseline comprises three branches. First, a pyramid-like structure performs preliminary feature extraction to capture features at different scales. Next, a densely connected 3D octave convolutional network learns deeper, finer-grained features within each scale window. This design exploits semantic information at multiple levels when samples are limited, yielding more robust and more generalizable features.

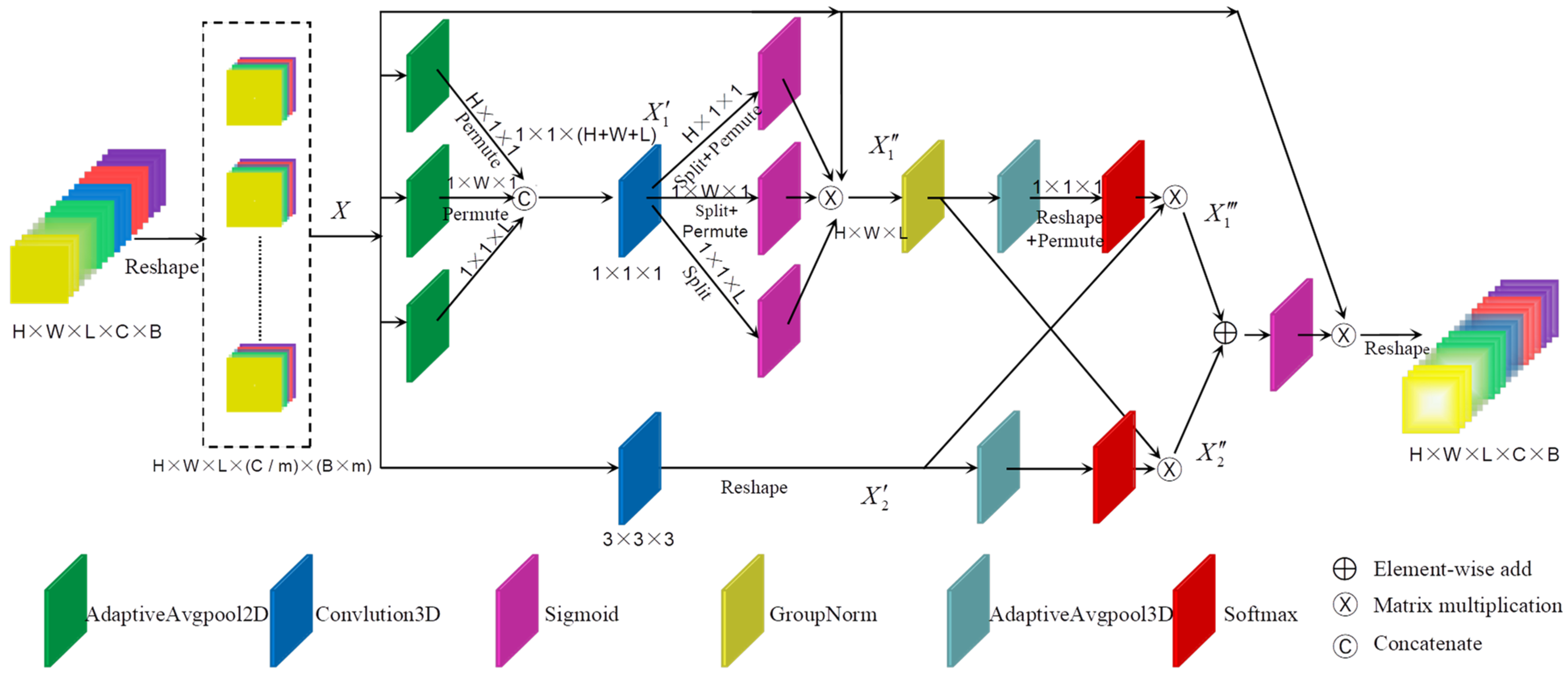

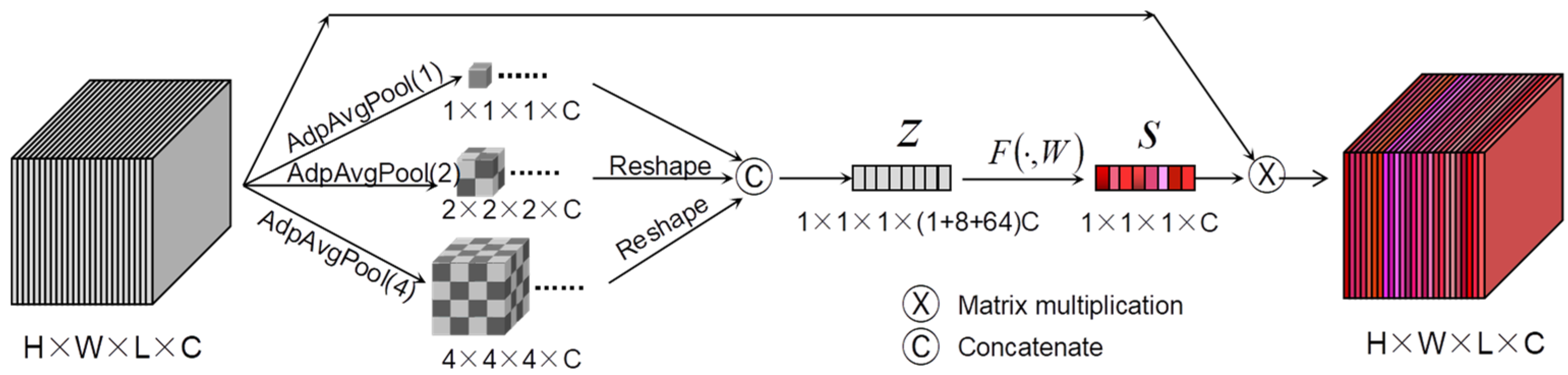

- Considering the high-resolution and multi-dimensional characteristics of hyperspectral images (HSIs), we design a 3D multi-scale spatial–spectral attention module and a 4D pyramid-type multi-scale channel attention module. Together, they model coordinate and directional dependencies, both local and global, across four dimensions, so that the model focuses on the information most useful for classification.

- A multi-attention feature fusion module is designed. By exploiting the strongly complementary and correlated information in different hierarchical features, it integrates features from various levels and scales, thereby improving HSI classification (HSIC) performance under limited-sample conditions.

- Extensive experiments with limited labeled samples were conducted on four typical HSI datasets. The results demonstrate that the proposed DMAF-NET model outperforms other state-of-the-art deep learning-based methods in both classification accuracy and computational efficiency.
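As a rough illustration of what channel attention does to a feature tensor, the following NumPy sketch applies a squeeze-and-excitation-style gate to a 4D tensor. It is a generic stand-in, not the 3D or 4D attention modules proposed here; the tensor layout `(C, D, H, W)`, the reduction ratio, and the random weights `w1` and `w2` are all assumptions made for the example.

```python
import numpy as np

def channel_attention(features, w1, w2):
    """SE-style channel gate for features shaped (C, D, H, W) (assumed layout)."""
    # Squeeze: one descriptor per channel via global average pooling.
    squeezed = features.mean(axis=(1, 2, 3))
    # Excite: bottleneck with ReLU, then a sigmoid gate per channel.
    hidden = np.maximum(0.0, w1 @ squeezed)
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))
    # Rescale every channel by its gate value.
    return features * gate[:, None, None, None], gate

rng = np.random.default_rng(0)
channels, reduction = 8, 2  # hypothetical sizes
features = rng.standard_normal((channels, 4, 6, 6))
w1 = rng.standard_normal((channels // reduction, channels))
w2 = rng.standard_normal((channels, channels // reduction))
reweighted, gate = channel_attention(features, w1, w2)
print(reweighted.shape, gate.round(3))
```

In a trained network the two weight matrices are learned, so channels that help classification receive gates closer to 1 and the rest are suppressed.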

2. Proposed Method

2.1. Overview of the Proposed Model

2.2. Multi-Scale Feature Extraction Backbone Network

2.3. Attention Mechanism Unit

2.3.1. Three-Dimensional Multi-Scale Space–Spectral Attention Enhancement Module

2.3.2. Four-Dimensional Pyramid-Style Multi-Scale Channel Attention Module

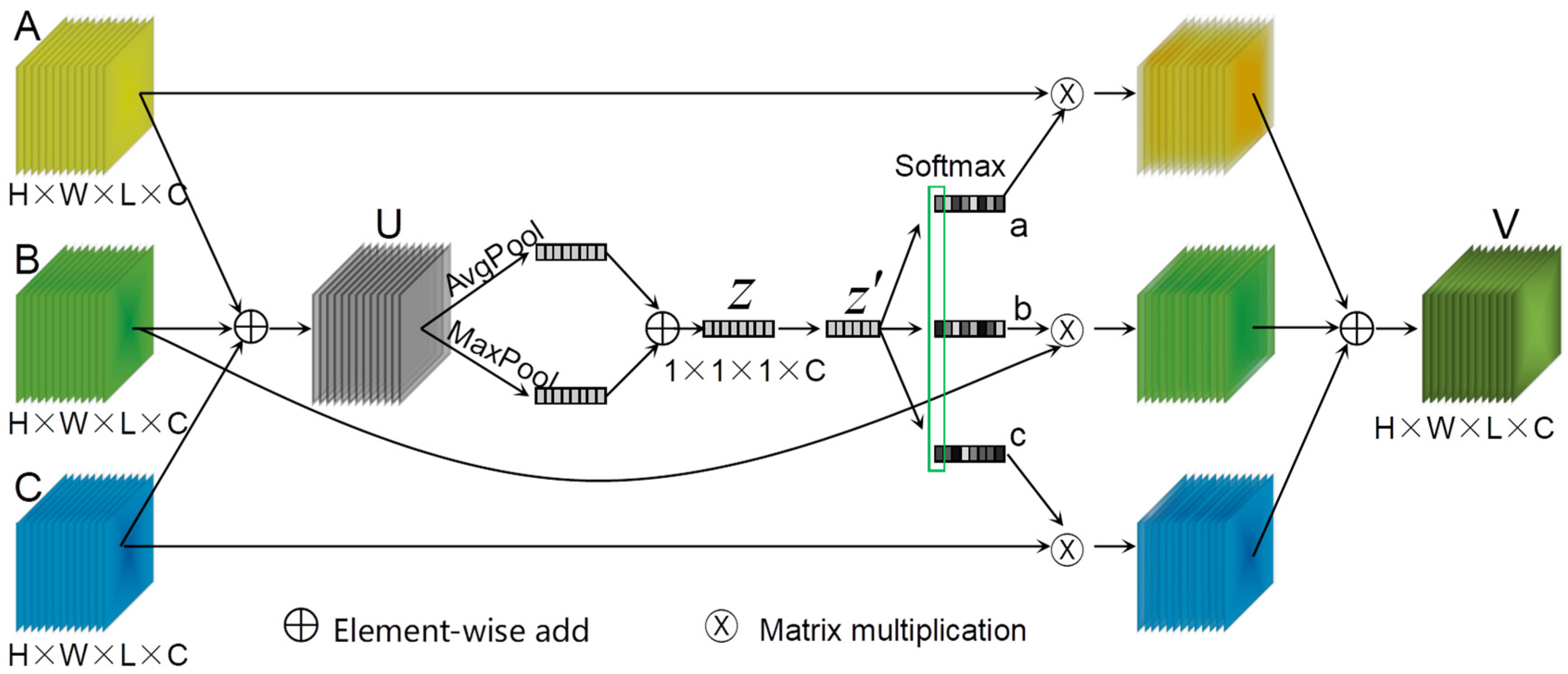

2.4. Multi-Attention Feature Fusion Module

3. Experiments and Results

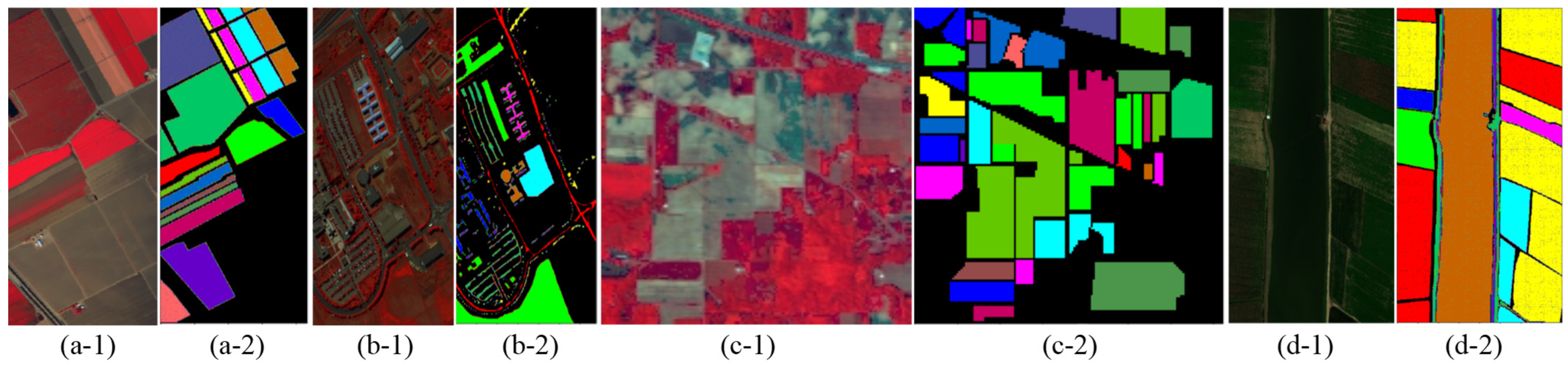

3.1. Dataset Description

3.2. Experimental Settings

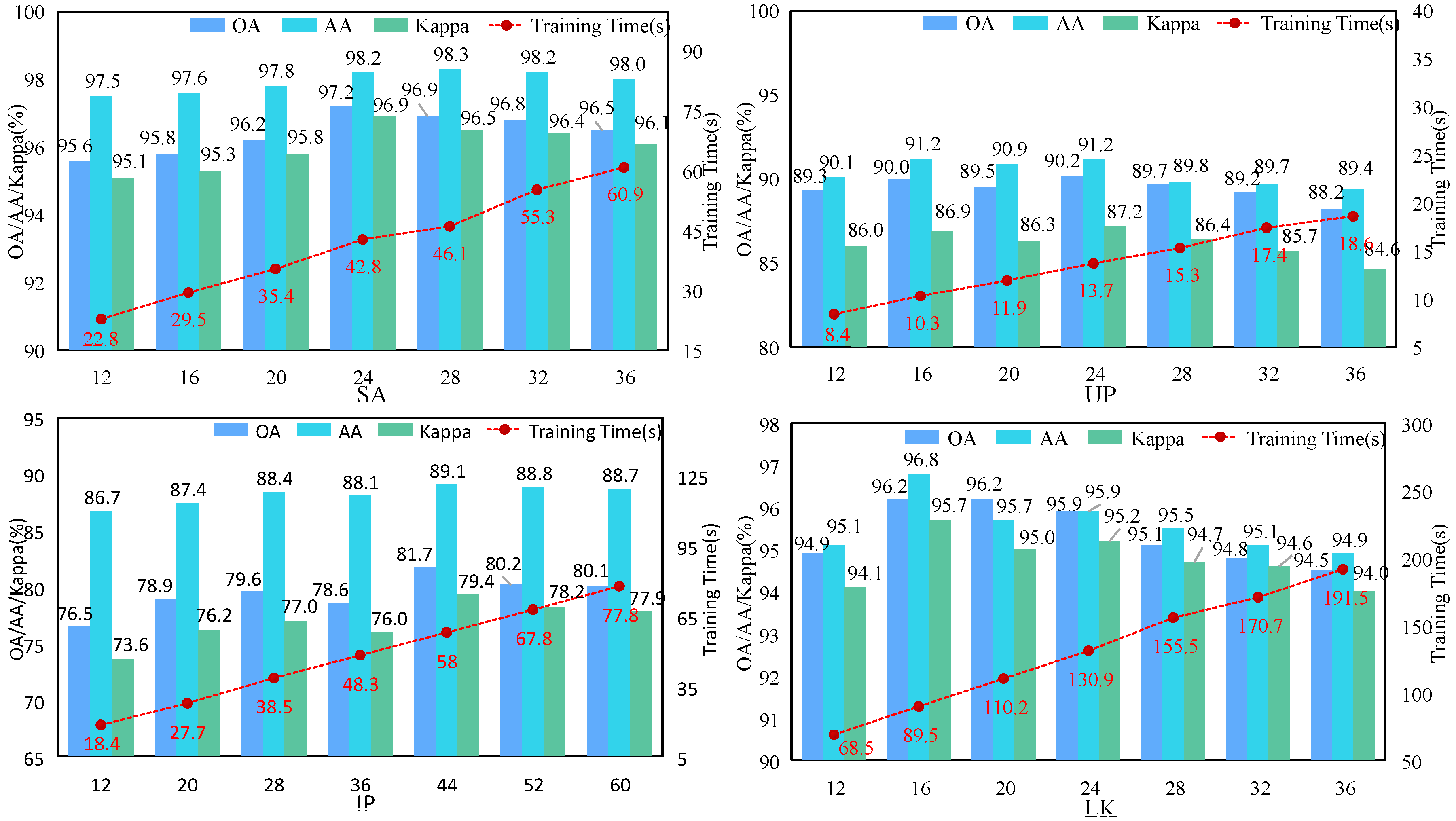

3.3. Parametric Analysis

3.3.1. Analysis of the Patch Size

3.3.2. Analysis of the PCA Components

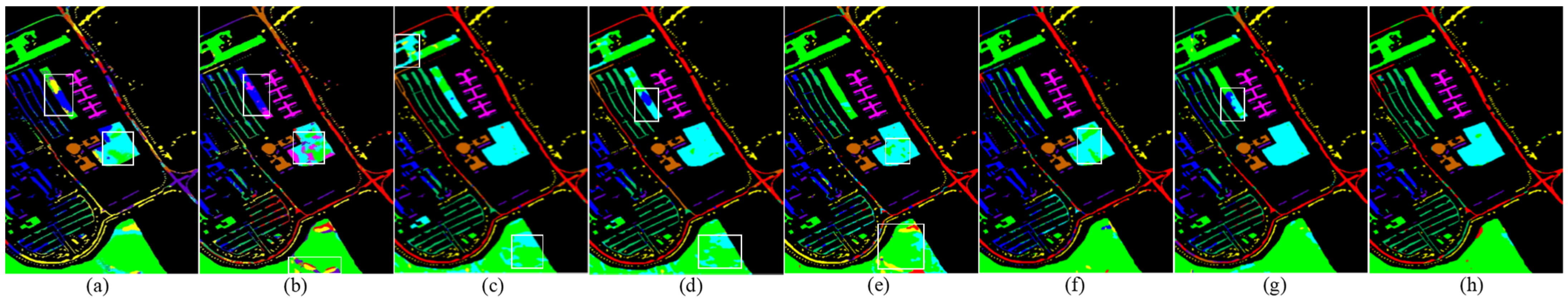

3.4. Comparison with Other Methods

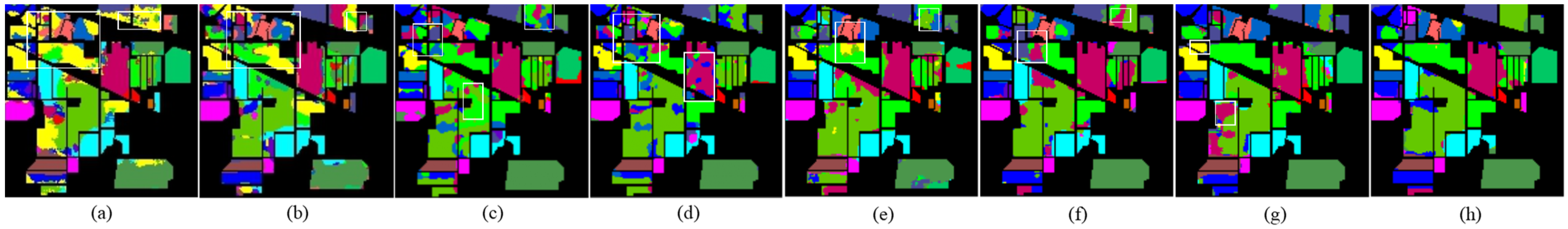

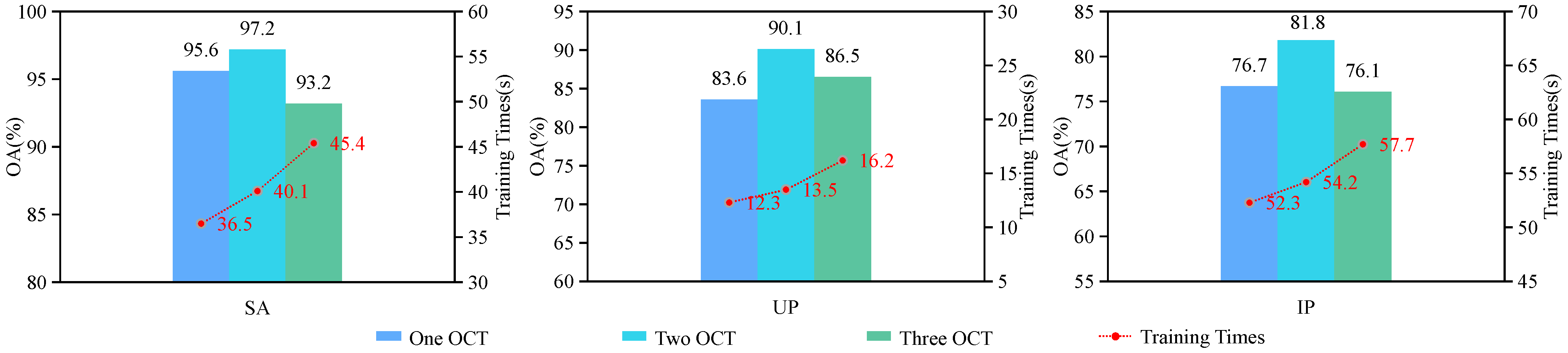

3.4.1. Evaluation Results with a Training Sample Limit of 10 for Each Category

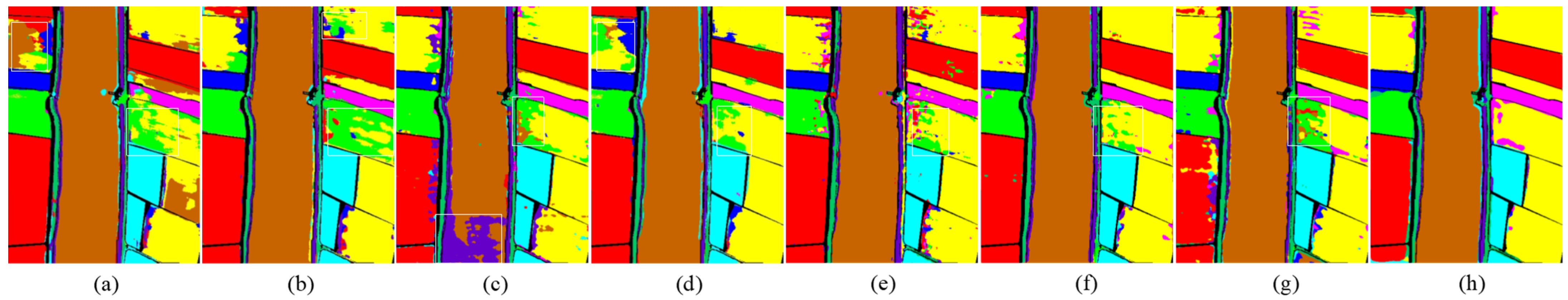

3.4.2. Evaluation Results with Different Training Sample Sizes

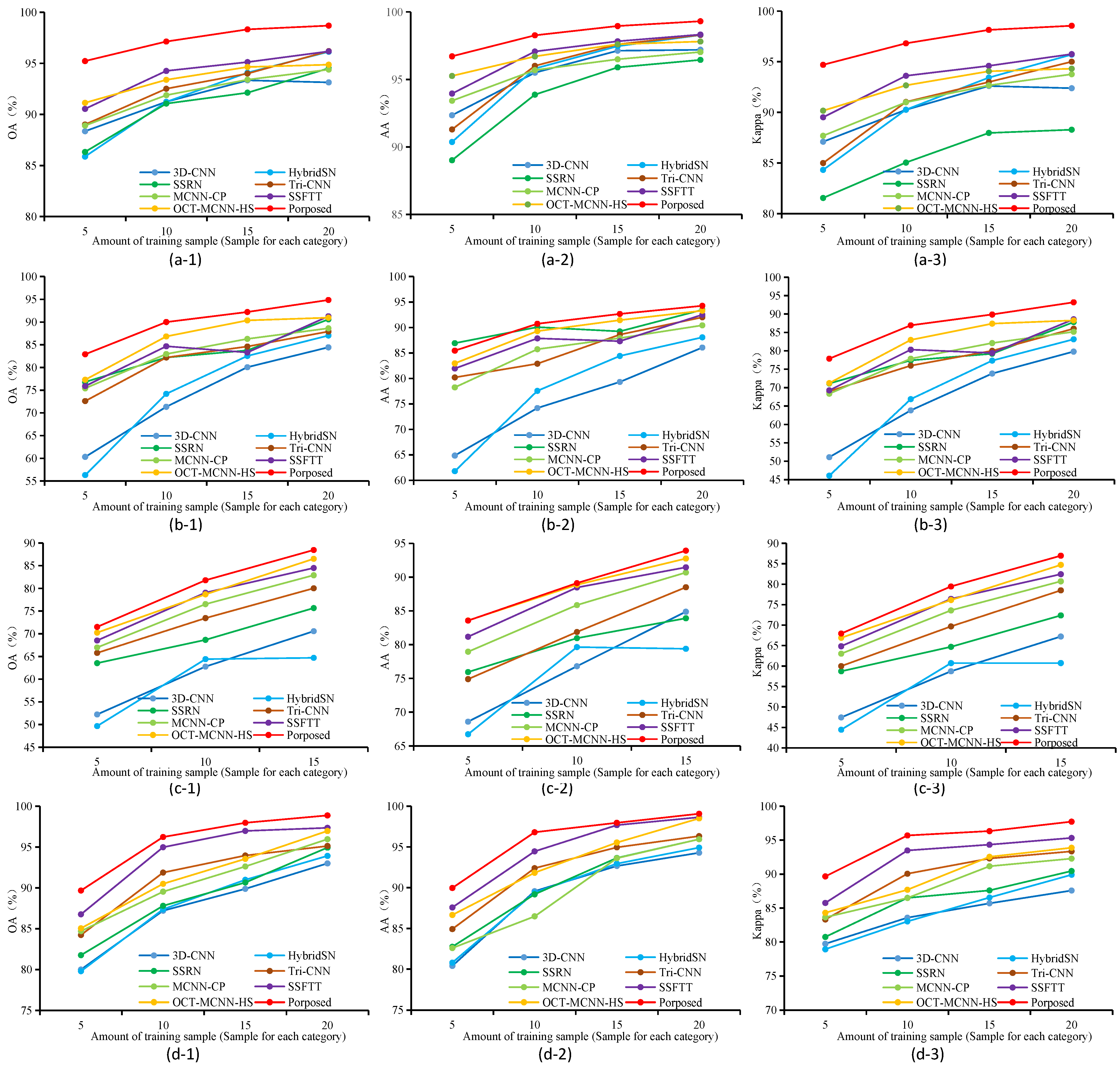

3.4.3. Computational Complexity

4. Discussion

4.1. Ablation Studies

4.2. Other Impact Studies

4.2.1. The Influence of Different Convolution Kernel Sizes in the Three Branches of the Baseline

4.2.2. The Influence of Varying Numbers of 3D Octave Convolutions in the Three Branches of the Baseline

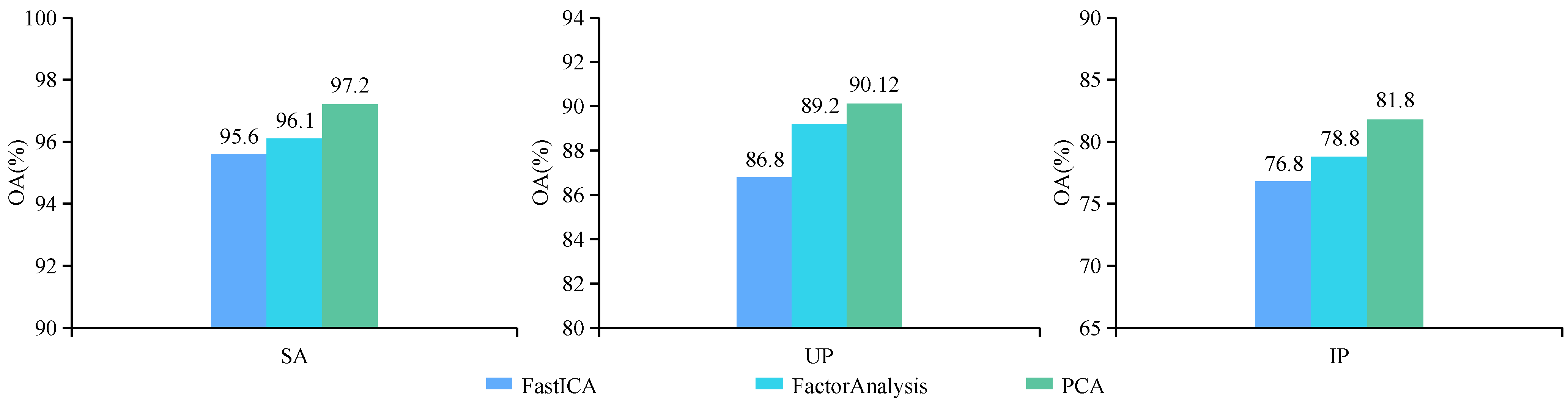

4.2.3. The Influence of Different Dimensionality Reduction Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | SA | UP | IP | LK |
|---|---|---|---|---|
| Sensor | AVIRIS | ROSIS | AVIRIS | Headwall Nano-Hyperspec |
| Wavelength (nm) | 400–2500 | 430–860 | 400–2500 | 400–1000 |
| Spatial Size (pixels) | 512 × 217 | 610 × 340 | 145 × 145 | 550 × 400 |
| Spectral Bands | 204 | 103 | 200 | 270 |
| No. of Classes | 16 | 9 | 16 | 9 |
| Labeled Samples | 54,129 | 42,776 | 10,249 | 204,542 |
| Spatial Resolution (m) | 3.7 | 1.3 | 20 | 0.463 |
| Areas | California | Pavia | Indiana | Longkou |

| No. | Map Color | Class Name | Train (5 per class) | Train (10 per class) | Train (0.1%) | Train (0.5%) | Total Samples |
|---|---|---|---|---|---|---|---|
| 1 | | Broccoli_weeds1 | 5 | 10 | 2 | 10 | 2009 |
| 2 | | Broccoli_weeds2 | 5 | 10 | 4 | 19 | 3726 |
| 3 | | Fallow | 5 | 10 | 2 | 10 | 1976 |
| 4 | | Fallow_rough_plow | 5 | 10 | 1 | 7 | 1394 |
| 5 | | Fallow_smooth | 5 | 10 | 3 | 13 | 2678 |
| 6 | | Stubble | 5 | 10 | 4 | 20 | 3959 |
| 7 | | Celery | 5 | 10 | 4 | 18 | 3579 |
| 8 | | Grapes_untrained | 5 | 10 | 11 | 56 | 11,271 |
| 9 | | Soil_vineyard_develop | 5 | 10 | 6 | 31 | 6203 |
| 10 | | Corn_weeds | 5 | 10 | 3 | 16 | 3278 |
| 11 | | Lettuce_romaine_4wk | 5 | 10 | 1 | 5 | 1068 |
| 12 | | Lettuce_romaine_5wk | 5 | 10 | 2 | 10 | 1927 |
| 13 | | Lettuce_romaine_6wk | 5 | 10 | 1 | 5 | 916 |
| 14 | | Lettuce_romaine_7wk | 5 | 10 | 1 | 5 | 1070 |
| 15 | | Vineyard_untrained | 5 | 10 | 7 | 36 | 7268 |
| 16 | | Vineyard_trellis | 5 | 10 | 2 | 9 | 1807 |
| Total | | | 80 | 160 | 54 | 270 | 54,129 |
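The "5" and "10" training columns above correspond to drawing a fixed number of labeled pixels from every class. A minimal sketch of such a split is given below, using only the Python standard library. The helper `sample_per_class`, the convention that label 0 marks unlabeled pixels, and the seed are assumptions for illustration; the source does not describe how the authors drew their samples.

```python
import random
from collections import defaultdict

def sample_per_class(labels, n_per_class, seed=0):
    """Pick up to n_per_class indices per class; the rest become test indices."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        if lab != 0:  # assumption: 0 marks unlabeled background pixels
            by_class[lab].append(idx)
    train = []
    for lab in sorted(by_class):
        pool = by_class[lab]
        # Guard against classes smaller than the requested sample count.
        train.extend(rng.sample(pool, min(n_per_class, len(pool))))
    chosen = set(train)
    test = [i for ids in by_class.values() for i in ids if i not in chosen]
    return sorted(train), sorted(test)

# Toy label vector: 3 unlabeled pixels and three classes of 20, 7 and 12 pixels.
labels = [0] * 3 + [1] * 20 + [2] * 7 + [3] * 12
train_idx, test_idx = sample_per_class(labels, n_per_class=5)
print(len(train_idx), len(test_idx))
```

Fixing the seed makes a split repeatable, which matters when results are averaged over several runs.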

| No. | Map Color | Class Name | Train (5 per class) | Train (10 per class) | Train (0.1%) | Train (0.5%) | Total Samples |
|---|---|---|---|---|---|---|---|
| 1 | | Asphalt | 5 | 10 | 7 | 33 | 6631 |
| 2 | | Meadows | 5 | 10 | 19 | 93 | 18,649 |
| 3 | | Gravel | 5 | 10 | 2 | 10 | 2099 |
| 4 | | Trees | 5 | 10 | 3 | 15 | 3064 |
| 5 | | Painted metal sheets | 5 | 10 | 1 | 7 | 1345 |
| 6 | | Bare Soil | 5 | 10 | 5 | 25 | 5029 |
| 7 | | Bitumen | 5 | 10 | 1 | 7 | 1330 |
| 8 | | Self-Blocking Bricks | 5 | 10 | 4 | 18 | 3682 |
| 9 | | Shadows | 5 | 10 | 1 | 5 | 947 |
| Total | | | 45 | 90 | 43 | 213 | 42,776 |

| No. | Map Color | Class Name | Train (5 per class) | Train (10 per class) | Train (5%) | Train (10%) | Total Samples |
|---|---|---|---|---|---|---|---|
| 1 | | Alfalfa | 5 | 10 | 2 | 5 | 46 |
| 2 | | Corn-notill | 5 | 10 | 71 | 143 | 1428 |
| 3 | | Corn-mintill | 5 | 10 | 42 | 83 | 830 |
| 4 | | Corn | 5 | 10 | 12 | 24 | 237 |
| 5 | | Grass-pasture | 5 | 10 | 24 | 48 | 483 |
| 6 | | Grass-trees | 5 | 10 | 37 | 73 | 730 |
| 7 | | Grass-pasture-mowed | 5 | 10 | 1 | 3 | 28 |
| 8 | | Hay-windrowed | 5 | 10 | 24 | 48 | 478 |
| 9 | | Oats | 5 | 10 | 1 | 2 | 20 |
| 10 | | Soybean-notill | 5 | 10 | 49 | 97 | 972 |
| 11 | | Soybean-mintill | 5 | 10 | 123 | 246 | 2455 |
| 12 | | Soybean-clean | 5 | 10 | 30 | 59 | 593 |
| 13 | | Wheat | 5 | 10 | 10 | 21 | 205 |
| 14 | | Woods | 5 | 10 | 63 | 127 | 1265 |
| 15 | | Buildings-Gra-Trees | 5 | 10 | 19 | 39 | 386 |
| 16 | | Stone-Steel-Towers | 5 | 10 | 5 | 9 | 93 |
| Total | | | 80 | 160 | 513 | 1027 | 10,249 |
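The percentage columns can be checked with a little arithmetic. Assuming each class's share is rounded to the nearest integer with halves rounded up, exact rational arithmetic reproduces the 5% and 10% columns of the IP table above. The source does not state the rounding rule, so it is inferred here, and `per_class_counts` is a hypothetical helper.

```python
from fractions import Fraction
from math import floor

def per_class_counts(class_totals, rate):
    """Round each class's share half-up, using exact fractions to avoid float error."""
    return [floor(Fraction(n) * rate + Fraction(1, 2)) for n in class_totals]

# Per-class totals for the IP dataset, copied from the table above.
ip_totals = [46, 1428, 830, 237, 483, 730, 28, 478,
             20, 972, 2455, 593, 205, 1265, 386, 93]

five_percent = per_class_counts(ip_totals, Fraction(5, 100))
ten_percent = per_class_counts(ip_totals, Fraction(10, 100))
print(sum(five_percent), sum(ten_percent))
```

Python's built-in `round` would not match here, because it rounds halves to the nearest even integer (for example, 36.5 becomes 36, whereas the table lists 37 for class 6).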

| No. | Map Color | Class Name | Train (5 per class) | Train (10 per class) | Train (0.1%) | Train (0.5%) | Total Samples |
|---|---|---|---|---|---|---|---|
| 1 | | Corn | 5 | 10 | 35 | 173 | 34,511 |
| 2 | | Cotton | 5 | 10 | 8 | 42 | 8374 |
| 3 | | Sesame | 5 | 10 | 3 | 15 | 3031 |
| 4 | | Broad-leaf soybean | 5 | 10 | 63 | 316 | 63,212 |
| 5 | | Narrow-leaf soybean | 5 | 10 | 4 | 21 | 4151 |
| 6 | | Rice | 5 | 10 | 12 | 59 | 11,854 |
| 7 | | Water | 5 | 10 | 67 | 335 | 67,056 |
| 8 | | Roads and houses | 5 | 10 | 7 | 36 | 7124 |
| 9 | | Mixed weed | 5 | 10 | 5 | 26 | 5229 |
| Total | | | 45 | 90 | 204 | 1023 | 204,542 |

| | SA | UP | IP | LK |
|---|---|---|---|---|
| Patch size | 24 × 24 | 16 × 16 | 20 × 20 | 24 × 24 |
| PCA components | 24 | 24 | 44 | 16 |
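To make the two settings above concrete, the sketch below reduces a synthetic cube to 24 principal components and cuts a 16 × 16 patch around one pixel, matching the UP column. It is a minimal NumPy illustration, not the authors' preprocessing code: the zero padding, the placement of the target pixel at offset `patch_size // 2` inside an even-sized patch, the synthetic cube dimensions, and the helper names are all assumptions.

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Project an (H, W, B) cube onto its first n_components principal axes."""
    h, w, b = cube.shape
    flat = cube.reshape(-1, b).astype(np.float64)
    flat = flat - flat.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(flat, full_matrices=False)
    return (flat @ vt[:n_components].T).reshape(h, w, n_components)

def extract_patch(cube, row, col, patch_size):
    """Cut a patch_size x patch_size window around (row, col), zero-padded at borders."""
    before = patch_size // 2
    after = patch_size - before - 1
    padded = np.pad(cube, ((before, after), (before, after), (0, 0)), mode="constant")
    return padded[row:row + patch_size, col:col + patch_size, :]

rng = np.random.default_rng(0)
cube = rng.random((30, 20, 50))  # synthetic stand-in for a hyperspectral scene
reduced = pca_reduce(cube, n_components=24)
patch = extract_patch(reduced, row=0, col=0, patch_size=16)
print(reduced.shape, patch.shape)
```

Each labeled pixel yields one such patch, so the patch size and the number of retained components together fix the input shape seen by the network.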

| Class No. | 3D-CNN | HybridSN | SSRN | Tri-CNN | MCNN-CP | SSFTT | Oct-MCNN-HS | Proposed |
|---|---|---|---|---|---|---|---|---|
| 1 | 100.00 | 99.33 | 96.46 | 99.60 | 100.00 | 99.97 | 99.93 | 99.80 |
| 2 | 99.06 | 98.67 | 99.98 | 98.57 | 99.00 | 99.89 | 99.98 | 99.78 |
| 3 | 99.44 | 99.33 | 94.29 | 99.38 | 99.50 | 99.91 | 99.90 | 100.00 |
| 4 | 99.30 | 99.33 | 79.90 | 98.30 | 98.00 | 98.82 | 98.33 | 98.99 |
| 5 | 92.76 | 96.03 | 98.58 | 97.59 | 92.67 | 95.94 | 91.82 | 98.86 |
| 6 | 98.89 | 96.33 | 100.00 | 98.30 | 99.50 | 98.86 | 99.13 | 99.13 |
| 7 | 99.19 | 99.67 | 99.85 | 98.23 | 100.00 | 99.91 | 99.67 | 99.34 |
| 8 | 81.71 | 74.67 | 90.66 | 80.87 | 79.83 | 85.94 | 79.70 | 93.17 |
| 9 | 99.74 | 100.00 | 94.66 | 99.60 | 99.00 | 99.87 | 99.98 | 99.46 |
| 10 | 94.21 | 95.67 | 89.39 | 95.87 | 96.50 | 96.82 | 96.42 | 95.95 |
| 11 | 97.22 | 100.00 | 98.13 | 98.02 | 100.00 | 99.89 | 99.87 | 100.00 |
| 12 | 99.03 | 94.67 | 99.93 | 96.50 | 95.83 | 96.28 | 98.77 | 98.96 |
| 13 | 99.45 | 98.67 | 100.00 | 97.67 | 95.17 | 97.81 | 98.86 | 99.37 |
| 14 | 98.77 | 98.87 | 98.03 | 98.90 | 97.83 | 99.28 | 97.78 | 97.30 |
| 15 | 71.73 | 81.67 | 65.93 | 80.74 | 79.83 | 84.66 | 89.35 | 94.52 |
| 16 | 97.07 | 99.13 | 96.16 | 97.19 | 98.67 | 99.17 | 97.54 | 97.70 |
| OA (%) | 91.20 ± 2.01 | 91.20 ± 1.07 | 91.10 ± 1.71 | 92.52 ± 1.97 | 91.90 ± 1.77 | 94.20 ± 1.07 | 93.40 ± 1.25 | 97.20 ± 1.05 |
| AA (%) | 95.50 ± 1.38 | 95.80 ± 0.90 | 93.90 ± 1.90 | 96.01 ± 1.95 | 95.70 ± 0.95 | 97.00 ± 0.84 | 96.70 ± 0.47 | 98.30 ± 0.73 |
| Kappa × 100 | 90.25 ± 2.25 | 90.30 ± 1.19 | 90.00 ± 1.91 | 91.04 ± 1.90 | 91.00 ± 1.97 | 93.60 ± 1.19 | 92.70 ± 1.38 | 96.90 ± 1.08 |
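The three summary rows follow the standard definitions: overall accuracy (OA) is the fraction of correctly classified samples, average accuracy (AA) is the mean of the per-class accuracies, and Cohen's kappa corrects OA for chance agreement. The sketch below computes them from a confusion matrix in plain Python. The toy matrix is made up for illustration, and the convention that rows hold the true classes is an assumption.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) for a square confusion matrix with true classes as rows."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(n_classes)]
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes)) for j in range(n_classes)]
    oa = sum(diag) / total
    # Per-class accuracy is the diagonal entry over the class's true count.
    aa = sum(d / r for d, r in zip(diag, row_sums)) / n_classes
    # Expected agreement by chance, from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa

toy_confusion = [[5, 1],
                 [2, 4]]  # hypothetical two-class result
oa, aa, kappa = classification_metrics(toy_confusion)
print(f"OA={oa * 100:.2f}  AA={aa * 100:.2f}  Kappa x 100={kappa * 100:.2f}")
```

AA weights every class equally, which is why it can sit well above OA in the tables when a few large classes are the hardest ones.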

| Class No. | 3D-CNN | HybridSN | SSRN | Tri-CNN | MCNN-CP | SSFTT | Oct-MCNN-HS | Proposed |
|---|---|---|---|---|---|---|---|---|
| 1 | 50.82 | 60.42 | 80.81 | 66.52 | 72.67 | 79.52 | 83.02 | 81.29 |
| 2 | 76.62 | 79.78 | 73.26 | 79.98 | 85.67 | 85.64 | 87.60 | 93.22 |
| 3 | 74.38 | 81.96 | 83.25 | 80.77 | 82.17 | 92.24 | 84.98 | 90.04 |
| 4 | 68.22 | 82.62 | 87.90 | 85.60 | 88.17 | 85.84 | 91.22 | 83.68 |
| 5 | 97.74 | 99.78 | 100.00 | 99.90 | 99.33 | 99.41 | 100.00 | 99.64 |
| 6 | 81.22 | 69.64 | 91.39 | 70.04 | 81.50 | 92.60 | 88.83 | 95.40 |
| 7 | 95.62 | 99.40 | 99.32 | 97.41 | 96.67 | 97.99 | 98.68 | 98.33 |
| 8 | 51.02 | 47.54 | 94.78 | 65.55 | 72.00 | 59.33 | 73.06 | 79.19 |
| 9 | 72.02 | 76.92 | 99.93 | 78.82 | 94.00 | 98.23 | 96.28 | 95.90 |
| OA (%) | 71.33 ± 3.04 | 74.20 ± 2.20 | 82.20 ± 0.99 | 82.20 ± 2.90 | 83.00 ± 1.67 | 84.67 ± 5.46 | 86.80 ± 1.47 | 90.12 ± 1.09 |
| AA (%) | 74.19 ± 3.53 | 77.60 ± 3.73 | 90.10 ± 2.20 | 82.90 ± 4.01 | 85.70 ± 1.56 | 87.87 ± 3.34 | 89.30 ± 1.33 | 90.75 ± 1.23 |
| Kappa × 100 | 63.82 ± 3.89 | 66.90 ± 3.20 | 77.40 ± 1.34 | 75.97 ± 3.66 | 77.90 ± 2.01 | 80.30 ± 6.57 | 83.00 ± 1.90 | 87.30 ± 1.19 |

| Class No. | 3D-CNN | HybridSN | SSRN | Tri-CNN | MCNN-CP | SSFTT | Oct-MCNN-HS | Proposed |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.40 | 97.62 | 100.00 | 98.52 | 100.00 | 99.53 | 98.61 | 98.15 |
| 2 | 37.34 | 42.02 | 52.19 | 60.12 | 66.00 | 64.46 | 77.26 | 67.98 |
| 3 | 54.31 | 58.62 | 58.23 | 59.02 | 65.60 | 78.39 | 78.37 | 77.03 |
| 4 | 79.23 | 84.44 | 79.88 | 84.84 | 96.40 | 95.72 | 96.99 | 93.83 |
| 5 | 76.99 | 81.56 | 85.90 | 83.59 | 88.40 | 85.52 | 84.50 | 90.80 |
| 6 | 92.18 | 95.28 | 86.78 | 95.98 | 96.80 | 97.32 | 97.94 | 94.37 |
| 7 | 99.80 | 99.82 | 100.00 | 99.98 | 100.00 | 100.00 | 100.00 | 100.00 |
| 8 | 96.47 | 99.62 | 86.86 | 99.60 | 95.80 | 93.27 | 96.44 | 99.61 |
| 9 | 99.80 | 99.94 | 100.00 | 99.99 | 100.00 | 100.00 | 100.00 | 100.00 |
| 10 | 64.55 | 67.02 | 75.29 | 68.12 | 77.80 | 85.68 | 85.74 | 80.27 |
| 11 | 52.03 | 53.42 | 53.05 | 60.42 | 66.80 | 64.36 | 58.47 | 74.34 |
| 12 | 39.89 | 56.62 | 42.65 | 56.65 | 58.60 | 69.94 | 70.18 | 70.14 |
| 13 | 99.49 | 96.62 | 99.23 | 94.69 | 97.80 | 99.82 | 100.00 | 96.92 |
| 14 | 81.63 | 76.82 | 93.55 | 79.77 | 88.00 | 94.62 | 85.77 | 96.27 |
| 15 | 56.41 | 85.42 | 81.74 | 85.40 | 81.40 | 87.45 | 91.53 | 90.78 |
| 16 | 99.00 | 95.20 | 100.00 | 97.21 | 94.20 | 99.60 | 100.00 | 97.19 |
| OA (%) | 62.77 ± 4.73 | 66.40 ± 3.16 | 68.70 ± 4.30 | 73.46 ± 3.76 | 76.50 ± 2.72 | 79.05 ± 2.95 | 78.70 ± 1.14 | 81.80 ± 1.21 |
| AA (%) | 76.81 ± 2.19 | 80.60 ± 1.93 | 80.10 ± 2.12 | 81.88 ± 1.93 | 85.90 ± 1.35 | 88.47 ± 1.67 | 88.90 ± 0.90 | 89.20 ± 0.98 |
| Kappa × 100 | 58.72 ± 4.80 | 62.70 ± 3.42 | 64.70 ± 4.46 | 69.70 ± 2.49 | 73.60 ± 2.96 | 76.43 ± 3.18 | 76.10 ± 1.27 | 79.50 ± 1.08 |

| Class No. | 3D-CNN | HybridSN | SSRN | Tri-CNN | MCNN-CP | SSFTT | Oct-MCNN-HS | Proposed |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.99 | 99.80 | 80.81 | 99.80 | 93.77 | 97.57 | 86.11 | 98.29 |
| 2 | 96.77 | 97.33 | 73.26 | 97.33 | 77.68 | 93.59 | 92.58 | 99.49 |
| 3 | 99.86 | 99.96 | 83.25 | 99.96 | 98.10 | 100.00 | 99.37 | 96.67 |
| 4 | 62.99 | 70.78 | 87.90 | 70.78 | 86.27 | 93.12 | 85.68 | 89.69 |
| 5 | 80.16 | 82.28 | 100.00 | 82.28 | 92.63 | 96.76 | 96.47 | 98.24 |
| 6 | 99.89 | 99.98 | 91.39 | 99.98 | 89.56 | 90.10 | 91.25 | 100.00 |
| 7 | 95.23 | 95.70 | 99.32 | 95.70 | 91.67 | 98.97 | 98.68 | 98.43 |
| 8 | 70.68 | 73.64 | 94.78 | 73.64 | 62.89 | 87.31 | 90.86 | 89.29 |
| 9 | 90.55 | 86.48 | 99.93 | 86.48 | 85.60 | 94.25 | 87.99 | 95.99 |
| OA (%) | 87.20 ± 3.00 | 87.38 ± 3.12 | 87.80 ± 2.99 | 91.88 ± 3.62 | 89.54 ± 2.45 | 94.99 ± 4.47 | 90.50 ± 3.17 | 96.24 ± 2.09 |
| AA (%) | 89.57 ± 3.66 | 89.40 ± 4.00 | 89.19 ± 2.18 | 92.41 ± 4.20 | 86.49 ± 2.36 | 94.47 ± 3.22 | 91.84 ± 3.03 | 96.81 ± 2.13 |
| Kappa × 100 | 83.58 ± 2.86 | 83.03 ± 3.65 | 86.49 ± 3.01 | 90.05 ± 3.55 | 86.48 ± 3.01 | 93.48 ± 6.28 | 87.70 ± 4.01 | 95.70 ± 1.89 |

| Model | SA Total Params | SA Training Time (s) | UP Total Params | UP Training Time (s) | IP Total Params | IP Training Time (s) | LK Total Params | LK Training Time (s) |
|---|---|---|---|---|---|---|---|---|
| 3D-CNN | 9,073,184 | 43.4 | 9,072,281 | 29.2 | 36,168,224 | 190.3 | 9,072,281 | 49.7 |
| HybridSN | 4,845,696 | 58.9 | 4,844,793 | 34.8 | 5,122,176 | 263.3 | 4,844,793 | 64.9 |
| SSRN | 749,996 | 1470 | 396,993 | 395 | 735,884 | 1440 | 760,155 | 1491 |
| Tri-CNN | 6,878,436 | 69.8 | 6,870,593 | 40.9 | 7,420,236 | 250.6 | 6,819,399 | 82.1 |
| MCNN-CP | 1,654,368 | 97.5 | 1,367,986 | 28.4 | 3,128,928 | 434.1 | 1,653,465 | 1249 |
| SSFTT | 153,224 | 5.9 | 152,769 | 5.8 | 153,224 | 5.3 | 153,621 | 6.5 |
| Oct-MCNN | 3,846,096 | 63.9 | 3,681,353 | 27.8 | 5,156,816 | 232 | 3,845,193 | 67.8 |
| Proposed | 2,932,878 | 40.1 | 2,604,295 | 13.5 | 2,778,254 | 54.2 | 2,717,895 | 46.1 |
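Parameter totals such as those above are sums of per-layer counts, and the standard formulas for 3D convolution and fully connected layers are easy to tally by hand. The sketch below shows the arithmetic on a hypothetical three-layer stack; none of the layer shapes come from the source. As a side observation, the 903-parameter gap between the SA and UP totals for 3D-CNN (and likewise for HybridSN) equals what a 128-input output layer would add for 7 extra classes, though the source does not confirm those layer sizes.

```python
def conv3d_params(in_channels, out_channels, kernel, bias=True):
    """Weights plus optional biases of a 3D convolution with a (kd, kh, kw) kernel."""
    kd, kh, kw = kernel
    return in_channels * out_channels * kd * kh * kw + (out_channels if bias else 0)

def linear_params(in_features, out_features, bias=True):
    """Weights plus optional biases of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

# Hypothetical stack: two 3D convolutions followed by a 16-class output layer.
layer_counts = [
    conv3d_params(1, 8, (7, 3, 3)),
    conv3d_params(8, 16, (5, 3, 3)),
    linear_params(256, 16),
]
total_params = sum(layer_counts)

# Extra parameters when an assumed 128-input output layer grows from 9 to 16 classes.
class_gap = linear_params(128, 16) - linear_params(128, 9)
print(layer_counts, total_params, class_gap)
```

Because the flattened feature size feeding the first fully connected layer depends on patch size and on the number of retained components, the same architecture can report quite different totals across datasets.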

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, H.; Liu, W. DMAF-NET: Deep Multi-Scale Attention Fusion Network for Hyperspectral Image Classification with Limited Samples. Sensors 2024, 24, 3153. https://doi.org/10.3390/s24103153