Machine Learning Methods and Visual Observations to Categorize Behavior of Grazing Cattle Using Accelerometer Signals

Abstract

1. Introduction

Objectives

2. Methods

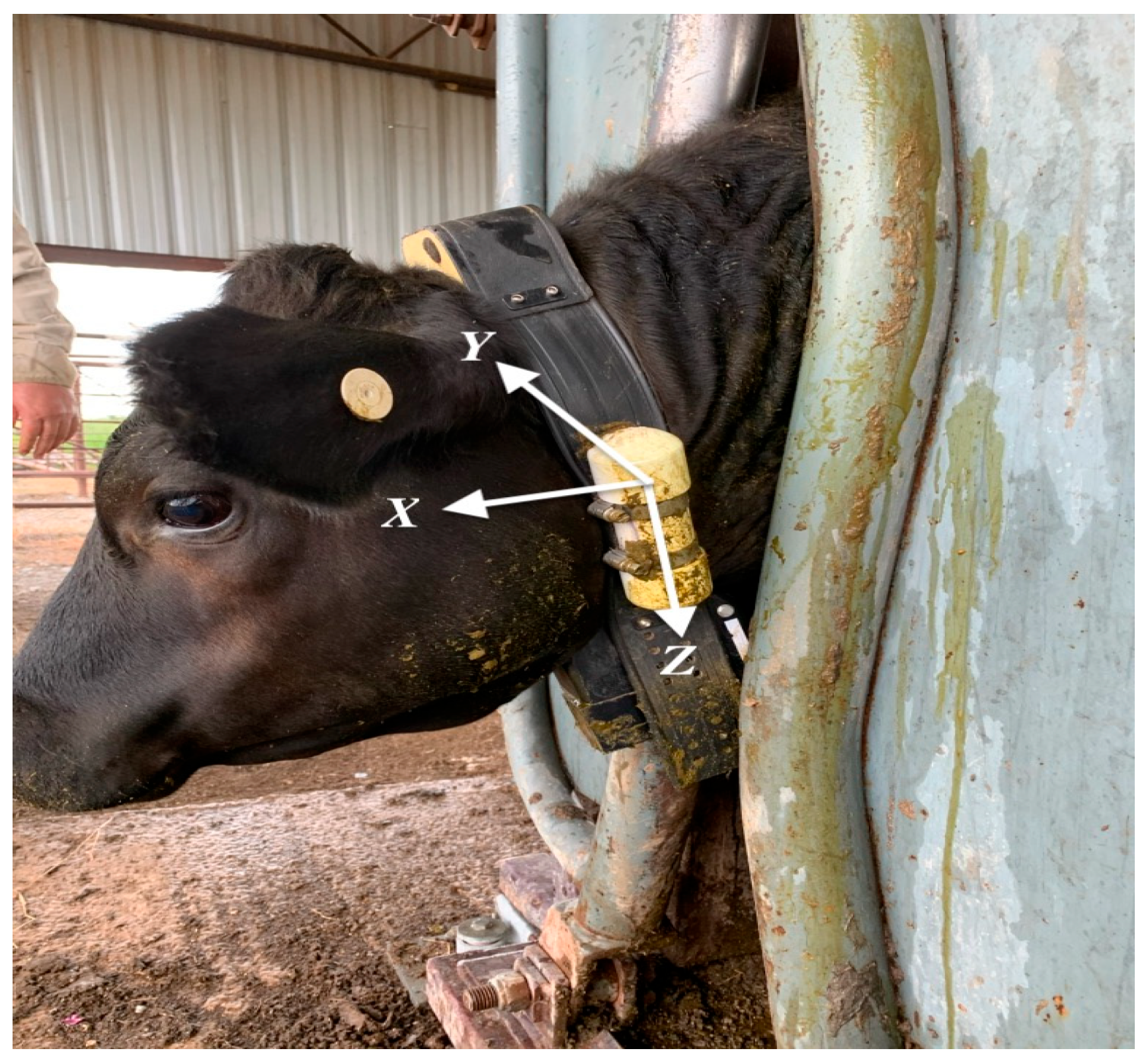

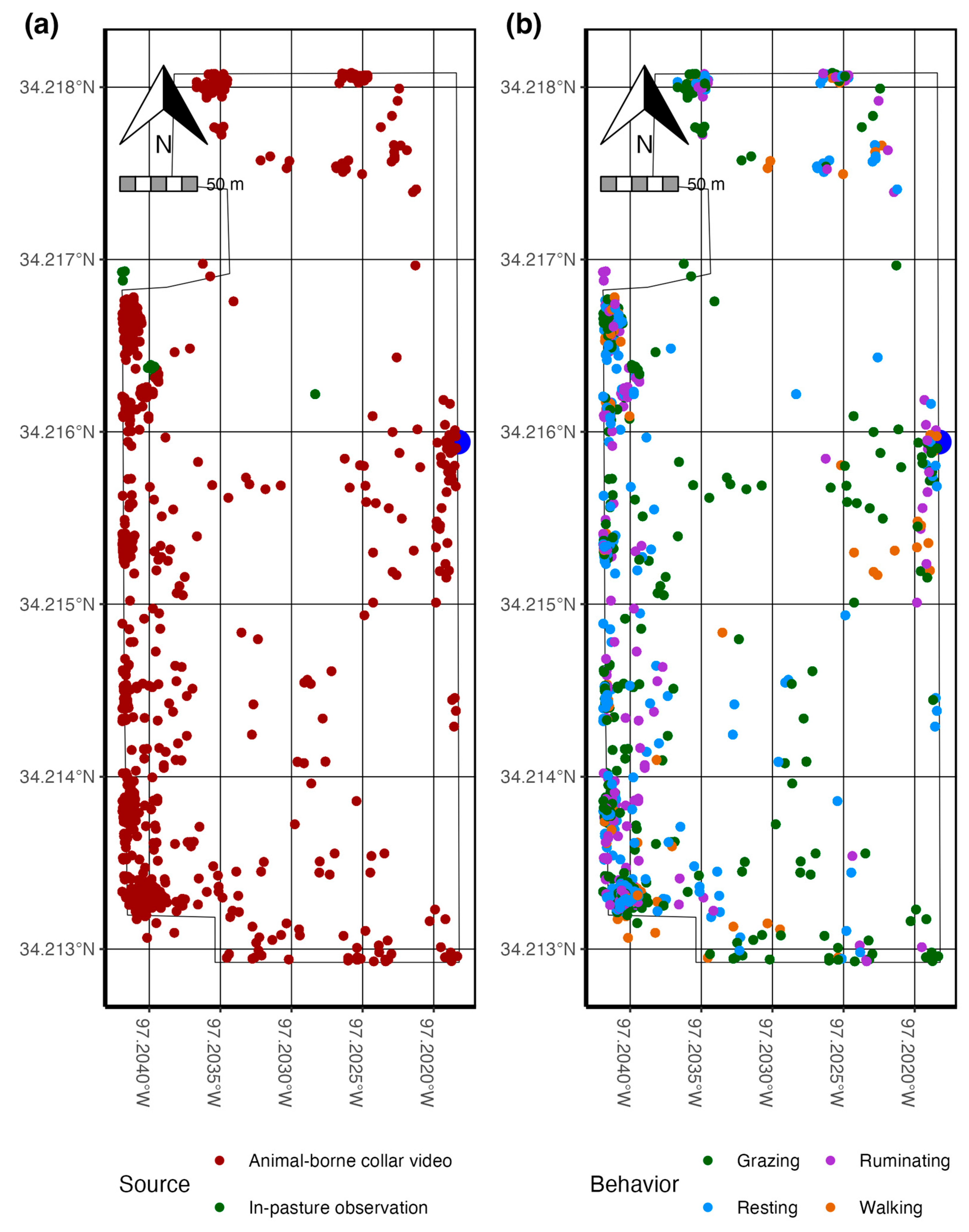

2.1. Animals and Equipment

2.2. Behavior Collection

2.3. Data Management

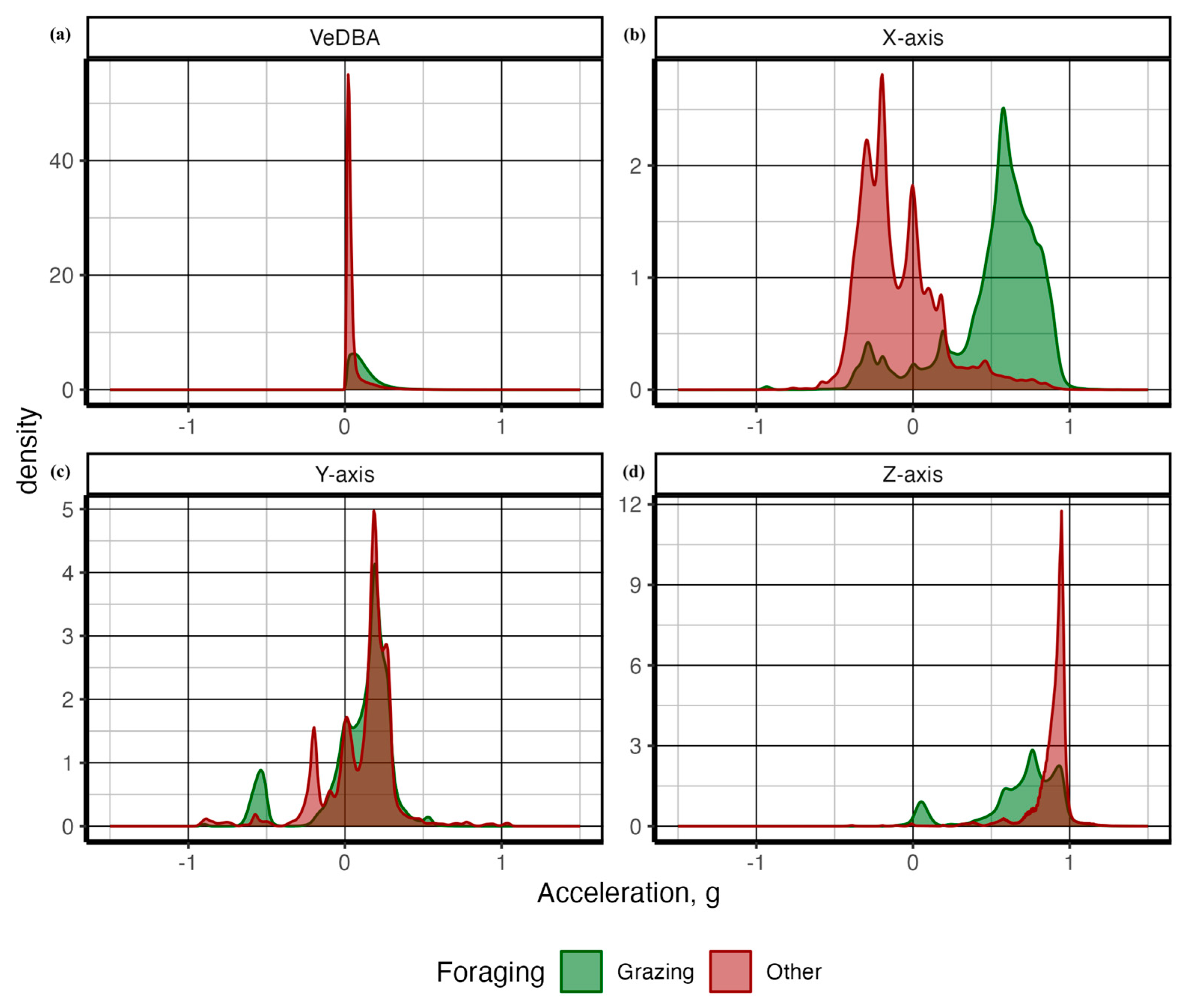

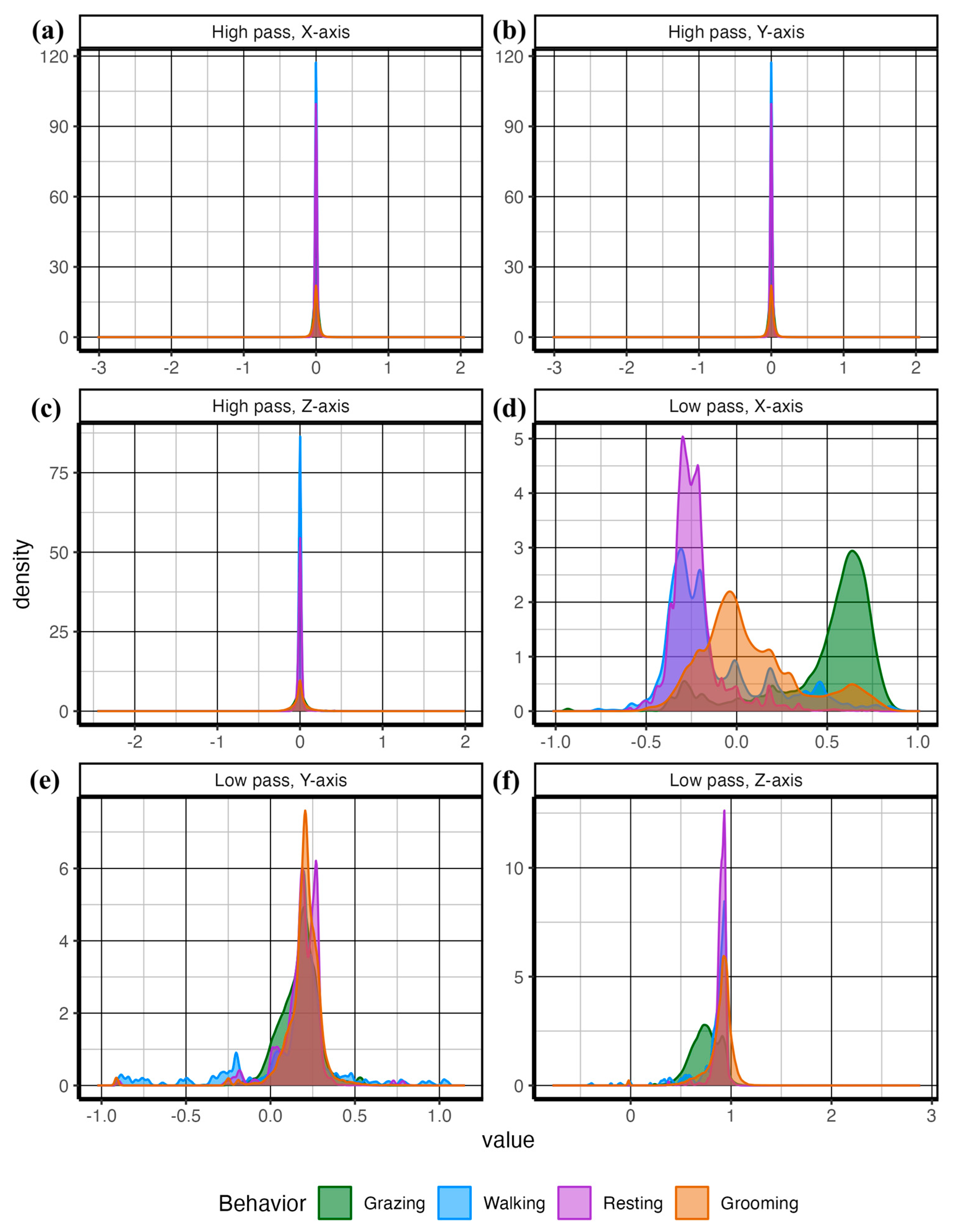

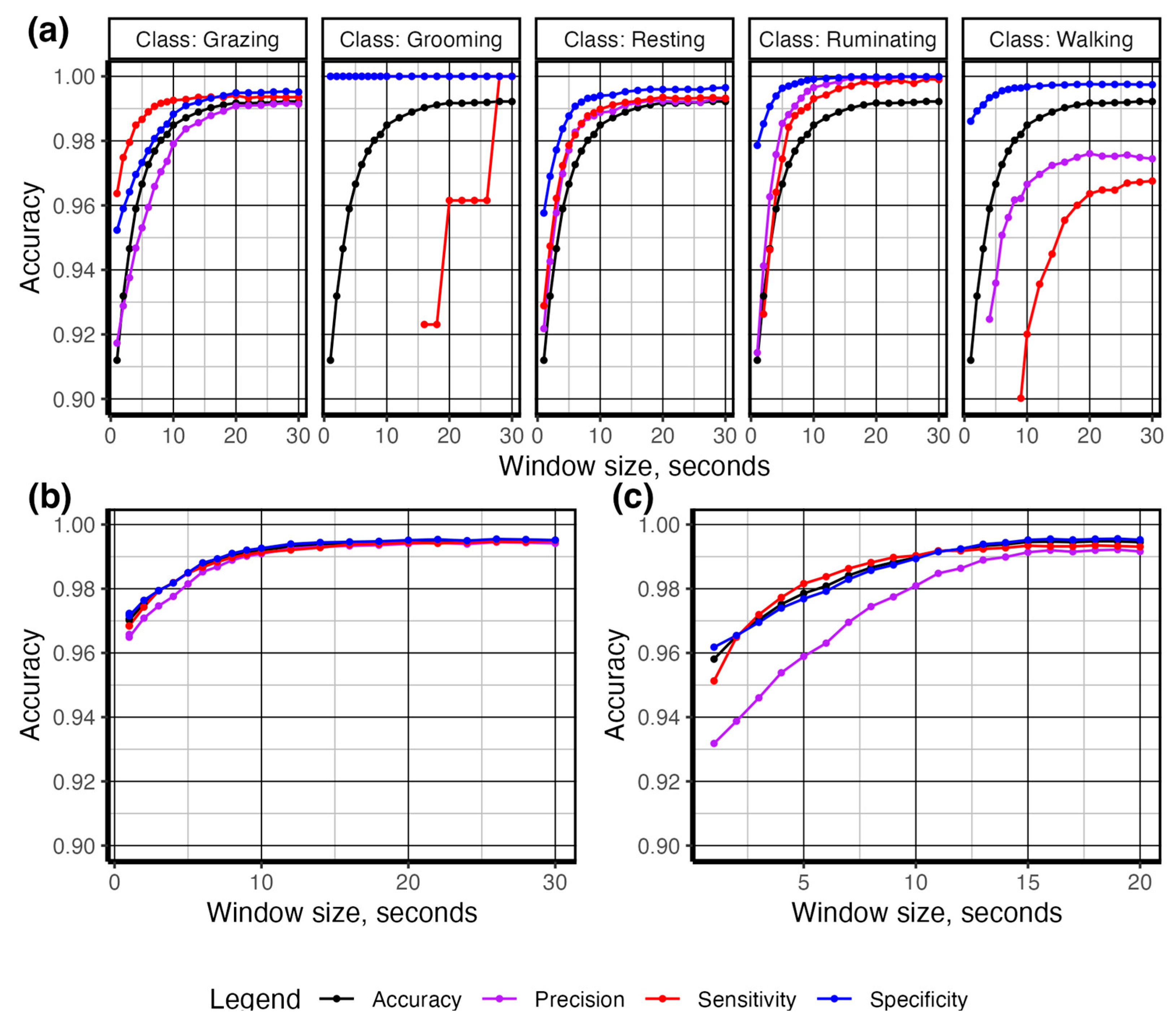

2.4. Data Analysis and Model Fitting

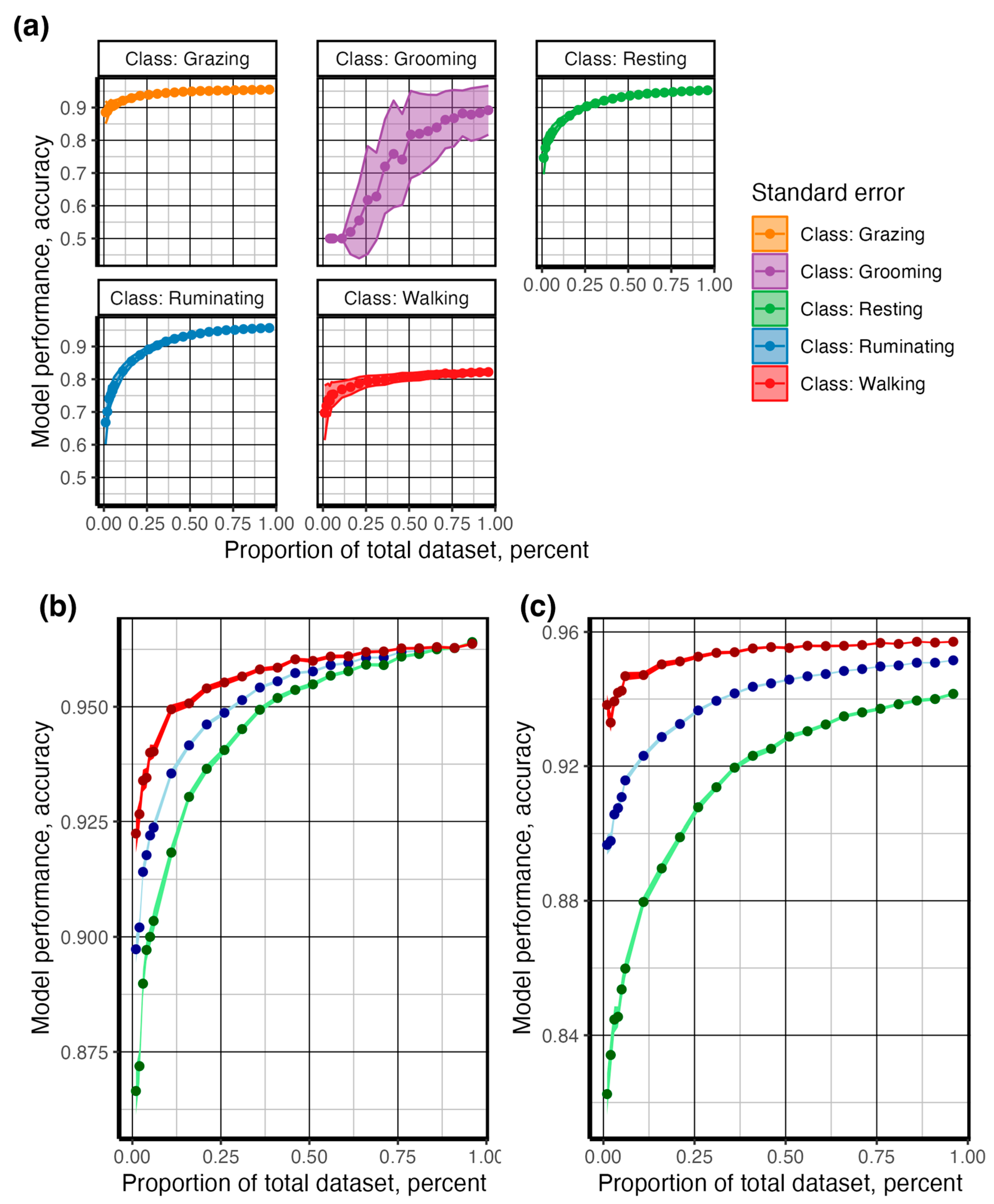

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. System Report

| Behavior | Description |
|---|---|
| Grazing | Foraging for grass with head down. |
| Walking | Minimum of 2 steps forward, with each stride initiating a 1, 2, 3, 4 pattern. |
| Resting | Animal idle, either standing or lying, with minimal head and foot movement. |
| Ruminating | Regurgitating a bolus, chewing, and re-swallowing; may be performed either standing or lying. |
| Grooming | Animal licking, scratching, or shooing flies from itself (personal grooming), or licking or scratching other animals (social grooming). |

| Type | Accelerometer Features |
|---|---|
| Raw acceleration | Acceleration X, Y, Z, g |
| Smoothed acceleration | Mean acceleration X, Y, Z, g |
| | Median acceleration X, Y, Z, g |
| | Standard deviation of acceleration X, Y, Z, g |
| | Minimum acceleration X, Y, Z, g |
| | Maximum acceleration X, Y, Z, g |
| Acceleration vectors | Dynamic acceleration X, Y, Z, g |
| | Overall dynamic body acceleration (ODBA) |
| | Vector of dynamic body acceleration (VeDBA) |
| | Mean ODBA and VeDBA |
| | Median ODBA and VeDBA |
| | Standard deviation ODBA and VeDBA |
| | Minimum ODBA and VeDBA |
| | Maximum ODBA and VeDBA |
| Raw magnetometry | Magnetometry X, Y, Z, geomagnetism |
| Smoothed magnetometry | Mean geomagnetism X, Y, Z, geomagnetism |
| | Median geomagnetism X, Y, Z |
| | Standard deviation geomagnetism X, Y, Z, geomagnetism |
| | Minimum geomagnetism X, Y, Z, geomagnetism |
| | Maximum geomagnetism X, Y, Z, geomagnetism |
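
The acceleration-vector features listed in the table can be illustrated with a minimal sketch. It uses the commonly cited definitions: dynamic acceleration is the raw signal minus a running-mean estimate of static acceleration, ODBA is the sum of the absolute dynamic components, and VeDBA is their Euclidean norm. The function names, the centered running mean, and the 5-sample window are assumptions made for illustration; the text here does not state the window length or the smoothing method the authors used.

```python
import math
from statistics import fmean


def running_mean(values, window):
    """Centered running mean; the window shrinks near the ends of the series."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(fmean(values[lo:hi]))
    return smoothed


def dynamic_body_acceleration(x, y, z, window=5):
    """Return per-sample (ODBA, VeDBA) lists from raw X, Y, Z acceleration in g.

    `window` is a hypothetical smoothing length in samples, not a value
    taken from the paper.
    """
    dynamic = []
    for axis in (x, y, z):
        static = running_mean(axis, window)  # posture/gravity estimate
        dynamic.append([raw - s for raw, s in zip(axis, static)])
    dx, dy, dz = dynamic
    odba = [abs(a) + abs(b) + abs(c) for a, b, c in zip(dx, dy, dz)]
    vedba = [math.sqrt(a * a + b * b + c * c) for a, b, c in zip(dx, dy, dz)]
    return odba, vedba


# Illustrative input: a single spike on X, flat Y, constant 1 g on Z.
odba, vedba = dynamic_body_acceleration(
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0, 1.0],
)
```

The windowed mean, median, standard deviation, minimum, and maximum rows of the table would then be summaries of these per-sample series over whatever window the analysis uses. Because VeDBA is a Euclidean norm and ODBA is a sum of absolute values, VeDBA never exceeds ODBA for the same sample.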

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Parsons, I.L.; Karisch, B.B.; Stone, A.E.; Webb, S.L.; Norman, D.A.; Street, G.M. Machine Learning Methods and Visual Observations to Categorize Behavior of Grazing Cattle Using Accelerometer Signals. Sensors 2024, 24, 3171. https://doi.org/10.3390/s24103171
