Towards Generating Authentic Human-Removed Pictures in Crowded Places Using a Few-Second Video

Abstract

:1. Introduction

2. Related Work

2.1. Object Removal

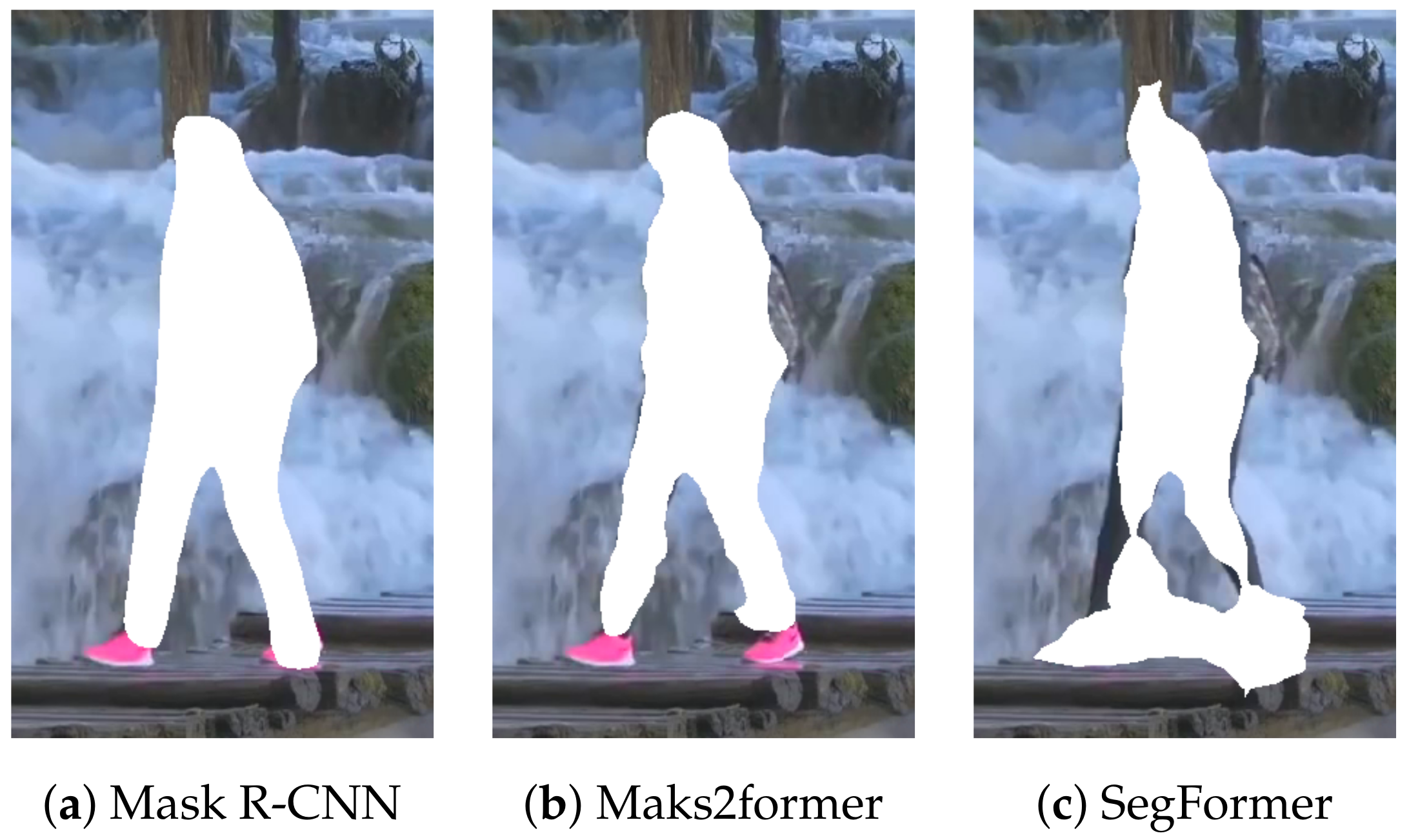

2.2. Object Detection and Segmentation

2.3. Image Inpainting

2.4. Key Differences from Prior Works

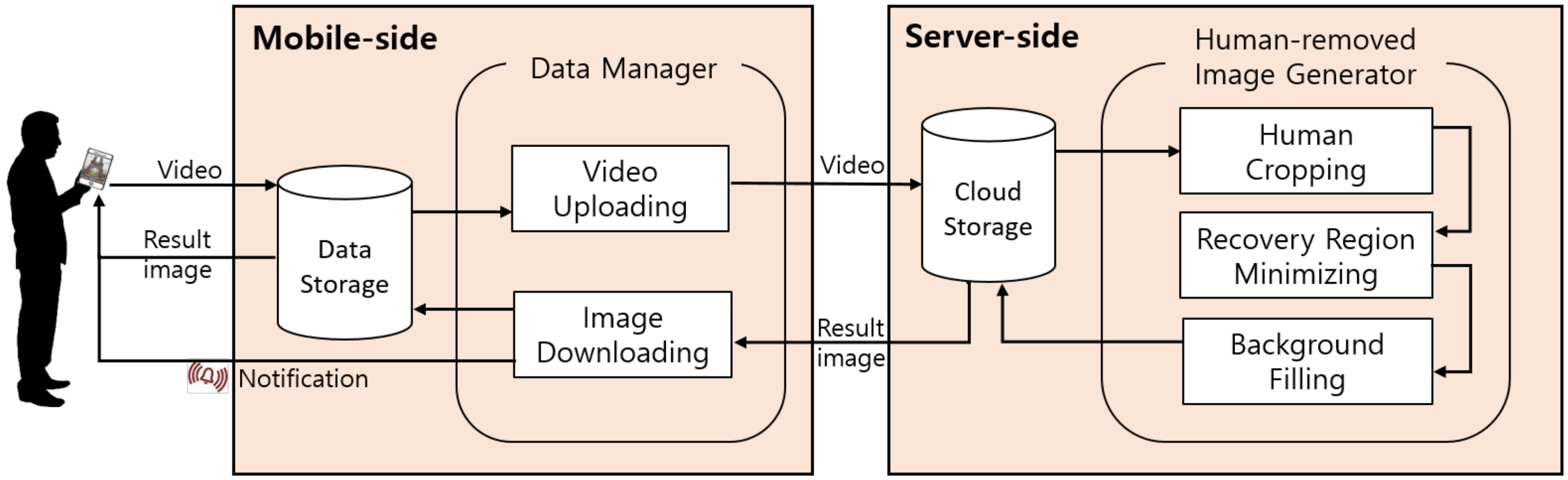

3. System Overview

3.1. Design Considerations

- High-quality image generation: The system should be able to generate satisfactory images for users. To achieve this, it should be able to accurately segment and crop human-related areas and then naturally restore the cropped areas. Additionally, the system should support resolutions of Full High Definition (FHD) or higher.

- Low user burden: The system should be able to produce desired results without imposing any additional burdens on users, such as manually selecting areas for removal or being involved in the image restoration process. It should automatically identify areas for removal and process images to achieve this.

3.2. The Proposed Approach

- By maximizing the utilization of actual pixel data from image frames, it is possible to minimize the use of restored image pixels. This improves the quality of the resulting images.

- By reducing the need for high-cost recovery operations, it is possible to decrease the computational resources required even though it should handle multiple frames.

3.3. System Architecture

3.4. System Scope and Limitations

4. System Design and Implementation

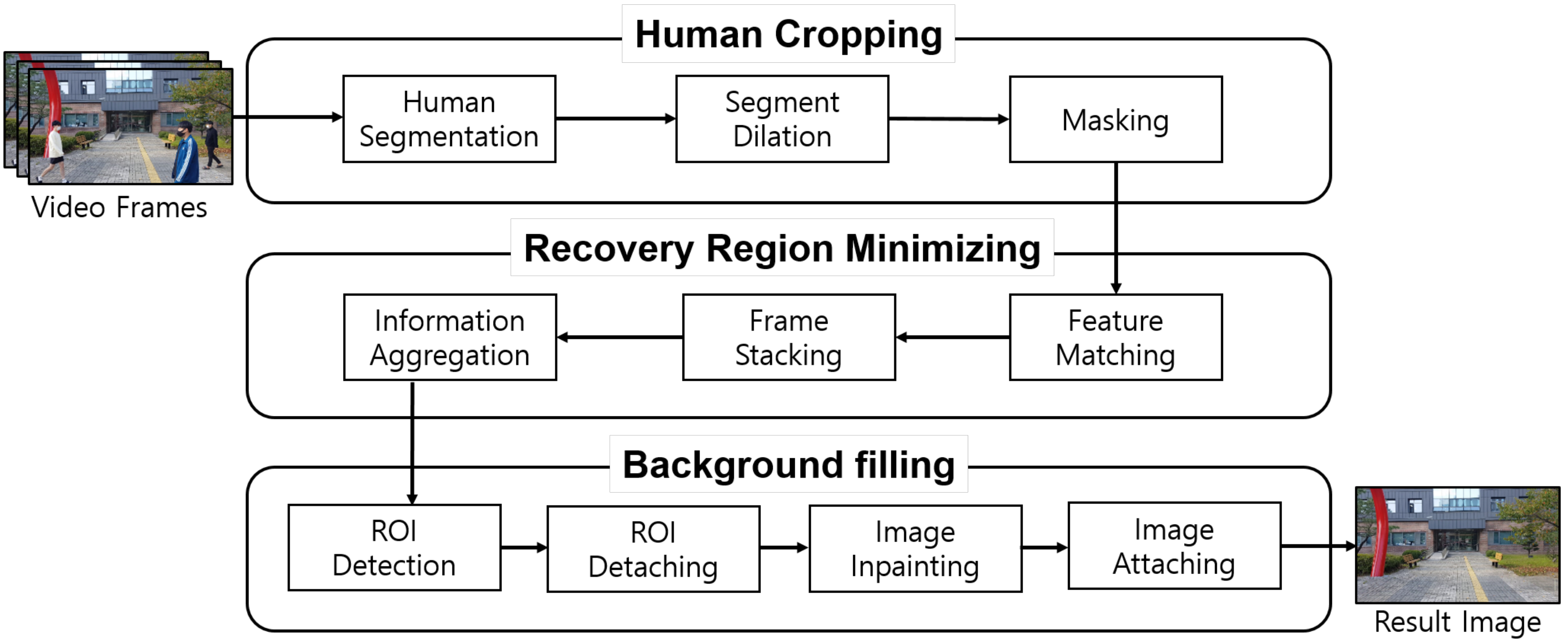

4.1. Processing Pipeline Overview

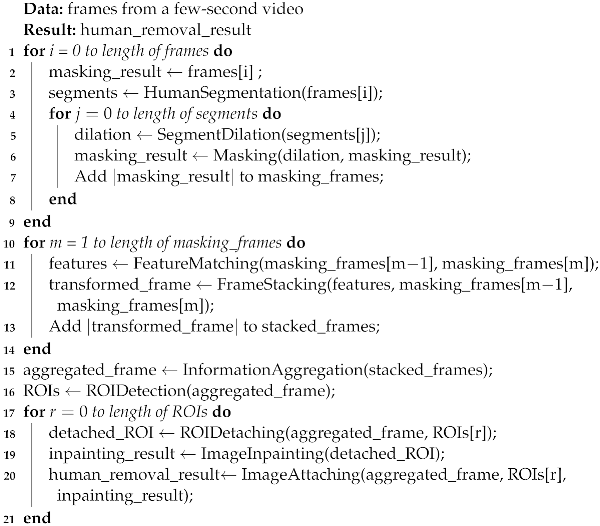

| Algorithm 1: Human removal image processing |

|

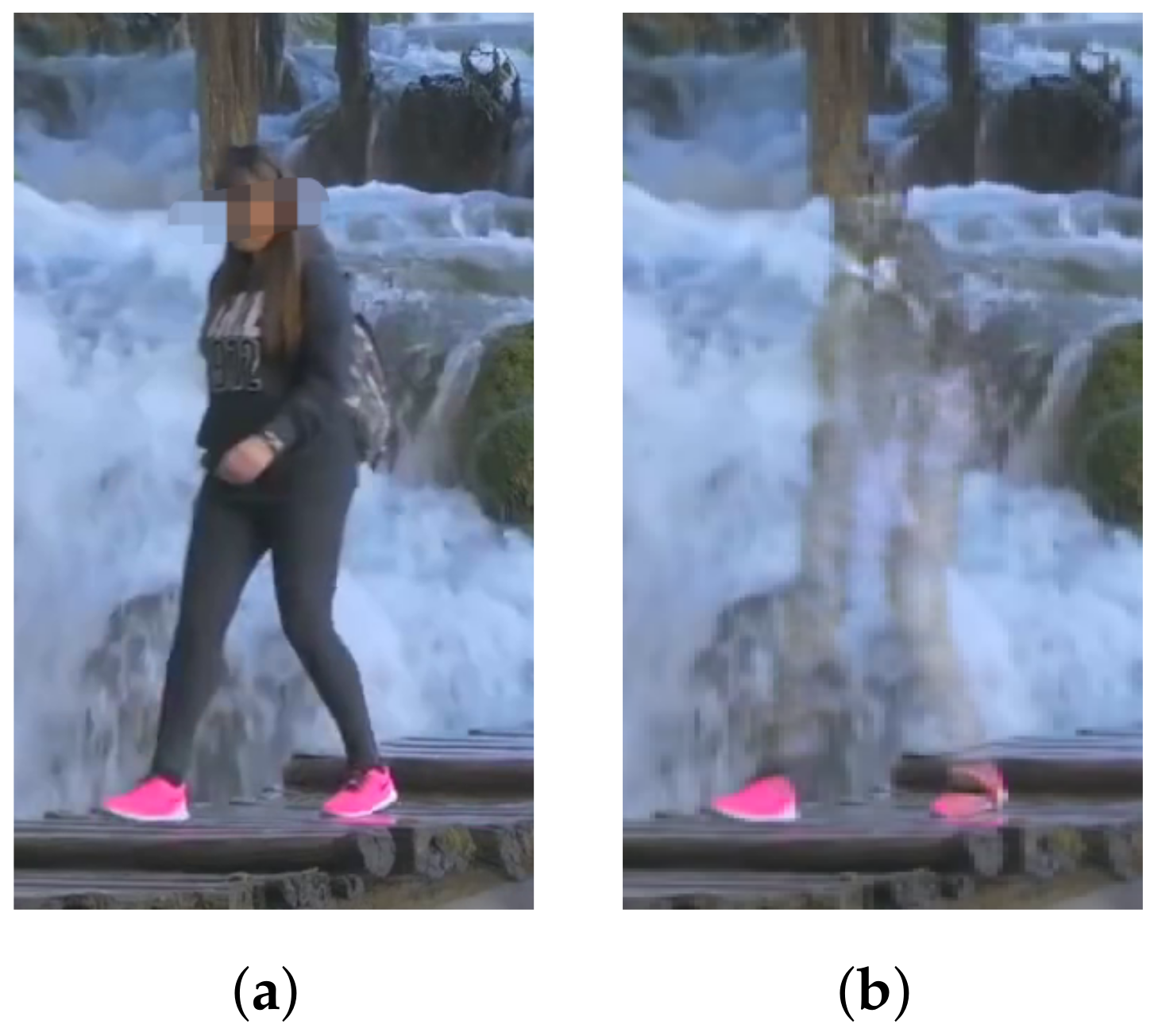

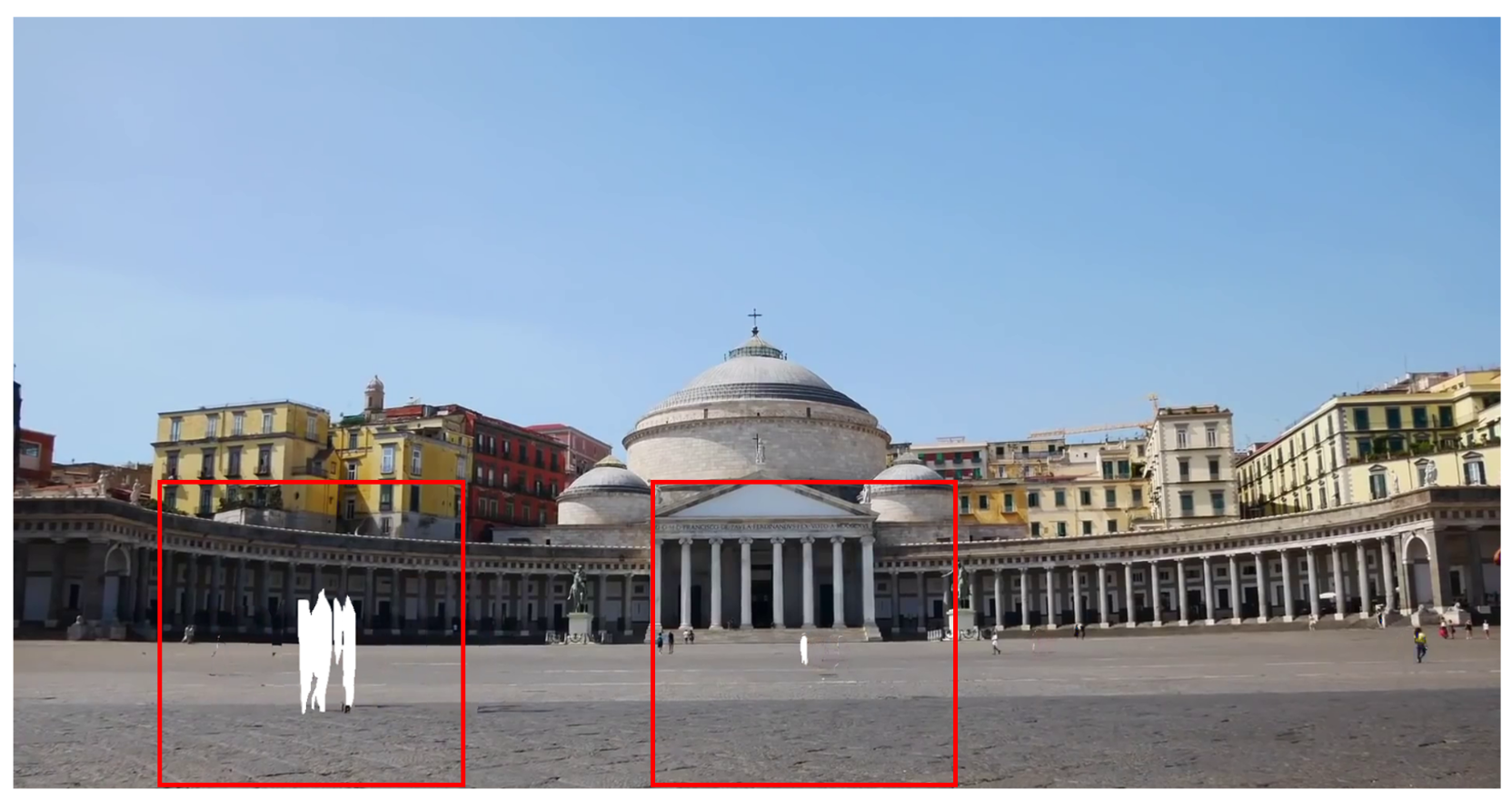

4.2. Human Cropping

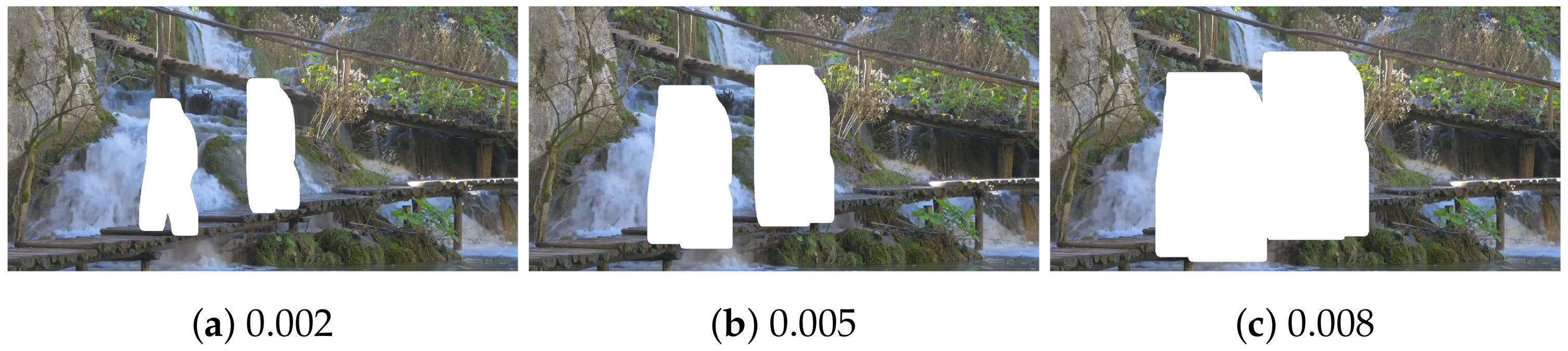

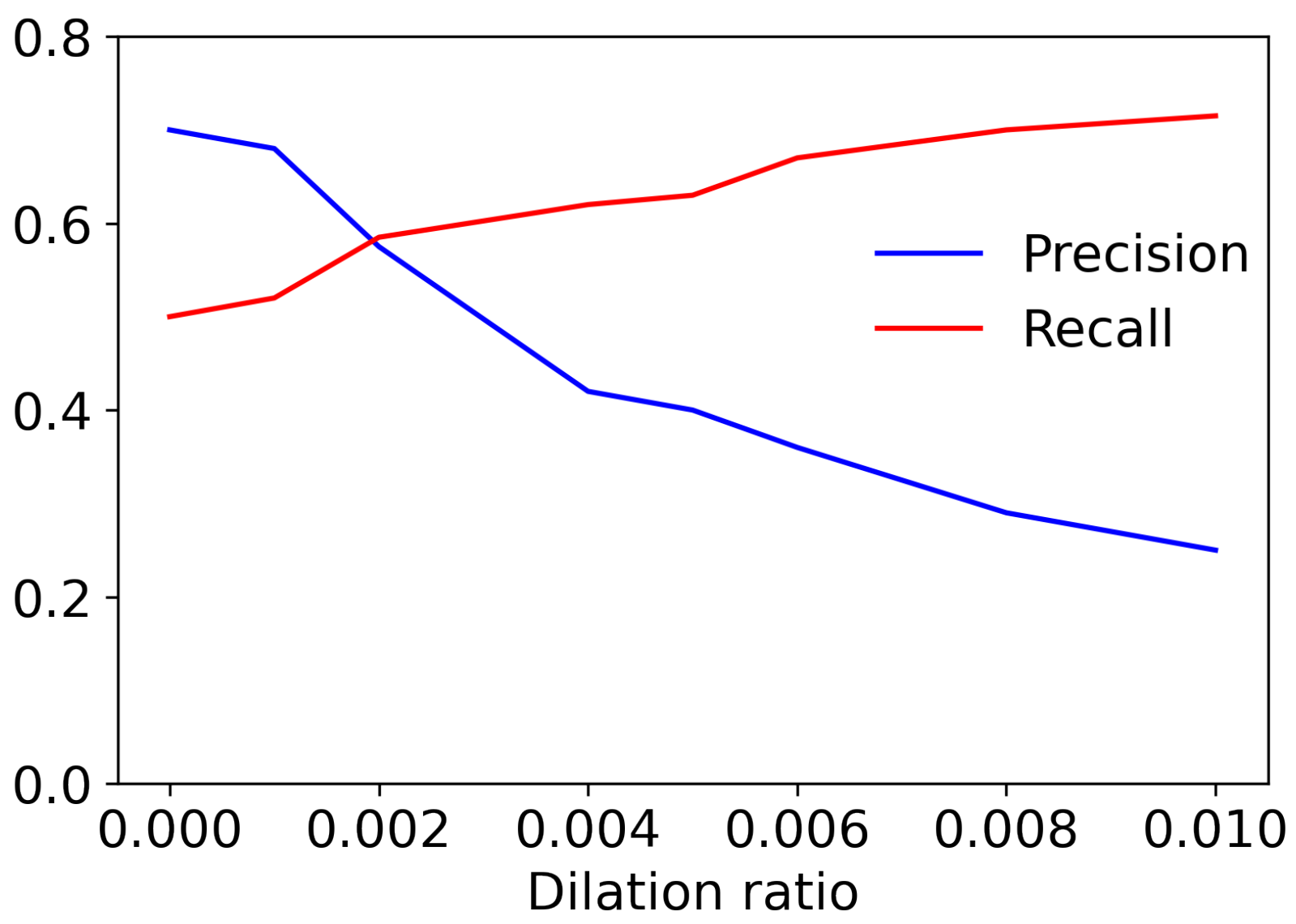

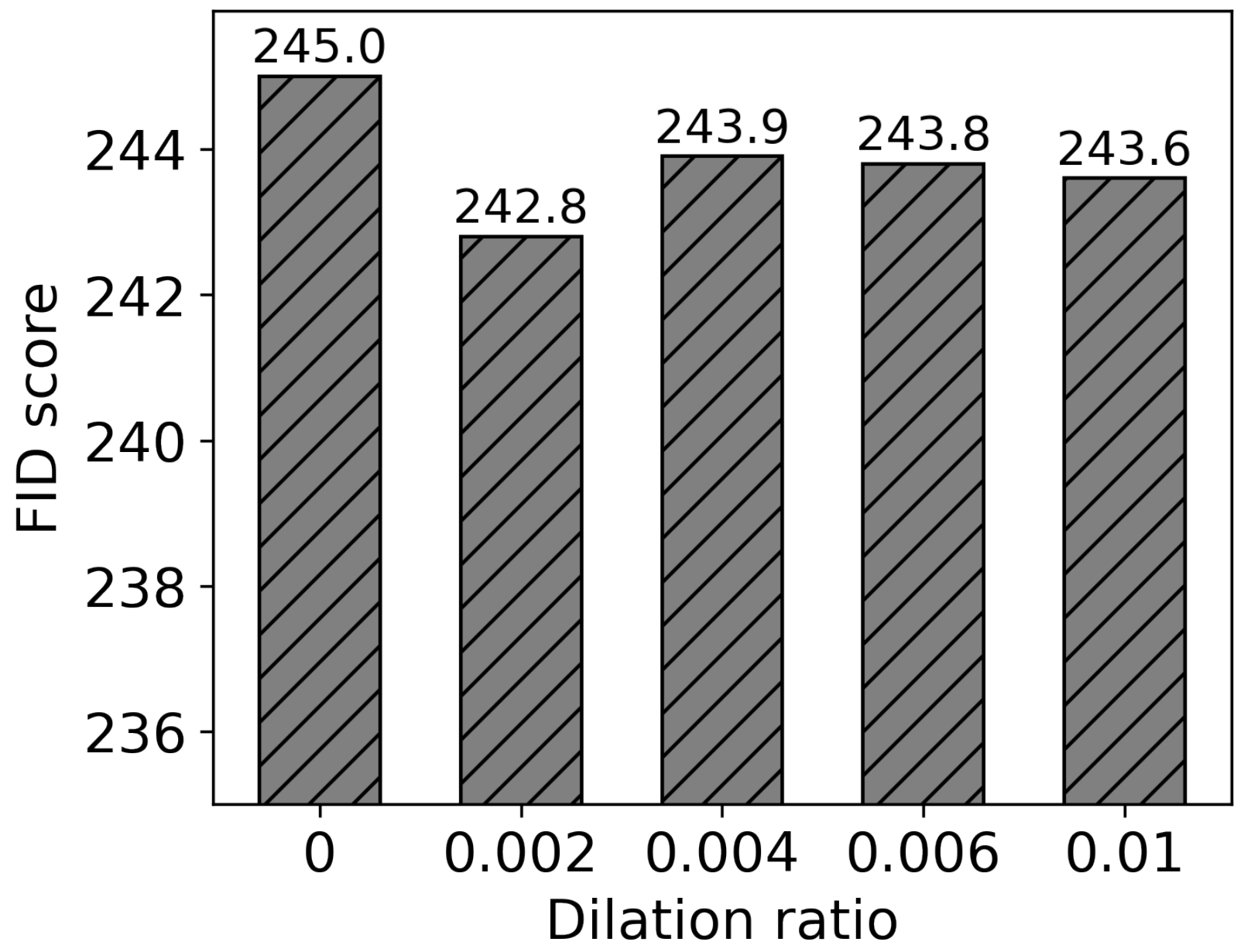

4.3. Recovery Region Minimizing

4.4. Background Filling

5. Evaluation

5.1. Experimental Setup

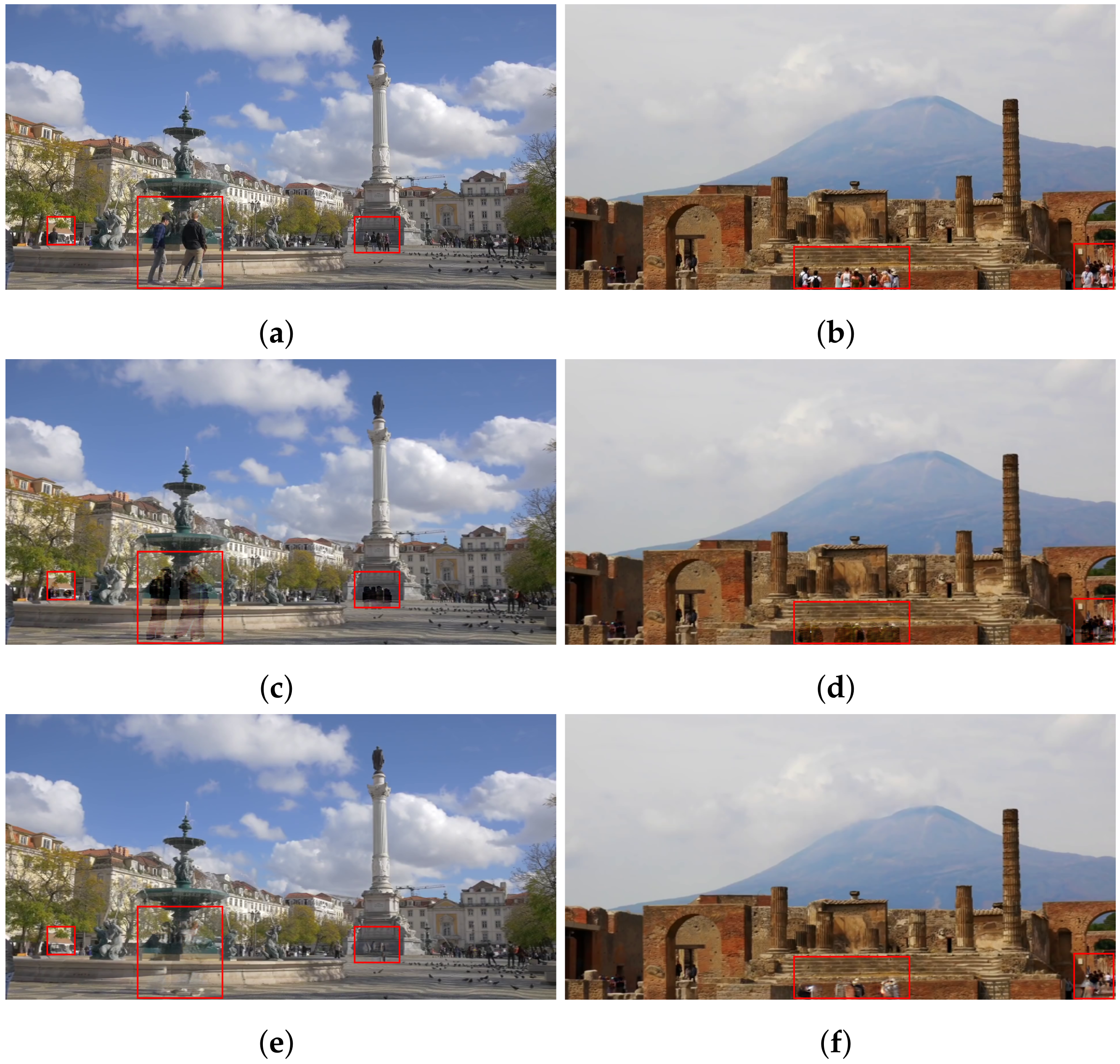

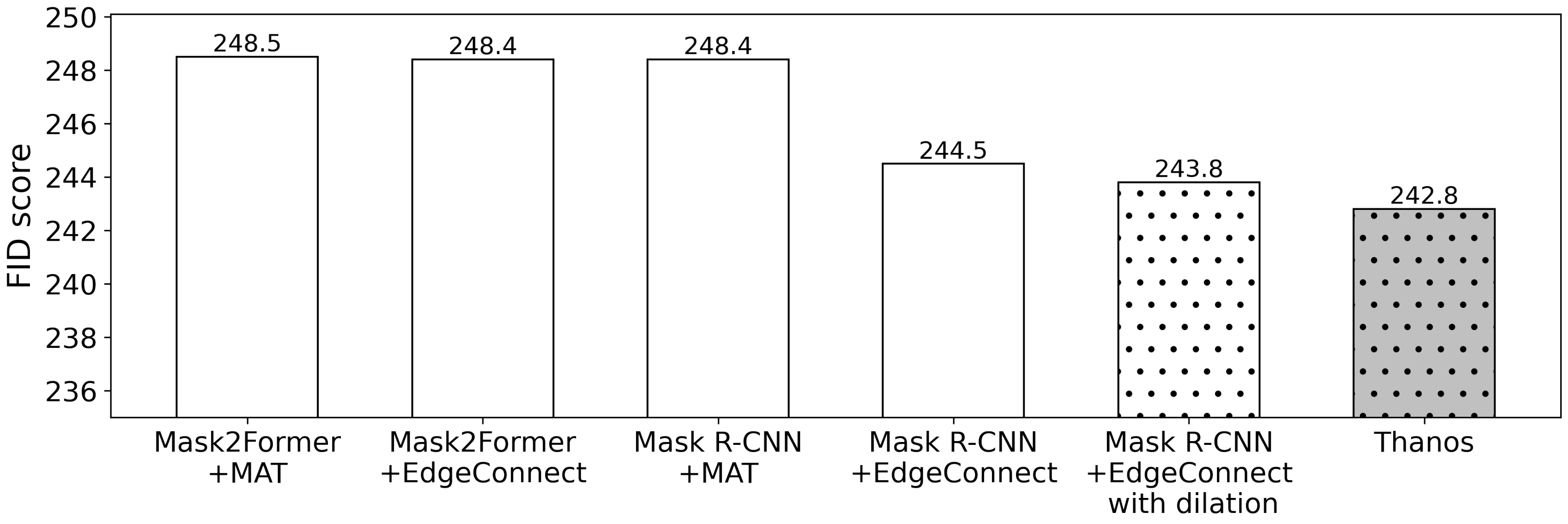

5.2. Thanos Performance

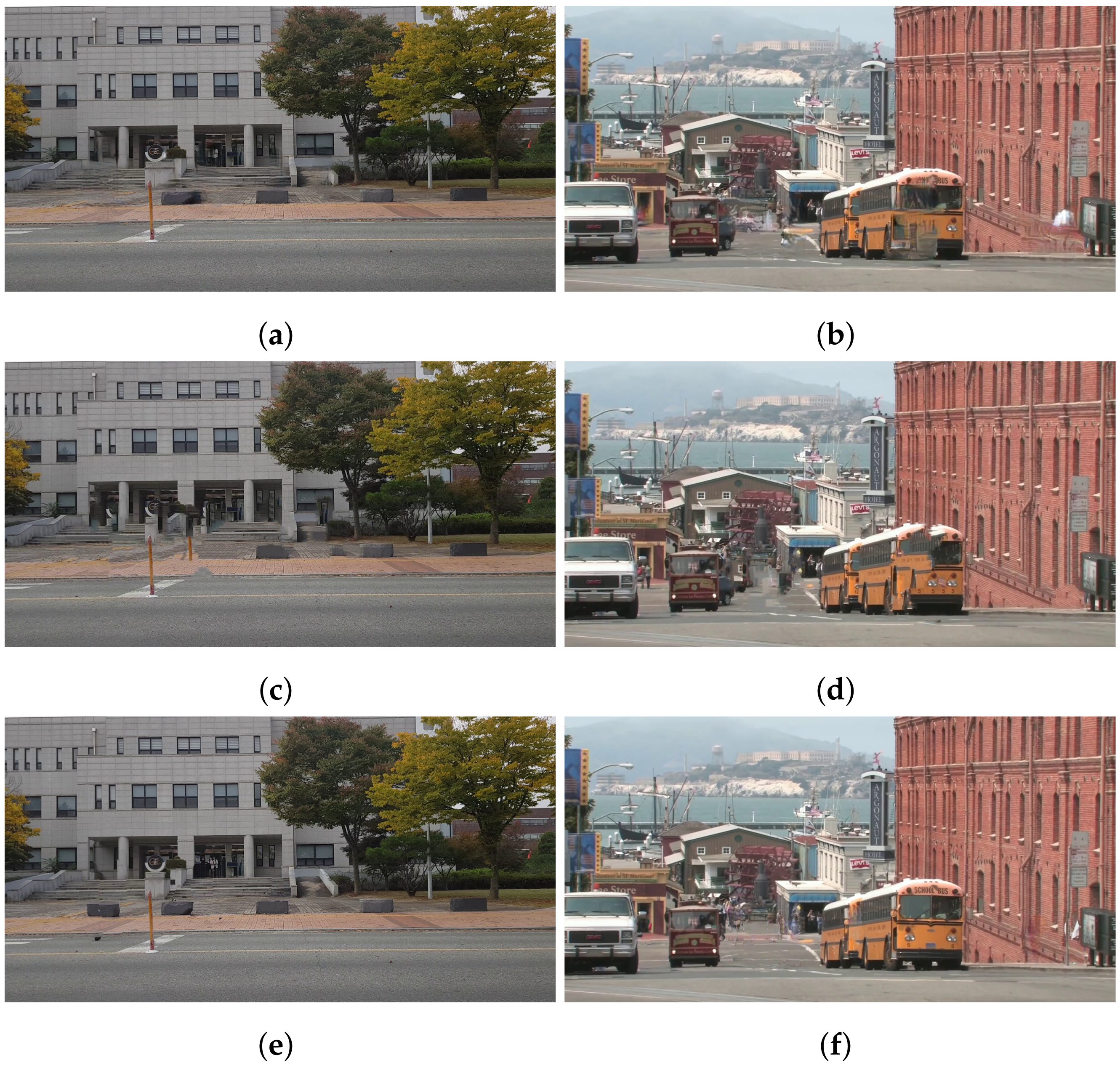

5.2.1. Image Quality

5.2.2. Processing Latency

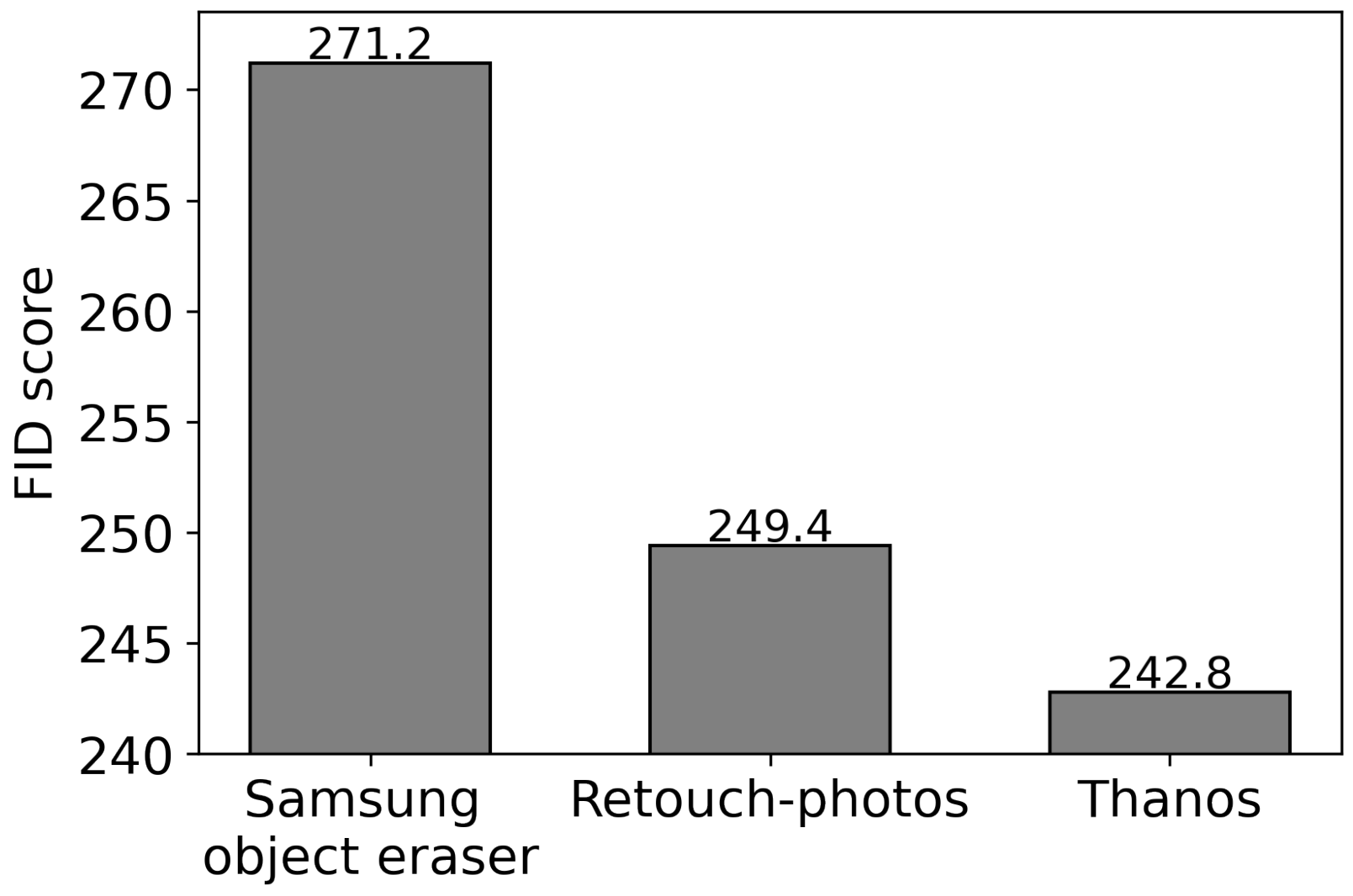

5.3. Comparison with Existing Applications

6. Limitation and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Retouch-Phothos. Available online: https://play.google.com/store/apps/details?id=royaln.Removeunwantedcontent (accessed on 5 April 2024).

- Spectre Camera. Available online: https://spectre.cam/ (accessed on 5 April 2024).

- Samsung Object Eraser. Available online: https://www.samsung.com/latin_en/support/mobile-devices/how-to-remove-unwanted-objects-from-photos-on-your-galaxy-phone/ (accessed on 5 April 2024).

- Lee, J. Deep Learning Based Human Removal and Background Synthesis Application. Master’s Thesis, Korea University of Technology and Education, Cheonan, Republic of Korea, 2021. [Google Scholar]

- Pitaksarit, S. Diminished Reality Based on Texture Reprojection of Backgrounds, Segmented with Deep Learning. Master’s Thesis, Nara Institute of Science and Technology, Ikoma, Japan, 2016. [Google Scholar]

- Tajbakhsh, N.; Jeyaseelan, L.; Li, Q.; Chiang, J.N.; Wu, Z.; Ding, X. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med. Image Anal. 2020, 63, 101693. [Google Scholar] [CrossRef] [PubMed]

- Qin, Z.; Zeng, Q.; Zong, Y.; Xu, F. Image inpainting based on deep learning: A review. Displays 2021, 69, 102028. [Google Scholar] [CrossRef]

- Xiang, H.; Zou, Q.; Nawaz, M.A.; Huang, X.; Zhang, F.; Yu, H. Deep learning for image inpainting: A survey. Pattern Recognit. 2023, 134, 109046. [Google Scholar] [CrossRef]

- Jam, J.; Kendrick, C.; Walker, K.; Drouard, V.; Hsu, J.G.-S.; Yap, M.H. A comprehensive review of past and present image inpainting methods. Comput. Vis. Image Underst. 2021, 203, 103147. [Google Scholar] [CrossRef]

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6629–6640. [Google Scholar]

- Shetty, R.R.; Fritz, M.; Schiele, B. Adversarial scene editing: Automatic object removal from weak supervision. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December; pp. 7717–7727.

- Dhamo, H.; Farshad, A.; Laina, I.; Navab, N.; Hager, G.D.; Tombari, F.; Rupprecht, C. Semantic image manipulation using scene graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 5213–5222. [Google Scholar]

- Din, N.U.; Javed, K.; Bae, S.; Yi, J. A novel GAN-based network for unmasking of masked face. IEEE Access 2020, 8, 44276–44287. [Google Scholar] [CrossRef]

- Hosen, M.; Islam, M. HiMFR: A Hybrid Masked Face Recognition Through Face Inpainting. arXiv 2022, arXiv:2209.08930. [Google Scholar]

- Sola, S.; Gera, D. Unmasking Your Expression: Expression-Conditioned GAN for Masked Face Inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5908–5916. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738. [Google Scholar]

- Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464. [Google Scholar] [CrossRef] [PubMed]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090. [Google Scholar]

- Cheng, B.; Misra, I.; Schwing, A.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299. [Google Scholar]

- Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.; Shum, H. Mask dino: Towards a unified transformer-based framework for object detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3041–3050. [Google Scholar]

- Jain, J.; Li, J.; Chiu, M.; Hassani, A.; Orlov, N.; Shi, H. Oneformer: One trans-former to rule universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2989–2998. [Google Scholar]

- Wu, J.; Ji, W.; Fu, H.; Xu, M.; Jin, Y.; Xu, Y. MedSegDiff-V2: Diffusion-Based Medical Image Segmentation with Transformer. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6030–6038. [Google Scholar] [CrossRef]

- Bi, Q.; You, S.; Gevers, T. Learning content-enhanced mask transformer for domain generalized urban-scene segmentation. AAAI Conf. Artif. Intell. 2024, 38, 819–827. [Google Scholar] [CrossRef]

- Telea, A. An image inpainting technique based on the fast marching method. J. Graph. Tools 2004, 9, 23–34. [Google Scholar] [CrossRef]

- Bertalmio, M.; Bertozzi, A.L.; Sapiro, G. Navier-stokes, fluid dynamics, and image and video inpainting. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; Volume 1, pp. 355–362. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Generative image inpainting with contextual attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5505–5514. [Google Scholar]

- Nazeri, K.; Ng, E.; Joseph, T.; Qureshi, F.Z.; Ebrahimi, M. Edgeconnect: Generative image inpainting with adversarial edge learning. arXiv 2019, arXiv:1901.00212. [Google Scholar]

- Zheng, H.; Lin, Z.; Lu, J.; Cohen, S.; Shechtman, E.; Barnes, C.; Zhang, J.; Xu, N.; Amirghodsi, S.; Luo, J. CM-GAN: Image Inpainting with Cascaded Modulation GAN and Object-Aware Training. arXiv 2022, arXiv:2203.11947. [Google Scholar]

- Li, W.; Lin, Z.; Zhou, K.; Qi, L.; Wang, Y.; Jia, J. Mat: Mask-aware transformer for large hole image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10758–10768. [Google Scholar]

- Shamsolmoali, P.; Zareapoor, M.; Granger, E. TransInpaint: Trans-former-based Image Inpainting with Context Adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 849–858. [Google Scholar]

- Ko, K.; Kim, C. Continuously masked transformer for image inpainting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 13169–13178. [Google Scholar]

- Wu, J.; Feng, Y.; Xu, H.; Zhu, C.; Zheng, J. SyFormer: Structure-Guided Syner-gism Transformer for Large-Portion Image Inpainting. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6021–6029. [Google Scholar] [CrossRef]

- Wu, Z.; Sun, C.; Xuan, H.; Liu, G.; Yan, Y. WaveFormer: Wavelet Transformer for Noise-Robust Video Inpainting. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6180–6188. [Google Scholar] [CrossRef]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, present, and future of face recognition: A review. Electronics 2020, 9, 1188. [Google Scholar] [CrossRef]

- Shin, Y.; Sagong, M.; Yeo, Y.; Kim, S.; Ko, S. Pepsi++: Fast and lightweight network for image inpainting. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 252–265. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Xia, R.; Yang, K.; Zou, K. GCAM: Lightweight image inpainting via group convolution and attention mechanism. Int. J. Mach. Learn. Cybern. 2024, 15, 1815–1825. [Google Scholar] [CrossRef]

- Drolia, U.; Guo, K.; Tan, J.; Gandhi, R.; Narasimhan, P. Cachier: Edge-caching for recognition applications. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 276–286. [Google Scholar]

| Input | Output | Automatic Target Object Detection | Fine-Tuning Target Object Area | Recovery Region Minimizing | |

|---|---|---|---|---|---|

| Mask R-CNN [19] | Image | Semantic segmented image | Yes | No | N/A |

| SegFormer [22] | Image | Semantic segmented image | Yes | No | N/A |

| Mask2former [23] | Image | Semantic segmented image | Yes | No | N/A |

| Mask DINO [24] | Image | Semantic segmented image | Yes | No | N/A |

| OneFormer [25] | Image | Semantic segmented image | Yes | No | N/A |

| MedSegDiff [26] | Medical image | Semantic segmented image | Yes | No | N/A |

| CMFormer [27] | Image | Semantic segmented image | Yes | No | N/A |

| Jiahui et al. [31] | Damaged image | Recovered image | No | No | No |

| Edge-connect [32] | Damaged image | Recovered image | No | No | No |

| CM-GAN [33] | Damaged image | Recovered image | No | No | No |

| MAT [34] | Damaged image | Recovered image | No | No | No |

| TransInpaint [35] | Damaged image | Recovered image | No | No | No |

| CMT [36] | Damaged image | Recovered image | No | No | No |

| SyFormer [37] | Damaged image | Recovered image | No | No | No |

| WaveFormer [38] | Damaged video | Recovered video | No | No | No |

| Shetty et al. [11] | Image, text | Specific object-removed image | Yes | Yes | No |

| Dhamo et al. [12] | Image, text | Semantic manipulated image | Yes | No | No |

| Din et al. [13] | Image | Mask-removed face image | Yes | Yes | No |

| HiMFR [14] | Image | Mask-removed face image | Yes | No | No |

| Sola et al. [15] | Image, text | Mask-removed face image | Yes | No | No |

| Thanos (ours) | A few-second video | Human-removed image | Yes | Yes | Yes |

| Single Frame without Dilation | Single Frame with Dilation | Thanos | |

|---|---|---|---|

| Small | 257.0 | 257.4 | 254.7 |

| Large | 306.7 | 309.2 | 299.8 |

| CPU | Intel i7-7700 |

| GPU | NVIDIA Geforce RTX 2080Ti |

| RAM | 32 GB |

| Compiler | PyTorch 1.13.1 (with CUDA(10.2) + cuDNN) |

| Single Frame without Dilation (ms) | Thanos (ms) | |

|---|---|---|

| Small | 761 | 754 |

| Large | 644 | 663 |

| Samsung Object Eraser | Retouch-Photos | Thanos | |

|---|---|---|---|

| Small | 301.8 | 260.1 | 254.7 |

| Large | 311.1 | 306.6 | 299.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, J.; Lee, E.; Kang, S. Towards Generating Authentic Human-Removed Pictures in Crowded Places Using a Few-Second Video. Sensors 2024, 24, 3486. https://doi.org/10.3390/s24113486

Lee J, Lee E, Kang S. Towards Generating Authentic Human-Removed Pictures in Crowded Places Using a Few-Second Video. Sensors. 2024; 24(11):3486. https://doi.org/10.3390/s24113486

Chicago/Turabian StyleLee, Juhwan, Euihyeok Lee, and Seungwoo Kang. 2024. "Towards Generating Authentic Human-Removed Pictures in Crowded Places Using a Few-Second Video" Sensors 24, no. 11: 3486. https://doi.org/10.3390/s24113486