High-Density Electroencephalogram Facilitates the Detection of Small Stimuli in Code-Modulated Visual Evoked Potential Brain–Computer Interfaces

Abstract

1. Introduction

2. Materials and Methods

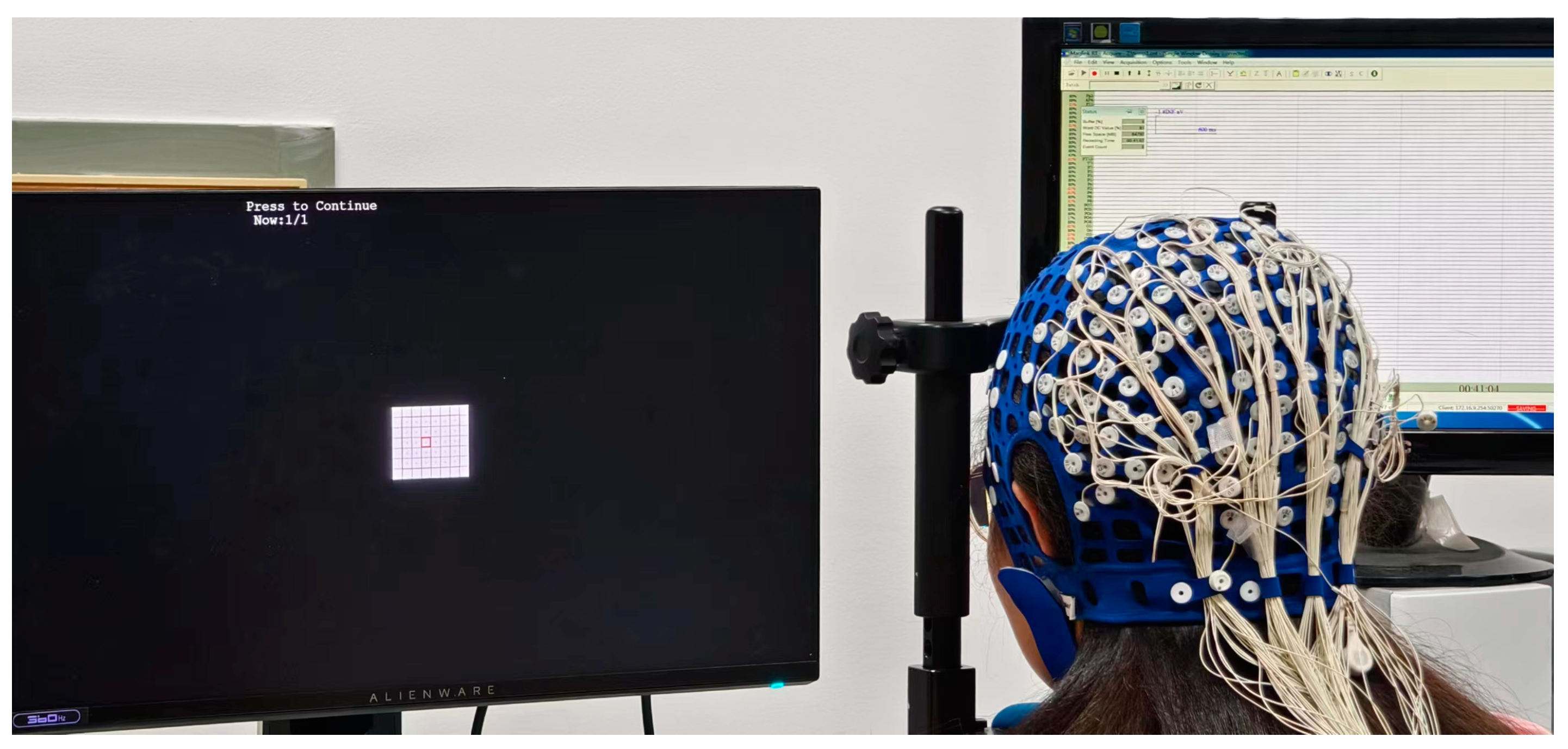

2.1. Experimental Environment

2.1.1. Subjects

2.1.2. Data Acquisition

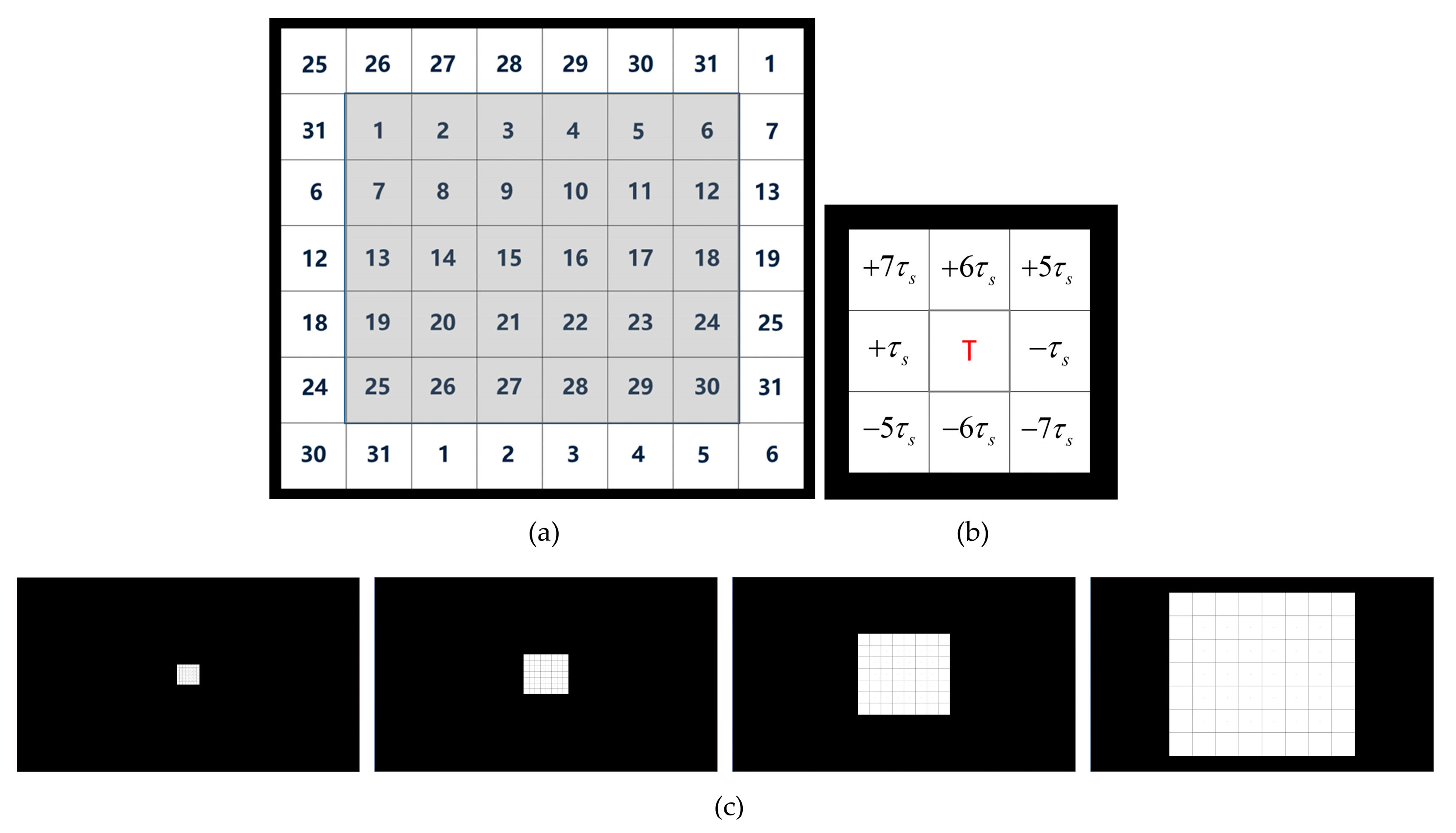

2.2. Target Modulation

2.3. Experimental Setup

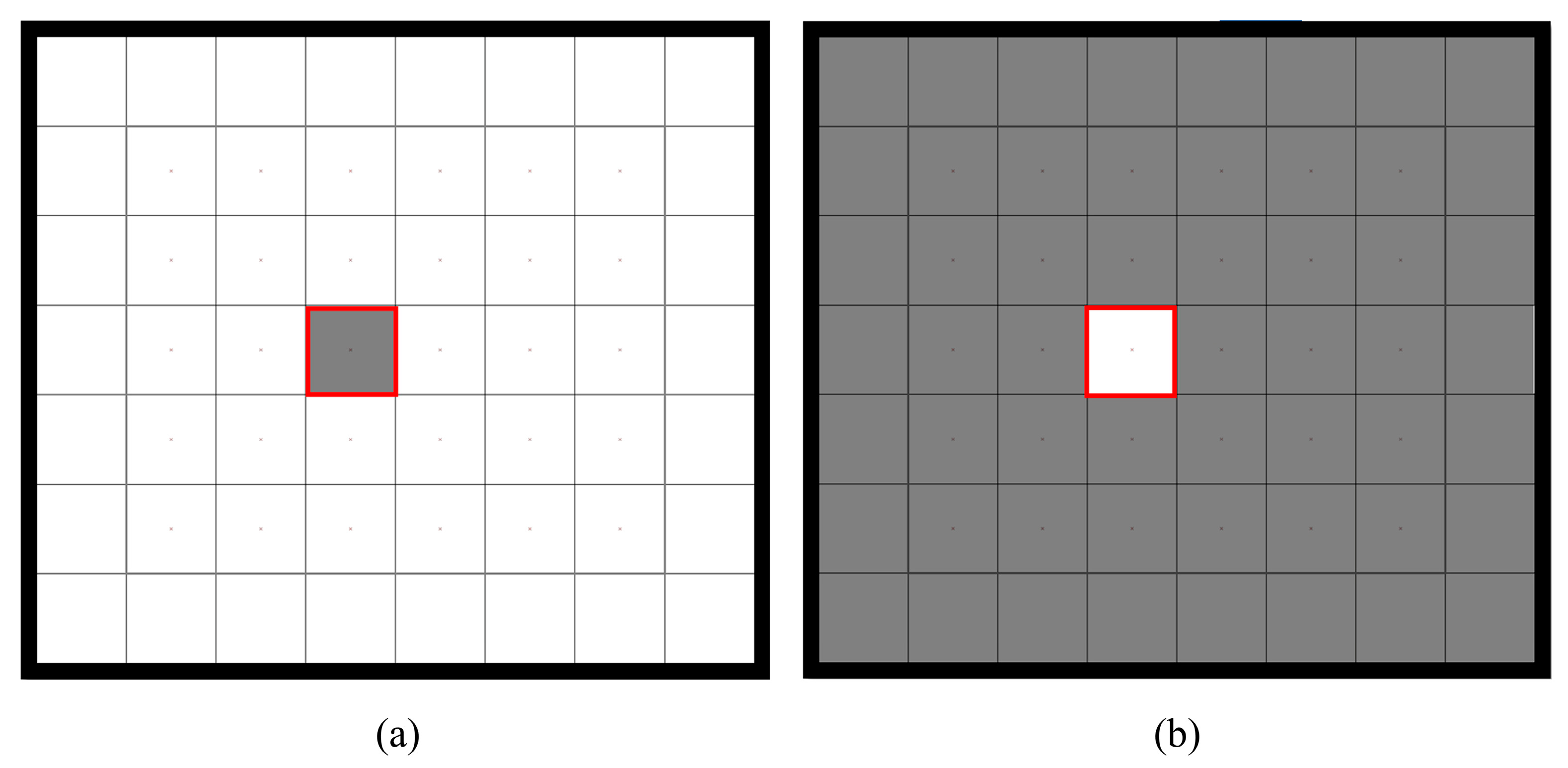

2.3.1. Offline Experiment

2.3.2. Online Experiment

2.4. Task-Discriminant Component Analysis

2.5. Data Analysis

2.5.1. Data Preprocessing

2.5.2. Performance Evaluation

2.5.3. Electrode Selection

3. Results

3.1. EEG Feature

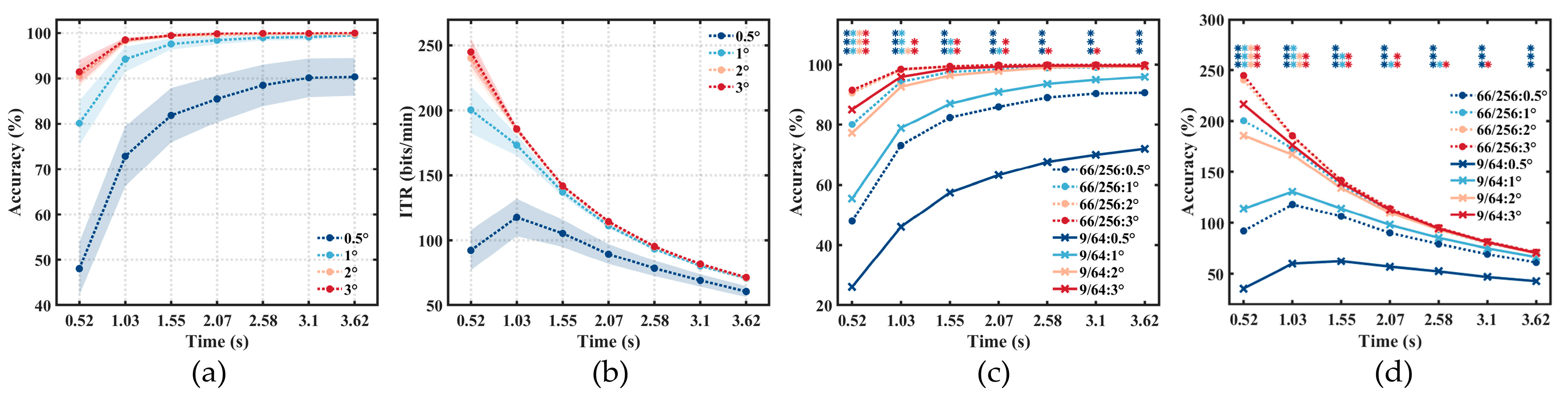

3.2. Offline Results

3.3. Online Results

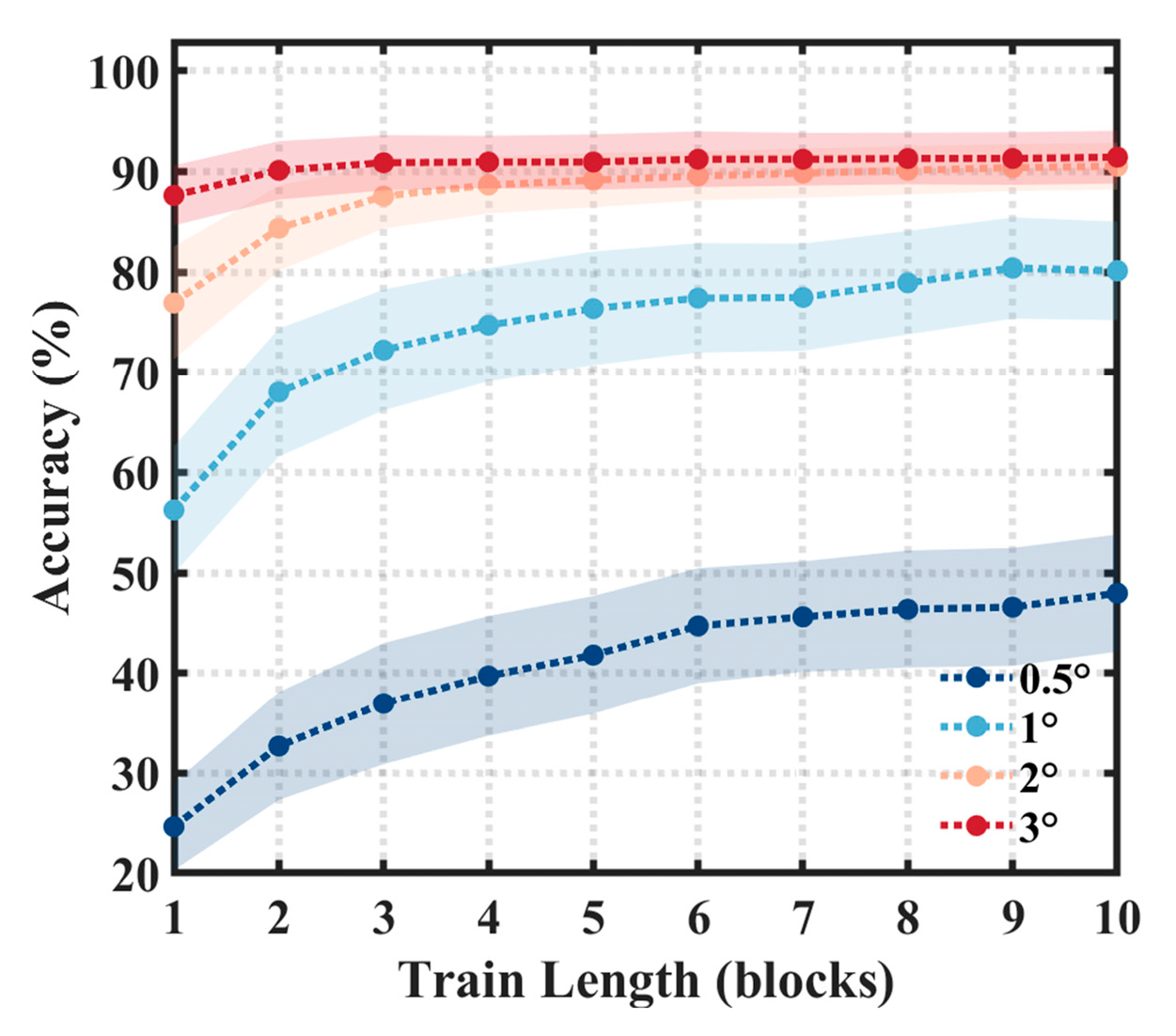

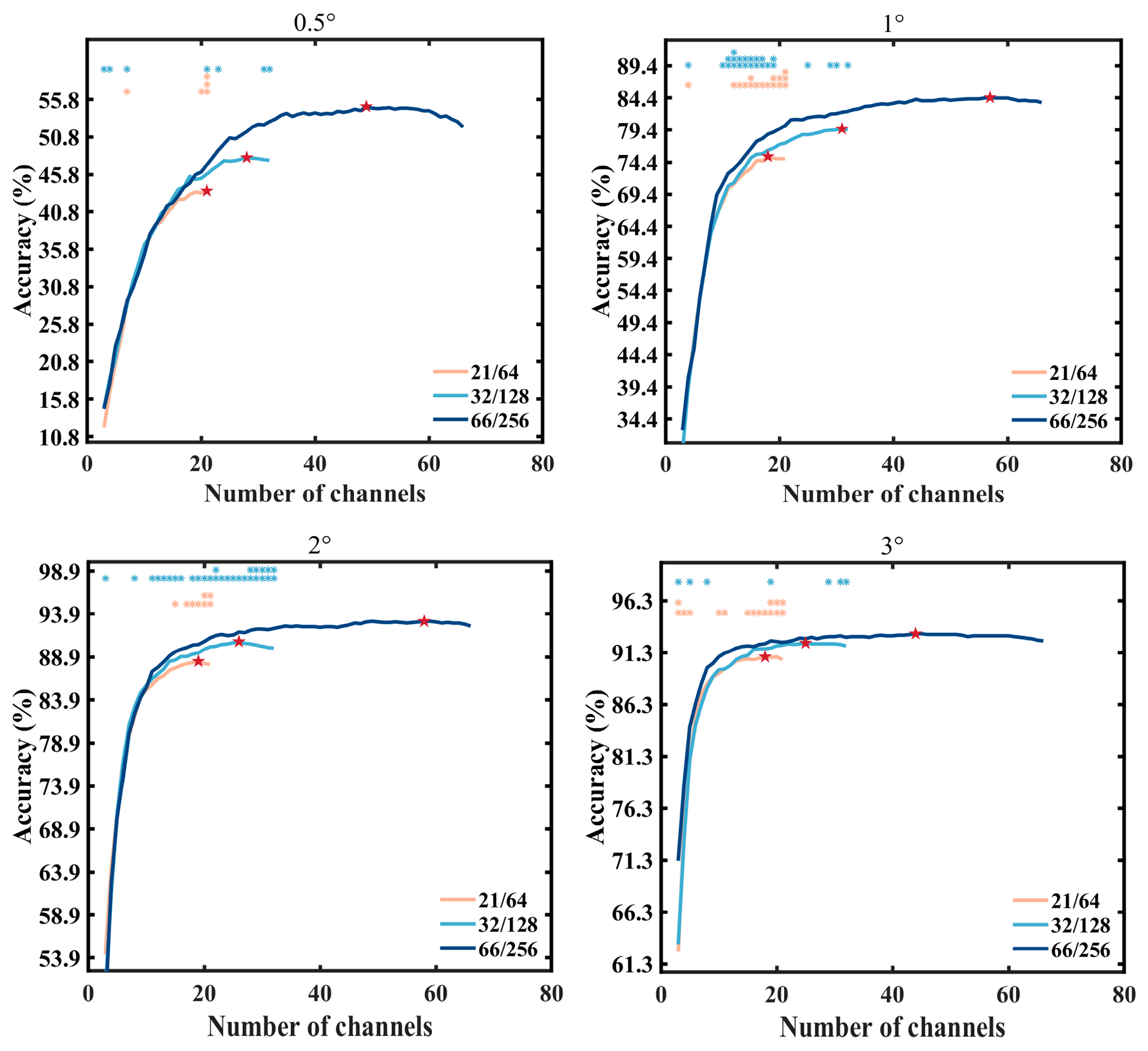

3.4. Electrode Selection Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Muller-Putz, G.R.; Pfurtscheller, G. Control of an Electrical Prosthesis With an SSVEP-Based BCI. IEEE Trans. Biomed. Eng. 2008, 55, 361–364.

- Gerson, A.D.; Parra, L.C.; Sajda, P. Cortically coupled computer vision for rapid image search. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 174–179.

- Rezeika, A.; Benda, M.; Stawicki, P.; Gembler, F.; Saboor, A.; Volosyak, I. Brain–Computer Interface Spellers: A Review. Brain Sci. 2018, 8, 57.

- McFarland, D.J.; Wolpaw, J.R. EEG-based brain–computer interfaces. Curr. Opin. Biomed. Eng. 2017, 4, 194–200.

- Värbu, K.; Muhammad, N.; Muhammad, Y. Past, Present, and Future of EEG-Based BCI Applications. Sensors 2022, 22, 3331.

- Bin, G.; Gao, X.; Wang, Y.; Hong, B.; Gao, S. VEP-based brain-computer interfaces: Time, frequency, and code modulations [Research Frontier]. IEEE Comput. Intell. Mag. 2009, 4, 22–26.

- Viterbi, A.J. CDMA: Principles of Spread Spectrum Communication; Addison Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1995.

- Martínez-Cagigal, V.; Thielen, J.; Santamaría-Vázquez, E.; Pérez-Velasco, S.; Desain, P.; Hornero, R. Brain–computer interfaces based on code-modulated visual evoked potentials (c-VEP): A literature review. J. Neural Eng. 2021, 18, 061002.

- Bin, G.; Gao, X.; Wang, Y.; Li, Y.; Hong, B.; Gao, S. A high-speed BCI based on code modulation VEP. J. Neural Eng. 2011, 8, 025015.

- He, B.; Liu, Y.; Wei, Q.; Lu, Z. A multi-target brain-computer interface based on code modulated visual evoked potentials. PLoS ONE 2018, 13, e0202478.

- Sun, Q.; Zheng, L.; Pei, W.; Gao, X.; Wang, Y. A 120-target brain-computer interface based on code-modulated visual evoked potentials. J. Neurosci. Methods 2022, 375, 109597.

- Sutter, E.E. The brain response interface: Communication through visually-induced electrical brain responses. J. Microcomput. Appl. 1992, 15, 31–45.

- Sutter, E.E. The visual evoked response as a communication channel. In Proceedings of the IEEE Symposium on Biosensors, Los Angeles, CA, USA, 15–17 September 1984; pp. 95–100.

- Zhang, N.; Liu, Y.; Yin, E.; Deng, B.; Cao, L.; Jiang, J.; Zhou, Z.; Hu, D. Retinotopic and topographic analyses with gaze restriction for steady-state visual evoked potentials. Sci. Rep. 2019, 9, 95–100.

- Ng, K.B.; Bradley, A.P.; Cunnington, R. Stimulus specificity of a steady-state visual-evoked potential-based brain–computer interface. J. Neural Eng. 2012, 9, 036008.

- Zhang, D.; Wei, Q.; Feng, S.; Lu, Z. Stimulus Specificity of Brain-Computer Interfaces Based on Code Modulation Visual Evoked Potentials. PLoS ONE 2016, 11, e0156416.

- Cymbalyuk, G.; Duszyk, A.; Bierzyńska, M.; Radzikowska, Z.; Milanowski, P.; Kuś, R.; Suffczyński, P.; Michalska, M.; Łabęcki, M.; Zwoliński, P.; et al. Towards an Optimization of Stimulus Parameters for Brain-Computer Interfaces Based on Steady State Visual Evoked Potentials. PLoS ONE 2014, 9, e112099.

- Nakanishi, M.; Wang, Y.; Wang, Y.-T.; Mitsukura, Y.; Jung, T.-P. A High-Speed Brain Speller Using Steady-State Visual Evoked Potentials. Int. J. Neural Syst. 2014, 24, 1450019.

- Song, J.; Davey, C.; Poulsen, C.; Luu, P.; Turovets, S.; Anderson, E.; Li, K.; Tucker, D. EEG source localization: Sensor density and head surface coverage. J. Neurosci. Methods 2015, 256, 9–21.

- Odabaee, M.; Freeman, W.J.; Colditz, P.B.; Ramon, C.; Vanhatalo, S. Spatial patterning of the neonatal EEG suggests a need for a high number of electrodes. NeuroImage 2013, 68, 229–235.

- Freeman, W.J.; Holmes, M.D.; Burke, B.C.; Vanhatalo, S. Spatial spectra of scalp EEG and EMG from awake humans. Clin. Neurophysiol. 2003, 114, 1053–1068.

- Petrov, Y.; Nador, J.; Hughes, C.; Tran, S.; Yavuzcetin, O.; Sridhar, S. Ultra-dense EEG sampling results in two-fold increase of functional brain information. NeuroImage 2014, 90, 140–145.

- Robinson, A.K.; Venkatesh, P.; Boring, M.J.; Tarr, M.J.; Grover, P.; Behrmann, M. Very high density EEG elucidates spatiotemporal aspects of early visual processing. Sci. Rep. 2017, 7, 16248.

- Brainard, D.H. The Psychophysics Toolbox. Spat. Vis. 1997, 10, 433–436.

- Tadel, F.; Baillet, S.; Mosher, J.C.; Pantazis, D.; Leahy, R.M. Brainstorm: A User-Friendly Application for MEG/EEG Analysis. Comput. Intell. Neurosci. 2011, 2011, 1–13.

- Liu, B.; Chen, X.; Shi, N.; Wang, Y.; Gao, S.; Gao, X. Improving the Performance of Individually Calibrated SSVEP-BCI by Task-Discriminant Component Analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1998–2007.

- Haufe, S.; Meinecke, F.; Görgen, K.; Dähne, S.; Haynes, J.-D.; Blankertz, B.; Bießmann, F. On the interpretation of weight vectors of linear models in multivariate neuroimaging. NeuroImage 2014, 87, 96–110.

- Wei, Q.; Liu, Y.; Gao, X.; Wang, Y.; Yang, C.; Lu, Z.; Gong, H. A Novel c-VEP BCI Paradigm for Increasing the Number of Stimulus Targets Based on Grouping Modulation With Different Codes. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1178–1187.

- Başaklar, T.; Tuncel, Y.; Ider, Y.Z. Effects of high stimulus presentation rate on EEG template characteristics and performance of c-VEP based BCIs. Biomed. Phys. Eng. Express 2019, 5, 035023.

- Gembler, F.; Benda, M.; Saboor, A.; Volosyak, I. A multi-target c-VEP-based BCI speller utilizing n-gram word prediction and filter bank classification. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 2719–2724.

- Gembler, F.W.; Benda, M.; Rezeika, A.; Stawicki, P.R.; Volosyak, I. Asynchronous c-VEP communication tools—Efficiency comparison of low-target, multi-target and dictionary-assisted BCI spellers. Sci. Rep. 2020, 10, 17064.

- Zarei, A.; Mohammadzadeh Asl, B. Automatic detection of code-modulated visual evoked potentials using novel covariance estimators and short-time EEG signals. Comput. Biol. Med. 2022, 147, 105771.

- Fernández-Rodríguez, Á.; Martínez-Cagigal, V.; Santamaría-Vázquez, E.; Ron-Angevin, R.; Hornero, R. Influence of spatial frequency in visual stimuli for cVEP-based BCIs: Evaluation of performance and user experience. Front. Hum. Neurosci. 2023, 17, 1288438.

- Campbell, F.W.; Maffei, L. Electrophysiological evidence for the existence of orientation and size detectors in the human visual system. J. Physiol. 1970, 207, 635–652.

- Dow, B.M.; Snyder, A.Z.; Vautin, R.G.; Bauer, R. Magnification factor and receptive field size in foveal striate cortex of the monkey. Exp. Brain Res. 1981, 44, 213–228.

- Copenhaver, R.M.; Nathan, W.; Perry, J. Factors Affecting Visually Evoked Cortical Potentials such as Impaired Vision of Varying Etiology. J. Investig. Ophthalmol. 1965, 3, 665–675.

- Zelmann, R.; Lina, J.M.; Schulze-Bonhage, A.; Gotman, J.; Jacobs, J. Scalp EEG is not a Blur: It Can See High Frequency Oscillations Although Their Generators are Small. Brain Topogr. 2013, 27, 683–704.

- Han, J.; Xu, M.; Xiao, X.; Yi, W.; Jung, T.-P.; Ming, D. A high-speed hybrid brain-computer interface with more than 200 targets. J. Neural Eng. 2023, 20, 016025.

- Chen, Y.; Yang, C.; Ye, X.; Chen, X.; Wang, Y.; Gao, X. Implementing a calibration-free SSVEP-based BCI system with 160 targets. J. Neural Eng. 2021, 18, 046094.

| Order of Columns | Columns | 21/64 Layout | 32/128 Layout | 66/256 Layout | Area (cm²) |
|---|---|---|---|---|---|
| Initial | Z | POz, Oz, Pz | POz, Oz, Pz, CBz | POOz, Pz, POz, Oz, PPOz, CBz | 0 |
| 1 | 1h/2h | - | PPO1h, PPO2h | OCB1h, O1h, P1h, PPO1h, OCB2h, O2h, P2h, PPO2h | 35.99 |
| 2 | 1/2 | P1, P2, O1, O2, CB1, CB2 | PO1, PO2, P1, P2, O1, O2, CB1, CB2 | POO1, P1, O1, CB1, OCB1, PO1, OCB2, P2, O2, CB2, POO2, PO2 | 79.61 |
| 3 | 3h/4h | - | PPO3h, PPO4h | P3h, PPO3h, P4h, PPO4h | 93.35 |
| 4 | 3/4 | PO3, PO4, P3, P4 | PO3, PO4, P3, P4 | PO3, PO4, P3, P4 | 106.89 |
| 5 | 5h/6h | - | - | P5h, POO5h, PPO5h, P6h, POO6h, PPO6h | 120.31 |
| 6 | 5/6 | PO5, PO6, P5, P6 | PO5, PO6, P5, P6 | PO5, PO6, P5, P6 | 133.55 |
| 7 | 7h/8h | - | - | PPO7h, P7h, PPO8h, P8h | 146.70 |
| 8 | 7/8 | PO7, PO8, P7, P8 | PO7, PO8, P7, P8 | P7, PO7, POO7, P8, PO8, POO8 | 159.86 |
| 9 | 9h/10h | - | - | P9h, PO9h, PPO9h, POO9h, P10h, PO10h, PPO10h, POO10h | 179.74 |
| 10 | 9/10 | - | PO9, PO10, P9, P10 | PO9, PO10, P9, P10 | 204.75 |

| Subject | 0.5°: Data Trials | 0.5°: Accuracy (%) | 0.5°: ITR (bits/min) | 1°: Data Trials | 1°: Accuracy (%) | 1°: ITR (bits/min) | 2°: Data Trials | 2°: Accuracy (%) | 2°: ITR (bits/min) | 3°: Data Trials | 3°: Accuracy (%) | 3°: ITR (bits/min) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 3 | 91.11 | 118.38 | 2 | 90 | 154.88 | 2 | 100 | 192.01 | 1 | 95.56 | 262.94 |
| S2 | 2 | 95.56 | 173.45 | 1 | 95.56 | 261.60 | 1 | 94.44 | 257.48 | 1 | 95.56 | 262.94 |
| S3 | 3 | 87.78 | 110.61 | 2 | 100 | 192.01 | 1 | 92.22 | 245.04 | 1 | 93.33 | 250.15 |
| S4 | 2 | 100 | 192.01 | 1 | 100 | 289.59 | 1 | 98.89 | 282.25 | 1 | 98.89 | 282.25 |
| S5 | 2 | 86.67 | 144.68 | 1 | 95.56 | 262.94 | 1 | 97.78 | 276.26 | 1 | 98.89 | 282.25 |
| S6 | 1 | 82.22 | 199.27 | 1 | 100 | 289.59 | 1 | 100 | 289.59 | 1 | 97.78 | 276.26 |
| S7 | 3 | 68.89 | 73.91 | 1 | 71.11 | 155.94 | 1 | 84.44 | 208.77 | 1 | 92.22 | 245.04 |
| S8 | 5 | 36.67 | 17.21 | 2 | 95.56 | 173.45 | 1 | 90 | 236.99 | 1 | 93.33 | 249.62 |
| S9 | 6 | 66.67 | 40.30 | 3 | 83.33 | 101.26 | 2 | 95.56 | 174.69 | 2 | 98.89 | 187.15 |
| S10 | 2 | 100 | 192.01 | 1 | 97.78 | 274.92 | 1 | 100 | 289.59 | 1 | 100 | 289.59 |
| S11 | 3 | 83.33 | 101.01 | 1 | 94.44 | 255.61 | 1 | 98.89 | 282.25 | 1 | 98.89 | 282.25 |
| S12 | 2 | 100 | 192.01 | 1 | 100 | 289.59 | 1 | 100 | 289.59 | 1 | 100 | 289.59 |
| S13 | 2 | 77.78 | 120.15 | 1 | 93.33 | 250.15 | 1 | 96.67 | 268.93 | 1 | 94.44 | 255.61 |
| S14 | 3 | 78.89 | 91.96 | 3 | 92.22 | 121.53 | 1 | 96.67 | 268.93 | 1 | 97.78 | 274.92 |
| S15 | 2 | 93.33 | 166.45 | 1 | 88.89 | 229.65 | 1 | 98.89 | 268.93 | 1 | 98.89 | 282.25 |
| S16 | 2 | 65.56 | 90.39 | 1 | 92.22 | 245.04 | 1 | 100 | 289.59 | 1 | 100 | 289.59 |
| Mean | - | 82.15 | 126.48 | - | 93.13 | 221.73 | - | 96.52 | 258.39 | - | 97.15 | 266.40 |
| STE | - | 4.18 | 14.14 | - | 1.87 | 15.69 | - | 1.10 | 9.28 | - | 0.67 | 6.52 |
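The ITR columns in the table above combine the number of targets, the classification accuracy, and the time per selection. As a minimal sketch, assuming the widely used Wolpaw definition of information transfer rate (this section does not state which formula the authors applied), the relationship can be written as follows. The function name `itr_bits_per_min` and its parameter names are hypothetical, and the selection time must be supplied by the caller because it is not given in this section.

```python
import math


def itr_bits_per_min(n_targets: int, accuracy: float, selection_time_s: float) -> float:
    """Wolpaw information transfer rate in bits/min (illustrative sketch).

    n_targets: number of selectable targets N (assumed equiprobable).
    accuracy: classification accuracy P as a fraction in [0, 1].
    selection_time_s: total time per selection in seconds (assumed input).
    """
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if selection_time_s <= 0.0:
        raise ValueError("selection_time_s must be positive")

    # At or below chance level the rate is reported as zero by convention.
    if accuracy <= 1.0 / n_targets:
        return 0.0

    bits = math.log2(n_targets)
    if accuracy < 1.0:
        # Penalty terms vanish at P = 1, so they are only added for P < 1.
        bits += accuracy * math.log2(accuracy)
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))

    return bits * 60.0 / selection_time_s


if __name__ == "__main__":
    # 30 targets at 100% accuracy; the selection time here is an assumption.
    print(round(itr_bits_per_min(30, 1.0, 1.0 + 1.0 / 60.0), 2))
```

With 30 targets and 100% accuracy, a selection time of about 1.017 s gives roughly 289.59 bits/min under this formula. That duration is back-computed here from the 100%-accuracy rows of the table and is an assumption, not a value stated in this section.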

| Article | Number of Targets | Stimulus Size | Accuracy (%) | ITR (bits/min) |
|---|---|---|---|---|
| Wei et al., 2018 [29] | 48 | 2.64° | 91.67 | 181.05 |
| Başaklar et al., 2019 [30] | 36 | 4.95° | 97 | 94.21 |
| Gembler et al., 2019 [31] | 32 | 6.2° | 100 | 149.3 |
| Gembler et al., 2020 [32] | 32 | 4.04° | 95.5 | 96.9 |
| Zarei et al., 2022 [33] | 32 | 3.8° | 88.13 | 187.38 |
| Fernández-Rodríguez et al., 2023 [34] | 9 | 6.7° | 96.53 | 164.54 |
| This study | 30 | 1° | 93.13 | 221.73 |
| This study | 30 | 3° | 97.15 | 266.40 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Q.; Zhang, S.; Dong, G.; Pei, W.; Gao, X.; Wang, Y. High-Density Electroencephalogram Facilitates the Detection of Small Stimuli in Code-Modulated Visual Evoked Potential Brain–Computer Interfaces. Sensors 2024, 24, 3521. https://doi.org/10.3390/s24113521
